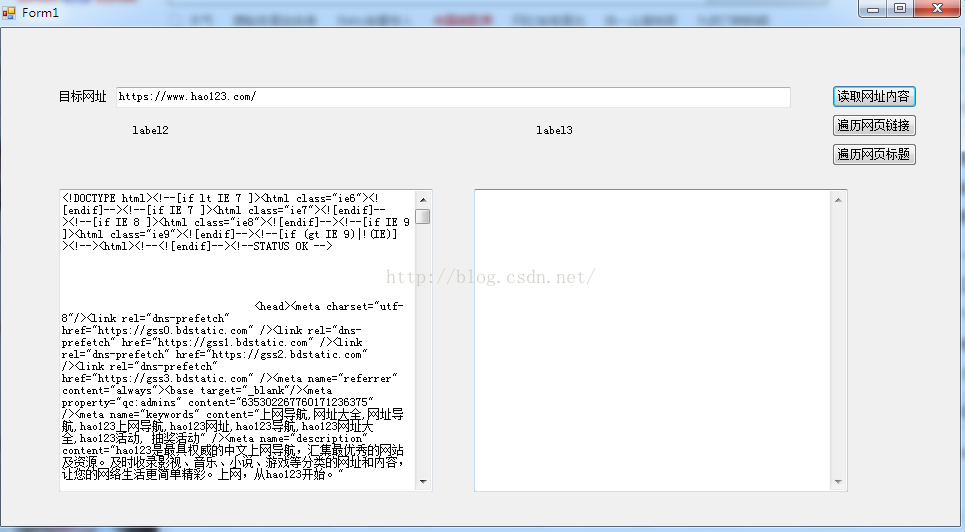

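One of the editors at my company needed to scrape web page content and asked me to put together a simple scraping tool.

Fetching page content is nothing difficult for most of you, but I made a few small tweaks here. The code is below for your reference.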

```csharp
using System;
using System.IO;
using System.Net;
using System.Text;

private string GetHttpWebRequest(string url)
{
    HttpWebResponse result;
    string strHTML = string.Empty;
    try
    {
        // First attempt: read the response as UTF-8.
        Uri uri = new Uri(url);
        HttpWebRequest myReq = (HttpWebRequest)WebRequest.Create(uri);
        // Headers have to be set before GetResponse() is called.
        myReq.UserAgent = "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.2; .NET CLR 1.0.3705)";
        myReq.Accept = "*/*";
        myReq.KeepAlive = true;
        myReq.Headers.Add("Accept-Language", "zh-cn,en-us;q=0.5");
        result = (HttpWebResponse)myReq.GetResponse();
        Stream receiveStream = result.GetResponseStream();
        StreamReader readerOfStream = new StreamReader(receiveStream, Encoding.GetEncoding("utf-8"));
        strHTML = readerOfStream.ReadToEnd();
        readerOfStream.Close();
        receiveStream.Close();
        result.Close();
    }
    catch
    {
        // Second attempt: request again and read the body as gb2312,
        // even when the server answers with an error status.
        Uri uri = new Uri(url);
        HttpWebRequest myReq = (HttpWebRequest)WebRequest.Create(uri);
        myReq.UserAgent = "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.2; .NET CLR 1.0.3705)";
        myReq.Accept = "*/*";
        myReq.KeepAlive = true;
        myReq.Headers.Add("Accept-Language", "zh-cn,en-us;q=0.5");
        try
        {
            result = (HttpWebResponse)myReq.GetResponse();
        }
        catch (WebException ex)
        {
            // Error responses (403, 404, ...) still carry a body we can read.
            result = (HttpWebResponse)ex.Response;
        }
        if (result == null)
        {
            // No response at all (for example a DNS or connection failure).
            return strHTML;
        }
        Stream receiveStream = result.GetResponseStream();
        StreamReader readerOfStream = new StreamReader(receiveStream, Encoding.GetEncoding("gb2312"));
        strHTML = readerOfStream.ReadToEnd();
        readerOfStream.Close();
        receiveStream.Close();
        result.Close();
    }
    return strHTML;
}
```

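This method fetches a page's source code from its URL, with a few small tweaks. Many pages use different encodings, and some sites even take anti-scraping measures; with minor modifications this method can fetch those pages too.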
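Since pages come in different encodings, one option is to pick the encoding from what the server and the page declare instead of guessing between UTF-8 and gb2312. The following is only a rough sketch under that assumption: `CharsetSniffer` and `Detect` are hypothetical names that are not part of the tool above, and the caller is assumed to have the `Content-Type` header value (for example from `HttpWebResponse.ContentType`) and the raw response bytes at hand.

```csharp
using System;
using System.Text;
using System.Text.RegularExpressions;

public static class CharsetSniffer
{
    // Hypothetical helper: choose an encoding from the Content-Type header value,
    // then from a charset declaration in the markup, and fall back to UTF-8.
    public static Encoding Detect(string contentType, byte[] body)
    {
        string name = ExtractCharset(contentType);
        if (name == null)
        {
            // Decode the first bytes as Latin-1 only to search the markup;
            // charset declarations are plain ASCII, so this is safe for sniffing.
            int count = Math.Min(body.Length, 2048);
            string head = Encoding.GetEncoding("iso-8859-1").GetString(body, 0, count);
            name = ExtractCharset(head);
        }
        if (name != null)
        {
            try
            {
                return Encoding.GetEncoding(name);
            }
            catch (ArgumentException)
            {
                // Unknown charset name: fall through to the default.
            }
            catch (NotSupportedException)
            {
                // Charset not available on this platform: fall through as well.
            }
        }
        return Encoding.UTF8;
    }

    // Finds "charset=xxx" in a header value or in a <meta> tag.
    private static string ExtractCharset(string text)
    {
        if (string.IsNullOrEmpty(text))
        {
            return null;
        }
        Match m = Regex.Match(text, @"charset\s*=\s*[""']?([\w-]+)", RegexOptions.IgnoreCase);
        return m.Success ? m.Groups[1].Value : null;
    }
}
```

With something like this, the response body would be read into a byte array once and decoded with `CharsetSniffer.Detect(result.ContentType, bytes).GetString(bytes)`, so no second request is needed. Note that on .NET Core and later, gb2312 is only available after registering the code pages encoding provider; otherwise this sketch silently falls back to UTF-8.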

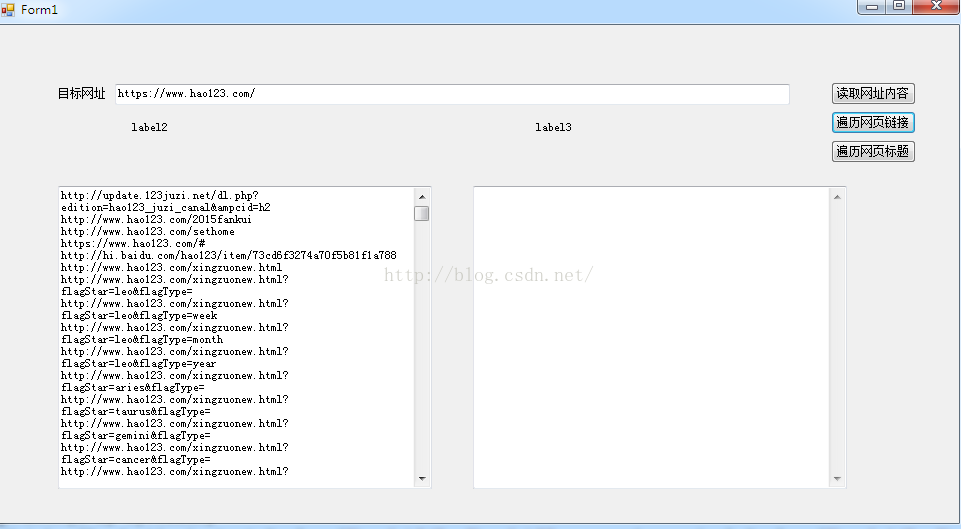

The following method extracts all the links from a page.

```csharp
using System.Collections.Generic;
using System.Text.RegularExpressions;

/// <summary>
/// Extracts the URLs found in HTML code.
/// </summary>
/// <param name="htmlCode">HTML source of the page</param>
/// <param name="url">URL the page was fetched from, used to complete relative links</param>
/// <returns>The distinct URLs, in order of first appearance</returns>
private static List<string> GetHyperLinks(string htmlCode, string url)
{
    List<string> Weburllistzx = new List<string>(); // links collected so far

    // Site root of the page, e.g. "http://example.com/".
    Regex reg = new Regex(@"http(s)?://([\w-]+\.)+[\w-]+/?");
    string wangzhanyuming = reg.Match(url, 0).Value;
    if (!wangzhanyuming.EndsWith("/"))
    {
        wangzhanyuming += "/";
    }

    // Prefix root-relative hrefs with the site root ("./" is treated the same way),
    // then collect every <a ... href=...> tag.
    string ProductionContent = htmlCode
        .Replace("href=\"/", "href=\"" + wangzhanyuming)
        .Replace("href='/", "href='" + wangzhanyuming)
        .Replace("href=/", "href=" + wangzhanyuming)
        .Replace("href=\"./", "href=\"" + wangzhanyuming);
    MatchCollection mc = Regex.Matches(ProductionContent, @"<[aA][^>]* href=[^>]*>", RegexOptions.Singleline);

    string urlPattern = @"[a-zA-Z]+://[^\s]*";
    foreach (Match m in mc)
    {
        string amstr = m.Value;
        if (!Regex.IsMatch(amstr, urlPattern, RegexOptions.Singleline))
        {
            // No absolute URL in the tag: skip javascript links and
            // prefix the href with the directory of the current page.
            if (amstr.IndexOf("javascript") != -1)
            {
                continue;
            }
            string wangzhanxiangduilujin = url.Substring(0, url.LastIndexOf("/") + 1);
            amstr = amstr.Replace("href=\"", "href=\"" + wangzhanxiangduilujin)
                         .Replace("href='", "href='" + wangzhanxiangduilujin);
        }

        foreach (Match m1 in Regex.Matches(amstr, urlPattern, RegexOptions.Singleline))
        {
            // Strip the quotes and tag characters that the pattern drags along.
            string linkurlstr = m1.Value.Replace("\"", "").Replace("'", "").Replace(">", "").Replace(";", "");
            if (!Weburllistzx.Contains(linkurlstr))
            {
                Weburllistzx.Add(linkurlstr);
            }
        }
    }
    return Weburllistzx;
}
```
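As an aside on the relative links handled above: instead of patching `href` values with string replacements, the relative parts could be resolved with `System.Uri`. This is only a sketch of that alternative, not the method the tool uses; `LinkResolver` and `Resolve` are hypothetical names, and the `href` pattern is a simplification that ignores many HTML edge cases.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

public static class LinkResolver
{
    // Hypothetical helper: collects the href of every <a> tag and resolves it
    // against the URL of the page it came from.
    public static List<string> Resolve(string htmlCode, string pageUrl)
    {
        Uri baseUri = new Uri(pageUrl);
        List<string> links = new List<string>();

        // Captures a double-quoted, single-quoted, or unquoted href value.
        string pattern = @"<a\b[^>]*?\bhref\s*=\s*(?:""([^""]*)""|'([^']*)'|([^\s>]+))";
        foreach (Match m in Regex.Matches(htmlCode, pattern, RegexOptions.IgnoreCase))
        {
            string href = m.Groups[1].Success ? m.Groups[1].Value
                        : m.Groups[2].Success ? m.Groups[2].Value
                        : m.Groups[3].Value;
            href = href.Trim();
            if (href.Length == 0 || href.StartsWith("#"))
            {
                continue; // empty links and in-page anchors
            }

            Uri absolute;
            if (!Uri.TryCreate(baseUri, href, out absolute))
            {
                continue; // not a usable URI
            }
            // Keep web links only; this also drops javascript: and mailto: links.
            if (absolute.Scheme != Uri.UriSchemeHttp && absolute.Scheme != Uri.UriSchemeHttps)
            {
                continue;
            }

            string link = absolute.AbsoluteUri;
            if (!links.Contains(link))
            {
                links.Add(link);
            }
        }
        return links;
    }
}
```

For a page at `http://example.com/dir/page.html`, an `href` of `/a.html` would resolve to `http://example.com/a.html` and `b.html` to `http://example.com/dir/b.html`, which is the same completion the replacements above aim for.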

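The technique here is really just plain regular-expression matching! Next come the methods for getting the title and for saving the links to an XML file.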

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Text.RegularExpressions;
using System.Xml;

/// <summary>
/// Writes the URLs to an XML file.
/// </summary>
/// <param name="strURL">URL of the page the links were extracted from</param>
/// <param name="alHyperLinks">The extracted links</param>
private static void WriteToXml(string strURL, List<string> alHyperLinks)
{
    XmlTextWriter writer = new XmlTextWriter(@"D:\HyperLinks.xml", Encoding.UTF8);
    writer.Formatting = Formatting.Indented;
    writer.WriteStartDocument(false);
    writer.WriteDocType("HyperLinks", null, "urls.dtd", null);
    writer.WriteComment("Hyperlinks extracted from " + strURL);
    writer.WriteStartElement("HyperLinks");
    writer.WriteStartElement("HyperLinks", null);
    writer.WriteAttributeString("DateTime", DateTime.Now.ToString());
    foreach (string str in alHyperLinks)
    {
        // The element name is the domain suffix (com, net, ...), the value is the link.
        string title = GetDomain(str);
        string body = str;
        writer.WriteElementString(title, null, body);
    }
    writer.WriteEndElement();
    writer.WriteEndElement();
    writer.Flush();
    writer.Close();
}

/// <summary>
/// Gets the domain suffix of a URL.
/// </summary>
/// <param name="strURL">The URL to inspect</param>
/// <returns>The suffix without dot or slash, or "other" if none matched</returns>
private static string GetDomain(string strURL)
{
    string retVal;
    string strRegex = @"(\.com/|\.net/|\.cn/|\.org/|\.gov/)";
    Regex r = new Regex(strRegex, RegexOptions.IgnoreCase);
    Match m = r.Match(strURL);
    retVal = m.ToString();
    // Strip the dots and the trailing slash, e.g. ".com/" becomes "com".
    strRegex = @"\.|/$";
    retVal = Regex.Replace(retVal, strRegex, "").ToString();
    if (retVal == "")
        retVal = "other";
    return retVal;
}

/// <summary>
/// Gets the title of a page.
/// </summary>
/// <param name="html">HTML source of the page</param>
/// <returns>The title text, or an empty string if none was found</returns>
private static string GetTitle(string html)
{
    string titleFilter = @"<title>[\s\S]*?</title>";
    string h1Filter = @"<h1.*?>.*?</h1>";
    string clearFilter = @"<.*?>";

    string title = "";
    Match match = Regex.Match(html, titleFilter, RegexOptions.IgnoreCase);
    if (match.Success)
    {
        title = Regex.Replace(match.Groups[0].Value, clearFilter, "");
    }

    // The article headline is usually in <h1> and is cleaner than the text in <title>.
    match = Regex.Match(html, h1Filter, RegexOptions.IgnoreCase);
    if (match.Success)
    {
        string h1 = Regex.Replace(match.Groups[0].Value, clearFilter, "");
        if (!String.IsNullOrEmpty(h1) && title.StartsWith(h1))
        {
            title = h1;
        }
    }
    return title;
}
```
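A title pulled out with a regular expression can still contain HTML entities such as `&amp;`, plus line breaks or runs of spaces. The following is a minimal sketch of one way to tidy that up, assuming it is acceptable to collapse all whitespace into single spaces; `TitleCleaner` and `ExtractTitle` are hypothetical names and not part of the tool above.

```csharp
using System;
using System.Net;
using System.Text.RegularExpressions;

public static class TitleCleaner
{
    // Hypothetical helper: returns the text of the first <title> element with
    // nested tags removed, entities decoded, and whitespace collapsed.
    public static string ExtractTitle(string html)
    {
        Match match = Regex.Match(html, @"<title[^>]*>([\s\S]*?)</title>", RegexOptions.IgnoreCase);
        if (!match.Success)
        {
            return string.Empty;
        }
        string text = Regex.Replace(match.Groups[1].Value, @"<.*?>", ""); // drop nested tags
        text = WebUtility.HtmlDecode(text);                               // "&amp;" becomes "&"
        return Regex.Replace(text, @"\s+", " ").Trim();                   // collapse whitespace
    }
}
```

For example, a page whose title element contains `Tom &amp; Jerry` surrounded by line breaks would yield `Tom & Jerry`.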

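Those are all the methods the tool uses, and there is still plenty of room for improvement! If you spot any shortcomings, please point them out. Thanks!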

If you're interested, you can join our QQ group to learn together: 495104593

Source code: http://download.csdn.net/detail/nightmareyan/9584256