Our goal was to minimize the risk of a malicious actor being able to access our networks and servers, invoke the AWS API, and, ultimately, perform destructive or unauthorized actions in our environments.

It is crucial that the data we store in the cloud is encrypted and that the encryption keys are managed correctly. If our network or AWS account settings were ever compromised, encryption reduces the risk of the data being readable by an unauthorized party.

Protecting Data Stored in the Cloud

Understand the Application

An architect needs to be able to identify all application code and services that will run in an environment and that persist, or could persist, data to cloud data stores.

Classify Data

Your organization should have data classification standards that will allow you to identify and tag cloud components so that components storing sensitive data are easily identifiable.

Understand Roles and Responsibilities around Key Management

Determine who needs access to encryption keys in order to encrypt data, decrypt data, manage key permissions, and rotate or destroy keys.

Understand Organizational Security and Compliance Requirements

Many design choices in the cloud will hinge on specific regulatory or organizational compliance policies around data encryption.

Application Data Encryption

There are multiple strategies for ensuring that data processed and generated by an application ends up in an encrypted state on disk or cloud storage.

In this concept, we will examine a few common scenarios of persisting data in a cloud environment and see how the data is encrypted.

Methods For Encrypting Application Data


Applications and underlying infrastructure services will leverage the following concepts:

Key Management Service and Encryption Keys

Applications refer to a key management service to determine which encryption key will be used to encrypt the data.

AWS provides a key management service called KMS, which is tightly integrated with AWS services and with the AWS SDKs that developers use.

Client-Side Encryption

You can choose to encrypt the data within the application before persisting or writing it. We will loosely refer to this as client-side encryption.

Server-Side or AWS Service-Native Encryption

Alternatively, you can have the AWS service handle the underlying encryption. We can refer to this broadly as server-side encryption.

Writing Data to Disk With Client-Side Encryption

An application may write data to a disk mount such as an EBS volume or EFS mount. The application can use AWS SDKs and the KMS service to encrypt a file prior to writing to disk.

Once a data encryption key is obtained, developers can use language-native encryption libraries, or they can leverage the AWS SDK to perform the encryption. The AWS SDK helps by encapsulating many of the steps required to encrypt and store the data.
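The flow above is usually called envelope encryption. The sketch below shows one way it could look in Python, assuming a `boto3` KMS client (its `generate_data_key` and `decrypt` calls) and the third-party `cryptography` package's `AESGCM` as the language-native cipher. The function names and the on-disk layout (length-prefixed wrapped key, nonce, ciphertext) are hypothetical choices for illustration, not a format defined by AWS.

```python
import os
import struct

from cryptography.hazmat.primitives.ciphers.aead import AESGCM

NONCE_SIZE = 12  # 96-bit nonce, the recommended size for AES-GCM


def encrypt_file(kms_client, key_id: str, plaintext: bytes, path: str) -> None:
    """Encrypt `plaintext` under a fresh KMS data key and write it to `path`."""
    # KMS returns the data key twice: in plaintext (for local use only)
    # and encrypted under the KMS key `key_id` (safe to store).
    data_key = kms_client.generate_data_key(KeyId=key_id, KeySpec="AES_256")
    nonce = os.urandom(NONCE_SIZE)
    ciphertext = AESGCM(data_key["Plaintext"]).encrypt(nonce, plaintext, None)
    wrapped_key = data_key["CiphertextBlob"]
    # Hypothetical file layout: 4-byte length of the wrapped key, the wrapped
    # key, the nonce, then the AES-GCM ciphertext (which includes the tag).
    with open(path, "wb") as f:
        f.write(struct.pack(">I", len(wrapped_key)))
        f.write(wrapped_key)
        f.write(nonce)
        f.write(ciphertext)
    # The plaintext data key is never written to disk.


def decrypt_file(kms_client, path: str) -> bytes:
    """Read a file written by encrypt_file and return the original plaintext."""
    with open(path, "rb") as f:
        (key_len,) = struct.unpack(">I", f.read(4))
        wrapped_key = f.read(key_len)
        nonce = f.read(NONCE_SIZE)
        ciphertext = f.read()
    # KMS unwraps the data key; the caller needs kms:Decrypt on the KMS key.
    data_key = kms_client.decrypt(CiphertextBlob=wrapped_key)["Plaintext"]
    return AESGCM(data_key).decrypt(nonce, ciphertext, None)
```

With a real client this might be called as `encrypt_file(boto3.client("kms"), "alias/app-data", data, "/mnt/efs/report.bin")`, where the alias and path are hypothetical. The AWS Encryption SDK implements this same envelope pattern with its own standardized message format, which is one reason to prefer it over hand-rolled code.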

Using Encrypted Disk Volumes in AWS

On the infrastructure side, we need to ensure that disk volumes are configured to use KMS encryption so that the entire disk remains encrypted regardless of whether the application encrypts the data itself.

- The EC2 service obtains a data encryption key from KMS. The data key is protected by (encrypted under) the KMS master key that the EBS volume is associated with.

- The hypervisor uses the data encryption key to ensure that all I/O operations result in encrypted data on disk: write operations use the key to encrypt and read operations use the key to decrypt.

- The hypervisor makes the decrypted data available to the instance's operating system.
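On the provisioning side, EBS encryption is a request parameter rather than application code. The sketch below assumes `boto3`'s EC2 `create_volume` (with its `Encrypted` and `KmsKeyId` parameters) and `enable_ebs_encryption_by_default` calls; the helper names, Availability Zone, and key alias in the usage note are hypothetical.

```python
from typing import Optional


def create_encrypted_volume(ec2_client, availability_zone: str, size_gib: int,
                            kms_key_id: Optional[str] = None) -> str:
    """Create an EBS volume whose I/O EC2 encrypts with a KMS-protected data key."""
    params = {
        "AvailabilityZone": availability_zone,
        "Size": size_gib,
        "Encrypted": True,
    }
    if kms_key_id is not None:
        # Without KmsKeyId, EC2 uses the account's default KMS key for EBS.
        params["KmsKeyId"] = kms_key_id
    return ec2_client.create_volume(**params)["VolumeId"]


def turn_on_ebs_encryption_by_default(ec2_client) -> bool:
    """Region-wide setting: new EBS volumes are encrypted even if callers omit Encrypted."""
    return ec2_client.enable_ebs_encryption_by_default()["EbsEncryptionByDefault"]
```

For example, `create_encrypted_volume(boto3.client("ec2"), "us-east-1a", 100, "alias/ebs-data")`. Turning on encryption by default is the infrastructure-level safety net: it covers volumes created by code that forgets the flag.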

Writing Data to a Database Table

The same concept we looked at for encrypting file data on disk volumes applies to databases too. Using the encryption SDKs, the application should encrypt sensitive data before storing the record value in the database. On the platform service (server) side, we need to ensure that encryption is enabled when provisioning the database platform of choice (e.g., RDS or DynamoDB).
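Both layers can be sketched in a few lines of Python. This assumes a `boto3` KMS client for field-level encryption (`encrypt`/`decrypt`) and the DynamoDB `create_table` parameter `SSESpecification` for the server side; the table name, the `pk` attribute, and the helper names are hypothetical.

```python
import base64
from typing import Optional


def encrypt_field(kms_client, key_id: str, value: str) -> str:
    """Client-side: encrypt one sensitive attribute before it is written."""
    # KMS Encrypt accepts at most 4 KB of plaintext, so this direct call only
    # suits small values; larger data calls for envelope encryption.
    blob = kms_client.encrypt(KeyId=key_id, Plaintext=value.encode("utf-8"))["CiphertextBlob"]
    return base64.b64encode(blob).decode("ascii")  # store as a string attribute


def decrypt_field(kms_client, stored: str) -> str:
    """Reverse encrypt_field; the caller needs kms:Decrypt on the key."""
    blob = base64.b64decode(stored)
    return kms_client.decrypt(CiphertextBlob=blob)["Plaintext"].decode("utf-8")


def encrypted_table_params(table_name: str, kms_key_id: Optional[str] = None) -> dict:
    """Server-side: keyword arguments for DynamoDB create_table with KMS encryption."""
    sse = {"Enabled": True, "SSEType": "KMS"}
    if kms_key_id is not None:
        sse["KMSMasterKeyId"] = kms_key_id  # omit to use the AWS-managed key
    return {
        "TableName": table_name,
        "AttributeDefinitions": [{"AttributeName": "pk", "AttributeType": "S"}],
        "KeySchema": [{"AttributeName": "pk", "KeyType": "HASH"}],
        "BillingMode": "PAY_PER_REQUEST",
        "SSESpecification": sse,
    }
```

A table would then be created with `boto3.client("dynamodb").create_table(**encrypted_table_params("customers", "alias/sensitive-data"))`. For RDS, the equivalent `create_db_instance` parameters are `StorageEncrypted=True` and, optionally, `KmsKeyId`.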

Writing Data to Object Storage, such as S3

Highly sensitive data should be encrypted by the application before the object is put into S3. Because the encryption happens client-side, within the application code and encryption SDK, S3 is not aware that the object is encrypted.

In the case of S3, the application code can also set parameters for server-side encryption so that the S3 service will handle the encryption for the particular objects. S3 buckets can also be configured to have default server-side encryption enabled for all new objects. In this case encryption is transparent to the application code.
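Setting server-side encryption per object comes down to request parameters on `put_object`. The sketch assumes `boto3`'s S3 `put_object` parameters `ServerSideEncryption` (`"AES256"` or `"aws:kms"`) and `SSEKMSKeyId`; the helper name is hypothetical.

```python
from typing import Optional


def put_object_sse(s3_client, bucket: str, key: str, body: bytes,
                   algorithm: str = "AES256", kms_key_id: Optional[str] = None) -> dict:
    """Upload an object and ask S3 to encrypt it server-side."""
    if algorithm not in ("AES256", "aws:kms"):
        raise ValueError("algorithm must be 'AES256' (S3-managed) or 'aws:kms' (KMS)")
    params = {"Bucket": bucket, "Key": key, "Body": body,
              "ServerSideEncryption": algorithm}
    if kms_key_id is not None:
        if algorithm != "aws:kms":
            raise ValueError("a KMS key id requires algorithm='aws:kms'")
        # Without SSEKMSKeyId, 'aws:kms' uses the AWS-managed key for S3.
        params["SSEKMSKeyId"] = kms_key_id
    return s3_client.put_object(**params)
```

For example, `put_object_sse(boto3.client("s3"), "my-bucket", "reports/q1.csv", data, "aws:kms", "alias/app-data")`, with hypothetical bucket, key, and alias. When bucket default encryption is configured instead, the plain `put_object` call needs none of these parameters.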

As a best practice we want to ensure that S3 server-side encryption is enabled by default where possible.

Client-Side vs. Server-Side

Client-Side Encryption

Pros

The cloud provider (AWS), or anyone with access to the underlying disk volumes, will only see ciphertext. The data is effectively useless to them unless they also have permission to use the encryption keys.

Cons

The code takes on the additional complexity of the SDKs and libraries required to perform cryptographic functions, and some understanding of encryption is needed to do this effectively. There is also the risk that this additional functionality is inadvertently omitted from the code.

Server-Side Encryption

Pros

For most use cases server-side encryption can be completely transparent to the developer or application code. So, from an implementation perspective, it is simpler and easier to use.

Cons

Anyone with read permissions on the service in question can read the data and see it in plain text.

Best Practices

As a best practice it is highly advisable to leverage server-side encryption as a default deployment pattern for any AWS services being used to store data.

The decision to use client-side encryption in your applications may be made on a case-by-case basis for sensitive data or to meet any compliance requirements.


S3 Bucket Encryption

Let's take a closer look at S3 bucket encryption! S3 is one of the most common services that cloud-native applications use to store objects.

S3 Bucket Server-Side Encryption

As discussed previously, client-side encryption allows the application to encrypt data prior to sending it to disk or other persistent cloud storage.

In many cases, client-side encryption may not be required. Regardless of whether client-side encryption is used, as a best practice we want to ensure that any data stored in the cloud, at minimum, makes use of the cloud provider's server-side encryption capabilities.

S3 buckets provisioned in AWS support a few different methods of ensuring your data is encrypted when physically being stored on disk.

S3-Managed Keys

With this simple option, any object written to S3 is encrypted by S3, and the S3 service manages the encryption keys behind the scenes. Because key management is transparent to the caller, this is the simplest and easiest option. Keep in mind, however, that anyone with read permissions on the bucket and object can call the service and retrieve the file unencrypted.

AWS-Managed Master Keys

In this option, the caller specifies that KMS will manage the encryption keys for the S3 service, using the AWS-managed KMS key for S3. Because KMS usage is recorded in CloudTrail, this provides additional auditability of S3's use of the encryption keys.

Customer-Managed Master Keys

Again, the caller will need to specify that KMS will manage encryption keys for the S3 service. The caller will also need to specify the key that will be used for encryption. Additionally, the caller needs to have permissions to use the key. This provides additional ability to control and restrict which principals can access or decrypt sensitive data.

Customer-Provided Keys

In this case, the customer provides the encryption key to S3 with the request. S3 performs the encryption on the server without keeping the key itself, so the same key must be provided with each request to retrieve and decrypt the object. With this option, the burden of managing the key falls on the customer.
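Because S3 keeps no copy of a customer-provided key, every read must resend it. The sketch assumes `boto3`'s `put_object`/`get_object` parameters `SSECustomerAlgorithm` and `SSECustomerKey`; to our understanding boto3 base64-encodes the key and adds the required MD5 header itself. The helper names are hypothetical.

```python
KEY_BYTES = 32  # SSE-C uses AES-256, so the key must be 256 bits


def _check_key(customer_key: bytes) -> None:
    if len(customer_key) != KEY_BYTES:
        raise ValueError("SSE-C requires a 32-byte (256-bit) key")


def put_with_customer_key(s3_client, bucket: str, key: str, body: bytes,
                          customer_key: bytes) -> dict:
    """Upload an object that S3 encrypts with a key we supply (SSE-C)."""
    _check_key(customer_key)
    return s3_client.put_object(Bucket=bucket, Key=key, Body=body,
                                SSECustomerAlgorithm="AES256",
                                SSECustomerKey=customer_key)


def get_with_customer_key(s3_client, bucket: str, key: str,
                          customer_key: bytes) -> bytes:
    """Download an SSE-C object; the same key must accompany the read."""
    _check_key(customer_key)
    response = s3_client.get_object(Bucket=bucket, Key=key,
                                    SSECustomerAlgorithm="AES256",
                                    SSECustomerKey=customer_key)
    return response["Body"].read()
```

If the customer loses the key, the object cannot be read back, which is exactly the management burden described above. S3 only accepts SSE-C requests over HTTPS.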

Implementation Options

Encrypt On Write

In this case, API calls to S3 for uploading or copying file objects will need to explicitly specify the type of server-side encryption desired. The code will need some awareness of the choice to use server-side encryption and which key to use.
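Copy calls take the same encryption parameters as uploads, which also makes it possible to re-encrypt an existing object. The sketch assumes `boto3`'s `copy_object` with `ServerSideEncryption` and `SSEKMSKeyId`; the helper name is hypothetical. S3 only accepts copying an object onto itself when something such as its encryption settings changes, which is the case here.

```python
def reencrypt_object(s3_client, bucket: str, key: str, kms_key_id: str) -> dict:
    """Copy an object onto itself so S3 rewrites it with SSE-KMS."""
    # MetadataDirective defaults to COPY, so existing user metadata is kept.
    return s3_client.copy_object(
        Bucket=bucket,
        Key=key,
        CopySource={"Bucket": bucket, "Key": key},
        ServerSideEncryption="aws:kms",
        SSEKMSKeyId=kms_key_id,
    )
```

A single `copy_object` call handles objects up to 5 GB; larger objects need a multipart copy.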

Default Encryption

Configure S3 buckets with default encryption enabled. S3 will then automatically encrypt objects that are written to the bucket.

Default encryption allows you to specify which type of server-side encryption will be used and, if you choose, to designate a specific KMS key.
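Default encryption is a one-time bucket setting. The sketch assumes `boto3`'s `put_bucket_encryption` and its `ServerSideEncryptionConfiguration` structure (note the S3 field is spelled `KMSMasterKeyID`); the helper name is hypothetical.

```python
from typing import Optional


def enable_default_encryption(s3_client, bucket: str,
                              kms_key_id: Optional[str] = None) -> dict:
    """Set bucket default encryption so uploads are encrypted without code changes."""
    if kms_key_id is None:
        default = {"SSEAlgorithm": "AES256"}  # S3-managed keys
    else:
        default = {"SSEAlgorithm": "aws:kms", "KMSMasterKeyID": kms_key_id}
    config = {"Rules": [{"ApplyServerSideEncryptionByDefault": default}]}
    s3_client.put_bucket_encryption(Bucket=bucket, ServerSideEncryptionConfiguration=config)
    return config
```

After this runs, a plain `put_object(Bucket=..., Key=..., Body=...)` with no encryption parameters still produces an encrypted object.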

Summary

Server-side encryption for AWS services is a very powerful and transparent way to ensure that security best practices are implemented. We have highlighted this with S3; however, other AWS services, such as DynamoDB, also provide this functionality.

Key Terms

Key Management Service (KMS)

AWS service that allows provisioning, storage and management of master encryption keys. KMS also provides the ability to manage permissions pertaining to cryptographic actions on encryption keys.

Customer Master Key (CMK)

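The customer master key is the master encryption key used to encrypt (wrap) the data encryption keys that protect the underlying data. KMS stores and manages the CMK, while the encrypted data keys are returned to the caller and typically stored alongside the data they protect. Other services or applications select a CMK to use for their cryptographic operations.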

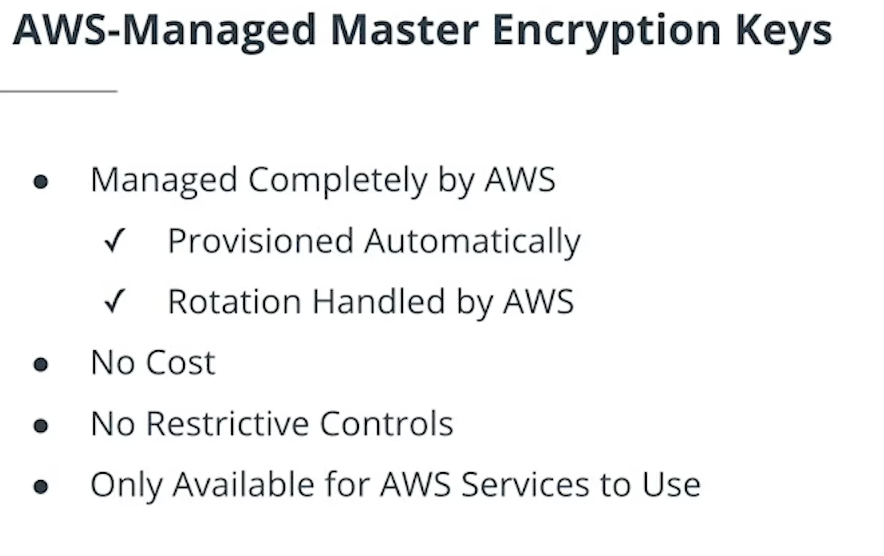

AWS-Managed CMK

AWS-managed customer master keys are provisioned, rotated, and managed by AWS. AWS provisions a separate master key for each AWS service in the account when that service first needs to encrypt data. These keys are not available for your applications to use for client-side encryption.

You may not change or assign permissions on these keys.

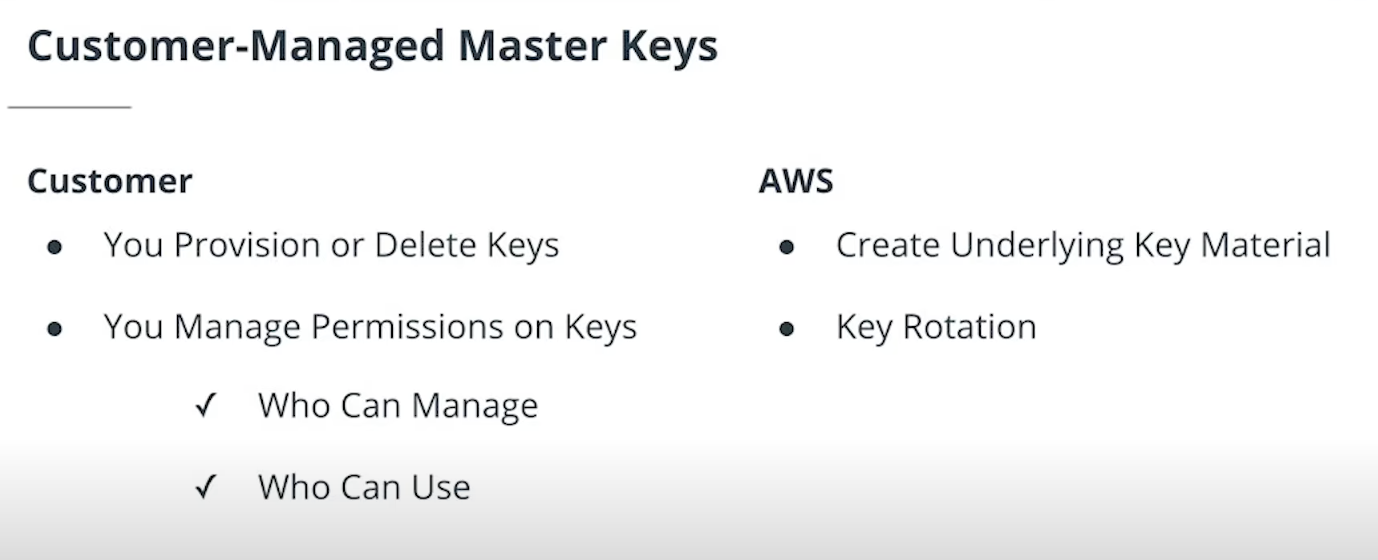

Customer-Managed CMK

Customer managed master keys are provisioned and managed by the customer (you). Once you provision a key, you may use that key with any AWS services or applications.

You can manage permissions on customer managed CMKs to control which IAM users or roles can manage or use the encryption keys. Permissions to use the keys can also be granted to AWS services and other AWS accounts.

Default Encryption

A configuration setting on an AWS resource, such as an S3 bucket, designating that the storage as a whole, or all objects written, will be encrypted by the service being used (e.g. S3).


Encryption Key Management

Previously, we looked at using encryption keys in applications that are running in the cloud and options to ensure that objects stored in S3 cloud storage are encrypted at rest.

Let's look at a few best practices for ensuring that encryption keys themselves are managed securely.

Using The AWS Key Management Service

KMS provides multiple avenues for creating CMKs, and it is important to understand the differences between them.

AWS-Managed Customer Master Keys

This is the default and easiest type of KMS key to use. It requires very little management and no cost on the part of the customer.

The key is provisioned automatically by KMS when a service such as S3 or EC2 needs to use KMS to encrypt underlying data. A separate master key would be created for each service that starts using KMS.

Permissions to AWS managed keys are also handled behind the scenes. Any principal or user who has access to a particular service would inherently have access to any encrypted data that the service had encrypted using the AWS managed keys.

For example, if a DynamoDB table is encrypted with an AWS-managed key, all users or roles in the account that have read access to the table can read its data.

With this method, key rotation is also handled behind the scenes by AWS: AWS-managed keys are rotated automatically every year (before 2022, this was every three years).

This approach is acceptable if the sole requirement is to ensure that data is encrypted at rest in AWS' data centers.

Limitations With AWS Managed CMKs

The main drawback here is that it does not allow granular and least privileged access to the keys.

It would NOT be possible to segment and isolate permissions to certain keys and encrypted data.

In addition to this limitation, AWS managed keys are not available for applications to use for client-side encryption since they are only available for use by AWS services.

This approach is also NOT recommended for accounts where sensitive data is present, since if the AWS account or a role is compromised, the encrypted data may not be protected.

Customer-Managed Customer Master Keys

The second option is to explicitly provision the keys using KMS. In this case the user creates and manages permissions to the keys.

AWS generates the key material and, if automatic rotation is enabled for the key, rotates it on a yearly schedule by default; the user can also choose to rotate keys manually.

The main benefit to this approach is that permissions to manage and use the keys can be explicitly defined and controlled. This allows separation of duties, segmentation of key usage etc.

Again using DynamoDB as an example, restricting access to the encryption keys gives us much more flexibility to restrict access to the data. For instance, we can have two separate master keys for two different sets of tables or data classifications, such as non-sensitive and sensitive tables. We can also allow certain IAM roles to use the keys and other IAM roles to manage them.
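Provisioning one key per classification could look like the sketch below. It assumes `boto3`'s KMS `create_key`, `create_alias`, and `enable_key_rotation` calls; the classification labels and the `alias/data-<label>` naming are hypothetical. Because no `Policy` is passed, each key receives the default key policy, so the split between roles that use and roles that manage the keys would still be expressed in key policies.

```python
def create_classified_keys(kms_client,
                           classifications=("sensitive", "non-sensitive")) -> dict:
    """Create one customer-managed key per data classification."""
    key_ids = {}
    for label in classifications:
        metadata = kms_client.create_key(
            Description=f"Encrypts {label} DynamoDB tables",
            KeyUsage="ENCRYPT_DECRYPT",
        )["KeyMetadata"]
        # A friendly alias lets table definitions refer to alias/data-<label>.
        kms_client.create_alias(AliasName=f"alias/data-{label}",
                                TargetKeyId=metadata["KeyId"])
        # Automatic rotation is opt-in for customer-managed keys.
        kms_client.enable_key_rotation(KeyId=metadata["KeyId"])
        key_ids[label] = metadata["KeyId"]
    return key_ids
```

Tables holding sensitive data would then reference `alias/data-sensitive` in their encryption settings, and only roles granted use of that key could read them.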

This second approach is a good balance between manageability and security, and it will generally provide the capabilities mandated by most compliance standards.

KMS also provides the ability for the user to create master key material outside of AWS and import it into KMS.

Bring Your Own Key

When new customer master keys are provisioned in KMS, by default, KMS creates and maintains the key material for you. However, KMS also provides the customer the option of importing their own key material which may be maintained in a key store external to KMS.

With this option the customer has full control of the key's lifecycle including expiration, deletion, and rotation.

A potential use case for importing key material may be to maintain backup copies of the key material external to AWS to fulfill disaster recovery requirements. Customers may also find this option useful if they want to use one key management system for both cloud and on-premises infrastructure.
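The import workflow has three steps: create a key with no material, fetch a wrapping key, and upload the wrapped material. The sketch assumes `boto3`'s KMS `create_key(Origin="EXTERNAL")`, `get_parameters_for_import`, and `import_key_material` calls, with the `cryptography` package performing the RSA-OAEP (SHA-256) wrap; the helper name is hypothetical, and the key material is assumed to come from your own external key store.

```python
from cryptography.hazmat.primitives import hashes, serialization
from cryptography.hazmat.primitives.asymmetric import padding


def import_external_key(kms_client, key_material: bytes, description: str) -> str:
    """Create a KMS key without material and import our own 256-bit key."""
    if len(key_material) != 32:
        raise ValueError("symmetric key material must be 256 bits")
    # Origin=EXTERNAL creates the key with no material; it is unusable until import.
    key_id = kms_client.create_key(Description=description,
                                   Origin="EXTERNAL")["KeyMetadata"]["KeyId"]
    # KMS returns a short-lived RSA public wrapping key and an import token.
    params = kms_client.get_parameters_for_import(
        KeyId=key_id,
        WrappingAlgorithm="RSAES_OAEP_SHA_256",
        WrappingKeySpec="RSA_2048",
    )
    wrapping_key = serialization.load_der_public_key(params["PublicKey"])
    # Wrap the material so it never travels to KMS in the clear.
    encrypted_material = wrapping_key.encrypt(
        key_material,
        padding.OAEP(mgf=padding.MGF1(algorithm=hashes.SHA256()),
                     algorithm=hashes.SHA256(), label=None),
    )
    kms_client.import_key_material(
        KeyId=key_id,
        ImportToken=params["ImportToken"],
        EncryptedKeyMaterial=encrypted_material,
        ExpirationModel="KEY_MATERIAL_DOES_NOT_EXPIRE",
    )
    return key_id
```

The copy of `key_material` kept in the external store is what provides the disaster recovery path described above. KMS does not rotate imported key material automatically, so rotation stays with the customer.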

Best Practices for Managing Customer Master Keys (CMKs)

1. Use Key Policies

Use Key Policies to Explicitly Restrict the Following, Where Possible:

- Allow certain IAM roles to manage keys.

- Enforce the presence of MFA for management actions such as changing the key policy or deleting keys.

- Allow certain IAM roles or users to use the keys for cryptographic operations such as encryption and decryption.

- Restrict which AWS services are allowed to use the key. For example, we can restrict a key so that application ABC can use it only to decrypt S3 objects; the application would not be able to use it to perform decryption actions on DynamoDB.
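Two of these restrictions can be written as key policy statements. The sketch builds them as Python dicts, assuming the global condition key `aws:MultiFactorAuthPresent` and the KMS condition key `kms:ViaService`; the function name, Region, and role names are hypothetical (the role names mirror the example key policy later in this section).

```python
def guardrail_statements(account_id: str, admin_role: str, app_role: str,
                         region: str) -> list:
    """Key policy statements: MFA for destructive admin actions, S3-only use for an app."""
    admin_arn = f"arn:aws:iam::{account_id}:role/{admin_role}"
    app_arn = f"arn:aws:iam::{account_id}:role/{app_role}"
    return [
        {
            "Sid": "DenyKeyManagementWithoutMFA",
            "Effect": "Deny",
            "Principal": {"AWS": admin_arn},
            "Action": ["kms:PutKeyPolicy", "kms:ScheduleKeyDeletion", "kms:DisableKey"],
            "Resource": "*",  # in a key policy, "*" means this key
            # BoolIfExists also denies requests that carry no MFA context at all.
            "Condition": {"BoolIfExists": {"aws:MultiFactorAuthPresent": "false"}},
        },
        {
            "Sid": "AllowAppDecryptOnlyThroughS3",
            "Effect": "Allow",
            "Principal": {"AWS": app_arn},
            "Action": ["kms:Decrypt", "kms:GenerateDataKey"],
            "Resource": "*",
            # The permission applies only to requests S3 makes on the app's behalf.
            "Condition": {"StringEquals": {"kms:ViaService": f"s3.{region}.amazonaws.com"}},
        },
    ]
```

These statements would be appended to the key policy's `Statement` list (e.g. serialized with `json.dumps`). The S3-only restriction holds only if no other statement or IAM policy grants the same role broader use of the key.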

The Default Key Policy

When a key is created, the default key policy will allow the following:

- Ability for IAM policies in the account to grant principals permissions on the key.

- Ability for select IAM principals to manage a key.

- Ability for select IAM principals to use the key for cryptographic operations.

- Ability for certain IAM principals to grant permissions to AWS services to use the key.

In most cases, the default key policy is sufficient with slight modifications depending on the need for other IAM users or accounts to be able to make use of the keys.

2. Least Privilege IAM Policies

When using IAM policies to allow access to KMS keys, continue to apply the principle of least privilege: the policy should grant only the specific actions required, on a specific set of key resources.
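A small generator can make least privilege the default by refusing wildcards. This is a hypothetical helper, not an AWS API; it only builds the policy document, and it requires key ARNs because alias ARNs do not identify a key for authorization.

```python
def kms_use_policy(key_arns: list, actions: tuple = ("kms:Decrypt",)) -> dict:
    """Identity policy granting only `actions` on explicitly listed KMS key ARNs."""
    if not key_arns or any(not (arn.startswith("arn:") and ":key/" in arn)
                           for arn in key_arns):
        raise ValueError("list explicit KMS key ARNs, not wildcards or aliases")
    if any("*" in action for action in actions):
        raise ValueError("list explicit actions, not wildcards")
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "UseSpecificKeysOnly",
            "Effect": "Allow",
            "Action": list(actions),
            "Resource": list(key_arns),
        }],
    }
```

A role that only reads encrypted data might get `kms_use_policy([key_arn])`, while a writer would add `"kms:GenerateDataKey"` to `actions`.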

3. Monitoring and Auditing

Audit and Monitor Calls to KMS and Events Related to Customer Master Keys

Using monitoring services such as CloudTrail and CloudWatch, you can be made aware of management and usage events generated by the KMS service. Examples include being notified of a key's scheduled deletion or auditing key usage.
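A notification for scheduled deletions can be built from an EventBridge (formerly CloudWatch Events) rule that matches CloudTrail-recorded KMS calls. The sketch assumes `boto3`'s EventBridge `put_rule` and `put_targets` calls, a CloudTrail trail recording KMS management events in the Region, and an SNS topic whose access policy lets EventBridge publish; the rule name, target ID, and chosen event names are hypothetical.

```python
import json

# Matches KMS management calls as delivered to EventBridge by CloudTrail.
KMS_ALERT_PATTERN = {
    "source": ["aws.kms"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["kms.amazonaws.com"],
        "eventName": ["ScheduleKeyDeletion", "DisableKey", "PutKeyPolicy"],
    },
}


def create_kms_alert_rule(events_client, sns_topic_arn: str,
                          rule_name: str = "kms-key-changes") -> None:
    """Route matching KMS events to an SNS topic for notification."""
    events_client.put_rule(Name=rule_name,
                           EventPattern=json.dumps(KMS_ALERT_PATTERN),
                           State="ENABLED")
    events_client.put_targets(Rule=rule_name,
                              Targets=[{"Id": "notify-security", "Arn": sns_topic_arn}])
```

Because a scheduled deletion has a waiting period of at least seven days, an alert like this leaves time to call `CancelKeyDeletion` if the request was unexpected.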

4. Enforcing Encryption

In some cases it will be required to have safeguards in place to prevent a situation where data can be stored without encryption.

Here are a few suggestions to ensure that this does not happen:

IAM Policies

Configure user or role IAM policies that contain policy conditions that will only allow the creation of a resource if it is set to use encryption.
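For EBS, such a condition can use the `ec2:Encrypted` condition key, so that `ec2:CreateVolume` is allowed only when the request creates an encrypted volume. The sketch builds the document in Python and assumes `boto3`'s IAM `create_policy` call; the statement ID and policy name are hypothetical.

```python
import json

# Allow CreateVolume only when the request asks for an encrypted volume.
REQUIRE_ENCRYPTED_VOLUMES = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "CreateEncryptedVolumesOnly",
            "Effect": "Allow",
            "Action": "ec2:CreateVolume",
            "Resource": "arn:aws:ec2:*:*:volume/*",
            "Condition": {"Bool": {"ec2:Encrypted": "true"}},
        }
    ],
}


def create_guardrail_policy(iam_client, name: str = "create-encrypted-volumes-only") -> str:
    """Create the managed policy and return its ARN (attach it to users or roles separately)."""
    response = iam_client.create_policy(PolicyName=name,
                                        PolicyDocument=json.dumps(REQUIRE_ENCRYPTED_VOLUMES))
    return response["Policy"]["Arn"]
```

This only constrains principals whose other policies do not already allow `ec2:CreateVolume` unconditionally, since IAM permissions are additive.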

Resource Policies

Using resource policies, for example in S3, S3 buckets can be set up with bucket policies only allowing write of objects with server-side encryption enabled.
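A bucket policy of this kind denies `s3:PutObject` unless the request asks for SSE-KMS. The sketch assumes the S3 condition key `s3:x-amz-server-side-encryption`; the function name and bucket are hypothetical. Negated operators such as `StringNotEquals` evaluate to true when the key is absent, so uploads that omit the encryption header are denied as well.

```python
import json


def require_sse_kms_bucket_policy(bucket: str) -> str:
    """Bucket policy JSON that rejects uploads not requesting SSE-KMS."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUploadsWithoutSseKms",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                # Also matches requests that omit the header entirely.
                "Condition": {"StringNotEquals": {"s3:x-amz-server-side-encryption": "aws:kms"}},
            }
        ],
    }
    return json.dumps(policy)
```

It would be applied with `s3_client.put_bucket_policy(Bucket=bucket, Policy=require_sse_kms_bucket_policy(bucket))`. Note that clients relying on bucket default encryption, without sending the header, will be rejected by this policy.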

Real-Time Monitoring and Remediation

With real-time monitoring, Lambda functions or AWS Config can immediately identify resources that have been created without the mandated encryption settings.

For example, use Lambda to detect the resource and SNS to send a notification.

Take this a step further and trigger automation to remove, disable, or update encryption settings on those resources.
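The Lambda-plus-SNS pattern might look like the sketch below for S3, assuming the function is triggered by an EventBridge rule on CloudTrail `CreateBucket` events. It uses `boto3`'s `get_bucket_encryption`, `put_bucket_encryption`, and SNS `publish` calls; the environment variable names `REQUIRED_KMS_KEY_ID` and `ALERT_TOPIC_ARN` and the mandate of SSE-KMS are hypothetical choices.

```python
import os

REQUIRED_ALGORITHM = "aws:kms"


def bucket_uses_kms(s3_client, bucket: str) -> bool:
    """True if any of the bucket's default encryption rules uses SSE-KMS."""
    try:
        config = s3_client.get_bucket_encryption(Bucket=bucket)
    except Exception as err:  # botocore ClientError when real boto3 is used
        code = getattr(err, "response", {}).get("Error", {}).get("Code")
        if code == "ServerSideEncryptionConfigurationNotFoundError":
            return False
        raise
    rules = config["ServerSideEncryptionConfiguration"]["Rules"]
    return any(rule.get("ApplyServerSideEncryptionByDefault", {}).get("SSEAlgorithm")
               == REQUIRED_ALGORITHM for rule in rules)


def remediate_bucket(s3_client, sns_client, bucket: str, kms_key_id: str,
                     topic_arn: str) -> bool:
    """Apply SSE-KMS default encryption if missing; notify and return True if changed."""
    if bucket_uses_kms(s3_client, bucket):
        return False
    s3_client.put_bucket_encryption(
        Bucket=bucket,
        ServerSideEncryptionConfiguration={"Rules": [{"ApplyServerSideEncryptionByDefault": {
            "SSEAlgorithm": REQUIRED_ALGORITHM, "KMSMasterKeyID": kms_key_id}}]},
    )
    sns_client.publish(TopicArn=topic_arn, Subject="S3 encryption remediated",
                       Message=f"Bucket {bucket} lacked SSE-KMS default encryption; it was applied.")
    return True


def handler(event, context):
    """Lambda entry point for a CloudTrail CreateBucket event delivered by EventBridge."""
    import boto3  # imported here so the logic above can be tested without AWS

    bucket = event["detail"]["requestParameters"]["bucketName"]
    changed = remediate_bucket(boto3.client("s3"), boto3.client("sns"), bucket,
                               os.environ["REQUIRED_KMS_KEY_ID"],
                               os.environ["ALERT_TOPIC_ARN"])
    return {"bucket": bucket, "remediated": changed}
```

The function's execution role would need `s3:GetEncryptionConfiguration`, `s3:PutEncryptionConfiguration`, and `sns:Publish`. An AWS Config managed rule could replace the detection half of this code.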


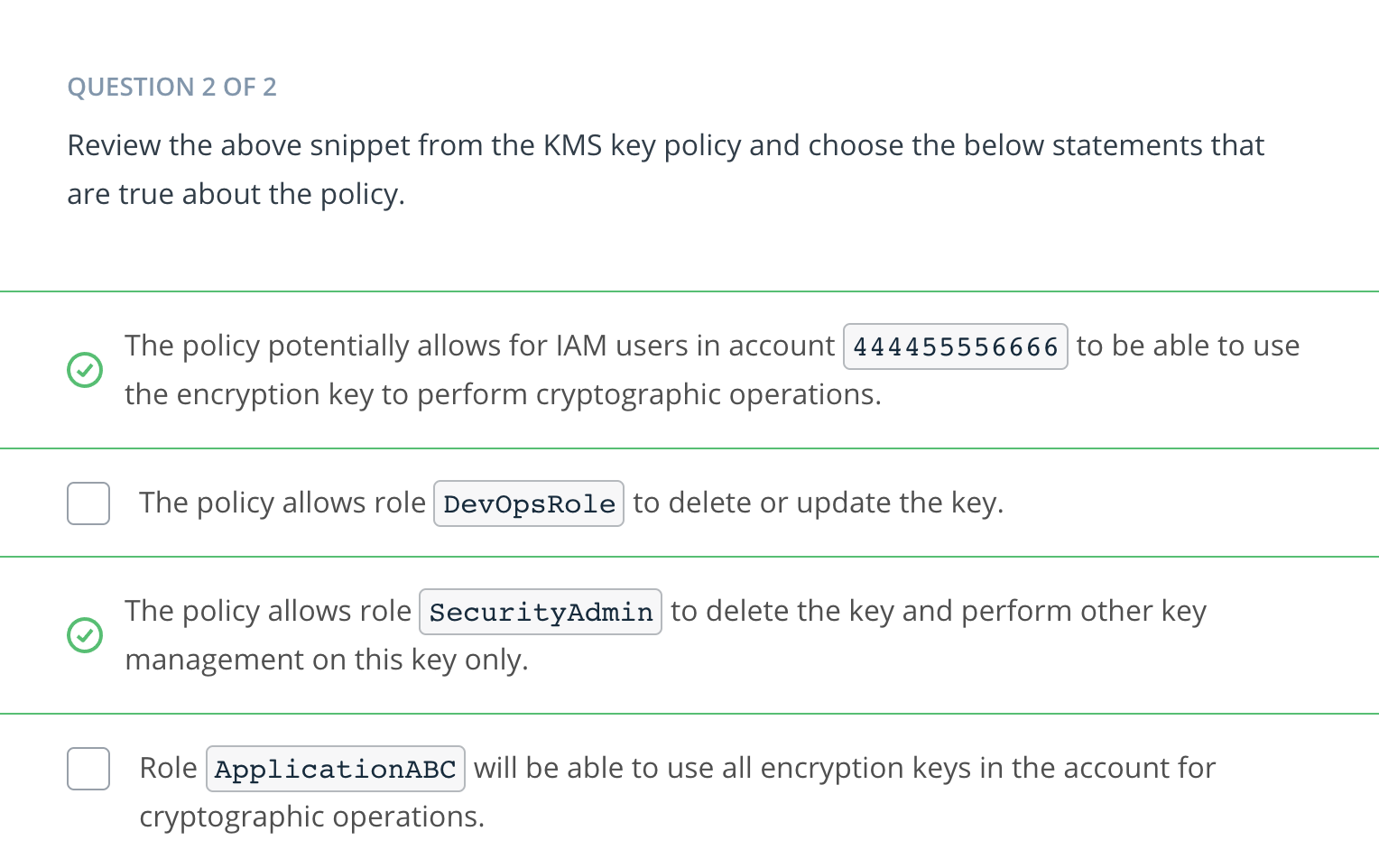

Example

A KMS customer master key has been created in account 111122223333 with the following key policy:

```json
{
  "Version": "2012-10-17",
  "Id": "key-consolepolicy-2",
  "Statement": [
    {
      "Sid": "Enable IAM User Permissions",
      "Effect": "Allow",
      "Principal": {"AWS": "arn:aws:iam::111122223333:root"},
      "Action": "kms:*",
      "Resource": "*"
    },
    {
      "Sid": "Allow access for Key Administrators",
      "Effect": "Allow",
      "Principal": {"AWS": [
        "arn:aws:iam::111122223333:role/SecurityAdmin"
      ]},
      "Action": [
        "kms:Create*",
        "kms:Describe*",
        "kms:Enable*",
        "kms:List*",
        "kms:Put*",
        "kms:Update*",
        "kms:Revoke*",
        "kms:Disable*",
        "kms:Get*",
        "kms:Delete*",
        "kms:TagResource",
        "kms:UntagResource",
        "kms:ScheduleKeyDeletion",
        "kms:CancelKeyDeletion"
      ],
      "Resource": "*"
    },
    {
      "Sid": "Allow use of the key",
      "Effect": "Allow",
      "Principal": {"AWS": [
        "arn:aws:iam::111122223333:role/ApplicationABC",
        "arn:aws:iam::111122223333:role/DevOpsRole",
        "arn:aws:iam::444455556666:root"
      ]},
      "Action": [
        "kms:Encrypt",
        "kms:Decrypt",
        "kms:ReEncrypt*",
        "kms:GenerateDataKey*",
        "kms:DescribeKey"
      ],
      "Resource": "*"
    },
    {
      "Sid": "Allow attachment of persistent resources",
      "Effect": "Allow",
      "Principal": {"AWS": [
        "arn:aws:iam::111122223333:role/ApplicationABC",
        "arn:aws:iam::111122223333:role/DevOpsRole",
        "arn:aws:iam::444455556666:root"
      ]},
      "Action": [
        "kms:CreateGrant",
        "kms:ListGrants",
        "kms:RevokeGrant"
      ],
      "Resource": "*",
      "Condition": {"Bool": {"kms:GrantIsForAWSResource": "true"}}
    }
  ]
}
```

Best Practices for Securing S3

The importance of configuring S3 buckets securely cannot be overstated! Many of the most widely reported cloud data breaches in recent years involved private data leaked from misconfigured S3 buckets.

Some best practices for Securing S3 include:

- Use Object Versioning: This makes it harder for attackers to permanently corrupt or delete data, because previous versions of each object are retained.

- Block Public Access: This lessens the attack surface.

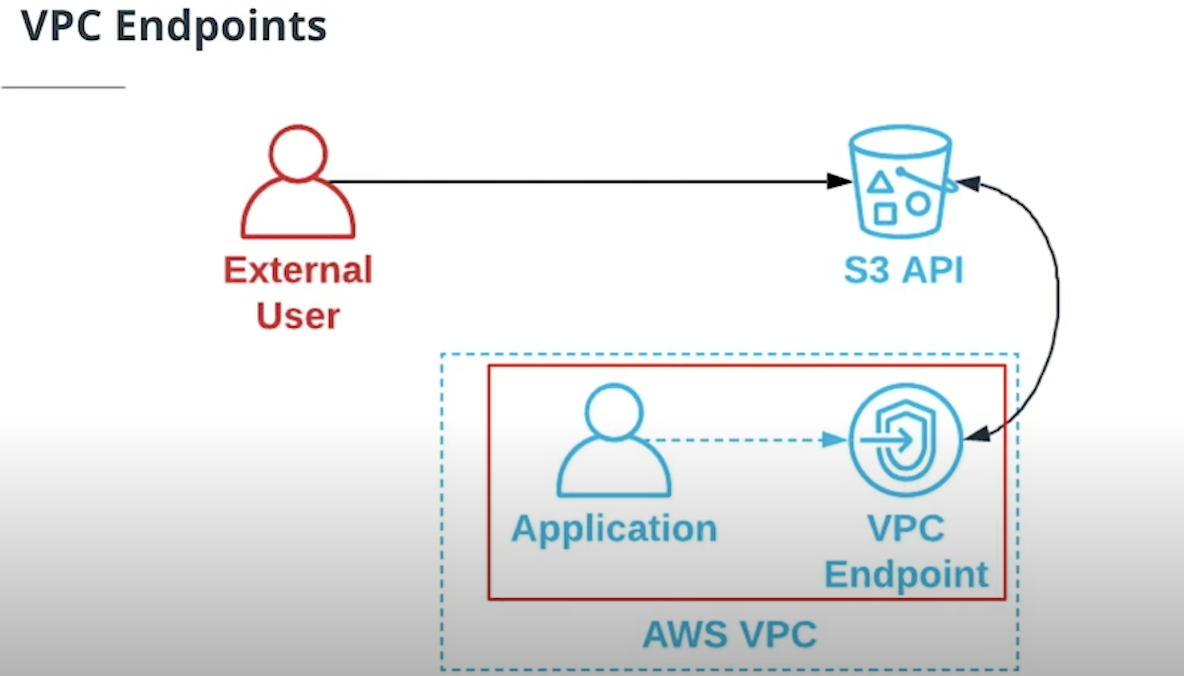

- Use VPC Endpoints: Combined with a bucket policy, this allows you to block requests that do not come through your VPC endpoint.

- Create S3 Bucket Policies: Use policies to restrict and control access.
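The practices above can be applied together in one routine. The sketch assumes `boto3`'s `put_bucket_versioning`, `put_public_access_block`, and `put_bucket_policy` calls and the global condition key `aws:SourceVpce`; the helper name and endpoint ID are hypothetical.

```python
import json


def harden_bucket(s3_client, bucket: str, vpce_id: str) -> dict:
    """Apply versioning, Block Public Access, and a VPC-endpoint-only bucket policy."""
    s3_client.put_bucket_versioning(
        Bucket=bucket, VersioningConfiguration={"Status": "Enabled"})
    s3_client.put_public_access_block(
        Bucket=bucket,
        PublicAccessBlockConfiguration={
            "BlockPublicAcls": True,
            "IgnorePublicAcls": True,
            "BlockPublicPolicy": True,
            "RestrictPublicBuckets": True,
        },
    )
    policy = {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "DenyRequestsOutsideVpcEndpoint",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:*",
            "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
            # Any request that does not arrive through this endpoint is denied.
            "Condition": {"StringNotEquals": {"aws:SourceVpce": vpce_id}},
        }],
    }
    s3_client.put_bucket_policy(Bucket=bucket, Policy=json.dumps(policy))
    return policy
```

Test a policy like this carefully before applying it: it also blocks console access and administrators working from outside the VPC.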