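With the pandemic going on, checking whether people are wearing masks has become a fairly popular task, so I put together a program that detects whether a face is wearing a mask. The program uses the laptop's webcam and checks in real time whether each detected face has a mask on.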

I. Dataset

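If you already have a dataset, use your own. If not, you can ask me for mine (3000+ images with masks / 1000+ without). I won't share it publicly here, since I got it from someone else as well.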

II. The code

1. Import Keras

```python
import keras
print(keras.__version__)
```

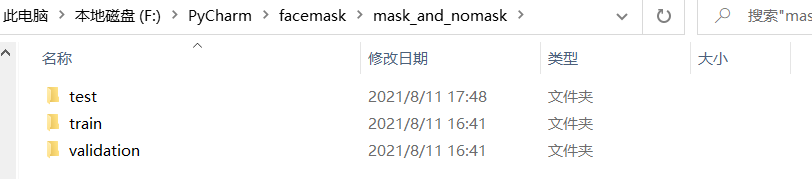

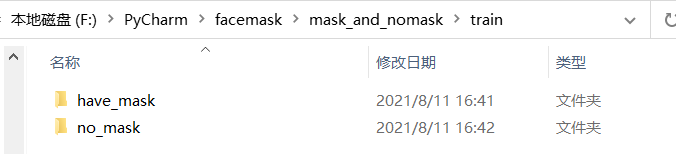

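2. Create the train, validation and test folders, each containing a have_mask and a no_mask subfolder. After running the script, put the photos from the dataset into the corresponding folders.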

```python
import os, shutil

# The path to the directory where the original
# dataset was uncompressed. Raw strings (r'...') keep the Windows
# backslashes literal; in a normal string '\f' would become a form feed.
original_dataset_dir = r'F:\PyCharm\facemask\mask'

# The directory where we will
# store our smaller dataset
base_dir = r'F:\PyCharm\facemask\mask_and_nomask'
os.mkdir(base_dir)

# Directories for our training,
# validation and test splits
train_dir = os.path.join(base_dir, 'train')
os.mkdir(train_dir)
validation_dir = os.path.join(base_dir, 'validation')
os.mkdir(validation_dir)
test_dir = os.path.join(base_dir, 'test')
os.mkdir(test_dir)

# Note: the *_smile_dir / *_nosmile_dir names are left over from a
# smile-classifier template; they hold the have_mask / no_mask images.

# Directory with our training pictures with masks
train_smile_dir = os.path.join(train_dir, 'have_mask')
os.mkdir(train_smile_dir)

# Directory with our training pictures without masks
train_nosmile_dir = os.path.join(train_dir, 'no_mask')
os.mkdir(train_nosmile_dir)

# Directory with our validation pictures with masks
validation_smile_dir = os.path.join(validation_dir, 'have_mask')
os.mkdir(validation_smile_dir)

# Directory with our validation pictures without masks
validation_nosmile_dir = os.path.join(validation_dir, 'no_mask')
os.mkdir(validation_nosmile_dir)

# Directory with our test pictures with masks
test_smile_dir = os.path.join(test_dir, 'have_mask')
os.mkdir(test_smile_dir)

# Directory with our test pictures without masks
test_nosmile_dir = os.path.join(test_dir, 'no_mask')
os.mkdir(test_nosmile_dir)
```
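The script above only creates empty folders; the images still have to be moved in by hand. A minimal sketch that does the copy step instead, assuming (this is not from the original) that `original_dataset_dir` contains two subfolders named `have_mask` and `no_mask` holding only image files, and using a hypothetical 70/15/15 train/validation/test split with a fixed random seed:

```python
import os
import random
import shutil


def split_dataset(src_dir, dst_dir, classes=("have_mask", "no_mask"),
                  percents=(70, 15), seed=0):
    """Copy src_dir/<class>/* into dst_dir/{train,validation,test}/<class>/.

    percents gives the train and validation shares (integer percent);
    the remaining images go to test. Returns {(split, class): n_copied}.
    """
    rng = random.Random(seed)
    counts = {}
    for cls in classes:
        fnames = sorted(os.listdir(os.path.join(src_dir, cls)))
        rng.shuffle(fnames)  # reproducible shuffle thanks to the fixed seed
        n_train = len(fnames) * percents[0] // 100
        n_val = len(fnames) * percents[1] // 100
        splits = {
            "train": fnames[:n_train],
            "validation": fnames[n_train:n_train + n_val],
            "test": fnames[n_train + n_val:],
        }
        for split, names in splits.items():
            out_dir = os.path.join(dst_dir, split, cls)
            os.makedirs(out_dir, exist_ok=True)  # tolerate folders that already exist
            for name in names:
                shutil.copyfile(os.path.join(src_dir, cls, name),
                                os.path.join(out_dir, name))
            counts[(split, cls)] = len(names)
    return counts
```

Usage would be `print(split_dataset(original_dataset_dir, base_dir))`. Shuffling before splitting keeps, for example, all photos of one session from landing in the same split; integer arithmetic avoids floating-point rounding in the split sizes.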

3. Count the photos in each folder

```python
print('total training have_mask images:', len(os.listdir(train_smile_dir)))
print('total training no_mask images:', len(os.listdir(train_nosmile_dir)))
print('total validation have_mask images:', len(os.listdir(validation_smile_dir)))
print('total validation no_mask images:', len(os.listdir(validation_nosmile_dir)))
print('total test have_mask images:', len(os.listdir(test_smile_dir)))
print('total test no_mask images:', len(os.listdir(test_nosmile_dir)))
```

4. Build a small convnet

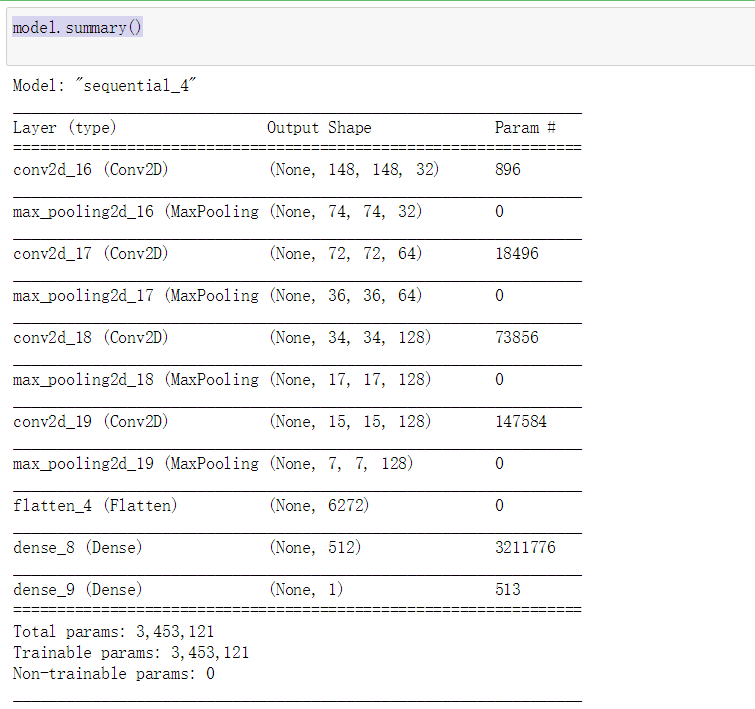

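If you have built a small convnet for MNIST before, this will look familiar. We reuse the same general structure: the convnet is a stack of alternating Conv2D (with relu activation) and MaxPooling2D layers. However, because we are dealing with bigger images and a more complex problem, we make the network correspondingly larger: it has one more Conv2D + MaxPooling2D stage. This both increases the capacity of the network and further reduces the size of the feature maps, so that they are not too large by the time we reach the Flatten layer. Since we start from inputs of size 150x150 (a somewhat arbitrary choice), we end up with 7x7 feature maps just before the Flatten layer.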
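The 148x148 to 7x7 figures can be checked by hand: a 3x3 Conv2D with Keras's default `padding='valid'` shrinks each side by 2, and a 2x2 MaxPooling2D with its default stride halves it, rounding down. A small sketch of that arithmetic (the function name is just for illustration):

```python
def feature_map_sizes(input_size=150, n_blocks=4, kernel=3, pool=2):
    """Spatial side length after each Conv2D ('valid' padding) and MaxPooling2D."""
    sizes = []
    size = input_size
    for _ in range(n_blocks):
        size = size - kernel + 1   # 'valid' 3x3 conv: 150 -> 148
        sizes.append(size)
        size = size // pool        # 2x2 pooling, stride 2, floor: 148 -> 74
        sizes.append(size)
    return sizes


print(feature_map_sizes())  # [148, 74, 72, 36, 34, 17, 15, 7]
```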

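Note: the depth of the feature maps progressively increases through the network (from 32 to 128), while their size decreases (from 148x148 to 7x7). This is a pattern you will see in almost all convnets. Since this is a binary classification problem, we end the network with a single unit (a Dense layer of size 1) and a sigmoid activation. This unit encodes the probability that the network is looking at one class rather than the other.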
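Which class the sigmoid output stands for depends on the label encoding. `flow_from_directory` numbers the class subfolders in sorted order, so with `have_mask` and `no_mask` the output is the estimated probability of `no_mask`. A plain-Python sketch of that mapping together with a 0.5 threshold (the threshold is a conventional choice, not something the model fixes; the helper names are illustrative):

```python
import math


def sigmoid(z):
    """Logistic function applied by the final Dense(1, activation='sigmoid') layer."""
    return 1.0 / (1.0 + math.exp(-z))


# Mirrors flow_from_directory: class subfolders sorted, then numbered from 0
class_indices = {name: i for i, name in enumerate(sorted(["no_mask", "have_mask"]))}


def label_from_output(p, threshold=0.5):
    """Turn the sigmoid output p = P(class 1) into a class name."""
    index_to_name = {i: name for name, i in class_indices.items()}
    return index_to_name[1] if p > threshold else index_to_name[0]


print(class_indices)                    # {'have_mask': 0, 'no_mask': 1}
print(sigmoid(0.0))                     # 0.5
print(label_from_output(sigmoid(3.0)))  # no_mask
```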

```python
from keras import layers
from keras import models

model = models.Sequential()
model.add(layers.Conv2D(32, (3, 3), activation='relu',
                        input_shape=(150, 150, 3)))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Flatten())
model.add(layers.Dense(512, activation='relu'))
model.add(layers.Dense(1, activation='sigmoid'))
```

5. Print the parameters of each layer of the model

```python
model.summary()
```
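The parameter counts in the summary can be reproduced by hand: a Conv2D layer has (kernel_h × kernel_w × input_channels + 1) × filters weights (the +1 is the bias), a Dense layer has (inputs + 1) × units, and MaxPooling2D and Flatten have none. A sketch for the network above:

```python
def conv_params(k, c_in, filters):
    """Weights of a k x k Conv2D layer, including one bias per filter."""
    return (k * k * c_in + 1) * filters


def dense_params(n_in, units):
    """Weights of a Dense layer, including one bias per unit."""
    return (n_in + 1) * units


layer_params = [
    conv_params(3, 3, 32),           # conv 1: 896
    conv_params(3, 32, 64),          # conv 2: 18,496
    conv_params(3, 64, 128),         # conv 3: 73,856
    conv_params(3, 128, 128),        # conv 4: 147,584
    dense_params(7 * 7 * 128, 512),  # Flatten yields 6272 inputs: 3,211,776
    dense_params(512, 1),            # sigmoid output unit: 513
]
print(sum(layer_params))  # 3453121
```

Almost all of the weights sit in the first Dense layer, which is why shrinking the feature maps before Flatten matters.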

6. Specify the optimizer, loss function and accuracy metric used for training

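```python
from keras import optimizers

# Very old Keras versions spell this argument lr=1e-4;
# learning_rate works in Keras 2.3+ and tf.keras
model.compile(loss='binary_crossentropy',
              optimizer=optimizers.RMSprop(learning_rate=1e-4),
              metrics=['acc'])
```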
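For reference, `binary_crossentropy` averages −[y·log(p) + (1 − y)·log(1 − p)] over the batch, and the `acc` metric for a single sigmoid output counts a prediction as correct when p thresholded at 0.5 matches the label. A NumPy sketch of both; Keras also clips p away from 0 and 1, and the epsilon of `1e-7` used here is an assumption about its default:

```python
import numpy as np


def binary_crossentropy(y_true, p, eps=1e-7):
    """Mean binary cross-entropy; clipping avoids log(0)."""
    p = np.clip(p, eps, 1 - eps)
    return float(np.mean(-(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))))


def binary_accuracy(y_true, p, threshold=0.5):
    """Fraction of predictions on the correct side of the threshold."""
    return float(np.mean((p > threshold).astype(float) == y_true))


y = np.array([0.0, 1.0, 1.0, 0.0])
p = np.array([0.1, 0.8, 0.4, 0.3])
print(binary_crossentropy(y, p))
print(binary_accuracy(y, p))  # 0.75
```

The third sample (label 1, p = 0.4) is the only misclassified one, and it also contributes the largest loss term.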

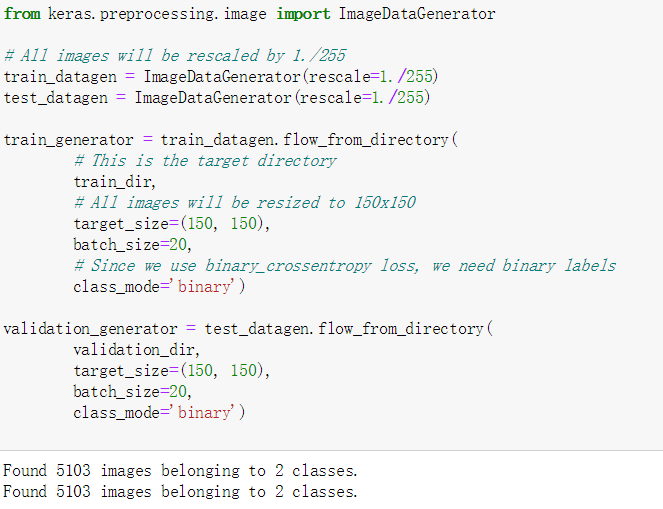

7. Data preprocessing

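Before the data is fed into the network, it should be formatted into appropriately preprocessed floating-point tensors. Currently the data sits on disk as JPEG files, so the steps for getting it into the network are roughly: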

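- Read the picture files

- Decode the JPEG content into RGB grids of pixels

- Convert these into floating-point tensors

- Rescale the pixel values (from 0 to 255) to the [0, 1] interval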
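`ImageDataGenerator(rescale=1./255)` together with `flow_from_directory` performs these steps internally. The last two steps amount to the NumPy sketch below, where a random uint8 array stands in for a decoded 150x150 RGB image (JPEG decoding itself is left to the library):

```python
import numpy as np

# Stand-in for a decoded JPEG: height x width x RGB, 8-bit integers
rng = np.random.default_rng(0)
pixels = rng.integers(0, 256, size=(150, 150, 3), dtype=np.uint8)

# Convert to a floating-point tensor and scale 0..255 into [0, 1]
tensor = pixels.astype(np.float32) / 255.0

# The network expects a batch axis: (batch, height, width, channels)
batch = tensor[np.newaxis, ...]
print(batch.shape, batch.dtype)                  # (1, 150, 150, 3) float32
print(tensor.min() >= 0.0, tensor.max() <= 1.0)  # True True
```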

```python
from keras.preprocessing.image import ImageDataGenerator

# All images will be rescaled by 1./255
train_datagen = ImageDataGenerator(rescale=1./255)
test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(
    # This is the target directory
    train_dir,
    # All images will be resized to 150x150
    target_size=(150, 150),
    batch_size=20,
    # Since we use binary_crossentropy loss, we need binary labels
    class_mode='binary')

validation_generator = test_datagen.flow_from_directory(
    validation_dir,
    target_size=(150, 150),
    batch_size=20,
    class_mode='binary')
```

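Let's look at the output of one of these generators: it yields batches of 150x150 RGB images (shape (20, 150, 150, 3)) and binary labels (shape (20,)). 20 is the number of samples in each batch (the batch size). Note that the generator yields these batches indefinitely: it loops endlessly over the images in the target folder. For this reason, we need to break the iteration loop at some point.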

```python
for data_batch, labels_batch in train_generator:
    print('data batch shape:', data_batch.shape)
    print('labels batch shape:', labels_batch.shape)
    break
```
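Why the `break` is needed: the directory iterator never stops on its own; after its last batch it reshuffles and starts another pass. A simplified pure-Python sketch of that behaviour (an illustration, not the Keras source; `endless_batches` is a hypothetical name):

```python
import itertools
import random


def endless_batches(items, batch_size, seed=0):
    """Yield batches forever, reshuffling after each full pass."""
    rng = random.Random(seed)
    items = list(items)
    while True:
        rng.shuffle(items)
        for start in range(0, len(items), batch_size):
            yield items[start:start + batch_size]  # last batch of a pass may be smaller


# Take 4 batches from a 5-item "dataset" with batch_size=2;
# without islice (or a break) this loop would never end.
for batch in itertools.islice(endless_batches(range(5), 2), 4):
    print(len(batch))  # 2, 2, 1, 2
```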

Fit the model to the data using the generator:

```python
# In TensorFlow 2.x, fit_generator is deprecated:
# pass the same arguments to model.fit instead
history = model.fit_generator(
    train_generator,
    steps_per_epoch=100,
    epochs=50,
    validation_data=validation_generator,
    validation_steps=50)
```
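`steps_per_epoch` counts batches, not images: with `batch_size=20`, `steps_per_epoch=100` draws 100 × 20 = 2000 training images per epoch, and `validation_steps=50` draws 1000 validation images, wrapping around the folder if it holds fewer. If you want one epoch to be exactly one pass over your own split, one option is to derive the step counts from the image counts that `flow_from_directory` prints ("Found N images belonging to 2 classes."). The counts below are hypothetical placeholders, not the author's split:

```python
import math


def steps_for_one_pass(n_images, batch_size):
    """Batches needed to see every image once (the last batch may be partial)."""
    return math.ceil(n_images / batch_size)


batch_size = 20
n_train, n_val = 2800, 600  # hypothetical split sizes; replace with your own counts
print(steps_for_one_pass(n_train, batch_size))  # 140
print(steps_for_one_pass(n_val, batch_size))    # 30
```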

Save the model:

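```python
# Raw string: otherwise the backslash sequences \f and \t in this
# Windows path would be read as escape characters
model.save(r'F:\PyCharm\facemask\mask_and_nomask\test\mask_and_nomask.h5')
```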

Plot the model's loss and accuracy on the training and validation data:

```python
import matplotlib.pyplot as plt

acc = history.history['acc']
val_acc = history.history['val_acc']
loss = history.history['loss']
val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')
plt.plot(epochs, val_acc, 'b', label='Validation acc')
plt.title('Training and validation accuracy')
plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')
plt.plot(epochs, val_loss, 'b', label='Validation loss')
plt.title('Training and validation loss')
plt.legend()
plt.show()
```

8. Data augmentation

```python
datagen = ImageDataGenerator(
    rotation_range=40,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
    fill_mode='nearest')
```
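To make two of these options concrete: `horizontal_flip=True` mirrors an image left to right (on roughly half of the images), and `width_shift_range=0.2` moves it sideways by up to 20% of its width, with `fill_mode='nearest'` filling the uncovered strip by repeating the nearest edge pixels. A NumPy sketch of just these two transforms on a (height, width, channels) array; it illustrates the effect and is not how Keras implements them internally:

```python
import numpy as np


def horizontal_flip(img):
    """Mirror along the width axis."""
    return img[:, ::-1, :]


def shift_right_nearest(img, dx):
    """Shift right by dx pixels; the exposed left columns repeat the edge column."""
    if dx == 0:
        return img.copy()
    padded = np.pad(img, ((0, 0), (dx, 0), (0, 0)), mode="edge")
    return padded[:, :img.shape[1], :]


img = np.arange(5).reshape(1, 5, 1)          # one row of pixels 0..4
print(horizontal_flip(img)[0, :, 0])         # [4 3 2 1 0]
print(shift_right_nearest(img, 2)[0, :, 0])  # [0 0 0 1 2]
```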

View the augmented images:

```python
# This module contains image preprocessing utilities
from keras.preprocessing import image

fnames = [os.path.join(train_smile_dir, fname) for fname in os.listdir(train_smile_dir)]

# We pick one image to "augment"
img_path = fnames[3]

# Read the image and resize it
img = image.load_img(img_path, target_size=(150, 150))

# Convert it to a Numpy array with shape (150, 150, 3)
x = image.img_to_array(img)

# Reshape it to (1, 150, 150, 3)
x = x.reshape((1,) + x.shape)

# The .flow() command below generates batches of randomly transformed images.
# It will loop indefinitely, so we need to `break` the loop at some point!
i = 0
for batch in datagen.flow(x, batch_size=1):
    plt.figure(i)
    imgplot = plt.imshow(image.array_to_img(batch[0]))
    i += 1
    if i % 4 == 0:
        break

plt.show()
```

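If we train a new network with this data-augmentation configuration, the network will never see the same input twice. However, the inputs it sees are still heavily correlated, because they come from a small number of original images: we cannot produce new information, we can only remix existing information. As a result, this may not be enough to get rid of overfitting completely.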

To further fight overfitting, we also add a Dropout layer to the model, right before the densely connected classifier:

```python
model = models.Sequential()
model.add(layers.Conv2D(32, (3, 3), activation='relu',
                        input_shape=(150, 150, 3)))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Flatten())
model.add(layers.Dropout(0.5))
model.add(layers.Dense(512, activation='relu'))
model.add(layers.Dense(1, activation='sigmoid'))

# learning_rate replaces the older lr argument
model.compile(loss='binary_crossentropy',
              optimizer=optimizers.RMSprop(learning_rate=1e-4),
              metrics=['acc'])
```
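`Dropout(0.5)` randomly zeros half of the Flatten outputs at each training step and scales the surviving values by 1 / (1 − 0.5) = 2, so the expected activation stays the same; at inference time the layer passes its input through unchanged. A NumPy sketch of this "inverted dropout" scheme (the function is illustrative, not the Keras layer):

```python
import numpy as np


def dropout(x, rate, training, rng):
    """Inverted dropout: zero a fraction `rate` of units in training, rescale the rest."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate  # True for units that survive
    return np.where(keep, x / (1.0 - rate), 0.0)


rng = np.random.default_rng(42)
x = np.ones(10)
print(dropout(x, 0.5, training=True, rng=rng))   # each entry is 0.0 or 2.0
print(dropout(x, 0.5, training=False, rng=rng))  # unchanged: all ones
```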

Train the network using data augmentation and dropout:

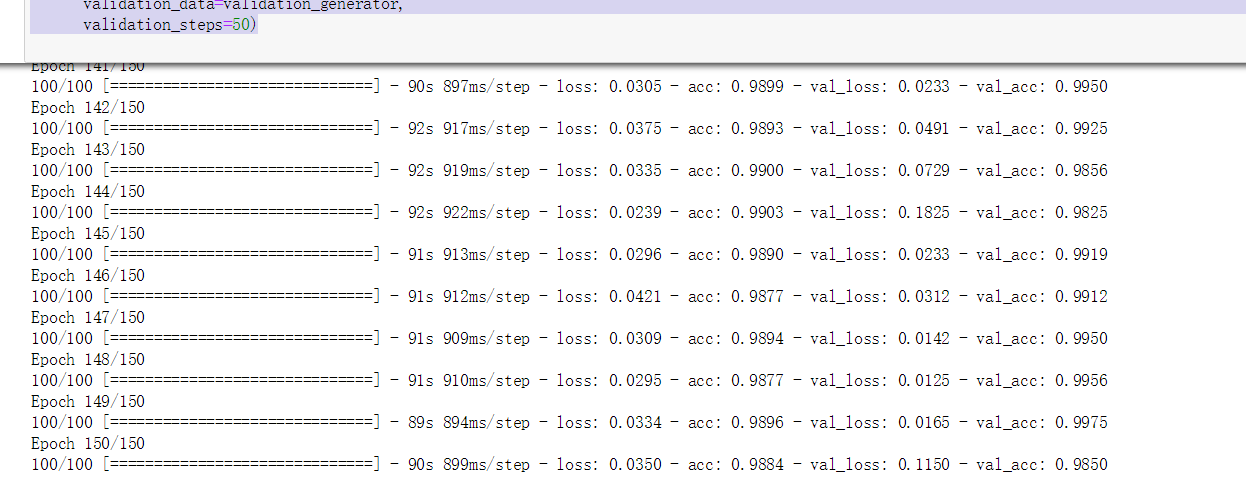

```python
train_datagen = ImageDataGenerator(
    rescale=1./255,
    rotation_range=40,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True)

# Note that the validation data should not be augmented!
test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(
    # This is the target directory
    train_dir,
    # All images will be resized to 150x150
    target_size=(150, 150),
    batch_size=32,
    # Since we use binary_crossentropy loss, we need binary labels
    class_mode='binary')

validation_generator = test_datagen.flow_from_directory(
    validation_dir,
    target_size=(150, 150),
    batch_size=32,
    class_mode='binary')

# In TensorFlow 2.x, use model.fit with the same arguments instead
history = model.fit_generator(
    train_generator,
    steps_per_epoch=100,
    epochs=150,
    validation_data=validation_generator,
    validation_steps=50)
```

This takes a long time to run (it took me several hours); training on a GPU is much, much faster.

Save the model so that the detection script in Part III can load it later:

```python
# Raw string keeps the Windows backslashes literal
model.save(r'F:\PyCharm\facemask\mask_and_nomask\test\mask_and_nomask.h5')
```

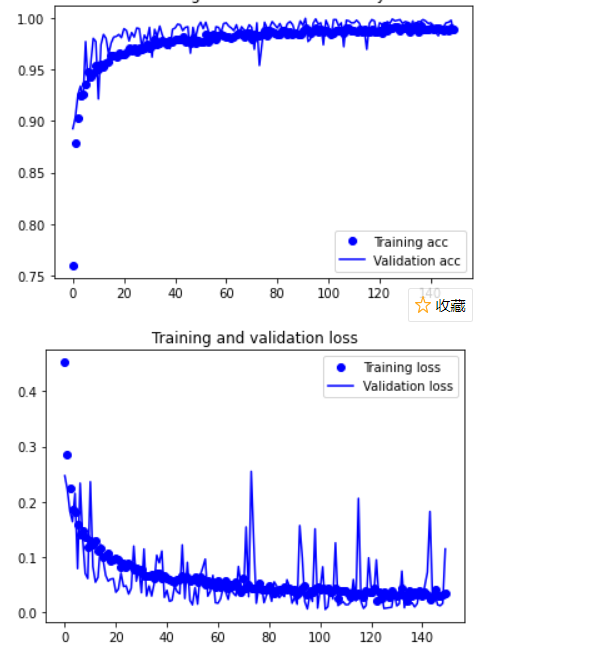

Let's look at the results (pretty good):

```python
acc = history.history['acc']
val_acc = history.history['val_acc']
loss = history.history['loss']
val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')
plt.plot(epochs, val_acc, 'b', label='Validation acc')
plt.title('Training and validation accuracy')
plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')
plt.plot(epochs, val_loss, 'b', label='Validation loss')
plt.title('Training and validation loss')
plt.legend()
plt.show()
```

9. Improving the accuracy of the mask classification model

Build the convnet

```python
from keras import layers
from keras import models
from keras import optimizers

model = models.Sequential()
# Input images are 150x150; the 3 means each pixel is stored as (R, G, B)
model.add(layers.Conv2D(32, (3, 3), activation='relu', input_shape=(150, 150, 3)))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Flatten())
model.add(layers.Dense(512, activation='relu'))
model.add(layers.Dense(1, activation='sigmoid'))
model.compile(loss='binary_crossentropy',
              optimizer=optimizers.RMSprop(learning_rate=1e-4),
              metrics=['acc'])
model.summary()
```

III. Testing the code

```python
import cv2
import dlib
import numpy as np
from keras.models import load_model

# Path to the model saved during training; adjust it if the .h5 file is elsewhere,
# e.g. r'F:\PyCharm\facemask\mask_and_nomask\test\mask_and_nomask.h5'
model = load_model('mask_and_nomask.h5')
detector = dlib.get_frontal_face_detector()
video = cv2.VideoCapture(0)
font = cv2.FONT_HERSHEY_SIMPLEX


def rec(img):
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    dets = detector(gray, 1)  # upsample once so smaller faces are found
    h, w = img.shape[:2]
    for face in dets:
        # dlib boxes can extend past the frame; clamp them so the crop is valid
        left = max(face.left(), 0)
        top = max(face.top(), 0)
        right = min(face.right(), w)
        bottom = min(face.bottom(), h)
        if right <= left or bottom <= top:
            continue
        cv2.rectangle(img, (left, top), (right, bottom), (0, 255, 0), 2)
        # Same preprocessing as in training: 150x150, RGB order, scaled to [0, 1]
        img1 = cv2.resize(img[top:bottom, left:right], dsize=(150, 150))
        img1 = cv2.cvtColor(img1, cv2.COLOR_BGR2RGB)
        img1 = np.array(img1) / 255.
        img_tensor = img1.reshape(-1, 150, 150, 3)
        prediction = model.predict(img_tensor)
        print(prediction)
        # Class indices are alphabetical (have_mask=0, no_mask=1),
        # so the sigmoid output is the probability of "no mask"
        if prediction[0][0] > 0.5:
            result = 'nomask'
        else:
            result = 'mask'
        cv2.putText(img, result, (left, top), font, 2, (0, 255, 0), 2, cv2.LINE_AA)
    cv2.imshow('mask detector', img)


while video.isOpened():
    res, img_rd = video.read()
    if not res:
        break
    rec(img_rd)
    # Press q to quit
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break
video.release()
cv2.destroyAllWindows()
```
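The part of `rec()` that turns a detected face into model input can be checked without a webcam or a trained model. A NumPy-only sketch, assuming a BGR frame as OpenCV returns it; the helper names are hypothetical, and a simple nearest-neighbour resize stands in for `cv2.resize` so the snippet has no OpenCV dependency (it also assumes the clamped box is non-empty):

```python
import numpy as np


def clamp_box(left, top, right, bottom, width, height):
    """Clip a face box to the frame; dlib boxes can extend past the edges."""
    return max(left, 0), max(top, 0), min(right, width), min(bottom, height)


def resize_nearest(img, size):
    """Nearest-neighbour resize of an (h, w, c) array to (size, size, c)."""
    h, w = img.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return img[rows][:, cols]


def face_to_tensor(frame_bgr, box, size=150):
    """Crop, resize, convert BGR to RGB, scale to [0, 1] and add a batch axis."""
    h, w = frame_bgr.shape[:2]
    left, top, right, bottom = clamp_box(*box, w, h)
    crop = frame_bgr[top:bottom, left:right]
    rgb = resize_nearest(crop, size)[..., ::-1]  # reverse channel order
    return (rgb.astype(np.float32) / 255.0)[np.newaxis]


frame = np.zeros((480, 640, 3), dtype=np.uint8)
frame[..., 0] = 255                              # pure blue in BGR order
t = face_to_tensor(frame, (-20, 100, 200, 300))  # box sticks out on the left
print(t.shape)     # (1, 150, 150, 3)
print(t[0, 0, 0])  # [0. 0. 1.]
```

The second print shows the blue value moving to the last channel, which is where RGB stores blue; feeding BGR data to a model trained on RGB images is an easy mistake that this kind of check catches.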

I won't post the run results here.

Reposted from: https://blog.csdn.net/weixin_45137708/article/details/107142706