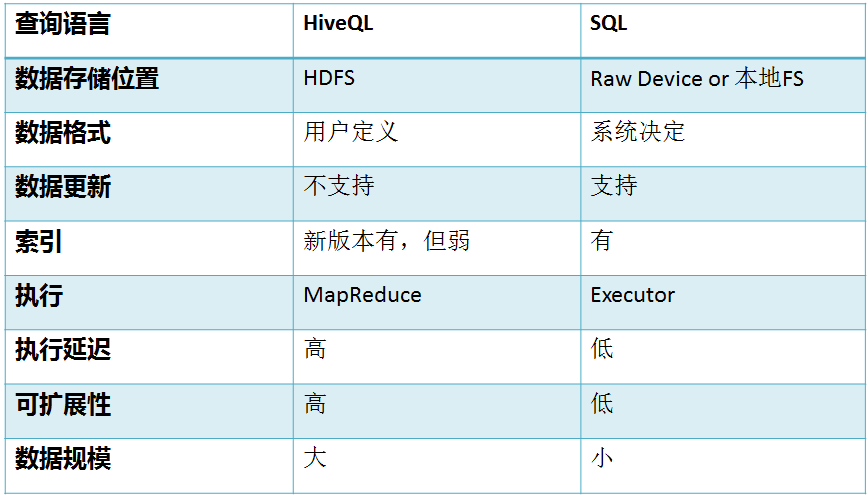

Hive中没有定义专门的数据格式,数据格式可以由用户指定,用户定义数据格式需要指定三个属性:列分隔符(通常为空格、” ”、”x001″)、行分隔符 (” ”)以及读取文件数据的方法(Hive 中默认有三个文件格式 TextFile,SequenceFile 以及 RCFile)。由于在加载数据的过程中,不需要从用户数据格式到 Hive 定义的数据格式的转换,因此,Hive 在加载的过程中不会对数据本身进行任何修改,而只是将数据内容复制或者移动到相应的 HDFS 目录中。而在数据库中,不同的数据库有不同的存储引擎,定义了自己的数据格式。所有数据都会按照一定的组织存储,因此,数据库加载数据的过程会比较耗时。

基本数据类型

tinyint/smallint/int/bigint

float/double

boolean

string

复杂数据类型

Array/Map/Struct

external:

//external CREATE EXTERNAL TABLE tab_ip_ext(id int, name string, ip STRING, country STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS TEXTFILE LOCATION '/external/hive';

hdfs上的数据存在/external/hive上面。这样删除tab_ip_ext表,/external/hive里面的内容不会删除,而且/external/hive里面的内容也不会被剪切。

CTAS:

// CTAS 用于创建一些临时表存储中间结果 CREATE TABLE tab_ip_ctas AS SELECT id new_id, name new_name, ip new_ip,country new_country FROM tab_ip_ext SORT BY new_id;

insert:

//insert from select 用于向临时表中追加中间结果数据 create table tab_ip_like like tab_ip; insert overwrite table tab_ip_like select * from tab_ip;

Hive中不能一条一条insert,但是可以成组的insert。不加overwrite会报错。

insert into table t_cost_like select * from t_cost_ctas;

这种方式可以追加。

partition:

create table t_cost_pt( id int, name string, capacity string, price double) partitioned by (data string) row format delimited fields terminated by ' ';

load data local inpath '/home/hadoop/hivedata/cost.data' into table t_cost_pt partition(data='001'); load data local inpath '/home/hadoop/hivedata/cost.data' into table t_cost_pt partition(data='002'); load data local inpath '/home/hadoop/hivedata/cost.data' into table t_cost_pt partition(data='003');

我们可以看一下partition实际的存储结构,其实就是文件夹。

show partitions t_cost_pt;

select * from t_cost_pt where data='001'

array:

//array create table tab_array(a array<int>,b array<string>) row format delimited fields terminated by ' ' collection items terminated by ','; 示例数据 tobenbrone,laihama,woshishui 13866987898,13287654321 abc,iloveyou,itcast 13866987898,13287654321 select a[0] from tab_array; select * from tab_array where array_contains(b,'word'); insert into table tab_array select array(0),array(name,ip) from tab_ext t;

map:

//map create table tab_map(name string,info map<string,string>) row format delimited fields terminated by ' ' collection items terminated by ';' map keys terminated by ':'; 示例数据: fengjie age:18;size:36A;addr:usa furong age:28;size:39C;addr:beijing;weight:180KG load data local inpath '/home/hadoop/hivetemp/tab_map.txt' overwrite into table tab_map; insert into table tab_map select name,map('name',name,'ip',ip) from tab_ext;

struct:

//struct create table tab_struct(name string,info struct<age:int,tel:string,addr:string>) row format delimited fields terminated by ' ' collection items terminated by ',' load data local inpath '/home/hadoop/hivetemp/tab_st.txt' overwrite into table tab_struct; insert into table tab_struct select name,named_struct('age',id,'tel',name,'addr',country) from tab_ext;

select:

select * from tab_ext sort by id desc limit 5; select a.ip,b.book from tab_ext a join tab_ip_book b on(a.name=b.name);

在shell下执行hive语句:

hive -S -e 'select country,count(*) from chenchi.tab_ext' > /home/hadoop/hivetemp/e.txt

chenchi是库名。

有了这种执行机制,就使得我们可以利用脚本语言(bash shell,python)进行hql语句的批量执行。

hive的udf(自定义函数):

0.要继承org.apache.hadoop.hive.ql.exec.UDF类实现evaluate 自定义函数调用过程: 1.添加jar包(在hive命令行里面执行) hive> add jar /root/NUDF.jar; 2.创建临时函数 hive> create temporary function getNation as 'cn.itcast.hive.udf.NationUDF'; 3.调用 hive> select id, name, getNation(nation) from beauty; 4.将查询结果保存到HDFS中 hive> create table result row format delimited fields terminated by ' ' as select * from beauty order by id desc; hive> select id, getAreaName(id) as name from tel_rec; create table result row format delimited fields terminated by ' ' as select id, getNation(nation) from beauties;

实战:自定义函数UDF

需求:

13884243554 234 450

13664243554 242 440

13994243554 211 430

13444243554 222 420

自定义一个识别手机号所在地区的函数getarea,然后通过该函数进行hive的查询。

写一个Java类,定义相关的函数逻辑

打成jar包

上传到hive的lib下

在hive中创建一个函数getarea,跟jar包中的自定义java类建立关联。

代码如下:要把hive的lib下面的jar包全部导入。

package com.darrenchan.bigdata; import java.util.HashMap; import org.apache.hadoop.hive.ql.exec.UDF; public class PhoneNumToArea extends UDF { private static HashMap<String, String> areaMap = new HashMap<>(); static { areaMap.put("1388", "beijing"); areaMap.put("1399", "tianjin"); areaMap.put("1366", "nanjing"); } // 一定要用public修饰才能被hive调用 // 返回值以及参数个数及类型都可以随意指定 public String evaluate(String pnb) { String result = areaMap.get(pnb.substring(0, 4)) == null ? (pnb + " huoxing") : (pnb + " " + areaMap.get(pnb.substring(0, 4))); return result; } }

将程序打成jar包,并加入到hive中:

hive>add jar /home/hadoop/phonenum-to-area.jar;

创建该函数:

hive>create temporary function getarea as 'com.darrenchan.bigdata.PhoneNumToArea';

创建表:

hive>create table t_flow(phonenum string, upflow int, downflow int)

> row format delimited

> fields terminated by ' ';

为表导入数据:

hive>load data local inpath '/home/hadoop/hivedata/flow.txt' into table t_flow;

进行查询验证:

hive>select getarea(phonenum),upflow,downflow from t_flow;

结果如下:

13884243554 beijing 234 450

13664243554 nanjing 242 440

13994243554 tianjin 211 430

13444243554 huoxing 222 420

实战:自定义脚本Transform

Hive的 TRANSFORM 关键字提供了在SQL中调用自写脚本的功能

适合实现Hive中没有的功能又不想写UDF的情况。

1、先加载rating.json文件到hive的一个原始表 rat_json

create table rat_json(line string) row format delimited; load data local inpath '/home/hadoop/rating.json' into table rat_json;

如下:

+----------------------------------------------------------------+--+

| rat_json.line |

+----------------------------------------------------------------+--+

| {"movie":"1193","rate":"5","timeStamp":"978300760","uid":"1"} |

| {"movie":"661","rate":"3","timeStamp":"978302109","uid":"1"} |

| {"movie":"914","rate":"3","timeStamp":"978301968","uid":"1"} |

| {"movie":"3408","rate":"4","timeStamp":"978300275","uid":"1"} |

| {"movie":"2355","rate":"5","timeStamp":"978824291","uid":"1"} |

| {"movie":"1197","rate":"3","timeStamp":"978302268","uid":"1"} |

| {"movie":"1287","rate":"5","timeStamp":"978302039","uid":"1"} |

| {"movie":"2804","rate":"5","timeStamp":"978300719","uid":"1"} |

| {"movie":"594","rate":"4","timeStamp":"978302268","uid":"1"} |

| {"movie":"919","rate":"4","timeStamp":"978301368","uid":"1"} |

+----------------------------------------------------------------+--+

2、需要解析json数据成四个字段,插入一张新的表 t_rating

create table t_rating as select get_json_object(line,'$.movie') movie,get_json_object(line,'$.rate') rate, get_json_object(line,'$.timeStamp') timestring,get_json_object(line,'$.uid') uid from rat_json;

3、使用transform+python的方式去转换unixtime为weekday

先编辑一个python脚本文件

########python######代码

vi weekday_mapper.py

#!/bin/python import sys import datetime for line in sys.stdin: line = line.strip() movieid, rating, unixtime,userid = line.split(' ') weekday = datetime.datetime.fromtimestamp(float(unixtime)).isoweekday() print ' '.join([movieid, rating, str(weekday),userid])

保存文件

然后,将文件加入hive的classpath:

hive>add FILE /home/hadoop/weekday_mapper.py;

hive>create TABLE u_data_new as SELECT TRANSFORM (movie, rate, timestring,uid) USING 'python weekday_mapper.py' AS (movie, rate, weekday,uid) FROM t_rating;

检验: