This article uses worked examples to get familiar with the SLS deployment workflow and the state modules it relies on.

File download: https://github.com/unixhot/saltbook-code

Directory structure

    [root@k8s_master saltbook-code-master]# tree saltstack-haproxy/
    saltstack-haproxy/
    ├── pillar
    │   └── base
    │       ├── top.sls
    │       └── zabbix
    │           └── agent.sls
    └── salt
        ├── base
        │   ├── init
        │   │   ├── audit.sls
        │   │   ├── dns.sls
        │   │   ├── env_init.sls
        │   │   ├── epel.sls
        │   │   ├── files
        │   │   │   ├── resolv.conf
        │   │   │   └── zabbix_agentd.conf
        │   │   ├── history.sls
        │   │   ├── sysctl.sls
        │   │   └── zabbix_agent.sls
        │   └── top.sls
        └── prod
            ├── cluster
            │   ├── files
            │   │   ├── haproxy-outside.cfg
            │   │   └── haproxy-outside-keepalived.conf
            │   ├── haproxy-outside-keepalived.sls
            │   └── haproxy-outside.sls
            ├── haproxy
            │   ├── files
            │   │   ├── haproxy-1.5.3.tar.gz
            │   │   └── haproxy.init
            │   └── install.sls
            ├── keepalived
            │   ├── files
            │   │   ├── keepalived-1.2.17.tar.gz
            │   │   ├── keepalived.init
            │   │   └── keepalived.sysconfig
            │   └── install.sls
            ├── libevent
            │   ├── files
            │   │   └── libevent-2.0.22-stable.tar.gz
            │   └── install.sls
            ├── memcached
            │   ├── files
            │   │   └── memcached-1.4.24.tar.gz
            │   ├── install.sls
            │   └── service.sls
            ├── nginx
            │   ├── files
            │   │   ├── nginx-1.9.1.tar.gz
            │   │   ├── nginx.conf
            │   │   └── nginx-init
            │   ├── install.sls
            │   └── service.sls
            ├── pcre
            │   ├── files
            │   │   └── pcre-8.37.tar.gz
            │   └── install.sls
            ├── php
            │   ├── files
            │   │   ├── init.d.php-fpm
            │   │   ├── memcache-2.2.7.tgz
            │   │   ├── php-5.6.9.tar.gz
            │   │   ├── php-fpm.conf.default
            │   │   ├── php.ini-production
            │   │   └── redis-2.2.7.tgz
            │   ├── install.sls
            │   ├── php-memcache.sls
            │   └── php-redis.sls
            ├── pkg
            │   └── pkg-init.sls
            ├── user
            │   └── www.sls
            └── web
                ├── bbs.sls
                └── files
                    └── bbs.conf

1) pillar

    [root@k8s_master saltstack-haproxy]# tree pillar/
    pillar/
    └── base
        ├── top.sls
        └── zabbix
            └── agent.sls
    2 directories, 2 files
    [root@k8s_master base]# cat base/top.sls
    base:
      '*':
        - zabbix.agent
    [root@k8s_master base]# cat base/zabbix/agent.sls
    # Define the zabbix server variable and its value
    zabbix-agent:
      Zabbix_Server: 192.168.56.21

2) salt (see the full directory tree above)

    [root@k8s_master base]# cat top.sls
    base:
      '*':
        - init.env_init    # first, jump to the environment initialization SLS in the init directory
    prod:    # then apply the haproxy SLS files to all hosts
      '*':
        - cluster.haproxy-outside
        - cluster.haproxy-outside-keepalived
        - web.bbs
      'saltstack-node2.example.com':    # finally, install the memcached service on one specific host
        - memcached.service
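
The targeting in this top.sls can be read as a simple lookup: every minion receives the '*' entries, and only the minion whose ID matches exactly receives the extra entry. The sketch below only mirrors that reading in plain shell. `states_for` is a hypothetical helper written for illustration, not something Salt provides.

```shell
# Hypothetical helper: print the states this top.sls would assign to a minion ID.
states_for() {
    minion=$1
    # base environment, target '*': applies to every minion
    echo "init.env_init"
    # prod environment, target '*': applies to every minion
    echo "cluster.haproxy-outside"
    echo "cluster.haproxy-outside-keepalived"
    echo "web.bbs"
    # prod environment, exact minion ID match
    case "$minion" in
        saltstack-node2.example.com) echo "memcached.service" ;;
    esac
}

states_for saltstack-node1.example.com
states_for saltstack-node2.example.com
```

Only the second call lists memcached.service in addition to the four shared states.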

The init directory (entry point): initial environment configuration

Directory structure:

    [root@k8s_master base]# tree init/
    init/
    ├── audit.sls
    ├── dns.sls
    ├── env_init.sls
    ├── epel.sls
    ├── files
    │   ├── resolv.conf
    │   └── zabbix_agentd.conf
    ├── history.sls
    ├── sysctl.sls
    └── zabbix_agent.sls
    1 directory, 9 files

The entry SLS file is env_init.sls

    [root@k8s_master init]# cat env_init.sls
    include:
      - init.dns
      - init.history
      - init.audit
      - init.sysctl
      - init.epel
      - init.zabbix_agent

Analysis of the environment initialization SLS files (in the order they appear in env_init.sls)

dns.sls: analysis and extensions (module involved: file.managed)

    [root@k8s_master init]# cat dns.sls
    /etc/resolv.conf:
      file.managed:
        - source: salt://init/files/resolv.conf    # overwrite /etc/resolv.conf with the file at source (other forms are shown in the extension below)
        - user: root
        - group: root
        - mode: 644

Other uses of file.managed (extension):

    [root@k8s_master salt]# cat test.sls
    test:    # arbitrary state ID
      file.managed:
        - name: /tmp/aaa.txt    # copy the file given by source into /tmp and name it aaa.txt (a bare directory is not allowed here)
        - source: salt://top.sls
        - backup: minion    # back up the existing file before applying source
        #- source: salt://nginx/nginx.conf    # these two commented lines must be used together: source is rendered with the Jinja template engine (nginx.conf may then reference pillar and grains variables)
        #- template: jinja
    [root@k8s_master salt]# cat /tmp/aaa.txt
    base:
      '*':
        - test

source also accepts http, https, and ftp URLs, but a hash must then be provided. Example:

    tomdroid-src-0.7.3.tar.gz:
      file.managed:
        - name: /tmp/tomdroid-src-0.7.3.tar.gz    # download from source to this path and file name
        - source: https://launchpad.net/tomdroid/beta/0.7.3/+download/tomdroid-src-0.7.3.tar.gz
        - source_hash: https://launchpad.net/tomdroid/beta/0.7.3/+download/tomdroid-src-0.7.3.hash
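
The hash requirement for remote sources comes down to comparing the downloaded file's digest with a published one. The following is a minimal shell sketch of that comparison, assuming SHA-256 and the `sha256sum` tool. `verify_hash` is a hypothetical helper for illustration and is not how Salt implements the check internally.

```shell
# Hypothetical helper: succeed only if FILE's SHA-256 digest equals EXPECTED.
verify_hash() {
    vh_file=$1
    vh_expected=$2
    vh_actual=$(sha256sum "$vh_file" | awk '{print $1}')
    [ "$vh_actual" = "$vh_expected" ]
}

# Demo: a local temp file stands in for a downloaded archive.
archive=$(mktemp)
printf 'example payload\n' > "$archive"
published=$(sha256sum "$archive" | awk '{print $1}')

if verify_hash "$archive" "$published"; then
    echo "hash matches, file would be kept"
fi
if ! verify_hash "$archive" "0000"; then
    echo "hash mismatch, file would be rejected"
fi
rm -f "$archive"
```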

history.sls (module involved: file.append)

    [root@k8s_master init]# cat history.sls
    /etc/profile:
      file.append:    # append the entries under text to /etc/profile
        - text:
          - export HISTTIMEFORMAT="%F %T `whoami` "

Other usage (extension):

    /etc/profile:
      file.append:
        - text: "export ...."    # text can also be written directly in this form
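
file.append does not append text that is already present in the file, so applying the state repeatedly leaves a single copy of the line. A rough shell equivalent of that behaviour is sketched below. `append_once` is a hypothetical helper name, and the exact-line match is an assumption made for this illustration.

```shell
# Hypothetical helper: append LINE to FILE only when an identical line is not already there.
append_once() {
    ao_target=$1
    ao_line=$2
    # -q: quiet, -x: match the whole line, -F: treat the pattern as a fixed string
    grep -qxF -- "$ao_line" "$ao_target" 2>/dev/null || printf '%s\n' "$ao_line" >> "$ao_target"
}

profile=$(mktemp)
append_once "$profile" 'export HISTTIMEFORMAT="%F %T "'
append_once "$profile" 'export HISTTIMEFORMAT="%F %T "'   # second run changes nothing
cat "$profile"
rm -f "$profile"
```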

audit.sls

    [root@k8s_master init]# cat audit.sls
    /etc/bashrc:
      file.append:
        - text:
          - export PROMPT_COMMAND='{ msg=$(history 1 | { read x y; echo $y; });logger "[euid=$(whoami)]":$(whoami):[`pwd`]"$msg"; }'

sysctl.sls (module involved: sysctl)

    [root@k8s_master init]# cat sysctl.sls
    # Use the sysctl state module to set kernel parameters (the format is fixed)
    net.ipv4.ip_local_port_range:
      sysctl.present:
        - value: 10000 65000
    fs.file-max:
      sysctl.present:
        - value: 2000000
    net.ipv4.ip_forward:
      sysctl.present:
        - value: 1
    vm.swappiness:
      sysctl.present:
        - value: 0

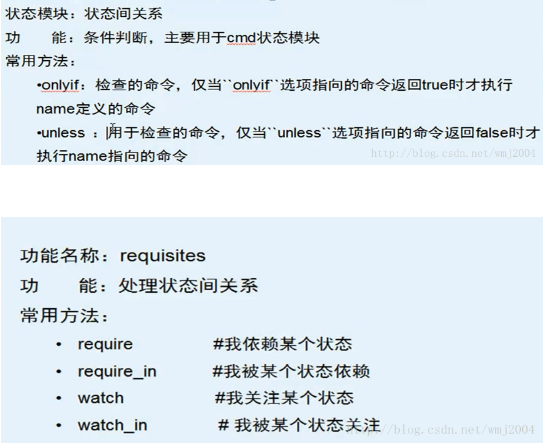

epel.sls (module involved: pkg; requisite: unless)

    [root@k8s_master init]# cat epel.sls
    yum_repo_release:
      pkg.installed:
        - sources:
          - epel-release: http://mirrors.aliyun.com/epel/6/x86_64/epel-release-6-8.noarch.rpm
        - unless: rpm -qa | grep epel-release-6-8    # install only if this command fails, i.e. the package is not present yet (my own understanding)
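
The unless requisite can be pictured as a guard around the state: the check command runs first, and the state is skipped when the check exits with status 0. Below is a small shell sketch of that control flow. `run_unless` is a hypothetical helper, and a marker file stands in for the installed package.

```shell
# Hypothetical helper mimicking `unless`: run ACTION only when CHECK fails.
run_unless() {
    ru_check=$1
    ru_action=$2
    if sh -c "$ru_check" >/dev/null 2>&1; then
        echo "skipped"
    else
        sh -c "$ru_action"
    fi
}

workdir=$(mktemp -d)
# First run: the marker is missing, so the action runs.
run_unless "test -e $workdir/installed" "touch $workdir/installed && echo installed"
# Second run: the check now succeeds, so the action is skipped.
run_unless "test -e $workdir/installed" "touch $workdir/installed && echo installed"
rm -rf "$workdir"
```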

zabbix_agent.sls (modules involved: pkg, file, service; requisites: watch, watch_in)

    [root@k8s_master init]# cat zabbix_agent.sls
    zabbix-agent:    # state ID (label)
      pkg.installed:    # the pkg state module calling its installed function
        - name: zabbix22-agent
      file.managed:
        - name: /etc/zabbix_agentd.conf
        - source: salt://init/files/zabbix_agentd.conf    # copy the file from source to the path given by name, overwriting it
        - template: jinja    # render source with the Jinja template engine
        - defaults:
            Server: {{ pillar['zabbix-agent']['Zabbix_Server'] }}
        - require:    # the file state runs only after the pkg state under the zabbix-agent ID has succeeded (pkg is the module, installed is one of its functions)
          - pkg: zabbix-agent
      service.running:
        - enable: True    # start automatically at boot
        - watch:    # watch the pkg and file states; if either reports changes, the service is restarted
          - pkg: zabbix-agent
          - file: zabbix-agent
    zabbix_agentd.conf.d:    # another state ID (label)
      file.directory:    # call the directory function of the file module
        - name: /etc/zabbix_agentd.conf.d
        - watch_in:    # the service state under zabbix-agent watches this directory state
          - service: zabbix-agent
        - require:    # same as the require above
          - pkg: zabbix-agent
          - file: zabbix-agent
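
The difference between require and watch is that watch also reacts to changes: the service is restarted only when the watched state reports that it changed something. The sketch below imitates that idea with plain files. `manage_file`, `restart_service`, and `apply_state` are hypothetical helpers for illustration, and the restart is only an echo.

```shell
# Hypothetical helper: install SRC as DEST and report (exit 0) only when DEST changed.
manage_file() {
    mf_src=$1
    mf_dest=$2
    if cmp -s "$mf_src" "$mf_dest" 2>/dev/null; then
        return 1        # identical content: no change reported
    fi
    cp "$mf_src" "$mf_dest"
    return 0            # content created or updated: change reported
}

# Hypothetical stand-in for restarting the watched service.
restart_service() {
    echo "restarting $1"
}

# The "watch" behaviour: restart only when the managed file changed.
apply_state() {
    if manage_file "$1" "$2"; then
        restart_service zabbix-agent
    else
        echo "no change"
    fi
}

dir=$(mktemp -d)
echo "Server=192.168.56.21" > "$dir/new.conf"
apply_state "$dir/new.conf" "$dir/zabbix_agentd.conf"   # first run: file is created
apply_state "$dir/new.conf" "$dir/zabbix_agentd.conf"   # second run: nothing to do
rm -rf "$dir"
```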

files (resolv.conf / zabbix_agentd.conf) (adjust the following configuration files to your environment)

    [root@k8s_master files]# cat resolv.conf
    ; generated by /sbin/dhclient-script
    nameserver 202.106.0.20
    nameserver 114.114.114.114

    [root@k8s_master files]# cat zabbix_agentd.conf | grep -Ev '^#|^$'
    PidFile=/var/run/zabbix/zabbix_agentd.pid
    LogFile=/var/log/zabbix/zabbix_agentd.log
    LogFileSize=0
    Server={{pillar['zabbix-agent']['Zabbix_Server']}}
    ServerActive={{pillar['zabbix-agent']['Zabbix_Server']}}
    Hostname={{ grains['ip4_interfaces']['ens33'][0] }}
    Include=/etc/zabbix_agentd.conf.d/

Notes on this file: Server is already defined in pillar and is simply referenced here. The IP could also be written literally, since there are only a few zabbix servers and defining a variable is extra work. Hostname should be the client's IP address or hostname, obtained through grains; here it is the first IPv4 address of the interface named ens33.
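
When stripping comments and blank lines from a config file, the `|` alternation only works with extended regular expressions; with plain `grep -v`, the `|` is matched as a literal character. The sketch below wraps the extended form in a hypothetical helper, `active_lines`, introduced only for this example.

```shell
# Hypothetical helper: print only the active (non-comment, non-blank) lines of a config file.
active_lines() {
    # -E enables extended regex so that | means "or"; -v inverts the match
    grep -Ev '^#|^$' "$1"
}

conf=$(mktemp)
printf '# a comment\n\nLogFileSize=0\nInclude=/etc/zabbix_agentd.conf.d/\n' > "$conf"
active_lines "$conf"
rm -f "$conf"
```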

Main task line: installing the haproxy package

Back to /srv/salt/top.sls

    [root@k8s_master base]# cat top.sls
    base:
      '*':
        - init.env_init
    prod:
      '*':
        - cluster.haproxy-outside
        - cluster.haproxy-outside-keepalived
        - web.bbs
      'saltstack-node2.example.com':
        - memcached.service

Enter the prod directory. cluster.haproxy-outside is applied first (it begins with an include).

    [root@k8s_master cluster]# cat haproxy-outside.sls
    include:
      - haproxy.install    # first apply install.sls in the haproxy directory (explained below)
    haproxy-service:
      file.managed:
        - name: /etc/haproxy/haproxy.cfg
        - source: salt://cluster/files/haproxy-outside.cfg    # listed below
        - user: root
        - group: root
        - mode: 644
      service.running:
        - name: haproxy
        - enable: True
        - reload: True    # reload the service instead of restarting it when a watched state changes
        - require:    # service.running depends on the cmd state under the haproxy-init ID in haproxy/install.sls having succeeded
          - cmd: haproxy-init
        - watch:    # watch the file state under the haproxy-service ID for configuration changes
          - file: haproxy-service

cluster/files/haproxy-outside.cfg

    [root@k8s_master haproxy]# cat ../cluster/files/haproxy-outside.cfg
    global
        maxconn 100000
        chroot /usr/local/haproxy
        uid 99
        gid 99
        daemon
        nbproc 1
        pidfile /usr/local/haproxy/logs/haproxy.pid
        log 127.0.0.1 local3 info
    defaults
        option http-keep-alive
        maxconn 100000
        mode http
        timeout connect 5000ms
        timeout client 50000ms
        timeout server 50000ms
    listen stats
        mode http
        bind 0.0.0.0:8888
        stats enable
        stats uri /haproxy-status
        stats auth haproxy:saltstack
    frontend frontend_www_example_com
        bind 192.168.56.21:80
        mode http
        option httplog
        log global
        default_backend backend_www_example_com
    backend backend_www_example_com
        option forwardfor header X-REAL-IP
        option httpchk HEAD / HTTP/1.0
        balance source
        server web-node1 192.168.56.21:8080 check inter 2000 rise 30 fall 15
        server web-node2 192.168.56.22:8080 check inter 2000 rise 30 fall 15

install.sls in the haproxy directory

    [root@k8s_master haproxy]# cat install.sls
    include:    # pull in pkg-init.sls from the pkg directory
      - pkg.pkg-init
    haproxy-install:
      file.managed:
        - name: /usr/local/src/haproxy-1.5.3.tar.gz
        - source: salt://haproxy/files/haproxy-1.5.3.tar.gz
        - mode: 755
        - user: root
        - group: root
      cmd.run:
        - name: cd /usr/local/src && tar zxf haproxy-1.5.3.tar.gz && cd haproxy-1.5.3 && make TARGET=linux26 PREFIX=/usr/local/haproxy && make install PREFIX=/usr/local/haproxy
        - unless: test -d /usr/local/haproxy    # skip the build if the haproxy directory already exists
        - require:    # depends on
          - pkg: pkg-init
          - file: haproxy-install
    /etc/init.d/haproxy:
      file.managed:
        - source: salt://haproxy/files/haproxy.init
        - mode: 755
        - user: root
        - group: root
        - require:    # depends on the cmd state under haproxy-install having succeeded
          - cmd: haproxy-install
    net.ipv4.ip_nonlocal_bind:    # kernel parameter setting
      sysctl.present:
        - value: 1
    haproxy-config-dir:
      file.directory:    # creates the directory if it does not exist
        - name: /etc/haproxy
        - mode: 755
        - user: root
        - group: root
    haproxy-init:
      cmd.run:
        - name: chkconfig --add haproxy
        - unless: chkconfig --list | grep haproxy
        - require:
          - file: /etc/init.d/haproxy    # depends on the file state under the /etc/init.d/haproxy ID having succeeded

files/haproxy.init

    #!/bin/sh
    if [ -f /etc/init.d/functions ]; then
        . /etc/init.d/functions
    elif [ -f /etc/rc.d/init.d/functions ] ; then
        . /etc/rc.d/init.d/functions
    else
        exit 0
    fi
    # Source networking configuration.
    . /etc/sysconfig/network
    # Check that networking is up.
    [ ${NETWORKING} = "no" ] && exit 0
    # This is our service name
    BASENAME=`basename $0`
    if [ -L $0 ]; then
        BASENAME=`find $0 -name $BASENAME -printf %l`
        BASENAME=`basename $BASENAME`
    fi
    [ -f /etc/$BASENAME/$BASENAME.cfg ] || exit 1
    RETVAL=0
    start() {
        /usr/local/haproxy/sbin/$BASENAME -c -q -f /etc/$BASENAME/$BASENAME.cfg
        if [ $? -ne 0 ]; then
            echo "Errors found in configuration file, check it with '$BASENAME check'."
            return 1
        fi
        echo -n "Starting $BASENAME: "
        daemon /usr/local/haproxy/sbin/$BASENAME -D -f /etc/$BASENAME/$BASENAME.cfg -p /var/run/$BASENAME.pid
        RETVAL=$?
        echo
        [ $RETVAL -eq 0 ] && touch /var/lock/subsys/$BASENAME
        return $RETVAL
    }
    stop() {
        echo -n "Shutting down $BASENAME: "
        killproc $BASENAME -USR1
        RETVAL=$?
        echo
        [ $RETVAL -eq 0 ] && rm -f /var/lock/subsys/$BASENAME
        [ $RETVAL -eq 0 ] && rm -f /var/run/$BASENAME.pid
        return $RETVAL
    }
    restart() {
        /usr/local/haproxy/sbin/$BASENAME -c -q -f /etc/$BASENAME/$BASENAME.cfg
        if [ $? -ne 0 ]; then
            echo "Errors found in configuration file, check it with '$BASENAME check'."
            return 1
        fi
        stop
        start
    }
    reload() {
        /usr/local/haproxy/sbin/$BASENAME -c -q -f /etc/$BASENAME/$BASENAME.cfg
        if [ $? -ne 0 ]; then
            echo "Errors found in configuration file, check it with '$BASENAME check'."
            return 1
        fi
        /usr/local/haproxy/sbin/$BASENAME -D -f /etc/$BASENAME/$BASENAME.cfg -p /var/run/$BASENAME.pid -sf $(cat /var/run/$BASENAME.pid)
    }
    check() {
        /usr/local/haproxy/sbin/$BASENAME -c -q -V -f /etc/$BASENAME/$BASENAME.cfg
    }
    rhstatus() {
        status $BASENAME
    }
    condrestart() {
        [ -e /var/lock/subsys/$BASENAME ] && restart || :
    }
    # See how we were called.
    case "$1" in
        start)
            start
            ;;
        stop)
            stop
            ;;
        restart)
            restart
            ;;
        reload)
            reload
            ;;
        condrestart)
            condrestart
            ;;
        status)
            rhstatus
            ;;
        check)
            check
            ;;
        *)
            echo $"Usage: $BASENAME {start|stop|restart|reload|condrestart|status|check}"
            exit 1
    esac
    exit $?
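
The restart and reload functions in this init script share one pattern: validate the configuration first and touch the running service only if validation passes. A generic sketch of that pattern follows. `safe_reload` is a hypothetical helper, and the demo passes `true` and `false` as stand-ins for a real check such as `haproxy -c -q -f /etc/haproxy/haproxy.cfg`.

```shell
# Hypothetical helper: run RELOAD_CMD only when CHECK_CMD (a config validation) succeeds.
safe_reload() {
    sr_check=$1
    sr_reload=$2
    if ! sh -c "$sr_check" >/dev/null 2>&1; then
        echo "Errors found in configuration file, not reloading."
        return 1
    fi
    sh -c "$sr_reload"
}

# Demo with stand-in commands: the first call reloads, the second is refused.
safe_reload "true" "echo reloaded"
safe_reload "false" "echo reloaded" || echo "reload refused"
```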

pkg/pkg-init.sls

    [root@k8s_master prod]# cat pkg/pkg-init.sls
    pkg-init:
      pkg.installed:
        - names:
          - gcc
          - gcc-c++
          - glibc
          - make
          - autoconf
          - openssl
          - openssl-devel

2.3) Main task line: installing and configuring keepalived

Back to top.sls: cluster.haproxy-outside-keepalived (in the cluster directory)

    [root@k8s_master cluster]# cat haproxy-outside-keepalived.sls
    include:    # install keepalived first (explained below)
      - keepalived.install
    keepalived-server:    # configure keepalived
      file.managed:
        - name: /etc/keepalived/keepalived.conf
        - source: salt://cluster/files/haproxy-outside-keepalived.conf
        - mode: 644
        - user: root
        - group: root
        - template: jinja    # use the template engine
        # fill in the keepalived configuration values according to the host
        {% if grains['fqdn'] == 'saltstack-node1.example.com' %}
        - ROUTEID: haproxy_ha
        - STATEID: MASTER
        - PRIORITYID: 150
        {% elif grains['fqdn'] == 'saltstack-node2.example.com' %}
        - ROUTEID: haproxy_ha
        - STATEID: BACKUP
        - PRIORITYID: 100
        {% endif %}
      service.running:
        - name: keepalived
        - enable: True
        - watch:    # watch the file state under the keepalived-server ID
          - file: keepalived-server
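
The Jinja branch above picks a role per host and hands ROUTEID, STATEID, and PRIORITYID to the template. The shell sketch below imitates that selection and substitution with `case` and `sed`, purely to make the data flow visible. `render_keepalived` is a hypothetical helper, and returning an error for an unknown host is an assumption of this sketch rather than Salt's behaviour.

```shell
# Hypothetical helper: fill {{ROUTEID}}, {{STATEID}} and {{PRIORITYID}} for a given FQDN.
render_keepalived() {
    rk_fqdn=$1
    rk_template=$2
    case "$rk_fqdn" in
        saltstack-node1.example.com) rk_state=MASTER; rk_priority=150 ;;
        saltstack-node2.example.com) rk_state=BACKUP; rk_priority=100 ;;
        *) echo "no role defined for $rk_fqdn" >&2; return 1 ;;
    esac
    sed -e "s/{{ROUTEID}}/haproxy_ha/" \
        -e "s/{{STATEID}}/$rk_state/" \
        -e "s/{{PRIORITYID}}/$rk_priority/" "$rk_template"
}

tpl=$(mktemp)
printf 'router_id {{ROUTEID}}\nstate {{STATEID}}\npriority {{PRIORITYID}}\n' > "$tpl"
render_keepalived saltstack-node2.example.com "$tpl"
rm -f "$tpl"
```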

The haproxy-outside-keepalived.conf template file (you need to define it yourself)

    [root@k8s_master files]# cat haproxy-outside-keepalived.conf
    ! Configuration File for keepalived
    global_defs {
        notification_email {
            saltstack@example.com
        }
        notification_email_from keepalived@example.com
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id {{ROUTEID}}
    }
    vrrp_instance haproxy_ha {
        state {{STATEID}}
        interface eth0
        virtual_router_id 36
        priority {{PRIORITYID}}
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.56.20
        }
    }

keepalived/install.sls (installs keepalived)

    [root@k8s_master keepalived]# cat install.sls
    keepalived-install:
      file.managed:
        - name: /usr/local/src/keepalived-1.2.17.tar.gz
        - source: salt://keepalived/files/keepalived-1.2.17.tar.gz
        - mode: 755
        - user: root
        - group: root
      cmd.run:
        - name: cd /usr/local/src && tar zxf keepalived-1.2.17.tar.gz && cd keepalived-1.2.17 && ./configure --prefix=/usr/local/keepalived --disable-fwmark && make && make install
        - unless: test -d /usr/local/keepalived    # skip the build if this directory already exists
        - require:    # the keepalived tarball must be in place first
          - file: keepalived-install
    /etc/sysconfig/keepalived:
      file.managed:
        - source: salt://keepalived/files/keepalived.sysconfig
        - mode: 644
        - user: root
        - group: root
    /etc/init.d/keepalived:
      file.managed:
        - source: salt://keepalived/files/keepalived.init
        - mode: 755
        - user: root
        - group: root
    keepalived-init:
      cmd.run:
        - name: chkconfig --add keepalived
        - unless: chkconfig --list | grep keepalived
        - require:
          - file: /etc/init.d/keepalived    # the file state under the /etc/init.d/keepalived ID above must have succeeded
    /etc/keepalived:
      file.directory:
        - user: root
        - group: root

keepalived/files/{keepalived.sysconfig,keepalived.init}

    [root@k8s_master keepalived]# cat files/keepalived.sysconfig | grep -Ev "^#|^$"
    KEEPALIVED_OPTIONS="-D"
    [root@k8s_master keepalived]# cat files/keepalived.init | grep -Ev "^#|^$"
    . /etc/rc.d/init.d/functions
    . /etc/sysconfig/keepalived
    RETVAL=0
    prog="keepalived"
    start() {
        echo -n $"Starting $prog: "
        daemon /usr/local/keepalived/sbin/keepalived ${KEEPALIVED_OPTIONS}
        RETVAL=$?
        echo
        [ $RETVAL -eq 0 ] && touch /var/lock/subsys/$prog
    }
    stop() {
        echo -n $"Stopping $prog: "
        killproc keepalived
        RETVAL=$?
        echo
        [ $RETVAL -eq 0 ] && rm -f /var/lock/subsys/$prog
    }
    reload() {
        echo -n $"Reloading $prog: "
        killproc keepalived -1
        RETVAL=$?
        echo
    }
    case "$1" in
        start)
            start
            ;;
        stop)
            stop
            ;;
        reload)
            reload
            ;;
        restart)
            stop
            start
            ;;
        condrestart)
            if [ -f /var/lock/subsys/$prog ]; then
                stop
                start
            fi
            ;;
        status)
            status keepalived
            RETVAL=$?
            ;;
        *)
            echo "Usage: $0 {start|stop|reload|restart|condrestart|status}"
            RETVAL=1
    esac
    exit $RETVAL

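Installing the web server through web.bbs in the main top.sls is omitted here. There is simply too much of it, but the overall pattern is the same as what is shown above.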