案例 1:爬取百度产品列表

# ------------------------------------------------1.导包

import requests

# -------------------------------------------------2.确定url

base_url = 'https://www.baidu.com/more/'

# ----------------------------------------------3.发送请求,获取响应

response = requests.get(base_url)

# -----------------------------------------------4.查看页面内容,可能出现 乱码

# print(response.text)

# print(response.encoding)

# ---------------------------------------------------5.解决乱码

# ---------------------------方法一:转换成utf-8格式

# response.encoding='utf-8'

# print(response.text)

# -------------------------------方法二:解码为utf-8

with open('index.html', 'w', encoding='utf-8') as fp:

fp.write(response.content.decode('utf-8'))

print(response.status_code)

print(response.headers)

print(type(response.text))

print(type(response.content))

案例 2:爬取新浪新闻指定搜索内容

import requests

# ------------------爬取带参数的get请求-------------------爬取新浪新闻,指定的内容

# 1.寻找基础url

base_url = 'https://search.sina.com.cn/?'

# 2.设置headers字典和params字典,再发请求

headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

}

key = '孙悟空' # 搜索内容

params = {

'q': key,

'c': 'news',

'from': 'channel',

'ie': 'utf-8',

}

response = requests.get(base_url, headers=headers, params=params)

with open('sina_news.html', 'w', encoding='gbk') as fp:

fp.write(response.content.decode('gbk'))

-

分页类型

-

第一步:找出分页参数的规律

-

第二步:headers 和 params 字典

-

第三步:用 for 循环

-

案例 3:爬取百度贴吧前十页(get 请求)

# _--------------------爬取百度贴吧搜索某个贴吧的前十页

import requests, os

base_url = 'https://tieba.baidu.com/f?'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

}

dirname = './tieba/woman/'

if not os.path.exists(dirname):

os.makedirs(dirname)

for i in range(0, 10):

params = {

'ie': 'utf-8',

'kw': '美女',

'pn': str(i * 50)

}

response = requests.get(base_url, headers=headers, params=params)

with open(dirname + '美女第%s页.html' % (i+1), 'w', encoding='utf-8') as file:

file.write(response.content.decode('utf-8'))

案例 4:爬取百度翻译接口

python

import requests

base_url = 'https://fanyi.baidu.com/sug'

kw = input('请输入要翻译的英文单词:')

data = {

'kw': kw

}

headers = {

# 由于百度翻译没有反扒措施,因此可以不写请求头

'content-length': str(len(data)),

'content-type': 'application/x-www-form-urlencoded; charset=UTF-8',

'referer': 'https://fanyi.baidu.com/',

'x-requested-with': 'XMLHttpRequest'

}

response = requests.post(base_url, headers=headers, data=data)

# print(response.json())

#结果:{'errno': 0, 'data': [{'k': 'python', 'v': 'n. 蟒; 蚺蛇;'}, {'k': 'pythons', 'v': 'n. 蟒; 蚺蛇; python的复数;'}]}

#-----------------------------把他变成一行一行

result=''

for i in response.json()['data']:

result+=i['v']+'

'

print(kw+'的翻译结果为:')

print(result)

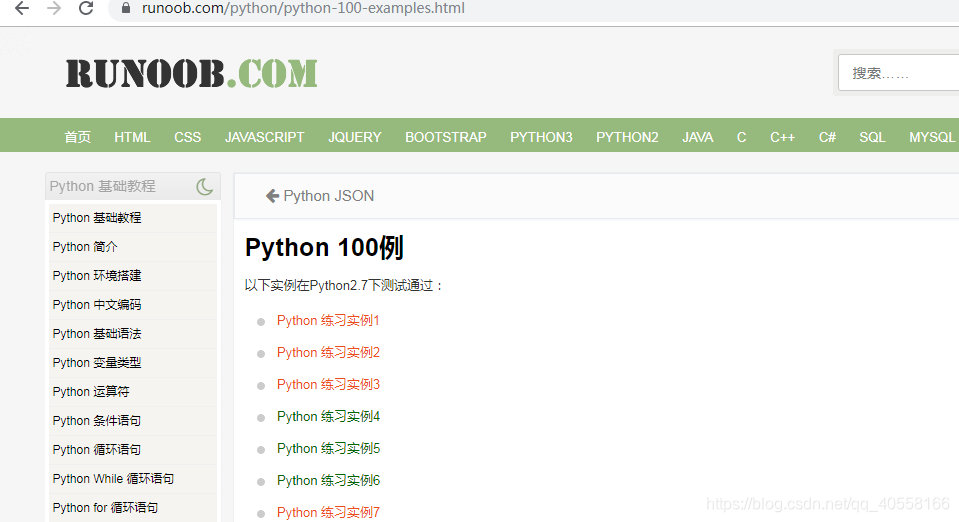

案例 5:爬取菜鸟教程的 python100 例

import requests

from lxml import etree

base_url = 'https://www.runoob.com/python/python-exercise-example%s.html'

def get_element(url):

headers = {

'cookie': '__gads=Test; Hm_lvt_3eec0b7da6548cf07db3bc477ea905ee=1573454862,1573470948,1573478656,1573713819; Hm_lpvt_3eec0b7da6548cf07db3bc477ea905ee=1573714018; SERVERID=fb669a01438a4693a180d7ad8d474adb|1573713997|1573713863',

'referer': 'https://www.runoob.com/python/python-100-examples.html',

'user-agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36'

}

response = requests.get(url, headers=headers)

return etree.HTML(response.text)

def write_py(i, text):

with open('练习实例%s.py' % i, 'w', encoding='utf-8') as file:

file.write(text)

def main():

for i in range(1, 101):

html = get_element(base_url % i)

content = '题目:' + html.xpath('//div[@id="content"]/p[2]/text()')[0] + '

'

fenxi = html.xpath('//div[@id="content"]/p[position()>=2]/text()')[0]

daima = ''.join(html.xpath('//div[@class="hl-main"]/span/text()')) + '

'

haha = '"""

' + content + fenxi + daima + '

"""'

write_py(i, haha)

print(fenxi)

if __name__ == '__main__':

main()

案例 6:登录人人网(cookie)

import requests

base_url = 'http://www.renren.com/909063513'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

'Cookie': 'cookie',

}

response=requests.get(base_url,headers=headers)

if '死性不改' in response.text:

print('登录成功')

else:

print('登录失败')

由于我们登录进入人人网在人人网 html 页面就会显示用户名,因此可以通过用户名是否存在来判断是否登录成功

案例 7:登录人人网(session)

import requests

base_url = 'http://www.renren.com/PLogin.do'

headers= {

'Host': 'www.renren.com',

'Referer': 'http://safe.renren.com/security/account',

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.70 Safari/537.36',

}

data = {

'email':邮箱,

'password':密码,

}

#创建一个session对象

se = requests.session()

#用session对象来发送post请求进行登录。

se.post(base_url,headers=headers,data=data)

response = se.get('http://www.renren.com/971682585')

if '鸣人' in response.text:

print('登录成功!')

else:

print(response.text)

print('登录失败!')

案例 8:爬取猫眼电影(正则表达式)

爬取目标:爬取前一百个电影的信息

爬取目标:爬取前一百个电影的信息

import re, requests, json

class Maoyan:

def __init__(self, url):

self.url = url

self.movie_list = []

self.headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.70 Safari/537.36'

}

self.parse()

def parse(self):

# 爬去页面的代码

# 1.发送请求,获取响应

# 分页

for i in range(10):

url = self.url + '?offset={}'.format(i * 10)

response = requests.get(url, headers=self.headers)

'''

1.电影名称

2、主演

3、上映时间

4、评分

'''

# 用正则筛选数据,有个原则:不断缩小筛选范围。

dl_pattern = re.compile(r'<dl class="board-wrapper">(.*?)</dl>', re.S)

dl_content = dl_pattern.search(response.text).group()

dd_pattern = re.compile(r'<dd>(.*?)</dd>', re.S)

dd_list = dd_pattern.findall(dl_content)

# print(dd_list)

movie_list = []

for dd in dd_list:

print(dd)

item = {}

# ------------电影名字

movie_pattern = re.compile(r'title="(.*?)" class=', re.S)

movie_name = movie_pattern.search(dd).group(1)

# print(movie_name)

actor_pattern = re.compile(r'<p class="star">(.*?)</p>', re.S)

actor = actor_pattern.search(dd).group(1).strip()

# print(actor)

play_time_pattern = re.compile(r'<p class="releasetime">(.*?):(.*?)</p>', re.S)

play_time = play_time_pattern.search(dd).group(2).strip()

# print(play_time)

# 评分

score_pattern_1 = re.compile(r'<i class="integer">(.*?)</i>', re.S)

score_pattern_2 = re.compile(r'<i class="fraction">(.*?)</i>', re.S)

score = score_pattern_1.search(dd).group(1).strip() + score_pattern_2.search(dd).group(1).strip()

# print(score)

item['电影名字:'] = movie_name

item['主演:'] = actor

item['时间:'] = play_time

item['评分:'] = score

# print(item)

self.movie_list.append(item)

# 将电影信息保存到json文件中

with open('movie.json', 'w', encoding='utf-8') as fp:

json.dump(self.movie_list, fp)

if __name__ == '__main__':

base_url = 'https://maoyan.com/board/4'

Maoyan(base_url)

with open('movie.json', 'r') as fp:

movie_list = json.load(fp)

print(movie_list)

案例 9:爬取股吧(正则表达式)

爬取目标: 爬取前十页的阅读数, 评论数, 标题, 作者, 更新时间, 详情页 url

爬取目标: 爬取前十页的阅读数, 评论数, 标题, 作者, 更新时间, 详情页 url

import json

import re

import requests

class GuBa(object):

def __init__(self):

self.base_url = 'http://guba.eastmoney.com/default,99_%s.html'

self.headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.70 Safari/537.36'

}

self.infos = []

self.parse()

def parse(self):

for i in range(1, 13):

response = requests.get(self.base_url % i, headers=self.headers)

'''阅读数,评论数,标题,作者,更新时间,详情页url'''

ul_pattern = re.compile(r'<ul id="itemSearchList" class="itemSearchList">(.*?)</ul>', re.S)

ul_content = ul_pattern.search(response.text)

if ul_content:

ul_content = ul_content.group()

li_pattern = re.compile(r'<li>(.*?)</li>', re.S)

li_list = li_pattern.findall(ul_content)

# print(li_list)

for li in li_list:

item = {}

reader_pattern = re.compile(r'<cite>(.*?)</cite>', re.S)

info_list = reader_pattern.findall(li)

# print(info_list)

reader_num = ''

comment_num = ''

if info_list:

reader_num = info_list[0].strip()

comment_num = info_list[1].strip()

print(reader_num, comment_num)

title_pattern = re.compile(r'title="(.*?)" class="note">', re.S)

title = title_pattern.search(li).group(1)

# print(title)

author_pattern = re.compile(r'target="_blank"><font>(.*?)</font></a><input type="hidden"', re.S)

author = author_pattern.search(li).group(1)

# print(author)

date_pattern = re.compile(r'<cite class="last">(.*?)</cite>', re.S)

date = date_pattern.search(li).group(1)

# print(date)

detail_pattern = re.compile(r' <a href="(.*?)" title=', re.S)

detail_url = detail_pattern.search(li)

if detail_url:

detail_url = 'http://guba.eastmoney.com' + detail_url.group(1)

else:

detail_url = ''

print(detail_url)

item['title'] = title

item['author'] = author

item['date'] = date

item['reader_num'] = reader_num

item['comment_num'] = comment_num

item['detail_url'] = detail_url

self.infos.append(item)

with open('guba.json', 'w', encoding='utf-8') as fp:

json.dump(self.infos, fp)

gb=GuBa()

案例 10:爬取某药品网站(正则表达式)

爬取目标:爬取五十页的药品信息

爬取目标:爬取五十页的药品信息

'''

要求:抓取50页

字段:总价,描述,评论数量,详情页链接

用正则爬取。

'''

import requests, re,json

class Drugs:

def __init__(self):

self.url = url = 'https://www.111.com.cn/categories/953710-j%s.html'

self.headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36'

}

self.Drugs_list=[]

self.parse()

def parse(self):

for i in range(51):

response = requests.get(self.url % i, headers=self.headers)

# print(response.text)

# 字段:药名,总价,评论数量,详情页链接

Drugsul_pattern = re.compile('<ul id="itemSearchList" class="itemSearchList">(.*?)</ul>', re.S)

Drugsul = Drugsul_pattern.search(response.text).group()

# print(Drugsul)

Drugsli_list_pattern = re.compile('<li id="producteg(.*?)</li>', re.S)

Drugsli_list = Drugsli_list_pattern.findall(Drugsul)

Drugsli_list = Drugsli_list

# print(Drugsli_list)

for drug in Drugsli_list:

# ---药名

item={}

name_pattern = re.compile('alt="(.*?)"', re.S)

name = name_pattern.search(str(drug)).group(1)

# print(name)

# ---总价

total_pattern = re.compile('<span>(.*?)</span>', re.S)

total = total_pattern.search(drug).group(1).strip()

# print(total)

# ----评论

comment_pattern = re.compile('<em>(.*?)</em>')

comment = comment_pattern.search(drug)

if comment:

comment_group = comment.group(1)

else:

comment_group = '0'

# print(comment_group)

# ---详情页链接

href_pattern = re.compile('" href="//(.*?)"')

href='https://'+href_pattern.search(drug).group(1).strip()

# print(href)

item['药名']=name

item['总价']=total

item['评论']=comment

item['链接']=href

self.Drugs_list.append(item)

drugs = Drugs()

print(drugs.Drugs_list)

案例 11:使用 xpath 爬取扇贝英语单词(xpath)

需求:爬取三页单词

import json

import requests

from lxml import etree

base_url = 'https://www.shanbay.com/wordlist/110521/232414/?page=%s'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.70 Safari/537.36'

}

def get_text(value):

if value:

return value[0]

return ''

word_list = []

for i in range(1, 4):

# 发送请求

response = requests.get(base_url % i, headers=headers)

# print(response.text)

html = etree.HTML(response.text)

tr_list = html.xpath('//tbody/tr')

# print(tr_list)

for tr in tr_list:

item = {}#构造单词列表

en = get_text(tr.xpath('.//td[@class="span2"]/strong/text()'))

tra = get_text(tr.xpath('.//td[@class="span10"]/text()'))

print(en, tra)

if en:

item[en] = tra

word_list.append(item)

面向对象:

import requests

from lxml import etree

class Shanbei(object):

def __init__(self):

self.base_url = 'https://www.shanbay.com/wordlist/110521/232414/?page=%s'

self.headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.70 Safari/537.36'

}

self.word_list = []

self.parse()

def get_text(self, value):

# 防止为空报错

if value:

return value[0]

return ''

def parse(self):

for i in range(1, 4):

# 发送请求

response = requests.get(self.base_url % i, headers=self.headers)

# print(response.text)

html = etree.HTML(response.text)

tr_list = html.xpath('//tbody/tr')

# print(tr_list)

for tr in tr_list:

item = {} # 构造单词列表

en = self.get_text(tr.xpath('.//td[@class="span2"]/strong/text()'))

tra = self.get_text(tr.xpath('.//td[@class="span10"]/text()'))

print(en, tra)

if en:

item[en] = tra

self.word_list.append(item)

shanbei = Shanbei()

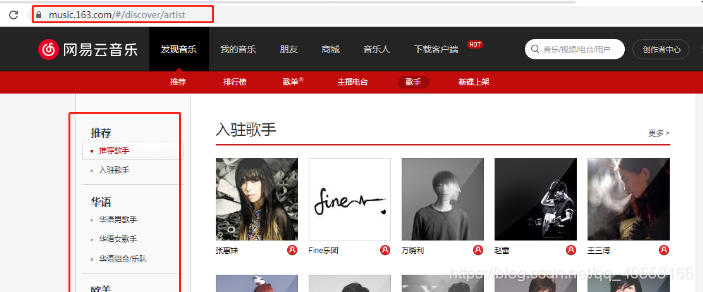

案例 12:爬取网易云音乐的所有歌手名字(xpath)

import requests,json

from lxml import etree

url = 'https://music.163.com/discover/artist'

singer_infos = []

# ---------------通过url获取该页面的内容,返回xpath对象

def get_xpath(url):

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.70 Safari/537.36'

}

response = requests.get(url, headers=headers)

return etree.HTML(response.text)

# --------------通过get_xpath爬取到页面后,我们获取华宇,华宇男等分类

def parse():

html = get_xpath(url)

fenlei_url_list = html.xpath('//ul[@class="nav f-cb"]/li/a/@href') # 获取华宇等分类的url

# print(fenlei_url_list)

# --------将热门和推荐两栏去掉筛选

new_list = [i for i in fenlei_url_list if 'id' in i]

for i in new_list:

fenlei_url = 'https://music.163.com' + i

parse_fenlei(fenlei_url)

# print(fenlei_url)

# -------------通过传入的分类url,获取A,B,C页面内容

def parse_fenlei(url):

html = get_xpath(url)

# 获得字母排序,每个字母的链接

zimu_url_list = html.xpath('//ul[@id="initial-selector"]/li[position()>1]/a/@href')

for i in zimu_url_list:

zimu_url = 'https://music.163.com' + i

parse_singer(zimu_url)

# ---------------------传入获得的字母链接,开始爬取歌手内容

def parse_singer(url):

html = get_xpath(url)

item = {}

singer_names = html.xpath('//ul[@id="m-artist-box"]/li/p/a/text()')

# --详情页看到页面结构会有两个a标签,所以取第一个

singer_href = html.xpath('//ul[@id="m-artist-box"]/li/p/a[1]/@href')

# print(singer_names,singer_href)

for i, name in enumerate(singer_names):

item['歌手名'] = name

item['音乐链接'] = 'https://music.163.com' + singer_href[i].strip()

# 获取歌手详情页的链接

url = item['音乐链接'].replace(r'?id', '/desc?id')

# print(url)

parse_detail(url, item)

print(item)

# ---------获取详情页url和存着歌手名字和音乐列表的字典,在字典中添加详情页数据

def parse_detail(url, item):

html = get_xpath(url)

desc_list = html.xpath('//div[@class="n-artdesc"]/p/text()')

item['歌手信息'] = desc_list

singer_infos.append(item)

write_singer(item)

# ----------------将数据字典写入歌手文件

def write_singer(item):

with open('singer.json', 'a+', encoding='utf-8') as file:

json.dump(item,file)

if __name__ == '__main__':

parse()

面向对象

import json, requests

from lxml import etree

class Wangyiyun(object):

def __init__(self):

self.url = 'https://music.163.com/discover/artist'

self.singer_infos = []

self.headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.70 Safari/537.36'

}

self.parse()

# ---------------通过url获取该页面的内容,返回xpath对象

def get_xpath(self, url):

response = requests.get(url, headers=self.headers)

return etree.HTML(response.text)

# --------------通过get_xpath爬取到页面后,我们获取华宇,华宇男等分类

def parse(self):

html = self.get_xpath(self.url)

fenlei_url_list = html.xpath('//ul[@class="nav f-cb"]/li/a/@href') # 获取华宇等分类的url

# print(fenlei_url_list)

# --------将热门和推荐两栏去掉筛选

new_list = [i for i in fenlei_url_list if 'id' in i]

for i in new_list:

fenlei_url = 'https://music.163.com' + i

self.parse_fenlei(fenlei_url)

# print(fenlei_url)

# -------------通过传入的分类url,获取A,B,C页面内容

def parse_fenlei(self, url):

html = self.get_xpath(url)

# 获得字母排序,每个字母的链接

zimu_url_list = html.xpath('//ul[@id="initial-selector"]/li[position()>1]/a/@href')

for i in zimu_url_list:

zimu_url = 'https://music.163.com' + i

self.parse_singer(zimu_url)

# ---------------------传入获得的字母链接,开始爬取歌手内容

def parse_singer(self, url):

html = self.get_xpath(url)

item = {}

singer_names = html.xpath('//ul[@id="m-artist-box"]/li/p/a/text()')

# --详情页看到页面结构会有两个a标签,所以取第一个

singer_href = html.xpath('//ul[@id="m-artist-box"]/li/p/a[1]/@href')

# print(singer_names,singer_href)

for i, name in enumerate(singer_names):

item['歌手名'] = name

item['音乐链接'] = 'https://music.163.com' + singer_href[i].strip()

# 获取歌手详情页的链接

url = item['音乐链接'].replace(r'?id', '/desc?id')

# print(url)

self.parse_detail(url, item)

print(item)

# ---------获取详情页url和存着歌手名字和音乐列表的字典,在字典中添加详情页数据

def parse_detail(self, url, item):

html = self.get_xpath(url)

desc_list = html.xpath('//div[@class="n-artdesc"]/p/text()')[0]

item['歌手信息'] = desc_list

self.singer_infos.append(item)

self.write_singer(item)

# ----------------将数据字典写入歌手文件

def write_singer(self, item):

with open('sing.json', 'a+', encoding='utf-8') as file:

json.dump(item, file)

music = Wangyiyun()

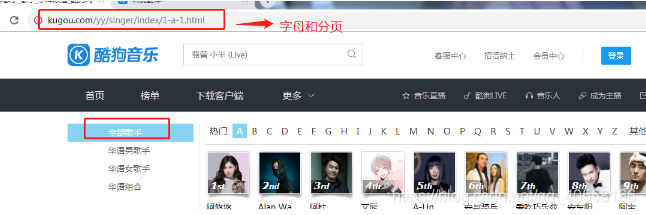

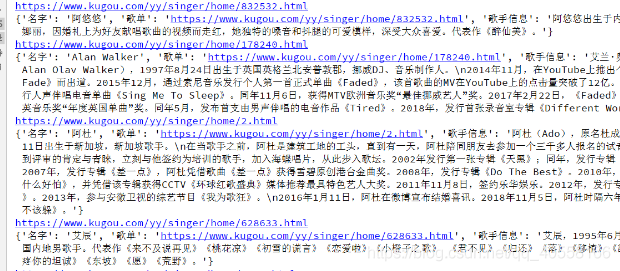

案例 13:爬取酷狗音乐的歌手和歌单(xpath)

需求:爬取酷狗音乐的歌手和歌单和歌手简介

import json, requests

from lxml import etree

base_url = 'https://www.kugou.com/yy/singer/index/%s-%s-1.html'

# ---------------通过url获取该页面的内容,返回xpath对象

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.70 Safari/537.36'

}

# ---------------通过url获取该页面的内容,返回xpath对象

def get_xpath(url, headers):

try:

response = requests.get(url, headers=headers)

return etree.HTML(response.text)

except Exception:

print(url, '该页面没有相应!')

return ''

# --------------------通过歌手详情页获取歌手简介

def parse_info(url):

html = get_xpath(url, headers)

info = html.xpath('//div[@class="intro"]/p/text()')

return info

# --------------------------写入方法

def write_json(value):

with open('kugou.json', 'a+', encoding='utf-8') as file:

json.dump(value, file)

# -----------------------------用ASCII码值来变换abcd...

for j in range(97, 124):

# 小写字母为97-122,当等于123的时候我们按歌手名单的其他算,路由为null

if j < 123:

p = chr(j)

else:

p = "null"

for i in range(1, 6):

response = requests.get(base_url % (i, p), headers=headers)

# print(response.text)

html = etree.HTML(response.text)

# 由于数据分两个url,所以需要加起来数据列表

name_list1 = html.xpath('//ul[@id="list_head"]/li/strong/a/text()')

sing_list1 = html.xpath('//ul[@id="list_head"]/li/strong/a/@href')

name_list2 = html.xpath('//div[@id="list1"]/ul/li/a/text()')

sing_list2 = html.xpath('//div[@id="list1"]/ul/li/a/@href')

singer_name_list = name_list1 + name_list2

singer_sing_list = sing_list1 + sing_list2

# print(singer_name_list,singer_sing_list)

for i, name in enumerate(singer_name_list):

item = {}

item['名字'] = name

item['歌单'] = singer_sing_list[i]

# item['歌手信息']=parse_info(singer_sing_list[i])#被封了

write_json(item)

面向对象:

import json, requests

from lxml import etree

class KuDog(object):

def __init__(self):

self.base_url = 'https://www.kugou.com/yy/singer/index/%s-%s-1.html'

self.headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.70 Safari/537.36'

}

self.parse()

# ---------------通过url获取该页面的内容,返回xpath对象

def get_xpath(self, url, headers):

try:

response = requests.get(url, headers=headers)

return etree.HTML(response.text)

except Exception:

print(url, '该页面没有相应!')

return ''

# --------------------通过歌手详情页获取歌手简介

def parse_info(self, url):

html = self.get_xpath(url, self.headers)

info = html.xpath('//div[@class="intro"]/p/text()')

return info[0]

# --------------------------写入方法

def write_json(self, value):

with open('kugou.json', 'a+', encoding='utf-8') as file:

json.dump(value, file)

# -----------------------------用ASCII码值来变换abcd...

def parse(self):

for j in range(97, 124):

# 小写字母为97-122,当等于123的时候我们按歌手名单的其他算,路由为null

if j < 123:

p = chr(j)

else:

p = "null"

for i in range(1, 6):

response = requests.get(self.base_url % (i, p), headers=self.headers)

# print(response.text)

html = etree.HTML(response.text)

# 由于数据分两个url,所以需要加起来数据列表

name_list1 = html.xpath('//ul[@id="list_head"]/li/strong/a/text()')

sing_list1 = html.xpath('//ul[@id="list_head"]/li/strong/a/@href')

name_list2 = html.xpath('//div[@id="list1"]/ul/li/a/text()')

sing_list2 = html.xpath('//div[@id="list1"]/ul/li/a/@href')

singer_name_list = name_list1 + name_list2

singer_sing_list = sing_list1 + sing_list2

# print(singer_name_list,singer_sing_list)

for i, name in enumerate(singer_name_list):

item = {}

item['名字'] = name

item['歌单'] = singer_sing_list[i]

# item['歌手信息']=parse_info(singer_sing_list[i])#被封了

print(item)

self.write_json(item)

music = KuDog()

案例 14:爬取扇贝读书图书信息(selenium+Phantomjs)

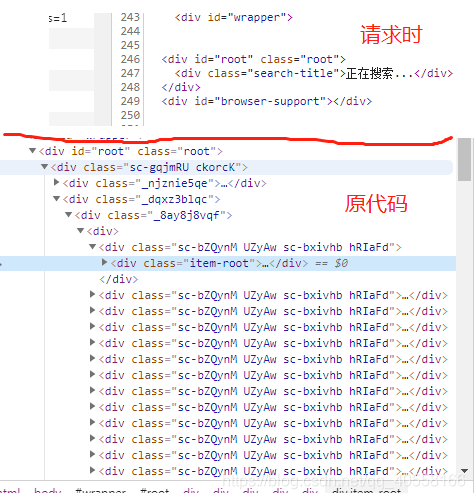

由于数据有 js 方法写入,因此不好在利用 requests 模块获取,所以使用 selenium+Phantomjs 获取

import time, json

from lxml import etree

from selenium import webdriver

base_url = 'https://search.douban.com/book/subject_search?search_text=python&cat=1001&start=%s'

driver = webdriver.PhantomJS()

def get_text(text):

if text:

return text[0]

return ''

def parse_page(text):

html = etree.HTML(text)

div_list = html.xpath('//div[@id="root"]/div/div/div/div/div/div[@class="item-root"]')

# print(div_list)

for div in div_list:

item = {}

'''

图书名称,评分,评价数,详情页链接,作者,出版社,价格,出版日期

'''

name = get_text(div.xpath('.//div[@class="title"]/a/text()'))

scores = get_text(div.xpath('.//span[@class="rating_nums"]/text()'))

comment_num = get_text(div.xpath('.//span[@class="pl"]/text()'))

detail_url = get_text(div.xpath('.//div[@class="title"]/a/@href'))

detail = get_text(div.xpath('.//div[@class="meta abstract"]/text()'))

if detail:

detail_list = detail.split('/')

else:

detail_list = ['未知', '未知', '未知', '未知']

# print(detail_list)

if all([name, detail_url]): # 如果名字和详情链接为true

item['书名'] = name

item['评分'] = scores

item['评论'] = comment_num

item['详情链接'] = detail_url

item['出版社'] = detail_list[-3]

item['价格'] = detail_list[-1]

item['出版日期'] = detail_list[-2]

author_list = detail_list[:-3]

author = ''

for aut in author_list:

author += aut + ' '

item['作者'] = author

print(item)

write_singer(item)

def write_singer(item):

with open('book.json', 'a+', encoding='utf-8') as file:

json.dump(item, file)

if __name__ == '__main__':

for i in range(10):

driver.get(base_url % (i * 15))

# 等待

time.sleep(2)

html_str = driver.page_source

parse_page(html_str)

面向对象:

from lxml import etree

from selenium import webdriver

from selenium.webdriver.support.wait import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

from selenium.webdriver.common.by import By

from urllib import parse

class Douban(object):

def __init__(self, url):

self.url = url

self.driver = webdriver.PhantomJS()

self.wait = WebDriverWait(self.driver, 10)

self.parse()

# 判断数据是否存在,不存在返回空字符

def get_text(self, text):

if text:

return text[0]

return ''

def get_content_by_selenium(self, url, xpath):

self.driver.get(url)

# 等待,locator对象是一个元组,此处获取xpath对应的元素并加载出来

webelement = self.wait.until(EC.presence_of_element_located((By.XPATH, xpath)))

return self.driver.page_source

def parse(self):

html_str = self.get_content_by_selenium(self.url, '//div[@id="root"]/div/div/div/div')

html = etree.HTML(html_str)

div_list = html.xpath('//div[@id="root"]/div/div/div/div/div')

for div in div_list:

item = {}

'''图书名称+评分+评价数+详情页链接+作者+出版社+价格+出版日期'''

name = self.get_text(div.xpath('.//div[@class="title"]/a/text()'))

scores = self.get_text(div.xpath('.//span[@class="rating_nums"]/text()'))

comment_num = self.get_text(div.xpath('.//span[@class="pl"]/text()'))

detail_url = self.get_text(div.xpath('.//div[@class="title"]/a/@href'))

detail = self.get_text(div.xpath('.//div[@class="meta abstract"]/text()'))

if detail:

detail_list = detail.split('/')

else:

detail_list = ['未知', '未知', '未知', '未知']

if all([name, detail_url]): # 如果列表里的数据为true方可执行

item['书名'] = name

item['评分'] = scores

item['评论'] = comment_num

item['详情链接'] = detail_url

item['出版社'] = detail_list[-3]

item['价格'] = detail_list[-1]

item['出版日期'] = detail_list[-2]

author_list = detail_list[:-3]

author = ''

for aut in author_list:

author += aut + ' '

item['作者'] = author

print(item)

if __name__ == '__main__':

kw = 'python'

base_url = 'https://search.douban.com/book/subject_search?'

for i in range(10):

params = {

'search_text': kw,

'cat': '1001',

'start': str(i * 15),

}

url = base_url + parse.urlencode(params)

Douban(url)

案例 15:爬取腾讯招聘的招聘信息(selenium+Phantomjs)

import time

from lxml import etree

from selenium import webdriver

driver = webdriver.PhantomJS()

base_url = 'https://careers.tencent.com/search.html?index=%s'

job=[]

def getText(text):

if text:

return text[0]

else:

return ''

def parse(text):

html = etree.HTML(text)

div_list = html.xpath('//div[@class="correlation-degree"]/div[@class="recruit-wrap recruit-margin"]/div')

# print(div_list)

for i in div_list:

item = {}

job_name = i.xpath('a/h4/text()') # ------职位

job_loc = i.xpath('a/p/span[2]/text()') # --------地点

job_gangwei = i.xpath('a/p/span[3]/text()') # -----岗位

job_time = i.xpath('a/p/span[4]/text()') # -----发布时间

item['职位']=job_name

item['地点']=job_loc

item['岗位']=job_gangwei

item['发布时间']=job_time

job.append(item)

if __name__ == '__main__':

for i in range(1, 11):

driver.get(base_url % i)

text = driver.page_source

# print(text)

time.sleep(1)

parse(text)

print(job)

面向对象:

import json

from lxml import etree

from selenium import webdriver

from selenium.webdriver.support.wait import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

from selenium.webdriver.common.by import By

from urllib import parse

class Tencent(object):

def __init__(self,url):

self.url = url

self.driver = webdriver.PhantomJS()

self.wait = WebDriverWait(self.driver,10)

self.parse()

def get_text(self,text):

if text:

return text[0]

return ''

def get_content_by_selenium(self,url,xpath):

self.driver.get(url)

webelement = self.wait.until(EC.presence_of_element_located((By.XPATH,xpath)))

return self.driver.page_source

def parse(self):

html_str = self.get_content_by_selenium(self.url,'//div[@class="correlation-degree"]')

html = etree.HTML(html_str)

div_list = html.xpath('//div[@class="recruit-wrap recruit-margin"]/div')

# print(div_list)

for div in div_list:

'''title,工作简介,工作地点,发布时间,岗位类别,详情页链接'''

job_name = self.get_text(div.xpath('.//h4[@class="recruit-title"]/text()'))

job_loc = self.get_text(div.xpath('.//p[@class="recruit-tips"]/span[2]/text()'))

job_gangwei = self.get_text(div.xpath('.//p/span[3]/text()') ) # -----岗位

job_time = self.get_text(div.xpath('.//p/span[4]/text()') ) # -----发布时间

item = {}

item['职位'] = job_name

item['地点'] = job_loc

item['岗位'] = job_gangwei

item['发布时间'] = job_time

print(item)

self.write_(item)

def write_(self,item):

with open('Tencent_job_100page.json', 'a+', encoding='utf-8') as file:

json.dump(item, file)

if __name__ == '__main__':

base_url = 'https://careers.tencent.com/search.html?index=%s'

for i in range(1,100):

Tencent(base_url %i)

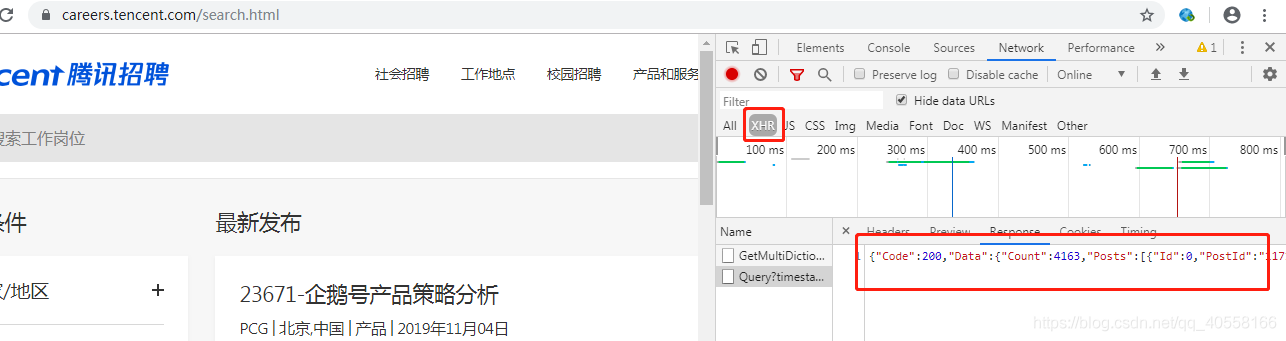

案例 16:爬取腾讯招聘(ajax 版 + 多线程版)

通过分析我们发现,腾讯招聘使用的是 ajax 的数据接口,因此我们直接去寻找 ajax 的数据接口链接。

通过分析我们发现,腾讯招聘使用的是 ajax 的数据接口,因此我们直接去寻找 ajax 的数据接口链接。

import requests, json

class Tencent(object):

def __init__(self):

self.base_url = 'https://careers.tencent.com/tencentcareer/api/post/Query?'

self.headers = {

'sec-fetch-mode': 'cors',

'sec-fetch-site': 'same-origin',

'user-agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

'referer': 'https://careers.tencent.com/search.html'

}

self.parse()

def parse(self):

for i in range(1, 3):

params = {

'timestamp': '1572850838681',

'countryId': '',

'cityId': '',

'bgIds': '',

'productId': '',

'categoryId': '',

'parentCategoryId': '',

'attrId': '',

'keyword': '',

'pageIndex': str(i),

'pageSize': '10',

'language': 'zh-cn',

'area': 'cn'

}

response = requests.get(self.base_url, headers=self.headers, params=params)

self.parse_json(response.text)

def parse_json(self, text):

# 将json字符串编程python内置对象

infos = []

json_dict = json.loads(text)

for data in json_dict['Data']['Posts']:

RecruitPostName = data['RecruitPostName']

CategoryName = data['CategoryName']

Responsibility = data['Responsibility']

LastUpdateTime = data['LastUpdateTime']

detail_url = data['PostURL']

item = {}

item['RecruitPostName'] = RecruitPostName

item['CategoryName'] = CategoryName

item['Responsibility'] = Responsibility

item['LastUpdateTime'] = LastUpdateTime

item['detail_url'] = detail_url

# print(item)

infos.append(item)

self.write_to_file(infos)

def write_to_file(self, list_):

for item in list_:

with open('infos.txt', 'a+', encoding='utf-8') as fp:

fp.writelines(str(item))

if __name__ == '__main__':

t = Tencent()

改为多线程版后

import requests, json, threading

class Tencent(object):

def __init__(self):

self.base_url = 'https://careers.tencent.com/tencentcareer/api/post/Query?'

self.headers = {

'sec-fetch-mode': 'cors',

'sec-fetch-site': 'same-origin',

'user-agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

'referer': 'https://careers.tencent.com/search.html'

}

self.parse()

def parse(self):

for i in range(1, 3):

params = {

'timestamp': '1572850838681',

'countryId': '',

'cityId': '',

'bgIds': '',

'productId': '',

'categoryId': '',

'parentCategoryId': '',

'attrId': '',

'keyword': '',

'pageIndex': str(i),

'pageSize': '10',

'language': 'zh-cn',

'area': 'cn'

}

response = requests.get(self.base_url, headers=self.headers, params=params)

self.parse_json(response.text)

def parse_json(self, text):

# 将json字符串编程python内置对象

infos = []

json_dict = json.loads(text)

for data in json_dict['Data']['Posts']:

RecruitPostName = data['RecruitPostName']

CategoryName = data['CategoryName']

Responsibility = data['Responsibility']

LastUpdateTime = data['LastUpdateTime']

detail_url = data['PostURL']

item = {}

item['RecruitPostName'] = RecruitPostName

item['CategoryName'] = CategoryName

item['Responsibility'] = Responsibility

item['LastUpdateTime'] = LastUpdateTime

item['detail_url'] = detail_url

# print(item)

infos.append(item)

self.write_to_file(infos)

def write_to_file(self, list_):

for item in list_:

with open('infos.txt', 'a+', encoding='utf-8') as fp:

fp.writelines(str(item))

if __name__ == '__main__':

tencent = Tencent()

t = threading.Thread(target=tencent.parse)

t.start()

改成多线程版的线程类:

import requests, json, threading

class Tencent(threading.Thread):

def __init__(self, i):

super().__init__()

self.i = i

self.base_url = 'https://careers.tencent.com/tencentcareer/api/post/Query?'

self.headers = {

'sec-fetch-mode': 'cors',

'sec-fetch-site': 'same-origin',

'user-agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

'referer': 'https://careers.tencent.com/search.html'

}

def run(self):

self.parse()

def parse(self):

params = {

'timestamp': '1572850838681',

'countryId': '',

'cityId': '',

'bgIds': '',

'productId': '',

'categoryId': '',

'parentCategoryId': '',

'attrId': '',

'keyword': '',

'pageIndex': str(self.i),

'pageSize': '10',

'language': 'zh-cn',

'area': 'cn'

}

response = requests.get(self.base_url, headers=self.headers, params=params)

self.parse_json(response.text)

def parse_json(self, text):

# 将json字符串编程python内置对象

infos = []

json_dict = json.loads(text)

for data in json_dict['Data']['Posts']:

RecruitPostName = data['RecruitPostName']

CategoryName = data['CategoryName']

Responsibility = data['Responsibility']

LastUpdateTime = data['LastUpdateTime']

detail_url = data['PostURL']

item = {}

item['RecruitPostName'] = RecruitPostName

item['CategoryName'] = CategoryName

item['Responsibility'] = Responsibility

item['LastUpdateTime'] = LastUpdateTime

item['detail_url'] = detail_url

# print(item)

infos.append(item)

self.write_to_file(infos)

def write_to_file(self, list_):

for item in list_:

with open('infos.txt', 'a+', encoding='utf-8') as fp:

fp.writelines(str(item) + '

')

if __name__ == '__main__':

for i in range(1, 50):

t = Tencent(i)

t.start()

这样的弊端是如果有多个多线程同时运行,会导致系统的崩溃,因此我们使用队列,控制线程数量

import requests,json,time,threading

from queue import Queue

class Tencent(threading.Thread):

def __init__(self,url,headers,name,q):

super().__init__()

self.url= url

self.name = name

self.q = q

self.headers = headers

def run(self):

self.parse()

def write_to_file(self,list_):

with open('infos1.txt', 'a+', encoding='utf-8') as fp:

for item in list_:

fp.write(str(item))

def parse_json(self,text):

#将json字符串编程python内置对象

infos = []

json_dict = json.loads(text)

for data in json_dict['Data']['Posts']:

RecruitPostName = data['RecruitPostName']

CategoryName = data['CategoryName']

Responsibility = data['Responsibility']

LastUpdateTime = data['LastUpdateTime']

detail_url = data['PostURL']

item = {}

item['RecruitPostName'] = RecruitPostName

item['CategoryName'] = CategoryName

item['Responsibility'] = Responsibility

item['LastUpdateTime'] = LastUpdateTime

item['detail_url'] = detail_url

# print(item)

infos.append(item)

self.write_to_file(infos)

def parse(self):

while True:

if self.q.empty():

break

page = self.q.get()

print(f'==================第{page}页==========================in{self.name}')

params = {

'timestamp': '1572850797210',

'countryId':'',

'cityId':'',

'bgIds':'',

'productId':'',

'categoryId':'',

'parentCategoryId':'',

'attrId':'',

'keyword':'',

'pageIndex': str(page),

'pageSize': '10',

'language': 'zh-cn',

'area': 'cn'

}

response = requests.get(self.url,params=params,headers=self.headers)

self.parse_json(response.text)

if __name__ == '__main__':

start = time.time()

base_url = 'https://careers.tencent.com/tencentcareer/api/post/Query?'

headers= {

'referer': 'https: // careers.tencent.com / search.html',

'user-agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.70 Safari/537.36',

'sec-fetch-mode': 'cors',

'sec-fetch-site': 'same-origin'

}

#1创建任务队列

q = Queue()

#2给队列添加任务,任务是每一页的页码

for page in range(1,50):

q.put(page)

# print(queue)

# while not q.empty():

# print(q.get())

#3.创建一个列表

crawl_list = ['aa','bb','cc','dd','ee']

list_ = []

for name in crawl_list:

t = Tencent(base_url,headers,name,q)

t.start()

list_.append(t)

for l in list_:

l.join()

# 3.4171955585479736

print(time.time()-start)

案例 17:爬取英雄联盟所有英雄名字和技能(selenium+phantomjs+ajax 接口)

from selenium import webdriver

from lxml import etree

import requests, json

driver = webdriver.PhantomJS()

base_url = 'https://lol.qq.com/data/info-heros.shtml'

driver.get(base_url)

html = etree.HTML(driver.page_source)

hero_url_list = html.xpath('.//ul[@id="jSearchHeroDiv"]/li/a/@href')

hero_list = [] # 存放所有英雄的列表

for hero_url in hero_url_list:

id = hero_url.split('=')[-1]

# print(id)

detail_url = 'https://game.gtimg.cn/images/lol/act/img/js/hero/' + id + '.js'

# print(detail_url)

headers = {

'Referer': 'https://lol.qq.com/data/info-defail.shtml?id =4',

'Sec-Fetch-Mode': 'cors',

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36'

}

response = requests.get(detail_url, headers=headers)

n = json.loads(response.text)

hero = [] # 存放单个英雄

item_name = {}

item_name['英雄名字'] = n['hero']['name'] + ' ' + n['hero']['title']

hero.append(item_name)

for i in n['spells']: # 技能

item_skill = {}

item_skill['技能名字'] = i['name']

item_skill['技能描述'] = i['description']

hero.append(item_skill)

hero_list.append(hero)

# print(hero_list)

with open('hero.json','w') as file:

json.dump(hero_list,file)

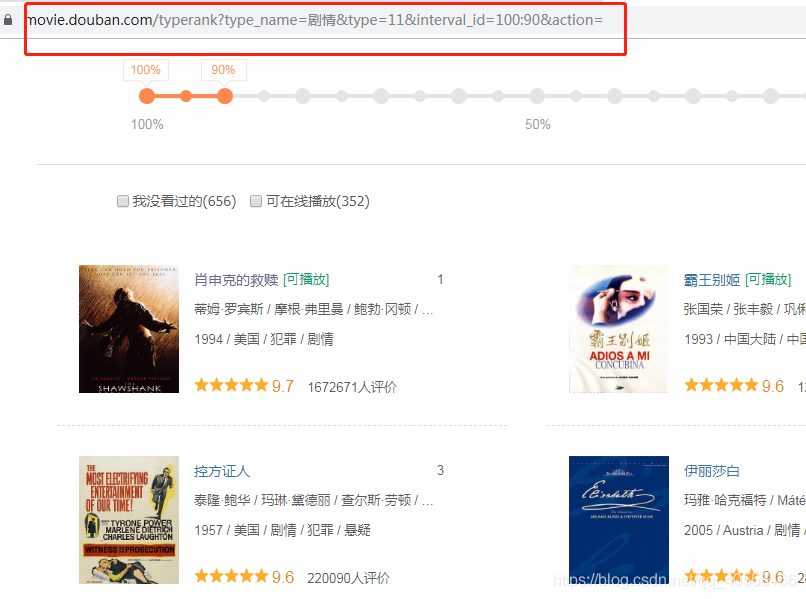

案例 18:爬取豆瓣电影(requests + 多线程)

需求:获得每个分类里的所有电影

import json

import re, requests

from lxml import etree

# 获取网页的源码

def get_content(url, headers):

response = requests.get(url, headers=headers)

return response.text

# 获取电影指定信息

def get_movie_info(text):

text = json.loads(text)

item = {}

for data in text:

score = data['score']

image = data['cover_url']

title = data['title']

actors = data['actors']

detail_url = data['url']

vote_count = data['vote_count']

types = data['types']

item['评分'] = score

item['图片'] = image

item['电影名'] = title

item['演员'] = actors

item['详情页链接'] = detail_url

item['评价数'] = vote_count

item['电影类别'] = types

print(item)

# 获取电影api数据的

def get_movie(type, url):

headers = {

'X-Requested-With': 'XMLHttpRequest',

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

}

n = 0

# 获取api数据,并判断分页

while True:

text = get_content(url.format(type, n), headers=headers)

if text == '[]':

break

get_movie_info(text)

n += 20

# 主方法

def main():

base_url = 'https://movie.douban.com/chart'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

'Referer': 'https://movie.douban.com/explore'

}

html_str = get_content(base_url, headers=headers) # 分类页首页

html = etree.HTML(html_str)

movie_urls = html.xpath('//div[@class="types"]/span/a/@href') # 获得每个分类的连接,但是切割type

for url in movie_urls:

p = re.compile('type=(.*?)&interval_id=')

type_ = p.search(url).group(1)

ajax_url = 'https://movie.douban.com/j/chart/top_list?type={}&interval_id=100%3A90&action=&start={}&limit=20'

get_movie(type_, ajax_url)

if __name__ == '__main__':

main()

多线程

import json, threading

import re, requests

from lxml import etree

from queue import Queue

class DouBan(threading.Thread):

def __init__(self, q=None):

super().__init__()

self.base_url = 'https://movie.douban.com/chart'

self.headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

'Referer': 'https://movie.douban.com/explore'

}

self.q = q

self.ajax_url = 'https://movie.douban.com/j/chart/top_list?type={}&interval_id=100%3A90&action=&start={}&limit=20'

# 获取网页的源码

def get_content(self, url, headers):

response = requests.get(url, headers=headers)

return response.text

# 获取电影指定信息

def get_movie_info(self, text):

text = json.loads(text)

item = {}

for data in text:

score = data['score']

image = data['cover_url']

title = data['title']

actors = data['actors']

detail_url = data['url']

vote_count = data['vote_count']

types = data['types']

item['评分'] = score

item['图片'] = image

item['电影名'] = title

item['演员'] = actors

item['详情页链接'] = detail_url

item['评价数'] = vote_count

item['电影类别'] = types

print(item)

# 获取电影api数据的

def get_movie(self):

headers = {

'X-Requested-With': 'XMLHttpRequest',

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

}

# 获取api数据,并判断分页

while True:

if self.q.empty():

break

n = 0

while True:

text = self.get_content(self.ajax_url.format(self.q.get(), n), headers=headers)

if text == '[]':

break

self.get_movie_info(text)

n += 20

# 获取所有类型的type——id

def get_types(self):

html_str = self.get_content(self.base_url, headers=self.headers) # 分类页首页

html = etree.HTML(html_str)

types = html.xpath('//div[@class="types"]/span/a/@href') # 获得每个分类的连接,但是切割type

# print(types)

type_list = []

for i in types:

p = re.compile('type=(.*?)&interval_id=') # 筛选id,拼接到api接口的路由

type = p.search(i).group(1)

type_list.append(type)

return type_list

def run(self):

self.get_movie()

if __name__ == '__main__':

# 创建消息队列

q = Queue()

# 将任务队列初始化,将我们的type放到消息队列中

t = DouBan()

types = t.get_types()

for tp in types:

q.put(tp[0])

# 创建一个列表,列表的数量就是开启线程的树木

crawl_list = [1, 2, 3, 4]

for crawl in crawl_list:

# 实例化对象

movie = DouBan(q=q)

movie.start()

案例 19:爬取瓜子二手车的所有车(requests)

需求:获得每个车类型的所有信息

import json

import requests, re

from lxml import etree

# 获取网页的源码

def get_content(url, headers):

response = requests.get(url, headers=headers)

return response.text

# 获取子页原代码

def get_info(text):

item = {}

title_list = text.xpath('//ul[@class="carlist clearfix js-top"]/li/a/@title')

price_list = text.xpath('//div[@class="t-price"]/p/text()')

year_list = text.xpath('//div[@class="t-i"]/text()[1]')

millon_list = text.xpath('//div[@class="t-i"]/text()[2]')

picture_list = text.xpath('//ul[@class="carlist clearfix js-top"]/li/a/img/@src')

details_list = text.xpath('//ul[@class="carlist clearfix js-top"]/li/a/@href')

for i, title in enumerate(title_list):

item['标题'] = title

item['价格'] = price_list[i] + '万'

item['公里数'] = millon_list[i]

item['年份'] = year_list[i]

item['照片链接'] = picture_list[i]

item['详情页链接'] = 'https://www.guazi.com' + details_list[i]

print(item)

# 主函数

def main():

base_url = 'https://www.guazi.com/bj/buy/'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

'Cookie': 'track_id=7534369675321344; uuid=c129325e-6fea-4fd0-dea5-3632997e0419; antipas=wL2L859nHt69349594j71850u61; cityDomain=bj; clueSourceCode=10103000312%2300; user_city_id=12; ganji_uuid=6616956591030214317551; sessionid=5f3261c7-27a6-4bd6-e909-f70312d46c39; lg=1; cainfo=%7B%22ca_a%22%3A%22-%22%2C%22ca_b%22%3A%22-%22%2C%22ca_s%22%3A%22pz_baidu%22%2C%22ca_n%22%3A%22tbmkbturl%22%2C%22ca_medium%22%3A%22-%22%2C%22ca_term%22%3A%22-%22%2C%22ca_content%22%3A%22%22%2C%22ca_campaign%22%3A%22%22%2C%22ca_kw%22%3A%22-%22%2C%22ca_i%22%3A%22-%22%2C%22scode%22%3A%2210103000312%22%2C%22keyword%22%3A%22-%22%2C%22ca_keywordid%22%3A%22-%22%2C%22ca_transid%22%3A%22%22%2C%22platform%22%3A%221%22%2C%22version%22%3A1%2C%22track_id%22%3A%227534369675321344%22%2C%22display_finance_flag%22%3A%22-%22%2C%22client_ab%22%3A%22-%22%2C%22guid%22%3A%22c129325e-6fea-4fd0-dea5-3632997e0419%22%2C%22ca_city%22%3A%22bj%22%2C%22sessionid%22%3A%225f3261c7-27a6-4bd6-e909-f70312d46c39%22%7D; preTime=%7B%22last%22%3A1572951901%2C%22this%22%3A1572951534%2C%22pre%22%3A1572951534%7D',

}

html = etree.HTML(get_content(base_url, headers))

brand_url_list = html.xpath('//div[@class="dd-all clearfix js-brand js-option-hid-info"]/ul/li/p/a/@href')

for url in brand_url_list:

headers = {

'Referer': 'https://www.guazi.com/bj/buy/',

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

'Cookie': 'track_id=7534369675321344; uuid=c129325e-6fea-4fd0-dea5-3632997e0419; antipas=wL2L859nHt69349594j71850u61; cityDomain=bj; clueSourceCode=10103000312%2300; user_city_id=12; ganji_uuid=6616956591030214317551; sessionid=5f3261c7-27a6-4bd6-e909-f70312d46c39; lg=1; cainfo=%7B%22ca_a%22%3A%22-%22%2C%22ca_b%22%3A%22-%22%2C%22ca_s%22%3A%22pz_baidu%22%2C%22ca_n%22%3A%22tbmkbturl%22%2C%22ca_medium%22%3A%22-%22%2C%22ca_term%22%3A%22-%22%2C%22ca_content%22%3A%22%22%2C%22ca_campaign%22%3A%22%22%2C%22ca_kw%22%3A%22-%22%2C%22ca_i%22%3A%22-%22%2C%22scode%22%3A%2210103000312%22%2C%22keyword%22%3A%22-%22%2C%22ca_keywordid%22%3A%22-%22%2C%22ca_transid%22%3A%22%22%2C%22platform%22%3A%221%22%2C%22version%22%3A1%2C%22track_id%22%3A%227534369675321344%22%2C%22display_finance_flag%22%3A%22-%22%2C%22client_ab%22%3A%22-%22%2C%22guid%22%3A%22c129325e-6fea-4fd0-dea5-3632997e0419%22%2C%22ca_city%22%3A%22bj%22%2C%22sessionid%22%3A%225f3261c7-27a6-4bd6-e909-f70312d46c39%22%7D; preTime=%7B%22last%22%3A1572953403%2C%22this%22%3A1572951534%2C%22pre%22%3A1572951534%7D',

}

brand_url = 'https://www.guazi.com' + url.split('/#')[0] + '/o%s/#bread' # 拼接每个品牌汽车的url

for i in range(1, 3):

html = etree.HTML(get_content(brand_url % i, headers=headers))

get_info(html)

if __name__ == '__main__':

main()

多线程:

import requests, threading

from lxml import etree

from queue import Queue

class Guazi(threading.Thread):

def __init__(self, list_=None):

super().__init__()

self.base_url = 'https://www.guazi.com/bj/buy/'

self.headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

'Cookie': 'track_id=7534369675321344; uuid=c129325e-6fea-4fd0-dea5-3632997e0419; antipas=wL2L859nHt69349594j71850u61; cityDomain=bj; clueSourceCode=10103000312%2300; user_city_id=12; ganji_uuid=6616956591030214317551; sessionid=5f3261c7-27a6-4bd6-e909-f70312d46c39; lg=1; cainfo=%7B%22ca_a%22%3A%22-%22%2C%22ca_b%22%3A%22-%22%2C%22ca_s%22%3A%22pz_baidu%22%2C%22ca_n%22%3A%22tbmkbturl%22%2C%22ca_medium%22%3A%22-%22%2C%22ca_term%22%3A%22-%22%2C%22ca_content%22%3A%22%22%2C%22ca_campaign%22%3A%22%22%2C%22ca_kw%22%3A%22-%22%2C%22ca_i%22%3A%22-%22%2C%22scode%22%3A%2210103000312%22%2C%22keyword%22%3A%22-%22%2C%22ca_keywordid%22%3A%22-%22%2C%22ca_transid%22%3A%22%22%2C%22platform%22%3A%221%22%2C%22version%22%3A1%2C%22track_id%22%3A%227534369675321344%22%2C%22display_finance_flag%22%3A%22-%22%2C%22client_ab%22%3A%22-%22%2C%22guid%22%3A%22c129325e-6fea-4fd0-dea5-3632997e0419%22%2C%22ca_city%22%3A%22bj%22%2C%22sessionid%22%3A%225f3261c7-27a6-4bd6-e909-f70312d46c39%22%7D; preTime=%7B%22last%22%3A1572951901%2C%22this%22%3A1572951534%2C%22pre%22%3A1572951534%7D',

}

self.list_ = list_

# 获取网页的源码

def get_content(self, url, headers):

response = requests.get(url, headers=headers)

return response.text

# 获取子页原代码

def get_info(self, text):

item = {}

title_list = text.xpath('//ul[@class="carlist clearfix js-top"]/li/a/@title')

price_list = text.xpath('//div[@class="t-price"]/p/text()')

year_list = text.xpath('//div[@class="t-i"]/text()[1]')

millon_list = text.xpath('//div[@class="t-i"]/text()[2]')

picture_list = text.xpath('//ul[@class="carlist clearfix js-top"]/li/a/img/@src')

details_list = text.xpath('//ul[@class="carlist clearfix js-top"]/li/a/@href')

for i, title in enumerate(title_list):

item['标题'] = title

item['价格'] = price_list[i] + '万'

item['公里数'] = millon_list[i]

item['年份'] = year_list[i]

item['照片链接'] = picture_list[i]

item['详情页链接'] = 'https://www.guazi.com' + details_list[i]

print(item)

# 获取汽车链接列表

def get_carsurl(self):

html = etree.HTML(self.get_content(self.base_url, self.headers))

brand_url_list = html.xpath('//div[@class="dd-all clearfix js-brand js-option-hid-info"]/ul/li/p/a/@href')

brand_url_list = ['https://www.guazi.com' + url.split('/#')[0] + '/o%s/#bread' for url in brand_url_list]

return brand_url_list

def run(self):

while True:

if self.list_.empty():

break

url = self.list_.get()

for i in range(1, 3):

html = etree.HTML(self.get_content(url % i, headers=self.headers))

self.get_info(html)

if __name__ == '__main__':

q = Queue()

gz = Guazi()

cars_url = gz.get_carsurl()

for car in cars_url:

q.put(car)

# 创建一个列表,列表的数量就是开启线程的树木

crawl_list = [1, 2, 3, 4]

for crawl in crawl_list:

# 实例化对象

car = Guazi(list_=q)

car.start()

结果:

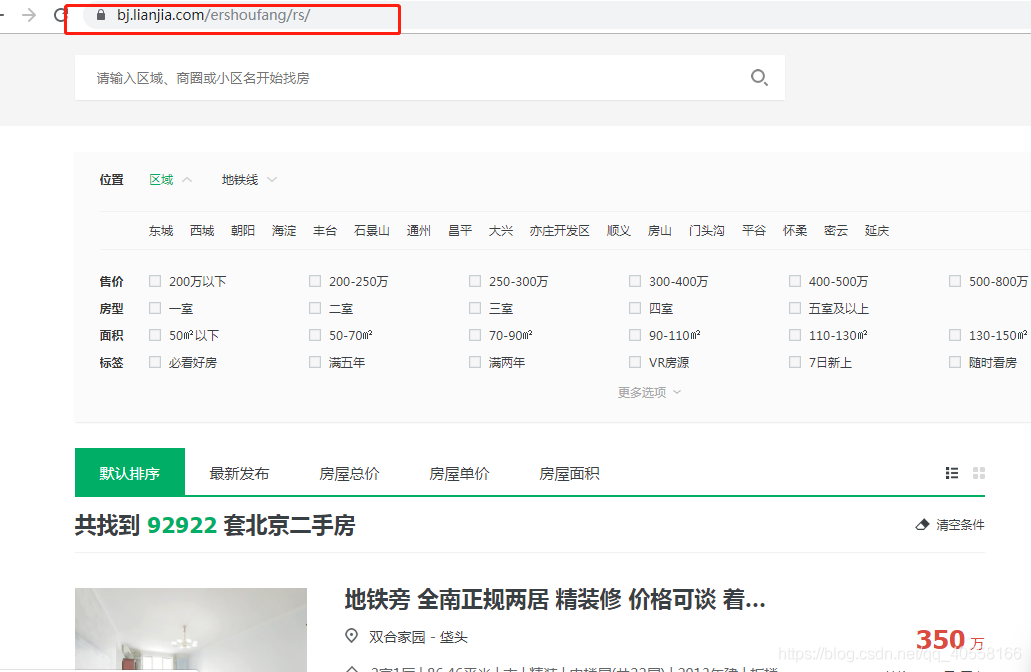

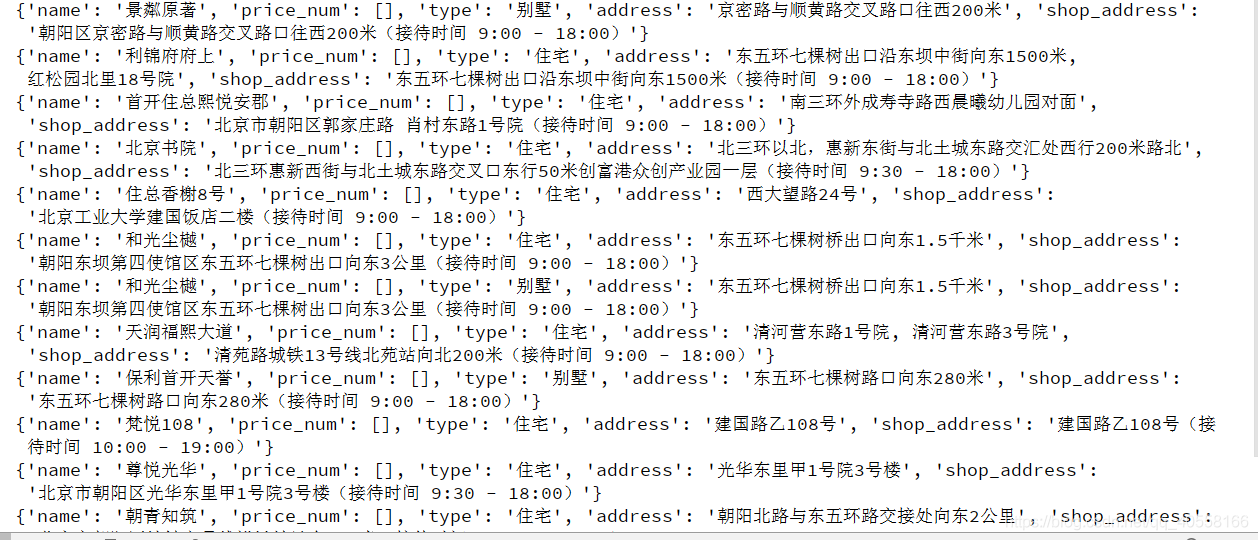

案例 20:爬取链家网北京每个区域的所有房子(selenium+Phantomjs + 多线程)

#爬取链家二手房信息。

# 要求:

# 1.爬取的字段:

# 名称,房间规模、价格,建设时间,朝向,详情页链接

# 2.写三个文件:

# 1.简单py 2.面向对象 3.改成多线程

from selenium import webdriver

from lxml import etree

def get_element(url):

driver.get(url)

html = etree.HTML(driver.page_source)

return html

lis = [] # 存放所有区域包括房子

driver = webdriver.PhantomJS()

html = get_element('https://bj.lianjia.com/ershoufang/')

city_list = html.xpath('//div[@data-role="ershoufang"]/div/a/@href')

city_name_list = html.xpath('//div[@data-role="ershoufang"]/div/a/text()')

for num, city in enumerate(city_list):

item = {} # 存放一个区域

sum_house = [] # 存放每个区域的房子

item['区域'] = city_name_list[num] # 城区名字

for page in range(1, 3):

city_url = 'https://bj.lianjia.com' + city + 'pg' + str(page)

html = get_element(city_url)

'''名称, 房间规模,建设时间, 朝向, 详情页链接'''

title_list = html.xpath('//div[@class="info clear"]/div/a/text()') # 所有标题

detail_url_list = html.xpath('//div[@class="info clear"]/div/a/@href') # 所有详情页

detail_list = html.xpath('//div[@class="houseInfo"]/text()') # 该页所有的房子信息列表,

city_price_list = html.xpath('//div[@class="totalPrice"]/span/text()')

for i, content in enumerate(title_list):

house = {}

detail = detail_list[i].split('|')

house['名称'] = content # 名称

house['价格']=city_price_list[i]+'万'#价格

house['规模'] = detail[0] + detail[1] # 规模

house['建设时间'] = detail[-2] # 建设时间

house['朝向'] = detail[2] # 朝向

house['详情链接'] = detail_url_list[i] # 详情链接

sum_house.append(house)

item['二手房'] = sum_house

print(item)

lis.append(item)

面向对象 + 多线程:

import json, threading

from selenium import webdriver

from lxml import etree

from queue import Queue

class Lianjia(threading.Thread):

def __init__(self, city_list=None, city_name_list=None):

super().__init__()

self.driver = webdriver.PhantomJS()

self.city_name_list = city_name_list

self.city_list = city_list

def get_element(self, url): # 获取element对象的

self.driver.get(url)

html = etree.HTML(self.driver.page_source)

return html

def get_city(self):

html = self.get_element('https://bj.lianjia.com/ershoufang/')

city_list = html.xpath('//div[@data-role="ershoufang"]/div/a/@href')

city_list = ['https://bj.lianjia.com' + url + 'pg%s' for url in city_list]

city_name_list = html.xpath('//div[@data-role="ershoufang"]/div/a/text()')

return city_list, city_name_list

def run(self):

lis = [] # 存放所有区域包括房子

while True:

if self.city_name_list.empty() and self.city_list.empty():

break

item = {} # 存放一个区域

sum_house = [] # 存放每个区域的房子

item['区域'] = self.city_name_list.get() # 城区名字

for page in range(1, 3):

# print(self.city_list.get())

html = self.get_element(self.city_list.get() % page)

'''名称, 房间规模,建设时间, 朝向, 详情页链接'''

title_list = html.xpath('//div[@class="info clear"]/div/a/text()') # 所有标题

detail_url_list = html.xpath('//div[@class="info clear"]/div/a/@href') # 所有详情页

detail_list = html.xpath('//div[@class="houseInfo"]/text()') # 该页所有的房子信息列表,

for i, content in enumerate(title_list):

house = {}

detail = detail_list[i].split('|')

house['名称'] = content # 名称

house['规模'] = detail[0] + detail[1] # 规模

house['建设时间'] = detail[-2] # 建设时间

house['朝向'] = detail[2] # 朝向

house['详情链接'] = detail_url_list[i] # 详情链接

sum_house.append(house)

item['二手房'] = sum_house

lis.append(item)

print(item)

if __name__ == '__main__':

q1 = Queue()#路由

q2 = Queue()#名字

lj = Lianjia()

city_url, city_name = lj.get_city()

for c in city_url:

q1.put(c)

for c in city_name:

q2.put(c)

# 创建一个列表,列表的数量就是开启线程的数量

crawl_list = [1, 2, 3, 4, 5]

for crawl in crawl_list:

# 实例化对象

LJ = Lianjia(city_name_list=q2,city_list=q1)

LJ.start()

结果:

案例 21:爬取笔趣阁的所有小说(requests)

import requests

from lxml import etree

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

'Referer': 'http://www.xbiquge.la/7/7931/',

'Cookie': '_abcde_qweasd=0; BAIDU_SSP_lcr=https://www.baidu.com/link?url=jUBgtRGIR19uAr-RE9YV9eHokjmGaII9Ivfp8FJIwV7&wd=&eqid=9ecb04b9000cdd69000000035dc3f80e; Hm_lvt_169609146ffe5972484b0957bd1b46d6=1573124137; _abcde_qweasd=0; bdshare_firstime=1573124137783; Hm_lpvt_169609146ffe5972484b0957bd1b46d6=1573125463',

'Accept-Encoding': 'gzip, deflate'

}

# 获取网站源码

def get_text(url, headers):

response = requests.get(url, headers=headers)

response.encoding = 'utf-8'

return response.text

# 获取小说的信息

def get_novelinfo(list1, name_list):

for i, url in enumerate(list1):

html = etree.HTML(get_text(url, headers))

name = name_list[i] # 书名

title_url = html.xpath('//div[@id="list"]/dl/dd/a/@href')

title_url = ['http://www.xbiquge.la' + i for i in title_url] # 章节地址

titlename_list = html.xpath('//div[@id="list"]/dl/dd/a/text()') # 章节名字列表

get_content(title_url, titlename_list, name)

# # 获取小说每章节的内容

def get_content(url_list, title_list, name):

for i, url in enumerate(url_list):

item = {}

html = etree.HTML(get_text(url, headers))

content_list = html.xpath('//div[@id="content"]/text()')

content = ''.join(content_list)

content=content+'

'

item['title'] = title_list[i]

item['content'] = content.replace('

', '

').replace('xa0', ' ')

print(item)

with open(name + '.txt', 'a+',encoding='utf-8') as file:

file.write(item['title']+'

')

file.write(item['content'])

def main():

base_url = 'http://www.xbiquge.la/xiaoshuodaquan/'

html = etree.HTML(get_text(base_url, headers))

novelurl_list = html.xpath('//div[@class="novellist"]/ul/li/a/@href')

name_list = html.xpath('//div[@class="novellist"]/ul/li/a/text()')

get_novelinfo(novelurl_list, name_list)

if __name__ == '__main__':

main()

多线程

import requests, threading

from lxml import etree

from queue import Queue

class Novel(threading.Thread):

def __init__(self, novelurl_list=None, name_list=None):

super().__init__()

self.headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

'Referer': 'http://www.xbiquge.la/7/7931/',

'Cookie': '_abcde_qweasd=0; BAIDU_SSP_lcr=https://www.baidu.com/link?url=jUBgtRGIR19uAr-RE9YV9eHokjmGaII9Ivfp8FJIwV7&wd=&eqid=9ecb04b9000cdd69000000035dc3f80e; Hm_lvt_169609146ffe5972484b0957bd1b46d6=1573124137; _abcde_qweasd=0; bdshare_firstime=1573124137783; Hm_lpvt_169609146ffe5972484b0957bd1b46d6=1573125463',

'Accept-Encoding': 'gzip, deflate'

}

self.novelurl_list = novelurl_list

self.name_list = name_list

# 获取网站源码

def get_text(self, url):

response = requests.get(url, headers=self.headers)

response.encoding = 'utf-8'

return response.text

# 获取小说的信息

def get_novelinfo(self):

while True:

if self.name_list.empty() and self.novelurl_list.empty():

break

url = self.novelurl_list.get()

# print(url)

html = etree.HTML(self.get_text(url))

name = self.name_list.get() # 书名

# print(name)

title_url = html.xpath('//div[@id="list"]/dl/dd/a/@href')

title_url = ['http://www.xbiquge.la' + i for i in title_url] # 章节地址

titlename_list = html.xpath('//div[@id="list"]/dl/dd/a/text()') # 章节名字列表

self.get_content(title_url, titlename_list, name)

# # 获取小说每章节的内容

def get_content(self, url_list, title_list, name):

for i, url in enumerate(url_list):

item = {}

html = etree.HTML(self.get_text(url))

content_list = html.xpath('//div[@id="content"]/text()')

content = ''.join(content_list)

content = content + '

'

item['title'] = title_list[i]

item['content'] = content.replace('

', '

').replace('xa0', ' ')

print(item)

with open(name + '.txt', 'a+', encoding='utf-8') as file:

file.write(item['title'] + '

')

file.write(item['content'])

#------------------通过多线程,返回每本书的名字和每本书的连接

def get_name_url(self):

base_url = 'http://www.xbiquge.la/xiaoshuodaquan/'

html = etree.HTML(self.get_text(base_url))

novelurl_list = html.xpath('//div[@class="novellist"]/ul/li/a/@href')

name_list = html.xpath('//div[@class="novellist"]/ul/li/a/text()')

return novelurl_list, name_list

def run(self):

self.get_novelinfo()

if __name__ == '__main__':

n = Novel()

url_list, name_list = n.get_name_url()

name_queue = Queue()

url_queue = Queue()

for url in url_list:

url_queue.put(url)

for name in name_list:

name_queue.put(name)

crawl_list = [1, 2, 3, 4, 5] # 定义五个线程

for crawl in crawl_list:

# 实例化对象

novel = Novel(name_list=name_queue, novelurl_list=url_queue)

novel.start()

结果:

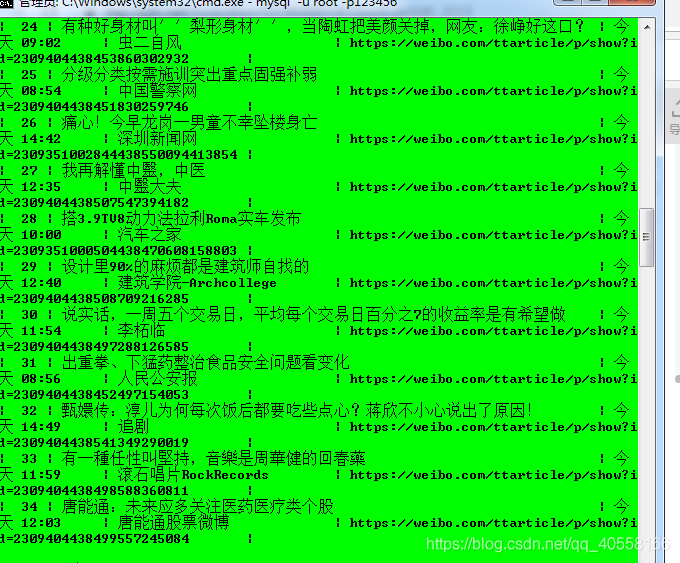

案例 22:爬取新浪微博头条前 20 页(ajax+mysql)

import requests, pymysql

from lxml import etree

def get_element(i):

base_url = 'https://weibo.com/a/aj/transform/loadingmoreunlogin?'

headers = {

'Referer': 'https://weibo.com/?category=1760',

'Sec-Fetch-Mode': 'cors',

'Sec-Fetch-Site': 'same-origin',

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

'X-Requested-With': 'XMLHttpRequest'

}

params = {

'ajwvr': '6',

'category': '1760',

'page': i,

'lefnav': '0',

'cursor': '',

'__rnd': '1573735870072',

}

response = requests.get(base_url, headers=headers, params=params)

response.encoding = 'utf-8'

info = response.json()

return etree.HTML(info['data'])

def main():

for i in range(1, 20):

html = get_element(i)

# 标题,发布人,发布时间,详情链接

title = html.xpath('//a[@class="S_txt1"]/text()')

author_time = html.xpath('//span[@class]/text()')

author = [author_time[i] for i in range(len(author_time)) if i % 2 == 0]

time = [author_time[i] for i in range(len(author_time)) if i % 2 == 1]

url = html.xpath('//a[@class="S_txt1"]/@href')

for j,tit in enumerate(title):

title1=tit

time1=time[j]

url1=url[j]

author1=author[j]

# print(title1,url1,time1,author1)

connect_mysql(title1,time1,author1,url1)

def connect_mysql(title, time, author, url):

db = pymysql.connect(host='localhost', user='root', password='123456',database='news')

cursor = db.cursor()

sql = 'insert into sina_news(title,send_time,author,url) values("' + title + '","' + time + '","' + author + '","' + url + '")'

print(sql)

cursor.execute(sql)

db.commit()

cursor.close()

db.close()

if __name__ == '__main__':

main()

提前创库 news 和表 sina_news

create table sina_news(

id int not null auto_increment primary key,

title varchar(100),

send_time varchar(100),

author varchar(20),

url varchar(100)

);

案例 23:爬取搜狗指定图片(requests + 多线程)

```python

import requests, json, threading, time, os

from queue import Queue

class Picture(threading.Thread):

# 初始化

def __init__(self, num, search, url_queue=None):

super().__init__()

self.headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36'

}

self.num = num

self.search = search

# 获取爬取的页数的每页图片接口url

def get_url(self):

url_list = []

for start in range(self.num):

url = 'https://pic.sogou.com/pics?query=' + self.search + '&mode=1&start=' + str(

start * 48) + '&reqType=ajax&reqFrom=result&tn=0'

url_list.append(url)

return url_list

# 获取每页的接口资源详情

def get_page(self, url):

response = requests.get(url.format('蔡徐坤'), headers=self.headers)

return response.text

#

def run(self):

while True:

# 如果队列为空代表制定页数爬取完毕

if url_queue.empty():

break

else:

url = url_queue.get() # 本页地址

data = json.loads(self.get_page(url)) # 获取到本页图片接口资源

try:

# 每页48张图片

for i in range(1, 49):

pic = data['items'][i]['pic_url']

reponse = requests.get(pic)

# 如果文件夹不存在,则创建

if not os.path.exists(r'C:/Users/Administrator/Desktop/' + self.search):

os.mkdir(r'C:/Users/Administrator/Desktop/' + self.search)

with open(r'C:/Users/Administrator/Desktop/' + self.search + '/%s.jpg' % (

str(time.time()).replace('.', '_')), 'wb') as f:

f.write(reponse.content)

print('下载成功!')

except:

print('该页图片保存完毕')

if __name__ == '__main__':

# 1.获取初始化的爬取url

num = int(input('请输入爬取页数(每页48张):'))

content = input('请输入爬取内容:')

pic = Picture(num, content)

url_list = pic.get_url()

# 2.创建队列

url_queue = Queue()

for i in url_list:

url_queue.put(i)

# 3.创建线程任务

crawl = [1, 2, 3, 4, 5]

for i in crawl:

pic = Picture(num, content, url_queue=url_queue)

pic.start()

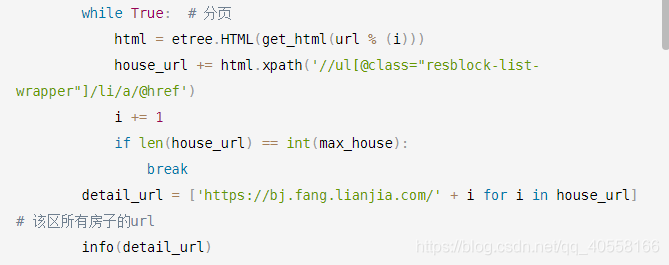

案例 24:爬取链家网北京所有房子(requests + 多线程)

链家:https://bj.fang.lianjia.com/loupan/

-

1、获取所有的城市的拼音

-

2、根据拼音去拼接 url,获取所有的数据。

-

3、列表页:楼盘名称,均价,建筑面积,区域,商圈详情页:户型([“8 室 5 厅 8 卫”, “4 室 2 厅 3 卫”, “5 室 2 厅 2 卫”]), 朝向,图片(列表),用户点评(选爬)

难点 1: 当该区没房子的时候,猜你喜欢这个会和有房子的块 class 一样,因此需要判断  难点 2: 获取每个区的页数,使用 js 将页数隐藏 https://bj.fang.lianjia.com/loupan / 区 / pg 页数 %s 我们可以发现规律,明明三页,当我们写 pg5 时候,会跳转第一页 因此我们可以使用 while 判断,当每个房子的链接和该区最大房子数相等代表该区爬取完毕

难点 2: 获取每个区的页数,使用 js 将页数隐藏 https://bj.fang.lianjia.com/loupan / 区 / pg 页数 %s 我们可以发现规律,明明三页,当我们写 pg5 时候,会跳转第一页 因此我们可以使用 while 判断,当每个房子的链接和该区最大房子数相等代表该区爬取完毕

完整代码:

import requests

from lxml import etree

# 获取网页源码

def get_html(url):

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

}

response = requests.get(url, headers=headers)

return response.text

# 获取城市拼音列表

def get_city_url():

url = 'https://bj.fang.lianjia.com/loupan/'

html = etree.HTML(get_html(url))

city = html.xpath('//div[@class="filter-by-area-container"]/ul/li/@data-district-spell')

city_url = ['https://bj.fang.lianjia.com/loupan/{}/pg%s'.format(i) for i in city]

return city_url

# 爬取对应区的所有房子url

def get_detail(url):

# 使用第一页来判断是否有分页

html = etree.HTML(get_html(url % (1)))

empty = html.xpath('//div[@class="no-result-wrapper hide"]')

if len(empty) != 0: # 不存在此标签代表没有猜你喜欢

i = 1

max_house = html.xpath('//span[@class="value"]/text()')[0]

house_url = []

while True: # 分页

html = etree.HTML(get_html(url % (i)))

house_url += html.xpath('//ul[@class="resblock-list-wrapper"]/li/a/@href')

i += 1

if len(house_url) == int(max_house):

break

detail_url = ['https://bj.fang.lianjia.com/' + i for i in house_url] # 该区所有房子的url

info(detail_url)

# 获取每个房子的详细信息

def info(url):

for i in url:

item = {}

page = etree.HTML(get_html(i))

item['name'] = page.xpath('//h2[@class="DATA-PROJECT-NAME"]/text()')[0]

item['price_num'] = page.xpath('//span[@class="price-number"]/text()')[0] + page.xpath(

'//span[@class="price-unit"]/text()')[0]

detail_page = etree.HTML(get_html(i + 'xiangqing'))

item['type'] = detail_page.xpath('//ul[@class="x-box"]/li[1]/span[2]/text()')[0]

item['address'] = detail_page.xpath('//ul[@class="x-box"]/li[5]/span[2]/text()')[0]

item['shop_address'] = detail_page.xpath('//ul[@class="x-box"]/li[6]/span[2]/text()')[0]

print(item)

def main():

# 1、获取所有的城市的拼音

city = get_city_url()

# 2、根据拼音去拼接url,获取所有的数据。

for url in city:

get_detail(url)

if __name__ == '__main__':

main()

多线程版:

import requests, threading

from lxml import etree

from queue import Queue

import pymongo

class House(threading.Thread):

def __init__(self, q=None):

super().__init__()

self.headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36',

}

self.q = q

# 获取网页源码

def get_html(self, url):

response = requests.get(url, headers=self.headers)

return response.text

# 获取城市拼音列表

def get_city_url(self):

url = 'https://bj.fang.lianjia.com/loupan/'

html = etree.HTML(self.get_html(url))

city = html.xpath('//div[@class="filter-by-area-container"]/ul/li/@data-district-spell')

city_url = ['https://bj.fang.lianjia.com/loupan/{}/pg%s'.format(i) for i in city]

return city_url

# 爬取对应区的所有房子url

def get_detail(self, url):

# 使用第一页来判断是否有分页

html = etree.HTML(self.get_html(url % (1)))

empty = html.xpath('//div[@class="no-result-wrapper hide"]')

if len(empty) != 0: # 不存在此标签代表没有猜你喜欢

i = 1

max_house = html.xpath('//span[@class="value"]/text()')[0]

house_url = []

while True: # 分页

html = etree.HTML(self.get_html(url % (i)))

house_url += html.xpath('//ul[@class="resblock-list-wrapper"]/li/a/@href')

i += 1

if len(house_url) == int(max_house):

break

detail_url = ['https://bj.fang.lianjia.com/' + i for i in house_url] # 该区所有房子的url

self.info(detail_url)

# 获取每个房子的详细信息

def info(self, url):

for i in url:

item = {}

page = etree.HTML(self.get_html(i))

item['name'] = page.xpath('//h2[@class="DATA-PROJECT-NAME"]/text()')[0]

item['price_num'] = page.xpath('//span[@class="price-number"]/text()')[0] + page.xpath(

'//span[@class="price-unit"]/text()')[0]

detail_page = etree.HTML(self.get_html(i + 'xiangqing'))

item['type'] = detail_page.xpath('//ul[@class="x-box"]/li[1]/span[2]/text()')[0]

item['address'] = detail_page.xpath('//ul[@class="x-box"]/li[5]/span[2]/text()')[0]

item['shop_address'] = detail_page.xpath('//ul[@class="x-box"]/li[6]/span[2]/text()')[0]

print(item)

def run(self):

# 1、获取所有的城市的拼音

# city = self.get_city_url()

# 2、根据拼音去拼接url,获取所有的数据。

while True:

if self.q.empty():

break

self.get_detail(self.q.get())

if __name__ == '__main__':

# 1.先获取区列表

house = House()

city_list = house.get_city_url()

# 2.将去加入队列

q = Queue()

for i in city_list:

q.put(i)

# 3.创建线程任务

a = [1, 2, 3, 4]

for i in a:

p = House(q)

p.start()