背景

- SQLSERVER数据库中单表数据几十亿,分区方案也已经无法查询出结果。故:采用导出功能,导出数据到Text文本(文本>40G)中。

- 因上原因,所以本次的实验样本为:【数据量:61w条,文本大小:74M】

选择DataX原因

- 试图维持统一的异构数据源同步方案。(其实行不通)

- 试图进入Hive时,已经是压缩ORC格式,降低存储大小,提高列式查询效率,以便后续查询HIVE数据导入KUDU时提高效率(其实行不通)

1. 建HIVE表

进入HIVE,必须和TextFile中的字段类型保持一致

create table event_hive_3(

`#auto_id` string

,`#product_id` int

,`#event_name` string

,`#part_date` int

,`#server_id` int

,`#account_id` bigint

,`#user_id` bigint

,part_time STRING

,GetItemID bigint

,ConsumeMoneyNum bigint

,Price bigint

,GetItemCnt bigint

,TaskState bigint

,TaskType bigint

,BattleLev bigint

,Level bigint

,ItemID bigint

,ItemCnt bigint

,MoneyNum bigint

,MoneyType bigint

,VIP bigint

,LogID bigint

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ' '

STORED AS ORC;

2. 建Kudu表

这个过程,自行发挥~

#Idea中,执行单元测试【EventAnalysisRepositoryTest.createTable()】即可

public void createTable() throws Exception {

repository.getClient();

repository.createTable(Event_Sjmy.class,true);

}

3. 建立Impala表

进入Impala-shell 或者hue;

use sd_dev_sdk_mobile;

CREATE EXTERNAL TABLE `event_sjmy_datax` STORED AS KUDU

TBLPROPERTIES(

'kudu.table_name' = 'event_sjmy_datax',

'kudu.master_addresses' = 'sdmain:7051')

4. 编辑Datax任务

不直接load进hive的目的是为了进行一步文件压缩,降低内存占用,转为列式存储。

# 编辑一个任务

vi /home/jobs/textToHdfs.json;

{

"setting": {},

"job": {

"setting": {

"speed": {

"channel": 2

}

},

"content": [

{

"reader": {

"name": "txtfilereader",

"parameter": {

"path": ["/home/data"],

"encoding": "GB2312",

"column": [

{

"index": 0,

"type": "string"

},

{

"index": 1,

"type": "int"

},

{

"index": 2,

"type": "string"

},

{

"index": 3,

"type": "int"

},

{

"index": 4,

"type": "int"

},

{

"index": 5,

"type": "long"

},

{

"index": 6,

"type": "long"

},

{

"index": 7,

"type": "string"

},

{

"index": 8,

"type": "long"

},

{

"index": 9,

"type": "long"

},

{

"index": 10,

"type": "long"

},{

"index": 11,

"type": "long"

},{

"index": 12,

"type": "long"

},

{

"index": 13,

"type": "long"

},

{

"index": 14,

"type": "long"

},

{

"index": 15,

"type": "long"

},

{

"index": 17,

"type": "long"

},

{

"index": 18,

"type": "long"

},

{

"index": 19,

"type": "long"

},

{

"index": 20,

"type": "long"

},

{

"index": 21,

"type": "long"

}

],

"fieldDelimiter": "/t"

}

},

"writer": {

"name": "hdfswriter",

"parameter": {

"column": [{"name":"#auto_id","type":" STRING"},{"name":"#product_id","type":" int"},{"name":"#event_name","type":" STRING"},{"name":"#part_date","type":"int"},{"name":"#server_id","type":"int"},{"name":"#account_id","type":"bigint"},{"name":"#user_id","type":" bigint"},{"name":"part_time","type":" STRING"},{"name":"GetItemID","type":" bigint"},{"name":"ConsumeMoneyNum","type":"bigint"},{"name":"Price ","type":"bigint"},{"name":"GetItemCnt ","type":"bigint"},{"name":"TaskState ","type":"bigint"},{"name":"TaskType ","type":"bigint"},{"name":"BattleLev ","type":"bigint"},{"name":"Level","type":"bigint"},{"name":"ItemID ","type":"bigint"},{"name":"ItemCnt ","type":"bigint"},{"name":"MoneyNum ","type":"bigint"},{"name":"MoneyType ","type":"bigint"},{"name":"VIP ","type":"bigint"},{"name":"LogID ","type":"bigint"}],

"compress": "NONE",

"defaultFS": "hdfs://sdmain:8020",

"fieldDelimiter": " ",

"fileName": "event_hive_3",

"fileType": "orc",

"path": "/user/hive/warehouse/dataxtest.db/event_hive_3",

"writeMode": "append"

}

}

}

]

}

}

4.1 执行datax任务

注意哦,数据源文件,先放在/home/data下哦。数据源文件必须是个数据二维表。

#textfile中数据例子如下:

{432297B4-CA5F-4116-901E-E19DF3170880} 701 获得筹码 201906 2 4974481 1344825 00:01:06 0 0 0 0 0 0 0 0 0 0 100 2 3 31640

{CAAF09C6-037D-43B9-901F-4CB5918FB774} 701 获得筹码 201906 2 5605253 1392330 00:02:25 0 0 0 0 0 0 0 0 0 0 390 2 10 33865

cd $DATAX_HOME/bin

python datax.py /home/job/textToHdfs.json

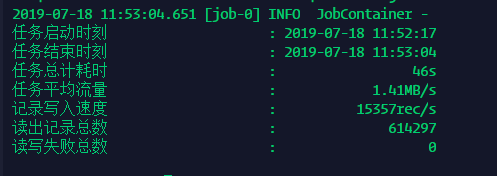

效果图:

使用Kudu从HIVE读取写入到Kudu表中

进入shell

#进入shell:

impala-shell;

#选中库--如果表名有指定库名,可省略

use sd_dev_sdk_mobile;

输入SQL:

INSERT INTO sd_dev_sdk_mobile.event_sjmy_datax

SELECT `#auto_id`,`#event_name`,`#part_date`,`#product_id`,`#server_id`,`#account_id`,`#user_id`,part_time,GetItemID,ConsumeMoneyNum,Price,GetItemCnt,TaskState,TaskType,BattleLev,Level,ItemID,ItemCnt,MoneyNum,MoneyType,VIP,LogID

FROM event_hive_3 ;

效果图:

看看这可怜的结果

这速度难以接受,我选择放弃。

打脸环节-原因分析:

- DataX读取TextFile到HIVE中的速度慢: DataX对TextFile的读取是单线程的,(2.0版本后可能会提供多线程ReaderTextFile的能力),这直接浪费了集群能力和12核的CPU。且,文件还没法手动切割任务分节点执行。

- Hive到KUDU的数据慢:insert into xxx select * 这个【*】一定要注意,如果读取所有列,那列式查询的优势就没多少了,所以,转ORC多此一举。

- Impala读取HIVE数据时,内存消耗大!

唯一的好处: 降低硬盘资源的消耗(74M文件写到HDFS,压缩后只有15M),但是!!!这有何用?我要的是导入速度!如果只是为了压缩,应该Load进Hive,然后启用Hive的Insert到ORC新表,充分利用集群资源!

代码如下

//1. 数据加载到textfile表中

load data inpath '/home/data/event-19-201906.txt' into table event_hive_3normal;

//2. 数据查询出来写入到ORC表中。

insert into event_hive_3orc

select * from event_hive_3normal

实验失败~

优化思路:1.充分使用集群的CPU资源

2.避免大批量数据查询写入

优化方案:掏出我的老家伙,单Flume读取本地数据文件sink到Kafka, 集群中多Flume消费KAFKA集群,sink到Kudu !下午见!