Paper

Povey, D., Cheng, G., Wang, Y., Li, K., Xu, H., Yarmohammadi, M., & Khudanpur, S. (2018). Semi-orthogonal low-rank matrix factorization for deep neural networks. In Proceedings of the 19th Annual Conference of the International Speech Communication Association (INTERSPEECH 2018), Hyderabad, India.

Kaldi recipe

swbd/s5c/local/chain/tuning/run_tdnn_7q.sh

A factorized TDNN has a structure similar to a vanilla TDNN, except that the weight matrices (of the layers) are factorized (using SVD) into two factors, with one of them constrained to be semi-orthonormal.

Paper notes

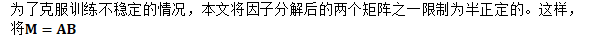

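TDNNs are also known as one-dimensional CNNs (1-d CNNs). The TDNN-F proposed in this paper has the same structure as a TDNN whose layers have been compressed with SVD. The difference is that TDNN-F is trained from a random initialization, with one of the two factors of each weight matrix constrained to be semi-orthogonal. This gives substantial improvements over both TDNNs and TDNN-LSTMs.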

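One way to reduce the size of an already-trained model is to factorize each weight matrix into two smaller factors using Singular Value Decomposition (SVD), discard the smaller singular values, and then fine-tune the network parameters again.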
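As a rough illustration of the savings (the dimensions below are assumed for the example, not taken from the paper), write the SVD of an $m \times n$ weight matrix and keep only the $k$ largest singular values:

$$
M = U \Sigma V^\top \;\approx\; U_k \Sigma_k V_k^\top
  = \underbrace{(U_k \Sigma_k)}_{A:\; m \times k}\;\underbrace{V_k^\top}_{B:\; k \times n}
$$

The parameter count drops from $mn$ to $k(m+n)$. With $m = n = 1536$ and $k = 160$, for instance, it goes from $1536^2 = 2{,}359{,}296$ to $160 \cdot 3072 = 491{,}520$.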

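An obvious alternative is to train the network with these linear bottleneck layers directly from a random initialization, which would be more efficient. Although this has been done successfully in some cases, training instability has also been observed.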

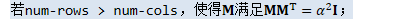

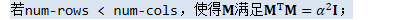

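Constraining one of the two factor matrices to be semi-orthogonal does not lose any modeling power. The constraint is also consistent with what SVD produces: after an SVD-based factorization, one of the two factors is semi-orthogonal as well.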
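One way to see why no modeling power is lost, sketched here with symbols of my own choosing: take any factorization $M = AB$ with $B$ of size $k \times n$, $k \le n$, and write the thin SVD of $B$ as $B = U_B \Sigma_B V_B^\top$, where $V_B^\top$ is $k \times n$ with orthonormal rows. Then

$$
M = AB = \underbrace{(A\,U_B\,\Sigma_B)}_{A'}\;\underbrace{V_B^\top}_{B'},
\qquad B' B'^\top = I_k .
$$

So every matrix that the unconstrained product $AB$ can represent is also reachable with a semi-orthogonal second factor $B'$; the constraint only removes redundant parameterizations.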

In addition, inspired by the "dense LSTM", the paper uses skip connections. These are somewhat similar to the shortcut connections of residual learning and to highway connections.

Forward computation

nnet3/nnet-training.cc

    const NnetComputation &computation) {
      // note: because we give the 1st arg (nnet_) as a pointer to the
      // constructor of 'computer', it will use that copy of the nnet to
      // store stats.
      NnetComputer computer(config_.compute_config, computation,
                            nnet_, delta_nnet_);
      // give the inputs to the computer object.
      computer.AcceptInputs(*nnet_, eg.io);
      computer.Run();  // forward pass

      this->ProcessOutputs(false, eg, &computer);
      computer.Run();  // backward pass

      // If relevant, add in the part of the gradient that comes from L2
      // regularization.
      ApplyL2Regularization(*nnet_,
                            GetNumNvalues(eg.io, false) *
                            config_.l2_regularize_factor,
                            delta_nnet_);

      // Update the parameters of nnet
      bool success = UpdateNnetWithMaxChange(
          *delta_nnet_, config_.max_param_change,
          1.0, 1.0 - config_.momentum, nnet_,
          &num_max_change_per_component_applied_,
          &num_max_change_global_applied_);

      // Scale down the batchnorm stats (keeps them fresh... this affects what
      // happens when we use the model with batchnorm test-mode set).
      ScaleBatchnormStats(config_.batchnorm_stats_scale, nnet_);

      // The following will only do something if we have a LinearComponent
      // or AffineComponent with orthonormal-constraint set to a nonzero value.
      ConstrainOrthonormal(nnet_);

      // Scale delta_nnet
      if (success)
        ScaleNnet(config_.momentum, delta_nnet_);
      else
        ScaleNnet(0.0, delta_nnet_);
    }


nnet3/nnet-utils.cc

If, for a particular component, orthonormal-constraint > 0.0, that value is used as the scale alpha, i.e. the parameter matrix M is constrained to be alpha times a semi-orthogonal matrix. If orthonormal-constraint == 0.0, nothing is done. If orthonormal-constraint < 0.0, alpha is allowed to "float": the code tries to bring M closer to (some constant alpha) times a semi-orthogonal matrix.

To keep the operation efficient on GPU, the function does not make M exactly orthonormal; it only moves M closer to being orthonormal (times the 'orthonormal_constraint' value). Over many iterations this brings M very close to a semi-orthogonal matrix.

    void ConstrainOrthonormal(Nnet *nnet) {

      for (int32 c = 0; c < nnet->NumComponents(); c++) {
        Component *component = nnet->GetComponent(c);
        CuMatrixBase<BaseFloat> *params = NULL;
        BaseFloat orthonormal_constraint = 0.0;

        LinearComponent *lc = dynamic_cast<LinearComponent*>(component);
        if (lc != NULL && lc->OrthonormalConstraint() != 0.0) {
          orthonormal_constraint = lc->OrthonormalConstraint();
          params = &(lc->Params());
        }
        AffineComponent *ac = dynamic_cast<AffineComponent*>(component);
        if (ac != NULL && ac->OrthonormalConstraint() != 0.0) {
          orthonormal_constraint = ac->OrthonormalConstraint();
          params = &(ac->LinearParams());
        }
        TdnnComponent *tc = dynamic_cast<TdnnComponent*>(component);
        if (tc != NULL && tc->OrthonormalConstraint() != 0.0) {
          orthonormal_constraint = tc->OrthonormalConstraint();
          params = &(tc->LinearParams());
        }
        if (orthonormal_constraint == 0.0 || RandInt(0, 3) != 0) {
          // For efficiency, only do this every 4 or so minibatches-- it won't have
          // time to stray far from the constraint in between.
          continue;
        }

        int32 rows = params->NumRows(), cols = params->NumCols();
        if (rows <= cols) {
          ConstrainOrthonormalInternal(orthonormal_constraint, params);
        } else {
          // More rows than columns: constrain the columns instead, by applying
          // the row-wise update to the transpose and copying it back.
          CuMatrix<BaseFloat> params_trans(*params, kTrans);
          ConstrainOrthonormalInternal(orthonormal_constraint, &params_trans);
          params->CopyFromMat(params_trans, kTrans);
        }
      }
    }
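The constraint that this function works toward can be written compactly. The following is my own restatement of the code's behavior, with $\alpha$ denoting the scale (the `orthonormal_constraint` value when it is positive):

$$
\begin{aligned}
M M^\top &= \alpha^2 I && \text{if rows} \le \text{cols},\\
M^\top M &= \alpha^2 I && \text{if rows} > \text{cols}.
\end{aligned}
$$

In the second case the code handles $M^\top$ instead, which has at least as many columns as rows, so a single row-wise routine covers both shapes. Because of the `RandInt(0, 3) != 0` check, the update runs on about one minibatch in four on average.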

This function performs one update on the matrix M that moves it closer to a matrix with orthonormal rows times 'scale'. Note: the update may diverge if the singular values of M start out too far from 'scale'.

    void ConstrainOrthonormalInternal(BaseFloat scale, CuMatrixBase<BaseFloat> *M) {
      KALDI_ASSERT(scale != 0.0);

      // We'd like to enforce the rows of M to be orthonormal.
      // define P = M M^T.  If P is unit then M has orthonormal rows.
      // We actually want P to equal scale^2 * I, so that M's rows are
      // orthogonal with 2-norms equal to 'scale'.
      // We (notionally) add to the objective function, the value
      // -alpha times the sum of squared elements of Q = (P - scale^2 * I).
      int32 rows = M->NumRows(), cols = M->NumCols();
      CuMatrix<BaseFloat> M_update(rows, cols);
      CuMatrix<BaseFloat> P(rows, rows);
      P.SymAddMat2(1.0, *M, kNoTrans, 0.0);
      P.CopyLowerToUpper();

      // The 'update_speed' is a constant that determines how fast we approach a
      // matrix with the desired properties (larger -> faster).  Larger values will
      // update faster but will be more prone to instability.  0.125 (1/8) is the
      // value that gives us the fastest possible convergence when we are already
      // close to being a semi-orthogonal matrix (in fact, it will lead to quadratic
      // convergence).
      // See  http://www.danielpovey.com/files/2018_interspeech_tdnnf.pdf
      // for more details.
      BaseFloat update_speed = 0.125;
      bool floating_scale = (scale < 0.0);

      if (floating_scale) {
        // This (letting the scale "float") is described in Sec. 2.3 of
        // http://www.danielpovey.com/files/2018_interspeech_tdnnf.pdf,
        // where 'scale' here is written 'alpha' in the paper.
        //
        // We pick the scale that will give us an update to M that is
        // orthogonal to M (viewed as a vector): i.e., if we're doing
        // an update M := M + X, then we want to have tr(M X^T) == 0.
        // The following formula is what gives us that.
        // With P = M M^T, our update formula is going to be:
        //  M := M + (-4 * alpha * (P - scale^2 I) * M).
        // (The math below explains this update formula; for now, it's
        // best to view it as an established fact).
        // So X (the change in M) is -4 * alpha * (P - scale^2 I) * M,
        // where alpha == update_speed / scale^2.
        // We want tr(M X^T) == 0.  First, forget the -4*alpha, because
        // we don't care about constant factors.  So we want:
        //  tr(M * M^T * (P - scale^2 I)) == 0.
        // Since M M^T == P, that means:
        //  tr(P^2 - scale^2 P) == 0,
        // or scale^2 = tr(P^2) / tr(P).
        // Note: P is symmetric so it doesn't matter whether we use tr(P P) or
        // tr(P^T P); we use tr(P^T P) because I believe it's faster to compute.

        BaseFloat trace_P = P.Trace(), trace_P_P = TraceMatMat(P, P, kTrans);

        scale = std::sqrt(trace_P_P / trace_P);

        // The following is a tweak to avoid divergence when the eigenvalues aren't
        // close to being the same.  trace_P is the sum of eigenvalues of P, and
        // trace_P_P is the sum-square of eigenvalues of P.  Treat trace_P as a sum
        // of positive values, and trace_P_P as their sumsq.  Then mean = trace_P /
        // dim, and trace_P_P cannot be less than dim * (trace_P / dim)^2,
        // i.e. trace_P_P >= trace_P^2 / dim.  If ratio = trace_P_P * dim /
        // trace_P^2, then ratio >= 1.0, and the excess above 1.0 is a measure of
        // how far we are from convergence.  If we're far from convergence, we make
        // the learning rate slower to reduce the risk of divergence, since the
        // update may not be stable for starting points far from equilibrium.
        BaseFloat ratio = (trace_P_P * P.NumRows() / (trace_P * trace_P));
        KALDI_ASSERT(ratio > 0.999);
        if (ratio > 1.02) {
          update_speed *= 0.5;  // Slow down the update speed to reduce the risk of divergence.
          if (ratio > 1.1) update_speed *= 0.5;  // Slow it down even more.
        }
      }
      P.AddToDiag(-1.0 * scale * scale);

      // We may want to un-comment the following code block later on if we have a
      // problem with instability in setups with a non-floating orthonormal
      // constraint.
      /*
      if (!floating_scale) {
        // This is analogous to the stuff with 'ratio' above, but when we don't have
        // a floating scale.  It reduces the chances of divergence when we have
        // a bad initialization.
        BaseFloat error = P.FrobeniusNorm(),
            error_proportion = error * error / P.NumRows();
        // 'error_proportion' is the sumsq of elements in (P - I) divided by the
        // sumsq of elements of I.  It should be much less than one (i.e. close to
        // zero) if the error is small.
        if (error_proportion > 0.02) {
          update_speed *= 0.5;
          if (error_proportion > 0.1)
            update_speed *= 0.5;
        }
      }
      */

      if (GetVerboseLevel() >= 1) {
        BaseFloat error = P.FrobeniusNorm();
        KALDI_VLOG(2) << "Error in orthogonality is " << error;
      }

      // see Sec. 2.2 of http://www.danielpovey.com/files/2018_interspeech_tdnnf.pdf
      // for explanation of the 1/(scale*scale) factor, but there is a difference in
      // notation; 'scale' here corresponds to 'alpha' in the paper, and
      // 'update_speed' corresponds to 'nu' in the paper.
      BaseFloat alpha = update_speed / (scale * scale);

      // At this point, the matrix P contains what, in the math, would be Q =
      // P-scale^2*I.  The derivative of the objective function w.r.t. an element q(i,j)
      // of Q is now equal to -2*alpha*q(i,j), i.e. we could write q_deriv(i,j)
      // = -2*alpha*q(i,j)  This is also the derivative of the objective function
      // w.r.t. p(i,j): i.e. p_deriv(i,j) = -2*alpha*q(i,j).
      // Suppose we have defined this matrix as 'P_deriv'.
      // The derivative of the objective w.r.t M equals
      // 2 * P_deriv * M, which equals -4*alpha*(P-scale^2*I)*M.
      // (Currently the matrix P contains what, in the math, is P-scale^2*I).
      M_update.AddMatMat(-4.0 * alpha, P, kNoTrans, *M, kNoTrans, 0.0);
      M->AddMat(1.0, M_update);
    }
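To make the update concrete, here is a minimal standalone sketch of the step M := M - 4 * (update_speed / scale^2) * (M M^T - scale^2 I) * M with update_speed = 1/8. It does not use Kaldi: the `Matrix` struct and the functions `Gram`, `OrthogonalityError`, `FloatingScale`, `SemiOrthogonalStep` and `DemoFinalError` are hypothetical names introduced only for this illustration. It omits the slow-down heuristic based on 'ratio' and handles only the fixed-scale case in the step itself.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Small dense row-major matrix; an illustrative stand-in for Kaldi's CuMatrix.
struct Matrix {
  std::size_t rows, cols;
  std::vector<double> data;
  Matrix(std::size_t r, std::size_t c) : rows(r), cols(c), data(r * c, 0.0) {}
  double &operator()(std::size_t i, std::size_t j) { return data[i * cols + j]; }
  double operator()(std::size_t i, std::size_t j) const { return data[i * cols + j]; }
};

// Returns P = M M^T (rows x rows).
Matrix Gram(const Matrix &M) {
  Matrix P(M.rows, M.rows);
  for (std::size_t i = 0; i < M.rows; ++i)
    for (std::size_t j = 0; j < M.rows; ++j)
      for (std::size_t k = 0; k < M.cols; ++k)
        P(i, j) += M(i, k) * M(j, k);
  return P;
}

// Frobenius norm of Q = M M^T - scale^2 I, i.e. the orthogonality error.
double OrthogonalityError(const Matrix &M, double scale) {
  const Matrix P = Gram(M);
  double sumsq = 0.0;
  for (std::size_t i = 0; i < P.rows; ++i)
    for (std::size_t j = 0; j < P.cols; ++j) {
      const double q = P(i, j) - (i == j ? scale * scale : 0.0);
      sumsq += q * q;
    }
  return std::sqrt(sumsq);
}

// Floating scale: sqrt(tr(P^2) / tr(P)).  P is symmetric, so tr(P^2) is
// simply the sum of the squared elements of P.
double FloatingScale(const Matrix &M) {
  const Matrix P = Gram(M);
  double trace_P = 0.0, trace_PP = 0.0;
  for (std::size_t i = 0; i < P.rows; ++i) {
    trace_P += P(i, i);
    for (std::size_t j = 0; j < P.cols; ++j) trace_PP += P(i, j) * P(i, j);
  }
  assert(trace_P > 0.0);
  return std::sqrt(trace_PP / trace_P);
}

// One update with a fixed positive scale; requires rows <= cols.
void SemiOrthogonalStep(double scale, Matrix *M) {
  assert(scale > 0.0 && M->rows <= M->cols);
  const double update_speed = 0.125;
  const double alpha = update_speed / (scale * scale);
  Matrix Q = Gram(*M);
  for (std::size_t i = 0; i < Q.rows; ++i) Q(i, i) -= scale * scale;
  Matrix updated = *M;  // accumulate M - 4 * alpha * Q * M here
  for (std::size_t i = 0; i < M->rows; ++i)
    for (std::size_t j = 0; j < M->cols; ++j)
      for (std::size_t k = 0; k < M->rows; ++k)
        updated(i, j) -= 4.0 * alpha * Q(i, k) * (*M)(k, j);
  *M = updated;
}

// Runs 20 steps on a 2x3 matrix that starts near a semi-orthogonal one
// (the starting values are arbitrary) and returns the remaining error.
double DemoFinalError() {
  Matrix M(2, 3);
  M(0, 0) = 1.1; M(0, 1) = 0.1; M(0, 2) = 0.0;
  M(1, 0) = 0.0; M(1, 1) = 0.9; M(1, 2) = 0.2;
  for (int iter = 0; iter < 20; ++iter) SemiOrthogonalStep(1.0, &M);
  return OrthogonalityError(M, 1.0);
}
```

With scale = 1 and update_speed = 1/8, each step multiplies every singular value sigma of M by (1.5 - 0.5 * sigma^2), which explains both the fast convergence near sigma = 1 and the warning about divergence when the starting point is far from the target scale.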
