Running the following insert statement produces the error below:

insert into table log_orc select * from log_text;

    Query ID = atguigu_20210426104635_32601bfb-de63-411d-b4a0-a9f612b43c27
    Total jobs = 1
    Launching Job 1 out of 1
    Number of reduce tasks determined at compile time: 1
    In order to change the average load for a reducer (in bytes):
      set hive.exec.reducers.bytes.per.reducer=<number>
    In order to limit the maximum number of reducers:
      set hive.exec.reducers.max=<number>
    In order to set a constant number of reducers:
      set mapreduce.job.reduces=<number>
    Starting Job = job_1619397804275_0009, Tracking URL = http://hadoop103:8088/proxy/application_1619397804275_0009/
    Kill Command = /opt/module/hadoop-3.1.3/bin/mapred job -kill job_1619397804275_0009
    Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1
    2021-04-26 10:46:41,270 Stage-1 map = 0%, reduce = 0%
    2021-04-26 10:46:47,378 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 7.4 sec
    2021-04-26 10:47:05,821 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 7.4 sec
    MapReduce Total cumulative CPU time: 7 seconds 400 msec
    Ended Job = job_1619397804275_0009 with errors
    Error during job, obtaining debugging information...
    Examining task ID: task_1619397804275_0009_m_000000 (and more) from job job_1619397804275_0009
    Task with the most failures(4):
    -----
    Task ID: task_1619397804275_0009_r_000000
    URL: http://hadoop103:8088/taskdetails.jsp?jobid=job_1619397804275_0009&tipid=task_1619397804275_0009_r_000000
    -----
    Diagnostic Messages for this Task:
    Error: java.lang.RuntimeException: Hive Runtime Error while closing operators: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.IllegalArgumentException: No enum constant org.apache.hadoop.io.SequenceFile.CompressionType.block
        at org.apache.hadoop.hive.ql.exec.mr.ExecReducer.close(ExecReducer.java:286)
        at org.apache.hadoop.mapred.ReduceTask.runOldReducer(ReduceTask.java:454)
        at org.apache.hadoop.mapred.ReduceTask.run(ReduceTask.java:393)
        at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:174)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:422)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)
        at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:168)
    Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.IllegalArgumentException: No enum constant org.apache.hadoop.io.SequenceFile.CompressionType.block
        at org.apache.hadoop.hive.ql.exec.GroupByOperator.closeOp(GroupByOperator.java:1112)
        at org.apache.hadoop.hive.ql.exec.Operator.close(Operator.java:733)
        at org.apache.hadoop.hive.ql.exec.mr.ExecReducer.close(ExecReducer.java:277)
        ... 7 more
    Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.IllegalArgumentException: No enum constant org.apache.hadoop.io.SequenceFile.CompressionType.block
        at org.apache.hadoop.hive.ql.exec.GroupByOperator.flush(GroupByOperator.java:1086)
        at org.apache.hadoop.hive.ql.exec.GroupByOperator.closeOp(GroupByOperator.java:1109)
        ... 9 more
    Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.IllegalArgumentException: No enum constant org.apache.hadoop.io.SequenceFile.CompressionType.block
        at org.apache.hadoop.hive.ql.exec.FileSinkOperator.createBucketFiles(FileSinkOperator.java:742)
        at org.apache.hadoop.hive.ql.exec.FileSinkOperator.process(FileSinkOperator.java:897)
        at org.apache.hadoop.hive.ql.exec.Operator.baseForward(Operator.java:995)
        at org.apache.hadoop.hive.ql.exec.Operator.forward(Operator.java:941)
        at org.apache.hadoop.hive.ql.exec.Operator.forward(Operator.java:928)
        at org.apache.hadoop.hive.ql.exec.GroupByOperator.forward(GroupByOperator.java:1050)
        at org.apache.hadoop.hive.ql.exec.GroupByOperator.flush(GroupByOperator.java:1076)
        ... 10 more
    Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.IllegalArgumentException: No enum constant org.apache.hadoop.io.SequenceFile.CompressionType.block
        at org.apache.hadoop.hive.ql.io.HiveFileFormatUtils.getHiveRecordWriter(HiveFileFormatUtils.java:285)
        at org.apache.hadoop.hive.ql.exec.FileSinkOperator.createBucketForFileIdx(FileSinkOperator.java:780)
        at org.apache.hadoop.hive.ql.exec.FileSinkOperator.createBucketFiles(FileSinkOperator.java:731)
        ... 16 more
    Caused by: java.lang.IllegalArgumentException: No enum constant org.apache.hadoop.io.SequenceFile.CompressionType.block
        at java.lang.Enum.valueOf(Enum.java:238)
        at org.apache.hadoop.io.SequenceFile$CompressionType.valueOf(SequenceFile.java:234)
        at org.apache.hadoop.mapred.SequenceFileOutputFormat.getOutputCompressionType(SequenceFileOutputFormat.java:108)
        at org.apache.hadoop.hive.ql.exec.Utilities.createSequenceWriter(Utilities.java:1044)
        at org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat.getHiveRecordWriter(HiveSequenceFileOutputFormat.java:64)
        at org.apache.hadoop.hive.ql.io.HiveFileFormatUtils.getRecordWriter(HiveFileFormatUtils.java:297)
        at org.apache.hadoop.hive.ql.io.HiveFileFormatUtils.getHiveRecordWriter(HiveFileFormatUtils.java:282)
        ... 18 more
    FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask
    MapReduce Jobs Launched:
    Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 7.4 sec HDFS Read: 19024345 HDFS Write: 8063208 FAIL
    Total MapReduce CPU Time Spent: 7 seconds 400 msec

Solution:

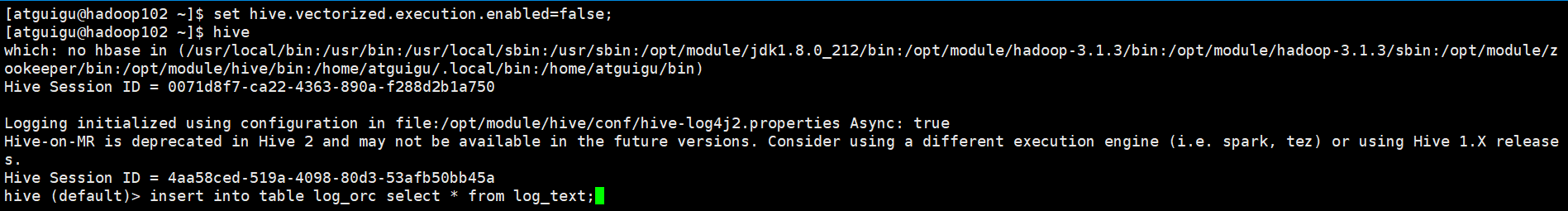

Step 1: Quit the Hive client.

quit;

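Step 2: Start the Hive client again and disable vectorized query execution. A `set` command only applies to the current session, so it has to be run inside the client.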

set hive.vectorized.execution.enabled=false;

Step 3: Back in the Hive client, run the insert statement again.

insert into table log_orc select * from log_text;
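
The innermost `Caused by` in the log says that `block` is not a constant of `org.apache.hadoop.io.SequenceFile.CompressionType`. Java enum lookups are case-sensitive, and the constants of that enum are `NONE`, `RECORD`, and `BLOCK`. One possible reading, which is an assumption because the note does not show the earlier session commands, is that `mapreduce.output.fileoutputformat.compress.type` was set to the lowercase value `block` earlier in the same session. Quitting the client in step 1 discards session-level `set` values, which may be part of why the fix works. A minimal sketch for checking this in a fresh or existing session:

```sql
-- Print the value currently in effect for this session.
-- Assumption: this property was overridden earlier with a lowercase value.
set mapreduce.output.fileoutputformat.compress.type;

-- If the value shown is lowercase (for example block), use the uppercase enum name instead.
set mapreduce.output.fileoutputformat.compress.type=BLOCK;

-- Then retry the statement from this note.
insert into table log_orc select * from log_text;
```

If the first command shows an uppercase value or reports the property as undefined, this reading does not apply and the steps above stand on their own.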