
import pandas as pd

import numpy as np

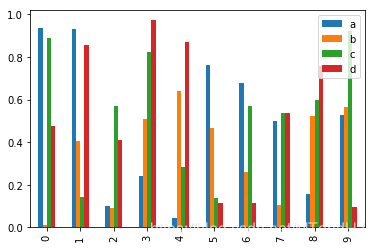

Group_By

ipl_data = {'Team': ['Riders', 'Riders', 'Devils', 'Devils', 'Kings',

'kings', 'Kings', 'Kings', 'Riders', 'Royals', 'Royals', 'Riders'],

'Rank': [1, 2, 2, 3, 3,4 ,1 ,1,2 , 4,1,2],

'Year': [2014,2015,2014,2015,2014,2015,2016,2017,2016,2014,2015,2017],

'Points':[876,789,863,673,741,812,756,788,694,701,804,690]}

df = pd.DataFrame(ipl_data)

df

|  | Team | Rank | Year | Points |
|---|---|---|---|---|
| 0 | Riders | 1 | 2014 | 876 |
| 1 | Riders | 2 | 2015 | 789 |
| 2 | Devils | 2 | 2014 | 863 |
| 3 | Devils | 3 | 2015 | 673 |
| 4 | Kings | 3 | 2014 | 741 |
| 5 | kings | 4 | 2015 | 812 |
| 6 | Kings | 1 | 2016 | 756 |
| 7 | Kings | 1 | 2017 | 788 |
| 8 | Riders | 2 | 2016 | 694 |
| 9 | Royals | 4 | 2014 | 701 |
| 10 | Royals | 1 | 2015 | 804 |
| 11 | Riders | 2 | 2017 | 690 |

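Grouping the data

There are several ways to group:

- .groupby('key'): group the rows by a single column
- .groupby(['key1', 'key2']): group the rows by several columns
- .groupby(key, axis=1): group the columns instead of the rows (the axis argument of groupby is deprecated since pandas 2.1)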
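A quick way to compare the grouping forms is to run them on a small frame. The sketch below uses a hypothetical four-row excerpt of the IPL data. Because the axis=1 form is deprecated in recent pandas, the third example shows a different accepted key instead: a Series derived from a column (here, whether the year is even or odd).

```python
import pandas as pd

# Hypothetical four-row excerpt of the IPL table above
df = pd.DataFrame({
    "Team": ["Riders", "Riders", "Devils", "Kings"],
    "Year": [2014, 2015, 2014, 2014],
    "Points": [876, 789, 863, 741],
})

# 1. Single key: one group per distinct Team value
by_team = df.groupby("Team")["Points"].sum()

# 2. List of keys: one group per (Team, Year) combination -> MultiIndex result
by_team_year = df.groupby(["Team", "Year"])["Points"].sum()

# 3. A Series aligned with the rows can also serve as the key
by_parity = df.groupby(df["Year"] % 2)["Points"].sum()

print(by_team)
print(by_team_year)
print(by_parity)
```

The result's index is built from the group keys, so a list of keys produces a MultiIndex.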

df.groupby('Team')

<pandas.core.groupby.groupby.DataFrameGroupBy object at 0x00000162D1D4A940>

df.groupby('Team').groups  # inspect the groups: each key mapped to its row labels

{'Devils': Int64Index([2, 3], dtype='int64'),

'Kings': Int64Index([4, 6, 7], dtype='int64'),

'Riders': Int64Index([0, 1, 8, 11], dtype='int64'),

'Royals': Int64Index([9, 10], dtype='int64'),

'kings': Int64Index([5], dtype='int64')}

df.groupby(['Team', 'Year']).groups

{('Devils', 2014): Int64Index([2], dtype='int64'),

('Devils', 2015): Int64Index([3], dtype='int64'),

('Kings', 2014): Int64Index([4], dtype='int64'),

('Kings', 2016): Int64Index([6], dtype='int64'),

('Kings', 2017): Int64Index([7], dtype='int64'),

('Riders', 2014): Int64Index([0], dtype='int64'),

('Riders', 2015): Int64Index([1], dtype='int64'),

('Riders', 2016): Int64Index([8], dtype='int64'),

('Riders', 2017): Int64Index([11], dtype='int64'),

('Royals', 2014): Int64Index([9], dtype='int64'),

('Royals', 2015): Int64Index([10], dtype='int64'),

('kings', 2015): Int64Index([5], dtype='int64')}

Iterating over groups

grouped = df.groupby('Year')

for name, group in grouped:
    print(name)   # the group key (here, the year)
    print(group)  # the sub-DataFrame holding that group's rows

2014

Team Rank Year Points

0 Riders 1 2014 876

2 Devils 2 2014 863

4 Kings 3 2014 741

9 Royals 4 2014 701

2015

Team Rank Year Points

1 Riders 2 2015 789

3 Devils 3 2015 673

5 kings 4 2015 812

10 Royals 1 2015 804

2016

Team Rank Year Points

6 Kings 1 2016 756

8 Riders 2 2016 694

2017

Team Rank Year Points

7 Kings 1 2017 788

11 Riders 2 2017 690

Selecting a single group with get_group()

grouped.get_group(2014)

|  | Team | Rank | Year | Points |
|---|---|---|---|---|
| 0 | Riders | 1 | 2014 | 876 |
| 2 | Devils | 2 | 2014 | 863 |
| 4 | Kings | 3 | 2014 | 741 |
| 9 | Royals | 4 | 2014 | 701 |
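get_group() is a shortcut for a boolean selection on the grouping column. A minimal check on a hypothetical four-row frame: the returned rows keep their original index labels and match a plain mask exactly.

```python
import pandas as pd

# Hypothetical small frame in the shape of the IPL data
df = pd.DataFrame({
    "Team": ["Riders", "Devils", "Kings", "Riders"],
    "Year": [2014, 2014, 2015, 2015],
    "Points": [876, 863, 812, 789],
})

grouped = df.groupby("Year")

# Rows of the 2014 group, original index labels preserved
g2014 = grouped.get_group(2014)

# The same rows selected with an ordinary boolean mask
mask_2014 = df[df["Year"] == 2014]

print(g2014)
print(g2014.equals(mask_2014))
```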

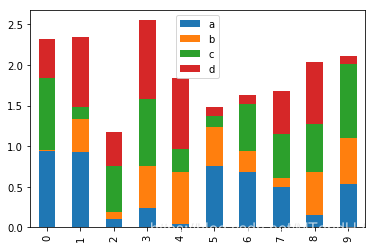

Aggregations: pass a function to agg() to combine the values within each group

grouped['Points']

<pandas.core.groupby.groupby.SeriesGroupBy object at 0x00000162D2DCF048>

grouped['Points'].agg(np.mean)

Year

2014 795.25

2015 769.50

2016 725.00

2017 739.00

Name: Points, dtype: float64

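grouped[['Rank', 'Points']].agg(np.mean)  # select the numeric columns; recent pandas raises on the string 'Team' column instead of silently dropping it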

| Year | Rank | Points |
|---|---|---|
| 2014 | 2.5 | 795.25 |
| 2015 | 2.5 | 769.50 |
| 2016 | 1.5 | 725.00 |
| 2017 | 1.5 | 739.00 |
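Aggregations can also be spelled with string function names and "named aggregation", which sets each output column's name directly. The sketch below uses a hypothetical four-row excerpt. Passing strings such as "sum" instead of numpy functions like np.mean also avoids the FutureWarning that recent pandas versions may emit for the latter.

```python
import pandas as pd

# Hypothetical excerpt of the IPL data
df = pd.DataFrame({
    "Team": ["Riders", "Riders", "Devils", "Devils"],
    "Rank": [1, 2, 2, 3],
    "Points": [876, 789, 863, 673],
})

# Named aggregation: output_column=(input_column, function_name)
summary = df.groupby("Team").agg(
    total_points=("Points", "sum"),
    mean_rank=("Rank", "mean"),
    n_seasons=("Points", "count"),
)
print(summary)
```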

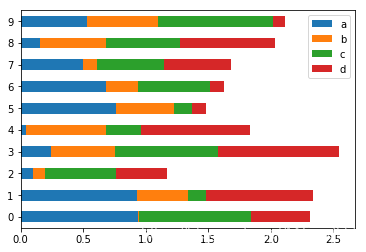

grouped = df.groupby('Team')

grouped.agg(np.size)

| Team | Rank | Year | Points |
|---|---|---|---|
| Devils | 2 | 2 | 2 |
| Kings | 3 | 3 | 3 |
| Riders | 4 | 4 | 4 |
| Royals | 2 | 2 | 2 |
| kings | 1 | 1 | 1 |

grouped.agg(len)

| Team | Rank | Year | Points |
|---|---|---|---|
| Devils | 2 | 2 | 2 |
| Kings | 3 | 3 | 3 |
| Riders | 4 | 4 | 4 |
| Royals | 2 | 2 | 2 |
| kings | 1 | 1 | 1 |

grouped.agg([np.sum, np.mean])

| Team | Rank sum | Rank mean | Year sum | Year mean | Points sum | Points mean |
|---|---|---|---|---|---|---|
| Devils | 5 | 2.500000 | 4029 | 2014.500000 | 1536 | 768.000000 |
| Kings | 5 | 1.666667 | 6047 | 2015.666667 | 2285 | 761.666667 |
| Riders | 7 | 1.750000 | 8062 | 2015.500000 | 3049 | 762.250000 |
| Royals | 5 | 2.500000 | 4029 | 2014.500000 | 1505 | 752.500000 |
| kings | 4 | 4.000000 | 2015 | 2015.000000 | 812 | 812.000000 |

score = lambda x: (x - x.mean()) / x.std()*10

score = lambda x: (x - x.mean()) / x.std()*10

grouped.transform(score)

|  | Rank | Year | Points |
|---|---|---|---|
| 0 | -15.000000 | -11.618950 | 12.843272 |
| 1 | 5.000000 | -3.872983 | 3.020286 |
| 2 | -7.071068 | -7.071068 | 7.071068 |
| 3 | 7.071068 | 7.071068 | -7.071068 |
| 4 | 11.547005 | -10.910895 | -8.608621 |
| 5 | NaN | NaN | NaN |
| 6 | -5.773503 | 2.182179 | -2.360428 |
| 7 | -5.773503 | 8.728716 | 10.969049 |
| 8 | 5.000000 | 3.872983 | -7.705963 |
| 9 | 7.071068 | -7.071068 | -7.071068 |
| 10 | -7.071068 | 7.071068 | 7.071068 |
| 11 | 5.000000 | 11.618950 | -8.157595 |
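Unlike agg(), transform() returns a result with the same index as the input, one value per original row. The all-NaN row 5 above follows from the data: 'kings' (lowercase) forms its own one-row group, and the sample standard deviation (ddof=1) of a single value is NaN. A small hypothetical frame reproduces both effects with the same scoring rule:

```python
import pandas as pd

# Hypothetical frame: two two-row groups plus a one-row 'kings' group
df = pd.DataFrame({
    "Team": ["Riders", "Riders", "Kings", "Kings", "kings"],
    "Points": [876, 790, 741, 756, 812],
})

# Same scoring rule as above: standardise within each group, then scale by 10
score = lambda x: (x - x.mean()) / x.std() * 10

scored = df.groupby("Team")["Points"].transform(score)
print(scored)  # one value per original row, aligned on df's index
```

For any two-row group the scores are always plus or minus 10/sqrt(2), about 7.071068, which is why that value recurs in the table above.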

# debugging helper: print every object transform() hands to the function
def prin(x):
    print(x)
    print("*******")
    return 1

grouped.transform(prin)

2 2

3 3

Name: Rank, dtype: int64

*******

2 2014

3 2015

Name: Year, dtype: int64

*******

2 863

3 673

Name: Points, dtype: int64

*******

Rank Year Points

2 2 2014 863

3 3 2015 673

*******

4 3

6 1

7 1

Name: Rank, dtype: int64

*******

4 2014

6 2016

7 2017

Name: Year, dtype: int64

*******

4 741

6 756

7 788

Name: Points, dtype: int64

*******

0 1

1 2

8 2

11 2

Name: Rank, dtype: int64

*******

0 2014

1 2015

8 2016

11 2017

Name: Year, dtype: int64

*******

0 876

1 789

8 694

11 690

Name: Points, dtype: int64

*******

9 4

10 1

Name: Rank, dtype: int64

*******

9 2014

10 2015

Name: Year, dtype: int64

*******

9 701

10 804

Name: Points, dtype: int64

*******

5 4

Name: Rank, dtype: int64

*******

5 2015

Name: Year, dtype: int64

*******

5 812

Name: Points, dtype: int64

*******

|  | Rank | Year | Points |
|---|---|---|---|
| 0 | 1 | 1 | 1 |
| 1 | 1 | 1 | 1 |
| 2 | 1 | 1 | 1 |
| 3 | 1 | 1 | 1 |
| 4 | 1 | 1 | 1 |
| 5 | 1 | 1 | 1 |
| 6 | 1 | 1 | 1 |
| 7 | 1 | 1 | 1 |
| 8 | 1 | 1 | 1 |
| 9 | 1 | 1 | 1 |
| 10 | 1 | 1 | 1 |
| 11 | 1 | 1 | 1 |

Filtering

df.groupby('Team').filter(lambda x: len(x) >= 3)

|  | Team | Rank | Year | Points |
|---|---|---|---|---|
| 0 | Riders | 1 | 2014 | 876 |
| 1 | Riders | 2 | 2015 | 789 |
| 4 | Kings | 3 | 2014 | 741 |
| 6 | Kings | 1 | 2016 | 756 |
| 7 | Kings | 1 | 2017 | 788 |
| 8 | Riders | 2 | 2016 | 694 |
| 11 | Riders | 2 | 2017 | 690 |

# debugging helper: print each group, then keep it only if it has at least 3 rows
def prin(x):
    print(x)
    print("*******")
    return len(x) >= 3

df.groupby('Team').filter(prin)

Team Rank Year Points

2 Devils 2 2014 863

3 Devils 3 2015 673

*******

Team Rank Year Points

4 Kings 3 2014 741

6 Kings 1 2016 756

7 Kings 1 2017 788

*******

Team Rank Year Points

0 Riders 1 2014 876

1 Riders 2 2015 789

8 Riders 2 2016 694

11 Riders 2 2017 690

*******

Team Rank Year Points

9 Royals 4 2014 701

10 Royals 1 2015 804

*******

Team Rank Year Points

5 kings 4 2015 812

*******

|  | Team | Rank | Year | Points |
|---|---|---|---|---|
| 0 | Riders | 1 | 2014 | 876 |
| 1 | Riders | 2 | 2015 | 789 |
| 4 | Kings | 3 | 2014 | 741 |
| 6 | Kings | 1 | 2016 | 756 |
| 7 | Kings | 1 | 2017 | 788 |
| 8 | Riders | 2 | 2016 | 694 |
| 11 | Riders | 2 | 2017 | 690 |
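filter() keeps or drops whole groups, and the surviving rows come back in their original order. The same selection can be written as a boolean mask by broadcasting each group's size back onto its rows with transform("size"). The sketch below uses a hypothetical six-row frame.

```python
import pandas as pd

# Hypothetical excerpt: Riders has 3 rows, Devils 2, kings 1
df = pd.DataFrame({
    "Team": ["Riders", "Riders", "Riders", "Devils", "Devils", "kings"],
    "Points": [876, 789, 694, 863, 673, 812],
})

# filter(): keep every row of the groups whose predicate returns True
kept = df.groupby("Team").filter(lambda g: len(g) >= 3)

# Equivalent mask: each row gets the size of the group it belongs to
sizes = df.groupby("Team")["Points"].transform("size")
kept_mask = df[sizes >= 3]

print(kept)
print(kept.equals(kept_mask))
```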

Merging/Joining

left = pd.DataFrame({

'id':[1,2,3,4,5],

'Name': ['Alex', 'Amy', 'Allen', 'Alice', 'Ayoung'],

'subject_id':['sub1','sub2','sub4','sub6','sub5']})

right = pd.DataFrame(

{'id':[1,2,3,4,6],

'Name': ['Billy', 'Brian', 'Bran', 'Bryce', 'Betty'],

'subject_id':['sub2','sub4','sub3','sub6','sub5']})

left

|  | id | Name | subject_id |
|---|---|---|---|
| 0 | 1 | Alex | sub1 |
| 1 | 2 | Amy | sub2 |
| 2 | 3 | Allen | sub4 |
| 3 | 4 | Alice | sub6 |
| 4 | 5 | Ayoung | sub5 |

right

|  | id | Name | subject_id |
|---|---|---|---|
| 0 | 1 | Billy | sub2 |
| 1 | 2 | Brian | sub4 |
| 2 | 3 | Bran | sub3 |
| 3 | 4 | Bryce | sub6 |
| 4 | 6 | Betty | sub5 |

help(pd.merge)

Help on function merge in module pandas.core.reshape.merge:

merge(left, right, how='inner', on=None, left_on=None, right_on=None, left_index=False, right_index=False, sort=False, suffixes=('_x', '_y'), copy=True, indicator=False, validate=None)

Merge DataFrame objects by performing a database-style join operation by

columns or indexes.

If joining columns on columns, the DataFrame indexes *will be

ignored*. Otherwise if joining indexes on indexes or indexes on a column or

columns, the index will be passed on.

Parameters

----------

left : DataFrame

right : DataFrame

how : {'left', 'right', 'outer', 'inner'}, default 'inner'

* left: use only keys from left frame, similar to a SQL left outer join;

preserve key order

* right: use only keys from right frame, similar to a SQL right outer join;

preserve key order

* outer: use union of keys from both frames, similar to a SQL full outer

join; sort keys lexicographically

* inner: use intersection of keys from both frames, similar to a SQL inner

join; preserve the order of the left keys

on : label or list

Column or index level names to join on. These must be found in both

DataFrames. If `on` is None and not merging on indexes then this defaults

to the intersection of the columns in both DataFrames.

left_on : label or list, or array-like

Column or index level names to join on in the left DataFrame. Can also

be an array or list of arrays of the length of the left DataFrame.

These arrays are treated as if they are columns.

right_on : label or list, or array-like

Column or index level names to join on in the right DataFrame. Can also

be an array or list of arrays of the length of the right DataFrame.

These arrays are treated as if they are columns.

left_index : boolean, default False

Use the index from the left DataFrame as the join key(s). If it is a

MultiIndex, the number of keys in the other DataFrame (either the index

or a number of columns) must match the number of levels

right_index : boolean, default False

Use the index from the right DataFrame as the join key. Same caveats as

left_index

sort : boolean, default False

Sort the join keys lexicographically in the result DataFrame. If False,

the order of the join keys depends on the join type (how keyword)

suffixes : 2-length sequence (tuple, list, ...)

Suffix to apply to overlapping column names in the left and right

side, respectively

copy : boolean, default True

If False, do not copy data unnecessarily

indicator : boolean or string, default False

If True, adds a column to output DataFrame called "_merge" with

information on the source of each row.

If string, column with information on source of each row will be added to

output DataFrame, and column will be named value of string.

Information column is Categorical-type and takes on a value of "left_only"

for observations whose merge key only appears in 'left' DataFrame,

"right_only" for observations whose merge key only appears in 'right'

DataFrame, and "both" if the observation's merge key is found in both.

validate : string, default None

If specified, checks if merge is of specified type.

* "one_to_one" or "1:1": check if merge keys are unique in both

left and right datasets.

* "one_to_many" or "1:m": check if merge keys are unique in left

dataset.

* "many_to_one" or "m:1": check if merge keys are unique in right

dataset.

* "many_to_many" or "m:m": allowed, but does not result in checks.

.. versionadded:: 0.21.0

Notes

-----

Support for specifying index levels as the `on`, `left_on`, and

`right_on` parameters was added in version 0.23.0

Examples

--------

>>> A >>> B

lkey value rkey value

0 foo 1 0 foo 5

1 bar 2 1 bar 6

2 baz 3 2 qux 7

3 foo 4 3 bar 8

>>> A.merge(B, left_on='lkey', right_on='rkey', how='outer')

lkey value_x rkey value_y

0 foo 1 foo 5

1 foo 4 foo 5

2 bar 2 bar 6

3 bar 2 bar 8

4 baz 3 NaN NaN

5 NaN NaN qux 7

Returns

-------

merged : DataFrame

The output type will the be same as 'left', if it is a subclass

of DataFrame.

See also

--------

merge_ordered

merge_asof

DataFrame.join

pd.merge(left, right, on='id')

|  | id | Name_x | subject_id_x | Name_y | subject_id_y |
|---|---|---|---|---|---|
| 0 | 1 | Alex | sub1 | Billy | sub2 |
| 1 | 2 | Amy | sub2 | Brian | sub4 |
| 2 | 3 | Allen | sub4 | Bran | sub3 |
| 3 | 4 | Alice | sub6 | Bryce | sub6 |

pd.merge(left, right, on=['id', 'subject_id'])

|  | id | Name_x | subject_id | Name_y |
|---|---|---|---|---|
| 0 | 4 | Alice | sub6 | Bryce |
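The _x/_y columns above come from the default suffixes, which merge() appends to overlapping non-key columns. A minimal sketch on hypothetical three-row frames shows picking readable suffixes and using validate (documented in the help text above) to fail loudly when the key is not unique.

```python
import pandas as pd

# Hypothetical three-row versions of the left/right frames
left = pd.DataFrame({"id": [1, 2, 3], "Name": ["Alex", "Amy", "Allen"]})
right = pd.DataFrame({"id": [1, 2, 4], "Name": ["Billy", "Brian", "Bryce"]})

# Readable suffixes instead of _x/_y; validate checks 'id' is unique on both sides
merged = pd.merge(left, right, on="id", suffixes=("_left", "_right"),
                  validate="one_to_one")
print(merged)

# Duplicate one key on the right: the one-to-one check now fails
dup = pd.concat([right, right.iloc[[0]]])
raised = False
try:
    pd.merge(left, dup, on="id", validate="one_to_one")
except pd.errors.MergeError as exc:
    raised = True
    print("MergeError:", exc)
```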

Using how

left = pd.DataFrame({

'id':[1,2,3,4,5],

'Name': ['Alex', 'Amy', 'Allen', 'Alice', 'Ayoung'],

'subject_id':['sub1','sub2','sub4','sub6','sub5']})

right = pd.DataFrame({

'id':[1,2,3,4,5],

'Name': ['Billy', 'Brian', 'Bran', 'Bryce', 'Betty'],

'subject_id':['sub2','sub4','sub3','sub6','sub5']})

pd.merge(left, right, on="subject_id", how="left")  # keep every key from the left frame

|  | id_x | Name_x | subject_id | id_y | Name_y |
|---|---|---|---|---|---|
| 0 | 1 | Alex | sub1 | NaN | NaN |
| 1 | 2 | Amy | sub2 | 1.0 | Billy |
| 2 | 3 | Allen | sub4 | 2.0 | Brian |
| 3 | 4 | Alice | sub6 | 4.0 | Bryce |
| 4 | 5 | Ayoung | sub5 | 5.0 | Betty |

pd.merge(left, right, on="subject_id", how="right")  # keep every key from the right frame

|  | id_x | Name_x | subject_id | id_y | Name_y |
|---|---|---|---|---|---|
| 0 | 2.0 | Amy | sub2 | 1 | Billy |
| 1 | 3.0 | Allen | sub4 | 2 | Brian |
| 2 | 4.0 | Alice | sub6 | 4 | Bryce |
| 3 | 5.0 | Ayoung | sub5 | 5 | Betty |
| 4 | NaN | NaN | sub3 | 3 | Bran |

pd.merge(left, right, on="subject_id", how="outer")  # union of the keys from both frames

|  | id_x | Name_x | subject_id | id_y | Name_y |
|---|---|---|---|---|---|
| 0 | 1.0 | Alex | sub1 | NaN | NaN |
| 1 | 2.0 | Amy | sub2 | 1.0 | Billy |
| 2 | 3.0 | Allen | sub4 | 2.0 | Brian |
| 3 | 4.0 | Alice | sub6 | 4.0 | Bryce |
| 4 | 5.0 | Ayoung | sub5 | 5.0 | Betty |
| 5 | NaN | NaN | sub3 | 3.0 | Bran |

pd.merge(left, right, on="subject_id", how="inner")  # intersection of the keys (the default)

|  | id_x | Name_x | subject_id | id_y | Name_y |
|---|---|---|---|---|---|
| 0 | 2 | Amy | sub2 | 1 | Billy |
| 1 | 3 | Allen | sub4 | 2 | Brian |
| 2 | 4 | Alice | sub6 | 4 | Bryce |
| 3 | 5 | Ayoung | sub5 | 5 | Betty |
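To see which side each row of a merge came from, pass indicator=True. This adds a categorical "_merge" column with the values left_only, right_only or both. The sketch below uses hypothetical three-row frames and also counts the rows each how value produces. Row order for outer joins has changed between pandas versions, so the result is read as a mapping rather than by position.

```python
import pandas as pd

# Hypothetical three-row frames keyed on subject_id
left = pd.DataFrame({"subject_id": ["sub1", "sub2", "sub4"],
                     "Name": ["Alex", "Amy", "Allen"]})
right = pd.DataFrame({"subject_id": ["sub2", "sub4", "sub3"],
                      "Name": ["Billy", "Brian", "Bran"]})

out = pd.merge(left, right, on="subject_id", how="outer", indicator=True)
print(out)

# Source of each key, independent of row order
origin = dict(zip(out["subject_id"], out["_merge"].astype(str)))

# Number of result rows for each join type
sizes = {how: len(pd.merge(left, right, on="subject_id", how=how))
         for how in ["inner", "left", "right", "outer"]}
print(origin, sizes)
```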

Concatenation

pd.concat()

help(pd.concat)

Help on function concat in module pandas.core.reshape.concat:

concat(objs, axis=0, join='outer', join_axes=None, ignore_index=False, keys=None, levels=None, names=None, verify_integrity=False, sort=None, copy=True)

Concatenate pandas objects along a particular axis with optional set logic

along the other axes.

Can also add a layer of hierarchical indexing on the concatenation axis,

which may be useful if the labels are the same (or overlapping) on

the passed axis number.

Parameters

----------

objs : a sequence or mapping of Series, DataFrame, or Panel objects

If a dict is passed, the sorted keys will be used as the `keys`

argument, unless it is passed, in which case the values will be

selected (see below). Any None objects will be dropped silently unless

they are all None in which case a ValueError will be raised

axis : {0/'index', 1/'columns'}, default 0

The axis to concatenate along

join : {'inner', 'outer'}, default 'outer'

How to handle indexes on other axis(es)

join_axes : list of Index objects

Specific indexes to use for the other n - 1 axes instead of performing

inner/outer set logic

ignore_index : boolean, default False

If True, do not use the index values along the concatenation axis. The

resulting axis will be labeled 0, ..., n - 1. This is useful if you are

concatenating objects where the concatenation axis does not have

meaningful indexing information. Note the index values on the other

axes are still respected in the join.

keys : sequence, default None

If multiple levels passed, should contain tuples. Construct

hierarchical index using the passed keys as the outermost level

levels : list of sequences, default None

Specific levels (unique values) to use for constructing a

MultiIndex. Otherwise they will be inferred from the keys

names : list, default None

Names for the levels in the resulting hierarchical index

verify_integrity : boolean, default False

Check whether the new concatenated axis contains duplicates. This can

be very expensive relative to the actual data concatenation

sort : boolean, default None

Sort non-concatenation axis if it is not already aligned when `join`

is 'outer'. The current default of sorting is deprecated and will

change to not-sorting in a future version of pandas.

Explicitly pass ``sort=True`` to silence the warning and sort.

Explicitly pass ``sort=False`` to silence the warning and not sort.

This has no effect when ``join='inner'``, which already preserves

the order of the non-concatenation axis.

.. versionadded:: 0.23.0

copy : boolean, default True

If False, do not copy data unnecessarily

Returns

-------

concatenated : object, type of objs

When concatenating all ``Series`` along the index (axis=0), a

``Series`` is returned. When ``objs`` contains at least one

``DataFrame``, a ``DataFrame`` is returned. When concatenating along

the columns (axis=1), a ``DataFrame`` is returned.

Notes

-----

The keys, levels, and names arguments are all optional.

A walkthrough of how this method fits in with other tools for combining

pandas objects can be found `here

<http://pandas.pydata.org/pandas-docs/stable/merging.html>`__.

See Also

--------

Series.append

DataFrame.append

DataFrame.join

DataFrame.merge

Examples

--------

Combine two ``Series``.

>>> s1 = pd.Series(['a', 'b'])

>>> s2 = pd.Series(['c', 'd'])

>>> pd.concat([s1, s2])

0 a

1 b

0 c

1 d

dtype: object

Clear the existing index and reset it in the result

by setting the ``ignore_index`` option to ``True``.

>>> pd.concat([s1, s2], ignore_index=True)

0 a

1 b

2 c

3 d

dtype: object

Add a hierarchical index at the outermost level of

the data with the ``keys`` option.

>>> pd.concat([s1, s2], keys=['s1', 's2',])

s1 0 a

1 b

s2 0 c

1 d

dtype: object

Label the index keys you create with the ``names`` option.

>>> pd.concat([s1, s2], keys=['s1', 's2'],

... names=['Series name', 'Row ID'])

Series name Row ID

s1 0 a

1 b

s2 0 c

1 d

dtype: object

Combine two ``DataFrame`` objects with identical columns.

>>> df1 = pd.DataFrame([['a', 1], ['b', 2]],

... columns=['letter', 'number'])

>>> df1

letter number

0 a 1

1 b 2

>>> df2 = pd.DataFrame([['c', 3], ['d', 4]],

... columns=['letter', 'number'])

>>> df2

letter number

0 c 3

1 d 4

>>> pd.concat([df1, df2])

letter number

0 a 1

1 b 2

0 c 3

1 d 4

Combine ``DataFrame`` objects with overlapping columns

and return everything. Columns outside the intersection will

be filled with ``NaN`` values.

>>> df3 = pd.DataFrame([['c', 3, 'cat'], ['d', 4, 'dog']],

... columns=['letter', 'number', 'animal'])

>>> df3

letter number animal

0 c 3 cat

1 d 4 dog

>>> pd.concat([df1, df3])

animal letter number

0 NaN a 1

1 NaN b 2

0 cat c 3

1 dog d 4

Combine ``DataFrame`` objects with overlapping columns

and return only those that are shared by passing ``inner`` to

the ``join`` keyword argument.

>>> pd.concat([df1, df3], join="inner")

letter number

0 a 1

1 b 2

0 c 3

1 d 4

Combine ``DataFrame`` objects horizontally along the x axis by

passing in ``axis=1``.

>>> df4 = pd.DataFrame([['bird', 'polly'], ['monkey', 'george']],

... columns=['animal', 'name'])

>>> pd.concat([df1, df4], axis=1)

letter number animal name

0 a 1 bird polly

1 b 2 monkey george

Prevent the result from including duplicate index values with the

``verify_integrity`` option.

>>> df5 = pd.DataFrame([1], index=['a'])

>>> df5

0

a 1

>>> df6 = pd.DataFrame([2], index=['a'])

>>> df6

0

a 2

>>> pd.concat([df5, df6], verify_integrity=True)

Traceback (most recent call last):

...

ValueError: Indexes have overlapping values: ['a']

one = pd.DataFrame({

'Name': ['Alex', 'Amy', 'Allen', 'Alice', 'Ayoung'],

'subject_id':['sub1','sub2','sub4','sub6','sub5'],

'Marks_scored':[98,90,87,69,78]},

index=[1,2,3,4,5])

two = pd.DataFrame({

'Name': ['Billy', 'Brian', 'Bran', 'Bryce', 'Betty'],

'subject_id':['sub2','sub4','sub3','sub6','sub5'],

'Marks_scored':[89,80,79,97,88]},

index=[1,2,3,4,5])

one

|  | Name | subject_id | Marks_scored |
|---|---|---|---|
| 1 | Alex | sub1 | 98 |
| 2 | Amy | sub2 | 90 |
| 3 | Allen | sub4 | 87 |
| 4 | Alice | sub6 | 69 |
| 5 | Ayoung | sub5 | 78 |

two

|  | Name | subject_id | Marks_scored |
|---|---|---|---|
| 1 | Billy | sub2 | 89 |
| 2 | Brian | sub4 | 80 |
| 3 | Bran | sub3 | 79 |
| 4 | Bryce | sub6 | 97 |
| 5 | Betty | sub5 | 88 |

df = pd.concat([one, two])

df

|  | Name | subject_id | Marks_scored |
|---|---|---|---|
| 1 | Alex | sub1 | 98 |
| 2 | Amy | sub2 | 90 |
| 3 | Allen | sub4 | 87 |
| 4 | Alice | sub6 | 69 |
| 5 | Ayoung | sub5 | 78 |
| 1 | Billy | sub2 | 89 |
| 2 | Brian | sub4 | 80 |
| 3 | Bran | sub3 | 79 |
| 4 | Bryce | sub6 | 97 |
| 5 | Betty | sub5 | 88 |

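Note that the index above contains duplicate labels: iloc selects by position, while loc returns every row carrying the label.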

df.iloc[0]

Name Alex

subject_id sub1

Marks_scored 98

Name: 1, dtype: object

df.loc[1]

|  | Name | subject_id | Marks_scored |
|---|---|---|---|
| 1 | Alex | sub1 | 98 |
| 1 | Billy | sub2 | 89 |

df = pd.concat([one, two], keys=['one', 'two'])

df

|  |  | Name | subject_id | Marks_scored |
|---|---|---|---|---|
| one | 1 | Alex | sub1 | 98 |
|  | 2 | Amy | sub2 | 90 |
|  | 3 | Allen | sub4 | 87 |
|  | 4 | Alice | sub6 | 69 |
|  | 5 | Ayoung | sub5 | 78 |
| two | 1 | Billy | sub2 | 89 |
|  | 2 | Brian | sub4 | 80 |
|  | 3 | Bran | sub3 | 79 |
|  | 4 | Bryce | sub6 | 97 |
|  | 5 | Betty | sub5 | 88 |

df.iloc[1]

Name Amy

subject_id sub2

Marks_scored 90

Name: (one, 2), dtype: object

df.loc[('one', 2)]

Name Amy

subject_id sub2

Marks_scored 90

Name: (one, 2), dtype: object
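With keys, the result has a two-level index, so rows can be selected by the outer key alone, by the full (key, label) tuple, or by an inner label with xs(). A minimal sketch on hypothetical two-row versions of one and two:

```python
import pandas as pd

# Hypothetical two-row versions of the frames above
one = pd.DataFrame({"Name": ["Alex", "Amy"], "Marks_scored": [98, 90]}, index=[1, 2])
two = pd.DataFrame({"Name": ["Billy", "Brian"], "Marks_scored": [89, 80]}, index=[1, 2])

df = pd.concat([one, two], keys=["one", "two"])

# Outer key only: all rows that came from `two`, inner labels kept as the index
from_two = df.loc["two"]

# Full key tuple plus a column: a single value
name = df.loc[("two", 1), "Name"]

# xs() selects on the inner level: label 1 from each source frame
first_rows = df.xs(1, level=1)

print(from_two, name, first_rows, sep="\n")
```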

To get a unique index instead, use ignore_index:

pd.concat([one, two], keys=['x', 'y'], ignore_index=True)

|  | Name | subject_id | Marks_scored |
|---|---|---|---|
| 0 | Alex | sub1 | 98 |
| 1 | Amy | sub2 | 90 |
| 2 | Allen | sub4 | 87 |
| 3 | Alice | sub6 | 69 |
| 4 | Ayoung | sub5 | 78 |
| 5 | Billy | sub2 | 89 |
| 6 | Brian | sub4 | 80 |
| 7 | Bran | sub3 | 79 |
| 8 | Bryce | sub6 | 97 |
| 9 | Betty | sub5 | 88 |

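Note that ignore_index=True also discards the keys. The result gets a plain 0 to n - 1 index instead of a MultiIndex.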
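To get a fresh 0 to n - 1 index and still keep track of where each row came from, one option is to concatenate with keys and then move the index levels into columns with reset_index(). The level names "source" and "orig_row" below are illustrative choices, and the frames are hypothetical two-row versions of one and two.

```python
import pandas as pd

# Hypothetical two-row versions of the frames above
one = pd.DataFrame({"Name": ["Alex", "Amy"]}, index=[1, 2])
two = pd.DataFrame({"Name": ["Billy", "Brian"]}, index=[1, 2])

combined = (
    pd.concat([one, two], keys=["x", "y"], names=["source", "orig_row"])
    .reset_index()  # index levels become ordinary columns; new RangeIndex
)
print(combined)
```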

.append() (deprecated in pandas 1.4 and removed in pandas 2.0; use pd.concat() instead)

one.append(two)

|  | Name | subject_id | Marks_scored |
|---|---|---|---|
| 1 | Alex | sub1 | 98 |
| 2 | Amy | sub2 | 90 |
| 3 | Allen | sub4 | 87 |
| 4 | Alice | sub6 | 69 |
| 5 | Ayoung | sub5 | 78 |
| 1 | Billy | sub2 | 89 |
| 2 | Brian | sub4 | 80 |
| 3 | Bran | sub3 | 79 |
| 4 | Bryce | sub6 | 97 |
| 5 | Betty | sub5 | 88 |

one.append([two, one, two])

|  | Name | subject_id | Marks_scored |
|---|---|---|---|
| 1 | Alex | sub1 | 98 |
| 2 | Amy | sub2 | 90 |
| 3 | Allen | sub4 | 87 |
| 4 | Alice | sub6 | 69 |
| 5 | Ayoung | sub5 | 78 |
| 1 | Billy | sub2 | 89 |
| 2 | Brian | sub4 | 80 |
| 3 | Bran | sub3 | 79 |
| 4 | Bryce | sub6 | 97 |
| 5 | Betty | sub5 | 88 |
| 1 | Alex | sub1 | 98 |
| 2 | Amy | sub2 | 90 |
| 3 | Allen | sub4 | 87 |
| 4 | Alice | sub6 | 69 |
| 5 | Ayoung | sub5 | 78 |
| 1 | Billy | sub2 | 89 |
| 2 | Brian | sub4 | 80 |
| 3 | Bran | sub3 | 79 |
| 4 | Bryce | sub6 | 97 |
| 5 | Betty | sub5 | 88 |
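DataFrame.append() no longer exists in pandas 2.0 and later. Both calls above can be written with pd.concat(), which takes the frames as one list and keeps their index labels the same way. A sketch with hypothetical two-row frames:

```python
import pandas as pd

# Hypothetical two-row versions of one and two
one = pd.DataFrame({"Name": ["Alex", "Amy"]}, index=[1, 2])
two = pd.DataFrame({"Name": ["Billy", "Brian"]}, index=[1, 2])

# Replacement for one.append(two)
a = pd.concat([one, two])

# Replacement for one.append([two, one, two])
b = pd.concat([one, two, one, two])

print(a)
print(b)
```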

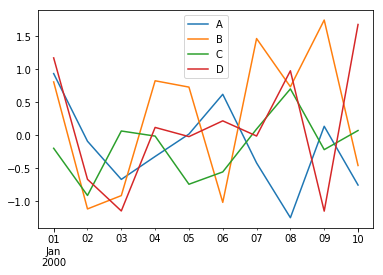

Time series

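datetime.now()

import datetime

datetime.datetime.now()  # older notebooks used pd.datetime.now(); pd.datetime was an alias of datetime.datetime and was removed in pandas 2.0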

datetime.datetime(2019, 9, 5, 21, 9, 55, 821684)

pd.Timestamp(1587687255, unit='s')

Timestamp('2020-04-24 00:14:15')
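pd.Timestamp(value, unit='s') interprets an integer as a count of units since the Unix epoch (1970-01-01). A minimal round-trip sketch: subtracting the epoch gives a Timedelta, and floor-dividing by one second recovers the integer. Other units such as milliseconds are accepted the same way.

```python
import pandas as pd

ts = pd.Timestamp(1587687255, unit="s")  # seconds since 1970-01-01

# Back to epoch seconds: Timestamp - Timestamp is a Timedelta
epoch_seconds = (ts - pd.Timestamp("1970-01-01")) // pd.Timedelta("1s")

# The same instant given in milliseconds
ts_ms = pd.Timestamp(1587687255000, unit="ms")

print(ts, epoch_seconds, ts == ts_ms)
```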

Creating time series

pd.date_range("11:00", "13:30", freq="30min").time

array([datetime.time(11, 0), datetime.time(11, 30), datetime.time(12, 0),

datetime.time(12, 30), datetime.time(13, 0), datetime.time(13, 30)],

dtype=object)

pd.date_range("11:00", "13:30", freq="h").time

array([datetime.time(11, 0), datetime.time(12, 0), datetime.time(13, 0)],

dtype=object)

pd.to_datetime(pd.Series(['Jul 31, 2009','2010-01-10', None]))

0 2009-07-31

1 2010-01-10

2 NaT

dtype: datetime64[ns]

pd.to_datetime(['2005/11/23 00:14:15', '2010.12.31', None])

DatetimeIndex(['2005-11-23 00:14:15', '2010-12-31 00:00:00', 'NaT'], dtype='datetime64[ns]', freq=None)

Specifying periods and freq

pd.date_range('1/1/2011', periods=5)

DatetimeIndex(['2011-01-01', '2011-01-02', '2011-01-03', '2011-01-04',

'2011-01-05'],

dtype='datetime64[ns]', freq='D')
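As the help text below notes, date_range needs three of start, end, periods and freq, and freq defaults to calendar days. A short sketch of three equivalent or related specifications. The third counts backwards from end in two-day steps.

```python
import pandas as pd

# start + periods (freq defaults to 'D')
a = pd.date_range("2011-01-01", periods=5)

# start + end: the same five days
b = pd.date_range("2011-01-01", "2011-01-05")

# end + periods + explicit freq: generated backwards from the end
c = pd.date_range(end="2011-01-05", periods=3, freq="2D")

print(a)
print(c)
```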

help(pd.date_range)

Help on function date_range in module pandas.core.indexes.datetimes:

date_range(start=None, end=None, periods=None, freq=None, tz=None, normalize=False, name=None, closed=None, **kwargs)

Return a fixed frequency DatetimeIndex.

Parameters

----------

start : str or datetime-like, optional

Left bound for generating dates.

end : str or datetime-like, optional

Right bound for generating dates.

periods : integer, optional

Number of periods to generate.

freq : str or DateOffset, default 'D' (calendar daily)

Frequency strings can have multiples, e.g. '5H'. See

:ref:`here <timeseries.offset_aliases>` for a list of

frequency aliases.

tz : str or tzinfo, optional

Time zone name for returning localized DatetimeIndex, for example

'Asia/Hong_Kong'. By default, the resulting DatetimeIndex is

timezone-naive.

normalize : bool, default False

Normalize start/end dates to midnight before generating date range.

name : str, default None

Name of the resulting DatetimeIndex.

closed : {None, 'left', 'right'}, optional

Make the interval closed with respect to the given frequency to

the 'left', 'right', or both sides (None, the default).

**kwargs

For compatibility. Has no effect on the result.

Returns

-------

rng : DatetimeIndex

See Also

--------

pandas.DatetimeIndex : An immutable container for datetimes.

pandas.timedelta_range : Return a fixed frequency TimedeltaIndex.

pandas.period_range : Return a fixed frequency PeriodIndex.

pandas.interval_range : Return a fixed frequency IntervalIndex.

Notes

-----

Of the four parameters ``start``, ``end``, ``periods``, and ``freq``,

exactly three must be specified. If ``freq`` is omitted, the resulting

``DatetimeIndex`` will have ``periods`` linearly spaced elements between

``start`` and ``end`` (closed on both sides).

To learn more about the frequency strings, please see `this link

<http://pandas.pydata.org/pandas-docs/stable/timeseries.html#offset-aliases>`__.

Examples

--------

**Specifying the values**

The next four examples generate the same `DatetimeIndex`, but vary

the combination of `start`, `end` and `periods`.

Specify `start` and `end`, with the default daily frequency.

>>> pd.date_range(start='1/1/2018', end='1/08/2018')

DatetimeIndex(['2018-01-01', '2018-01-02', '2018-01-03', '2018-01-04',

'2018-01-05', '2018-01-06', '2018-01-07', '2018-01-08'],

dtype='datetime64[ns]', freq='D')

Specify `start` and `periods`, the number of periods (days).

>>> pd.date_range(start='1/1/2018', periods=8)

DatetimeIndex(['2018-01-01', '2018-01-02', '2018-01-03', '2018-01-04',

'2018-01-05', '2018-01-06', '2018-01-07', '2018-01-08'],

dtype='datetime64[ns]', freq='D')

Specify `end` and `periods`, the number of periods (days).

>>> pd.date_range(end='1/1/2018', periods=8)

DatetimeIndex(['2017-12-25', '2017-12-26', '2017-12-27', '2017-12-28',

'2017-12-29', '2017-12-30', '2017-12-31', '2018-01-01'],

dtype='datetime64[ns]', freq='D')

Specify `start`, `end`, and `periods`; the frequency is generated

automatically (linearly spaced).

>>> pd.date_range(start='2018-04-24', end='2018-04-27', periods=3)

DatetimeIndex(['2018-04-24 00:00:00', '2018-04-25 12:00:00',

'2018-04-27 00:00:00'], freq=None)

**Other Parameters**

Changed the `freq` (frequency) to ``'M'`` (month end frequency).

>>> pd.date_range(start='1/1/2018', periods=5, freq='M')

DatetimeIndex(['2018-01-31', '2018-02-28', '2018-03-31', '2018-04-30',

'2018-05-31'],

dtype='datetime64[ns]', freq='M')

Multiples are allowed

>>> pd.date_range(start='1/1/2018', periods=5, freq='3M')

DatetimeIndex(['2018-01-31', '2018-04-30', '2018-07-31', '2018-10-31',

'2019-01-31'],

dtype='datetime64[ns]', freq='3M')

`freq` can also be specified as an Offset object.

>>> pd.date_range(start='1/1/2018', periods=5, freq=pd.offsets.MonthEnd(3))

DatetimeIndex(['2018-01-31', '2018-04-30', '2018-07-31', '2018-10-31',

'2019-01-31'],

dtype='datetime64[ns]', freq='3M')

Specify `tz` to set the timezone.

>>> pd.date_range(start='1/1/2018', periods=5, tz='Asia/Tokyo')

DatetimeIndex(['2018-01-01 00:00:00+09:00', '2018-01-02 00:00:00+09:00',

'2018-01-03 00:00:00+09:00', '2018-01-04 00:00:00+09:00',

'2018-01-05 00:00:00+09:00'],

dtype='datetime64[ns, Asia/Tokyo]', freq='D')

`closed` controls whether to include `start` and `end` that are on the

boundary. The default includes boundary points on either end.

>>> pd.date_range(start='2017-01-01', end='2017-01-04', closed=None)

DatetimeIndex(['2017-01-01', '2017-01-02', '2017-01-03', '2017-01-04'],

dtype='datetime64[ns]', freq='D')

Use ``closed='left'`` to exclude `end` if it falls on the boundary.

>>> pd.date_range(start='2017-01-01', end='2017-01-04', closed='left')

DatetimeIndex(['2017-01-01', '2017-01-02', '2017-01-03'],

dtype='datetime64[ns]', freq='D')

Use ``closed='right'`` to exclude `start` if it falls on the boundary.

>>> pd.date_range(start='2017-01-01', end='2017-01-04', closed='right')

DatetimeIndex(['2017-01-02', '2017-01-03', '2017-01-04'],

dtype='datetime64[ns]', freq='D')

pd.date_range('1/1/2011', periods=5, freq='M')

DatetimeIndex(['2011-01-31', '2011-02-28', '2011-03-31', '2011-04-30',

'2011-05-31'],

dtype='datetime64[ns]', freq='M')

bdate_range: business days only (Saturdays and Sundays are skipped)

pd.bdate_range('9/6/2019', periods=5)

DatetimeIndex(['2019-09-06', '2019-09-09', '2019-09-10', '2019-09-11',

'2019-09-12'],

dtype='datetime64[ns]', freq='B')

pd.bdate_range('9/7/2019', periods=5)

DatetimeIndex(['2019-09-09', '2019-09-10', '2019-09-11', '2019-09-12',

'2019-09-13'],

dtype='datetime64[ns]', freq='B')
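2019-09-07 is a Saturday, so the second range above starts on Monday 2019-09-09. One way to check that the range contains only weekdays is DatetimeIndex.dayofweek (Monday=0 ... Sunday=6). The same index also comes from date_range with the 'B' (business day) alias.

```python
import pandas as pd

idx = pd.bdate_range("2019-09-07", periods=5)  # starts on a Saturday

# Business days all have dayofweek < 5 (Monday=0, Sunday=6)
print(idx.dayofweek.tolist())

# Equivalent spelling with date_range and the 'B' alias
same = pd.date_range("2019-09-07", periods=5, freq="B")
```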

start = pd.Timestamp(2011, 1, 1)  # older pandas: pd.datetime(2011, 1, 1); pd.datetime was removed in pandas 2.0

end = pd.Timestamp(2011, 1, 5)

pd.date_range(start, end)

DatetimeIndex(['2011-01-01', '2011-01-02', '2011-01-03', '2011-01-04',

'2011-01-05'],

dtype='datetime64[ns]', freq='D')

Some common freq aliases (D, M, Y, ...):

| Alias | Description | Alias | Description |
|---|---|---|---|
| B | business day frequency | BQS | business quarter start frequency |
| D | calendar day frequency | A | annual (year) end frequency |
| W | weekly frequency | BA | business year end frequency |
| M | month end frequency | BAS | business year start frequency |
| SM | semi-month end frequency | BH | business hour frequency |
| BM | business month end frequency | H | hourly frequency |
| MS | month start frequency | T, min | minutely frequency |
| SMS | semi-month start frequency | S | secondly frequency |
| BMS | business month start frequency | L, ms | milliseconds |
| Q | quarter end frequency | U, us | microseconds |
| BQ | business quarter end frequency | N | nanoseconds |
| QS | quarter start frequency |  |  |

Note that pandas 2.2 deprecated several of these spellings, for example ME replaces M for month end and lowercase h replaces H for hours.

pd.date_range('9/6/2019', periods=5, freq='2W')

DatetimeIndex(['2019-09-08', '2019-09-22', '2019-10-06', '2019-10-20',

'2019-11-03'],

dtype='datetime64[ns]', freq='2W-SUN')

Timedelta: representing a duration (the difference between two dates or times)
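A short sketch of common ways to build a Timedelta: from a string, from keyword arguments (as in datetime.timedelta), or from an integer plus a unit. It also shows how a Timedelta arises from Timestamp arithmetic. The dates used are arbitrary examples.

```python
import pandas as pd

# Three constructions; the first two describe the same duration
a = pd.Timedelta("2 days 2 hours 15 minutes 30 seconds")
b = pd.Timedelta(days=2, hours=2, minutes=15, seconds=30)
c = pd.Timedelta(6, unit="h")

# Timestamp - Timestamp gives a Timedelta; Timestamp + Timedelta shifts it
start = pd.Timestamp("2019-09-05 21:00")
delta = pd.Timestamp("2019-09-06 03:00") - start
later = start + c

print(a, a.total_seconds(), delta, later)
```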

help(pd.Timedelta)

Help on class Timedelta in module pandas._libs.tslibs.timedeltas:

class Timedelta(_Timedelta)

| Timedelta(value=<object object at 0x000002BFBD410440>, unit=None, **kwargs)

|

| Represents a duration, the difference between two dates or times.

|

| Timedelta is the pandas equivalent of python's ``datetime.timedelta``

| and is interchangeable with it in most cases.

|

| Parameters

| ----------

| value : Timedelta, timedelta, np.timedelta64, string, or integer

| unit : string, {'ns', 'us', 'ms', 's', 'm', 'h', 'D'}, optional

| Denote the unit of the input, if input is an integer. Default 'ns'.

| days, seconds, microseconds,

| milliseconds, minutes, hours, weeks : numeric, optional

| Values for construction in compat with datetime.timedelta.

| np ints and floats will be coereced to python ints and floats.

|

| Notes

| -----

| The ``.value`` attribute is always in ns.

|

| Method resolution order:

| Timedelta

| _Timedelta

| datetime.timedelta

| builtins.object

|

| Methods defined here:

|

| __abs__(self)

|

| __add__(self, other)

|

| __divmod__(self, other)

|

| __floordiv__(self, other)

|

| __inv__(self)

|

| __mod__(self, other)

|

| __mul__(self, other)

|

| __neg__(self)

|

| __new__(cls, value=<object object at 0x000002BFBD410440>, unit=None, **kwargs)

|

| __pos__(self)

|

| __radd__(self, other)

|

| __rdivmod__(self, other)

|

| __reduce__(self)

|

| __rfloordiv__(self, other)

|

| __rmod__(self, other)

|

| __rmul__ = __mul__(self, other)

|

| __rsub__(self, other)

|

| __rtruediv__(self, other)

|

| __setstate__(self, state)

|

| __sub__(self, other)

|

| __truediv__(self, other)

|

| ceil(self, freq)

| return a new Timedelta ceiled to this resolution

|

| Parameters

| ----------

| freq : a freq string indicating the ceiling resolution

|

| floor(self, freq)

| return a new Timedelta floored to this resolution

|

| Parameters

| ----------

| freq : a freq string indicating the flooring resolution

|

| round(self, freq)

| Round the Timedelta to the specified resolution

|

| Returns

| -------

| a new Timedelta rounded to the given resolution of `freq`

|

| Parameters

| ----------

| freq : a freq string indicating the rounding resolution

|

| Raises

| ------

| ValueError if the freq cannot be converted

|

| ----------------------------------------------------------------------

| Data descriptors defined here:

|

| __dict__

| dictionary for instance variables (if defined)

|

| __weakref__

| list of weak references to the object (if defined)

|

| ----------------------------------------------------------------------

| Data and other attributes defined here:

|

| max = Timedelta('106751 days 23:47:16.854775')

|

| min = Timedelta('-106752 days +00:12:43.145224')

|

| ----------------------------------------------------------------------

| Methods inherited from _Timedelta:

|

| __bool__(self, /)

| self != 0

|

| __eq__(self, value, /)

| Return self==value.

|

| __ge__(self, value, /)

| Return self>=value.

|

| __gt__(self, value, /)

| Return self>value.

|

| __hash__(self, /)

| Return hash(self).

|

| __le__(self, value, /)

| Return self<=value.

|

| __lt__(self, value, /)

| Return self<value.

|

| __ne__(self, value, /)

| Return self!=value.

|

| __reduce_cython__(...)

|

| __repr__(self, /)

| Return repr(self).

|

| __setstate_cython__(...)

|

| __str__(self, /)

| Return str(self).

|

| isoformat(...)

| Format Timedelta as ISO 8601 Duration like

| ``P[n]Y[n]M[n]DT[n]H[n]M[n]S``, where the ``[n]`` s are replaced by the

| values. See https://en.wikipedia.org/wiki/ISO_8601#Durations

|

| .. versionadded:: 0.20.0

|

| Returns

| -------

| formatted : str

|

| Notes

| -----

| The longest component is days, whose value may be larger than

| 365.

| Every component is always included, even if its value is 0.

| Pandas uses nanosecond precision, so up to 9 decimal places may

| be included in the seconds component.

| Trailing 0's are removed from the seconds component after the decimal.

| We do not 0 pad components, so it's `...T5H...`, not `...T05H...`

|

| Examples

| --------

| >>> td = pd.Timedelta(days=6, minutes=50, seconds=3,

| ... milliseconds=10, microseconds=10, nanoseconds=12)

| >>> td.isoformat()

| 'P6DT0H50M3.010010012S'

| >>> pd.Timedelta(hours=1, seconds=10).isoformat()

| 'P0DT1H0M10S'

| >>> pd.Timedelta(days=500.5).isoformat()

| 'P500DT12H0M0S'

|

| See Also

| --------

| Timestamp.isoformat

|

| to_pytimedelta(...)

| return an actual datetime.timedelta object

| note: we lose nanosecond resolution if any

|

| to_timedelta64(...)

| Returns a numpy.timedelta64 object with 'ns' precision

|

| total_seconds(...)

| Total duration of timedelta in seconds (to ns precision)

|

| view(...)

| array view compat

|

| ----------------------------------------------------------------------

| Data descriptors inherited from _Timedelta:

|

| asm8

| return a numpy timedelta64 array view of myself

|

| components

| Return a Components NamedTuple-like

|

| delta

| Return the timedelta in nanoseconds (ns), for internal compatibility.

|

| Returns

| -------

| int

| Timedelta in nanoseconds.

|

| Examples

| --------

| >>> td = pd.Timedelta('1 days 42 ns')

| >>> td.delta

| 86400000000042

|

| >>> td = pd.Timedelta('3 s')

| >>> td.delta

| 3000000000

|

| >>> td = pd.Timedelta('3 ms 5 us')

| >>> td.delta

| 3005000

|

| >>> td = pd.Timedelta(42, unit='ns')

| >>> td.delta

| 42

|

| freq

|

| is_populated

|

| nanoseconds

| Return the number of nanoseconds (n), where 0 <= n < 1 microsecond.

|

| Returns

| -------

| int

| Number of nanoseconds.

|

| See Also

| --------

| Timedelta.components : Return all attributes with assigned values

| (i.e. days, hours, minutes, seconds, milliseconds, microseconds,

| nanoseconds).

|

| Examples

| --------

| **Using string input**

|

| >>> td = pd.Timedelta('1 days 2 min 3 us 42 ns')

| >>> td.nanoseconds

| 42

|

| **Using integer input**

|

| >>> td = pd.Timedelta(42, unit='ns')

| >>> td.nanoseconds

| 42

|

| resolution

| return a string representing the lowest resolution that we have

|

| value

|

| ----------------------------------------------------------------------

| Data and other attributes inherited from _Timedelta:

|

| __array_priority__ = 100

|

| __pyx_vtable__ = <capsule object NULL>

|

| ----------------------------------------------------------------------

| Methods inherited from datetime.timedelta:

|

| __getattribute__(self, name, /)

| Return getattr(self, name).

|

| ----------------------------------------------------------------------

| Data descriptors inherited from datetime.timedelta:

|

| days

| Number of days.

|

| microseconds

| Number of microseconds (>= 0 and less than 1 second).

|

| seconds

| Number of seconds (>= 0 and less than 1 day).
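The help text above lists several attributes and rounding methods. The sketch below tries a few of them on an arbitrary duration: `days`, `seconds`, `total_seconds()`, `components`, `floor()`/`ceil()`/`round()` and `to_pytimedelta()`. 'D' is used as the rounding frequency because it is spelled the same across pandas versions.

```python
import datetime
import pandas as pd

td = pd.Timedelta(days=1, hours=2, minutes=3, seconds=4)

print(td.days)             # 1
print(td.seconds)          # 7384, i.e. 2*3600 + 3*60 + 4 (only the part below one day)
print(td.total_seconds())  # 93784.0, the whole duration expressed in seconds
print(td.components.hours, td.components.minutes)  # 2 3

# floor / ceil / round take a frequency string such as 'D'
print(td.floor('D'))  # 1 days 00:00:00
print(td.ceil('D'))   # 2 days 00:00:00
print(td.round('D'))  # 1 days 00:00:00 (2 hours is less than half a day)

# Convert to the standard-library type (any nanosecond part would be dropped)
py_td = td.to_pytimedelta()
print(py_td == datetime.timedelta(days=1, hours=2, minutes=3, seconds=4))  # True
```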

pd.Timedelta('2 days 2 hours 15 minutes 30 seconds')

Timedelta('2 days 02:15:30')

pd.Timedelta(6, unit='h')

Timedelta('0 days 06:00:00')

pd.Timedelta(days=2)

Timedelta('2 days 00:00:00')
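A Timedelta is most often produced or consumed by Timestamp arithmetic. The sketch below uses two made-up timestamps to show that subtracting them yields a Timedelta, and that adding a Timedelta shifts a Timestamp.

```python
import pandas as pd

start = pd.Timestamp('2019-09-06 08:00')
end = pd.Timestamp('2019-09-08 10:15:30')

# Timestamp - Timestamp -> Timedelta
gap = end - start
print(gap)  # 2 days 02:15:30

# Timestamp + Timedelta -> Timestamp
later = start + pd.Timedelta(hours=6)
print(later)  # 2019-09-06 14:00:00

# Timedeltas can be scaled and compared like numbers
print(gap * 2 > pd.Timedelta(days=4))  # True
```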

to_timedelta()

pd.to_timedelta(['2 days 2 hours 15 minutes 30 seconds', '2 days 2 hours 15 minutes 30 seconds'])

TimedeltaIndex(['2 days 02:15:30', '2 days 02:15:30'], dtype='timedelta64[ns]', freq=None)
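`pd.to_timedelta` also accepts plain numbers together with a `unit`. With `errors='coerce'`, it turns unparseable entries into `NaT` instead of raising. The sketch below shows both; the input values are arbitrary.

```python
import pandas as pd

# Numbers are interpreted in the given unit ('D' = days here)
by_day = pd.to_timedelta([1, 2, 3], unit='D')
print(by_day)

# errors='coerce' replaces strings that cannot be parsed with NaT
mixed = pd.to_timedelta(['1 days 06:00:00', 'not a duration'], errors='coerce')
print(mixed)
```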

s = pd.Series(pd.date_range('2012-1-1', periods=3, freq='D'))

td = pd.Series([pd.Timedelta(days=i) for i in range(3)])

df = pd.DataFrame(dict(A=s, B=td))

df

|   | A | B |
|---|---|---|
| 0 | 2012-01-01 | 0 days |
| 1 | 2012-01-02 | 1 days |
| 2 | 2012-01-03 | 2 days |

df['C'] = df['A'] + df['B']

df

|   | A | B | C |
|---|---|---|---|
| 0 | 2012-01-01 | 0 days | 2012-01-01 |
| 1 | 2012-01-02 | 1 days | 2012-01-03 |
| 2 | 2012-01-03 | 2 days | 2012-01-05 |

df['D'] = df['C'] - df['B']

df

|   | A | B | C | D |
|---|---|---|---|---|
| 0 | 2012-01-01 | 0 days | 2012-01-01 | 2012-01-01 |
| 1 | 2012-01-02 | 1 days | 2012-01-03 | 2012-01-02 |
| 2 | 2012-01-03 | 2 days | 2012-01-05 | 2012-01-03 |
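Subtracting two datetime columns gives a timedelta column back. The `.dt` accessor then exposes Timedelta fields element by element. The sketch below rebuilds the same frame so it runs on its own.

```python
import pandas as pd

s = pd.Series(pd.date_range('2012-1-1', periods=3, freq='D'))
td = pd.Series([pd.Timedelta(days=i) for i in range(3)])
df = pd.DataFrame(dict(A=s, B=td))
df['C'] = df['A'] + df['B']

# datetime column - datetime column -> timedelta column
print((df['C'] - df['A'] == df['B']).all())  # True

# .dt on a timedelta column gives per-element fields and methods
print(df['B'].dt.days.tolist())             # [0, 1, 2]
print(df['B'].dt.total_seconds().tolist())  # [0.0, 86400.0, 172800.0]
```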

Categorical Data

category

s = pd.Series(['a', 'b', 'c', 'a'], dtype='category')

s

0 a

1 b

2 c

3 a

dtype: category

Categories (3, object): [a, b, c]
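Internally, a category Series stores each value as a small integer code that points into its list of categories. The `.cat` accessor exposes these codes and categories, and also offers methods such as `rename_categories` and `add_categories`; both return a new Series here. The new names 'x', 'y', 'z' and 'd' in the sketch below are arbitrary.

```python
import pandas as pd

s = pd.Series(['a', 'b', 'c', 'a'], dtype='category')

# Codes index into the categories: 'a' -> 0, 'b' -> 1, 'c' -> 2
print(list(s.cat.categories))  # ['a', 'b', 'c']
print(s.cat.codes.tolist())    # [0, 1, 2, 0]

# Rename all categories positionally
renamed = s.cat.rename_categories(['x', 'y', 'z'])
print(renamed.tolist())        # ['x', 'y', 'z', 'x']

# Add a category that no value uses yet; value_counts still reports it
extended = s.cat.add_categories(['d'])
print(list(extended.cat.categories))  # ['a', 'b', 'c', 'd']
print(extended.value_counts()['d'])   # 0
```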

pd.Categorical

pandas.Categorical(values, categories, ordered)
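With `ordered=True`, the order of the `categories` argument (not alphabetical order) defines the ranking used by `min`, `max`, sorting and comparisons. A value not listed in `categories` becomes NaN, stored as code -1. The size labels in the sketch below are made up for illustration.

```python
import pandas as pd

sizes = pd.Categorical(
    ['medium', 'small', 'large', 'small'],
    categories=['small', 'medium', 'large'],  # listed order defines the ranking
    ordered=True,
)
print(sizes.min(), sizes.max())          # small large
print(list(sizes.sort_values()))         # ['small', 'small', 'medium', 'large']
print((sizes < 'large').tolist())        # [True, True, False, True]

# 'huge' is not among the categories, so it is stored as missing (code -1)
partial = pd.Categorical(['small', 'huge'], categories=['small', 'medium', 'large'])
print(partial.codes.tolist())            # [0, -1]
print(partial.isna().tolist())           # [False, True]
```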

help(pd.Categorical)

Help on class Categorical in module pandas.core.arrays.categorical:

class Categorical(pandas.core.arrays.base.ExtensionArray, pandas.core.base.PandasObject)

| Categorical(values, categories=None, ordered=None, dtype=None, fastpath=False)

|

| Represents a categorical variable in classic R / S-plus fashion

|

| `Categoricals` can take on only a limited, and usually fixed, number

| of possible values (`categories`). In contrast to statistical categorical

| variables, a `Categorical` might have an order, but numerical operations

| (additions, divisions, ...) are not possible.

|

| All values of the `Categorical` are either in `categories` or `np.nan`.

| Assigning values outside of `categories` will raise a `ValueError`. Order

| is defined by the order of the `categories`, not lexical order of the

| values.

|

| Parameters

| ----------

| values : list-like

| The values of the categorical. If categories are given, values not in

| categories will be replaced with NaN.

| categories : Index-like (unique), optional

| The unique categories for this categorical. If not given, the

| categories are assumed to be the unique values of values.

| ordered : boolean, (default False)

| Whether or not this categorical is treated as an ordered categorical.

| If not given, the resulting categorical will not be ordered.

| dtype : CategoricalDtype

| An instance of ``CategoricalDtype`` to use for this categorical

|

| .. versionadded:: 0.21.0

|

| Attributes

| ----------

| categories : Index

| The categories of this categorical

| codes : ndarray

| The codes (integer positions, which point to the categories) of this

| categorical, read only.

| ordered : boolean

| Whether or not this Categorical is ordered.

| dtype : CategoricalDtype

| The instance of ``CategoricalDtype`` storing the ``categories``

| and ``ordered``.

|

| .. versionadded:: 0.21.0

|

| Methods

| -------

| from_codes

| __array__

|

| Raises

| ------

| ValueError

| If the categories do not validate.

| TypeError

| If an explicit ``ordered=True`` is given but no `categories` and the

| `values` are not sortable.

|

| Examples

| --------

| >>> pd.Categorical([1, 2, 3, 1, 2, 3])

| [1, 2, 3, 1, 2, 3]

| Categories (3, int64): [1, 2, 3]

|

| >>> pd.Categorical(['a', 'b', 'c', 'a', 'b', 'c'])

| [a, b, c, a, b, c]

| Categories (3, object): [a, b, c]

|

| Ordered `Categoricals` can be sorted according to the custom order

| of the categories and can have a min and max value.

|

| >>> c = pd.Categorical(['a','b','c','a','b','c'], ordered=True,

| ... categories=['c', 'b', 'a'])

| >>> c

| [a, b, c, a, b, c]

| Categories (3, object): [c < b < a]

| >>> c.min()

| 'c'

|

| Notes

| -----

| See the `user guide

| <http://pandas.pydata.org/pandas-docs/stable/categorical.html>`_ for more.

|

| See also

| --------

| pandas.api.types.CategoricalDtype : Type for categorical data

| CategoricalIndex : An Index with an underlying ``Categorical``

|

| Method resolution order:

| Categorical

| pandas.core.arrays.base.ExtensionArray

| pandas.core.base.PandasObject

| pandas.core.base.StringMixin

| pandas.core.accessor.DirNamesMixin

| builtins.object

|

| Methods defined here:

|

| __array__(self, dtype=None)

| The numpy array interface.

|

| Returns

| -------

| values : numpy array

| A numpy array of either the specified dtype or,

| if dtype==None (default), the same dtype as

| categorical.categories.dtype

|

| __eq__(self, other)

|

| __ge__(self, other)

|

| __getitem__(self, key)

| Return an item.

|

| __gt__(self, other)

|

| __init__(self, values, categories=None, ordered=None, dtype=None, fastpath=False)

| Initialize self. See help(type(self)) for accurate signature.

|

| __iter__(self)

| Returns an Iterator over the values of this Categorical.

|

| __le__(self, other)

|

| __len__(self)

| The length of this Categorical.

|

| __lt__(self, other)

|

| __ne__(self, other)

|

| __setitem__(self, key, value)

| Item assignment.

|

|

| Raises

| ------

| ValueError

| If (one or more) value is not in categories or if an assigned

| `Categorical` does not have the same categories

|

| __setstate__(self, state)

| Necessary for making this object picklable

|

| __unicode__(self)

| Unicode representation.

|

| add_categories(self, new_categories, inplace=False)

| Add new categories.

|

| `new_categories` will be included at the last/highest place in the

| categories and will be unused directly after this call.

|

| Raises

| ------

| ValueError

| If the new categories include old categories or do not validate as

| categories

|

| Parameters

| ----------

| new_categories : category or list-like of category

| The new categories to be included.

| inplace : boolean (default: False)

| Whether or not to add the categories inplace or return a copy of

| this categorical with added categories.

|

| Returns

| -------

| cat : Categorical with new categories added or None if inplace.

|

| See also

| --------

| rename_categories

| reorder_categories

| remove_categories

| remove_unused_categories

| set_categories

|

| argsort(self, *args, **kwargs)

| Return the indices that would sort the Categorical.

|

| Parameters

| ----------

| ascending : bool, default True

| Whether the indices should result in an ascending

| or descending sort.

| kind : {'quicksort', 'mergesort', 'heapsort'}, optional

| Sorting algorithm.

| *args, **kwargs:

| passed through to :func:`numpy.argsort`.

|

| Returns

| -------

| argsorted : numpy array

|

| See also

| --------

| numpy.ndarray.argsort

|

| Notes

| -----

| While an ordering is applied to the category values, arg-sorting

| in this context refers more to organizing and grouping together

| based on matching category values. Thus, this function can be

| called on an unordered Categorical instance unlike the functions

| 'Categorical.min' and 'Categorical.max'.

|

| Examples

| --------

| >>> pd.Categorical(['b', 'b', 'a', 'c']).argsort()

| array([2, 0, 1, 3])

|

| >>> cat = pd.Categorical(['b', 'b', 'a', 'c'],

| ... categories=['c', 'b', 'a'],

| ... ordered=True)

| >>> cat.argsort()

| array([3, 0, 1, 2])

|

| as_ordered(self, inplace=False)

| Sets the Categorical to be ordered

|

| Parameters

| ----------

| inplace : boolean (default: False)

| Whether or not to set the ordered attribute inplace or return a copy

| of this categorical with ordered set to True

|

| as_unordered(self, inplace=False)

| Sets the Categorical to be unordered

|

| Parameters

| ----------

| inplace : boolean (default: False)

| Whether or not to set the ordered attribute inplace or return a copy

| of this categorical with ordered set to False

|

| astype(self, dtype, copy=True)

| Coerce this type to another dtype

|

| Parameters

| ----------

| dtype : numpy dtype or pandas type

| copy : bool, default True

| By default, astype always returns a newly allocated object.

| If copy is set to False and dtype is categorical, the original

| object is returned.

|

| .. versionadded:: 0.19.0

|

| check_for_ordered(self, op)

| assert that we are ordered

|

| copy(self)

| Copy constructor.

|

| describe(self)

| Describes this Categorical

|

| Returns

| -------

| description: `DataFrame`

| A dataframe with frequency and counts by category.

|

| dropna(self)

| Return the Categorical without null values.

|

| Missing values (-1 in .codes) are detected.

|

| Returns

| -------

| valid : Categorical

|

| equals(self, other)

| Returns True if categorical arrays are equal.

|

| Parameters

| ----------

| other : `Categorical`

|

| Returns

| -------

| are_equal : boolean

|

| fillna(self, value=None, method=None, limit=None)

| Fill NA/NaN values using the specified method.

|

| Parameters

| ----------

| value : scalar, dict, Series

| If a scalar value is passed it is used to fill all missing values.

| Alternatively, a Series or dict can be used to fill in different

| values for each index. The value should not be a list. The

| value(s) passed should either be in the categories or should be

| NaN.

| method : {'backfill', 'bfill', 'pad', 'ffill', None}, default None

| Method to use for filling holes in reindexed Series

| pad / ffill: propagate last valid observation forward to next valid

| backfill / bfill: use NEXT valid observation to fill gap

| limit : int, default None

| (Not implemented yet for Categorical!)

| If method is specified, this is the maximum number of consecutive

| NaN values to forward/backward fill. In other words, if there is

| a gap with more than this number of consecutive NaNs, it will only

| be partially filled. If method is not specified, this is the

| maximum number of entries along the entire axis where NaNs will be

| filled.

|

| Returns

| -------

| filled : Categorical with NA/NaN filled

|

| get_values(self)

| Return the values.

|

| For internal compatibility with pandas formatting.

|

| Returns

| -------

| values : numpy array

| A numpy array of the same dtype as categorical.categories.dtype or

| Index if datetime / periods

|

| is_dtype_equal(self, other)

| Returns True if categoricals are the same dtype

| same categories, and same ordered

|

| Parameters

| ----------

| other : Categorical

|

| Returns

| -------

| are_equal : boolean

|

| isin(self, values)

| Check whether `values` are contained in Categorical.

|

| Return a boolean NumPy Array showing whether each element in

| the Categorical matches an element in the passed sequence of

| `values` exactly.

|

| Parameters

| ----------

| values : set or list-like

| The sequence of values to test. Passing in a single string will

| raise a ``TypeError``. Instead, turn a single string into a

| list of one element.

|

| Returns

| -------

| isin : numpy.ndarray (bool dtype)

|

| Raises

| ------

| TypeError

| * If `values` is not a set or list-like

|

| See Also

| --------

| pandas.Series.isin : equivalent method on Series

|

| Examples

| --------

|

| >>> s = pd.Categorical(['lama', 'cow', 'lama', 'beetle', 'lama',

| ... 'hippo'])

| >>> s.isin(['cow', 'lama'])

| array([ True, True, True, False, True, False])

|

| Passing a single string as ``s.isin('lama')`` will raise an error. Use

| a list of one element instead:

|

| >>> s.isin(['lama'])

| array([ True, False, True, False, True, False])

|

| isna(self)

| Detect missing values

|

| Missing values (-1 in .codes) are detected.

|

| Returns

| -------

| a boolean array of whether my values are null

|

| See also

| --------

| isna : top-level isna

| isnull : alias of isna

| Categorical.notna : boolean inverse of Categorical.isna

|

| isnull = isna(self)

|

| map(self, mapper)

| Map categories using input correspondence (dict, Series, or function).

|

| Maps the categories to new categories. If the mapping correspondence is

| one-to-one the result is a :class:`~pandas.Categorical` which has the

| same order property as the original, otherwise a :class:`~pandas.Index`

| is returned.

|

| If a `dict` or :class:`~pandas.Series` is used any unmapped category is

| mapped to `NaN`. Note that if this happens an :class:`~pandas.Index`

| will be returned.

|

| Parameters

| ----------

| mapper : function, dict, or Series

| Mapping correspondence.

|

| Returns

| -------

| pandas.Categorical or pandas.Index

| Mapped categorical.

|

| See Also

| --------

| CategoricalIndex.map : Apply a mapping correspondence on a

| :class:`~pandas.CategoricalIndex`.

| Index.map : Apply a mapping correspondence on an

| :class:`~pandas.Index`.

| Series.map : Apply a mapping correspondence on a

| :class:`~pandas.Series`.

| Series.apply : Apply more complex functions on a

| :class:`~pandas.Series`.

|

| Examples

| --------

| >>> cat = pd.Categorical(['a', 'b', 'c'])

| >>> cat

| [a, b, c]

| Categories (3, object): [a, b, c]

| >>> cat.map(lambda x: x.upper())

| [A, B, C]

| Categories (3, object): [A, B, C]

| >>> cat.map({'a': 'first', 'b': 'second', 'c': 'third'})

| [first, second, third]

| Categories (3, object): [first, second, third]

|

| If the mapping is one-to-one the ordering of the categories is

| preserved:

|

| >>> cat = pd.Categorical(['a', 'b', 'c'], ordered=True)

| >>> cat

| [a, b, c]

| Categories (3, object): [a < b < c]

| >>> cat.map({'a': 3, 'b': 2, 'c': 1})

| [3, 2, 1]

| Categories (3, int64): [3 < 2 < 1]

|

| If the mapping is not one-to-one an :class:`~pandas.Index` is returned:

|

| >>> cat.map({'a': 'first', 'b': 'second', 'c': 'first'})

| Index(['first', 'second', 'first'], dtype='object')

|

| If a `dict` is used, all unmapped categories are mapped to `NaN` and

| the result is an :class:`~pandas.Index`:

|

| >>> cat.map({'a': 'first', 'b': 'second'})

| Index(['first', 'second', nan], dtype='object')

|

| max(self, numeric_only=None, **kwargs)

| The maximum value of the object.

|

| Only ordered `Categoricals` have a maximum!

|

| Raises

| ------

| TypeError

| If the `Categorical` is not `ordered`.

|

| Returns

| -------

| max : the maximum of this `Categorical`

|

| memory_usage(self, deep=False)

| Memory usage of my values

|

| Parameters

| ----------

| deep : bool

| Introspect the data deeply, interrogate

| `object` dtypes for system-level memory consumption

|

| Returns

| -------

| bytes used

|

| Notes

| -----

| Memory usage does not include memory consumed by elements that

| are not components of the array if deep=False

|

| See Also

| --------

| numpy.ndarray.nbytes

|

| min(self, numeric_only=None, **kwargs)

| The minimum value of the object.

|

| Only ordered `Categoricals` have a minimum!

|

| Raises

| ------

| TypeError

| If the `Categorical` is not `ordered`.

|

| Returns

| -------

| min : the minimum of this `Categorical`

|

| mode(self)

| Returns the mode(s) of the Categorical.

|

| Always returns `Categorical` even if only one value.

|

| Returns

| -------

| modes : `Categorical` (sorted)

|

| notna(self)

| Inverse of isna

|

| Both missing values (-1 in .codes) and NA as a category are detected as

| null.

|

| Returns

| -------

| a boolean array of whether my values are not null

|

| See also

| --------

| notna : top-level notna

| notnull : alias of notna

| Categorical.isna : boolean inverse of Categorical.notna

|

| notnull = notna(self)

|

| put(self, *args, **kwargs)

| Replace specific elements in the Categorical with given values.

|

| ravel(self, order='C')

| Return a flattened (numpy) array.

|

| For internal compatibility with numpy arrays.

|

| Returns

| -------

| raveled : numpy array

|

| remove_categories(self, removals, inplace=False)

| Removes the specified categories.

|

| `removals` must be included in the old categories. Values which were in

| the removed categories will be set to NaN

|

| Raises

| ------

| ValueError

| If the removals are not contained in the categories

|

| Parameters

| ----------

| removals : category or list of categories

| The categories which should be removed.

| inplace : boolean (default: False)

| Whether or not to remove the categories inplace or return a copy of

| this categorical with removed categories.

|

| Returns

| -------

| cat : Categorical with removed categories or None if inplace.

|

| See also

| --------

| rename_categories

| reorder_categories

| add_categories

| remove_unused_categories

| set_categories

|

| remove_unused_categories(self, inplace=False)

| Removes categories which are not used.

|

| Parameters

| ----------

| inplace : boolean (default: False)

| Whether or not to drop unused categories inplace or return a copy of

| this categorical with unused categories dropped.

|

| Returns

| -------

| cat : Categorical with unused categories dropped or None if inplace.

|

| See also

| --------

| rename_categories

| reorder_categories

| add_categories

| remove_categories

| set_categories

|

| rename_categories(self, new_categories, inplace=False)

| Renames categories.

|

| Raises

| ------

| ValueError

| If new categories are list-like and do not have the same number of

| items as the current categories or do not validate as categories

|

| Parameters

| ----------

| new_categories : list-like, dict-like or callable

|

| * list-like: all items must be unique and the number of items in

| the new categories must match the existing number of categories.

|

| * dict-like: specifies a mapping from

| old categories to new. Categories not contained in the mapping

| are passed through and extra categories in the mapping are

| ignored.

|

| .. versionadded:: 0.21.0

|

| * callable : a callable that is called on all items in the old

| categories and whose return values comprise the new categories.

|

| .. versionadded:: 0.23.0

|

| .. warning::

|

| Currently, Series are considered list like. In a future version

| of pandas they'll be considered dict-like.

|

| inplace : boolean (default: False)

| Whether or not to rename the categories inplace or return a copy of

| this categorical with renamed categories.

|

| Returns

| -------

| cat : Categorical or None

| With ``inplace=False``, the new categorical is returned.

| With ``inplace=True``, there is no return value.

|

| See also

| --------

| reorder_categories

| add_categories

| remove_categories

| remove_unused_categories

| set_categories

|

| Examples

| --------

| >>> c = pd.Categorical(['a', 'a', 'b'])

| >>> c.rename_categories([0, 1])

| [0, 0, 1]

| Categories (2, int64): [0, 1]

|

| For dict-like ``new_categories``, extra keys are ignored and

| categories not in the dictionary are passed through

|

| >>> c.rename_categories({'a': 'A', 'c': 'C'})

| [A, A, b]

| Categories (2, object): [A, b]

|

| You may also provide a callable to create the new categories

|

| >>> c.rename_categories(lambda x: x.upper())

| [A, A, B]

| Categories (2, object): [A, B]

|

| reorder_categories(self, new_categories, ordered=None, inplace=False)

| Reorders categories as specified in new_categories.

|

| `new_categories` need to include all old categories and no new category

| items.

|

| Raises

| ------

| ValueError

| If the new categories do not contain all old category items or any

| new ones

|

| Parameters

| ----------

| new_categories : Index-like

| The categories in new order.

| ordered : boolean, optional

| Whether or not the categorical is treated as an ordered categorical.

| If not given, do not change the ordered information.

| inplace : boolean (default: False)

| Whether or not to reorder the categories inplace or return a copy of

| this categorical with reordered categories.

|

| Returns

| -------

| cat : Categorical with reordered categories or None if inplace.

|

| See also

| --------

| rename_categories

| add_categories

| remove_categories

| remove_unused_categories

| set_categories

|

| repeat(self, repeats, *args, **kwargs)

| Repeat elements of a Categorical.

|

| See also

| --------

| numpy.ndarray.repeat

|

| searchsorted(self, value, side='left', sorter=None)

| Find indices where elements should be inserted to maintain order.

|

| Find the indices into a sorted Categorical `self` such that, if the

| corresponding elements in `value` were inserted before the indices,

| the order of `self` would be preserved.

|

| Parameters

| ----------

| value : array_like

| Values to insert into `self`.

| side : {'left', 'right'}, optional

| If 'left', the index of the first suitable location found is given.

| If 'right', return the last such index. If there is no suitable

| index, return either 0 or N (where N is the length of `self`).

| sorter : 1-D array_like, optional

| Optional array of integer indices that sort `self` into ascending

| order. They are typically the result of ``np.argsort``.

|

| Returns

| -------

| indices : array of ints

| Array of insertion points with the same shape as `value`.

|

| See Also

| --------

| numpy.searchsorted

|

| Notes

| -----

| Binary search is used to find the required insertion points.

|

| Examples

| --------

|

| >>> x = pd.Series([1, 2, 3])

| >>> x

| 0 1

| 1 2

| 2 3

| dtype: int64

|

| >>> x.searchsorted(4)

| array([3])

|

| >>> x.searchsorted([0, 4])

| array([0, 3])

|

| >>> x.searchsorted([1, 3], side='left')

| array([0, 2])

|

| >>> x.searchsorted([1, 3], side='right')

| array([1, 3])

|

| >>> x = pd.Categorical(['apple', 'bread', 'bread',

| ... 'cheese', 'milk'], ordered=True)

| >>> x

| [apple, bread, bread, cheese, milk]

| Categories (4, object): [apple < bread < cheese < milk]

|

| >>> x.searchsorted('bread')

| array([1]) # Note: an array, not a scalar

|

| >>> x.searchsorted(['bread'], side='right')

| array([3])

|

| set_categories(self, new_categories, ordered=None, rename=False, inplace=False)

| Sets the categories to the specified new_categories.

|

| `new_categories` can include new categories (which will result in

| unused categories) or remove old categories (which results in values

| set to NaN). If `rename==True`, the categories will simply be renamed

| (less or more items than in old categories will result in values set to

| NaN or in unused categories respectively).

|

| This method can be used to perform more than one action of adding,

| removing, and reordering simultaneously and is therefore faster than

| performing the individual steps via the more specialised methods.

|

| On the other hand, this method does not do checks (e.g., whether the

| old categories are included in the new categories on a reorder), which

| can result in surprising changes, for example when using special string

| dtypes on python3, which does not consider an S1 string equal to a

| single char python string.

|

| Raises

| ------

| ValueError

| If new_categories does not validate as categories

|

| Parameters

| ----------

| new_categories : Index-like

| The categories in new order.

| ordered : boolean, (default: False)

| Whether or not the categorical is treated as an ordered categorical.

| If not given, do not change the ordered information.

| rename : boolean (default: False)

| Whether or not the new_categories should be considered as a rename

| of the old categories or as reordered categories.

| inplace : boolean (default: False)

| Whether or not to reorder the categories inplace or return a copy of

| this categorical with reordered categories.

|

| Returns

| -------

| cat : Categorical with reordered categories or None if inplace.

|

| See also

| --------

| rename_categories

| reorder_categories

| add_categories

| remove_categories

| remove_unused_categories

|

| set_ordered(self, value, inplace=False)

| Sets the ordered attribute to the boolean value

|

| Parameters

| ----------

| value : boolean to set whether this categorical is ordered (True) or

| not (False)

| inplace : boolean (default: False)

| Whether or not to set the ordered attribute inplace or return a copy

| of this categorical with ordered set to the value

|

| shift(self, periods)

| Shift Categorical by desired number of periods.

|

| Parameters

| ----------

| periods : int

| Number of periods to move, can be positive or negative

|

| Returns

| -------

| shifted : Categorical

|

| sort_values(self, inplace=False, ascending=True, na_position='last')

| Sorts the Categorical by category value returning a new

| Categorical by default.

|

| While an ordering is applied to the category values, sorting in this

| context refers more to organizing and grouping together based on

| matching category values. Thus, this function can be called on an

| unordered Categorical instance unlike the functions 'Categorical.min'

| and 'Categorical.max'.

|

| Parameters

| ----------

| inplace : boolean, default False

| Do operation in place.

| ascending : boolean, default True

| Order ascending. Passing False orders descending. The

| ordering parameter provides the method by which the

| category values are organized.

| na_position : {'first', 'last'} (optional, default='last')

| 'first' puts NaNs at the beginning

| 'last' puts NaNs at the end

|

| Returns

| -------

| y : Categorical or None