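I haven't updated in a long time; I've gotten lazy.

A lot has happened lately, and I'll write it up bit by bit. Recently I tried building a distributed crawler in Python with Redis.

A single-machine crawler has plenty of drawbacks. Still, building one while learning teaches you the basic ideas and workflow of crawling, and that pays off later when you build robust crawlers. Getting proxies, forging headers, setting the Referer and so on all carry over unchanged to the distributed version.

A distributed crawler sounds impressive, and it really is impressive when it runs.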

=======================================================================================================

Install Redis

1. Download the Redis tarball from the official site

wget http://download.redis.io/releases/redis-4.0.9.tar.gz

2. Extract the package into the install directory

tar xvf redis-4.0.9.tar.gz -C /usr/local/

3. cd /usr/local/redis-4.0.9

4. Compile and install

make

==================== If you see the following error

```
In file included from adlist.c:34:0:
zmalloc.h:50:31: fatal error: jemalloc/jemalloc.h: No such file or directory
 #include <jemalloc/jemalloc.h>
                               ^
compilation terminated.
make[1]: *** [adlist.o] Error 1
make[1]: Leaving directory `/usr/local/redis-4.0.9/src'
make: *** [all] Error 2
```

then build with make MALLOC=libc instead. This uses the C library's malloc rather than jemalloc.

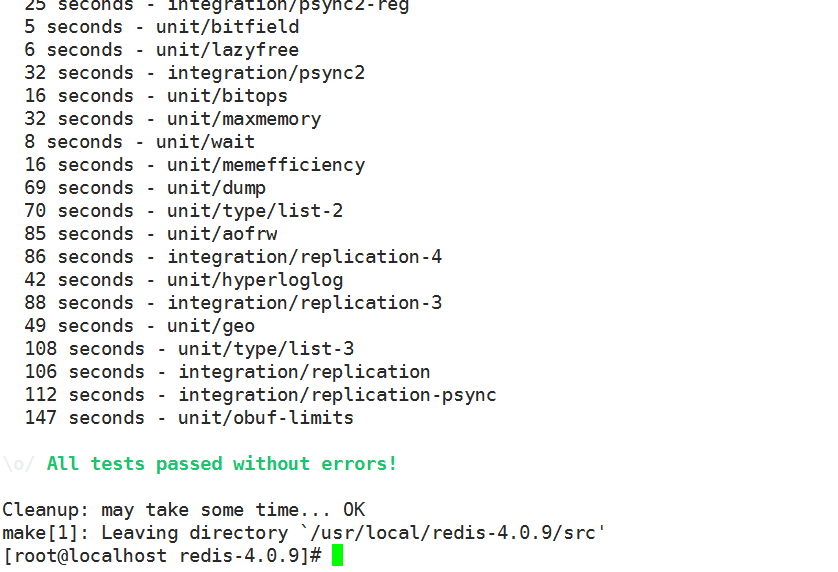

5. Check that the installation succeeded

6. make test

======================make test requires tcl: yum -y install tcl

7. The tests pass

=======================================================================================================

Shell operations

1. Connect to the Redis server

src/redis-cli # default address: 127.0.0.1:6379

src/redis-cli --help # help

src/redis-cli -h 192.168.209.145 # connect to a remote Redis server without authentication

src/redis-cli -h 192.168.209.145 -a passwd -p 6379 # connect to the given server with port=6379, password=passwd

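2. Insert data

==================

If you did not authenticate when connecting, run

auth ***** # ***** is the password

You can also authenticate at connect time with -a, as shown above.

==================

set key value # syntax

set age 20

3. Read data

get key # syntax

get age

"20"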
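Under the hood, redis-cli encodes each command you type as a RESP (REdis Serialization Protocol) array of bulk strings and sends it to the server, which listens on port 6379 by default. The following is a minimal stdlib sketch of that encoding, for illustration only. `encode_command` and `send_command` are hypothetical helper names; in practice the redis-py client used in the crawler code does this for you.

```python
import socket


def encode_command(*args: str) -> bytes:
    """Encode a command such as SET age 20 as a RESP array of bulk strings."""
    out = [f"*{len(args)}\r\n".encode()]  # array header: number of elements
    for arg in args:
        data = arg.encode("utf-8")
        # Bulk string: $<byte length>\r\n<bytes>\r\n
        out.append(b"$" + str(len(data)).encode() + b"\r\n" + data + b"\r\n")
    return b"".join(out)


def send_command(host: str, port: int, *args: str) -> bytes:
    """Send one command and return the raw reply bytes (single recv, no reply parsing)."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(encode_command(*args))
        return sock.recv(4096)


if __name__ == "__main__":
    print(encode_command("SET", "age", "20"))
    # prints b'*3\r\n$3\r\nSET\r\n$3\r\nage\r\n$2\r\n20\r\n'
```

The server answers SET with the simple string `+OK\r\n`. GET age comes back as the bulk string `$2\r\n20\r\n`, which redis-cli displays as "20".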

=======================================================================================================

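Configure redis.conf

Most of the configuration file is left at its defaults. The # comments below are explanations only. redis.conf does not accept comments after a directive on the same line, so put only the directive itself in the file.

Changes:

bind 192.168.209.159 # server IP; if this is 127.0.0.1, Redis cannot be reached remotely

protected-mode no # disable protected mode

requirepass **** # set the password for remote connections

Start Redis: src/redis-server starts it with the default settings xxxxx

src/redis-server redis.conf loads the configuration file at startup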
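Before starting the server with the edited file, you can sanity-check the three changed directives. The following is a rough sketch, assuming a simple whitespace split of each line. It ignores redis.conf's quoted-argument syntax, and `parse_redis_conf` and `remote_access_problems` are hypothetical names.

```python
def parse_redis_conf(text: str) -> dict:
    """Map each directive in redis.conf text to its arguments (last occurrence wins)."""
    conf = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):  # only whole-line comments are allowed
            continue
        name, *args = line.split()  # simplification: no quoted arguments
        conf[name.lower()] = args
    return conf


def remote_access_problems(conf: dict) -> list:
    """Check the three directives changed above and return human-readable problems."""
    problems = []
    bind = conf.get("bind", [])
    if bind and all(addr in ("127.0.0.1", "::1") for addr in bind):
        problems.append("bind is loopback-only")
    # protected-mode defaults to yes when the directive is absent
    if conf.get("protected-mode", ["yes"])[0].lower() != "no":
        problems.append("protected-mode is not set to no")
    if not conf.get("requirepass"):
        problems.append("requirepass is not set")
    return problems


if __name__ == "__main__":
    sample = "bind 192.168.209.159\nprotected-mode no\nrequirepass secret\n"
    print(remote_access_problems(parse_redis_conf(sample)))  # prints []
```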

=======================================================================================================

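Spider master: crawls the album URLs and stores them in Redis

The code below needs a few libraries: bs4, requests, redis and lxml.

Install them with pip.

I use the PyCharm IDE. These packages are basic essentials for any crawler.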

```python
#!/usr/bin/env python
# coding:utf-8
# @Time    : 2018/3/21 22:44
# @Author  : maomao
# @File    : Mzitumaster.py
# @Mail    : mail_maomao@163.com
import ast
import time

import requests
from bs4 import BeautifulSoup
from redis import Redis

con = Redis(host="192.168.209.145", port=6379, password="tellusrd")
baseUrl = "http://www.mzitu.com/"
URL = baseUrl + "all"


def getResponse(url):
    # Fetch a page and parse it with lxml
    contents = requests.get(url).text
    return BeautifulSoup(contents, 'lxml')


def genObjs(**kwargs):
    return kwargs


def addRedis(key, value):
    # Store the dict as its repr string: redis-py 3.x refuses to store a dict directly
    con.lpush(key, str(value))


def getRedis(key):
    # rpop pairs with lpush for FIFO order; literal_eval only parses literals, unlike eval()
    value = con.rpop(key)
    if value:
        return ast.literal_eval(value.decode('utf-8'))
    return None


def getImagePages(url):
    # The page count is taken from the second-to-last <span> in the pagination div
    soup = getResponse(url)
    pages = soup.find("div", attrs={'class': 'pagenavi'}).find_all('span')[-2].text
    return pages


def getImagesUrl():
    soup = getResponse(URL)
    alltag = soup.find_all("a")
    for tag in alltag:
        url = tag.get('href')
        if not url:  # skip <a> tags without an href
            continue
        preurl = url.split('/')[-1]
        if preurl:
            endurl = baseUrl + preurl
            page = getImagePages(endurl)
            data = genObjs(title=tag.text, url=endurl, page=page)
            addRedis("objs", data)


#### The two functions below are for my own testing
def writeHost(data):
    title = data['title']
    url = data['url']
    with open("mmurl.txt", "a+", encoding="utf-8") as f:
        f.write(title + " " + url + "\n")


def getValues():
    while True:
        datas = getRedis("objs")
        if datas:
            writeHost(datas)
        else:
            break


if __name__ == "__main__":
    print(time.ctime())
    getImagesUrl()
    # getValues()
    print(time.ctime())
```
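The master and slaves coordinate through a single Redis list. The master pushes each task onto the head with LPUSH, and slaves take tasks from the tail with RPOP. Together these give first-in, first-out order. Because each RPOP is a single atomic Redis command, every task goes to exactly one slave. The following sketch imitates that behaviour in memory. `FakeList` is a hypothetical stand-in for illustration, not part of redis-py.

```python
import ast
from collections import deque


class FakeList:
    """In-memory stand-in for one Redis list (hypothetical, for illustration only)."""

    def __init__(self):
        self.items = deque()

    def lpush(self, value: str) -> int:
        self.items.appendleft(value)  # LPUSH inserts at the head
        return len(self.items)  # like Redis, return the new length

    def rpop(self):
        return self.items.pop() if self.items else None  # RPOP removes from the tail


queue = FakeList()
for n in range(1, 4):
    task = {"title": f"album {n}", "url": f"http://example.com/{n}", "page": str(n)}
    queue.lpush(str(task))  # what the master stores

first = ast.literal_eval(queue.rpop())  # what a slave receives
print(first["title"])  # prints album 1
```

The slave loop stops as soon as the list is empty. If the master is still crawling at that point, Redis's blocking BRPOP command would let slaves wait for new tasks instead of exiting.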

Spider slave: takes target URLs from Redis and runs the download tasks

```python
#!/usr/bin/env python
# coding:utf-8
# @Time    : 2018/3/22 22:09
# @Author  : maomao
# @File    : Mzituspider.py
# @Mail    : mail_maomao@163.com
import ast

import requests
from bs4 import BeautifulSoup
from redis import Redis

con = Redis(host="192.168.209.145", port=6379, password="tellusrd")


def getResponse(url):
    contents = requests.get(url).text
    return BeautifulSoup(contents, 'lxml')


def getRedis(key):
    # Take one task from the tail of the list (the master pushes at the head)
    value = con.rpop(key)
    if value:
        # literal_eval only parses Python literals, unlike eval()
        return ast.literal_eval(value.decode('utf-8'))
    return None


def getValues():
    while True:
        datas = getRedis("objs")
        if datas:
            mmtitle = datas['title']
            page = datas['page']
            # Album pages are <album url>/1 ... <album url>/<page>
            for i in range(1, int(page) + 1):
                url = datas['url'] + "/" + str(i)
                contents = getResponse(url)
                imageurl = contents.find("div", attrs={"class": "main-image"}).find("img").get('src')
                print(imageurl)
                downImages(imageurl, url, mmtitle)
        else:
            break


def downImages(url, referer, title):
    # Forge a browser User-Agent and send the album page as the Referer
    headers = {
        'User-Agent': "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
        'Referer': referer,
    }
    image = requests.get(url, headers=headers, stream=True)
    name = url[-10:]  # last 10 characters of the image URL as the file name
    print("Downloading: ", title)
    with open(name, 'wb') as f:
        f.write(image.content)


if __name__ == "__main__":
    getValues()
```
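Two small details in the slave can be pulled out as helpers. First, the page URLs are built from the page count stored by the master. Second, a file name is derived from the image URL. `url[-10:]` can cut a name in the middle, or pick up a `/` when the real file name is shorter than 10 characters, in which case `open()` fails. Taking the last path segment is one alternative. Both `page_urls` and `image_filename` are hypothetical helper names for this sketch.

```python
import posixpath
from urllib.parse import urlsplit


def page_urls(album_url: str, page: str) -> list:
    """Album pages are <album_url>/1 ... <album_url>/<page>; page arrives as a string."""
    return [f"{album_url}/{i}" for i in range(1, int(page) + 1)]


def image_filename(image_url: str) -> str:
    """Use the last segment of the URL path (query string dropped) as the file name."""
    return posixpath.basename(urlsplit(image_url).path)


if __name__ == "__main__":
    print(page_urls("http://www.mzitu.com/123", "2"))
    print(image_filename("http://img.example.com/2018/03/a01.jpg?x=1"))  # prints a01.jpg
```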

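Note: everything above runs fine in testing. The final storage part is unfinished; I didn't feel like writing it.

My idea is as follows:

Create a separate folder for each Title and save that album's images into it.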
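Here is a minimal sketch of that per-Title folder idea, assuming the images should live under a common root directory. `album_dir` and `save_image` are hypothetical names, and the character filter only covers the characters Windows forbids in file names plus `/`.

```python
import os
import re
import tempfile


def album_dir(root: str, title: str) -> str:
    """Create (if needed) and return a folder for one album, named after its title."""
    # Replace characters that are not allowed in Windows/Unix file names
    safe = re.sub(r'[\\/:*?"<>|]', "_", title).strip() or "untitled"
    path = os.path.join(root, safe)
    os.makedirs(path, exist_ok=True)
    return path


def save_image(root: str, title: str, name: str, content: bytes) -> str:
    """Write image bytes into the album's folder and return the file path."""
    path = os.path.join(album_dir(root, title), name)
    with open(path, "wb") as f:
        f.write(content)
    return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(save_image(tmp, "some album", "a01.jpg", b"fake image bytes"))
```

In the slave, `downImages` could then call something like `save_image("images", title, name, image.content)` in place of `open(name, 'wb')`.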