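Third-party Python libraries used: bs4 (BeautifulSoup), requests, lxml (the parser handed to BeautifulSoup) and execjs (PyExecJS, which runs JavaScript from Python through an installed JS runtime such as Node.js).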

Target site: www.beiwo.tv

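Reading the page source shows how the site builds its Thunder (Xunlei) movie links. It passes a string embedded in the page through an encoding JS script. I copied that JS encoding code from the page into a file named encode.js. The scraper then uses the execjs library to call the script from Python, passing in the scraped string, and gets back the movie's Thunder download link.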
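The contents of encode.js are not shown here. Many Thunder link encoders follow one widely used scheme: wrap the URL as `"AA" + url + "ZZ"`, Base64-encode it, and prefix `thunder://`. The sketch below assumes the site's `ThunderEncode` does the same and reimplements it in pure Python. This is an assumption, not a reading of the site's script. The function name `thunder_encode` is hypothetical, and the UTF-8 byte encoding is also an assumption.

```python
import base64


def thunder_encode(url: str) -> str:
    """Build a thunder:// link, ASSUMING the common AA...ZZ + Base64 scheme.

    Hypothetical stand-in for ThunderEncode() in encode.js; it has not been
    verified against the site's actual script.
    """
    # Wrap the raw URL in the "AA"/"ZZ" markers used by the scheme
    wrapped = "AA" + url + "ZZ"
    # Base64-encode the bytes (UTF-8 is an assumption) and add the protocol
    payload = base64.b64encode(wrapped.encode("utf-8")).decode("ascii")
    return "thunder://" + payload
```

For example, `thunder_encode("ftp://example.com/movie.mkv")` returns a `thunder://` link. If it matches what `ctx.call('ThunderEncode', ...)` prints for the same input, the execjs and Node.js dependency could be dropped.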

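Capturing the traffic reveals the form fields that the site's search sends. The scraper builds the same `data` form and POSTs it to get the search results page, which contains the movie page URLs. It then parses each movie page as described above to extract the string to be encoded.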
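The sketch below uses only the standard library to show what the captured search form looks like on the wire. It builds the URL-encoded POST body without sending anything. The field names `typeid` and `wd` and the search URL come from MovieSearch.py. The value `'2'` for `typeid` is copied from that code and its meaning is not documented. `build_search_request` is a hypothetical helper.

```python
from urllib.parse import urlencode
from urllib.request import Request

SEARCH_URL = "http://www.beiwo.tv/index.php?s=vod-search"


def build_search_request(movie_name: str) -> Request:
    """Build (but do not send) the search POST used by MovieSearch.py."""
    form = {"typeid": "2", "wd": movie_name}
    # urlencode percent-encodes non-ASCII text as UTF-8, as requests does for data=
    body = urlencode(form).encode("ascii")
    return Request(
        SEARCH_URL,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )
```

Printing `build_search_request("test").data` gives the body that `requests.post(url, data=data)` sends for the same form. This is handy for comparing against the captured request when the search stops working.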

Source code:

```python
# ThunderUrl.py
import execjs


def echoDown(s):
    # Extract the raw (unencoded) Thunder links from the scraped string.
    # It looks like "name$url###name$url###", so split on "###" and keep the
    # part after the first "$" of every entry (the original kept only the last one).
    links = []
    for item in s.split("###"):
        parts = item.split("$")
        if len(parts) > 1:
            links.append(parts[1])
    return links


def getJs():
    # Read the whole JS encoding script as a string (assumes encode.js is UTF-8)
    with open("encode.js", "r", encoding="utf-8") as f:
        return f.read()


def getThunderUrl(s):
    """Encode every link found in s into a usable Thunder link and print it."""
    ctx = execjs.compile(getJs())  # compile the script once, reuse it per link
    for t_url in echoDown(s):
        ThunderUrl = ctx.call('ThunderEncode', t_url)
        print(ThunderUrl)
```
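To sanity-check the links printed by `getThunderUrl`, you can try decoding one back to its plain URL. The sketch assumes the common `thunder://` + Base64(`"AA" + url + "ZZ"`) scheme. The site's encode.js may do something else, in which case the marker check fails loudly instead of returning garbage. `thunder_decode` is a hypothetical helper.

```python
import base64


def thunder_decode(link: str) -> str:
    """Recover the original URL from a thunder:// link (ASSUMED AA...ZZ scheme)."""
    prefix = "thunder://"
    if not link.startswith(prefix):
        raise ValueError("not a thunder:// link")
    # Base64-decode the payload; UTF-8 text is an assumption
    raw = base64.b64decode(link[len(prefix):]).decode("utf-8")
    # The scheme wraps the URL in "AA" ... "ZZ"; anything else is unexpected
    if not (raw.startswith("AA") and raw.endswith("ZZ")):
        raise ValueError("missing AA/ZZ markers")
    return raw[2:-2]
```

If decoding a printed link gives back the string that `echoDown` extracted, the assumed scheme matches the site's script.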

```python
# MovieSearch.py
import re

import requests
from bs4 import BeautifulSoup

HEADERS = {
    'Cache-Control': 'max-age=0',
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
    'Accept-Encoding': 'gzip, deflate',
    'Accept-Language': 'zh-CN,zh;q=0.8',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/54.0.2840.99 Safari/537.36',
}


def MovieSeach(movieName):
    # Search for movieName and collect the result page URLs in movieUrlSet
    data = {'typeid': '2', 'wd': movieName}
    url = 'http://www.beiwo.tv/index.php?s=vod-search'
    # The headers were defined before but never sent; pass them explicitly
    response = requests.post(url, data=data, headers=HEADERS)
    response.raise_for_status()  # raise on HTTP errors instead of exit(0)
    response.encoding = response.apparent_encoding
    soup = BeautifulSoup(response.text, 'lxml')
    movieUrlSet = set()
    for movie in soup.find_all('a', href=True):  # skip <a> tags without href
        temp = re.findall(r'(/vod/.*/)', movie['href'])
        if temp:
            movieUrlSet.add('http://www.beiwo.tv' + temp[0])
    return movieUrlSet


def UrlParser(url):
    """Fetch a movie page and return its unencoded Thunder link strings
    (the GvodUrls3 variable inside the page's <script> tags)."""
    response = requests.get(url, headers=HEADERS)
    response.raise_for_status()
    response.encoding = response.apparent_encoding
    soup = BeautifulSoup(response.text, 'lxml')
    ThunderUrls = soup.find_all('script')
    Thunders = re.findall(r'var GvodUrls3 = "(.*###)";', str(ThunderUrls))
    return Thunders
```
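The two regular expressions in MovieSearch.py do most of the parsing, so they can be tried offline. The sketch below runs them on small hand-written HTML fragments. The fragments are hypothetical, and the real pages may be structured differently. To stay standard-library only, it swaps BeautifulSoup for a plain regex over `href` attributes. The helper names `movie_links` and `gvod_payloads` are illustrative.

```python
import re

# Hypothetical fragments imitating what MovieSearch.py looks for
SEARCH_HTML = '<a href="/vod/12345/">Movie</a><a href="/about.html">About</a><a name="top"></a>'
DETAIL_HTML = '<script>var GvodUrls3 = "HD$ftp://example.com/a.mkv###";</script>'


def movie_links(html: str, base: str = "http://www.beiwo.tv") -> set:
    """Collect absolute URLs for hrefs containing a /vod/.../ path."""
    links = set()
    # Anchors without an href attribute never match, so they are skipped
    for href in re.findall(r'href="([^"]*)"', html):
        match = re.search(r"/vod/.*/", href)
        if match:
            links.add(base + match.group(0))
    return links


def gvod_payloads(html: str) -> list:
    """Return the raw GvodUrls3 strings, each still ending in ###."""
    return re.findall(r'var GvodUrls3 = "(.*###)";', html)
```

On these fragments, `movie_links` keeps only the `/vod/12345/` page. `gvod_payloads` returns the `name$url###` string that `echoDown` later splits.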

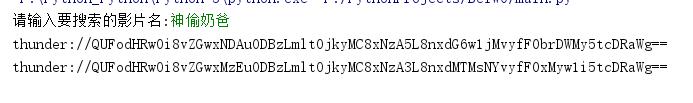

```python
# main.py
from ThunderUrl import getThunderUrl
from MovieSearch import MovieSeach, UrlParser

if __name__ == "__main__":
    name = input('Enter the movie title to search for: ')
    UrlSet = MovieSeach(name)
    for url in UrlSet:
        # Each movie page may hold several GvodUrls3 strings
        Thunders = UrlParser(url)
        for Thunder in Thunders:
            getThunderUrl(Thunder)
```

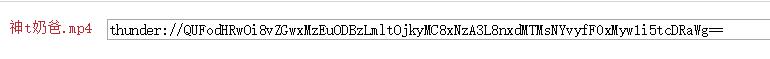

Run results:

If this infringes any rights, contact me and it will be removed.