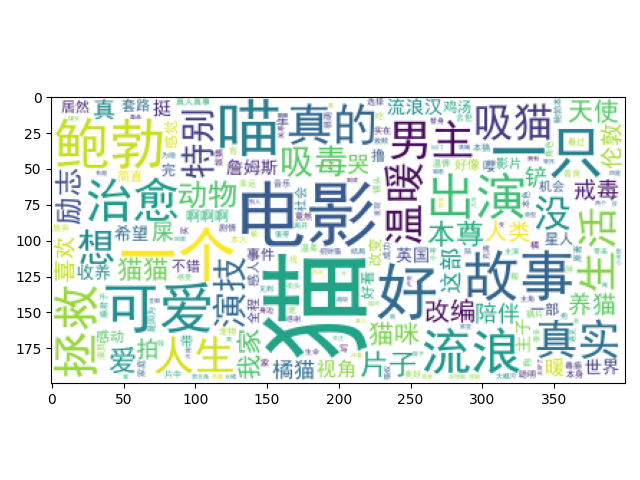

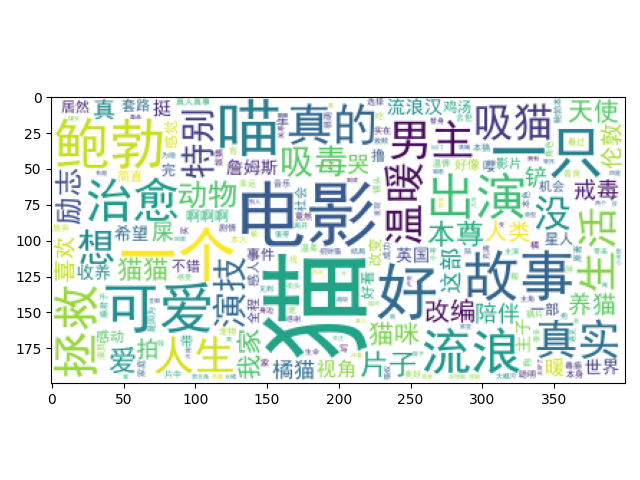

没事弄着玩的,爬取的是电影《流浪猫鲍勃》的电影评价,说是有1W多评价,实际只有500条左右,估计是引用的也算进去了

用的是python scrapy框架,安装部分就省略了

import time

import scrapy

from scrapy.selector import Selector

from ..items import DoubanItem

# 模拟请求头

headers = {

'User-Agent': "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36"}

class QuotesSpider(scrapy.Spider):

name = "quotes"

allowed_domains = ['movie.douban.com']

start_urls = ['https://movie.douban.com/subject/26685451/comments?start=500&limit=20&status=P&sort=new_score']

number = 0

def parse(self, response):

item = DoubanItem()

for v in response.xpath(

'//div[@class="comment-item "]/div[@class="comment"]'):

item['name'] = v.xpath('h3/span[@class="comment-info"]/a/text()').get()

item['time'] = str.strip(

v.xpath('h3/span[@class="comment-info"]/span[@class="comment-time "]/text()').get())

item['evaluate'] = v.xpath('p[@class=" comment-content"]/span[@class="short"]/text()').get()

item['star'] = v.css("span").xpath('@title').get()

yield item

next_link_end = response.xpath("//div[@class='center']/a[@class='next']/@href").get()

next_link = response.xpath("//div[@class='center']/a[@class='next']/text()").get()

if next_link == '后页 >':

time.sleep(1)

self.number = self.number + 20

next_like = 'https://movie.douban.com/subject/26685451/comments' + next_link_end

yield scrapy.Request(url=next_like, callback=self.parse, headers={

'User-Agent': "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36",

"Referer": "https://movie.douban.com/subject/26685451/comments?start={}&limit=20&status=P&sort=new_score".format(

self.number),

"Cookie": '你的cookie'}) # 豆瓣有限制,没登录只等爬取200条左右的数据

items.py文件

import scrapy

class DoubanItem(scrapy.Item):

# define the fields for your item here like:

name = scrapy.Field()

time = scrapy.Field()

star = scrapy.Field()

evaluate = scrapy.Field()

pass

启动命令 scrapy crawl quotes -o list.csv 直接保存为list.csv文件

后续我把文件存进了数据库,通过数据库读取的

#! /usr/bin/env python

# -*- coding:utf-8 -*-

import pymysql, jieba, re

import pandas as pd

import matplotlib.pyplot as plt

from wordcloud import WordCloud

conn = pymysql.connect(host='*', user='root', passwd="*", db='demo', port=3306, charset='utf8')

cur = conn.cursor(cursor=pymysql.cursors.DictCursor)

sql = "select * from douban"

cur.execute(sql)

cur.close()

conn.close()

# 将列表中的数据转换为字符串

allComment = ''

for v in cur.fetchall():

allComment = allComment + v['evaluate'].strip(" ")

# 使用正则表达式去除标点符号

pattern = re.compile(r'[u4e00-u9fa5]+')

filterdata = re.findall(pattern, allComment)

cleaned_comments = ''.join(filterdata)

# 使用结巴分词进行中文分词

segment = jieba.lcut(cleaned_comments)

comment = pd.DataFrame({'segment': segment})

# 去掉停用词 chineseStopWords.txt 自己网上下m,qu

stopwords = pd.read_csv("./chineseStopWords.txt", index_col=False, quoting=3, sep=" ",

names=['stopword'], encoding='GBK')

comment = comment[~comment.segment.isin(stopwords.stopword)]

# 统计词频

comment_fre = comment.groupby(by='segment').agg(

计数=pd.NamedAgg(column='segment', aggfunc='size')).reset_index().sort_values(

by='计数', ascending=False)

# 用词云进行显示

wordcloud = WordCloud(

font_path="你的文件地址/simhei.ttf",

background_color="white", max_font_size=80)

word_frequence = {x[0]: x[1] for x in comment_fre.head(1000).values}

word_frequence_list = []

for key in word_frequence:

temp = (key, word_frequence[key])

word_frequence_list.append(temp)

wordcloud = wordcloud.fit_words(dict(word_frequence_list))

plt.imshow(wordcloud)

plt.show()

最终结果