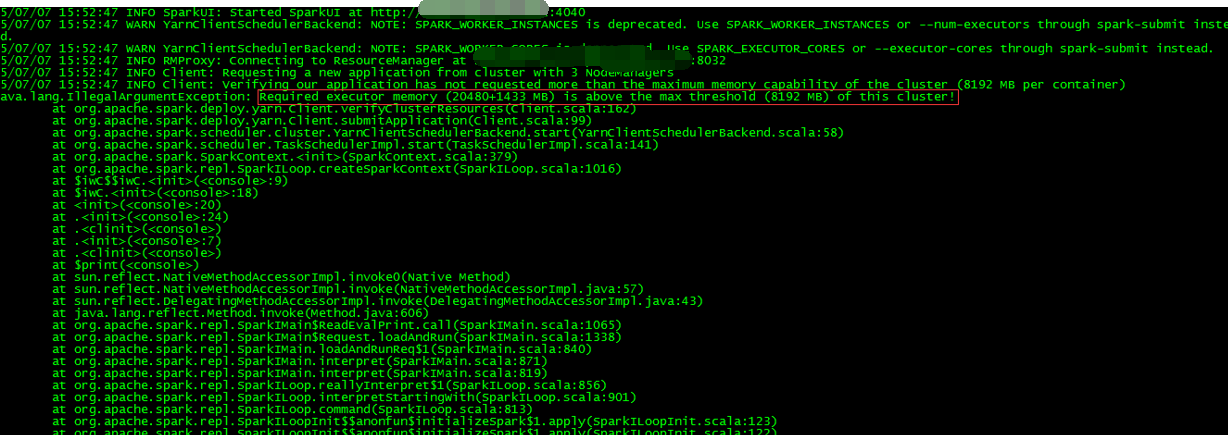

With Spark configured using the parameters below, starting spark-shell fails with an error:

export SPARK_WORKER_CORES=5

export SPARK_WORKER_INSTANCES=4

export SPARK_WORKER_MEMORY=50G

export SPARK_WORKER_WEBUI_PORT=8081

export SPARK_EXECUTOR_CORES=2

export SPARK_EXECUTOR_MEMORY=20G

15/07/07 15:52:47 INFO Client: Verifying our application has not requested more than the maximum memory capability of the cluster (8192 MB per container)

java.lang.IllegalArgumentException: Required executor memory (20480+1433 MB) is above the max threshold (8192 MB) of this cluster!

at org.apache.spark.deploy.yarn.Client.verifyClusterResources(Client.scala:162)
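The numbers in the error message can be reproduced with a little arithmetic. The sketch below is not Spark's actual code. It assumes the per-executor overhead is 7% of the executor memory with a 384 MB floor. Those two constants are an assumption inferred from the log (20480 × 0.07 ≈ 1433), and the function names are hypothetical.

```python
# Assumed constants, inferred from the log line "20480+1433 MB".
# They may differ between Spark versions or be overridden by configuration.
OVERHEAD_PERCENT = 7        # overhead as a percentage of executor memory
OVERHEAD_MIN_MB = 384       # lower bound on the overhead


def executor_overhead_mb(executor_memory_mb):
    """Off-heap overhead added on top of the executor heap, in MB."""
    return max(executor_memory_mb * OVERHEAD_PERCENT // 100, OVERHEAD_MIN_MB)


def required_container_mb(executor_memory_mb, max_container_mb):
    """Return the total memory requested per executor container.

    Raises ValueError when the request exceeds the largest container
    the cluster will grant, mirroring the check that failed above.
    """
    overhead = executor_overhead_mb(executor_memory_mb)
    required = executor_memory_mb + overhead
    if required > max_container_mb:
        raise ValueError(
            "Required executor memory (%d+%d MB) is above the max threshold (%d MB)"
            % (executor_memory_mb, overhead, max_container_mb)
        )
    return required


if __name__ == "__main__":
    try:
        required_container_mb(20 * 1024, 8192)   # SPARK_EXECUTOR_MEMORY=20G
    except ValueError as err:
        print(err)
```

With `SPARK_EXECUTOR_MEMORY=20G` the request is 20480 MB plus overhead, far above the 8192 MB container limit. Either the executor memory has to come down or the YARN limit has to go up.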

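Check the YARN configuration in the Cloudera management console (the value was 8 before the change; it was raised to 32).

Also change the NodeManager's maximum memory (8 before the change, raised to 24).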

In addition, because the job is submitted to YARN, several YARN parameters are key here; see YARN's memory and CPU configuration:

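yarn.app.mapreduce.am.resource.mb: the amount of memory the MapReduce ApplicationMaster requests; the default is 1536 MB

yarn.nodemanager.resource.memory-mb: the total memory on a node that the NodeManager can hand out to containers; the default is 8192 MB

yarn.scheduler.minimum-allocation-mb: the smallest amount of memory a single container can request from the scheduler; the default is 1024 MB

yarn.scheduler.maximum-allocation-mb: the largest amount of memory a single container can request from the scheduler; the default is 8192 MB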
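How these limits interact can be sketched as follows. This is a simplified model, not YARN's scheduler code. It assumes requests are rounded up to a multiple of yarn.scheduler.minimum-allocation-mb (some schedulers use a separate increment setting). It also assumes the two values changed in the console correspond to yarn.scheduler.maximum-allocation-mb (32 GB) and yarn.nodemanager.resource.memory-mb (24 GB). The function names are hypothetical.

```python
def round_up_to_multiple(value_mb, step_mb):
    """Round value_mb up to the next multiple of step_mb."""
    return -(-value_mb // step_mb) * step_mb


def containers_per_node(request_mb, min_alloc_mb, max_alloc_mb, node_mb):
    """Estimate how many containers of this size fit on one NodeManager.

    Returns 0 when the request is larger than the scheduler's
    per-container maximum or larger than the node's memory.
    """
    granted = round_up_to_multiple(request_mb, min_alloc_mb)
    if granted > max_alloc_mb:
        return 0                      # rejected: above maximum-allocation-mb
    return node_mb // granted         # limited by nodemanager.resource.memory-mb


if __name__ == "__main__":
    request = 20480 + 1433            # executor memory + overhead from the log
    # Default limits: the request cannot be satisfied at all.
    print(containers_per_node(request, 1024, 8192, 8192))
    # Assumed limits after the change: 32 GB per container, 24 GB per node.
    print(containers_per_node(request, 1024, 32 * 1024, 24 * 1024))
```

Under these assumptions a 20 GB executor is rounded up to a 22528 MB container, so a 24 GB NodeManager can host only one of them.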

Spark on YARN memory allocation:

http://www.tuicool.com/articles/YVFVRf3

http://www.sjsjw.com/107/001051MYM028913/