Download a long Chinese article.

Read the text to be analyzed from a file.

news = open('gzccnews.txt', 'r', encoding='utf-8').read()

Install jieba and use it for Chinese word segmentation.

pip install jieba

import jieba

jieba.lcut(news)

Generate the word-frequency counts.

Sort the counts.
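The counting and sorting steps can be sketched as follows. This is a minimal illustration, assuming the text has already been segmented; the hard-coded `tokens` list is a hypothetical stand-in for the list returned by `jieba.lcut`.

```python
from collections import Counter

# Hypothetical stand-in for the token list returned by jieba.lcut(news)
tokens = ["学校", "学生", "学校", "的", "活动", "学生", "学校"]

# Count how many times each token occurs
freq = Counter(tokens)

# Sort by descending count; ties are broken by the word itself
ranked = sorted(freq.items(), key=lambda item: (-item[1], item[0]))
print(ranked)
```

Counting the tokens themselves, rather than calling `str.count` on the raw text, avoids counting a word that merely appears as a substring of a longer word.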

Exclude grammatical words: pronouns, articles, and conjunctions.

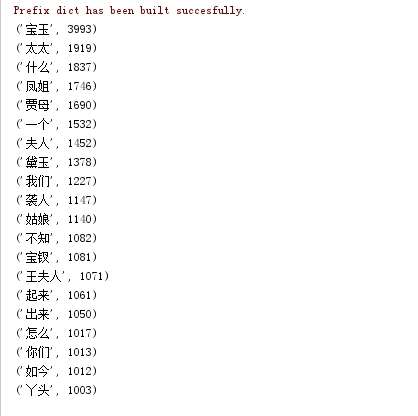
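One possible way to do the exclusion is to filter the token list against a set of stop words before counting. The `stop_words` set and the `remove_stop_words` helper below are hypothetical and only illustrate the idea; a real stop-word list would be much longer.

```python
# Hypothetical stop-word set (pronouns, conjunctions, particles)
stop_words = {"的", "了", "和", "我", "他", "她", "但是", "因为"}


def remove_stop_words(tokens, stop_words):
    """Return tokens with stop words and whitespace-only tokens removed."""
    return [t for t in tokens if t.strip() and t not in stop_words]


# Hypothetical stand-in for the output of jieba.lcut
tokens = ["我", "和", "他", "参加", "了", "学校", "的", "活动", "\n"]
print(remove_stop_words(tokens, stop_words))
```

Using a set keeps each membership check fast, which matters when the article is long.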

Output the 20 most frequent words (TOP 20).
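As a rough sketch of this step, `collections.Counter.most_common` returns the most frequent items directly. The `top_n` helper and the sample `tokens` are assumptions made for illustration, not part of the original code.

```python
from collections import Counter


def top_n(tokens, n=20):
    """Return the n most frequent tokens as (word, count) pairs."""
    return Counter(tokens).most_common(n)


# Hypothetical stand-in for a segmented and filtered token list
tokens = ["新闻", "学院", "新闻", "学生", "学院", "新闻"]
for word, count in top_n(tokens, n=2):
    print(word, count)
```

If the text contains fewer than `n` distinct words, `most_common` simply returns all of them, so no index check is needed.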

Code

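    import jieba

    # Read the whole text into a string
    with open('novel.txt', 'r', encoding='utf-8') as file:
        novel = file.read()

    # Strip punctuation before segmentation
    punctuation = '。,;!?、'
    for p in punctuation:
        novel = novel.replace(p, '')

    # Segment the text, then count every token longer than one character
    dic = dict()
    for word in jieba.lcut(novel):
        if len(word) != 1:
            dic[word] = dic.get(word, 0) + 1

    # Drop unwanted tokens; add the words to exclude to this set
    del_word = {' '}
    for word in del_word:
        if word in dic:
            del dic[word]

    # Sort by frequency, highest first, and print the top 20
    dic = sorted(dic.items(), key=lambda x: x[1], reverse=True)
    for item in dic[:20]:
        print(item)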

The screenshot is shown below.