Error 1:

ERROR client.RemoteDriver: Failed to start SparkContext: java.lang.IllegalArgumentException: Executor memory 456340275 must be at least 471859200.

Please increase executor memory using the --executor-memory option or spark.executor.memory in Spark configuration

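Solution: search for the spark.executor.memory setting and raise it to a value within the usable range, that is, no smaller than the 471859200 bytes (450 MiB) named in the error message.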

Error 2:

Caused by: java.lang.IllegalArgumentException:

Executor memory 456340275 must be at least 471859200.

Please increase executor memory using the --executor-memory option or spark.executor.memory in Spark configuration

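Solution:

The value of spark.executor.memory (the maximum Java heap size of a Spark executor) is too small. Set spark.executor.memory to a value at or above the minimum stated in the error message (at least 471859200).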

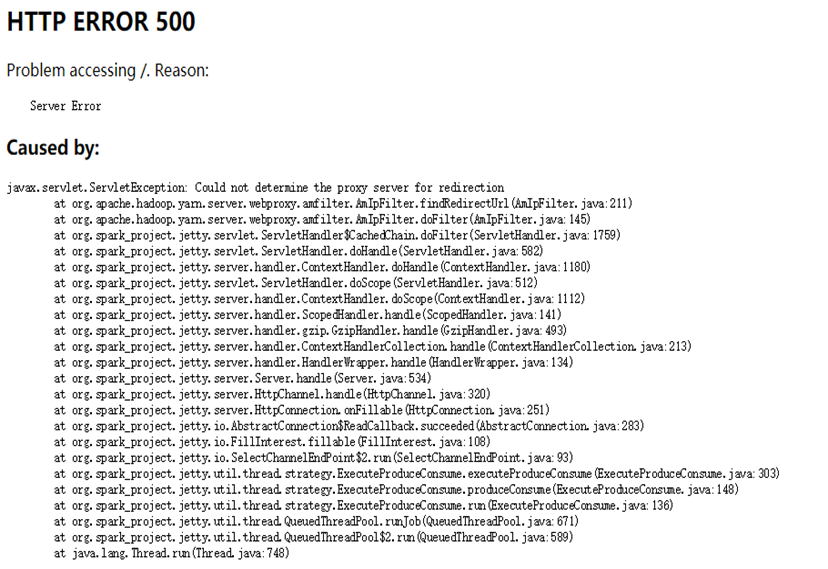
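
The byte counts in the error message are easier to work with once converted to MiB. The sketch below is a minimal illustration in POSIX sh; the variable names are hypothetical, and the two numbers are copied from the log line above. As far as I know, the 450 MiB floor comes from Spark requiring 1.5 times its 300 MiB of reserved system memory, but treat that as an assumption and check it against your Spark version.

```shell
#!/bin/sh
# Values copied from the error message in this post.
configured_bytes=456340275
required_bytes=471859200

# Convert the required minimum to MiB (1 MiB = 1048576 bytes).
required_mib=$((required_bytes / 1048576))
echo "minimum: ${required_mib} MiB"

# Compare the configured value with the minimum Spark asks for.
if [ "$configured_bytes" -lt "$required_bytes" ]; then
    echo "spark.executor.memory is too small; set it to at least ${required_mib}m"
fi
```

Any value at or above 450m passes the check. The spark-sql command later in this post uses 1g, which leaves comfortable headroom.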

Error 3:

With YARN HA enabled, a Hive on YARN job fails to return a result and reports the following error:

Caused by: javax.servlet.ServletException: Could not determine the proxy server for redirection

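Solution: disable YARN HA, so that ResourceManager runs on a single master node only. In practice, Hive on YARN jobs usually still run and return correct results with YARN HA enabled, but the same error is still reported.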

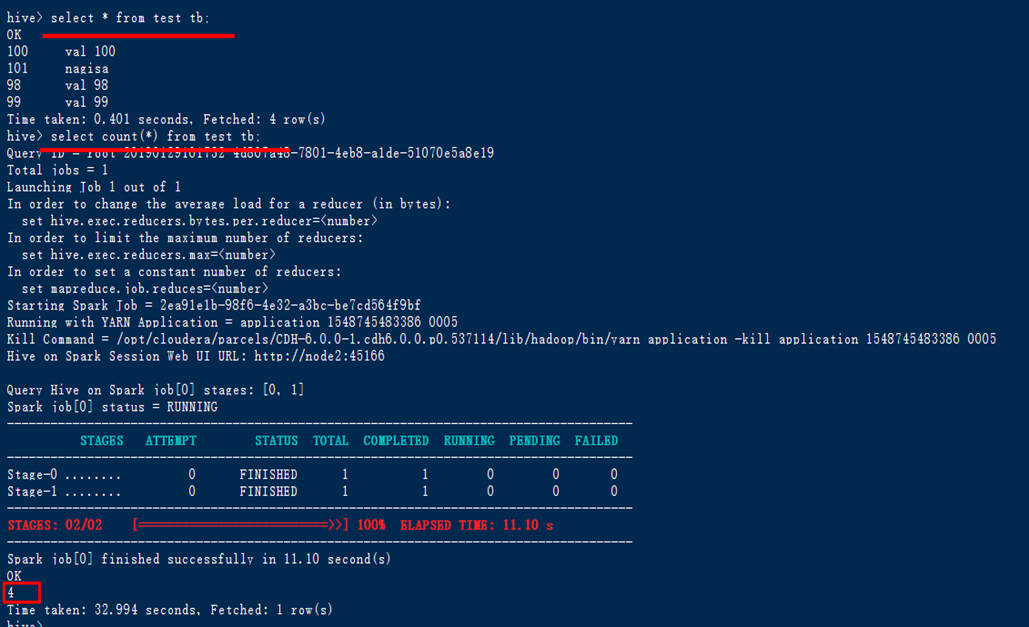

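Error 4:

With YARN HA enabled (ResourceManager deployed on both node1 and node2), Hive on Spark programs run successfully and return correct results, but the log for the program still reports the error: Could not determine the proxy server for redirection. The following statements, for example, all trigger it: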

select * from test_tb;

select count(*) from test_tb;

insert into test_tb values(2,'ushionagisa');

Solution:

Define the job submission command in a script:

Default Hive database: hdfs://nameservice1/user/hive/warehouse

spark.master: spark://master:7077

/root/spark/bin/spark-sql --master spark://node1:7077 --executor-memory 1g --total-executor-cores 2 --conf spark.sql.warehouse.dir=hdfs://nameservice1/user/hive/warehouse
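
One way to put the command above into a script is sketched below, assuming POSIX sh. The function name run_sql and the SPARK_SQL override variable are hypothetical and exist only for illustration; the path, master URL, and warehouse directory are copied from the command above. The -e option of spark-sql executes a quoted query string.

```shell
#!/bin/sh
# Path to spark-sql, copied from the command in this post.
# The SPARK_SQL variable is a hypothetical override for other install locations.
SPARK_SQL="${SPARK_SQL:-/root/spark/bin/spark-sql}"

# run_sql (hypothetical helper): submit one SQL string with the options above.
run_sql() {
    # $1 is the SQL text to execute
    "$SPARK_SQL" \
        --master spark://node1:7077 \
        --executor-memory 1g \
        --total-executor-cores 2 \
        --conf spark.sql.warehouse.dir=hdfs://nameservice1/user/hive/warehouse \
        -e "$1"
}

# Example call, commented out so that sourcing this file has no side effects:
# run_sql "select count(*) from test_tb;"
```

Because this submits to the Spark master directly rather than through YARN, it presumably avoids the ResourceManager proxy that raises the redirection error. That reading is an inference from the command, not something stated in the logs.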