参考https://blog.csdn.net/chentravelling/article/details/53558096

前言

使用透视变换可以完成2D到3D的转换,可以简单的想象为将照片上的人脸图像按照一定的角度进行多点拉扯形成3D图像,然后根据角度来判断姿态。使用的方法原理为使用2D平面上人脸的特征点和3D空间内对应的坐标点,按照求解pnp问题的思路。找到一个映射关系,从而估计头部的姿态。

一、基础知识

1.相机坐标系到像素坐标系

如图1,P(Xc,Yc,Zc)为相机成像中的实体点坐标,而p(x,y)为其对应的成像平面上的点的坐标。

通过相似三角形可以得到图2的等价关系。

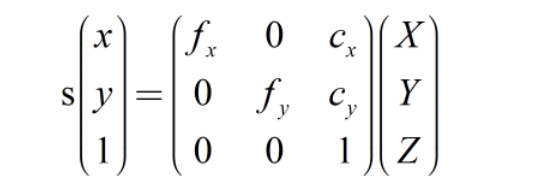

可以改写成对应的矩阵形式如图3,中间的矩阵即相机的内参:

2.相机坐标系到世界坐标系

世界坐标系和相机坐标系都是三维坐标系,只是对应的x,y,z轴的方向不同,可以通过旋转将二者的方向统一。还有就是原点位置(o点)不同,这个可以通过平移来移到同一个位置。所以二者可以通过旋转矩阵R和平移矩阵T来进行坐标转换。

由1,2可知像素坐标到世界坐标的转换可以归为如图5所示的公式:

二、求解步骤

1.2D人脸关键点检测

获取2D人脸关键点参考 Dlib人脸特征点检测;获取到的68个点的序号如图6。

2.3D模型匹配

使用的3D通用头部刚体模型,即在世界坐标系中,建立了一个头部的模型,该模型在世界坐标系中的各个点的坐标是固定的。我们可以通过在Dlib中检测的特征点,找到在该3D模型中对应的世界坐标系,例如2D中的鼻尖坐标为(u,v),我们可以使用该模型中的鼻尖位置的3D坐标为(Uc,Vc,Wc),从而形成一个点对。当有足够的点对时,我们就可以求解出对应的R矩阵和T矩阵,从而进行姿态估计(pnp求解问题不是该文重点,有好奇的可以自行百度,opencv提供了solvepnp函数来解决PnP问题)。

如下代码为3D通用刚体模型中的14个点的坐标:

//对应Dlib上点的序号为18, 22, 23, 27, 37, 40, 43, 46, 32, 36, 49, 55, 58, 9

pts_14 = np.float32([[6.825897, 6.760612, 4.402142],

[1.330353, 7.122144, 6.903745],

[-1.330353, 7.122144, 6.903745],

[-6.825897, 6.760612, 4.402142],

[5.311432, 5.485328, 3.987654],

[1.789930, 5.393625, 4.413414],

[-1.789930, 5.393625, 4.413414],

[-5.311432, 5.485328, 3.987654],

[2.005628, 1.409845, 6.165652],

[-2.005628, 1.409845, 6.165652],

[2.774015, -2.080775, 5.048531],

[-2.774015, -2.080775, 5.048531],

[0.000000, -3.116408, 6.097667],

[0.000000, -7.415691, 4.070434]])

3.求解3D点和对应2D点的转换关系

Opencv提供的方法:

//求取旋转向量和平移向量(求解PNP问题)

cv::solvePnP(model_points, image_points, camera_matrix, dist_coeffs, rotation_vector, translation_vector);

//罗德里格斯公式将旋转向量转移为旋转矩阵rotation_matrix

cv::Rodrigues(rotation_vector, rotation_matrix);

//形成R|T矩阵 pose_mat

cv::hconcat(rotation_matrix, translation_vector, pose_mat);

//算出欧拉角euler_angle

cv::decomposeProjectionMatrix(pose_mat, out_intrinsics, out_rotation, out_translation, cv::noArray(), cv::noArray(), cv::noArray(), euler_angle);

算欧拉角的代码如下(示例):

import cv2

import numpy as np

from PIL import Image

'模拟相机内参'

img_size=(640, 480)

focal_length = img_size[0]

camera_center = (img_size[1] / 2,img_size[0] / 2)

cam_matrix = np.array(

[[focal_length, 0, camera_center[0]],

[0, focal_length,camera_center[1]],

[0, 0, 1]], dtype="double")

'无畸变'

dist_coeffs = np.zeros((4, 1))

'头部三维通用模型关键点坐标'

object_pts_6 = np.array([

(0.0, 0.0, 0.0), # Nose tip 34

(0.0, -330.0, -65.0), # Chin 9

(-225.0, 170.0, -135.0), # Left eye left corner 46

(225.0, 170.0, -135.0), # Right eye right corne 37

(-150.0, -150.0, -125.0), # Left Mouth corner 55

(150.0, -150.0, -125.0) # Right mouth corner 49

], dtype=float) / 4.5

object_pts_14 = np.float32([[6.825897, 6.760612, 4.402142],

[1.330353, 7.122144, 6.903745],

[-1.330353, 7.122144, 6.903745],

[-6.825897, 6.760612, 4.402142],

[5.311432, 5.485328, 3.987654],

[1.789930, 5.393625, 4.413414],

[-1.789930, 5.393625, 4.413414],

[-5.311432, 5.485328, 3.987654],

[2.005628, 1.409845, 6.165652],

[-2.005628, 1.409845, 6.165652],

[2.774015, -2.080775, 5.048531],

[-2.774015, -2.080775, 5.048531],

[0.000000, -3.116408, 6.097667],

[0.000000, -7.415691, 4.070434]])

object_pts_68 = np.array([

[-73.393523, -29.801432, -47.667532],

[-72.775014, -10.949766, -45.909403],

[-70.533638, 7.929818, -44.84258 ],

[-66.850058, 26.07428 , -43.141114],

[-59.790187, 42.56439 , -38.635298],

[-48.368973, 56.48108 , -30.750622],

[-34.121101, 67.246992, -18.456453],

[-17.875411, 75.056892, -3.609035],

[ 0.098749, 77.061286, 0.881698],

[ 17.477031, 74.758448, -5.181201],

[ 32.648966, 66.929021, -19.176563],

[ 46.372358, 56.311389, -30.77057 ],

[ 57.34348 , 42.419126, -37.628629],

[ 64.388482, 25.45588 , -40.886309],

[ 68.212038, 6.990805, -42.281449],

[ 70.486405, -11.666193, -44.142567],

[ 71.375822, -30.365191, -47.140426],

[-61.119406, -49.361602, -14.254422],

[-51.287588, -58.769795, -7.268147],

[-37.8048 , -61.996155, -0.442051],

[-24.022754, -61.033399, 6.606501],

[-11.635713, -56.686759, 11.967398],

[ 12.056636, -57.391033, 12.051204],

[ 25.106256, -61.902186, 7.315098],

[ 38.338588, -62.777713, 1.022953],

[ 51.191007, -59.302347, -5.349435],

[ 60.053851, -50.190255, -11.615746],

[ 0.65394 , -42.19379 , 13.380835],

[ 0.804809, -30.993721, 21.150853],

[ 0.992204, -19.944596, 29.284036],

[ 1.226783, -8.414541, 36.94806 ],

[-14.772472, 2.598255, 20.132003],

[ -7.180239, 4.751589, 23.536684],

[ 0.55592 , 6.5629 , 25.944448],

[ 8.272499, 4.661005, 23.695741],

[ 15.214351, 2.643046, 20.858157],

[-46.04729 , -37.471411, -7.037989],

[-37.674688, -42.73051 , -3.021217],

[-27.883856, -42.711517, -1.353629],

[-19.648268, -36.754742, 0.111088],

[-28.272965, -35.134493, 0.147273],

[-38.082418, -34.919043, -1.476612],

[ 19.265868, -37.032306, 0.665746],

[ 27.894191, -43.342445, -0.24766 ],

[ 37.437529, -43.110822, -1.696435],

[ 45.170805, -38.086515, -4.894163],

[ 38.196454, -35.532024, -0.282961],

[ 28.764989, -35.484289, 1.172675],

[-28.916267, 28.612716, 2.24031 ],

[-17.533194, 22.172187, 15.934335],

[ -6.68459 , 19.029051, 22.611355],

[ 0.381001, 20.721118, 23.748437],

[ 8.375443, 19.03546 , 22.721995],

[ 18.876618, 22.394109, 15.610679],

[ 28.794412, 28.079924, 3.217393],

[ 19.057574, 36.298248, 14.987997],

[ 8.956375, 39.634575, 22.554245],

[ 0.381549, 40.395647, 23.591626],

[ -7.428895, 39.836405, 22.406106],

[-18.160634, 36.677899, 15.121907],

[-24.37749 , 28.677771, 4.785684],

[ -6.897633, 25.475976, 20.893742],

[ 0.340663, 26.014269, 22.220479],

[ 8.444722, 25.326198, 21.02552 ],

[ 24.474473, 28.323008, 5.712776],

[ 8.449166, 30.596216, 20.671489],

[ 0.205322, 31.408738, 21.90367 ],

[ -7.198266, 30.844876, 20.328022]])

reprojectsrc = np.float32 ([[10.0, 10.0, 10.0],

[10.0, -10.0, 10.0],

[-10.0, 10.0, 10.0],

[-10.0, -10.0, 10.0]])

def get_head_pose(shape,point_number):

'''即图像坐标系中点的坐标从face_landmark_localization的检测结果抽取姿态估计需要的点坐标'''

if point_number==14:

image_pts = np.float32([shape[17], shape[21], shape[22], shape[26], shape[36],

shape[39], shape[42], shape[45], shape[31], shape[35],

shape[48], shape[54], shape[57], shape[8]])

'函数solvepnp接收一组对应的3D坐标和2D坐标,以及相机内参camera_matrix和dist_coeffs进行反推图片的外参'

_, rotation_vec, translation_vec = cv2.solvePnP(object_pts_14, image_pts, cam_matrix, dist_coeffs)

elif point_number==6:

image_pts = np.float32([shape[34], shape[9],

shape[46], shape[37], shape[55], shape[49]])

_, rotation_vec, translation_vec = cv2.solvePnP(object_pts_6, image_pts, cam_matrix, dist_coeffs)

else:

image_pts = np.float32([shape])

_, rotation_vec, translation_vec = cv2.solvePnP(object_pts_68, image_pts, cam_matrix, dist_coeffs)

'函数projectPoints根据所给的3D坐标和已知的几何变换来求解投影后的2D坐标'

reprojectdst, _ = cv2.projectPoints(reprojectsrc, rotation_vec, translation_vec, cam_matrix,

dist_coeffs)

reprojectdst = tuple(map(tuple, reprojectdst.reshape(4, 2)))

# calc euler angle

rotation_mat, _ = cv2.Rodrigues(rotation_vec)

pose_mat = cv2.hconcat((rotation_mat, translation_vec))

_, _, _, _, _, _, euler_angle = cv2.decomposeProjectionMatrix(pose_mat)

return reprojectdst, euler_angle

算出了欧拉角就知道了头部相对于x,y,z轴的角度,然后根据角度可以估计对应的头部姿态。

X:上负下正 euler_angle[0][0]

Y:左负有正 euler_angle[1][0]

Z:左正右负 euler_angle[2][0]

三、结果图:

总结

建议使用14个点进行检测,6个点求解的误差过大,而68个点人脸轮廓部位的检测可能不是很准,计算出来的旋转矩阵和平移矩阵可能偏差过大,而且计算速度太慢。可能还会有其他的影响估计的准确度,在后续对特定相机标定后会继续进行测试。