# Word Frequency Count: English Novel

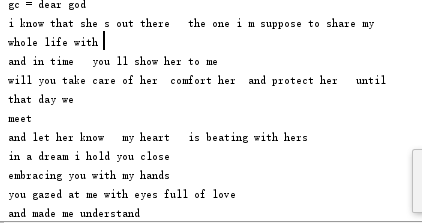

```python
# Read the whole novel as one string and convert it to lowercase
with open('gc.txt', 'r', encoding='utf-8') as fo:
    gc = fo.read().lower()

print(gc)
```

Output:

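```python
# Lowercase the text (a no-op here, since read().lower() already did this)
gc = gc.lower()

# Replace punctuation with spaces so that split() can separate the words
sep = '.,;!?~-_'
for ch in sep:
    gc = gc.replace(ch, ' ')

print(gc)
```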

Output:

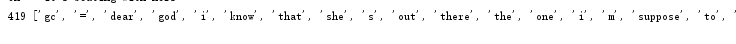
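The replace loop above scans the whole text once per punctuation character. A minimal sketch of an alternative, assuming the same punctuation set, builds one translation table with `str.maketrans` and applies it in a single `str.translate` pass. The helper name `strip_punctuation` is hypothetical.

```python
# Same punctuation set as the replace loop above
sep = '.,;!?~-_'

# Map every punctuation character to a space in one translation table
table = str.maketrans(sep, ' ' * len(sep))

def strip_punctuation(text):
    """Return text with every character in sep replaced by a space."""
    return text.translate(table)

sample = "Hello, world! It's a well-known fact..."
print(strip_punctuation(sample).split())
```

Note that the apostrophe is not in `sep`, so contractions such as "It's" stay as one word, matching the behavior of the loop above.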

```python
# Split the text on whitespace into a list of words
strList = gc.split()
print(len(strList), strList)
```

Output:

```python
# Use a set to get the distinct words (the vocabulary)
strSet = set(strList)
print(len(strSet), strSet)
```

Output:

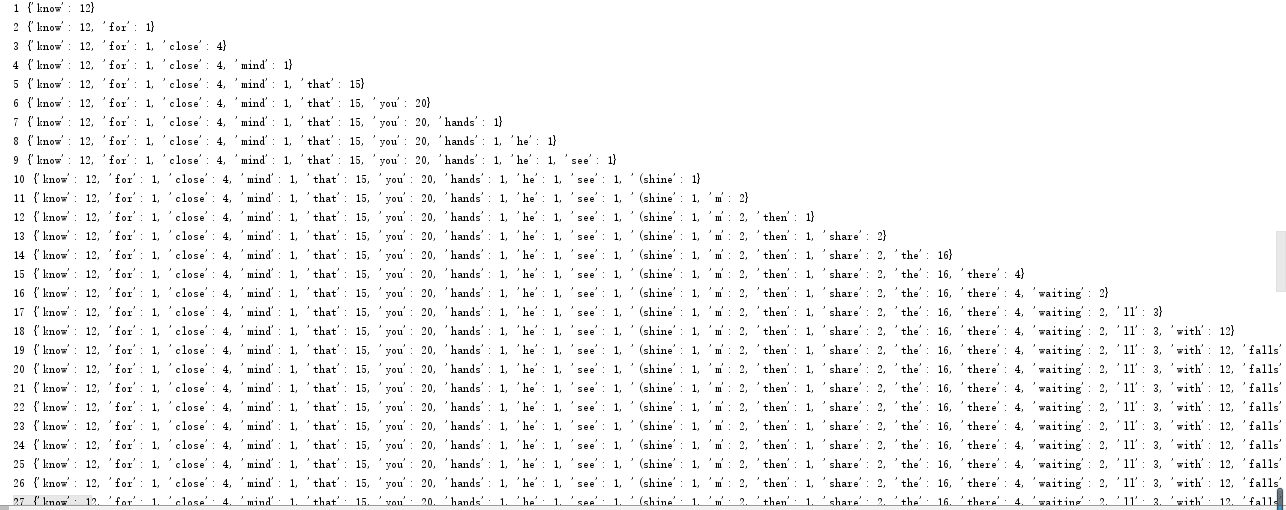

```python
# Use a dict to count how many times each distinct word occurs
strDict = {}
for word in strSet:
    strDict[word] = strList.count(word)

print(len(strDict), strDict)
```

Output:

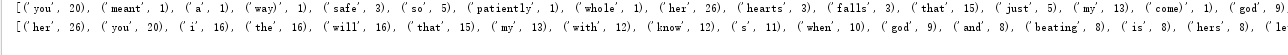
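Calling `strList.count(word)` rescans the entire word list for every distinct word, which gets slow on a full novel. A minimal sketch of an alternative, assuming a small hard-coded word list in place of `strList`, uses `collections.Counter` to count in a single pass and adds a tie-breaking sort key so words with equal counts come out in a fixed order.

```python
from collections import Counter

# Hypothetical word list standing in for strList from the steps above
words = "the cat and the hat and the bat".split()

# Counter tallies every word in a single pass over the list, whereas
# strList.count(word) rescans the whole list once per distinct word
counts = Counter(words)
print(len(counts), dict(counts))

# Rank by descending count, breaking ties alphabetically so the order is
# reproducible (set iteration order, and hence strDict's order, can vary between runs)
ranked = sorted(counts.items(), key=lambda x: (-x[1], x[0]))
print(ranked)
```

`counts.most_common(20)` is a shorter way to get the top 20, though it keeps ties in first-seen order rather than sorting them alphabetically.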

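```python
# Convert the dict to a list of (word, count) pairs, then sort it by count,
# highest first, with list.sort(key=...)
strList = list(strDict.items())
print(strList)

strList.sort(key=lambda x: x[1], reverse=True)
print(strList)
```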

Output:

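```python
# Exclude grammatical words with little meaning of their own
# (pronouns, articles, conjunctions, interjections, ...)
exclude = {'a', 'the', 'and', 'oh', 'you'}
strSet = strSet - exclude
print(len(strSet), strSet)

# Also drop them from the sorted (word, count) list so the TOP 20 below skips them
strList = [item for item in strList if item[0] not in exclude]
```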

Output:

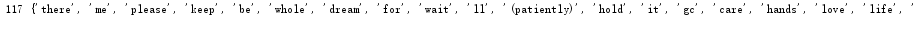

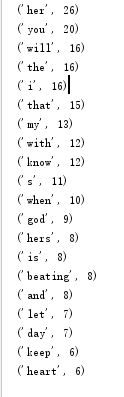

```python
# Print the TOP 20 words; slicing avoids an IndexError if there are fewer than 20
for word, count in strList[:20]:
    print(word, count)
```

Output:
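The English steps can also be bundled into one reusable function. This is a sketch under stated assumptions: the name `top_words`, its parameters, and filtering stop words before counting (rather than afterwards) are illustrative choices, not the original code; the punctuation set and stop words are the ones used above.

```python
from collections import Counter

# Punctuation and stop words taken from the steps above
SEP = '.,;!?~-_'
STOPWORDS = {'a', 'the', 'and', 'oh', 'you'}

def top_words(text, n=20, stopwords=STOPWORDS):
    """Return the n most frequent words in text as (word, count) pairs."""
    # Lowercase and turn every punctuation character into a space
    text = text.lower().translate(str.maketrans(SEP, ' ' * len(SEP)))
    # Drop stop words before counting so they never reach the ranking
    words = [w for w in text.split() if w not in stopwords]
    # Rank by descending count, breaking ties alphabetically
    return sorted(Counter(words).items(), key=lambda x: (-x[1], x[0]))[:n]

sample = "Oh, the cat sat. The cat ran! A dog sat, and the dog ran."
for word, count in top_words(sample, n=3):
    print(word, count)
```

To run it on the novel, pass the file contents, for example `top_words(open('gc.txt', encoding='utf-8').read())`.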

# Word Frequency Count: Chinese Novel

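```python
import jieba  # third-party Chinese word segmentation library

# Read the whole novel as one string
with open('sbe.txt', 'r', encoding='utf-8') as to:
    sbe = to.read()

# Replace punctuation (half-width and full-width forms) with spaces
sep = ',，。?？/:：;；<>!！'
for ch in sep:
    sbe = sbe.replace(ch, ' ')

print(sbe)
```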

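```python
# str.split() only splits on whitespace, and Chinese text has no spaces between
# words, so segment it into words with jieba and drop whitespace-only tokens
strList = [w for w in jieba.lcut(sbe) if w.strip()]
print(len(strList), strList)
```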

```python
# Use a set to get the distinct words (the vocabulary)
strSet = set(strList)
print(len(strSet), strSet)
```

```python
# Use a dict to count how many times each distinct word occurs
strDict = {}
for word in strSet:
    strDict[word] = strList.count(word)

print(len(strDict), strDict)
```

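```python
# Convert the dict to a list of (word, count) pairs, then sort it by count,
# highest first, with list.sort(key=...)
strList = list(strDict.items())
print(strList)

strList.sort(key=lambda x: x[1], reverse=True)
print(strList)
```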

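```python
# Exclude function words with little meaning of their own: the structural
# particle 的, the conjunction 和 ('and'), and the modal particles 啊, 呢, 啦
exclude = {'的', '和', '啊', '呢', '啦'}
strSet = strSet - exclude
print(len(strSet), strSet)

# Also drop them from the sorted (word, count) list so the TOP 20 below skips them
strList = [item for item in strList if item[0] not in exclude]
```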

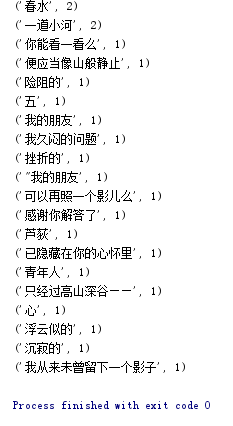
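Counting, stop-word removal, and whitespace filtering can be done in one step once the text is segmented. Because `jieba` may not be installed everywhere, the sketch below starts from a hard-coded token list that stands in for `jieba.lcut(sbe)` output (an assumption) and counts it with `collections.Counter`. The function name `count_tokens` and its `min_len` parameter are hypothetical; `min_len` is an optional extra filter for leftover single-character tokens.

```python
from collections import Counter

# Hypothetical tokens standing in for the output of jieba.lcut(sbe);
# hard-coded so the sketch runs without jieba installed
tokens = ['我们', '的', '朋友', ' ', '和', '我们', '的', '老师', '呀', '朋友']

# Same function words excluded in the step above
STOPWORDS = {'的', '和', '啊', '呢', '啦'}

def count_tokens(tokens, stopwords=STOPWORDS, min_len=1):
    """Count tokens, skipping whitespace-only tokens, stop words,
    and tokens shorter than min_len characters."""
    kept = [t for t in tokens
            if t.strip() and t not in stopwords and len(t) >= min_len]
    return Counter(kept)

print(dict(count_tokens(tokens)))
# min_len=2 (an optional assumption) also drops single characters such as '呀'
print(dict(count_tokens(tokens, min_len=2)))
```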

```python
# Print the TOP 20 words; slicing avoids an IndexError if there are fewer than 20
for word, count in strList[:20]:
    print(word, count)
```