I. Introduction to camera_metadata

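Under the Camera API2/HAL3 architecture, the new CameraMetadata structure replaces the old setParameters()/Parameters mechanism and carries parameters from Java through native code down to HAL3. API2 also introduces a pipeline model that links the Android device and the camera: the system sends capture requests to the camera, and the camera returns CameraMetadata as the result, all within a session called CameraCaptureSession. The main files related to the camera_metadata data structure are: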

frameworks/base/core/java/android/hardware/camera2/impl/CameraMetadataNative.java

frameworks/base/core/jni/android_hardware_camera2_CameraMetadata.cpp

system/media/camera/include/system/camera_metadata_tags.h (do not include this header directly; include camera_metadata.h instead)

system/media/camera/include/system/camera_metadata.h

system/media/camera/include/system/camera_vendor_tags.h (vendor tag extensions for chip vendors)

system/media/camera/src/camera_metadata_tag_info.c (do not call the functions in this file directly; use the wrapper functions in camera_metadata.c instead)

system/media/camera/src/camera_metadata.c

frameworks/av/camera/CameraMetadata.cpp

frameworks/av/include/camera/CameraMetadata.h


II. Conversion from the Framework to the HAL

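When Camera2Client serves API1, the Java layer still exposes the setParameters() interface. Unlike CameraClient, however, Camera2Client binds each parameter, according to its tag, directly into the CameraMetadata objects mPreviewRequest, mRecordRequest and mCaptureRequest. When a capture request is issued, this metadata becomes the camera_metadata_t settings field of camera3_capture_request, which completes the path from Java through native code to HAL3.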

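Under Camera API2 this elaborate conversion is no longer needed: the app sets parameters directly in Java and packs them into a CaptureRequest, so parameter control happens in the Java layer. This is also why requests and results appear so prominently in API2 apps. To stay consistent with the tag data used by the native framework, each Java key carries the name of its section and tag (for example "android.control.mode"), and native code converts that name into the numeric tag used on the Java side.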

(1) Java layer: frameworks/base/core/java/android/hardware/camera2/impl/CameraMetadataNative.java

```java
private <T> T getBase(Key<T> key) {
    int tag = nativeGetTagFromKeyLocal(key.getName());
    byte[] values = readValues(tag);
    if (values == null) {
        // If the key returns null, use the fallback key if exists.
        // This is to support old key names for the newly published keys.
        if (key.mFallbackName == null) {
            return null;
        }
        tag = nativeGetTagFromKeyLocal(key.mFallbackName);
        values = readValues(tag);
        if (values == null) {
            return null;
        }
    }

    int nativeType = nativeGetTypeFromTagLocal(tag);
    Marshaler<T> marshaler = getMarshalerForKey(key, nativeType);
    ByteBuffer buffer = ByteBuffer.wrap(values).order(ByteOrder.nativeOrder());
    return marshaler.unmarshal(buffer);
}
```

(2) Native layer: frameworks/base/core/jni/android_hardware_camera2_CameraMetadata.cpp

```cpp
static const JNINativeMethod gCameraMetadataMethods[] = {
// static methods
  { "nativeGetTagFromKey",
    "(Ljava/lang/String;J)I",
    (void *)CameraMetadata_getTagFromKey },
  { "nativeGetTypeFromTag",
    "(IJ)I",
    (void *)CameraMetadata_getTypeFromTag },
  { "nativeSetupGlobalVendorTagDescriptor",
    "()I",
    (void*)CameraMetadata_setupGlobalVendorTagDescriptor },
// instance methods
  { "nativeAllocate",
    "()J",
    (void*)CameraMetadata_allocate },
  { "nativeAllocateCopy",
    "(L" CAMERA_METADATA_CLASS_NAME ";)J",
    (void *)CameraMetadata_allocateCopy },
  { "nativeIsEmpty",
    "()Z",
    (void*)CameraMetadata_isEmpty },
  { "nativeGetEntryCount",
    "()I",
    (void*)CameraMetadata_getEntryCount },
  { "nativeClose",
    "()V",
    (void*)CameraMetadata_close },
  { "nativeSwap",
    "(L" CAMERA_METADATA_CLASS_NAME ";)V",
    (void *)CameraMetadata_swap },
  { "nativeGetTagFromKeyLocal",
    "(Ljava/lang/String;)I",
    (void *)CameraMetadata_getTagFromKeyLocal },
  { "nativeGetTypeFromTagLocal",
    "(I)I",
    (void *)CameraMetadata_getTypeFromTagLocal },
  ...
};
```

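CameraMetadata_getTagFromKey converts a Java-layer string such as android.control.mode into a numeric tag. Comparing the string with the section names shows that the leading part (android.control) is the section name and the trailing mode is the tag name within that section. By parsing the string, the code can locate the section and then the tag, and the tag value for the key is returned to the Java layer. A section name can itself contain more than two components (android.lens.info, for example), which is why the lookup picks the longest matching section prefix. The JNI implementation in frameworks/base/core/jni/android_hardware_camera2_CameraMetadata.cpp is: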

```cpp
static jint CameraMetadata_getTagFromKey(JNIEnv *env, jobject thiz, jstring keyName,
        jlong vendorId) {
    ScopedUtfChars keyScoped(env, keyName);
    const char *key = keyScoped.c_str();
    if (key == NULL) {
        // exception thrown by ScopedUtfChars
        return 0;
    }
    ALOGV("%s (key = '%s')", __FUNCTION__, key);

    uint32_t tag = 0;
    sp<VendorTagDescriptor> vTags =
            VendorTagDescriptor::getGlobalVendorTagDescriptor();
    if (vTags.get() == nullptr) {
        sp<VendorTagDescriptorCache> cache = VendorTagDescriptorCache::getGlobalVendorTagCache();
        if (cache.get() != nullptr) {
            cache->getVendorTagDescriptor(vendorId, &vTags);
        }
    }

    status_t res = CameraMetadata::getTagFromName(key, vTags.get(), &tag);
    if (res != OK) {
        jniThrowExceptionFmt(env, "java/lang/IllegalArgumentException",
                             "Could not find tag for key '%s')", key);
    }
    return tag;
}
```

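Next, CameraMetadata::getTagFromName() (frameworks/av/camera/CameraMetadata.cpp) resolves the name to a concrete tag: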

```cpp
status_t CameraMetadata::getTagFromName(const char *name,
        const VendorTagDescriptor* vTags, uint32_t *tag) {

    if (name == nullptr || tag == nullptr) return BAD_VALUE;

    size_t nameLength = strlen(name);

    const SortedVector<String8> *vendorSections;
    size_t vendorSectionCount = 0;

    if (vTags != NULL) {
        vendorSections = vTags->getAllSectionNames();
        vendorSectionCount = vendorSections->size();
    }

    // First, find the section by the longest string match
    const char *section = NULL;
    size_t sectionIndex = 0;
    size_t sectionLength = 0;
    size_t totalSectionCount = ANDROID_SECTION_COUNT + vendorSectionCount;
    for (size_t i = 0; i < totalSectionCount; ++i) {

        const char *str = (i < ANDROID_SECTION_COUNT) ? camera_metadata_section_names[i] :
                (*vendorSections)[i - ANDROID_SECTION_COUNT].string();

        ALOGV("%s: Trying to match against section '%s'", __FUNCTION__, str);

        if (strstr(name, str) == name) { // name begins with the section name
            size_t strLength = strlen(str);

            ALOGV("%s: Name begins with section name", __FUNCTION__);

            // section name is the longest we've found so far
            if (section == NULL || sectionLength < strLength) {
                section = str;
                sectionIndex = i;
                sectionLength = strLength;

                ALOGV("%s: Found new best section (%s)", __FUNCTION__, section);
            }
        }
    }

    // TODO: Make above get_camera_metadata_section_from_name ?

    if (section == NULL) {
        return NAME_NOT_FOUND;
    } else {
        ALOGV("%s: Found matched section '%s' (%zu)",
              __FUNCTION__, section, sectionIndex);
    }

    // Get the tag name component of the name
    const char *nameTagName = name + sectionLength + 1; // x.y.z -> z
    if (sectionLength + 1 >= nameLength) {
        return BAD_VALUE;
    }

    // Match rest of name against the tag names in that section only
    uint32_t candidateTag = 0;
    if (sectionIndex < ANDROID_SECTION_COUNT) {
        // Match built-in tags (typically android.*)
        uint32_t tagBegin, tagEnd; // [tagBegin, tagEnd)
        tagBegin = camera_metadata_section_bounds[sectionIndex][0];
        tagEnd = camera_metadata_section_bounds[sectionIndex][1];

        for (candidateTag = tagBegin; candidateTag < tagEnd; ++candidateTag) {
            const char *tagName = get_camera_metadata_tag_name(candidateTag);

            if (strcmp(nameTagName, tagName) == 0) {
                ALOGV("%s: Found matched tag '%s' (%d)",
                      __FUNCTION__, tagName, candidateTag);
                break;
            }
        }

        if (candidateTag == tagEnd) {
            return NAME_NOT_FOUND;
        }
    } else if (vTags != NULL) {
        // Match vendor tags (typically com.*)
        const String8 sectionName(section);
        const String8 tagName(nameTagName);

        status_t res = OK;
        if ((res = vTags->lookupTag(tagName, sectionName, &candidateTag)) != OK) {
            return NAME_NOT_FOUND;
        }
    }

    *tag = candidateTag;
    return OK;
}
```

At this point, the tag value for the key has been found.
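The longest-prefix rule is the subtle part of getTagFromName(). The sketch below reproduces the idea on a tiny, hypothetical table: the section list, the tag names and their indices are illustrative only and do not match the real generated tables. It also differs slightly from the original by requiring a '.' right after the matched section prefix.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical mini tables; the real ones are camera_metadata_section_names
 * and the generated tag_info arrays. */
static const char *kSectionNames[] = {
    "android.control",   /* section 0 in this sketch */
    "android.lens",      /* section 1 */
    "android.lens.info", /* section 2: shares the prefix "android.lens" */
};
static const char *kTagNames[][3] = {
    { "aeMode", "afMode", "mode" },
    { "aperture", "focalLength", "focusDistance" },
    { "availableApertures", "minimumFocusDistance", "hyperfocalDistance" },
};
#define SECTION_COUNT (sizeof(kSectionNames) / sizeof(kSectionNames[0]))
#define TAGS_PER_SECTION 3

/* Returns 0 and stores (section << 16 | index) in *tag, or -1 if not found. */
static int sketch_tag_from_name(const char *name, uint32_t *tag) {
    assert(name != NULL && tag != NULL);
    size_t best = SECTION_COUNT, best_len = 0;
    for (size_t i = 0; i < SECTION_COUNT; ++i) {
        size_t len = strlen(kSectionNames[i]);
        /* Prefix must be followed by '.'; keep the longest matching section. */
        if (strncmp(name, kSectionNames[i], len) == 0 && name[len] == '.' &&
            len > best_len) {
            best = i;
            best_len = len;
        }
    }
    if (best == SECTION_COUNT) return -1;
    const char *tag_name = name + best_len + 1; /* "x.y.z" -> "z" */
    for (uint32_t j = 0; j < TAGS_PER_SECTION; ++j) {
        if (strcmp(tag_name, kTagNames[best][j]) == 0) {
            *tag = ((uint32_t)best << 16) | j;
            return 0;
        }
    }
    return -1;
}
```

With these tables, "android.lens.info.minimumFocusDistance" matches both "android.lens" and "android.lens.info"; the longer prefix wins, so the tag name looked up is "minimumFocusDistance" rather than "info.minimumFocusDistance".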

The call relationships between the relevant files are as follows:

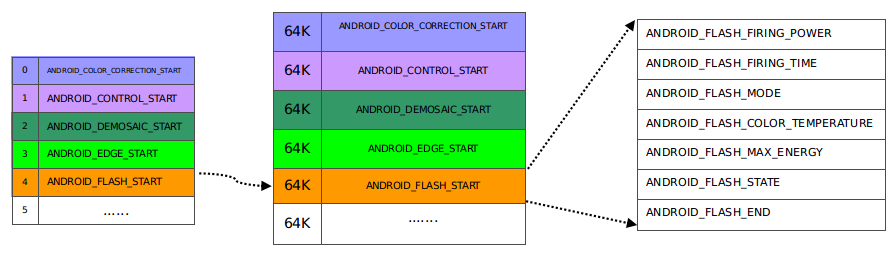

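camera_metadata_tags.h contains all the basic enumerations. Each section owns a 64K range of tag values: every section_start is the section index shifted left by 16 bits, so a section can hold 2^16 = 65536 tags. Each tag is in turn an offset from its section_start. For android.control.afMode, for example, camera_metadata_section gives ANDROID_CONTROL, the corresponding camera_metadata_section_start is ANDROID_CONTROL_START, and camera_metadata_tag then gives ANDROID_CONTROL_AF_MODE, which is the tag value.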

```c
/**
 * !! Do not include this file directly !!
 *
 * Include camera_metadata.h instead.
 */

/**
 * ! Do not edit this file directly !
 *
 * Generated automatically from camera_metadata_tags.mako
 */

/** TODO: Nearly every enum in this file needs a description */

/**
 * Top level hierarchy definitions for camera metadata. *_INFO sections are for
 * the static metadata that can be retrived without opening the camera device.
 * New sections must be added right before ANDROID_SECTION_COUNT to maintain
 * existing enumerations.
 */
typedef enum camera_metadata_section {
    ANDROID_COLOR_CORRECTION,
    ANDROID_CONTROL,
    ANDROID_DEMOSAIC,
    ANDROID_EDGE,
    ANDROID_FLASH,
    ANDROID_FLASH_INFO,
    ANDROID_HOT_PIXEL,
    ANDROID_JPEG,
    ANDROID_LENS,
    ANDROID_LENS_INFO,
    ANDROID_NOISE_REDUCTION,
    ANDROID_QUIRKS,
    ANDROID_REQUEST,
    ANDROID_SCALER,
    ANDROID_SENSOR,
    ANDROID_SENSOR_INFO,
    ANDROID_SHADING,
    ANDROID_STATISTICS,
    ANDROID_STATISTICS_INFO,
    ANDROID_TONEMAP,
    ANDROID_LED,
    ANDROID_INFO,
    ANDROID_BLACK_LEVEL,
    ANDROID_SYNC,
    ANDROID_REPROCESS,
    ANDROID_DEPTH,
    ANDROID_LOGICAL_MULTI_CAMERA,
    ANDROID_DISTORTION_CORRECTION,
    ANDROID_HEIC,
    ANDROID_HEIC_INFO,
    ANDROID_H264,
    ANDROID_H265,
    ANDROID_SECTION_COUNT,

    VENDOR_SECTION = 0x8000
} camera_metadata_section_t;

/**
 * Hierarchy positions in enum space. All vendor extension tags must be
 * defined with tag >= VENDOR_SECTION_START
 */
typedef enum camera_metadata_section_start {
    ANDROID_COLOR_CORRECTION_START      = ANDROID_COLOR_CORRECTION      << 16,
    ANDROID_CONTROL_START               = ANDROID_CONTROL               << 16,
    ANDROID_DEMOSAIC_START              = ANDROID_DEMOSAIC              << 16,
    ANDROID_EDGE_START                  = ANDROID_EDGE                  << 16,
    ANDROID_FLASH_START                 = ANDROID_FLASH                 << 16,
    ANDROID_FLASH_INFO_START            = ANDROID_FLASH_INFO            << 16,
    ANDROID_HOT_PIXEL_START             = ANDROID_HOT_PIXEL             << 16,
    ANDROID_JPEG_START                  = ANDROID_JPEG                  << 16,
    ANDROID_LENS_START                  = ANDROID_LENS                  << 16,
    ANDROID_LENS_INFO_START             = ANDROID_LENS_INFO             << 16,
    ANDROID_NOISE_REDUCTION_START       = ANDROID_NOISE_REDUCTION       << 16,
    ANDROID_QUIRKS_START                = ANDROID_QUIRKS                << 16,
    ANDROID_REQUEST_START               = ANDROID_REQUEST               << 16,
    ANDROID_SCALER_START                = ANDROID_SCALER                << 16,
    ANDROID_SENSOR_START                = ANDROID_SENSOR                << 16,
    ANDROID_SENSOR_INFO_START           = ANDROID_SENSOR_INFO           << 16,
    ANDROID_SHADING_START               = ANDROID_SHADING               << 16,
    ANDROID_STATISTICS_START            = ANDROID_STATISTICS            << 16,
    ANDROID_STATISTICS_INFO_START       = ANDROID_STATISTICS_INFO       << 16,
    ANDROID_TONEMAP_START               = ANDROID_TONEMAP               << 16,
    ANDROID_LED_START                   = ANDROID_LED                   << 16,
    ANDROID_INFO_START                  = ANDROID_INFO                  << 16,
    ANDROID_BLACK_LEVEL_START           = ANDROID_BLACK_LEVEL           << 16,
    ANDROID_SYNC_START                  = ANDROID_SYNC                  << 16,
    ANDROID_REPROCESS_START             = ANDROID_REPROCESS             << 16,
    ANDROID_DEPTH_START                 = ANDROID_DEPTH                 << 16,
    ANDROID_LOGICAL_MULTI_CAMERA_START  = ANDROID_LOGICAL_MULTI_CAMERA  << 16,
    ANDROID_DISTORTION_CORRECTION_START = ANDROID_DISTORTION_CORRECTION << 16,
    ANDROID_HEIC_START                  = ANDROID_HEIC                  << 16,
    ANDROID_HEIC_INFO_START             = ANDROID_HEIC_INFO             << 16,
    ANDROID_H264_START                  = ANDROID_H264                  << 16,
    ANDROID_H265_START                  = ANDROID_H265                  << 16,
    VENDOR_SECTION_START                = VENDOR_SECTION                << 16
} camera_metadata_section_start_t;
```

```c
/**
 * Main enum for defining camera metadata tags.  New entries must always go
 * before the section _END tag to preserve existing enumeration values.  In
 * addition, the name and type of the tag needs to be added to
 * system/media/camera/src/camera_metadata_tag_info.c
 */
typedef enum camera_metadata_tag {
    ANDROID_COLOR_CORRECTION_MODE =                   // enum         | public       | HIDL v3.2
            ANDROID_COLOR_CORRECTION_START,
    ANDROID_COLOR_CORRECTION_TRANSFORM,               // rational[]   | public       | HIDL v3.2
    ANDROID_COLOR_CORRECTION_GAINS,                   // float[]      | public       | HIDL v3.2
    ANDROID_COLOR_CORRECTION_ABERRATION_MODE,         // enum         | public       | HIDL v3.2
    ANDROID_COLOR_CORRECTION_AVAILABLE_ABERRATION_MODES,
                                                      // byte[]       | public       | HIDL v3.2
    ANDROID_COLOR_CORRECTION_END,

    ANDROID_CONTROL_AE_ANTIBANDING_MODE =             // enum         | public       | HIDL v3.2
            ANDROID_CONTROL_START,
    ANDROID_CONTROL_AE_EXPOSURE_COMPENSATION,         // int32        | public       | HIDL v3.2
    ANDROID_CONTROL_AE_LOCK,                          // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_AE_MODE,                          // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_AE_REGIONS,                       // int32[]      | public       | HIDL v3.2
    ANDROID_CONTROL_AE_TARGET_FPS_RANGE,              // int32[]      | public       | HIDL v3.2
    ANDROID_CONTROL_AE_PRECAPTURE_TRIGGER,            // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_AF_MODE,                          // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_AF_REGIONS,                       // int32[]      | public       | HIDL v3.2
    ANDROID_CONTROL_AF_TRIGGER,                       // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_AWB_LOCK,                         // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_AWB_MODE,                         // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_AWB_REGIONS,                      // int32[]      | public       | HIDL v3.2
    ANDROID_CONTROL_CAPTURE_INTENT,                   // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_EFFECT_MODE,                      // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_MODE,                             // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_SCENE_MODE,                       // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_VIDEO_STABILIZATION_MODE,         // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_AE_AVAILABLE_ANTIBANDING_MODES,   // byte[]       | public       | HIDL v3.2
    ANDROID_CONTROL_AE_AVAILABLE_MODES,               // byte[]       | public       | HIDL v3.2
    ANDROID_CONTROL_AE_AVAILABLE_TARGET_FPS_RANGES,   // int32[]      | public       | HIDL v3.2
    ANDROID_CONTROL_AE_COMPENSATION_RANGE,            // int32[]      | public       | HIDL v3.2
    ANDROID_CONTROL_AE_COMPENSATION_STEP,             // rational     | public       | HIDL v3.2
    ANDROID_CONTROL_AF_AVAILABLE_MODES,               // byte[]       | public       | HIDL v3.2
    ANDROID_CONTROL_AVAILABLE_EFFECTS,                // byte[]       | public       | HIDL v3.2
    ANDROID_CONTROL_AVAILABLE_SCENE_MODES,            // byte[]       | public       | HIDL v3.2
    ANDROID_CONTROL_AVAILABLE_VIDEO_STABILIZATION_MODES,
                                                      // byte[]       | public       | HIDL v3.2
    ANDROID_CONTROL_AWB_AVAILABLE_MODES,              // byte[]       | public       | HIDL v3.2
    ANDROID_CONTROL_MAX_REGIONS,                      // int32[]      | ndk_public   | HIDL v3.2
    ANDROID_CONTROL_SCENE_MODE_OVERRIDES,             // byte[]       | system       | HIDL v3.2
    ANDROID_CONTROL_AE_PRECAPTURE_ID,                 // int32        | system       | HIDL v3.2
    ANDROID_CONTROL_AE_STATE,                         // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_AF_STATE,                         // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_AF_TRIGGER_ID,                    // int32        | system       | HIDL v3.2
    ANDROID_CONTROL_AWB_STATE,                        // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_AVAILABLE_HIGH_SPEED_VIDEO_CONFIGURATIONS,
                                                      // int32[]      | hidden       | HIDL v3.2
    ANDROID_CONTROL_AE_LOCK_AVAILABLE,                // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_AWB_LOCK_AVAILABLE,               // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_AVAILABLE_MODES,                  // byte[]       | public       | HIDL v3.2
    ANDROID_CONTROL_POST_RAW_SENSITIVITY_BOOST_RANGE, // int32[]      | public       | HIDL v3.2
    ANDROID_CONTROL_POST_RAW_SENSITIVITY_BOOST,       // int32        | public       | HIDL v3.2
    ANDROID_CONTROL_ENABLE_ZSL,                       // enum         | public       | HIDL v3.2
    ANDROID_CONTROL_AF_SCENE_CHANGE,                  // enum         | public       | HIDL v3.3
    ANDROID_CONTROL_END,

    ......
```
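Working the arithmetic through with the enums above: ANDROID_CONTROL is section index 1, so ANDROID_CONTROL_START = 1 << 16 = 0x10000, and ANDROID_CONTROL_AF_MODE is the 8th tag of that section (offset 7), giving 0x10007. Likewise VENDOR_SECTION_START = 0x8000 << 16 = 0x80000000. The helper names below are hypothetical; only the shift-by-16 layout comes from the header.

```c
#include <assert.h>
#include <stdint.h>

/* Section indices taken from camera_metadata_section_t above. */
enum { SKETCH_ANDROID_CONTROL = 1, SKETCH_ANDROID_SENSOR = 14 };
#define SKETCH_SECTION_START(section) ((uint32_t)(section) << 16)

/* Build a tag from its section and its index inside that section. */
static uint32_t sketch_make_tag(uint32_t section, uint32_t index) {
    assert(index <= 0xFFFF); /* each section spans 2^16 tag values */
    return SKETCH_SECTION_START(section) + index;
}

/* Split a tag back into its section (high 16 bits) and index (low 16 bits). */
static uint32_t sketch_tag_section(uint32_t tag) { return tag >> 16; }
static uint32_t sketch_tag_index(uint32_t tag) { return tag & 0xFFFF; }
```

Decomposing works the same way in reverse: the HAL snippet later in this article uses tag 0x000E0010, whose high half 0xE (14) is ANDROID_SENSOR and whose low half is index 16 within that section.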

The correspondence is illustrated in the figure below:

camera_metadata_tag_info.c then provides the name mappings and bindings. The camera_metadata_section_bounds table used along the native path CameraMetadata_getTagFromKey ---> getTagFromName ---> camera_metadata_section_bounds is defined here:

```c
/**
 * ! Do not edit this file directly !
 *
 * Generated automatically from camera_metadata_tag_info.mako
 */

const char *camera_metadata_section_names[ANDROID_SECTION_COUNT] = {
    [ANDROID_COLOR_CORRECTION]      = "android.colorCorrection",
    [ANDROID_CONTROL]               = "android.control",
    [ANDROID_DEMOSAIC]              = "android.demosaic",
    [ANDROID_EDGE]                  = "android.edge",
    [ANDROID_FLASH]                 = "android.flash",
    [ANDROID_FLASH_INFO]            = "android.flash.info",
    [ANDROID_HOT_PIXEL]             = "android.hotPixel",
    [ANDROID_JPEG]                  = "android.jpeg",
    [ANDROID_LENS]                  = "android.lens",
    [ANDROID_LENS_INFO]             = "android.lens.info",
    [ANDROID_NOISE_REDUCTION]       = "android.noiseReduction",
    [ANDROID_QUIRKS]                = "android.quirks",
    [ANDROID_REQUEST]               = "android.request",
    [ANDROID_SCALER]                = "android.scaler",
    [ANDROID_SENSOR]                = "android.sensor",
    [ANDROID_SENSOR_INFO]           = "android.sensor.info",
    [ANDROID_SHADING]               = "android.shading",
    [ANDROID_STATISTICS]            = "android.statistics",
    [ANDROID_STATISTICS_INFO]       = "android.statistics.info",
    [ANDROID_TONEMAP]               = "android.tonemap",
    [ANDROID_LED]                   = "android.led",
    [ANDROID_INFO]                  = "android.info",
    [ANDROID_BLACK_LEVEL]           = "android.blackLevel",
    [ANDROID_SYNC]                  = "android.sync",
    [ANDROID_REPROCESS]             = "android.reprocess",
    [ANDROID_DEPTH]                 = "android.depth",
    [ANDROID_LOGICAL_MULTI_CAMERA]  = "android.logicalMultiCamera",
    [ANDROID_DISTORTION_CORRECTION]
                                    = "android.distortionCorrection",
};

unsigned int camera_metadata_section_bounds[ANDROID_SECTION_COUNT][2] = {
    [ANDROID_COLOR_CORRECTION]      = { ANDROID_COLOR_CORRECTION_START,
                                        ANDROID_COLOR_CORRECTION_END },
    [ANDROID_CONTROL]               = { ANDROID_CONTROL_START,
                                        ANDROID_CONTROL_END },
    [ANDROID_DEMOSAIC]              = { ANDROID_DEMOSAIC_START,
                                        ANDROID_DEMOSAIC_END },
    [ANDROID_EDGE]                  = { ANDROID_EDGE_START,
                                        ANDROID_EDGE_END },
    [ANDROID_FLASH]                 = { ANDROID_FLASH_START,
                                        ANDROID_FLASH_END },
    [ANDROID_FLASH_INFO]            = { ANDROID_FLASH_INFO_START,
                                        ANDROID_FLASH_INFO_END },
    [ANDROID_HOT_PIXEL]             = { ANDROID_HOT_PIXEL_START,
                                        ANDROID_HOT_PIXEL_END },
    [ANDROID_JPEG]                  = { ANDROID_JPEG_START,
                                        ANDROID_JPEG_END },
    [ANDROID_LENS]                  = { ANDROID_LENS_START,
                                        ANDROID_LENS_END },
    [ANDROID_LENS_INFO]             = { ANDROID_LENS_INFO_START,
                                        ANDROID_LENS_INFO_END },
    [ANDROID_NOISE_REDUCTION]       = { ANDROID_NOISE_REDUCTION_START,
                                        ANDROID_NOISE_REDUCTION_END },
    [ANDROID_QUIRKS]                = { ANDROID_QUIRKS_START,
                                        ANDROID_QUIRKS_END },
    [ANDROID_REQUEST]               = { ANDROID_REQUEST_START,
                                        ANDROID_REQUEST_END },
    [ANDROID_SCALER]                = { ANDROID_SCALER_START,
                                        ANDROID_SCALER_END },
    [ANDROID_SENSOR]                = { ANDROID_SENSOR_START,
                                        ANDROID_SENSOR_END },
    [ANDROID_SENSOR_INFO]           = { ANDROID_SENSOR_INFO_START,
                                        ANDROID_SENSOR_INFO_END },
    [ANDROID_SHADING]               = { ANDROID_SHADING_START,
                                        ANDROID_SHADING_END },
    [ANDROID_STATISTICS]            = { ANDROID_STATISTICS_START,
                                        ANDROID_STATISTICS_END },
    [ANDROID_STATISTICS_INFO]       = { ANDROID_STATISTICS_INFO_START,
                                        ANDROID_STATISTICS_INFO_END },
    [ANDROID_TONEMAP]               = { ANDROID_TONEMAP_START,
                                        ANDROID_TONEMAP_END },
    [ANDROID_LED]                   = { ANDROID_LED_START,
                                        ANDROID_LED_END },
    [ANDROID_INFO]                  = { ANDROID_INFO_START,
                                        ANDROID_INFO_END },
    [ANDROID_BLACK_LEVEL]           = { ANDROID_BLACK_LEVEL_START,
                                        ANDROID_BLACK_LEVEL_END },
    [ANDROID_SYNC]                  = { ANDROID_SYNC_START,
                                        ANDROID_SYNC_END },
    [ANDROID_REPROCESS]             = { ANDROID_REPROCESS_START,
                                        ANDROID_REPROCESS_END },
    [ANDROID_DEPTH]                 = { ANDROID_DEPTH_START,
                                        ANDROID_DEPTH_END },
    [ANDROID_LOGICAL_MULTI_CAMERA]  = { ANDROID_LOGICAL_MULTI_CAMERA_START,
                                        ANDROID_LOGICAL_MULTI_CAMERA_END },
    [ANDROID_DISTORTION_CORRECTION]
                                    = { ANDROID_DISTORTION_CORRECTION_START,
                                        ANDROID_DISTORTION_CORRECTION_END },
};
```

Each tag's name and type are managed centrally by tag_info_t tables:

```c
static tag_info_t android_color_correction[ANDROID_COLOR_CORRECTION_END -
        ANDROID_COLOR_CORRECTION_START] = {
    [ ANDROID_COLOR_CORRECTION_MODE - ANDROID_COLOR_CORRECTION_START ] =
    { "mode",                          TYPE_BYTE   },
    [ ANDROID_COLOR_CORRECTION_TRANSFORM - ANDROID_COLOR_CORRECTION_START ] =
    { "transform",                     TYPE_RATIONAL
                },
    [ ANDROID_COLOR_CORRECTION_GAINS - ANDROID_COLOR_CORRECTION_START ] =
    { "gains",                         TYPE_FLOAT  },
    [ ANDROID_COLOR_CORRECTION_ABERRATION_MODE - ANDROID_COLOR_CORRECTION_START ] =
    { "aberrationMode",                TYPE_BYTE   },
    [ ANDROID_COLOR_CORRECTION_AVAILABLE_ABERRATION_MODES - ANDROID_COLOR_CORRECTION_START ] =
    { "availableAberrationModes",      TYPE_BYTE   },
};

// ...

tag_info_t *tag_info[ANDROID_SECTION_COUNT] = {
    android_color_correction,
    android_control,
    android_demosaic,
    android_edge,
    android_flash,
    android_flash_info,
    android_hot_pixel,
    android_jpeg,
    android_lens,
    android_lens_info,
    android_noise_reduction,
    android_quirks,
    android_request,
    android_scaler,
    android_sensor,
    android_sensor_info,
    android_shading,
    android_statistics,
    android_statistics_info,
    android_tonemap,
    android_led,
    android_info,
    android_black_level,
    android_sync,
    android_reprocess,
    android_depth,
    android_logical_multi_camera,
    android_distortion_correction,
};
```

The figure below shows how camera metadata lays out its sections and the tags within each section, using the common android.control section as an example:

To write data, native code also needs a CameraMetadata object. It is created when the Java CameraMetadataNative object is constructed, through the native method nativeAllocate():

```cpp
// instance methods
  { "nativeAllocate",
    "()J",
    (void*)CameraMetadata_allocate },
```

```cpp
static jlong CameraMetadata_allocate(JNIEnv *env, jobject thiz) {
    ALOGV("%s", __FUNCTION__);

    return reinterpret_cast<jlong>(new CameraMetadata());
}
```

```cpp
CameraMetadata::CameraMetadata(size_t entryCapacity, size_t dataCapacity) :
        mLocked(false)
{
    mBuffer = allocate_camera_metadata(entryCapacity, dataCapacity);
}
```

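allocate_camera_metadata() computes the required size from the entry count and data size and allocates the buffer. place_camera_metadata() then initializes the header fields of that freshly allocated buffer, in preparation for inserting and updating tag data. Note that CameraMetadata_allocate() uses the default constructor, which in AOSP initializes mBuffer to NULL; the buffer is then allocated lazily on the first write (by resizeIfNeeded()), whereas the sized constructor shown above calls allocate_camera_metadata() directly.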

```c
camera_metadata_t *allocate_camera_metadata(size_t entry_capacity,
                                            size_t data_capacity) { // example arguments: (2, 0)
    if (entry_capacity == 0) return NULL;

    size_t memory_needed = calculate_camera_metadata_size(entry_capacity, // header + 2 * sizeof(entry) here
                                                          data_capacity);
    void *buffer = malloc(memory_needed);                // one contiguous block for header, entries and data
    return place_camera_metadata(buffer, memory_needed,  // ...then initialize the header in place
                                 entry_capacity,
                                 data_capacity);
}

camera_metadata_t *place_camera_metadata(void *dst,
                                         size_t dst_size,
                                         size_t entry_capacity,
                                         size_t data_capacity) {
    if (dst == NULL) return NULL;
    if (entry_capacity == 0) return NULL;

    // Recomputed because place_camera_metadata() is also a public API that can be
    // called on a caller-supplied buffer, so it must check dst_size itself.
    size_t memory_needed = calculate_camera_metadata_size(entry_capacity,
                                                          data_capacity);
    if (memory_needed > dst_size) return NULL;

    camera_metadata_t *metadata = (camera_metadata_t*)dst;
    metadata->version = CURRENT_METADATA_VERSION; // format version
    metadata->flags = 0;                          // entries not sorted yet
    metadata->entry_count = 0;                    // no entries in use yet
    metadata->entry_capacity = entry_capacity;    // max number of entries (2 in the ANDROID_FLASH_MODE example)
    metadata->entries_start =
            ALIGN_TO(sizeof(camera_metadata_t), ENTRY_ALIGNMENT); // entry array follows the header
    metadata->data_count = 0;                     // no data bytes in use yet
    metadata->data_capacity = data_capacity;      // 0 in this example: no data area was requested
    metadata->size = memory_needed;               // total size of the block
    size_t data_unaligned = (uint8_t*)(get_entries(metadata) +
            metadata->entry_capacity) - (uint8_t*)metadata;
    metadata->data_start = ALIGN_TO(data_unaligned, DATA_ALIGNMENT); // data area follows the entry array

    return metadata;
}

// Computes the block size for the given entry and data counts:
// header + entries + data, with each region aligned.
size_t calculate_camera_metadata_size(size_t entry_count,
                                      size_t data_count) { // (2, 0) in this example
    size_t memory_needed = sizeof(camera_metadata_t);     // header size
    // Start entry list at aligned boundary
    memory_needed = ALIGN_TO(memory_needed, ENTRY_ALIGNMENT);
    memory_needed += sizeof(camera_metadata_buffer_entry_t[entry_count]); // room for entry_count entries (2 here)
    // Start buffer list at aligned boundary
    memory_needed = ALIGN_TO(memory_needed, DATA_ALIGNMENT);
    memory_needed += sizeof(uint8_t[data_count]);         // data area in bytes (0 here)
    return memory_needed;
}
```
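To see the concrete numbers for the (2, 0) example, the sketch below re-implements the size calculation against copies of the two struct definitions shown later in this article. The alignments are an assumption modeled on camera_metadata.c: entries aligned to the entry struct's own alignment, and the data area to 8 bytes. On common 32- and 64-bit ABIs the header is 48 bytes and each entry 16, so two entries and no data need 80 bytes.

```c
#include <assert.h>
#include <stdalign.h>
#include <stddef.h>
#include <stdint.h>

/* Layouts copied from the struct definitions in this article. */
typedef struct camera_metadata_buffer_entry {
    uint32_t tag;
    uint32_t count;
    union { uint32_t offset; uint8_t value[4]; } data;
    uint8_t type;
    uint8_t reserved[3];
} camera_metadata_buffer_entry_t;

struct camera_metadata {
    uint32_t size, version, flags, entry_count, entry_capacity, entries_start;
    uint32_t data_count, data_capacity, data_start, padding;
    uint64_t vendor_id;
};

/* Assumed alignment rules (see lead-in). */
#define SKETCH_ALIGN_TO(val, a) (((size_t)(val) + ((a) - 1)) & ~((size_t)(a) - 1))
#define SKETCH_ENTRY_ALIGNMENT alignof(camera_metadata_buffer_entry_t)
#define SKETCH_DATA_ALIGNMENT ((size_t)8)

static size_t sketch_metadata_size(size_t entry_count, size_t data_count) {
    size_t n = sizeof(struct camera_metadata);         /* header */
    n = SKETCH_ALIGN_TO(n, SKETCH_ENTRY_ALIGNMENT);    /* entry array starts here */
    n += entry_count * sizeof(camera_metadata_buffer_entry_t);
    n = SKETCH_ALIGN_TO(n, SKETCH_DATA_ALIGNMENT);     /* data area starts here */
    n += data_count;                                   /* data area is counted in bytes */
    return n;
}
```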

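The smallest building block of a CameraMetadata memory block is struct camera_metadata_buffer_entry; bookkeeping such as the total number of entries is maintained by struct camera_metadata (camera_metadata_t).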

The structure diagram is shown below:

The header system/media/camera/include/system/camera_metadata.h contains the following declarations:

```c
struct camera_metadata;
typedef struct camera_metadata camera_metadata_t;
```

camera_metadata_t is the type that external code uses to access metadata.

There is an interesting technique here: camera_metadata_t is just a typedef for struct camera_metadata, and struct camera_metadata is defined only in camera_metadata.c.

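External files can only include the header camera_metadata.h, so they see camera_metadata_t as an incomplete type and never the definition of struct camera_metadata. As a result, they cannot access its members directly.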

The only way to manipulate camera_metadata is through the functions declared in camera_metadata.h. This is how encapsulation is achieved in C.
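The same opaque-pointer pattern can be shown in miniature. In a real project the two parts below live in a header and a .c file; they are combined here only so the sketch compiles on its own. All sketch_meta_* names are hypothetical, loosely analogous to accessors such as get_camera_metadata_entry_capacity().

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* ---- "public header" part: callers only ever see an incomplete type ---- */
typedef struct sketch_meta sketch_meta_t;
sketch_meta_t *sketch_meta_create(uint32_t capacity);
uint32_t sketch_meta_capacity(const sketch_meta_t *m);
void sketch_meta_free(sketch_meta_t *m);

/* ---- "implementation file" part: the only place the layout is known ---- */
struct sketch_meta {
    uint32_t entry_count;
    uint32_t entry_capacity;
};

sketch_meta_t *sketch_meta_create(uint32_t capacity) {
    sketch_meta_t *m = malloc(sizeof *m);
    if (m == NULL) return NULL;
    m->entry_count = 0;
    m->entry_capacity = capacity;
    return m;
}

uint32_t sketch_meta_capacity(const sketch_meta_t *m) { return m->entry_capacity; }

void sketch_meta_free(sketch_meta_t *m) { free(m); }
```

A file that includes only the "header" part can hold and pass around a sketch_meta_t pointer, but any attempt to write m->entry_capacity fails to compile there, which is exactly the protection camera_metadata.h provides.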

The data structures that describe camera metadata are as follows:

(1) struct camera_metadata:

```c
typedef uint32_t metadata_uptrdiff_t;
typedef uint32_t metadata_size_t;
typedef uint64_t metadata_vendor_id_t;

struct camera_metadata {
    metadata_size_t          size;           // total size of the metadata block in bytes
    uint32_t                 version;        // CURRENT_METADATA_VERSION, normally 1
    uint32_t                 flags;          // FLAG_SORTED: set when entries are sorted by tag (ascending);
                                             // sorted entries allow a binary search, which is faster
    metadata_size_t          entry_count;    // number of entries in use, initially 0
    metadata_size_t          entry_capacity; // maximum number of entries
    metadata_uptrdiff_t      entries_start;  // offset of the entry array (camera_metadata_buffer_entry) from the block start
    metadata_size_t          data_count;     // number of data bytes in use, initially 0
    metadata_size_t          data_capacity;  // size of the data area in bytes
    metadata_uptrdiff_t      data_start;     // offset of the data area from the block start
    uint32_t                 padding;        // padding so that vendor_id is 8-byte aligned
    metadata_vendor_id_t     vendor_id;      // vendor (platform) id; defaults to CAMERA_METADATA_INVALID_VENDOR_ID
};
```

(2) struct camera_metadata_buffer_entry:

```c
typedef struct camera_metadata_buffer_entry {
    uint32_t tag;          // tag value (the key)
    uint32_t count;        // number of data elements; e.g. 100 elements of type uint8_t means 100 bytes
    union {
        uint32_t offset;   // byte offset of the value within the data area (relative to data_start)
        uint8_t  value[4]; // values of at most 4 bytes are stored inline here to save space
    } data;
    uint8_t  type;         // TYPE_BYTE, TYPE_INT32, TYPE_FLOAT, TYPE_INT64, TYPE_DOUBLE or TYPE_RATIONAL
    uint8_t  reserved[3];
} camera_metadata_buffer_entry_t;
```

(3) struct camera_metadata_entry :

```c
typedef struct camera_metadata_entry {
    size_t   index;  // index of this entry in the metadata (0 .. entry_count - 1)
    uint32_t tag;    // tag value (the key)
    uint8_t  type;   // TYPE_BYTE, TYPE_INT32, TYPE_FLOAT, TYPE_INT64, TYPE_DOUBLE or TYPE_RATIONAL
    size_t   count;  // number of data elements; e.g. 100 elements of type uint8_t means 100 bytes
    union {
        uint8_t *u8;
        int32_t *i32;
        float   *f;
        int64_t *i64;
        double  *d;
        camera_metadata_rational_t *r;
    } data;          // pointer to the tag's value, interpreted according to `type`
} camera_metadata_entry_t;
```

Note: camera_metadata_buffer_entry_t is used internally to record tag data inside the buffer, while camera_metadata_entry_t is the view handed to external callers.
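Whether a value lives inline in data.value or out-of-line at data.offset depends only on its byte size. The sketch below mirrors the rule that, as far as we know, calculate_camera_metadata_entry_data_size() in camera_metadata.c applies: payloads of up to 4 bytes need no data-area space, larger ones take their size rounded up to 8. The element sizes follow the TYPE_* list in the struct comments; the sketch_* names and the 8-byte alignment constant are assumptions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Type codes in the order listed above, with their element sizes in bytes. */
enum { TYPE_BYTE, TYPE_INT32, TYPE_FLOAT, TYPE_INT64, TYPE_DOUBLE, TYPE_RATIONAL, NUM_TYPES };
static const size_t kTypeSize[NUM_TYPES] = { 1, 4, 4, 8, 8, 8 };

#define SKETCH_DATA_ALIGNMENT ((size_t)8)
#define SKETCH_ALIGN_TO(val, a) (((val) + ((a) - 1)) & ~((a) - 1))

/* Bytes an entry needs in the data area: 0 when the payload fits in the
 * 4-byte data.value member, otherwise the payload rounded up to 8. */
static size_t sketch_entry_data_size(int type, size_t count) {
    assert(type >= 0 && type < NUM_TYPES);
    size_t payload = kTypeSize[type] * count;
    if (payload <= 4) return 0;
    return SKETCH_ALIGN_TO(payload, SKETCH_DATA_ALIGNMENT);
}
```

For example, a single TYPE_BYTE or TYPE_INT32 value costs nothing in the data area, while one TYPE_INT64 timestamp takes 8 bytes and three TYPE_INT32 values (12 bytes) take 16.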

The figure below summarizes the relationship between the three structs:

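The basic metadata operations are add (add a tag), delete (remove a tag), find (look up the value for a tag) and update (modify the value of a tag). With the structures above, how they work follows almost directly. Take find as an example: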

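(1) The caller has a camera_metadata and a tag, and wants the value stored for that tag.

(2) The entries_start member gives the address of the first entry, and entry_count bounds the iteration over all entries.

(3) The tag is compared with the tag of each camera_metadata_buffer_entry in turn to find the matching entry (when FLAG_SORTED is set, a binary search is used instead).

(4) count and type give the value's size in bytes. If it is at most 4 bytes, the value is read directly from data.value; otherwise data.offset locates it relative to data_start.

(5) The result is converted into a camera_metadata_entry_t and returned to the caller, who uses count and type to interpret the value.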
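The five steps above can be traced in a self-contained sketch. The toy_* types are hypothetical, simplified stand-ins for struct camera_metadata, camera_metadata_buffer_entry_t and camera_metadata_entry_t (pointers instead of offsets, no alignment, linear search only).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

enum { TYPE_BYTE, TYPE_INT32, TYPE_FLOAT, TYPE_INT64, TYPE_DOUBLE, TYPE_RATIONAL, NUM_TYPES };
static const size_t kTypeSize[NUM_TYPES] = { 1, 4, 4, 8, 8, 8 };

typedef struct {            /* internal record, like camera_metadata_buffer_entry_t */
    uint32_t tag, count;
    union { uint32_t offset; uint8_t value[4]; } data;
    uint8_t type;
} toy_buffer_entry;

typedef struct {            /* stand-in for struct camera_metadata */
    toy_buffer_entry *entries; /* plays the role of entries_start */
    size_t entry_count;
    uint8_t *data;             /* plays the role of data_start */
} toy_metadata;

typedef struct {            /* caller-facing view, like camera_metadata_entry_t */
    size_t index; uint32_t tag; uint8_t type; size_t count;
    const uint8_t *u8;      /* points INTO the buffer, not at a copy */
} toy_entry_view;

/* Steps (2)-(5): walk the entries, match the tag, locate the value bytes. */
static int toy_find(const toy_metadata *m, uint32_t tag, toy_entry_view *out) {
    for (size_t i = 0; i < m->entry_count; ++i) {
        const toy_buffer_entry *e = &m->entries[i];
        if (e->tag != tag) continue;
        size_t bytes = kTypeSize[e->type] * e->count;
        out->index = i; out->tag = e->tag; out->type = e->type; out->count = e->count;
        /* <= 4 bytes: stored inline in the entry; otherwise at data + offset */
        out->u8 = bytes <= 4 ? e->data.value : m->data + e->data.offset;
        return 0;
    }
    return -1; /* not found */
}
```

Usage: with one inline byte entry and one int64 entry stored at offset 0 of the data area, looking up either tag yields a pointer from which the value can be read (copying the int64 out with memcpy to avoid alignment issues).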

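PS: A very important and bug-prone detail: the data field of the returned entry points at the actual data inside the metadata buffer, not at a copy.

Writing through that pointer therefore overwrites the stored value. If you need a private copy, copy it out with memcpy first; to change the stored value deliberately, use the update functions. The pointer also becomes invalid if the underlying buffer is reallocated, for example when a later CameraMetadata update has to grow the buffer.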
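The aliasing pitfall can be reduced to a few lines. Here a static array stands in for the metadata data area, and the hypothetical sketch_lookup() returns a pointer into it just as find_camera_metadata_entry() fills entry.data with a pointer into the buffer.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical stand-in for the metadata data area. */
static uint8_t g_data_area[8];

/* Like find_camera_metadata_entry(), hand back a pointer INTO the buffer. */
static uint8_t *sketch_lookup(void) { return g_data_area; }

/* Safe pattern: copy the value out before changing it locally. */
static void sketch_copy_value(uint8_t *dst, size_t n) {
    memcpy(dst, sketch_lookup(), n);
}
```

Editing the copied array leaves the buffer untouched, while writing through the returned pointer changes the stored metadata immediately.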

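HAL code looks up an entry and then adds or updates it as follows. The tag 0x000E0010 is ANDROID_SENSOR_TIMESTAMP (section ANDROID_SENSOR = 14 = 0xE, tag index 16 within that section):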

```cpp
{
    UINT32 SensorTimestampTag = 0x000E0010;
    camera_metadata_entry_t entry = { 0 };
    camera_metadata_t* pMetadata =
        const_cast<camera_metadata_t*>(static_cast<const camera_metadata_t*>(pResult->pResultMetadata));
    UINT64 timestamp = m_shutterTimestamp[applicationFrameNum % MaxOutstandingRequests];
    INT32 status = find_camera_metadata_entry(pMetadata, SensorTimestampTag, &entry);

    if (-ENOENT == status) // tag not found: treat it as new and add it to the metadata
    {
        status = add_camera_metadata_entry(pMetadata, SensorTimestampTag, &timestamp, 1);
    }
    else if (0 == status)  // tag already present: overwrite its value in place
    {
        status = update_camera_metadata_entry(pMetadata, entry.index, &timestamp, 1, NULL);
    }
}
```

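find_camera_metadata_entry is straightforward: it looks up the entry for the given tag and fills in the entry structure passed by the caller. It is followed by add_camera_metadata_entry and add_camera_metadata_entry_raw, which append a new entry: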

```c
int find_camera_metadata_entry(camera_metadata_t *src,
        uint32_t tag,
        camera_metadata_entry_t *entry) {
    if (src == NULL) return ERROR;

    uint32_t index;
    if (src->flags & FLAG_SORTED) { // flags was initialized to 0, so in this example the else branch runs
        // Sorted entries, do a binary search
        camera_metadata_buffer_entry_t *search_entry = NULL;
        camera_metadata_buffer_entry_t key;
        key.tag = tag;
        search_entry = bsearch(&key,
                get_entries(src),
                src->entry_count,
                sizeof(camera_metadata_buffer_entry_t),
                compare_entry_tags);
        if (search_entry == NULL) return NOT_FOUND;
        index = search_entry - get_entries(src);
    } else {
        // Not sorted, linear search
        camera_metadata_buffer_entry_t *search_entry = get_entries(src);
        for (index = 0; index < src->entry_count; index++, search_entry++) { // entry_count is 0: nothing added yet
            if (search_entry->tag == tag) {
                break;
            }
        }
        if (index == src->entry_count) return NOT_FOUND; // so NOT_FOUND is returned
    }

    return get_camera_metadata_entry(src, index, // fill *entry from the entry at `index`
            entry);
}

int add_camera_metadata_entry(camera_metadata_t *dst,
        uint32_t tag,
        const void *data,
        size_t data_count) { // example: (mBuffer, ANDROID_FLASH_MODE, pointer to the value 5, 1)

    int type = get_camera_metadata_tag_type(tag);
    if (type == -1) {
        ALOGE("%s: Unknown tag %04x.", __FUNCTION__, tag);
        return ERROR;
    }

    return add_camera_metadata_entry_raw(dst, // forwards the tag's registered type (TYPE_BYTE here)
            tag,
            type,
            data,
            data_count);
}

// This is the function that does the real work: it stores the caller's tag data in the buffer
static int add_camera_metadata_entry_raw(camera_metadata_t *dst,
        uint32_t tag,
        uint8_t  type,
        const void *data,
        size_t data_count) {

    if (dst == NULL) return ERROR;
    if (dst->entry_count == dst->entry_capacity) return ERROR; // no free entry slot left
    if (data == NULL) return ERROR;

    size_t data_bytes =
            calculate_camera_metadata_entry_data_size(type, data_count); // 1 x 1 byte fits inline, so this is 0
    if (data_bytes + dst->data_count > dst->data_capacity) return ERROR; // data area would overflow

    size_t data_payload_bytes =
            data_count * camera_metadata_type_size[type]; // 1 byte in this example
    camera_metadata_buffer_entry_t *entry = get_entries(dst) + dst->entry_count; // next free entry
    memset(entry, 0, sizeof(camera_metadata_buffer_entry_t)); // clear it
    entry->tag = tag;          // ANDROID_FLASH_MODE
    entry->type = type;        // TYPE_BYTE
    entry->count = data_count; // number of elements (1 here)

    if (data_bytes == 0) {
        memcpy(entry->data.value, data,
                data_payload_bytes); // payloads of at most 4 bytes are stored inline in the entry
    } else {
        entry->data.offset = dst->data_count; // larger payloads go to the data area
        memcpy(get_data(dst) + entry->data.offset, data,
                data_payload_bytes);
        dst->data_count += data_bytes;
    }
    dst->entry_count++;           // one more entry in use
    dst->flags &= ~FLAG_SORTED;   // appending may break tag order
    return OK;                    // ANDROID_FLASH_MODE has now been added
}
```
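The capacity checks in add_camera_metadata_entry_raw() explain why the (2, 0) buffer from the allocation example can only accept values of at most 4 bytes: with data_capacity = 0, any out-of-line payload is rejected. The sketch below follows the same control flow on a fixed-size toy buffer; the toy_* names, the array sizes and the tag numbers in the test are illustrative only.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

enum { TYPE_BYTE, TYPE_INT32, TYPE_FLOAT, TYPE_INT64, TYPE_DOUBLE, TYPE_RATIONAL, NUM_TYPES };
static const size_t kTypeSize[NUM_TYPES] = { 1, 4, 4, 8, 8, 8 };

typedef struct {
    uint32_t tag, count;
    union { uint32_t offset; uint8_t value[4]; } data;
    uint8_t type;
} toy_entry;

typedef struct {  /* capacities must not exceed the array sizes */
    toy_entry entries[4]; size_t entry_count, entry_capacity;
    uint8_t data[32];     size_t data_count, data_capacity;
} toy_metadata;

static size_t toy_data_size(uint8_t type, size_t count) {
    size_t bytes = kTypeSize[type] * count;
    return bytes <= 4 ? 0 : (bytes + 7) & ~(size_t)7; /* round up to 8 */
}

/* Same checks and inline/out-of-line split as add_camera_metadata_entry_raw. */
static int toy_add(toy_metadata *m, uint32_t tag, uint8_t type,
                   const void *data, size_t count) {
    if (m->entry_count == m->entry_capacity || data == NULL) return -1;
    size_t data_bytes = toy_data_size(type, count);
    if (m->data_count + data_bytes > m->data_capacity) return -1;
    size_t payload = kTypeSize[type] * count;
    toy_entry *e = &m->entries[m->entry_count];
    memset(e, 0, sizeof *e);
    e->tag = tag; e->type = type; e->count = (uint32_t)count;
    if (data_bytes == 0) {
        memcpy(e->data.value, data, payload);            /* fits inline */
    } else {
        e->data.offset = (uint32_t)m->data_count;        /* goes to the data area */
        memcpy(m->data + e->data.offset, data, payload);
        m->data_count += data_bytes;
    }
    m->entry_count++;
    return 0;
}
```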

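Updating a parameter: CameraMetadata can write data of each supported type into the entry of the target tag inside its camera_metadata_t. The overload below handles int32_t data: data_count gives the number of elements, and tag selects the entry to update.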

```cpp
status_t CameraMetadata::update(uint32_t tag,
        const int32_t *data, size_t data_count) {
    status_t res;
    if (mLocked) {
        ALOGE("%s: CameraMetadata is locked", __FUNCTION__);
        return INVALID_OPERATION;
    }
    if ( (res = checkType(tag, TYPE_INT32)) != OK) {
        return res;
    }
    return updateImpl(tag, (const void*)data, data_count);
}
```

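checkType() first calls get_camera_metadata_tag_type() to find the type the tag is declared with (each built-in tag's type is fixed by the tag_info tables in camera_metadata_tag_info.c) and compares it with the type of the overload being called; the update proceeds only if they match. In this overload the tag must be of type TYPE_INT32. The lookup functions are: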

```c
const char *get_camera_metadata_tag_name(uint32_t tag) {
    uint32_t tag_section = tag >> 16;
    if (tag_section >= VENDOR_SECTION && vendor_tag_ops != NULL) {
        return vendor_tag_ops->get_tag_name(
            vendor_tag_ops,
            tag);
    }
    if (tag_section >= ANDROID_SECTION_COUNT ||
        tag >= camera_metadata_section_bounds[tag_section][1] ) {
        return NULL;
    }
    uint32_t tag_index = tag & 0xFFFF;               // index of the tag within its section (low 16 bits)
    return tag_info[tag_section][tag_index].tag_name; // select the section table, then the tag entry
}

int get_camera_metadata_tag_type(uint32_t tag) {
    uint32_t tag_section = tag >> 16;
    if (tag_section >= VENDOR_SECTION && vendor_tag_ops != NULL) {
        return vendor_tag_ops->get_tag_type(
            vendor_tag_ops,
            tag);
    }
    if (tag_section >= ANDROID_SECTION_COUNT ||
            tag >= camera_metadata_section_bounds[tag_section][1] ) {
        return -1;
    }
    uint32_t tag_index = tag & 0xFFFF;
    return tag_info[tag_section][tag_index].tag_type;
}
```

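Both functions take the section id from the high 16 bits (tag >> 16), which selects the section's tag_info_t array, and the tag's index within that section from the low 16 bits (tag & 0xFFFF), which selects the tag's own tag_info_t entry. Tags in vendor sections (section >= VENDOR_SECTION) are delegated to vendor_tag_ops.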
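The two-level lookup and the type check built on it can be exercised with a truncated copy of the tables: the first two color-correction tags and the first two control tags, with the types from the tag_info_t excerpt and the enum comments. The bounds are cut to match this truncation, and sketch_check_type() only models the idea of CameraMetadata::checkType(); its return codes are made up for the sketch.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

enum { TYPE_BYTE, TYPE_INT32, TYPE_FLOAT, TYPE_INT64, TYPE_DOUBLE, TYPE_RATIONAL };

typedef struct { const char *tag_name; uint8_t tag_type; } sketch_tag_info_t;

/* Truncated tables: section 0 = android.colorCorrection, section 1 = android.control. */
static const sketch_tag_info_t kSection0[] = { { "mode", TYPE_BYTE }, { "transform", TYPE_RATIONAL } };
static const sketch_tag_info_t kSection1[] = { { "aeAntibandingMode", TYPE_BYTE },
                                               { "aeExposureCompensation", TYPE_INT32 } };
static const sketch_tag_info_t *const kTagInfo[] = { kSection0, kSection1 };
static const uint32_t kSectionBounds[][2] = { { 0x00000, 0x00002 }, { 0x10000, 0x10002 } };
#define SKETCH_SECTION_COUNT 2u

/* Same shape as get_camera_metadata_tag_type(), minus the vendor branch. */
static int sketch_tag_type(uint32_t tag) {
    uint32_t section = tag >> 16;
    if (section >= SKETCH_SECTION_COUNT || tag >= kSectionBounds[section][1]) return -1;
    return kTagInfo[section][tag & 0xFFFF].tag_type;
}

/* 0 if the registered type matches, -1 for an unknown tag, -2 for a mismatch. */
static int sketch_check_type(uint32_t tag, uint8_t expected) {
    int type = sketch_tag_type(tag);
    if (type == -1) return -1;
    return type == expected ? 0 : -2;
}
```

So updating ANDROID_CONTROL_AE_EXPOSURE_COMPENSATION (0x10001) through the int32_t overload passes the check, while passing it to a uint8_t overload would be rejected.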

updateImpl() performs the actual write of the data:

```cpp
status_t CameraMetadata::updateImpl(uint32_t tag, const void *data,
        size_t data_count) {
    status_t res;
    if (mLocked) {
        ALOGE("%s: CameraMetadata is locked", __FUNCTION__);
        return INVALID_OPERATION;
    }
    int type = get_camera_metadata_tag_type(tag);
    if (type == -1) {
        ALOGE("%s: Tag %d not found", __FUNCTION__, tag);
        return BAD_VALUE;
    }
    size_t data_size = calculate_camera_metadata_entry_data_size(type,
            data_count);

    res = resizeIfNeeded(1, data_size); // grow (reallocate) mBuffer if one more entry and its data would not fit

    if (res == OK) {
        camera_metadata_entry_t entry;
        res = find_camera_metadata_entry(mBuffer, tag, &entry);
        if (res == NAME_NOT_FOUND) {
            res = add_camera_metadata_entry(mBuffer,
                    tag, data, data_count); // tag not present yet: append a new entry
        } else if (res == OK) {
            res = update_camera_metadata_entry(mBuffer,
                    entry.index, data, data_count, NULL); // tag present: overwrite its value
        }
    }

    if (res != OK) {
        ALOGE("%s: Unable to update metadata entry %s.%s (%x): %s (%d)",
                __FUNCTION__, get_camera_metadata_section_name(tag),
                get_camera_metadata_tag_name(tag), tag, strerror(-res), res);
    }

    IF_ALOGV() {
        ALOGE_IF(validate_camera_metadata_structure(mBuffer, /*size*/NULL) !=
                 OK,

                 "%s: Failed to validate metadata structure after update %p",
                 __FUNCTION__, mBuffer);
    }

    return res;
}
```
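updateImpl() calls resizeIfNeeded(1, data_size) before the find/add/update step so that the add cannot fail for lack of space. resizeIfNeeded() itself is not shown in this article. The sketch below assumes a doubling policy (when the required count exceeds the capacity, grow to twice the required count, otherwise keep the capacity), which is our understanding of the AOSP implementation but should be checked against the CameraMetadata.cpp of your release. Only the capacity planning is modeled; the real function also allocates a new buffer, appends the old contents and frees the old one. All sketch_* names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

typedef struct { size_t entry_cap, data_cap; int needs_realloc; } sketch_growth;

/* Assumed rule: if used + extra no longer fits, grow to twice the requirement. */
static size_t sketch_grow(size_t used, size_t extra, size_t cap) {
    size_t needed = used + extra;
    return needed > cap ? needed * 2 : cap;
}

/* Plans new entry/data capacities for adding extra_entries and extra_data bytes. */
static sketch_growth sketch_plan_resize(size_t entry_count, size_t entry_cap,
                                        size_t data_count, size_t data_cap,
                                        size_t extra_entries, size_t extra_data) {
    sketch_growth g;
    g.entry_cap = sketch_grow(entry_count, extra_entries, entry_cap);
    g.data_cap = sketch_grow(data_count, extra_data, data_cap);
    g.needs_realloc = g.entry_cap > entry_cap || g.data_cap > data_cap;
    return g;
}
```

Doubling keeps the number of reallocations logarithmic in the number of updates; the flip side, noted in the PS above, is that any entry pointer obtained before a reallocation no longer points into the live buffer.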

The flow diagram is shown below:

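In summary, CameraMetadata parameters are set and read from the Java layer, but the implementation lives in native code. When control parameters are later packed into a CaptureRequest and passed down to native code, it is still the native CameraMetadata that is being operated on.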