1. Overview

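ServiceManager is the daemon at the center of Binder IPC, and it is itself a Binder service. It does not use libbinder's multithreaded model to talk to the Binder driver. Instead, it has its own binder.c that communicates with the driver directly, and it reads and handles transactions in a single loop, binder_loop. The benefit is that the design is simple and efficient.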

ServiceManager's own job is relatively simple: it looks up and registers services. Most Binder IPC traffic actually flows between a BpBinder and a BBinder, for example between ActivityManagerProxy and ActivityManagerService.

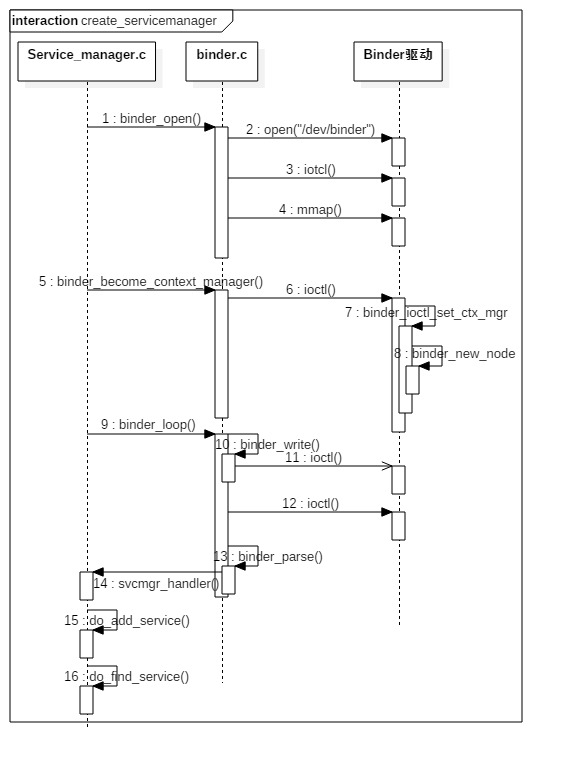

1.1 Flowchart

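Startup consists of the following main stages:

- Open the Binder driver: binder_open;

- Register as the context manager, the steward of all Binder services: binder_become_context_manager;

- Enter an infinite loop that handles requests sent by clients: binder_loop.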

2. Startup Process

ServiceManager is created by the init process when it parses init.rc. Its executable is /system/bin/servicemanager, its source file is service_manager.c, and its process name is /system/bin/servicemanager.

```
service servicemanager /system/bin/servicemanager
    class core
    user system
    group system
    critical
    onrestart restart healthd
    onrestart restart zygote
    onrestart restart media
    onrestart restart surfaceflinger
    onrestart restart drm
```

For the difference between `class core` and `class main` in rc files, see:

http://www.ccbu.cc/index.php/framework/51.html

https://www.cnblogs.com/SaraMoring/p/14393223.html

The entry point of Service Manager is main() in service_manager.c:

2.1 main

```c
// service_manager.c

int main(int argc, char **argv)
{
    struct binder_state *bs;
    // Open the binder driver and map 128 KB of memory [see section 2.2]
    bs = binder_open(128*1024);
    ...

    // Become the context manager [see section 2.3]
    if (binder_become_context_manager(bs)) {
        return -1;
    }

    selinux_enabled = is_selinux_enabled(); // whether SELinux is enabled
    sehandle = selinux_android_service_context_handle();
    selinux_status_open(true);

    if (selinux_enabled > 0) {
        if (sehandle == NULL) {
            abort(); // failed to obtain sehandle
        }
        if (getcon(&service_manager_context) != 0) {
            abort(); // failed to obtain the service_manager context
        }
    }
    ...

    // Enter the infinite loop that handles client requests [see section 2.4]
    binder_loop(bs, svcmgr_handler);
    return 0;
}
```

2.2 binder_open

```c
// servicemanager/binder.c

struct binder_state *binder_open(size_t mapsize)
{
    struct binder_state *bs; // [see section 2.2.1]
    struct binder_version vers;

    bs = malloc(sizeof(*bs));
    if (!bs) {
        errno = ENOMEM;
        return NULL;
    }

    // System call into the kernel to open the Binder device
    bs->fd = open("/dev/binder", O_RDWR);
    if (bs->fd < 0) {
        goto fail_open; // cannot open the binder device
    }

    // Query the binder protocol version via ioctl
    if ((ioctl(bs->fd, BINDER_VERSION, &vers) == -1) ||
        (vers.protocol_version != BINDER_CURRENT_PROTOCOL_VERSION)) {
        goto fail_open; // kernel and user-space binder versions differ
    }

    bs->mapsize = mapsize;
    // Map the driver's buffer with mmap; the size must be a multiple of the page size
    bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);
    if (bs->mapped == MAP_FAILED) {
        goto fail_map; // cannot map the binder device memory
    }

    return bs;

fail_map:
    close(bs->fd);
fail_open:
    free(bs);
    return NULL;
}
```

Opening the binder driver involves the following steps:

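First, open() opens the binder device. Through the system call it enters the Binder driver's binder_open(), which creates a binder_proc object in the driver, stores it in filp->private_data (the struct file behind fd), and adds it to the global list binder_procs. Then ioctl() checks that the user-space binder protocol version matches the driver's.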

Next, mmap() maps memory. Likewise, through the system call it reaches the driver's binder_mmap(), which creates a binder_buffer object in the driver and adds it to the current binder_proc's proc->buffers list.
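The comment in binder_open() notes that the mmap size must be a whole number of pages. As a minimal sketch of that rounding, assuming a power-of-two page size such as the value returned by `sysconf(_SC_PAGESIZE)`, a hypothetical helper `round_up_to_page` (not part of servicemanager) could look like this:

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper (not part of servicemanager): round len up to the
 * next multiple of page_size. page_size must be a power of two, which
 * holds for Linux page sizes such as the value of sysconf(_SC_PAGESIZE). */
static size_t round_up_to_page(size_t len, size_t page_size)
{
    assert(page_size != 0 && (page_size & (page_size - 1)) == 0);
    return (len + page_size - 1) & ~(page_size - 1);
}
```

With 4 KB pages, the 128 * 1024 bytes requested by main() is exactly 32 pages, so no rounding is needed there.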

2.2.1 binder_state

```c
// servicemanager/binder.c

struct binder_state
{
    int fd;          // file descriptor of /dev/binder
    void *mapped;    // start address of the mmap'd region
    size_t mapsize;  // size of the mapping; main() passes 128 KB
};
```

2.3 binder_become_context_manager

```c
// servicemanager/binder.c

int binder_become_context_manager(struct binder_state *bs)
{
    // Send the BINDER_SET_CONTEXT_MGR command via ioctl [see section 2.3.1]
    return ioctl(bs->fd, BINDER_SET_CONTEXT_MGR, 0);
}
```

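This makes ServiceManager the context manager; there is only one such manager in the whole system. The ioctl() call goes through the system call into the Binder driver's binder_ioctl().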

2.3.1 binder_ioctl

```c
// kernel/drivers/android/binder.c

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
{
    int ret;
    ...
    binder_lock(__func__);
    switch (cmd) {
    case BINDER_SET_CONTEXT_MGR:
        ret = binder_ioctl_set_ctx_mgr(filp); // [see section 2.3.2]
        break;
    /* other commands elided */
    }
    binder_unlock(__func__);
    ...
    return ret;
}
```

For BINDER_SET_CONTEXT_MGR, the driver ends up calling binder_ioctl_set_ctx_mgr(); this happens while binder_main_lock is held.

2.3.2 binder_ioctl_set_ctx_mgr

```c
// kernel/drivers/android/binder.c

static int binder_ioctl_set_ctx_mgr(struct file *filp)
{
    int ret = 0;
    struct binder_proc *proc = filp->private_data;
    kuid_t curr_euid = current_euid();

    // Ensure the manager node is created only once
    if (binder_context_mgr_node != NULL) {
        ret = -EBUSY;
        goto out;
    }

    if (uid_valid(binder_context_mgr_uid)) {
        ...
    } else {
        // Record the current thread's euid as the Service Manager uid
        binder_context_mgr_uid = curr_euid;
    }

    // Create the ServiceManager node [see section 2.3.3]
    binder_context_mgr_node = binder_new_node(proc, 0, 0);
    ...
    binder_context_mgr_node->local_weak_refs++;
    binder_context_mgr_node->local_strong_refs++;
    binder_context_mgr_node->has_strong_ref = 1;
    binder_context_mgr_node->has_weak_ref = 1;
out:
    return ret;
}
```

Inside the Binder driver, the following static variables are defined:

```c
// The binder_node that corresponds to service manager
static struct binder_node *binder_context_mgr_node;
// uid of the thread running service manager
static kuid_t binder_context_mgr_uid = INVALID_UID;
```

The driver creates the global binder_node object binder_context_mgr_node and increments both its local strong and local weak reference counts by one.
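The EBUSY check is what keeps the context manager unique. The following user-space model, with hypothetical stand-ins `model_node`, `context_mgr_node` and `model_set_ctx_mgr` for the driver's structures, sketches that behavior: the first call succeeds and takes one strong and one weak local reference, and any later call fails with `-EBUSY`.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Simplified user-space model of binder_ioctl_set_ctx_mgr(). The type and
 * globals are hypothetical stand-ins for the kernel's binder_node and
 * binder_context_mgr_node, not the driver's real definitions. */
struct model_node {
    int local_strong_refs;
    int local_weak_refs;
};

static struct model_node mgr_storage;
static struct model_node *context_mgr_node; /* NULL until the first success */

static int model_set_ctx_mgr(void)
{
    if (context_mgr_node != NULL)
        return -EBUSY;                       /* a manager already exists */
    context_mgr_node = &mgr_storage;
    context_mgr_node->local_weak_refs++;
    context_mgr_node->local_strong_refs++;
    return 0;
}
```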

2.3.3 binder_new_node

```c
// kernel/drivers/android/binder.c

static struct binder_node *binder_new_node(struct binder_proc *proc,
                                           binder_uintptr_t ptr,
                                           binder_uintptr_t cookie)
{
    struct rb_node **p = &proc->nodes.rb_node;
    struct rb_node *parent = NULL;
    struct binder_node *node;
    // The tree is empty on the first call
    while (*p) {
        parent = *p;
        node = rb_entry(parent, struct binder_node, rb_node);

        if (ptr < node->ptr)
            p = &(*p)->rb_left;
        else if (ptr > node->ptr)
            p = &(*p)->rb_right;
        else
            return NULL;
    }

    // Allocate kernel memory for the new binder_node
    node = kzalloc(sizeof(*node), GFP_KERNEL);
    if (node == NULL)
        return NULL;
    binder_stats_created(BINDER_STAT_NODE);
    // Insert the new node into proc's red-black tree
    rb_link_node(&node->rb_node, parent, p);
    rb_insert_color(&node->rb_node, &proc->nodes);
    node->debug_id = ++binder_last_id;
    node->proc = proc;
    node->ptr = ptr;
    node->cookie = cookie;
    node->work.type = BINDER_WORK_NODE; // set the binder_work type
    INIT_LIST_HEAD(&node->work.entry);
    INIT_LIST_HEAD(&node->async_todo);
    return node;
}
```

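This creates a binder_node structure in the Binder driver, inserts it into the proc->nodes red-black tree keyed by ptr, and records the current binder_proc in node->proc. It also initializes two list heads: node->work.entry and node->async_todo.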
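binder_new_node() walks proc->nodes by ptr, returns NULL if a node with that ptr already exists, and otherwise links a new zeroed node at the empty slot it found. The sketch below reproduces that lookup-then-insert walk under one stated simplification: it uses a plain, unbalanced binary search tree (hypothetical `model_node` and `model_new_node`) instead of the kernel's red-black tree, so there is no rebalancing step corresponding to rb_insert_color().

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Simplified model of binder_new_node(): a plain binary search tree keyed
 * by ptr stands in for the kernel's red-black tree (no rebalancing). */
struct model_node {
    uintptr_t ptr;
    uintptr_t cookie;
    struct model_node *left, *right;
};

static struct model_node *model_new_node(struct model_node **root,
                                         uintptr_t ptr, uintptr_t cookie)
{
    struct model_node **p = root;

    while (*p) {                          /* the tree is empty on the first call */
        if (ptr < (*p)->ptr)
            p = &(*p)->left;
        else if (ptr > (*p)->ptr)
            p = &(*p)->right;
        else
            return NULL;                  /* a node for this ptr already exists */
    }
    struct model_node *node = calloc(1, sizeof(*node)); /* zeroed, like kzalloc */
    if (node == NULL)
        return NULL;
    node->ptr = ptr;
    node->cookie = cookie;
    *p = node;                            /* link at the empty slot found above */
    return node;
}
```

ServiceManager's own node is created with ptr = 0 and cookie = 0, so in this model it becomes the root of its process's tree.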

2.4 binder_loop

```c
// servicemanager/binder.c

void binder_loop(struct binder_state *bs, binder_handler func)
{
    int res;
    struct binder_write_read bwr;
    uint32_t readbuf[32];

    bwr.write_size = 0;
    bwr.write_consumed = 0;
    bwr.write_buffer = 0;

    readbuf[0] = BC_ENTER_LOOPER;
    // Send BC_ENTER_LOOPER to the binder driver so Service Manager enters the loop [see section 2.4.1]
    binder_write(bs, readbuf, sizeof(uint32_t));

    for (;;) {
        bwr.read_size = sizeof(readbuf);
        bwr.read_consumed = 0;
        bwr.read_buffer = (uintptr_t) readbuf;

        res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr); // read from the binder driver on every iteration
        if (res < 0) {
            break;
        }

        // Parse the returned binder data [see section 2.5]
        res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);
        if (res == 0) {
            break;
        }
        if (res < 0) {
            break;
        }
    }
}
```

This is the read/write loop. The parameter func, passed in from main(), points to svcmgr_handler.

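binder_write() sends the BC_ENTER_LOOPER command to the binder driver through ioctl(). In that call only the write half of bwr is filled in, so the driver enters binder_thread_write(). The for loop then calls ioctl() with only the read half of bwr filled in, so the driver enters binder_thread_read().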
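Which driver path runs is decided by the two sizes in binder_write_read. A simplified model of that dispatch follows; `model_bwr` mirrors the field names of binder_write_read, while `model_write_read` and its counters are hypothetical. Unlike the real binder_thread_read(), the read branch here does not block waiting for work.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Field names mirror struct binder_write_read; the types are simplified. */
struct model_bwr {
    size_t write_size, write_consumed;
    uintptr_t write_buffer;
    size_t read_size, read_consumed;
    uintptr_t read_buffer;
};

/* Hypothetical instrumentation for this sketch */
static int writes_seen, reads_seen;

static int model_write_read(struct model_bwr *bwr)
{
    if (bwr->write_size > 0) {            /* binder_thread_write() in the driver */
        writes_seen++;
        bwr->write_consumed = bwr->write_size;
    }
    if (bwr->read_size > 0) {             /* binder_thread_read() in the driver */
        reads_seen++;
    }
    return 0;
}
```

The two calls in binder_loop() map onto the two branches: binder_write() sets only write_size (4 bytes for BC_ENTER_LOOPER), and each loop iteration sets only read_size.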

2.4.1 binder_write

```c
// servicemanager/binder.c

int binder_write(struct binder_state *bs, void *data, size_t len)
{
    struct binder_write_read bwr;
    int res;

    bwr.write_size = len;
    bwr.write_consumed = 0;
    bwr.write_buffer = (uintptr_t) data; // here data holds BC_ENTER_LOOPER
    bwr.read_size = 0;
    bwr.read_consumed = 0;
    bwr.read_buffer = 0;
    // [see section 2.4.2]
    res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);
    return res;
}
```

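bwr is initialized from the arguments: write_size is 4 bytes (sizeof(uint32_t)), and write_buffer points to the start of the buffer, which holds the BC_ENTER_LOOPER command code. ioctl() then passes bwr to the binder driver, which handles it in binder_ioctl():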

2.4.2 binder_ioctl

```c
// kernel/drivers/android/binder.c

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
{
    int ret;
    struct binder_proc *proc = filp->private_data;
    struct binder_thread *thread;
    ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);
    ...

    binder_lock(__func__);
    thread = binder_get_thread(proc); // get the binder_thread for the calling thread
    switch (cmd) {
    case BINDER_WRITE_READ: // binder read/write
        ret = binder_ioctl_write_read(filp, cmd, arg, thread); // [see section 2.4.3]
        if (ret)
            goto err;
        break;
    /* other commands elided */
    }
    ret = 0;

err:
    if (thread)
        thread->looper &= ~BINDER_LOOPER_STATE_NEED_RETURN;
    binder_unlock(__func__);
    ...
    return ret;
}
```

2.4.3 binder_ioctl_write_read

```c
// kernel/drivers/android/binder.c

static int binder_ioctl_write_read(struct file *filp,
                                   unsigned int cmd, unsigned long arg,
                                   struct binder_thread *thread)
{
    int ret = 0;
    struct binder_proc *proc = filp->private_data;
    void __user *ubuf = (void __user *)arg;
    struct binder_write_read bwr;

    if (copy_from_user(&bwr, ubuf, sizeof(bwr))) { // copy user-space ubuf into bwr
        ret = -EFAULT;
        goto out;
    }

    if (bwr.write_size > 0) { // the write buffer has data in this call [see section 2.4.4]
        ret = binder_thread_write(proc, thread,
                                  bwr.write_buffer, bwr.write_size, &bwr.write_consumed);
        ...
    }

    if (bwr.read_size > 0) { // read_size is 0 in this call, so this branch is skipped
        ...
    }

    if (copy_to_user(ubuf, &bwr, sizeof(bwr))) { // copy bwr back to user-space ubuf
        ret = -EFAULT;
        goto out;
    }
out:
    return ret;
}
```

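This copies the user-space binder_write_read structure into kernel space, runs the write and/or read path depending on which size is nonzero, and finally copies the updated structure (including the consumed counts) back to user space.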

2.4.4 binder_thread_write

```c
// kernel/drivers/android/binder.c

static int binder_thread_write(struct binder_proc *proc,
                               struct binder_thread *thread,
                               binder_uintptr_t binder_buffer, size_t size,
                               binder_size_t *consumed)
{
    uint32_t cmd;
    void __user *buffer = (void __user *)(uintptr_t)binder_buffer;
    void __user *ptr = buffer + *consumed;
    void __user *end = buffer + size;

    while (ptr < end && thread->return_error == BR_OK) {
        if (get_user(cmd, (uint32_t __user *)ptr)) // read the next command
            return -EFAULT;
        ptr += sizeof(uint32_t);                   // advance past the command
        switch (cmd) {
        case BC_ENTER_LOOPER:
            // Mark this thread's looper state as entered
            thread->looper |= BINDER_LOOPER_STATE_ENTERED;
            break;
        /* other commands elided */
        }
        *consumed = ptr - buffer;                  // report progress to user space
    }
    return 0;
}
```

The command read from bwr.write_buffer here is BC_ENTER_LOOPER. So the main effect of the binder_write() call from user space is to set the current thread's looper state to BINDER_LOOPER_STATE_ENTERED.
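binder_thread_write() treats the write buffer as a stream of 32-bit commands and advances `*consumed` past each one. Below is a user-space sketch of that loop. The values for BC_ENTER_LOOPER and BINDER_LOOPER_STATE_ENTERED are hypothetical placeholders (the real ones come from the binder headers), `model_thread_write` is a hypothetical name, and memcpy() stands in for get_user().

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical placeholder values, not the real binder definitions */
enum { MODEL_BC_ENTER_LOOPER = 1 };
enum { MODEL_LOOPER_STATE_ENTERED = 0x02 };

/* Walk 32-bit commands in buffer, updating *consumed after each one.
 * Returns 0 on success, -1 on a command this model does not know. */
static int model_thread_write(uint32_t *looper, const void *buffer,
                              size_t size, size_t *consumed)
{
    const char *ptr = (const char *)buffer + *consumed;
    const char *end = (const char *)buffer + size;

    while (end - ptr >= (ptrdiff_t)sizeof(uint32_t)) {
        uint32_t cmd;
        memcpy(&cmd, ptr, sizeof(cmd));   /* stands in for get_user() */
        ptr += sizeof(uint32_t);
        switch (cmd) {
        case MODEL_BC_ENTER_LOOPER:
            *looper |= MODEL_LOOPER_STATE_ENTERED;
            break;
        default:
            return -1;
        }
        *consumed = (size_t)(ptr - (const char *)buffer);
    }
    return 0;
}
```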

2.5 binder_parse

```c
// servicemanager/binder.c

int binder_parse(struct binder_state *bs, struct binder_io *bio,
                 uintptr_t ptr, size_t size, binder_handler func)
{
    int r = 1;
    uintptr_t end = ptr + (uintptr_t) size;

    while (ptr < end) {
        uint32_t cmd = *(uint32_t *) ptr;
        ptr += sizeof(uint32_t);
        switch(cmd) {
        case BR_NOOP: // no-op; break leaves only the switch, parsing continues
            break;
        case BR_TRANSACTION_COMPLETE:
            break;
        case BR_INCREFS:
        case BR_ACQUIRE:
        case BR_RELEASE:
        case BR_DECREFS:
            ptr += sizeof(struct binder_ptr_cookie);
            break;
        case BR_TRANSACTION: {
            struct binder_transaction_data *txn = (struct binder_transaction_data *) ptr;
            ...
            binder_dump_txn(txn);
            if (func) {
                unsigned rdata[256/4];
                struct binder_io msg;
                struct binder_io reply;
                int res;
                // [see section 2.5.1]
                bio_init(&reply, rdata, sizeof(rdata), 4);
                bio_init_from_txn(&msg, txn); // build a binder_io view of txn's data
                // [see section 2.6]
                res = func(bs, txn, &msg, &reply);
                // [see section 3.4]
                binder_send_reply(bs, &reply, txn->data.ptr.buffer, res);
            }
            ptr += sizeof(*txn);
            break;
        }
        case BR_REPLY: {
            struct binder_transaction_data *txn = (struct binder_transaction_data *) ptr;
            ...
            binder_dump_txn(txn);
            if (bio) {
                bio_init_from_txn(bio, txn);
                bio = 0;
            }
            ptr += sizeof(*txn);
            r = 0;
            break;
        }
        case BR_DEAD_BINDER: {
            struct binder_death *death = (struct binder_death *)(uintptr_t) *(binder_uintptr_t *)ptr;
            ptr += sizeof(binder_uintptr_t);
            // binder death notification [see section 3.3]
            death->func(bs, death->ptr);
            break;
        }
        case BR_FAILED_REPLY:
            r = -1;
            break;
        case BR_DEAD_REPLY:
            r = -1;
            break;
        default:
            return -1;
        }
    }
    return r;
}
```

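This parses the data read from the driver. The parameter ptr points to readbuf, which after the BINDER_WRITE_READ ioctl holds the BR_* return commands written by the driver (readbuf[0] held BC_ENTER_LOOPER only for the earlier binder_write() call). func points to svcmgr_handler, so whenever a request (BR_TRANSACTION) arrives, svcmgr_handler is called.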
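binder_parse() is a loop over BR_* commands, each followed by a command-specific payload that must be consumed or skipped. The sketch below models that loop with hypothetical command values, a hypothetical 8-byte reference-count payload, and a one-field stand-in for binder_transaction_data; `model_parse` is a hypothetical name and `demo_handler` plays the role of svcmgr_handler.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical command values; the real BR_* codes are built with _IO*()
 * macros in the binder UAPI header. */
enum { M_BR_NOOP = 1, M_BR_TRANSACTION_COMPLETE, M_BR_INCREFS, M_BR_TRANSACTION };

struct m_txn { uint32_t code; };      /* one-field stand-in for binder_transaction_data */
typedef int (*m_handler)(const struct m_txn *txn);

static int transactions_handled;
static uint32_t last_code;

/* Example handler playing the role of svcmgr_handler */
static int demo_handler(const struct m_txn *txn)
{
    transactions_handled++;
    last_code = txn->code;
    return 0;
}

/* Returns 1 to keep looping, -1 on an unknown command or truncated payload */
static int model_parse(const void *buf, size_t size, m_handler func)
{
    const char *ptr = buf;
    const char *end = ptr + size;

    while (end - ptr >= (ptrdiff_t)sizeof(uint32_t)) {
        uint32_t cmd;
        memcpy(&cmd, ptr, sizeof(cmd));
        ptr += sizeof(uint32_t);
        switch (cmd) {
        case M_BR_NOOP:                   /* nothing to do; keep parsing */
        case M_BR_TRANSACTION_COMPLETE:
            break;
        case M_BR_INCREFS:                /* skip a hypothetical 8-byte payload */
            if (end - ptr < 8)
                return -1;
            ptr += 8;
            break;
        case M_BR_TRANSACTION: {
            struct m_txn txn;
            if (end - ptr < (ptrdiff_t)sizeof(txn))
                return -1;
            memcpy(&txn, ptr, sizeof(txn));
            if (func)
                func(&txn);               /* svcmgr_handler in the real loop */
            ptr += sizeof(txn);
            break;
        }
        default:
            return -1;
        }
    }
    return 1;
}
```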

2.5.1 bio_init

```c
// servicemanager/binder.c

void bio_init(struct binder_io *bio, void *data,
              size_t maxdata, size_t maxoffs)
{
    size_t n = maxoffs * sizeof(size_t);
    if (n > maxdata) {
        ...
    }

    bio->data = bio->data0 = (char *) data + n;
    bio->offs = bio->offs0 = data;
    bio->data_avail = maxdata - n;
    bio->offs_avail = maxoffs;
    bio->flags = 0;
}
```

Here binder_io is defined as:

```c
struct binder_io
{
    char *data;            /* pointer to read/write from */
    binder_size_t *offs;   /* array of offsets */
    size_t data_avail;     /* bytes available in data buffer */
    size_t offs_avail;     /* entries available in offsets array */

    char *data0;           // start of the data buffer
    binder_size_t *offs0;  // start of the offsets array
    uint32_t flags;
    uint32_t unused;
};
```
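bio_init() splits one caller-supplied buffer into an offsets array at the front and a data area behind it. The sketch restates that arithmetic with a trimmed, hypothetical `model_bio` struct and a hypothetical `model_bio_init`, which returns -1 in place of the elided error handling when the offsets alone do not fit.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Trimmed, hypothetical copy of struct binder_io with only the fields used */
struct model_bio {
    char *data, *data0;
    size_t *offs, *offs0;
    size_t data_avail, offs_avail;
};

static int model_bio_init(struct model_bio *bio, void *data,
                          size_t maxdata, size_t maxoffs)
{
    size_t n = maxoffs * sizeof(size_t);  /* bytes reserved for offsets */
    if (n > maxdata)
        return -1;                        /* offsets alone would not fit */
    bio->data = bio->data0 = (char *)data + n;
    bio->offs = bio->offs0 = data;
    bio->data_avail = maxdata - n;
    bio->offs_avail = maxoffs;
    return 0;
}
```

For the reply buffer in binder_parse() (256 bytes, 4 offsets), a 64-bit build with an 8-byte size_t reserves 32 bytes for offsets and leaves 224 bytes for data.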

2.5.2 bio_init_from_txn

```c
// servicemanager/binder.c

void bio_init_from_txn(struct binder_io *bio, struct binder_transaction_data *txn)
{
    bio->data = bio->data0 = (char *)(intptr_t)txn->data.ptr.buffer;
    bio->offs = bio->offs0 = (binder_size_t *)(intptr_t)txn->data.ptr.offsets;
    bio->data_avail = txn->data_size;
    bio->offs_avail = txn->offsets_size / sizeof(size_t);
    bio->flags = BIO_F_SHARED;
}
```

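This points msg's data and offsets at the transaction's buffers (txn->data.ptr.buffer and txn->data.ptr.offsets); txn itself is the transaction descriptor that was read into readbuf.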
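Once msg wraps the transaction buffer, svcmgr_handler() reads fields from it in order with bio_get_uint32() and bio_get_string16(). A minimal sequential reader in that spirit follows. The `reader` type and its functions are hypothetical, and the string layout (a uint32 length, then len + 1 UTF-16 units, padded to a 4-byte boundary) is an assumption of this sketch.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical sequential reader over a received data buffer */
struct reader {
    const char *data;
    size_t avail;
};

/* Take size bytes (rounded up to 4), or NULL if not enough data remains */
static const void *reader_get(struct reader *r, size_t size)
{
    size = (size + 3) & ~(size_t)3;
    if (size > r->avail)
        return NULL;
    const void *p = r->data;
    r->data += size;
    r->avail -= size;
    return p;
}

/* Returns 0 when the buffer is exhausted */
static uint32_t reader_get_uint32(struct reader *r)
{
    const void *p = reader_get(r, sizeof(uint32_t));
    uint32_t v = 0;
    if (p)
        memcpy(&v, p, sizeof(v));
    return v;
}

/* Assumed layout: uint32 length, then (len + 1) UTF-16 units */
static const uint16_t *reader_get_string16(struct reader *r, size_t *len)
{
    *len = reader_get_uint32(r);
    return reader_get(r, (*len + 1) * sizeof(uint16_t));
}
```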

2.6 svcmgr_handler

```c
// service_manager.c

int svcmgr_handler(struct binder_state *bs,
                   struct binder_transaction_data *txn,
                   struct binder_io *msg,
                   struct binder_io *reply)
{
    struct svcinfo *si; // [see section 2.6.1]
    uint16_t *s;
    size_t len;
    uint32_t handle;
    uint32_t strict_policy;
    int allow_isolated;
    ...

    strict_policy = bio_get_uint32(msg);
    s = bio_get_string16(msg, &len); // interface descriptor
    ...

    switch(txn->code) {
    case SVC_MGR_GET_SERVICE:
    case SVC_MGR_CHECK_SERVICE:
        s = bio_get_string16(msg, &len); // service name
        // Look up the service by name [see section 3.1]
        handle = do_find_service(bs, s, len, txn->sender_euid, txn->sender_pid);
        // [see section 3.1.2]
        bio_put_ref(reply, handle);
        return 0;

    case SVC_MGR_ADD_SERVICE:
        s = bio_get_string16(msg, &len); // service name
        handle = bio_get_ref(msg); // handle [see section 3.2.3]
        allow_isolated = bio_get_uint32(msg) ? 1 : 0;
        // Register the service [see section 3.2]
        if (do_add_service(bs, s, len, handle, txn->sender_euid,
                           allow_isolated, txn->sender_pid))
            return -1;
        break;

    case SVC_MGR_LIST_SERVICES: {
        uint32_t n = bio_get_uint32(msg);

        if (!svc_can_list(txn->sender_pid)) {
            return -1;
        }
        si = svclist;
        while ((n-- > 0) && si)
            si = si->next;
        if (si) {
            bio_put_string16(reply, si->name);
            return 0;
        }
        return -1;
    }
    default:
        return -1;
    }

    bio_put_uint32(reply, 0);
    return 0;
}
```

This function handles three kinds of requests: looking up a service, registering a service, and listing services.
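svcmgr_handler() is essentially a switch on txn->code. The toy dispatcher below keeps that shape but replaces the binder_io plumbing with plain arguments, ASCII names, and a fixed-size table. The code values, `toy_handler`, and `toy_reply` are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical transaction codes for this toy */
enum { T_GET_SERVICE = 1, T_CHECK_SERVICE, T_ADD_SERVICE, T_LIST_SERVICES };

#define MAX_SVC 8
static struct { char name[32]; uint32_t handle; } table[MAX_SVC];
static unsigned table_len;

struct toy_reply { uint32_t handle; const char *name; };

/* Returns 0 on success, -1 on failure, like svcmgr_handler's return value */
static int toy_handler(uint32_t code, const char *name, uint32_t arg,
                       struct toy_reply *reply)
{
    switch (code) {
    case T_GET_SERVICE:
    case T_CHECK_SERVICE:
        for (unsigned i = 0; i < table_len; i++)
            if (strcmp(table[i].name, name) == 0) {
                reply->handle = table[i].handle;
                return 0;
            }
        reply->handle = 0;                /* 0 means "not found" in this toy */
        return 0;
    case T_ADD_SERVICE:                   /* arg carries the handle to register */
        if (table_len == MAX_SVC || strlen(name) >= sizeof(table[0].name))
            return -1;
        strcpy(table[table_len].name, name);
        table[table_len].handle = arg;
        table_len++;
        return 0;
    case T_LIST_SERVICES:                 /* arg is the index being requested */
        if (arg >= table_len)
            return -1;
        reply->name = table[arg].name;
        return 0;
    default:
        return -1;
    }
}
```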

2.6.1 svcinfo

```c
struct svcinfo
{
    struct svcinfo *next;
    uint32_t handle;          // handle of the service
    struct binder_death death;
    int allow_isolated;
    size_t len;               // length of the name
    uint16_t name[0];         // service name
};
```

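Each service is represented by a svcinfo structure. Its handle is assigned during service registration: when the service's binder object is passed to ServiceManager, the Binder driver creates a reference to it in ServiceManager's process, and that reference's descriptor is the handle stored here.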
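svcinfo nodes form a singly linked list (svclist) with the UTF-16 name stored inline at the end of each node. The sketch below shows lookup and insertion over such a list. It uses a C99 flexible array member `name[]` in place of the GNU-style `name[0]`, omits the death and allow_isolated fields, and its helpers `find_svc_sketch` and `add_svc_sketch` are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

struct svc {
    struct svc *next;
    uint32_t handle;
    size_t len;              /* name length in UTF-16 units */
    uint16_t name[];         /* C99 flexible array member */
};

static struct svc *find_svc_sketch(struct svc *list, const uint16_t *s, size_t len)
{
    for (struct svc *si = list; si; si = si->next)
        if (si->len == len && memcmp(si->name, s, len * sizeof(uint16_t)) == 0)
            return si;
    return NULL;
}

static struct svc *add_svc_sketch(struct svc **list, const uint16_t *s,
                                  size_t len, uint32_t handle)
{
    /* one allocation holds the node plus len + 1 name units */
    struct svc *si = malloc(sizeof(*si) + (len + 1) * sizeof(uint16_t));
    if (!si)
        return NULL;
    si->handle = handle;
    si->len = len;
    memcpy(si->name, s, len * sizeof(uint16_t));
    si->name[len] = 0;       /* keep a NUL terminator after the name */
    si->next = *list;        /* push at the head of the list */
    *list = si;
    return si;
}
```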

As of Android R, this code has been removed entirely; the new ServiceManager implementation remains to be worked through.

Original article: http://gityuan.com/tags/#binder