Hive can be deployed in three modes: 1) embedded, 2) local, and 3) remote server.

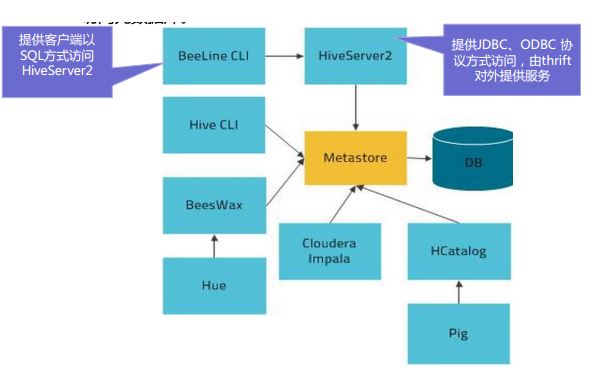

This post configures the third mode, remote server, as shown in the figure:

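My environment consists of three virtual machines: Host0, Host2, and Host3. In remote server mode, the roles are assigned as follows:

- Host0: HiveServer2 (hive-server2)
- Host2: Hive metastore (hive-metastore)
- Host3: MySQL server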
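The host layout above determines the three connection strings used in later steps. As a minimal sketch (the `HiveTopology` class is hypothetical, not part of Hive), the following Python derives them from the host names and the ports that appear in this post (MySQL 3306, metastore 9083, HiveServer2 10000):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HiveTopology:
    # Hypothetical container for the host roles described above
    hiveserver2_host: str
    metastore_host: str
    mysql_host: str
    mysql_port: int = 3306
    metastore_port: int = 9083
    hiveserver2_port: int = 10000

    def metastore_db_url(self, db: str = "hive") -> str:
        # Value for javax.jdo.option.ConnectionURL on the metastore host
        return (f"jdbc:mysql://{self.mysql_host}:{self.mysql_port}/{db}"
                "?characterEncoding=UTF-8")

    def metastore_uri(self) -> str:
        # Value for hive.metastore.uris on the HiveServer2 host
        return f"thrift://{self.metastore_host}:{self.metastore_port}"

    def hiveserver2_jdbc_url(self) -> str:
        # URL a beeline client passes to !connect
        return f"jdbc:hive2://{self.hiveserver2_host}:{self.hiveserver2_port}"


topology = HiveTopology("Host0", "Host2", "Host3")
print(topology.metastore_db_url())      # jdbc:mysql://Host3:3306/hive?characterEncoding=UTF-8
print(topology.metastore_uri())         # thrift://Host2:9083
print(topology.hiveserver2_jdbc_url())  # jdbc:hive2://Host0:10000
```

Keeping the host names in one place means a renamed VM only has to be changed once.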

1. Install hive-server2 on Host0, hive-metastore on Host2, and mysql-server on Host3:

```bash
# On Host0
yum install hive-server2 -y

# On Host2
yum install hive-metastore -y

# On Host3
yum install mysql-server -y
```

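2. On the MySQL server (Host3), create the hive database and the hive user, and allow the hive user to log in remotely (start MySQL first with `service mysqld start` if it is not already running):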

```sql
CREATE DATABASE hive;
-- '%' lets the hive user connect from any host, e.g. the metastore on Host2
CREATE USER 'hive'@'%' IDENTIFIED BY '123456';
GRANT ALL PRIVILEGES ON *.* TO 'hive'@'%';
FLUSH PRIVILEGES;
```

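3. On the hive-metastore host (Host2), edit the Hive configuration so that the metastore can connect to the MySQL server.

Hive configuration file: /etc/hive/conf/hive-site.xml

Add the following properties to hive-site.xml, inside its `<configuration>` element: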

```xml
<property>
  <name>javax.jdo.option.ConnectionURL</name>
  <value>jdbc:mysql://Host3:3306/hive?characterEncoding=UTF-8</value>
  <description>JDBC connect string for a JDBC metastore</description>
</property>
<property>
  <name>javax.jdo.option.ConnectionDriverName</name>
  <value>com.mysql.jdbc.Driver</value>
  <description>Driver class name for a JDBC metastore</description>
</property>
<property>
  <name>javax.jdo.option.ConnectionUserName</name>
  <value>hive</value>
</property>
<property>
  <name>javax.jdo.option.ConnectionPassword</name>
  <value>123456</value>
</property>
<property>
  <name>hive.metastore.warehouse.dir</name>
  <!-- base hdfs path -->
  <value>/user/hive/warehouse</value>
  <description>location of default database for the warehouse</description>
</property>
```
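When several hosts need similar files, generating hive-site.xml can be less error-prone than hand-editing it. The following is a sketch using Python's standard `xml.etree.ElementTree`; `build_hive_site` and `read_hive_site` are hypothetical helpers, and the values are the ones from this step:

```python
import xml.etree.ElementTree as ET

# Property values from this step (hosts and password as used in this post)
METASTORE_PROPS = {
    "javax.jdo.option.ConnectionURL":
        "jdbc:mysql://Host3:3306/hive?characterEncoding=UTF-8",
    "javax.jdo.option.ConnectionDriverName": "com.mysql.jdbc.Driver",
    "javax.jdo.option.ConnectionUserName": "hive",
    "javax.jdo.option.ConnectionPassword": "123456",
    "hive.metastore.warehouse.dir": "/user/hive/warehouse",
}


def build_hive_site(props: dict) -> ET.ElementTree:
    # Wrap each name/value pair in <property> under one <configuration> root
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    tree = ET.ElementTree(root)
    if hasattr(ET, "indent"):  # ET.indent is available on Python 3.9+
        ET.indent(tree)
    return tree


def read_hive_site(xml_text: str) -> dict:
    # Parse hive-site.xml text back into a {name: value} dict
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


tree = build_hive_site(METASTORE_PROPS)
xml_text = ET.tostring(tree.getroot(), encoding="unicode")
print(xml_text)
```

To save the result, `tree.write("hive-site.xml", encoding="utf-8", xml_declaration=True)` would work; merging into an existing file that already holds other properties is left out of this sketch. ElementTree also escapes characters such as `&`, which matters if the JDBC URL later gains more query parameters.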

4. Create the hive.metastore.warehouse.dir directory on HDFS and change its ownership to the hive user:

```bash
sudo -u hdfs hadoop fs -mkdir -p /user/hive/warehouse
sudo -u hdfs hadoop fs -chown -R hive:hive /user/hive
```

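5. On the hive-server2 host (Host0), edit the Hive configuration so that HiveServer2 can connect to hive-metastore.

Hive configuration file: /etc/hive/conf/hive-site.xml

Add the following property to hive-site.xml: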

```xml
<property>
  <name>hive.metastore.uris</name>
  <value>thrift://Host2:9083</value>
</property>
```
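`hive.metastore.uris` takes a comma-separated list of `thrift://host:port` URIs, so HiveServer2 can be pointed at more than one metastore. A small Python sketch that validates such a value before it goes into hive-site.xml; `parse_metastore_uris` is a hypothetical helper:

```python
from urllib.parse import urlsplit


def parse_metastore_uris(value: str) -> list:
    # Split a hive.metastore.uris value into (host, port) tuples
    endpoints = []
    for raw in value.split(","):
        raw = raw.strip()
        if not raw:
            continue
        parts = urlsplit(raw)
        if parts.scheme != "thrift":
            raise ValueError(f"expected a thrift:// URI, got {raw!r}")
        # .port raises ValueError for a non-numeric or out-of-range port
        if parts.hostname is None or parts.port is None:
            raise ValueError(f"missing host or port in {raw!r}")
        # urlsplit lower-cases the host name; DNS names are case-insensitive
        endpoints.append((parts.hostname, parts.port))
    return endpoints


print(parse_metastore_uris("thrift://Host2:9083"))  # [('host2', 9083)]
```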

6. Start hive-server2 on Host0 and hive-metastore on Host2:

```bash
# On Host0
service hive-server2 start

# On Host2
service hive-metastore start
```
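Before moving on to the beeline test, it can help to confirm that both services accept connections. A sketch in Python; `is_listening` is a hypothetical helper, and an open port only shows that something is listening, not that Hive is healthy:

```python
import socket


def is_listening(host: str, port: int, timeout: float = 3.0) -> bool:
    # True if a TCP connection to host:port succeeds within the timeout.
    # Unresolvable names, refused connections and timeouts are all OSError.
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `is_listening("Host2", 9083)` checks the metastore and `is_listening("Host0", 10000)` checks HiveServer2, run from a machine that can resolve those host names.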

7. Test from another virtual machine (Hive must be installed on it so that the beeline client is available):

```
[root@client ~]# beeline
which: no hbase in (/usr/lib64/qt-3.3/bin:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/usr/java/jdk1.8.0_60/bin:/root/bin)
Beeline version 1.1.0-cdh5.8.0 by Apache Hive
beeline> !connect jdbc:hive2://Host0:10000
scan complete in 11ms
Connecting to jdbc:hive2://Host0:10000
Enter username for jdbc:hive2://Host0:10000: 1
Enter password for jdbc:hive2://Host0:10000: *
Connected to: Apache Hive (version 1.1.0-cdh5.8.0)
Driver: Hive JDBC (version 1.1.0-cdh5.8.0)
Transaction isolation: TRANSACTION_REPEATABLE_READ
0: jdbc:hive2://Host0:10000> show tables;
INFO : Compiling command(queryId=hive_20160912015858_bd9495d2-191f-429c-bf01-a165821b6d9a): show tables
INFO : Semantic Analysis Completed
INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:tab_name, type:string, comment:from deserializer)], properties:null)
INFO : Completed compiling command(queryId=hive_20160912015858_bd9495d2-191f-429c-bf01-a165821b6d9a); Time taken: 0.027 seconds
INFO : Concurrency mode is disabled, not creating a lock manager
INFO : Executing command(queryId=hive_20160912015858_bd9495d2-191f-429c-bf01-a165821b6d9a): show tables
INFO : Starting task [Stage-0:DDL] in serial mode
INFO : Completed executing command(queryId=hive_20160912015858_bd9495d2-191f-429c-bf01-a165821b6d9a); Time taken: 0.061 seconds
INFO : OK
+-----------+--+
| tab_name  |
+-----------+--+
+-----------+--+
No rows selected (0.389 seconds)
```

Note: if HiveServer2 is not configured with Kerberos, the password entered above is not checked, so any value works.
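The interactive session above can also be scripted. Beeline accepts `-u` (JDBC URL), `-n` (user name), `-p` (password) and `-e` (query to run). The sketch below builds such a command in Python; `beeline_command` and `run_beeline` are hypothetical helpers, and the default user name `hive` is an assumption:

```python
import subprocess


def beeline_command(host: str, query: str, port: int = 10000,
                    user: str = "hive", password: str = "any") -> list:
    # Without Kerberos the password is not verified, so a placeholder is used
    url = f"jdbc:hive2://{host}:{port}"
    return ["beeline", "-u", url, "-n", user, "-p", password, "-e", query]


def run_beeline(host: str, query: str) -> str:
    # Needs the beeline client on PATH, as on the test VM in step 7;
    # raises CalledProcessError if beeline exits with a non-zero status
    result = subprocess.run(beeline_command(host, query),
                            capture_output=True, text=True, check=True)
    return result.stdout
```

On the client VM, `print(run_beeline("Host0", "show tables;"))` should reproduce the table listing shown above.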

8. Create a table in Hive and load data into it:

```sql
CREATE TABLE test(id INT, A INT, B INT, C INT)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

LOAD DATA INPATH '/user/sqoop/sample' INTO TABLE test;
```

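The data under /user/sqoop/sample consists of comma-separated lines such as `11419,9,160,48`.

After `LOAD DATA INPATH '/user/sqoop/sample' INTO TABLE test;` runs, the files under /user/sqoop/sample are moved (not copied) into /user/hive/warehouse/test, leaving the /user/sqoop/sample directory empty.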
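To make the `ROW FORMAT DELIMITED FIELDS TERMINATED BY ','` clause concrete, the following Python sketch maps one line of the sample data onto the four INT columns of `test`. `parse_row` and `to_hive_int` are hypothetical helpers that only approximate Hive's default text SerDe: missing or non-numeric fields become NULL (`None` here), as do values outside the 32-bit INT range.

```python
# Hive stores column names in lower case, so the keys are lower case here too
COLUMNS = ("id", "a", "b", "c")
INT_MIN, INT_MAX = -2**31, 2**31 - 1


def to_hive_int(raw):
    # Missing, non-numeric, or out-of-range values read back as NULL (None)
    if raw is None:
        return None
    try:
        value = int(raw)
    except ValueError:
        return None
    return value if INT_MIN <= value <= INT_MAX else None


def parse_row(line: str, delimiter: str = ",") -> dict:
    fields = line.rstrip("\n").split(delimiter)
    # Extra trailing fields are ignored; missing ones become None
    return {name: to_hive_int(fields[i] if i < len(fields) else None)
            for i, name in enumerate(COLUMNS)}


print(parse_row("11419,9,160,48"))  # {'id': 11419, 'a': 9, 'b': 160, 'c': 48}
```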