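In this tutorial, you will learn how to train a network using transfer learning. You can read more about transfer learning in the cs231n course notes.

Quoting these notes:

In practice, very few people train an entire convolutional network from scratch (with random initialization), because it is rare to have a dataset of sufficient size. The common approach is to pretrain a ConvNet on a very large dataset (for example ImageNet, which contains 1.2 million images in 1000 categories) and then use that ConvNet either as an initialization or as a fixed feature extractor for the task of interest.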

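The two main forms of transfer learning are:

- Finetuning the ConvNet: instead of random initialization, we initialize the network with the parameters of a pretrained network. The rest of the training proceeds as usual.

- ConvNet as a fixed feature extractor: we freeze the weights of the whole network except the final fully connected layer. That last layer is replaced with a new, randomly initialized layer, and only this layer is trained.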

Import the required libraries:

    import torch
    import torch.nn as nn
    import torch.optim as optim
    from torch.optim import lr_scheduler
    import torchvision
    from torchvision import datasets, models, transforms
    import matplotlib.pyplot as plt
    import time
    import os
    import copy

    plt.ion()  # interactive mode

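Load the data:

We will use the torchvision and torch.utils.data packages to load the data.

The problem we want to solve today is training a model to classify ants and bees. We have about 120 training images each for ants and bees, and 75 validation images per class. This is usually a very small dataset: a model trained on it from scratch would not generalize well. With transfer learning, we should be able to generalize reasonably well.

This dataset is a very small subset of ImageNet.

Note: download the data from here and extract it to the current directory.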
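`datasets.ImageFolder` expects one subdirectory per class inside each split directory and assigns class indices by sorted directory name. The sketch below uses only the standard library to build an empty stand-in for the extracted archive and mimic that class discovery. The `ants` and `bees` folder names and the helper `find_classes` are assumptions made for illustration; the sketch does not call torchvision.

```python
import os
import tempfile

def find_classes(split_dir):
    """Mimic ImageFolder: class names are the sorted subdirectory names."""
    classes = sorted(
        entry.name for entry in os.scandir(split_dir) if entry.is_dir()
    )
    class_to_idx = {name: idx for idx, name in enumerate(classes)}
    return classes, class_to_idx

# Build an empty stand-in for the extracted archive (assumed layout).
root = tempfile.mkdtemp()
for split in ("train", "val"):
    for cls in ("bees", "ants"):
        os.makedirs(os.path.join(root, "hymenoptera_data", split, cls))

train_dir = os.path.join(root, "hymenoptera_data", "train")
classes, class_to_idx = find_classes(train_dir)
print(classes)
print(class_to_idx)
```

Because the names are sorted, the order in which the folders were created does not affect the label indices.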

    # Data augmentation and normalization for training;
    # only resizing, center cropping and normalization for validation.
    data_transforms = {
        'train': transforms.Compose([
            transforms.RandomResizedCrop(224),
            transforms.RandomHorizontalFlip(),
            transforms.ToTensor(),
            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
        ]),
        'val': transforms.Compose([
            transforms.Resize(256),
            transforms.CenterCrop(224),
            transforms.ToTensor(),
            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
        ])
    }

    data_dir = 'hymenoptera_data'
    image_datasets = {x: datasets.ImageFolder(os.path.join(data_dir, x), data_transforms[x])
                      for x in ['train', 'val']}
    dataloaders = {x: torch.utils.data.DataLoader(image_datasets[x], batch_size=4,
                                                  shuffle=True, num_workers=0)
                   for x in ['train', 'val']}
    dataset_size = {x: len(image_datasets[x]) for x in ['train', 'val']}
    class_name = image_datasets['train'].classes

    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
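To make the validation pipeline concrete, here is a small standard-library sketch of the geometry behind `Resize(256)` followed by `CenterCrop(224)`: the shorter side is scaled to 256 while keeping the aspect ratio, then a centered 224x224 window is cut out. The 500x375 input size, the helper names, and the integer truncation are illustrative assumptions; torchvision's exact rounding may differ by a pixel.

```python
def resize_shorter_side(width, height, target=256):
    """Scale so the shorter side equals target, keeping the aspect ratio."""
    if width <= height:
        return target, int(target * height / width)
    return int(target * width / height), target

def center_crop_box(width, height, crop=224):
    """Return (left, top, right, bottom) of a centered crop x crop window."""
    left = (width - crop) // 2
    top = (height - crop) // 2
    return left, top, left + crop, top + crop

# Hypothetical 500x375 input image.
resized = resize_shorter_side(500, 375)
box = center_crop_box(*resized)
print(resized)  # (341, 256)
print(box)      # (58, 16, 282, 240)
```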

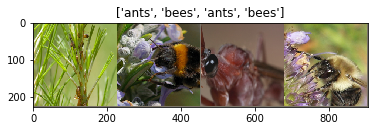

Visualize a few images

Let's visualize a few training images to get a feel for the data.

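    import numpy as np

    def imshow(inp, title=None):
        """Imshow for Tensor."""
        # Move channels last: (C, H, W) -> (H, W, C)
        inp = inp.numpy().transpose((1, 2, 0))
        mean = np.array([0.485, 0.456, 0.406])
        std = np.array([0.229, 0.224, 0.225])
        # Undo the normalization: input * std + mean
        inp = std * inp + mean
        # Clip values to the range [0, 1]
        inp = np.clip(inp, 0, 1)
        plt.imshow(inp)
        if title is not None:
            plt.title(title)
        plt.pause(0.001)  # pause briefly so the plot gets updated

    # Get a batch of training data
    inputs, classes = next(iter(dataloaders['train']))

    # Make a grid from the batch
    out = torchvision.utils.make_grid(inputs)

    imshow(out, title=[class_name[x] for x in classes])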
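The arithmetic inside `imshow` is the inverse of `transforms.Normalize`: normalization computes `(x - mean) / std` per channel, so display requires `x * std + mean`, followed by clipping to `[0, 1]`. A minimal pure-Python sketch on a single RGB pixel, with hypothetical pixel values and helper names:

```python
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)

def normalize(pixel):
    """Per-channel (x - mean) / std, as transforms.Normalize does."""
    return tuple((c - m) / s for c, m, s in zip(pixel, MEAN, STD))

def denormalize(pixel):
    """Per-channel x * std + mean, then clip to [0, 1] for display."""
    return tuple(
        min(1.0, max(0.0, c * s + m)) for c, m, s in zip(pixel, MEAN, STD)
    )

original = (0.2, 0.5, 0.9)            # hypothetical RGB pixel in [0, 1]
restored = denormalize(normalize(original))
clipped = denormalize((10.0, 0.0, -10.0))  # out-of-range values get clipped
print(restored)
print(clipped)
```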

Train the model

Now, let's write a general function to train a model. It will illustrate:

- Scheduling the learning rate

- Saving the best model
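Saving the best model relies on `copy.deepcopy`, because the state dictionary refers to the same tensors that the optimizer keeps updating in place. The following sketch illustrates the idea with a plain dictionary standing in for `model.state_dict()`; the weights and the validation accuracies are made up for illustration.

```python
import copy

state = {"weight": [0.0, 0.0]}      # stand-in for model.state_dict()
best_state = copy.deepcopy(state)   # snapshot, independent of later updates
best_acc = 0.0

# Hypothetical validation accuracies for three epochs.
for epoch, val_acc in enumerate([0.90, 0.94, 0.92]):
    state["weight"][0] += 1.0       # "training" mutates the weights in place
    if val_acc > best_acc:
        best_acc = val_acc
        best_state = copy.deepcopy(state)

print(best_acc)              # accuracy of the best epoch
print(best_state["weight"])  # weights as they were after the best epoch
print(state["weight"])       # weights after the final epoch
```

Without the deep copy, `best_state` would simply alias `state` and end up holding the weights of the last epoch rather than the best one.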

In the following, the parameter scheduler is an LR scheduler object from torch.optim.lr_scheduler.

    def train_model(model, criterion, optimizer, scheduler, num_epoch=25):
        since = time.time()

        # Best model weights seen so far
        best_model_wts = copy.deepcopy(model.state_dict())
        best_acc = 0.0

        for epoch in range(num_epoch):
            print('Epoch {}/{}'.format(epoch, num_epoch - 1))
            print('-' * 10)

            # Each epoch has a training phase and a validation phase
            for phase in ['train', 'val']:
                if phase == 'train':
                    model.train()  # set the model to training mode
                else:
                    model.eval()   # set the model to evaluation mode

                running_loss = 0.0
                running_corrects = 0

                # Iterate over the data
                for inputs, labels in dataloaders[phase]:
                    inputs = inputs.to(device)
                    labels = labels.to(device)

                    # Zero the parameter gradients
                    optimizer.zero_grad()

                    # Forward pass; track history only in the training phase
                    with torch.set_grad_enabled(phase == 'train'):
                        outputs = model(inputs)
                        _, preds = torch.max(outputs, 1)
                        loss = criterion(outputs, labels)

                        # Backward pass and optimization only in the training phase
                        if phase == 'train':
                            loss.backward()
                            optimizer.step()

                    # Accumulate statistics, weighting the mean batch loss by batch size
                    running_loss += loss.item() * inputs.size(0)
                    running_corrects += torch.sum(preds == labels.data)

                # Step the scheduler after the optimizer updates of this epoch
                if phase == 'train':
                    scheduler.step()

                epoch_loss = running_loss / dataset_size[phase]
                epoch_acc = running_corrects.double() / dataset_size[phase]
                print('{} loss:{:.4f} Acc:{:.4f}'.format(phase, epoch_loss, epoch_acc))

                # Deep copy the weights when the validation accuracy improves
                if phase == 'val' and epoch_acc > best_acc:
                    best_acc = epoch_acc
                    best_model_wts = copy.deepcopy(model.state_dict())

            print()

        time_elapsed = time.time() - since
        print('Traning complete in {:.0f}m {:.0f}s'.format(time_elapsed // 60, time_elapsed % 60))
        print('Best val Acc: {:4f}'.format(best_acc))

        # Load the best model weights
        model.load_state_dict(best_model_wts)
        return model
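The per-epoch statistics in `train_model` deserve a short illustration. The criterion returns the mean loss over a batch, so each batch loss is multiplied by the batch size before summing; dividing by the dataset size then gives a correct per-sample average even when the last batch is smaller. A pure-Python sketch with made-up batch values (the helper name `epoch_metrics` is hypothetical):

```python
def epoch_metrics(batches, dataset_size):
    """batches: iterable of (mean_batch_loss, num_correct, batch_size)."""
    running_loss = 0.0
    running_corrects = 0
    for mean_loss, num_correct, batch_size in batches:
        running_loss += mean_loss * batch_size   # undo the per-batch mean
        running_corrects += num_correct
    return running_loss / dataset_size, running_corrects / dataset_size

# Hypothetical epoch: two full batches of 4 and a final batch of 2.
batches = [(0.50, 3, 4), (0.25, 4, 4), (1.00, 1, 2)]
loss, acc = epoch_metrics(batches, dataset_size=10)
print(loss)  # (2.0 + 1.0 + 2.0) / 10
print(acc)   # 8 / 10
```

Averaging the three batch losses directly would give about 0.583 instead of 0.5, because the small final batch would be overweighted.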

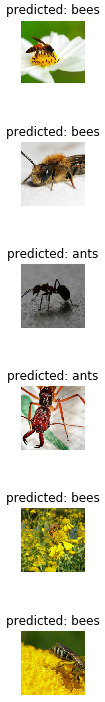

Visualize the model predictions

A generic function to display predictions for a few images.

    def visualize_model(model, num_images=6):
        was_training = model.training
        model.eval()
        images_so_far = 0
        fig = plt.figure()

        with torch.no_grad():
            for i, (inputs, labels) in enumerate(dataloaders['val']):
                inputs = inputs.to(device)
                labels = labels.to(device)

                outputs = model(inputs)
                _, preds = torch.max(outputs, 1)

                for j in range(inputs.size()[0]):
                    images_so_far += 1
                    # Grid of num_images//2 rows and 2 columns; subplot indices start at 1
                    ax = plt.subplot(num_images // 2, 2, images_so_far)
                    ax.axis('off')
                    ax.set_title('predicted:{}'.format(class_name[preds[j]]))
                    imshow(inputs.cpu().data[j])

                    if images_so_far == num_images:
                        model.train(mode=was_training)
                        return

            # Restore the model's previous mode after evaluation
            model.train(mode=was_training)

Finetuning the ConvNet

Load a pretrained model and replace the final fully connected layer.

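    # Newer torchvision releases prefer the weights= argument over pretrained=True
    model_ft = models.resnet18(pretrained=True)
    num_ftrs = model_ft.fc.in_features
    # Replace the final layer with a new one that has 2 outputs (ants, bees)
    model_ft.fc = nn.Linear(num_ftrs, 2)

    model_ft = model_ft.to(device)

    criterion = nn.CrossEntropyLoss()

    # All parameters are being optimized
    optimizer_ft = optim.SGD(model_ft.parameters(), lr=0.001, momentum=0.9)

    # Decay the LR by a factor of 0.1 every 7 epochs
    exp_lr_scheduler = lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)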
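To see what "decay by 0.1 every 7 epochs" means over a 25-epoch run, the schedule can be written in closed form as `lr = base_lr * gamma ** (epoch // step_size)`. The sketch below is plain Python that mirrors what `StepLR` computes when it is stepped once at the end of each epoch; the helper name `step_lr` is hypothetical.

```python
def step_lr(base_lr, epoch, step_size=7, gamma=0.1):
    """Learning rate in effect during the given (0-based) epoch."""
    return base_lr * gamma ** (epoch // step_size)

schedule = [step_lr(0.001, epoch) for epoch in range(25)]
for epoch in (0, 6, 7, 14, 21):
    print(epoch, schedule[epoch])
```

With these settings the learning rate stays at 1e-3 for epochs 0 to 6, drops to about 1e-4 for epochs 7 to 13, to about 1e-5 for epochs 14 to 20, and to about 1e-6 for the remaining epochs.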

Train and evaluate

    model_ft = train_model(model_ft, criterion, optimizer_ft, exp_lr_scheduler, num_epoch=25)

Output:

    Epoch 0/24
    ----------
    train loss:0.5504 Acc:0.7582
    val loss:0.2265 Acc:0.9085
    Epoch 1/24
    ----------
    train loss:0.5732 Acc:0.7377
    val loss:0.3290 Acc:0.8693
    Epoch 2/24
    ----------
    train loss:0.4693 Acc:0.7664
    val loss:0.3554 Acc:0.8824
    Epoch 3/24
    ----------
    train loss:0.5210 Acc:0.8402
    val loss:0.5970 Acc:0.7843
    Epoch 4/24
    ----------
    train loss:0.4709 Acc:0.8361
    val loss:0.2318 Acc:0.8758
    Epoch 5/24
    ----------
    train loss:0.4802 Acc:0.8115
    val loss:0.2669 Acc:0.8693
    Epoch 6/24
    ----------
    train loss:0.5484 Acc:0.7910
    val loss:0.2531 Acc:0.8889
    Epoch 7/24
    ----------
    train loss:0.3559 Acc:0.8443
    val loss:0.2264 Acc:0.9085
    Epoch 8/24
    ----------
    train loss:0.3517 Acc:0.8689
    val loss:0.2716 Acc:0.8954
    Epoch 9/24
    ----------
    train loss:0.3331 Acc:0.8607
    val loss:0.2068 Acc:0.9150
    Epoch 10/24
    ----------
    train loss:0.3589 Acc:0.8402
    val loss:0.1906 Acc:0.9216
    Epoch 11/24
    ----------
    train loss:0.2559 Acc:0.9057
    val loss:0.1732 Acc:0.9346
    Epoch 12/24
    ----------
    train loss:0.3467 Acc:0.8279
    val loss:0.1772 Acc:0.9346
    Epoch 13/24
    ----------
    train loss:0.3280 Acc:0.8730
    val loss:0.1722 Acc:0.9346
    Epoch 14/24
    ----------
    train loss:0.2826 Acc:0.8975
    val loss:0.1651 Acc:0.9346
    Epoch 15/24
    ----------
    train loss:0.2317 Acc:0.9098
    val loss:0.1984 Acc:0.9346
    Epoch 16/24
    ----------
    train loss:0.2708 Acc:0.8811
    val loss:0.2128 Acc:0.9150
    Epoch 17/24
    ----------
    train loss:0.3020 Acc:0.8811
    val loss:0.1926 Acc:0.9281
    Epoch 18/24
    ----------
    train loss:0.3015 Acc:0.8689
    val loss:0.2119 Acc:0.8954
    Epoch 19/24
    ----------
    train loss:0.3194 Acc:0.8525
    val loss:0.1766 Acc:0.9281
    Epoch 20/24
    ----------
    train loss:0.2548 Acc:0.8893
    val loss:0.1709 Acc:0.9346
    Epoch 21/24
    ----------
    train loss:0.2439 Acc:0.8893
    val loss:0.1996 Acc:0.9216
    Epoch 22/24
    ----------
    train loss:0.2203 Acc:0.9262
    val loss:0.1606 Acc:0.9281
    Epoch 23/24
    ----------
    train loss:0.2406 Acc:0.9057
    val loss:0.1681 Acc:0.9412
    Epoch 24/24
    ----------
    train loss:0.1897 Acc:0.9385
    val loss:0.1548 Acc:0.9281
    Traning complete in 6m 39s
    Best val Acc: 0.941176

    visualize_model(model_ft)

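ConvNet as a fixed feature extractor

Here, we need to freeze the whole network except the final layer. We set requires_grad = False on the parameters to freeze them, so that their gradients are not computed during the backward pass.

You can read more about this here.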

    model_conv = torchvision.models.resnet18(pretrained=True)
    # Freeze every pretrained parameter
    for param in model_conv.parameters():
        param.requires_grad = False

    # Parameters of newly constructed modules have requires_grad=True by default
    num_ftrs = model_conv.fc.in_features
    model_conv.fc = nn.Linear(num_ftrs, 2)

    model_conv = model_conv.to(device)

    criterion = nn.CrossEntropyLoss()

    # Only the parameters of the final layer are being optimized
    optimizer_conv = optim.SGD(model_conv.fc.parameters(), lr=0.001, momentum=0.9)

    # Decay the LR by a factor of 0.1 every 7 epochs
    exp_lr_scheduler = lr_scheduler.StepLR(optimizer_conv, step_size=7, gamma=0.1)
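One way to check that freezing worked is to count trainable and frozen parameters. The sketch below does this on a tiny stand-in network instead of ResNet-18, so it runs without downloading pretrained weights; the layer sizes and the "backbone" and "head" roles are illustrative assumptions, not part of the tutorial's model.

```python
import torch.nn as nn

# Tiny stand-in for a pretrained backbone plus classifier head (hypothetical).
model = nn.Sequential(
    nn.Linear(8, 4),   # plays the role of the pretrained backbone
    nn.ReLU(),
    nn.Linear(4, 2),   # plays the role of the original final layer
)

# Freeze everything, then swap in a fresh head.
for param in model.parameters():
    param.requires_grad = False
model[2] = nn.Linear(4, 2)  # new modules have requires_grad=True by default

trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)
frozen = sum(p.numel() for p in model.parameters() if not p.requires_grad)
print(trainable, frozen)  # 10 36
```

Only the new head (4 * 2 weights plus 2 biases) remains trainable; the same check applied to `model_conv` should report only the parameters of `model_conv.fc` as trainable.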

Train and evaluate

    model_conv = train_model(model_conv, criterion, optimizer_conv, exp_lr_scheduler, num_epoch=25)

Output:

    Epoch 0/24
    ----------
    train loss:0.6060 Acc:0.6311
    val loss:0.3218 Acc:0.8562
    Epoch 1/24
    ----------
    train loss:0.6493 Acc:0.7418
    val loss:0.5251 Acc:0.7712
    Epoch 2/24
    ----------
    train loss:0.5541 Acc:0.7623
    val loss:0.1878 Acc:0.9477
    Epoch 3/24
    ----------
    train loss:0.4401 Acc:0.7828
    val loss:0.3141 Acc:0.8758
    Epoch 4/24
    ----------
    train loss:0.5697 Acc:0.7869
    val loss:0.3362 Acc:0.8627
    Epoch 5/24
    ----------
    train loss:0.3668 Acc:0.8402
    val loss:0.4343 Acc:0.8301
    Epoch 6/24
    ----------
    train loss:0.4692 Acc:0.8238
    val loss:0.2586 Acc:0.9085
    Epoch 7/24
    ----------
    train loss:0.2712 Acc:0.8770
    val loss:0.1950 Acc:0.9477
    Epoch 8/24
    ----------
    train loss:0.3284 Acc:0.8443
    val loss:0.1944 Acc:0.9542
    Epoch 9/24
    ----------
    train loss:0.3115 Acc:0.8852
    val loss:0.2000 Acc:0.9542
    Epoch 10/24
    ----------
    train loss:0.3889 Acc:0.8402
    val loss:0.1896 Acc:0.9542
    Epoch 11/24
    ----------
    train loss:0.3071 Acc:0.8689
    val loss:0.1981 Acc:0.9542
    Epoch 12/24
    ----------
    train loss:0.2208 Acc:0.9098
    val loss:0.1956 Acc:0.9542
    Epoch 13/24
    ----------
    train loss:0.3622 Acc:0.8320
    val loss:0.2058 Acc:0.9477
    Epoch 14/24
    ----------
    train loss:0.3290 Acc:0.8525
    val loss:0.2212 Acc:0.9412
    Epoch 15/24
    ----------
    train loss:0.3359 Acc:0.8525
    val loss:0.2120 Acc:0.9542
    Epoch 16/24
    ----------
    train loss:0.3550 Acc:0.8279
    val loss:0.1864 Acc:0.9477
    Epoch 17/24
    ----------
    train loss:0.3395 Acc:0.8402
    val loss:0.2104 Acc:0.9542
    Epoch 18/24
    ----------
    train loss:0.2966 Acc:0.8811
    val loss:0.2044 Acc:0.9477
    Epoch 19/24
    ----------
    train loss:0.3477 Acc:0.8320
    val loss:0.1918 Acc:0.9542
    Epoch 20/24
    ----------
    train loss:0.3185 Acc:0.8607
    val loss:0.1891 Acc:0.9477
    Epoch 21/24
    ----------
    train loss:0.4269 Acc:0.8238
    val loss:0.2043 Acc:0.9412
    Epoch 22/24
    ----------
    train loss:0.3475 Acc:0.8566
    val loss:0.1913 Acc:0.9542
    Epoch 23/24
    ----------
    train loss:0.4412 Acc:0.7951
    val loss:0.1972 Acc:0.9542
    Epoch 24/24
    ----------
    train loss:0.3772 Acc:0.8279
    val loss:0.2329 Acc:0.9346
    Traning complete in 3m 2s
    Best val Acc: 0.954248

    visualize_model(model_conv)

    plt.ioff()
    plt.show()