1. First, a look at the Embedding layer in Keras: from keras.layers import Embedding

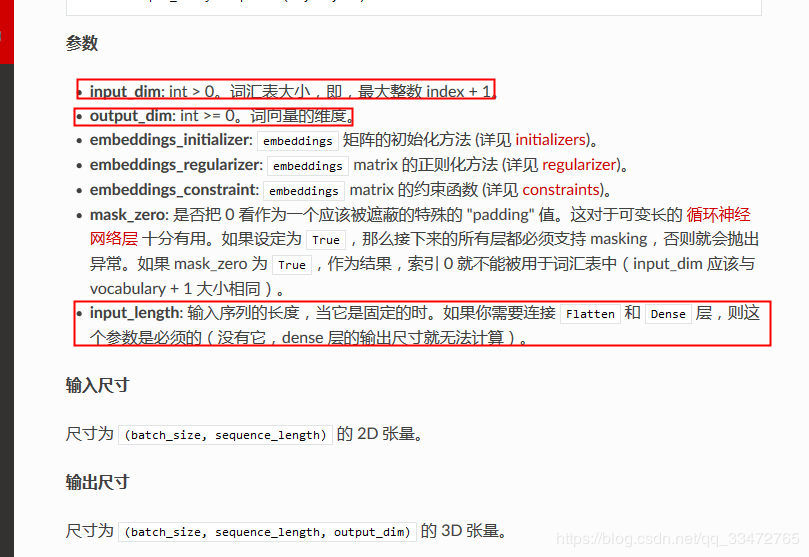

The Embedding layer's parameters are shown above. Its shapes are:

Input shape: (batch_size, input_length)

Output shape: (batch_size, input_length, output_dim)

An example (randomly initialized Embedding):

from keras.models import Sequential

from keras.layers import Embedding

import numpy as np

model = Sequential()

model.add(Embedding(1000, 64, input_length=10))

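# Input shape is (None, 10): None is the batch size, and 10 is input_length, the number of indices in each sample

# Output shape is (None, 10, 64): each of the 10 indices in a sample is embedded as a 64-dimensional vector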

input_array = np.random.randint(1000, size=(32, 10))

model.compile('rmsprop', 'mse')

output_array = model.predict(input_array)

print(output_array)

assert output_array.shape == (32, 10, 64)

The following smaller example shows what happens in detail:

from keras.models import Sequential

from keras.layers import Flatten, Dense, Embedding

import numpy as np

model = Sequential()

model.add(Embedding(3, 2, input_length=7))

# Put simply, the Embedding layer creates a random 3x2 matrix (3 is the vocabulary size, 2 is the size of each word's embedding vector). Call it M and inspect it:

model.layers[0].get_weights()

# Output

[array([[-0.00732628, -0.02913231],

[ 0.00573028, 0.0329752 ],

[-0.0401206 , -0.01729034]], dtype=float32)]

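Each row of the matrix is the vector for the token whose index equals the row number: row i (i = 0, 1, 2) of M is the vector for the word with index i. For example, an input index of 1 maps to the embedding vector [ 0.00573028, 0.0329752 ], as shown below:

data = np.array([[0,1,2,1,1,0,1],[0,1,2,1,1,0,1]])

model.predict(data)

# Output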

[[[-0.00732628 -0.02913231]

[ 0.00573028 0.0329752 ]

[-0.0401206 -0.01729034]

[ 0.00573028 0.0329752 ]

[ 0.00573028 0.0329752 ]

[-0.00732628 -0.02913231]

[ 0.00573028 0.0329752 ]]

[[-0.00732628 -0.02913231]

[ 0.00573028 0.0329752 ]

[-0.0401206 -0.01729034]

[ 0.00573028 0.0329752 ]

[ 0.00573028 0.0329752 ]

[-0.00732628 -0.02913231]

[ 0.00573028 0.0329752 ]]]

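data is the input to the Embedding layer: a single batch of 2 samples, each a sequence of 7 word indices, so data.shape = (2, 7). The output has shape (2, 7, 2), meaning every index in every sample is embedded as a 2-dimensional vector.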
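The lookup described above can be reproduced without Keras. The sketch below is only an illustration in NumPy, not how Keras implements the layer internally: it copies the matrix M printed earlier and indexes it with the same data array.

```python
import numpy as np

# Weight matrix M copied from the get_weights() output above
# (vocabulary size 3, embedding dimension 2).
M = np.array([[-0.00732628, -0.02913231],
              [ 0.00573028,  0.0329752 ],
              [-0.0401206 , -0.01729034]], dtype=np.float32)

# Same index batch as in the example: 2 samples, 7 indices each.
data = np.array([[0, 1, 2, 1, 1, 0, 1],
                 [0, 1, 2, 1, 1, 0, 1]])

# Integer-array indexing picks row M[i] for every index i in data,
# which is the table lookup an embedding layer performs.
out = M[data]

print(out.shape)  # (2, 7, 2)
print(out[0, 1])  # the row of M for index 1
```

Because the layer is just a row lookup, two positions holding the same index always receive the same vector.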

Sometimes we initialize the layer with a pretrained embedding matrix instead (here, word2vec vectors trained on a Baidu Baike corpus):

def create_embedding(word_index, num_words, word2vec_model):
    # One row per word index; rows for words without a pretrained vector stay all-zero
    embedding_matrix = np.zeros((num_words, EMBEDDING_DIM))
    for word, i in word_index.items():
        try:
            embedding_vector = word2vec_model[word]
            embedding_matrix[i] = embedding_vector
        except KeyError:
            # Word is not in the word2vec vocabulary
            continue
    return embedding_matrix

# word_index: the vocabulary, mapping each word to its integer index

# num_words: vocabulary size + 1

# word2vec_model: the pretrained word-vector model

embedding_matrix = create_embedding(word_index, num_words, word2vec_model)

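from keras.initializers import Constant

model = Sequential()
embedding_layer = Embedding(num_words,
                            EMBEDDING_DIM,  # size of each embedding vector
                            embeddings_initializer=Constant(embedding_matrix),  # initialize from the pretrained embedding matrix
                            input_length=MAX_SEQUENCE_LENGTH,  # length of each input sequence
                            trainable=False)  # keep the pretrained vectors frozen
# A Sequential model takes layers, not tensors, so add the layer itself
model.add(embedding_layer)
# Functional-API equivalent (requires: from keras.layers import Input):
# sequence_input = Input(shape=(MAX_SEQUENCE_LENGTH,), dtype='int32')
# embedded_sequences = embedding_layer(sequence_input)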
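To make the matrix-building step concrete, here is a minimal sketch that runs without a real word2vec model. The dictionary toy_vectors, the three-word word_index, and EMBEDDING_DIM = 3 are all made-up stand-ins for illustration; a plain dict plays the role of the word-vector model.

```python
import numpy as np

EMBEDDING_DIM = 3  # toy size, chosen only for this sketch

# Hypothetical stand-in for a word2vec model: maps word -> vector.
toy_vectors = {
    "cat": np.array([0.1, 0.2, 0.3]),
    "dog": np.array([0.4, 0.5, 0.6]),
}

# Hypothetical word index; index 0 is left unused (commonly reserved for padding).
word_index = {"cat": 1, "dog": 2, "unicorn": 3}
num_words = len(word_index) + 1

def create_embedding(word_index, num_words, vectors):
    """Build a (num_words, EMBEDDING_DIM) matrix; unknown words stay all-zero."""
    embedding_matrix = np.zeros((num_words, EMBEDDING_DIM))
    for word, i in word_index.items():
        if i < num_words and word in vectors:
            embedding_matrix[i] = vectors[word]
    return embedding_matrix

embedding_matrix = create_embedding(word_index, num_words, toy_vectors)
print(embedding_matrix.shape)  # (4, 3)
print(embedding_matrix)
```

Row 0 and the row for "unicorn" remain zero because no pretrained vector exists for them, which is why num_words is the vocabulary size plus one.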

Building an LSTM in Keras (the same goes for other network models) is really like stacking building blocks:

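# Single-layer LSTM
from keras.layers import LSTM, Dropout, Dense, Activation

model = Sequential()
model.add(Embedding(len(words)+1, 256, input_length=maxlen))
# units and recurrent_activation replace the old output_dim and inner_activation names
model.add(LSTM(units=128, activation='sigmoid', recurrent_activation='hard_sigmoid'))
model.add(Dropout(0.5))
model.add(Dense(1))
model.add(Activation('sigmoid'))
model.compile(loss='binary_crossentropy',
              optimizer='rmsprop',
              metrics=['accuracy'])
# Alternative: model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
# epochs replaces the old nb_epoch argument
model.fit(x, y, batch_size=16, epochs=10)
# predict_classes was removed from recent Keras releases; threshold the sigmoid output instead
y_ = (model.predict(x) > 0.5).astype('int32')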

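# Multi-layer (stacked) LSTM

model = Sequential()

# In a stacked LSTM, every layer except the last usually sets return_sequences=True, and the last usually leaves it False

# return_sequences defaults to False: the layer returns only its hidden state at the last time step. With True, it returns its hidden state at every time step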

model.add(LSTM(layers[1], input_shape=(seq_len, layers[0]),return_sequences=True))

#model.add(Dropout(0.2))

model.add(LSTM(layers[2],return_sequences=False))

#model.add(Dropout(0.2))

model.add(Dense(units=layers[3], activation='tanh'))
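The reason non-final layers need return_sequences=True is a matter of shapes. The helper below, lstm_output_shape, is a hypothetical function written only for this sketch (it is not part of Keras); it traces the shape each LSTM layer would produce, with 50 time steps and the unit counts 64 and 32 chosen arbitrarily.

```python
def lstm_output_shape(input_shape, units, return_sequences=False):
    """Shape produced by one LSTM layer for input (batch, steps, features)."""
    if len(input_shape) != 3:
        raise ValueError(
            "LSTM expects 3D input (batch, steps, features), got %r" % (input_shape,)
        )
    batch, steps, _ = input_shape
    # With return_sequences=True the time axis is kept; otherwise it is dropped.
    return (batch, steps, units) if return_sequences else (batch, units)

# Stacked correctly: the first layer keeps the time axis for the second one.
after_first = lstm_output_shape((None, 50, 1), 64, return_sequences=True)
after_second = lstm_output_shape(after_first, 32, return_sequences=False)
print(after_first)   # (None, 50, 64)
print(after_second)  # (None, 32)

# If the first layer used return_sequences=False, its output would be 2D,
# and a second LSTM layer could not consume it.
flat = lstm_output_shape((None, 50, 1), 64)
print(flat)          # (None, 64)
```

Passing flat into another call of lstm_output_shape raises ValueError, mirroring the shape mismatch a second LSTM layer would hit.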

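Below is a detailed look at the difference between the LSTM arguments return_sequences and return_state in Keras:

I. Definitions

return_sequences: defaults to False. When False, the layer returns only its hidden state at the last time step; when True, it returns its hidden state at every time step.

return_state: defaults to False. When True, the layer additionally returns its hidden state and its cell state at the last time step.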
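To see what the two flags select, it can help to write the recurrence out by hand. The following is a minimal NumPy sketch of one LSTM layer's forward pass, not Keras's actual implementation: the weights are random, the gate layout inside W, U and b is a convention chosen for this sketch, and the function name lstm_forward is made up.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_forward(x, W, U, b, return_sequences=False, return_state=False):
    """Run one LSTM layer over x of shape (batch, steps, features).

    W: (features, 4*units), U: (units, 4*units), b: (4*units,).
    Columns are laid out as [input gate, forget gate, candidate, output gate].
    """
    batch, steps, _ = x.shape
    units = U.shape[0]
    h = np.zeros((batch, units))  # hidden state
    c = np.zeros((batch, units))  # cell state
    hs = []
    for t in range(steps):
        z = x[:, t, :] @ W + h @ U + b
        i = sigmoid(z[:, :units])
        f = sigmoid(z[:, units:2 * units])
        g = np.tanh(z[:, 2 * units:3 * units])
        o = sigmoid(z[:, 3 * units:])
        c = f * c + i * g
        h = o * np.tanh(c)
        hs.append(h)
    # return_sequences: every step's hidden state, or only the last one.
    output = np.stack(hs, axis=1) if return_sequences else h
    # return_state: also hand back the final hidden and cell states.
    if return_state:
        return output, h, c
    return output

rng = np.random.default_rng(0)
x = np.array([0.1, 0.2, 0.3]).reshape((1, 3, 1))  # 3 time steps, 1 feature
W = rng.normal(scale=0.1, size=(1, 8))
U = rng.normal(scale=0.1, size=(2, 8))
b = np.zeros(8)

seq, h, c = lstm_forward(x, W, U, b, return_sequences=True, return_state=True)
last = lstm_forward(x, W, U, b)
print(seq.shape, h.shape, c.shape, last.shape)  # (1, 3, 2) (1, 2) (1, 2) (1, 2)
```

The last row of seq equals h, which is why the sequence output and the returned hidden state agree at the final time step, while c is a separate quantity.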

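II. Verification by example

The input in the examples below is an array with 3 time steps and 1 feature per step.

The network has 2 LSTM layers (the first must set return_sequences=True, so that its output is still a 3-step sequence the second layer can consume).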

(1) return_sequences=True

from keras.models import Model

from keras.layers import Input

from keras.layers import LSTM

from numpy import array

from keras.models import Sequential

data = array([0.1,0.2,0.3]).reshape((1,3,1))

inputs1 = Input(shape=(3,1))

lstm1,state_h,state_c = LSTM(2,return_sequences=True,return_state=True)(inputs1) # first LSTM layer

lstm2 = LSTM(2,return_sequences=True)(lstm1) # second LSTM layer

model = Model(inputs = inputs1,outputs = [lstm2])

print(model.predict(data))

Output (the hidden state of the last layer, lstm2, at every time step):

[[[0.00120299 0.0009285]

[0.0040868 0.00327]

[0.00869473 0.00720878]]]

(2) return_sequences=False, return_state=True

from keras.models import Model

from keras.layers import Input

from keras.layers import LSTM

from numpy import array

from keras.models import Sequential

data = array([0.1,0.2,0.3]).reshape((1,3,1))

inputs1 = Input(shape=(3,1))

lstm1,state_h,state_c = LSTM(2,return_sequences=True,return_state=True)(inputs1)

lstm2,state_h2,state_c2 = LSTM(2,return_state=True)(lstm1)

model = Model(inputs = inputs1,outputs = [lstm2,state_h2,state_c2])

print(model.predict(data))

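Output:

Because return_state=True, the last layer returns its output together with its hidden state and cell state at the last time step. With return_sequences=False the output is itself the last hidden state, so the first two arrays are identical.

[array([[-0.00234587, 0.00718377]], dtype=float32), array([[-0.00234587, 0.00718377]], dtype=float32), array([[-0.00476015, 0.01406127]], dtype=float32)]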

(3) return_sequences=True, return_state=True

from keras.models import Model

from keras.layers import Input

from keras.layers import LSTM

from numpy import array

from keras.models import Sequential

data = array([0.1,0.2,0.3]).reshape((1,3,1))

inputs1 = Input(shape=(3,1))

lstm1,state_h,state_c = LSTM(2,return_sequences=True,return_state=True)(inputs1)

lstm2,state_h2,state_c2 = LSTM(2,return_sequences=True,return_state=True)(lstm1)

model = Model(inputs = inputs1,outputs = [lstm2,state_h2,state_c2])

print(model.predict(data))

Output: the last layer's hidden state at every time step, followed by its hidden state and cell state at the last time step.

[array([[[-2.0248523e-04, -1.0290105e-03],

[-3.6455912e-04, -3.3424206e-03],

[-3.66696041e-05, -6.6624139e-03]]], dtype=float32),

array([[-3.669604e-05, -6.662414e-03]], dtype=float32),

array([[-7.3107367e-05, -1.3788906e-02]], dtype=float32)]

(4) return_sequences=False, return_state=False

from keras.models import Model

from keras.layers import Input

from keras.layers import LSTM

from numpy import array

from keras.models import Sequential

data = array([0.1,0.2,0.3]).reshape((1,3,1))

inputs1 = Input(shape=(3,1))

lstm1,state_h,state_c = LSTM(2,return_sequences=True,return_state=True)(inputs1)

lstm2 = LSTM(2)(lstm1)

model = Model(inputs = inputs1,outputs = [lstm2])

print(model.predict(data))

Output: the last layer's hidden state at the last time step.

[[-0.01998264 -0.00451741]]

This article draws on the following sources:

https://www.jianshu.com/p/a3f3033a7379

https://blog.csdn.net/qq_33472765/article/details/86561245

https://www.wandouip.com/t5i152855/

https://blog.csdn.net/weixin_36541072/article/details/53786020