Concurrent Programming: ConcurrentHashMap (Part 2)

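Yesterday we covered the preparation that happens before a resize and the series of checks involved. The part I find most elegant is the "high/low-bit resize" design, which makes clever use of binary. It arguably improves performance: the bits of that one variable act as a container for state, and without them a separate container object would have to be allocated, which costs memory. Today we continue and finish our analysis of CHM (ConcurrentHashMap). Let's start with the method named transfer.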

transfer()

This method is mainly responsible for migrating the data:

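- Compute the range of bins that the current thread is responsible for migrating

- Create a new array whose capacity is the size after the resize

- Migrate the data

  - If the bin is a red-black tree

    - It is split into two halves, and a half that no longer meets the tree condition after the split is converted back to a linked list

  - If the bin is a linked list

    - It is split into a low list and a high list. Conversion to a red-black tree does not happen here; it happens on insertion, once the threshold is reached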
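To make the first step concrete, here is a minimal sketch of how ranges are claimed. It is not the JDK code: `StrideDemo`, `stride(...)` and `claim(...)` are names made up for illustration, the CPU count is passed in as a parameter, and an `AtomicInteger` stands in for the `transferIndex` field that the real method updates through `Unsafe`.

```java
import java.util.concurrent.atomic.AtomicInteger;

class StrideDemo {
    static final int MIN_TRANSFER_STRIDE = 16; // same value as the JDK constant

    // Same formula as the first lines of transfer(); ncpu is a parameter here (assumption for the demo).
    static int stride(int n, int ncpu) {
        int s = (ncpu > 1) ? (n >>> 3) / ncpu : n;
        return Math.max(s, MIN_TRANSFER_STRIDE);
    }

    // Claims the next range {bound, i} by CAS-ing the shared index downward.
    // Returns null once the whole table has been handed out.
    static int[] claim(AtomicInteger transferIndex, int stride) {
        for (;;) {
            int nextIndex = transferIndex.get();
            if (nextIndex <= 0)
                return null;
            int nextBound = nextIndex > stride ? nextIndex - stride : 0;
            if (transferIndex.compareAndSet(nextIndex, nextBound))
                return new int[] { nextBound, nextIndex - 1 };
            // CAS lost to another thread: re-read the index and try again
        }
    }

    public static void main(String[] args) {
        int n = 32;                                   // old table length
        int stride = stride(n, 4);                    // (32 >>> 3) / 4 = 1, raised to 16
        AtomicInteger transferIndex = new AtomicInteger(n);
        int[] r;
        while ((r = claim(transferIndex, stride)) != null)
            System.out.println("[" + r[0] + ", " + r[1] + "]");
    }
}
```

With a table length of 32 this prints `[16, 31]` and then `[0, 15]`: ranges are handed out from the top of the old table downward, and each thread walks its range from the high index to the low one.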

    private final void transfer(Node<K,V>[] tab, Node<K,V>[] nextTab) {
        int n = tab.length, stride;
        // Size of the range each thread handles per claim; never less than 16
        if ((stride = (NCPU > 1) ? (n >>> 3) / NCPU : n) < MIN_TRANSFER_STRIDE)
            stride = MIN_TRANSFER_STRIDE;
        // The new table, twice the capacity of the old one
        if (nextTab == null) {            // initiating
            try {
                @SuppressWarnings("unchecked")
                Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n << 1];
                nextTab = nt;
            } catch (Throwable ex) {      // try to cope with OOME
                sizeCtl = Integer.MAX_VALUE;
                return;
            }
            nextTable = nextTab;
            // Transfer index: the upper end of the part of the old table that has not
            // been claimed yet. Threads claim ranges from n downward.
            transferIndex = n;
        }
        int nextn = nextTab.length;
        // Marker for a migrated bin: once a bin of the old table has been moved,
        // the bin is replaced with fwd
        ForwardingNode<K,V> fwd = new ForwardingNode<K,V>(nextTab);
        boolean advance = true;
        boolean finishing = false;
        for (int i = 0, bound = 0;;) {
            Node<K,V> f; int fh;
            while (advance) {
                int nextIndex, nextBound;
                if (--i >= bound || finishing)
                    advance = false;
                else if ((nextIndex = transferIndex) <= 0) {
                    i = -1;
                    advance = false;
                }
                // Claim a range. With a table length of 32 (stride 16), the first
                // claim is [16 (nextBound), 31 (i)] and the second is [0, 15]
                else if (U.compareAndSwapInt
                         (this, TRANSFERINDEX, nextIndex,
                          nextBound = (nextIndex > stride ?
                                       nextIndex - stride : 0))) {
                    bound = nextBound;
                    i = nextIndex - 1;
                    advance = false;
                }
            }
            // Has this thread run out of work?
            if (i < 0 || i >= n || i + n >= nextn) {
                int sc;
                if (finishing) {
                    nextTable = null;
                    table = nextTab;
                    sizeCtl = (n << 1) - (n >>> 1);
                    return;
                }
                if (U.compareAndSwapInt(this, SIZECTL, sc = sizeCtl, sc - 1)) {
                    // The thread that started the resize set sizeCtl to
                    // (resizeStamp(n) << RESIZE_STAMP_SHIFT) + 2, and each helper added 1.
                    // Every thread subtracts 1 when it is done, so if sc - 2 equals the
                    // shifted stamp, this is the last thread and the resize is complete
                    if ((sc - 2) != resizeStamp(n) << RESIZE_STAMP_SHIFT)
                        return;
                    finishing = advance = true;
                    i = n;
                }
            }
            // Empty bin: CAS fwd into it to mark the bin as migrated
            else if ((f = tabAt(tab, i)) == null)
                advance = casTabAt(tab, i, null, fwd);
            // Bin already migrated: move on to the next bin
            else if ((fh = f.hash) == MOVED)
                advance = true; // already processed
            else {
                // Lock the head node of the bin being migrated; other threads that
                // touch this bin have to wait
                synchronized (f) {
                    // Lists and red-black trees are handled separately below. A node's
                    // index was computed from its hash and the old table length, so in
                    // the new table it either stays at i or moves to i + n. The bit
                    // (hash & n), tracked in runBit, decides which.
                    if (tabAt(tab, i) == f) {
                        Node<K,V> ln, hn;
                        if (fh >= 0) {
                            int runBit = fh & n;
                            Node<K,V> lastRun = f;
                            // Walk the list to find lastRun: the first node of the trailing
                            // run in which every node has the same (hash & n) bit. That tail
                            // is reused as is. The nodes before it are copied into one of
                            // two lists: ln (stays at index i) or hn (moves to index i + n)
                            for (Node<K,V> p = f.next; p != null; p = p.next) {
                                int b = p.hash & n;
                                if (b != runBit) {
                                    runBit = b;
                                    lastRun = p;
                                }
                            }
                            // runBit == 0: the reused tail stays at the same index
                            if (runBit == 0) {
                                ln = lastRun;
                                hn = null;
                            }
                            else {
                                hn = lastRun;
                                ln = null;
                            }
                            for (Node<K,V> p = f; p != lastRun; p = p.next) {
                                int ph = p.hash; K pk = p.key; V pv = p.val;
                                if ((ph & n) == 0)
                                    ln = new Node<K,V>(ph, pk, pv, ln);
                                else
                                    hn = new Node<K,V>(ph, pk, pv, hn);
                            }
                            setTabAt(nextTab, i, ln);
                            setTabAt(nextTab, i + n, hn);
                            setTabAt(tab, i, fwd);
                            advance = true;
                        }
                        else if (f instanceof TreeBin) {
                            TreeBin<K,V> t = (TreeBin<K,V>)f;
                            TreeNode<K,V> lo = null, loTail = null;
                            TreeNode<K,V> hi = null, hiTail = null;
                            int lc = 0, hc = 0;
                            for (Node<K,V> e = t.first; e != null; e = e.next) {
                                int h = e.hash;
                                TreeNode<K,V> p = new TreeNode<K,V>
                                    (h, e.key, e.val, null, null);
                                if ((h & n) == 0) {
                                    if ((p.prev = loTail) == null)
                                        lo = p;
                                    else
                                        loTail.next = p;
                                    loTail = p;
                                    ++lc;
                                }
                                else {
                                    if ((p.prev = hiTail) == null)
                                        hi = p;
                                    else
                                        hiTail.next = p;
                                    hiTail = p;
                                    ++hc;
                                }
                            }
                            ln = (lc <= UNTREEIFY_THRESHOLD) ? untreeify(lo) :
                                (hc != 0) ? new TreeBin<K,V>(lo) : t;
                            hn = (hc <= UNTREEIFY_THRESHOLD) ? untreeify(hi) :
                                (lc != 0) ? new TreeBin<K,V>(hi) : t;
                            setTabAt(nextTab, i, ln);
                            setTabAt(nextTab, i + n, hn);
                            setTabAt(tab, i, fwd);
                            advance = true;
                        }
                    }
                }
            }
        }
    }
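The split rule that both the list branch and the tree branch rely on can be checked in isolation. The sketch below is only an illustration: `SplitDemo` and `split(...)` are hypothetical names, and the hashes are assumed to be already spread values, written as plain ints so the bits are easy to follow.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class SplitDemo {
    // Partitions the hashes of one old bin into the list that stays at index i (low)
    // and the list that moves to index i + n (high), using the same (hash & n) test
    // as transfer().
    static List<List<Integer>> split(int[] hashes, int n) {
        List<Integer> low = new ArrayList<>();
        List<Integer> high = new ArrayList<>();
        for (int h : hashes) {
            if ((h & n) == 0)
                low.add(h);
            else
                high.add(h);
        }
        return Arrays.asList(low, high);
    }

    public static void main(String[] args) {
        int n = 16;                          // old table length
        int[] hashes = {5, 21, 37, 53};      // all of them map to bin 5 while n == 16
        List<List<Integer>> parts = split(hashes, n);
        System.out.println("low=" + parts.get(0) + " high=" + parts.get(1));
        for (int h : hashes) {
            // Index in the doubled table: either the old index or the old index + n
            System.out.println(h + ": " + (h & (n - 1)) + " -> " + (h & (2 * n - 1)));
        }
    }
}
```

This prints `low=[5, 37] high=[21, 53]`, followed by the index of each hash before and after the resize: 5 and 37 stay in bin 5, while 21 and 53 move to bin 21, which is 5 + 16. Since only one extra bit of the hash becomes significant when the table doubles, no node can land anywhere else, and that is why a thread can migrate a bin without looking at any other bin.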

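How the element count is computed

ConcurrentHashMap is a concurrent collection, so how does it keep the element count accurate when many threads update it? It combines two mechanisms: baseCount, a variable that holds the base total, and an array of CounterCell objects.

The overall flow is as follows: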

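- On every insertion, try to increment baseCount with CAS. If the CAS fails, several threads are contending for it

- In that case the increment goes into a pseudo-randomly chosen slot of the CounterCell array (indexed by the thread's probe value), which takes pressure off baseCount

- Finally, baseCount and the values of all CounterCells are summed to obtain the total. Updates to both are made thread-safe with CAS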
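The flow above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the JDK implementation: `StripedCounter` is a made-up class, the cell array has a fixed size instead of growing under contention, the slot is picked with `ThreadLocalRandom` rather than the thread probe, and `AtomicLong`/`AtomicLongArray` replace the `Unsafe` calls.

```java
import java.util.concurrent.ThreadLocalRandom;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.atomic.AtomicLongArray;

class StripedCounter {
    private final AtomicLong base = new AtomicLong();   // plays the role of baseCount
    private final AtomicLongArray cells;                // plays the role of counterCells

    StripedCounter(int cellCount) {
        cells = new AtomicLongArray(cellCount);
    }

    void add(long x) {
        long b = base.get();
        if (base.compareAndSet(b, b + x))
            return;                                     // no contention: base absorbed the update
        // The CAS failed, so another thread changed base in between:
        // spread the update onto a randomly chosen cell instead
        int idx = ThreadLocalRandom.current().nextInt(cells.length());
        cells.addAndGet(idx, x);
    }

    // Same idea as sumCount(): base plus every cell
    long sum() {
        long s = base.get();
        for (int i = 0; i < cells.length(); i++)
            s += cells.get(i);
        return s;
    }

    public static void main(String[] args) throws InterruptedException {
        StripedCounter counter = new StripedCounter(8);
        Thread[] threads = new Thread[4];
        for (int t = 0; t < threads.length; t++) {
            threads[t] = new Thread(() -> {
                for (int i = 0; i < 100_000; i++)
                    counter.add(1);
            });
            threads[t].start();
        }
        for (Thread t : threads)
            t.join();
        System.out.println(counter.sum());
    }
}
```

After all four threads have been joined, the program prints 400000, because every increment lands atomically either in the base or in one of the cells. While writers are still running, the sum is only a snapshot, since the base and the cells are read one after another without a lock.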

    private final void addCount(long x, int check) {
        CounterCell[] as; long b, s;
        // Update the count. This branch is taken if the cells already exist
        // or if the CAS on BASECOUNT fails
        if ((as = counterCells) != null ||
            !U.compareAndSwapLong(this, BASECOUNT, b = baseCount, s = b + x)) {
            CounterCell a; long v; int m;
            boolean uncontended = true;
            if (as == null || (m = as.length - 1) < 0 ||
                // Pick a cell in counterCells by the thread's probe value and add x to it
                (a = as[ThreadLocalRandom.getProbe() & m]) == null ||
                !(uncontended =
                  U.compareAndSwapLong(a, CELLVALUE, v = a.value, v + x))) {
                // Slow path: the addition is completed in fullAddCount
                fullAddCount(x, uncontended);
                return;
            }
            if (check <= 1)
                return;
            s = sumCount();
        }
        // Does the table need to be resized?
        if (check >= 0) {
            Node<K,V>[] tab, nt; int n, sc;
            while (s >= (long)(sc = sizeCtl) && (tab = table) != null &&
                   (n = tab.length) < MAXIMUM_CAPACITY) {
                int rs = resizeStamp(n);
                if (sc < 0) {
                    if ((sc >>> RESIZE_STAMP_SHIFT) != rs || sc == rs + 1 ||
                        sc == rs + MAX_RESIZERS || (nt = nextTable) == null ||
                        transferIndex <= 0)
                        break;
                    if (U.compareAndSwapInt(this, SIZECTL, sc, sc + 1))
                        transfer(tab, nt);
                }
                else if (U.compareAndSwapInt(this, SIZECTL, sc,
                                             (rs << RESIZE_STAMP_SHIFT) + 2))
                    transfer(tab, null);
                s = sumCount();
            }
        }
    }

Accumulating the count: fullAddCount()

    // See LongAdder version for explanation
    private final void fullAddCount(long x, boolean wasUncontended) {
        int h;
        if ((h = ThreadLocalRandom.getProbe()) == 0) {
            ThreadLocalRandom.localInit();      // force initialization
            h = ThreadLocalRandom.getProbe();
            wasUncontended = true;
        }
        boolean collide = false;                // True if last slot nonempty
        for (;;) {
            CounterCell[] as; CounterCell a; int n; long v;
            if ((as = counterCells) != null && (n = as.length) > 0) {
                if ((a = as[(n - 1) & h]) == null) {
                    if (cellsBusy == 0) {            // Try to attach new Cell
                        CounterCell r = new CounterCell(x); // Optimistic create
                        // cellsBusy is a spinlock flag that guards structural
                        // changes to the cell array
                        if (cellsBusy == 0 &&
                            U.compareAndSwapInt(this, CELLSBUSY, 0, 1)) {
                            boolean created = false;
                            try {               // Recheck under lock
                                // Put the new cell r (which already holds x)
                                // into its slot if the slot is still empty
                                CounterCell[] rs; int m, j;
                                if ((rs = counterCells) != null &&
                                    (m = rs.length) > 0 &&
                                    rs[j = (m - 1) & h] == null) {
                                    rs[j] = r;
                                    created = true;
                                }
                            } finally {
                                cellsBusy = 0;
                            }
                            if (created)
                                break;
                            continue;           // Slot is now non-empty
                        }
                    }
                    collide = false;
                }
                else if (!wasUncontended)       // CAS already known to fail
                    wasUncontended = true;      // Continue after rehash
                else if (U.compareAndSwapLong(a, CELLVALUE, v = a.value, v + x))
                    break;
                else if (counterCells != as || n >= NCPU)
                    collide = false;            // At max size or stale
                else if (!collide)
                    collide = true;
                // Growing the cell array; the lock is again taken with a CAS on cellsBusy
                else if (cellsBusy == 0 &&
                         U.compareAndSwapInt(this, CELLSBUSY, 0, 1)) {
                    try {
                        if (counterCells == as) {// Expand table unless stale
                            // Double the size of the cell array, then copy the
                            // existing cells into the new array
                            CounterCell[] rs = new CounterCell[n << 1];
                            for (int i = 0; i < n; ++i)
                                rs[i] = as[i];
                            counterCells = rs;
                        }
                    } finally {
                        cellsBusy = 0;
                    }
                    collide = false;
                    continue;                   // Retry with expanded table
                }
                h = ThreadLocalRandom.advanceProbe(h);
            }
            // counterCells has not been created yet: take the cellsBusy lock
            // with a CAS (compareAndSwapInt) to initialize it safely
            else if (cellsBusy == 0 && counterCells == as &&
                     U.compareAndSwapInt(this, CELLSBUSY, 0, 1)) {
                boolean init = false;
                try {                           // Initialize table
                    if (counterCells == as) {
                        // Start with an array of length 2
                        CounterCell[] rs = new CounterCell[2];
                        // Store x (the delta to add) in one of the two slots
                        rs[h & 1] = new CounterCell(x);
                        // Publish the array through the counterCells field
                        counterCells = rs;
                        init = true;
                    }
                } finally {
                    // Release the lock
                    cellsBusy = 0;
                }
                if (init)
                    break;
            }
            // Everything above failed, so fall back to baseCount: while the other
            // threads are busy with counterCells, baseCount is likely to be free
            else if (U.compareAndSwapLong(this, BASECOUNT, v = baseCount, v + x))
                break;                          // Fall back on using base
        }
    }

Converting a linked list to a red-black tree (this touches on red-black tree internals, which I will cover in a dedicated follow-up post)

    static final class TreeBin<K,V> extends Node<K,V> {
        TreeNode<K,V> root;
        volatile TreeNode<K,V> first;
        // The thread waiting for the write lock
        volatile Thread waiter;
        volatile int lockState;
        static final int WRITER = 1; // set while holding write lock
        static final int WAITER = 2; // set when waiting for write lock
        static final int READER = 4; // increment value for setting read lock

        static int tieBreakOrder(Object a, Object b) {
            int d;
            if (a == null || b == null ||
                (d = a.getClass().getName().
                 compareTo(b.getClass().getName())) == 0)
                d = (System.identityHashCode(a) <= System.identityHashCode(b) ?
                     -1 : 1);
            return d;
        }

        // Convert the list headed by b into a red-black tree
        TreeBin(TreeNode<K,V> b) {
            super(TREEBIN, null, null, null);
            this.first = b;
            TreeNode<K,V> r = null;
            // Build the red-black tree node by node
            for (TreeNode<K,V> x = b, next; x != null; x = next) {
                next = (TreeNode<K,V>)x.next;
                x.left = x.right = null;
                if (r == null) {
                    x.parent = null;
                    x.red = false;
                    r = x;
                }
                // Insert x into the tree. I will write a separate post on
                // red-black trees and go through this part there
                else {
                    K k = x.key;
                    int h = x.hash;
                    Class<?> kc = null;
                    for (TreeNode<K,V> p = r;;) {
                        int dir, ph;
                        K pk = p.key;
                        if ((ph = p.hash) > h)
                            dir = -1;
                        else if (ph < h)
                            dir = 1;
                        else if ((kc == null &&
                                  (kc = comparableClassFor(k)) == null) ||
                                 (dir = compareComparables(kc, k, pk)) == 0)
                            dir = tieBreakOrder(k, pk);
                        TreeNode<K,V> xp = p;
                        if ((p = (dir <= 0) ? p.left : p.right) == null) {
                            x.parent = xp;
                            if (dir <= 0)
                                xp.left = x;
                            else
                                xp.right = x;
                            r = balanceInsertion(r, x);
                            break;
                        }
                    }
                }
            }
            this.root = r;
            assert checkInvariants(root);
        }
        // ... (the rest of the class is not shown in this excerpt)
    }

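Summary (what these two posts covered)

Usage: including some of the methods added in Java 8

How it works: how put adds an element and how the array is built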

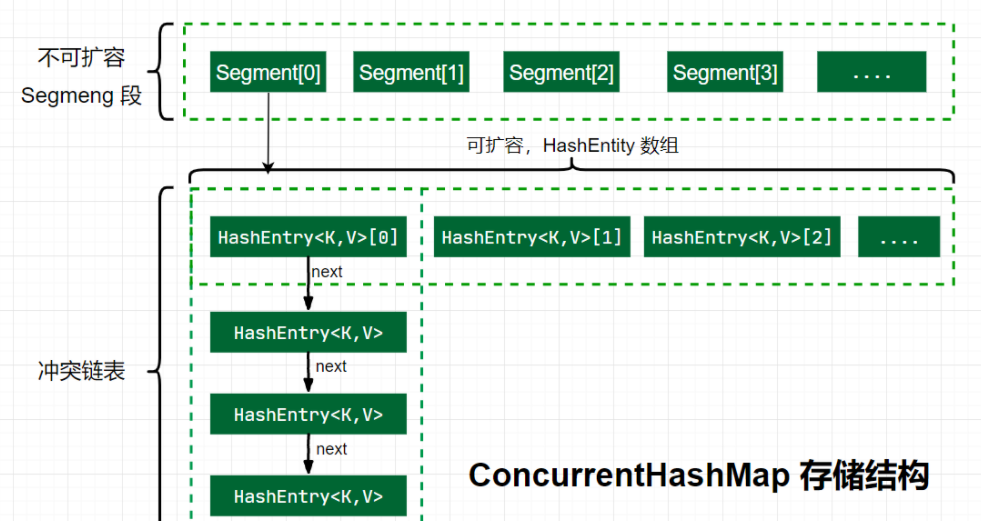

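Resolving hash collisions: separate chaining

Resizing: data migration, with multiple threads helping concurrently, and high/low splitting (nodes that have to move go into the high list, nodes that stay go into the low list, and both lists are then placed into the new array in one step)

Counting elements: an array plus baseCount, following the idea of striping the counter across several slots

When a list's length is greater than or equal to 8 and the array length is greater than or equal to 64, the list is converted to a red-black tree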