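I. Introduction

The previous article gave a first look at CameraRoll, the camera-related API that React Native provides. Basic APIs like this often fall short of real feature requirements. When that happens and you would rather not reinvent the wheel, a mature third-party library is a better choice. react-native-camera is a very good third-party camera library that covers most needs, so let's take a brief look at it.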

II. Installation

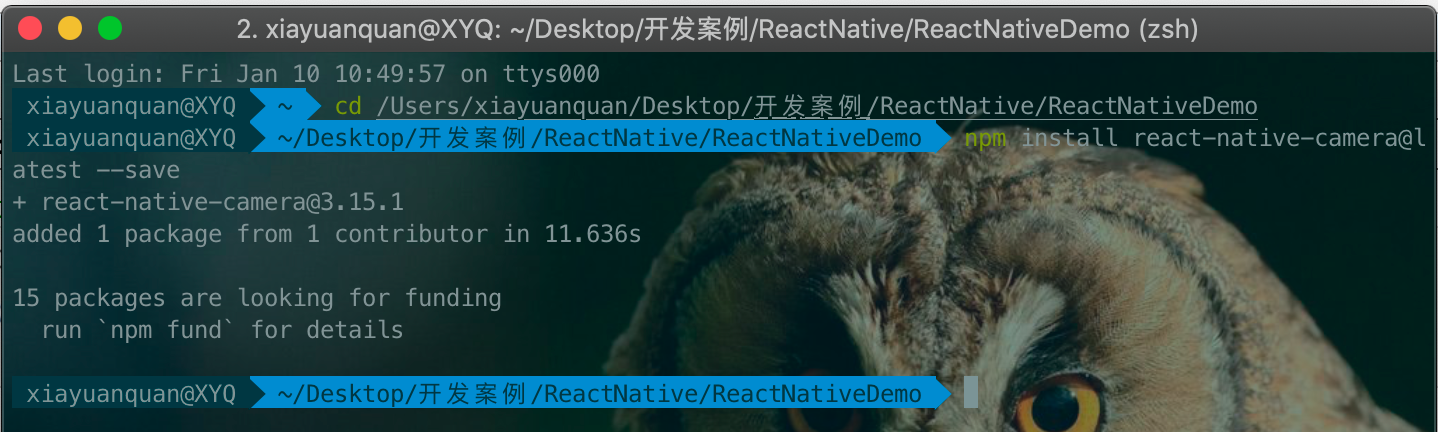

1. As with any library, install it before use. Here we use npm:

    # Install react-native-camera
    npm install react-native-camera@latest --save

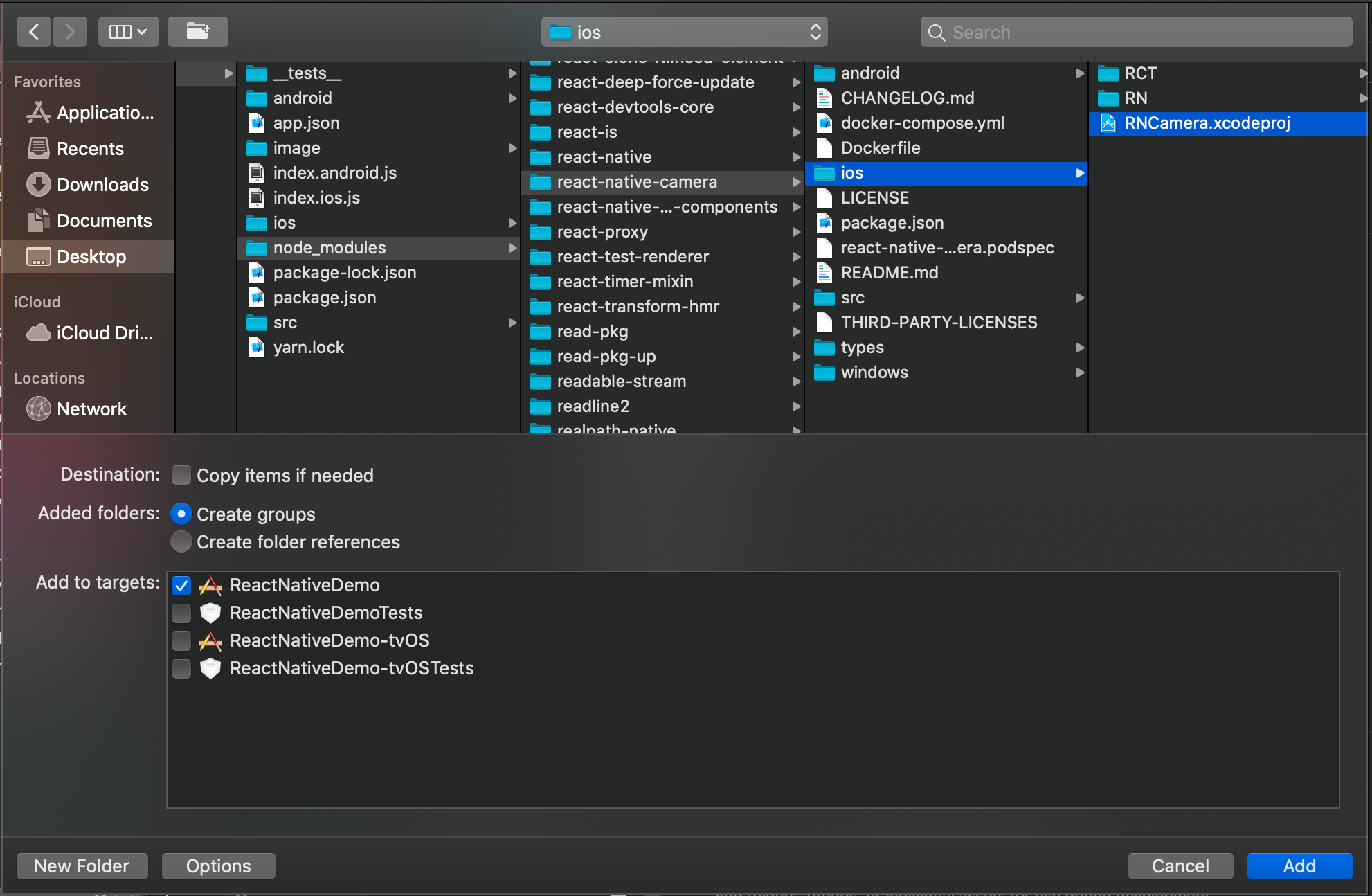

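2. After installation, add the library's project to your Xcode project and configure the build. Once the following steps are done, the third-party library has been added.

(1) Open Xcode, locate the Libraries group, and add the RNCamera.xcodeproj project from the installed react-native-camera directory.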

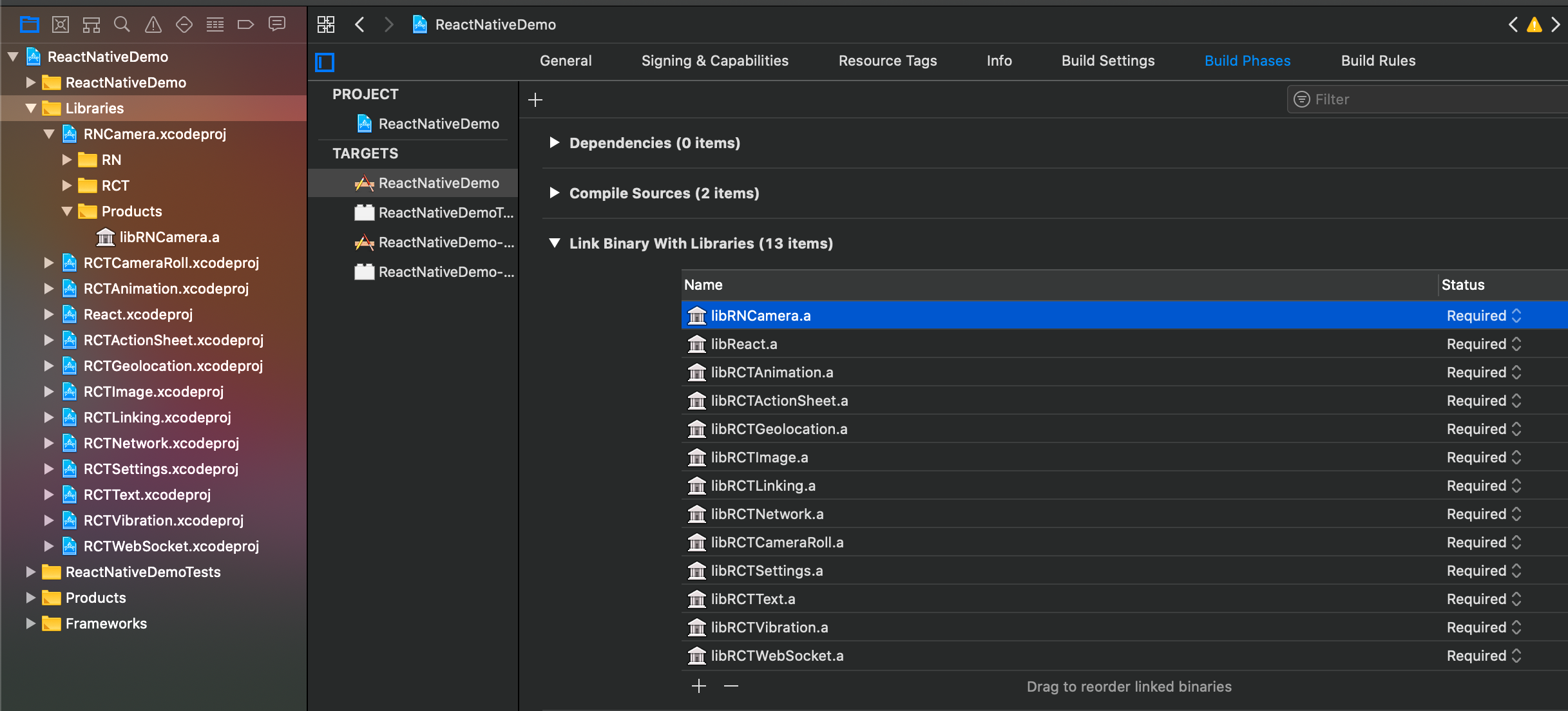

(2) Add the libRNCamera.a static library.

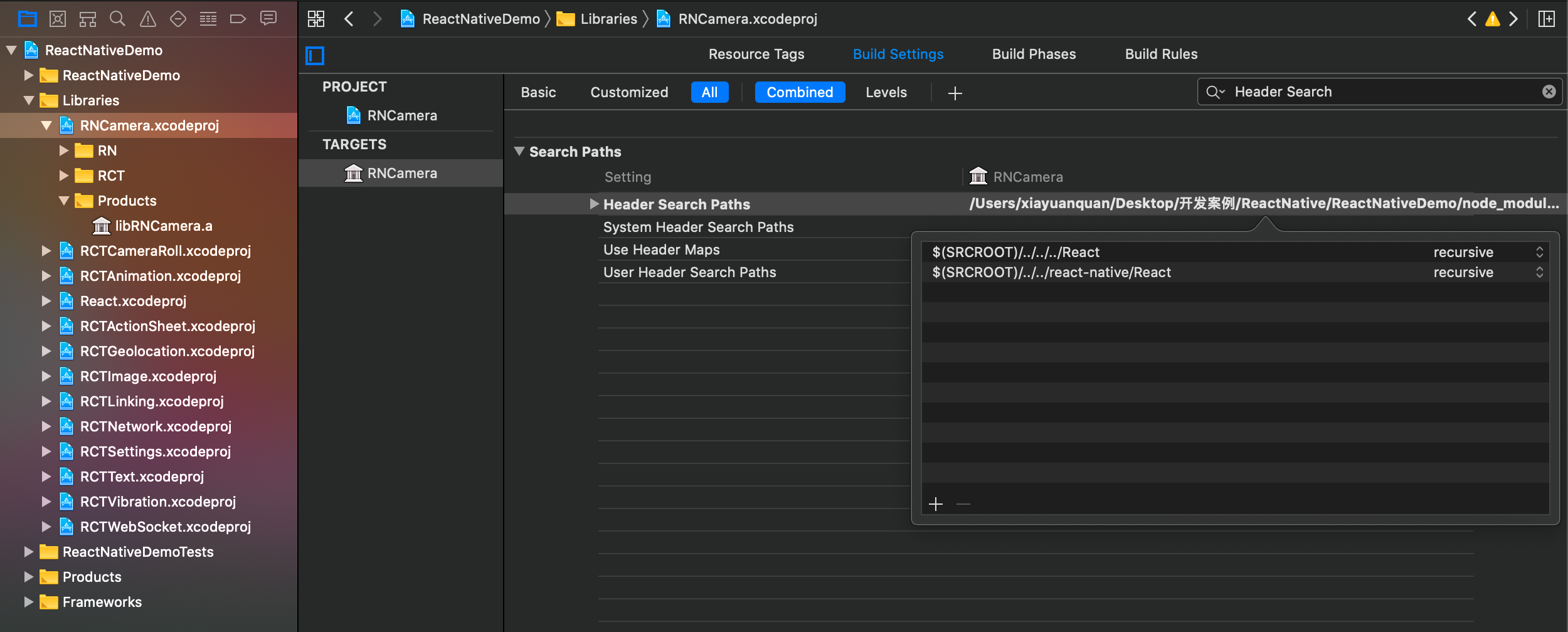

(3) Select the added RNCamera.xcodeproj project and configure Header Search Paths on the Build Settings tab.

III. API

1. The RNCamera class is defined as follows; it contains static constants and methods:

    // Class RNCamera
    // Its props combine RNCameraProps and ViewProperties
    export class RNCamera extends Component<RNCameraProps & ViewProperties> {

      // Static constants (read-only, cannot be modified)
      static Constants: Constants;

      _cameraRef: null | NativeMethodsMixinStatic;
      _cameraHandle: ReturnType<typeof findNodeHandle>;

      // Take a picture asynchronously
      // Parameter TakePictureOptions: camera options applied when taking the picture
      // Returns a Promise that resolves to a TakePictureResponse
      takePictureAsync(options?: TakePictureOptions): Promise<TakePictureResponse>;

      // Record a video asynchronously
      // Parameter RecordOptions: camera options applied when recording
      // Returns a Promise that resolves to a RecordResponse
      recordAsync(options?: RecordOptions): Promise<RecordResponse>;

      // Refresh the authorization status
      // Returns a Promise that resolves with no value
      refreshAuthorizationStatus(): Promise<void>;

      // Stop recording
      stopRecording(): void;

      // Pause the preview
      pausePreview(): void;

      // Resume the preview
      resumePreview(): void;

      // Get the available picture sizes
      // Returns a Promise that resolves to an array of strings
      getAvailablePictureSizes(): Promise<string[]>;

      /** Android only */
      // Get the supported aspect ratios
      getSupportedRatiosAsync(): Promise<string[]>;

      /** iOS only */
      // Whether a recording is in progress
      isRecording(): Promise<boolean>;
    }
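As a rough illustration of how the Promise-returning methods above might be called, the sketch below wraps `takePictureAsync` in a small helper. The helper name `capturePhoto` and the stub camera object are hypothetical and not part of the library; in an app, the `camera` argument would be a ref to a mounted `RNCamera`.

```javascript
// Hypothetical helper: accepts any object exposing takePictureAsync
// (such as an RNCamera ref) and resolves to the saved photo's URI.
async function capturePhoto(camera, options = {}) {
  if (!camera || typeof camera.takePictureAsync !== 'function') {
    throw new Error('camera is not ready');
  }
  const response = await camera.takePictureAsync(options);
  return response.uri;
}

// Stand-in for a mounted RNCamera, used only to exercise the helper.
const stubCamera = {
  calls: [],
  takePictureAsync(options) {
    this.calls.push(options);
    return Promise.resolve({ width: 100, height: 50, uri: 'file:///tmp/photo.jpg' });
  },
};

capturePhoto(stubCamera, { base64: false }).then((uri) => {
  console.log('saved to', uri);
});
```

Because the helper only depends on the method's shape, it can be exercised without a device, which is convenient given that the simulator cannot open the camera.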

2. The RNCameraProps, Constants, TakePictureOptions, TakePictureResponse, RecordOptions, and RecordResponse types are defined as follows:

RNCameraProps: the camera's configuration props

    export interface RNCameraProps {
      children?: ReactNode | FaCC;

      autoFocus?: keyof AutoFocus;
      autoFocusPointOfInterest?: Point;
      /* iOS only */
      onSubjectAreaChanged?: (event: { nativeEvent: { prevPoint: { x: number; y: number; } } }) => void;
      type?: keyof CameraType;
      flashMode?: keyof FlashMode;
      notAuthorizedView?: JSX.Element;
      pendingAuthorizationView?: JSX.Element;
      useCamera2Api?: boolean;
      exposure?: number;
      whiteBalance?: keyof WhiteBalance;
      captureAudio?: boolean;

      onCameraReady?(): void;
      onStatusChange?(event: {
        cameraStatus: keyof CameraStatus;
        recordAudioPermissionStatus: keyof RecordAudioPermissionStatus;
      }): void;
      onMountError?(error: { message: string }): void;

      /** iOS only */
      onAudioInterrupted?(): void;
      onAudioConnected?(): void;

      /** Value: float from 0 to 1.0 */
      zoom?: number;
      /** iOS only. float from 0 to any. Locks the max zoom value to the provided value
        A value <= 1 will use the camera's max zoom, while a value > 1
        will use that value as the max available zoom
      **/
      maxZoom?: number;
      /** Value: float from 0 to 1.0 */
      focusDepth?: number;

      // -- BARCODE PROPS
      barCodeTypes?: Array<keyof BarCodeType>;
      googleVisionBarcodeType?: Constants['GoogleVisionBarcodeDetection']['BarcodeType'];
      googleVisionBarcodeMode?: Constants['GoogleVisionBarcodeDetection']['BarcodeMode'];
      onBarCodeRead?(event: {
        data: string;
        rawData?: string;
        type: keyof BarCodeType;
        /**
         * @description For Android use `{ width: number, height: number, origin: Array<Point<string>> }`
         * @description For iOS use `{ origin: Point<string>, size: Size<string> }`
         */
        bounds: { width: number, height: number, origin: Array<Point<string>> } | { origin: Point<string>; size: Size<string> };
      }): void;

      onGoogleVisionBarcodesDetected?(event: {
        barcodes: Barcode[];
      }): void;

      // -- FACE DETECTION PROPS
      onFacesDetected?(response: { faces: Face[] }): void;
      onFaceDetectionError?(response: { isOperational: boolean }): void;
      faceDetectionMode?: keyof FaceDetectionMode;
      faceDetectionLandmarks?: keyof FaceDetectionLandmarks;
      faceDetectionClassifications?: keyof FaceDetectionClassifications;
      trackingEnabled?: boolean;

      onTextRecognized?(response: { textBlocks: TrackedTextFeature[] }): void;

      // -- ANDROID ONLY PROPS
      /** Android only */
      ratio?: string;
      /** Android only - Deprecated */
      permissionDialogTitle?: string;
      /** Android only - Deprecated */
      permissionDialogMessage?: string;
      /** Android only */
      playSoundOnCapture?: boolean;

      androidCameraPermissionOptions?: {
        title: string;
        message: string;
        buttonPositive?: string;
        buttonNegative?: string;
        buttonNeutral?: string;
      } | null;

      androidRecordAudioPermissionOptions?: {
        title: string;
        message: string;
        buttonPositive?: string;
        buttonNegative?: string;
        buttonNeutral?: string;
      } | null;

      // -- IOS ONLY PROPS
      defaultVideoQuality?: keyof VideoQuality;
      /* if true, audio session will not be released on component unmount */
      keepAudioSession?: boolean;
    }
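The `bounds` value delivered to `onBarCodeRead` is declared with one shape for Android and another for iOS, so code that draws an overlay may want a single rectangle format. The sketch below is one possible way to normalize the two shapes, based only on the type definition quoted above. The function name `normalizeBounds` is hypothetical, and treating the Android `origin` array as points outlining the barcode is an assumption.

```javascript
// Converts either platform-specific `bounds` shape into one
// { x, y, width, height } rectangle with numeric fields.
function normalizeBounds(bounds) {
  if (Array.isArray(bounds.origin)) {
    // Android shape: origin is a list of points with string coordinates.
    // Assumption: the points outline the barcode, so their bounding box is used.
    const xs = bounds.origin.map((p) => parseFloat(p.x));
    const ys = bounds.origin.map((p) => parseFloat(p.y));
    const x = Math.min(...xs);
    const y = Math.min(...ys);
    return { x, y, width: Math.max(...xs) - x, height: Math.max(...ys) - y };
  }
  // iOS shape: a single origin point plus a size, both with string fields.
  return {
    x: parseFloat(bounds.origin.x),
    y: parseFloat(bounds.origin.y),
    width: parseFloat(bounds.size.width),
    height: parseFloat(bounds.size.height),
  };
}

// Example with an iOS-style value.
const rect = normalizeBounds({
  origin: { x: '10', y: '20' },
  size: { width: '30', height: '40' },
});
console.log(rect.x, rect.y, rect.width, rect.height); // 10 20 30 40
```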

Constants: the camera's static constants, all read-only

    export interface Constants {
      CameraStatus: CameraStatus;
      AutoFocus: AutoFocus;
      FlashMode: FlashMode;
      VideoCodec: VideoCodec;
      Type: CameraType;
      WhiteBalance: WhiteBalance;
      VideoQuality: VideoQuality;
      BarCodeType: BarCodeType;
      FaceDetection: {
        Classifications: FaceDetectionClassifications;
        Landmarks: FaceDetectionLandmarks;
        Mode: FaceDetectionMode;
      };
      GoogleVisionBarcodeDetection: {
        BarcodeType: GoogleVisionBarcodeType;
        BarcodeMode: GoogleVisionBarcodeMode;
      };
      Orientation: {
        auto: 'auto';
        landscapeLeft: 'landscapeLeft';
        landscapeRight: 'landscapeRight';
        portrait: 'portrait';
        portraitUpsideDown: 'portraitUpsideDown';
      };
    }
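Since these constants are read-only, application code typically compares against them rather than hard-coding values. As a small sketch of that idea, the helper below flips between the two `Type` entries. The name `toggleCameraType` and the stub values are hypothetical; the real values come from `RNCamera.Constants.Type` and are best treated as opaque.

```javascript
// Returns the opposite camera type, given the Type constants object.
function toggleCameraType(current, types) {
  return current === types.back ? types.front : types.back;
}

// Hypothetical stand-in for RNCamera.Constants.Type.
const stubTypes = Object.freeze({ front: 'front-value', back: 'back-value' });

console.log(toggleCameraType(stubTypes.back, stubTypes));  // front-value
console.log(toggleCameraType(stubTypes.front, stubTypes)); // back-value
```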

TakePictureOptions: options applied when taking a picture

    interface TakePictureOptions {
      quality?: number;
      orientation?: keyof Orientation | OrientationNumber;
      base64?: boolean;
      exif?: boolean;
      width?: number;
      mirrorImage?: boolean;
      doNotSave?: boolean;
      pauseAfterCapture?: boolean;
      writeExif?: boolean | { [name: string]: any };

      /** Android only */
      fixOrientation?: boolean;

      /** iOS only */
      forceUpOrientation?: boolean;
    }
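To show how a few of these fields might be assembled, here is a minimal sketch of an options builder. The function name `buildPictureOptions`, the parameter name `includeBase64`, and the default of 0.8 are all hypothetical. Clamping `quality` to the 0 to 1 range is an assumption about the expected range, which the type definition above does not state.

```javascript
// Builds a TakePictureOptions-shaped object from a few common settings.
function buildPictureOptions({ quality = 0.8, includeBase64 = false, width } = {}) {
  const options = {
    // Assumption: quality is a fraction between 0 and 1.
    quality: Math.min(1, Math.max(0, quality)),
    base64: includeBase64,
  };
  // Only set width when the caller asked for a specific value.
  if (typeof width === 'number') {
    options.width = width;
  }
  return options;
}

console.log(buildPictureOptions({ quality: 2, width: 640 }));
```

The resulting object could then be passed to `takePictureAsync(options)`.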

TakePictureResponse: the result returned after taking a picture

    export interface TakePictureResponse {
      width: number;
      height: number;
      uri: string;
      base64?: string;
      exif?: { [name: string]: any };
      pictureOrientation: number;
      deviceOrientation: number;
    }

RecordOptions: options applied when recording

    interface RecordOptions {
      quality?: keyof VideoQuality;
      orientation?: keyof Orientation | OrientationNumber;
      maxDuration?: number;
      maxFileSize?: number;
      mute?: boolean;
      mirrorVideo?: boolean;
      path?: string;
      videoBitrate?: number;

      /** iOS only */
      codec?: keyof VideoCodec | VideoCodec[keyof VideoCodec];
    }

RecordResponse: the result returned after recording

    export interface RecordResponse {
      /** Path to the video saved on your app's cache directory. */
      uri: string;
      videoOrientation: number;
      deviceOrientation: number;
      isRecordingInterrupted: boolean;
      /** iOS only */
      codec: VideoCodec[keyof VideoCodec];
    }

3. For more details, see the complete definition file:

    // Type definitions for react-native-camera 1.0
    // Definitions by: Felipe Constantino <https://github.com/fconstant>
    //                 Trent Jones <https://github.com/FizzBuzz791>
    // If you modify this file, put your GitHub info here as well (for easy contacting purposes)

    /*
     * Author notes:
     * I've tried to find a easy tool to convert from Flow to Typescript definition files (.d.ts).
     * So we woudn't have to do it manually... Sadly, I haven't found it.
     *
     * If you are seeing this from the future, please, send us your cutting-edge technology :) (if it exists)
     */
    import { Component, ReactNode } from 'react';
    import { NativeMethodsMixinStatic, ViewProperties, findNodeHandle } from 'react-native';

    type Orientation = Readonly<{
      auto: any;
      landscapeLeft: any;
      landscapeRight: any;
      portrait: any;
      portraitUpsideDown: any;
    }>;
    type OrientationNumber = 1 | 2 | 3 | 4;
    type AutoFocus = Readonly<{ on: any; off: any }>;
    type FlashMode = Readonly<{ on: any; off: any; torch: any; auto: any }>;
    type CameraType = Readonly<{ front: any; back: any }>;
    type WhiteBalance = Readonly<{
      sunny: any;
      cloudy: any;
      shadow: any;
      incandescent: any;
      fluorescent: any;
      auto: any;
    }>;
    type BarCodeType = Readonly<{
      aztec: any;
      code128: any;
      code39: any;
      code39mod43: any;
      code93: any;
      ean13: any;
      ean8: any;
      pdf417: any;
      qr: any;
      upc_e: any;
      interleaved2of5: any;
      itf14: any;
      datamatrix: any;
    }>;
    type VideoQuality = Readonly<{
      '2160p': any;
      '1080p': any;
      '720p': any;
      '480p': any;
      '4:3': any;
      /** iOS Only. Android not supported. */
      '288p': any;
    }>;
    type VideoCodec = Readonly<{
      H264: symbol;
      JPEG: symbol;
      HVEC: symbol;
      AppleProRes422: symbol;
      AppleProRes4444: symbol;
    }>;

    type FaceDetectionClassifications = Readonly<{ all: any; none: any }>;
    type FaceDetectionLandmarks = Readonly<{ all: any; none: any }>;
    type FaceDetectionMode = Readonly<{ fast: any; accurate: any }>;
    type GoogleVisionBarcodeType = Readonly<{
      CODE_128: any;
      CODE_39: any;
      CODABAR: any;
      DATA_MATRIX: any;
      EAN_13: any;
      EAN_8: any;
      ITF: any;
      QR_CODE: any;
      UPC_A: any;
      UPC_E: any;
      PDF417: any;
      AZTEC: any;
      ALL: any;
    }>;
    type GoogleVisionBarcodeMode = Readonly<{ NORMAL: any; ALTERNATE: any; INVERTED: any }>;

    // FaCC (Function as Child Components)
    type Self<T> = { [P in keyof T]: P };
    type CameraStatus = Readonly<Self<{ READY: any; PENDING_AUTHORIZATION: any; NOT_AUTHORIZED: any }>>;
    type RecordAudioPermissionStatus = Readonly<
      Self<{
        AUTHORIZED: 'AUTHORIZED';
        PENDING_AUTHORIZATION: 'PENDING_AUTHORIZATION';
        NOT_AUTHORIZED: 'NOT_AUTHORIZED';
      }>
    >;
    type FaCC = (
      params: {
        camera: RNCamera;
        status: keyof CameraStatus;
        recordAudioPermissionStatus: keyof RecordAudioPermissionStatus;
      },
    ) => JSX.Element;

    export interface Constants {
      CameraStatus: CameraStatus;
      AutoFocus: AutoFocus;
      FlashMode: FlashMode;
      VideoCodec: VideoCodec;
      Type: CameraType;
      WhiteBalance: WhiteBalance;
      VideoQuality: VideoQuality;
      BarCodeType: BarCodeType;
      FaceDetection: {
        Classifications: FaceDetectionClassifications;
        Landmarks: FaceDetectionLandmarks;
        Mode: FaceDetectionMode;
      };
      GoogleVisionBarcodeDetection: {
        BarcodeType: GoogleVisionBarcodeType;
        BarcodeMode: GoogleVisionBarcodeMode;
      };
      Orientation: {
        auto: 'auto';
        landscapeLeft: 'landscapeLeft';
        landscapeRight: 'landscapeRight';
        portrait: 'portrait';
        portraitUpsideDown: 'portraitUpsideDown';
      };
    }

    export interface RNCameraProps {
      children?: ReactNode | FaCC;

      autoFocus?: keyof AutoFocus;
      autoFocusPointOfInterest?: Point;
      /* iOS only */
      onSubjectAreaChanged?: (event: { nativeEvent: { prevPoint: { x: number; y: number; } } }) => void;
      type?: keyof CameraType;
      flashMode?: keyof FlashMode;
      notAuthorizedView?: JSX.Element;
      pendingAuthorizationView?: JSX.Element;
      useCamera2Api?: boolean;
      exposure?: number;
      whiteBalance?: keyof WhiteBalance;
      captureAudio?: boolean;

      onCameraReady?(): void;
      onStatusChange?(event: {
        cameraStatus: keyof CameraStatus;
        recordAudioPermissionStatus: keyof RecordAudioPermissionStatus;
      }): void;
      onMountError?(error: { message: string }): void;

      /** iOS only */
      onAudioInterrupted?(): void;
      onAudioConnected?(): void;

      /** Value: float from 0 to 1.0 */
      zoom?: number;
      /** iOS only. float from 0 to any. Locks the max zoom value to the provided value
        A value <= 1 will use the camera's max zoom, while a value > 1
        will use that value as the max available zoom
      **/
      maxZoom?: number;
      /** Value: float from 0 to 1.0 */
      focusDepth?: number;

      // -- BARCODE PROPS
      barCodeTypes?: Array<keyof BarCodeType>;
      googleVisionBarcodeType?: Constants['GoogleVisionBarcodeDetection']['BarcodeType'];
      googleVisionBarcodeMode?: Constants['GoogleVisionBarcodeDetection']['BarcodeMode'];
      onBarCodeRead?(event: {
        data: string;
        rawData?: string;
        type: keyof BarCodeType;
        /**
         * @description For Android use `{ width: number, height: number, origin: Array<Point<string>> }`
         * @description For iOS use `{ origin: Point<string>, size: Size<string> }`
         */
        bounds: { width: number, height: number, origin: Array<Point<string>> } | { origin: Point<string>; size: Size<string> };
      }): void;

      onGoogleVisionBarcodesDetected?(event: {
        barcodes: Barcode[];
      }): void;

      // -- FACE DETECTION PROPS
      onFacesDetected?(response: { faces: Face[] }): void;
      onFaceDetectionError?(response: { isOperational: boolean }): void;
      faceDetectionMode?: keyof FaceDetectionMode;
      faceDetectionLandmarks?: keyof FaceDetectionLandmarks;
      faceDetectionClassifications?: keyof FaceDetectionClassifications;
      trackingEnabled?: boolean;

      onTextRecognized?(response: { textBlocks: TrackedTextFeature[] }): void;

      // -- ANDROID ONLY PROPS
      /** Android only */
      ratio?: string;
      /** Android only - Deprecated */
      permissionDialogTitle?: string;
      /** Android only - Deprecated */
      permissionDialogMessage?: string;
      /** Android only */
      playSoundOnCapture?: boolean;

      androidCameraPermissionOptions?: {
        title: string;
        message: string;
        buttonPositive?: string;
        buttonNegative?: string;
        buttonNeutral?: string;
      } | null;

      androidRecordAudioPermissionOptions?: {
        title: string;
        message: string;
        buttonPositive?: string;
        buttonNegative?: string;
        buttonNeutral?: string;
      } | null;

      // -- IOS ONLY PROPS
      defaultVideoQuality?: keyof VideoQuality;
      /* if true, audio session will not be released on component unmount */
      keepAudioSession?: boolean;
    }

    interface Point<T = number> {
      x: T;
      y: T;
    }

    interface Size<T = number> {
      width: T;
      height: T;
    }

    export interface Barcode {
      bounds: {
        size: Size;
        origin: Point;
      };
      data: string;
      dataRaw: string;
      type: BarcodeType;
      format?: string;
      addresses?: {
        addressesType?: "UNKNOWN" | "Work" | "Home";
        addressLines?: string[];
      }[];
      emails?: Email[];
      phones?: Phone[];
      urls?: string[];
      name?: {
        firstName?: string;
        lastName?: string;
        middleName?: string;
        prefix?:string;
        pronounciation?:string;
        suffix?:string;
        formattedName?: string;
      };
      phone?: Phone;
      organization?: string;
      latitude?: number;
      longitude?: number;
      ssid?: string;
      password?: string;
      encryptionType?: string;
      title?: string;
      url?: string;
      firstName?: string;
      middleName?: string;
      lastName?: string;
      gender?: string;
      addressCity?: string;
      addressState?: string;
      addressStreet?: string;
      addressZip?: string;
      birthDate?: string;
      documentType?: string;
      licenseNumber?: string;
      expiryDate?: string;
      issuingDate?: string;
      issuingCountry?: string;
      eventDescription?: string;
      location?: string;
      organizer?: string;
      status?: string;
      summary?: string;
      start?: string;
      end?: string;
      email?: Email;
      phoneNumber?: string;
      message?: string;
    }

    export type BarcodeType =
      |"EMAIL"
      |"PHONE"
      |"CALENDAR_EVENT"
      |"DRIVER_LICENSE"
      |"GEO"
      |"SMS"
      |"CONTACT_INFO"
      |"WIFI"
      |"TEXT"
      |"ISBN"
      |"PRODUCT"
      |"URL"

    export interface Email {
      address?: string;
      body?: string;
      subject?: string;
      emailType?: "UNKNOWN" | "Work" | "Home";
    }

    export interface Phone {
      number?: string;
      phoneType?: "UNKNOWN" | "Work" | "Home" | "Fax" | "Mobile";
    }

    export interface Face {
      faceID?: number;
      bounds: {
        size: Size;
        origin: Point;
      };
      smilingProbability?: number;
      leftEarPosition?: Point;
      rightEarPosition?: Point;
      leftEyePosition?: Point;
      leftEyeOpenProbability?: number;
      rightEyePosition?: Point;
      rightEyeOpenProbability?: number;
      leftCheekPosition?: Point;
      rightCheekPosition?: Point;
      leftMouthPosition?: Point;
      mouthPosition?: Point;
      rightMouthPosition?: Point;
      bottomMouthPosition?: Point;
      noseBasePosition?: Point;
      yawAngle?: number;
      rollAngle?: number;
    }

    export interface TrackedTextFeature {
      type: 'block' | 'line' | 'element';
      bounds: {
        size: Size;
        origin: Point;
      };
      value: string;
      components: TrackedTextFeature[];
    }

    interface TakePictureOptions {
      quality?: number;
      orientation?: keyof Orientation | OrientationNumber;
      base64?: boolean;
      exif?: boolean;
      width?: number;
      mirrorImage?: boolean;
      doNotSave?: boolean;
      pauseAfterCapture?: boolean;
      writeExif?: boolean | { [name: string]: any };

      /** Android only */
      fixOrientation?: boolean;

      /** iOS only */
      forceUpOrientation?: boolean;
    }

    export interface TakePictureResponse {
      width: number;
      height: number;
      uri: string;
      base64?: string;
      exif?: { [name: string]: any };
      pictureOrientation: number;
      deviceOrientation: number;
    }

    interface RecordOptions {
      quality?: keyof VideoQuality;
      orientation?: keyof Orientation | OrientationNumber;
      maxDuration?: number;
      maxFileSize?: number;
      mute?: boolean;
      mirrorVideo?: boolean;
      path?: string;
      videoBitrate?: number;

      /** iOS only */
      codec?: keyof VideoCodec | VideoCodec[keyof VideoCodec];
    }

    export interface RecordResponse {
      /** Path to the video saved on your app's cache directory. */
      uri: string;
      videoOrientation: number;
      deviceOrientation: number;
      isRecordingInterrupted: boolean;
      /** iOS only */
      codec: VideoCodec[keyof VideoCodec];
    }

    export class RNCamera extends Component<RNCameraProps & ViewProperties> {
      static Constants: Constants;

      _cameraRef: null | NativeMethodsMixinStatic;
      _cameraHandle: ReturnType<typeof findNodeHandle>;

      takePictureAsync(options?: TakePictureOptions): Promise<TakePictureResponse>;
      recordAsync(options?: RecordOptions): Promise<RecordResponse>;
      refreshAuthorizationStatus(): Promise<void>;
      stopRecording(): void;
      pausePreview(): void;
      resumePreview(): void;
      getAvailablePictureSizes(): Promise<string[]>;

      /** Android only */
      getSupportedRatiosAsync(): Promise<string[]>;

      /** iOS only */
      isRecording(): Promise<boolean>;
    }

    interface DetectionOptions {
      mode?: keyof FaceDetectionMode;
      detectLandmarks?: keyof FaceDetectionLandmarks;
      runClassifications?: keyof FaceDetectionClassifications;
    }

    export class FaceDetector {
      private constructor();
      static Constants: Constants['FaceDetection'];
      static detectFacesAsync(uri: string, options?: DetectionOptions): Promise<Face[]>;
    }

    // -- DEPRECATED CONTENT BELOW

    /**
     * @deprecated As of 1.0.0 release, RCTCamera is deprecated. Please use RNCamera for the latest fixes and improvements.
     */
    export default class RCTCamera extends Component<any> {
      static constants: any;
    }

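IV. Usage

This example implements camera switching and QR code scanning. The simulator cannot open the camera, so test on a real device.

Remember to add the permission keys Privacy - Camera Usage Description and Privacy - Microphone Usage Description. Sample code: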

    /**
     * Sample React Native App
     * https://github.com/facebook/react-native
     * @flow
     */

    import React, { Component } from 'react';
    import {
      AppRegistry,
      StyleSheet,
      Text,
      TouchableHighlight
    } from 'react-native';
    import { RNCamera } from 'react-native-camera';

    export default class ReactNativeDemo extends Component {

      // Start with the back camera
      state = { cameraType: RNCamera.Constants.Type.back };

      // Called when a barcode (such as a QR code) is scanned
      _onBarCodeRead(e) {
        // data: string;
        // rawData?: string;
        // type: keyof BarCodeType;
        // bounds:
        //   For iOS use `{ origin: Point<string>, size: Size<string> }`
        //   For Android use `{ width: number, height: number, origin: Array<Point<string>> }`
        console.log(e)
      }

      // Switch the camera direction
      // Error encountered while writing this method:
      //   undefined is not an object (evaluating 'state.cameraType')
      _switchCamera() {
        this.setState({
          cameraType: (this.state.cameraType === RNCamera.Constants.Type.back) ?
            RNCamera.Constants.Type.front : RNCamera.Constants.Type.back
        })

        // let state = this.state;
        // state.cameraType = (state.cameraType === RNCamera.Constants.Type.back) ?
        //   RNCamera.Constants.Type.front : RNCamera.Constants.Type.back;
        // this.setState(state);
      }

      // Take a picture
      _takePicture() {
        this.refs.camera.takePictureAsync().then((response) => {
          console.log("response.uri:" + response.uri)
        }).catch((error) => {
          console.log("error:" + error)
        })
      }

      render() {
        return (
          <RNCamera
            ref="camera"
            style={styles.container}
            onBarCodeRead={this._onBarCodeRead.bind(this)}
            type={this.state.cameraType}
          >
            <TouchableHighlight onPress={this._switchCamera.bind(this)}>
              <Text style={styles.switch}>Switch Camera</Text>
            </TouchableHighlight>

            <TouchableHighlight onPress={this._takePicture.bind(this)}>
              <Text style={styles.picture}>Take Picture</Text>
            </TouchableHighlight>
          </RNCamera>
        );
      }
    }

    const styles = StyleSheet.create({
      container: {
        flex: 1,
        justifyContent: 'center',
        alignItems: 'center',
        backgroundColor: 'transparent'
      },
      switch: {
        marginTop: 30,
        textAlign: 'center',
        fontSize: 30,
        color: 'red'
      },
      picture: {
        marginTop: 30,
        textAlign: 'center',
        fontSize: 30,
        color: 'red'
      }
    });

    AppRegistry.registerComponent('ReactNativeDemo', () => ReactNativeDemo);
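The scan handler in the example simply logs every event. In practice a barcode callback can fire repeatedly while the same code stays in view, so one possible refinement is to suppress repeats for a short period. The sketch below illustrates that idea; the name `dedupeScans`, the 2000 ms default, and the injectable `now` clock are all hypothetical choices rather than anything the library provides.

```javascript
// Wraps a scan handler so the same barcode payload is reported at most
// once per `windowMs`. `now` is injectable to keep the sketch testable.
function dedupeScans(onScan, windowMs = 2000, now = Date.now) {
  let lastData = null;
  let lastTime = -Infinity;
  return function handleBarCodeRead(event) {
    const t = now();
    if (event.data === lastData && t - lastTime < windowMs) {
      return false; // same code seen again too soon: ignore it
    }
    lastData = event.data;
    lastTime = t;
    onScan(event);
    return true;
  };
}

// Example with a fake clock instead of a real camera.
let fakeClock = 0;
const reported = [];
const handler = dedupeScans((e) => reported.push(e.data), 1000, () => fakeClock);
handler({ data: 'A' }); // reported
fakeClock = 500;
handler({ data: 'A' }); // ignored, within the window
handler({ data: 'B' }); // reported, different payload
console.log(reported.join(',')); // A,B
```

The returned function could be passed as the `onBarCodeRead` prop in place of `_onBarCodeRead`.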

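On the simulator, the camera cannot be started.

On a real device, the example runs once camera and microphone access has been authorized.

The log printed when taking a picture is as follows: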

2020-01-11 10:52:23.940 [info][tid:com.facebook.react.JavaScript] response.uri:file:///var/mobile/Containers/Data/Application/F36A8D1A-2F96-4E24-B2D8-9755AD1FF488/Library/Caches/Camera/F4B30B4E-69C5-4D61-B2AE-99426AED52F2.jpg