Spark MLlib

部分内容原文地址:

掘金:美图数据团队:从Spark MLlib到美图机器学习框架实践

博客园:牧梦者:Spark MLlib 机器学习 ***

一、Spark MLlib

在 Spark 官网上展示了逻辑回归算法在 Spark 和 Hadoop 上运行性能比较,从下图可以看出 MLlib 比 MapReduce 快了 100 倍。

Spark MLlib 主要包括以下几方面的内容:

- 学习算法:分类、回归、聚类和协同过滤;

- 特征处理:特征提取、变换、降维和选择;

- 管道(Pipeline):用于构建、评估和调整机器学习管道的工具;

- 持久性:保存和加载算法,模型和管道;

- 实用工具:线性代数,统计,最优化,调参等工具。

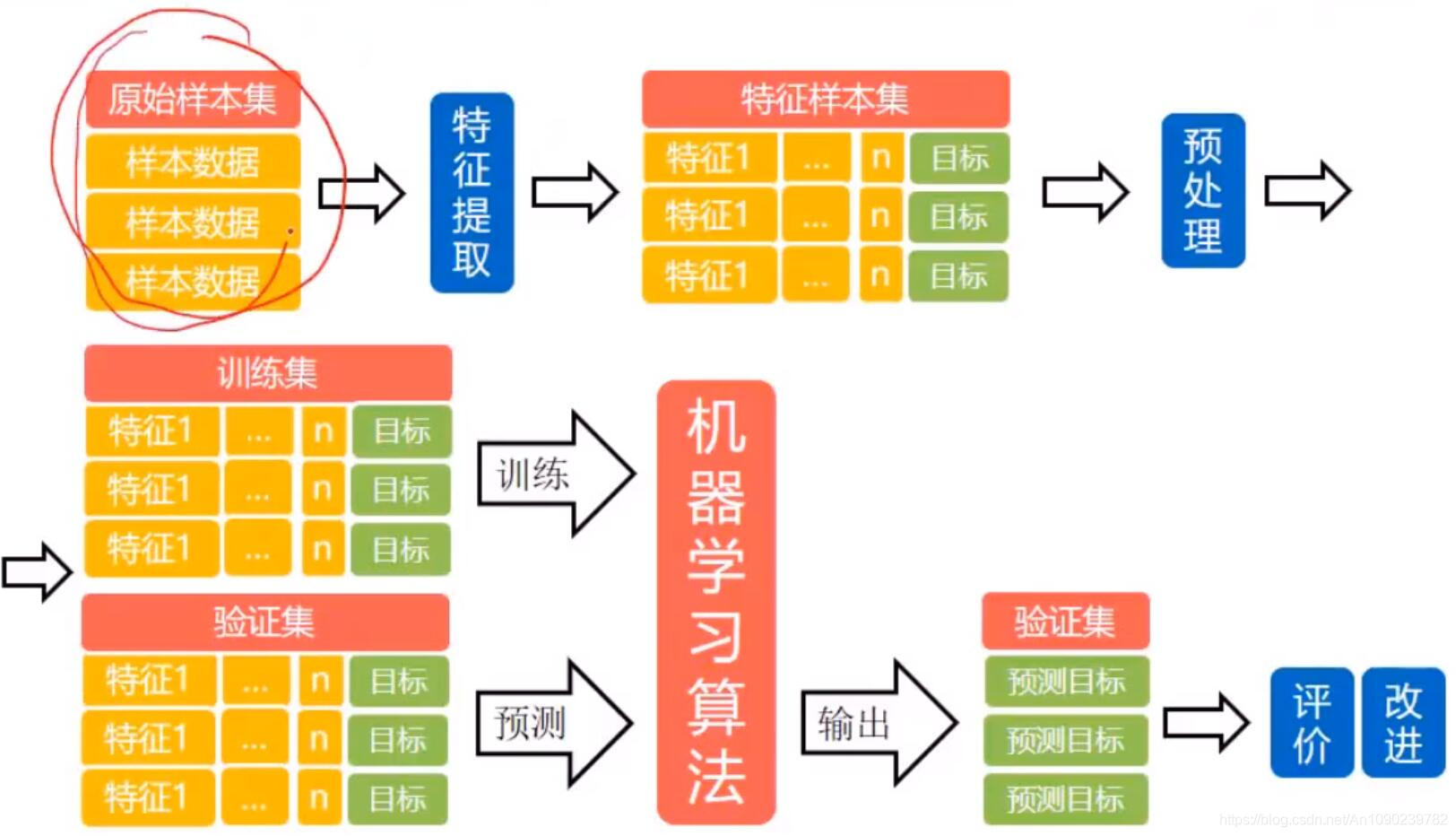

Spark MLlib 典型流程如下:

- 构造训练数据集

- 构建各个 Stage

- Stage 组成 Pipeline

- 启动模型训练

- 评估模型效果

- 计算预测结果

通过一个 Pipeline 的文本分类示例来加深理解:

import org.apache.spark.ml.{Pipeline, PipelineModel}

import org.apache.spark.ml.classification.LogisticRegression

import org.apache.spark.ml.feature.{HashingTF, Tokenizer}

import org.apache.spark.ml.linalg.Vector

import org.apache.spark.sql.Row

// Prepare training documents from a list of (id, text, label) tuples.

val training = spark.createDataFrame(Seq(

(0L, "a b c d e spark", 1.0),

(1L, "b d", 0.0),

(2L, "spark f g h", 1.0),

(3L, "hadoop mapreduce", 0.0)

)).toDF("id", "text", "label")

// Configure an ML pipeline, which consists of three stages: tokenizer, hashingTF, and lr.

val tokenizer = new Tokenizer()

.setInputCol("text")

.setOutputCol("words")

val hashingTF = new HashingTF()

.setNumFeatures(1000)

.setInputCol(tokenizer.getOutputCol)

.setOutputCol("features")

val lr = new LogisticRegression()

.setMaxIter(10)

.setRegParam(0.001)

val pipeline = new Pipeline()

.setStages(Array(tokenizer, hashingTF, lr))

// Fit the pipeline to training documents.

val model = pipeline.fit(training)

// Now we can optionally save the fitted pipeline to disk

model.write.overwrite().save("/tmp/spark-logistic-regression-model")

// We can also save this unfit pipeline to disk

pipeline.write.overwrite().save("/tmp/unfit-lr-model")

// And load it back in during production

val sameModel = PipelineModel.load("/tmp/spark-logistic-regression-model")

// Prepare test documents, which are unlabeled (id, text) tuples.

val test = spark.createDataFrame(Seq(

(4L, "spark i j k"),

(5L, "l m n"),

(6L, "spark hadoop spark"),

(7L, "apache hadoop")

)).toDF("id", "text")

// Make predictions on test documents.

model.transform(test)

.select("id", "text", "probability", "prediction")

.collect()

.foreach { case Row(id: Long, text: String, prob: Vector, prediction: Double) =>

println(s"($id, $text) --> prob=$prob, prediction=$prediction")

}

模型选择与调参

Spark MLlib 提供了 CrossValidator 和 TrainValidationSplit 两个模型选择和调参工具。模型选择与调参的三个基本组件分别是 Estimator、ParamGrid 和 Evaluator,其中 Estimator 包括算法或者 Pipeline;ParamGrid 即 ParamMap 集合,提供参数搜索空间;Evaluator 即评价指标。

CrossValidator

CrossValidator 将数据集按照交叉验证数切分成 n 份,每次用 n-1 份作为训练集,剩余的作为测试集,训练并评估模型,重复 n 次,得到 n 个评估结果,求 n 次的平均值作为这次交叉验证的结果。接着对每个候选 ParamMap 重复上面的过程,选择最优的 ParamMap 并重新训练模型,得到最优参数的模型输出。

// We use a ParamGridBuilder to construct a grid of parameters to search over.

// With 3 values for hashingTF.numFeatures and 2 values for lr.regParam,

// this grid will have 3 x 2 = 6 parameter settings for CrossValidator to choose from.

val paramGrid = new ParamGridBuilder()

.addGrid(hashingTF.numFeatures, Array(10, 100, 1000))

.addGrid(lr.regParam, Array(0.1, 0.01))

.build()

// We now treat the Pipeline as an Estimator, wrapping it in a CrossValidator instance.

// This will allow us to jointly choose parameters for all Pipeline stages.

// A CrossValidator requires an Estimator, a set of Estimator ParamMaps, and an Evaluator.

// Note that the evaluator here is a BinaryClassificationEvaluator and its default metric

// is areaUnderROC.

val cv = new CrossValidator()

.setEstimator(pipeline)

.setEvaluator(new BinaryClassificationEvaluator)

.setEstimatorParamMaps(paramGrid)

.setNumFolds(2) // Use 3+ in practice

.setParallelism(2) // Evaluate up to 2 parameter settings in parallel

// Run cross-validation, and choose the best set of parameters.

val cvModel = cv.fit(training)

// Prepare test documents, which are unlabeled (id, text) tuples.

val test = spark.createDataFrame(Seq(

(4L, "spark i j k"),

(5L, "l m n"),

(6L, "mapreduce spark"),

(7L, "apache hadoop")

)).toDF("id", "text")

// Make predictions on test documents. cvModel uses the best model found (lrModel).

cvModel.transform(test)

.select("id", "text", "probability", "prediction")

.collect()

.foreach { case Row(id: Long, text: String, prob: Vector, prediction: Double) =>

println(s"($id, $text) --> prob=$prob, prediction=$prediction")

}

TrainValidationSplit

TrainValidationSplit 使用 trainRatio 参数将训练集按照比例切分成训练和验证集,其中 trainRatio 比例的样本用于训练,剩余样本用于验证。与 CrossValidator 不同的是,TrainValidationSplit 只有一次验证过程,可以简单看成是 CrossValidator 的 n 为 2 时的特殊版本。

import org.apache.spark.ml.evaluation.RegressionEvaluator

import org.apache.spark.ml.regression.LinearRegression

import org.apache.spark.ml.tuning.{ParamGridBuilder, TrainValidationSplit}

// Prepare training and test data.

val data = spark.read.format("libsvm").load("data/mllib/sample_linear_regression_data.txt")

val Array(training, test) = data.randomSplit(Array(0.9, 0.1), seed = 12345)

val lr = new LinearRegression()

.setMaxIter(10)

// We use a ParamGridBuilder to construct a grid of parameters to search over.

// TrainValidationSplit will try all combinations of values and determine best model using

// the evaluator.

val paramGrid = new ParamGridBuilder()

.addGrid(lr.regParam, Array(0.1, 0.01))

.addGrid(lr.fitIntercept)

.addGrid(lr.elasticNetParam, Array(0.0, 0.5, 1.0))

.build()

// In this case the estimator is simply the linear regression.

// A TrainValidationSplit requires an Estimator, a set of Estimator ParamMaps, and an Evaluator.

val trainValidationSplit = new TrainValidationSplit()

.setEstimator(lr)

.setEvaluator(new RegressionEvaluator)

.setEstimatorParamMaps(paramGrid)

// 80% of the data will be used for training and the remaining 20% for validation.

.setTrainRatio(0.8)

// Evaluate up to 2 parameter settings in parallel

.setParallelism(2)

// Run train validation split, and choose the best set of parameters.

val model = trainValidationSplit.fit(training)

// Make predictions on test data. model is the model with combination of parameters

// that performed best.

model.transform(test)

.select("features", "label", "prediction")

.show()

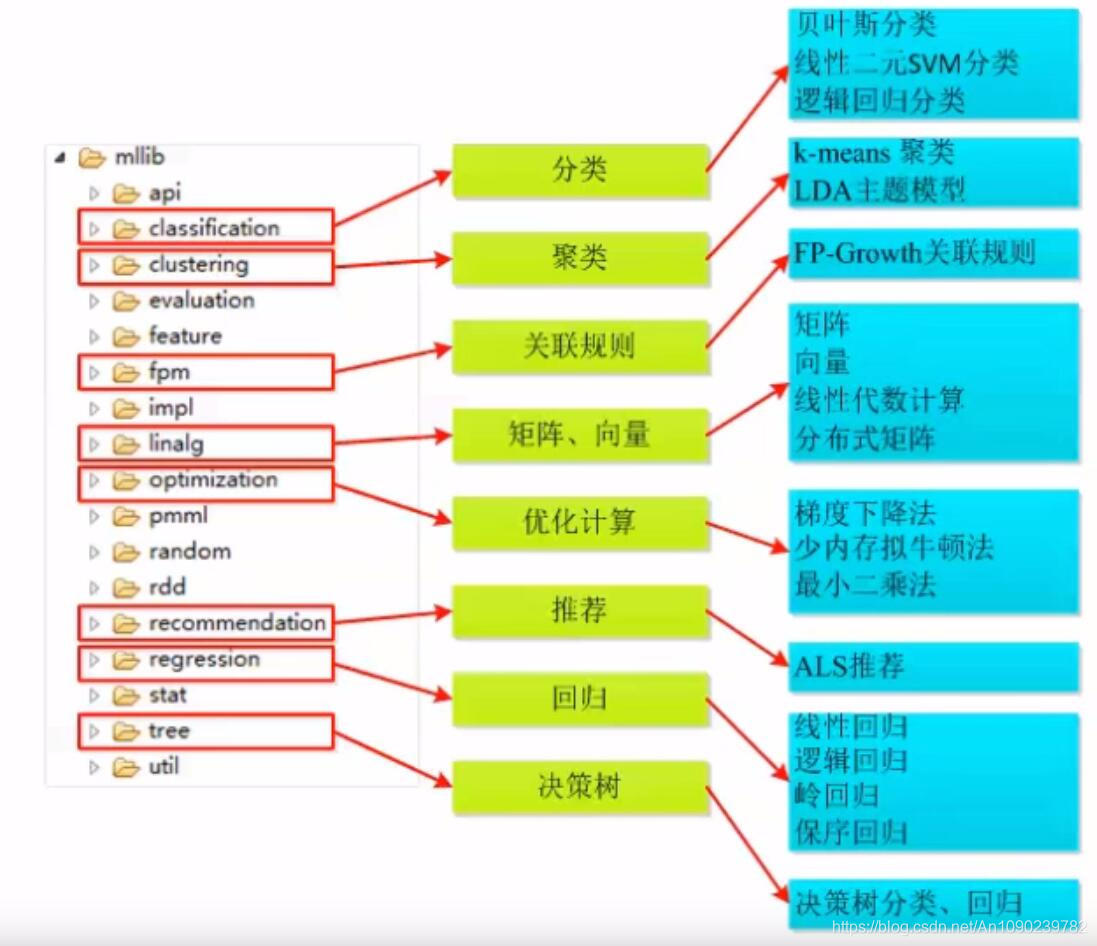

MLlib目录结构

MLlib处理流程

MLlib构成

两个算法包:

- spark.mllib:包含原始API,构建在RDD之上。

- spark.ml:基于DataFrame构建的高级API。

spark.ml具备更优的性能和更好的扩展性,建议优先选用。

spark.mllib相较于spark.ml包含更多的算法。

数据类型(Data Type)

向量:带类别的向量,矩阵等。

数学统计计算库

基本统计量(min,max,average等),相关分析,随机数产生器,假设检验等。

机器学习管道(pipeline)

Transformer、Estimator、Parameter。

机器学习算法

分类算法、回归算法、聚类算法、协同过滤。

二、Spark MLlib算法库

Spark MLlib算法库主要包含两类算法:分类算法与回归算法。

2.1 推荐算法(AlterNating Least Squares)(ALS)

协同过滤(Collaborative Filtering,简称CF)推荐算法,CF的基本思想是根据用户之前的喜好以及其他兴趣相近的用户的选择来给用户推荐物品。

CF推荐算法分类:

- User-based:基于用户对物品的偏好找到相邻邻居用户,然后将邻居用户喜好的推荐给当前用户。

- Item-based:基于用户对物品的偏好找到相似的物品,然后根据用户的历史偏好,推荐相似的物品给他。

2.2 ALS:Scala

import org.apache.spark.ml.evalution.RegressionEvaluator

import org.apache.soark.ml.recommendation.ALS

case class Rating(userId:Int,movieId:Int,rating:Float,timestamp:Long)

def parseRating(str: String): Rating = {

val fields = str.split("::")

assert(fields.size == 4)

Rating(fields(0).toInt,fields(1).toInt,fields(2).toFloat,fields(3).toLong)

}

val ratings = spark.read.textFile("data/mllib/als/sample_movicelens_ratings.txt")

.map(parseRating)

,toDF()

val Array(training.test) = ratings.randomSplit(Array(0.8,0.2))

val als = new ALS()

.setMaxIter(5)

.setRegParam(0.01)

.setUserCol("userId")

.setItemCOl("movieId")

.setRatingCol("rating")

val model = als.fit(training)

val predictions = model.transform(test)

val evaluator = new RegressionEvaluator()

.setMetricName("rmse")

.setLabelCol("rating")

.setPredicationCol("prediction")

val rmse = evaluator.evaluate(predictions)

println(s"Root-mean-squar error = $rmse")