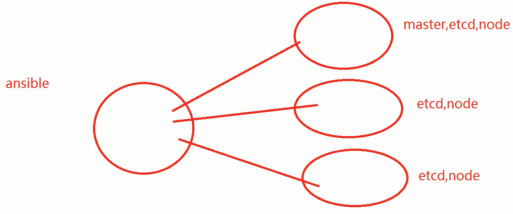

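1. Deployment environment

The k8s cluster in this walkthrough uses four machines: one deployment node and three cluster nodes. The deployment node uses ansible to push the k8s deployment files to the cluster nodes and install them.

The four hosts are node, node1, node2 and node3:

a) Deployment node: node;

b) master node: node1;

c) etcd nodes: node1, node2, node3;

d) Compute (node) nodes: node1, node2, node3.

Component versions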

kubernetes v1.9.7

etcd v3.3.4

docker 18.03.0-ce

calico/node:v3.0.6

calico/cni:v2.0.5

calico/kube-controllers:v2.0.4

centos 7.3+

2. Upload the installation archive to the deployment node and extract it

[root@node opt]# ls

kubernetes.tar.gz rh

[root@node opt]# tar zxf kubernetes.tar.gz

3. Hostname resolution and passwordless SSH (do this on every machine)

    [root@node opt]# cat /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    172.16.254.20 reg.yunwei.edu
    192.168.16.95 node
    192.168.16.96 node1
    192.168.16.98 node2
    192.168.16.99 node3
    [root@node opt]# ssh-keygen
    [root@node opt]# ssh-copy-id node3
    [root@node opt]# ssh-copy-id node2
    [root@node opt]# ssh-copy-id node1
    [root@node opt]# ssh-copy-id node
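Before copying keys by hostname it can help to confirm that every name really is present in /etc/hosts. The following is a minimal sketch, not part of the original procedure; the function name `missing_hosts` is hypothetical.

```shell
#!/bin/sh
# Print each name (arguments after the first) that has no entry in the
# given hosts file. Prints nothing when every name is present.
missing_hosts() {
    hosts_file=$1
    shift
    for name in "$@"; do
        # A name counts as present when it appears as a whole field
        # (after the address column) on a non-comment line.
        if ! awk -v n="$name" '
            /^[ \t]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$hosts_file"; then
            echo "$name"
        fi
    done
}
```

A possible use on each machine is `missing_hosts /etc/hosts node node1 node2 node3`; if it prints nothing, a loop such as `for h in node node1 node2 node3; do ssh-copy-id "$h"; done` distributes the key to all four hosts.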

4. Install docker; see the separate docker installation guide.

5. Pull and run the dockerized ansible

    [root@node ~]# docker pull reg.yunwei.edu/learn/ansible:alpine3
    [root@node ~]# docker images
    reg.yunwei.edu/learn/ansible   alpine3   1acb4fd5df5b   17 months ago   129MB
    [root@node ~]# docker run -itd -v /etc/ansible:/etc/ansible -v /etc/kubernetes/:/etc/kubernetes/ -v /root/.kube:/root/.kube -v /usr/local/bin/:/usr/local/bin/ 1acb4fd5df5b /bin/sh
    2a08c5849991a507219c5916b2fd2b26dc9e2befddcf2793bb35dacec1fe1da8
    [root@node ~]# docker ps
    CONTAINER ID   IMAGE          COMMAND     CREATED          STATUS          PORTS   NAMES
    2a08c5849991   1acb4fd5df5b   "/bin/sh"   30 seconds ago   Up 29 seconds           stupefied_easley
    [root@node ~]# docker exec -it 2a08c5849991 /bin/sh
    / #

6. Move the playbook files into the ansible directory

The host directory /etc/ansible/ is mounted into the container at /etc/ansible/, so moving all the k8s playbook files into /etc/ansible on the host is enough.

    [root@node ~]# cd /opt/
    [root@node opt]# ls
    kubernetes  kubernetes.tar.gz  rh
    [root@node kubernetes]# ls
    bash  image.tar.gz  scope.yaml
    ca.tar.gz  k8s197.tar.gz  sock-shop
    harbor-offline-installer-v1.4.0.tgz  kube-yunwei-197.tar.gz
    [root@node kubernetes]# tar zxf kube-yunwei-197.tar.gz
    [root@node kubernetes]# ls
    bash  kube-yunwei-197
    ca.tar.gz  kube-yunwei-197.tar.gz
    harbor-offline-installer-v1.4.0.tgz  scope.yaml
    image.tar.gz  sock-shop
    k8s197.tar.gz
    [root@node kube-yunwei-197]# mv ./* /etc/ansible/

Check from inside the container:

    [root@node ~]# docker exec -it 2a08c5849991 /bin/sh
    / # cd /etc/ansible/
    /etc/ansible # ls
    01.prepare.yml      06.network.yml  manifests
    02.etcd.yml         99.clean.yml    roles
    03.docker.yml       ansible.cfg     tools
    04.kube-master.yml  bin
    05.kube-node.yml    example

Move the k8s binaries into /etc/ansible/bin:

    [root@node kubernetes]# rm -rf kube-yunwei-197
    [root@node kubernetes]# tar zxf k8s197.tar.gz
    [root@node kubernetes]# ls
    bash  harbor-offline-installer-v1.4.0.tgz  kube-yunwei-197.tar.gz
    bin  image.tar.gz  scope.yaml
    ca.tar.gz  k8s197.tar.gz
    [root@node kubernetes]# cd bin
    [root@node bin]# ls
    bridge                 docker-containerd-shim  kube-apiserver
    calicoctl              dockerd                 kube-controller-manager
    cfssl                  docker-init             kubectl
    cfssl-certinfo         docker-proxy            kubelet
    cfssljson              docker-runc             kube-proxy
    docker                 etcd                    kube-scheduler
    docker-compose         etcdctl                 loopback
    docker-containerd      flannel                 portmap
    docker-containerd-ctr  host-local
    [root@node bin]# mv * /etc/ansible/bin/
    [root@node bin]# ls
    [root@node bin]# cd ..
    [root@node kubernetes]# rm -rf bin/

7. Edit the ansible configuration (the hosts inventory)

    [root@node kubernetes]# cd /etc/ansible/
    [root@node ansible]# ls
    01.prepare.yml  04.kube-master.yml  99.clean.yml  example    tools
    02.etcd.yml     05.kube-node.yml    ansible.cfg   manifests
    03.docker.yml   06.network.yml      bin           roles
    [root@node ansible]# cd example/
    [root@node example]# ls
    hosts.s-master.example
    [root@node example]# cp hosts.s-master.example ../hosts   # copy the example to ../hosts, then edit that copy
    [root@node example]# cd ..
    [root@node ansible]# ls
    01.prepare.yml  04.kube-master.yml  99.clean.yml  example  roles
    02.etcd.yml     05.kube-node.yml    ansible.cfg   hosts    tools
    03.docker.yml   06.network.yml      bin           manifests
    [root@node ansible]# vim hosts

    # Deployment node: the node that runs the ansible playbooks
    [deploy]
    192.168.16.95

    [etcd]
    192.168.16.96 NODE_NAME=etcd1 NODE_IP="192.168.16.96"
    192.168.16.98 NODE_NAME=etcd2 NODE_IP="192.168.16.98"
    192.168.16.99 NODE_NAME=etcd3 NODE_IP="192.168.16.99"

    [kube-master]
    192.168.16.96 NODE_IP="192.168.16.96"

    [kube-node]
    192.168.16.98 NODE_IP="192.168.16.98"
    192.168.16.99 NODE_IP="192.168.16.99"

    [all:vars]
    # --------- Main cluster parameters ---------------
    # Cluster deployment mode: allinone, single-master, multi-master
    DEPLOY_MODE=single-master

    # Cluster MASTER IP
    MASTER_IP="192.168.16.96"

    # Cluster APISERVER
    KUBE_APISERVER="https://192.168.16.96:6443"

    # Token used for TLS bootstrapping; generate with: head -c 16 /dev/urandom | od -An -t x | tr -d ' '
    BOOTSTRAP_TOKEN="d18f94b5fa585c7123f56803d925d2e7"

    # Cluster network plugin; calico and flannel are currently supported
    CLUSTER_NETWORK="calico"

    # calico works at layer 3 and can span subnets
    CALICO_IPV4POOL_IPIP="always"
    IP_AUTODETECTION_METHOD="can-reach=223.5.5.5"
    FLANNEL_BACKEND="vxlan"
    SERVICE_CIDR="10.68.0.0/16"

    # POD subnet (Cluster CIDR): not routable before deployment, routable after deployment
    CLUSTER_CIDR="172.20.0.0/16"

    # Service port range (NodePort Range)
    NODE_PORT_RANGE="20000-40000"

    # kubernetes service IP (pre-allocated, normally the first IP in SERVICE_CIDR)
    CLUSTER_KUBERNETES_SVC_IP="10.68.0.1"

    # Cluster DNS service IP (pre-allocated from SERVICE_CIDR)
    CLUSTER_DNS_SVC_IP="10.68.0.2"

    # Cluster DNS domain
    CLUSTER_DNS_DOMAIN="cluster.local."

    # IPs and ports for etcd peer communication; set according to the actual etcd cluster members
    ETCD_NODES="etcd1=https://192.168.16.96:2380,etcd2=https://192.168.16.98:2380,etcd3=https://192.168.16.99:2380"

    # etcd client endpoint list; set according to the actual etcd cluster members
    ETCD_ENDPOINTS="https://192.168.16.96:2379,https://192.168.16.98:2379,https://192.168.16.99:2379"

    # Username and password for cluster basic auth
    BASIC_AUTH_USER="admin"
    BASIC_AUTH_PASS="admin"

    # --------- Additional parameters --------------------
    # Default binary directory
    bin_dir="/usr/local/bin"

    # Certificate directory
    ca_dir="/etc/kubernetes/ssl"

    # Deployment directory, i.e. the ansible working directory
    base_dir="/etc/ansible"

Check the edited hosts file from inside the container:

    /etc/ansible # ls
    01.prepare.yml      06.network.yml  hosts
    02.etcd.yml         99.clean.yml    manifests
    03.docker.yml       ansible.cfg     roles
    04.kube-master.yml  bin             tools
    05.kube-node.yml    example
    /etc/ansible # cat hosts
    .....
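The ETCD_NODES and ETCD_ENDPOINTS values are long and easy to mistype, so they can be generated from the list of etcd IPs instead. This is a small sketch assuming the naming pattern shown in the inventory (etcd1, etcd2, ... with peer port 2380 and client port 2379); the function names are hypothetical. The token helper wraps the command quoted in the inventory comment.

```shell
#!/bin/sh
# Build the ETCD_NODES value: etcd1=https://IP:2380,etcd2=https://IP:2380,...
etcd_nodes() {
    i=1
    out=""
    for ip in "$@"; do
        out="${out}${out:+,}etcd${i}=https://${ip}:2380"
        i=$((i + 1))
    done
    echo "$out"
}

# Build the ETCD_ENDPOINTS value: https://IP:2379,https://IP:2379,...
etcd_endpoints() {
    out=""
    for ip in "$@"; do
        out="${out}${out:+,}https://${ip}:2379"
    done
    echo "$out"
}

# Generate a 32-character hex token from 16 random bytes.
new_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
    echo
}
```

For this cluster, `etcd_nodes 192.168.16.96 192.168.16.98 192.168.16.99` yields the ETCD_NODES string used in the inventory, and the same arguments passed to `etcd_endpoints` yield ETCD_ENDPOINTS.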

8. After editing the hosts inventory, ping every node with ansible. By default the ping fails, because the container has no SSH key installed on the nodes yet.

    /etc/ansible # ansible all -m ping
    192.168.16.99 | UNREACHABLE! => {
        "changed": false,
        "msg": "Failed to connect to the host via ssh: Warning: Permanently added '192.168.16.99' (ECDSA) to the list of known hosts. Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password). ",
        "unreachable": true
    }
    192.168.16.98 | UNREACHABLE! => {
        "changed": false,
        "msg": "Failed to connect to the host via ssh: Warning: Permanently added '192.168.16.98' (ECDSA) to the list of known hosts. Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password). ",
        "unreachable": true
    }
    192.168.16.96 | UNREACHABLE! => {
        "changed": false,
        "msg": "Failed to connect to the host via ssh: Warning: Permanently added '192.168.16.96' (ECDSA) to the list of known hosts. Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password). ",
        "unreachable": true
    }
    192.168.16.95 | UNREACHABLE! => {
        "changed": false,
        "msg": "Failed to connect to the host via ssh: Warning: Permanently added '192.168.16.95' (ECDSA) to the list of known hosts. Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password). ",
        "unreachable": true
    }

A key pair has to be generated inside the container; the key files are stored in /root/.ssh.

    /etc/ansible # ssh-keygen

Press Enter three times to accept the defaults.

Take care when sending the key: the container has no hostname resolution configured, so send the key directly to each host's IP.

    /etc/ansible # ssh-copy-id 192.168.16.95
    /etc/ansible # ssh-copy-id 192.168.16.96
    /etc/ansible # ssh-copy-id 192.168.16.98
    /etc/ansible # ssh-copy-id 192.168.16.99

Once the keys have been sent, the ping succeeds:

    /etc/ansible # ansible all -m ping
    192.168.16.99 | SUCCESS => {
        "changed": false,
        "ping": "pong"
    }
    192.168.16.96 | SUCCESS => {
        "changed": false,
        "ping": "pong"
    }
    192.168.16.98 | SUCCESS => {
        "changed": false,
        "ping": "pong"
    }
    192.168.16.95 | SUCCESS => {
        "changed": false,
        "ping": "pong"
    }
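Rather than typing each address, the list of IPs can be read from the inventory itself. The sketch below assumes that host lines in /etc/ansible/hosts start with a bare IPv4 address, as in the inventory above; `inventory_ips` is a hypothetical helper name.

```shell
#!/bin/sh
# Print each distinct IPv4 address that appears as the first field of a
# line in the given inventory file, in order of first appearance.
inventory_ips() {
    awk '$1 ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/ && !seen[$1]++ { print $1 }' "$1"
}
```

Inside the container this could be used as `for ip in $(inventory_ips /etc/ansible/hosts); do ssh-copy-id "$ip"; done`. Variable lines such as MASTER_IP="..." are ignored because their first field is not a bare address.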

9. Go to the ansible directory and run the .yml playbooks

    /etc/ansible # cd /etc/ansible/
    /etc/ansible # ls
    01.prepare.yml      06.network.yml  hosts
    02.etcd.yml         99.clean.yml    manifests
    03.docker.yml       ansible.cfg     roles
    04.kube-master.yml  bin             tools
    05.kube-node.yml    example

Step 1: prepare the environment

/etc/ansible # ansible-playbook 01.prepare.yml

Step 2: deploy the etcd service on the three nodes

/etc/ansible # ansible-playbook 02.etcd.yml

Step 3: install docker on the three nodes

First clean out any existing docker installation:

    [root@node1 ~]# ls
    anaconda-ks.cfg  apache2  doc_file  docker  test
    [root@node1 ~]# cd docker/
    [root@node1 docker]# ls
    ca.crt  docker-app.tar.gz  docker.sh  remove.sh
    [root@node1 docker]# sh remove.sh

    [root@node2 ~]# cd docker/
    [root@node2 docker]# sh remove.sh
    Removed symlink /etc/systemd/system/multi-user.target.wants/docker.service.
    [root@node2 docker]# docker images
    -bash: /usr/local/bin/docker: No such file or directory

    [root@node3 ~]# cd docker/
    [root@node3 docker]# sh remove.sh
    Removed symlink /etc/systemd/system/multi-user.target.wants/docker.service.
    [root@node3 docker]# docker ps
    -bash: /usr/local/bin/docker: No such file or directory

Install docker:

/etc/ansible # ansible-playbook 03.docker.yml

docker is now deployed:

    /etc/ansible # ansible all -m shell -a 'docker ps'
    192.168.16.96 | SUCCESS | rc=0 >>
    CONTAINER ID   IMAGE   COMMAND   CREATED   STATUS   PORTS   NAMES

    192.168.16.99 | SUCCESS | rc=0 >>
    CONTAINER ID   IMAGE   COMMAND   CREATED   STATUS   PORTS   NAMES

    192.168.16.98 | SUCCESS | rc=0 >>
    CONTAINER ID   IMAGE   COMMAND   CREATED   STATUS   PORTS   NAMES

    192.168.16.95 | SUCCESS | rc=0 >>
    CONTAINER ID   IMAGE          COMMAND     CREATED             STATUS             PORTS   NAMES
    2a08c5849991   1acb4fd5df5b   "/bin/sh"   About an hour ago   Up About an hour           stupefied_easley

Step 4: deploy the kube-master node

Before deploying kube-master, make sure the /root/.kube directory is mounted into the container correctly.

On the host:

    [root@node ansible]# cd
    [root@node ~]# ls -a
    .                apache2        .bashrc  doc_file  .ssh
    ..               .bash_history  .cache   docker    .tcshrc
    anaconda-ks.cfg  .bash_logout   .config  .kube     test
    .ansible         .bash_profile  .cshrc   .pki      .viminfo
    [root@node ~]# cd .kube/
    [root@node .kube]# ls
    config

In the container:

    /etc/ansible # cd /root
    ~ # ls -a
    .   .ansible      .cache  .ssh
    ..  .ash_history  .kube
    ~ # cd .kube/
    ~/.kube # ls
    config

Deploy the master:

/etc/ansible # ansible-playbook 04.kube-master.yml

Step 5: deploy the node (compute) nodes

/etc/ansible # ansible-playbook 05.kube-node.yml

Send the image archive to each cluster node and extract it there:

    [root@node opt]# cd kubernetes/
    [root@node kubernetes]# ls
    bash  image.tar.gz  scope.yaml
    ca.tar.gz  k8s197.tar.gz  sock-shop
    harbor-offline-installer-v1.4.0.tgz  kube-yunwei-197.tar.gz
    [root@node kubernetes]# scp image.tar.gz node1:/root
    [root@node kubernetes]# scp image.tar.gz node2:/root
    [root@node kubernetes]# scp image.tar.gz node3:/root

    [root@node1 ~]# ls
    anaconda-ks.cfg  apache2  doc_file  docker  image.tar.gz  test
    [root@node1 ~]# tar zxf image.tar.gz
    [root@node1 ~]# ls
    anaconda-ks.cfg  apache2  doc_file  docker  image  image.tar.gz  test
    [root@node1 ~]# cd image/
    [root@node1 image]# ls
    bash-completion-2.1-6.el7.noarch.rpm  calico  coredns-1.0.6.tar.gz  grafana-v4.4.3.tar
    heapster-v1.5.1.tar  influxdb-v1.3.3.tar  kubernetes-dashboard-amd64-v1.8.3.tar.gz  pause-amd64-3.1.tar

    [root@node2 ~]# ls
    anaconda-ks.cfg  apache2  doc_file  docker  image.tar.gz  test
    [root@node2 ~]# tar zxf image.tar.gz
    [root@node2 ~]# ls
    anaconda-ks.cfg  apache2  doc_file  docker  image  image.tar.gz  test
    [root@node2 ~]# cd image/
    [root@node2 image]# ls
    bash-completion-2.1-6.el7.noarch.rpm  calico  coredns-1.0.6.tar.gz  grafana-v4.4.3.tar
    heapster-v1.5.1.tar  influxdb-v1.3.3.tar  kubernetes-dashboard-amd64-v1.8.3.tar.gz  pause-amd64-3.1.tar

    [root@node3 ~]# ls
    anaconda-ks.cfg  apache2  doc_file  docker  image.tar.gz  test
    [root@node3 ~]# tar zxf image.tar.gz
    [root@node3 ~]# cd image/
    [root@node3 image]# ls
    bash-completion-2.1-6.el7.noarch.rpm  calico  coredns-1.0.6.tar.gz  grafana-v4.4.3.tar
    heapster-v1.5.1.tar  influxdb-v1.3.3.tar  kubernetes-dashboard-amd64-v1.8.3.tar.gz  pause-amd64-3.1.tar

Load all the images into docker on every node, including the images in the calico directory. docker load takes one archive per invocation, so the archives are first gathered in calico/ and then loaded one by one in a loop:

    [root@node1 image]# mv coredns-1.0.6.tar.gz heapster-v1.5.1.tar kubernetes-dashboard-amd64-v1.8.3.tar.gz grafana-v4.4.3.tar influxdb-v1.3.3.tar pause-amd64-3.1.tar calico/
    [root@node1 image]# cd calico/
    [root@node1 calico]# ls
    bash-completion-2.1-6.el7.noarch.rpm  calico-kube-controllers-v2.0.4.tar  coredns-1.0.6.tar.gz  heapster-v1.5.1.tar  kubernetes-dashboard-amd64-v1.8.3.tar.gz
    calico-cni-v2.0.5.tar  calico-node-v3.0.6.tar  grafana-v4.4.3.tar  influxdb-v1.3.3.tar  pause-amd64-3.1.tar
    [root@node1 calico]# for im in `ls`;do docker load -i $im;done

    [root@node2 ~]# cd image/
    [root@node2 image]# ls
    bash-completion-2.1-6.el7.noarch.rpm  coredns-1.0.6.tar.gz  heapster-v1.5.1.tar  kubernetes-dashboard-amd64-v1.8.3.tar.gz
    calico  grafana-v4.4.3.tar  influxdb-v1.3.3.tar  pause-amd64-3.1.tar
    [root@node2 image]# mv coredns-1.0.6.tar.gz heapster-v1.5.1.tar kubernetes-dashboard-amd64-v1.8.3.tar.gz grafana-v4.4.3.tar influxdb-v1.3.3.tar pause-amd64-3.1.tar calico/
    [root@node2 image]# cd calico/
    [root@node2 calico]# ls
    calico-kube-controllers-v2.0.4.tar  coredns-1.0.6.tar.gz  heapster-v1.5.1.tar  kubernetes-dashboard-amd64-v1.8.3.tar.gz
    calico-cni-v2.0.5.tar  calico-node-v3.0.6.tar  grafana-v4.4.3.tar  influxdb-v1.3.3.tar  pause-amd64-3.1.tar
    [root@node2 calico]# for im in `ls`;do docker load -i $im;done

    [root@node3 ~]# cd image/
    [root@node3 image]# ls
    bash-completion-2.1-6.el7.noarch.rpm  calico  coredns-1.0.6.tar.gz  grafana-v4.4.3.tar
    heapster-v1.5.1.tar  influxdb-v1.3.3.tar  kubernetes-dashboard-amd64-v1.8.3.tar.gz  pause-amd64-3.1.tar
    [root@node3 image]# mv coredns-1.0.6.tar.gz heapster-v1.5.1.tar kubernetes-dashboard-amd64-v1.8.3.tar.gz grafana-v4.4.3.tar influxdb-v1.3.3.tar pause-amd64-3.1.tar calico/
    [root@node3 image]# cd calico/
    [root@node3 calico]# ls
    calico-kube-controllers-v2.0.4.tar  coredns-1.0.6.tar.gz  heapster-v1.5.1.tar  kubernetes-dashboard-amd64-v1.8.3.tar.gz
    calico-cni-v2.0.5.tar  calico-node-v3.0.6.tar  grafana-v4.4.3.tar  influxdb-v1.3.3.tar  pause-amd64-3.1.tar
    [root@node3 calico]# for im in `ls`;do docker load -i $im;done
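A variant of the loop above avoids moving files around and skips anything that is not an image archive (for example the bash-completion rpm). It is only a sketch: `list_image_archives` is a hypothetical helper, and it assumes that every .tar and .tar.gz file under the image directory is a docker image archive.

```shell
#!/bin/sh
# Print every .tar and .tar.gz file under the given directory,
# subdirectories included, one path per line in a stable order.
list_image_archives() {
    find "$1" -type f \( -name '*.tar' -o -name '*.tar.gz' \) | LC_ALL=C sort
}
```

On each node this could be run as `list_image_archives /root/image | while read -r f; do docker load -i "$f"; done`, which loads the archives in calico/ and in image/ itself in one pass.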

Step 6: deploy the network

/etc/ansible # ansible-playbook 06.network.yml
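The numbered playbooks are run strictly in order, and a later one should not be started if an earlier one failed. A small wrapper can enforce that; this is a sketch, and both the function name `run_playbooks` and the `RUNNER` variable are assumptions introduced here (setting `RUNNER=echo` gives a dry run).

```shell
#!/bin/sh
# Run the given playbooks one after another and stop at the first failure.
# RUNNER is a hypothetical override; it defaults to ansible-playbook.
run_playbooks() {
    for pb in "$@"; do
        ${RUNNER:-ansible-playbook} "$pb" || {
            echo "failed: $pb" >&2
            return 1
        }
    done
}
```

Because docker cleanup and image loading happen by hand between some steps, the wrapper would be called once per batch from /etc/ansible, for example `run_playbooks 01.prepare.yml 02.etcd.yml` first and `run_playbooks 04.kube-master.yml 05.kube-node.yml` later.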

Deploy DNS so that containers can resolve names, then deploy the dashboard and heapster add-ons the same way:

    /etc/ansible # ls
    01.prepare.yml      05.kube-node.yml  example
    02.etcd.yml         06.network.yml    hosts
    03.docker.retry     99.clean.yml      manifests
    03.docker.yml       ansible.cfg       roles
    04.kube-master.yml  bin               tools
    /etc/ansible # cd manifests/
    /etc/ansible/manifests # ls
    coredns  dashboard  efk  heapster  ingress  kubedns
    /etc/ansible/manifests # cd coredns/
    /etc/ansible/manifests/coredns # ls
    coredns.yaml
    error: flag needs an argument: 'f' in -f
    /etc/ansible/manifests/coredns # kubectl create -f .
    /etc/ansible/manifests/coredns # cd ..
    /etc/ansible/manifests # cd dashboard/
    /etc/ansible/manifests/dashboard # ls
    1.6.3                    ui-admin-rbac.yaml
    admin-user-sa-rbac.yaml  ui-read-rbac.yaml
    kubernetes-dashboard.yaml
    /etc/ansible/manifests/dashboard # kubectl create -f .
    /etc/ansible/manifests/dashboard # cd ..
    /etc/ansible/manifests # ls
    coredns  dashboard  efk  heapster  ingress  kubedns
    /etc/ansible/manifests # cd heapster/
    /etc/ansible/manifests/heapster # ls
    grafana.yaml   influxdb-v1.1.1  influxdb.yaml
    heapster.yaml  influxdb-with-pv
    /etc/ansible/manifests/heapster # kubectl create -f .
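The three `kubectl create -f .` runs follow the same pattern, so they can be expressed as one loop over the add-on directories. The sketch below passes each directory to `kubectl create -f`, which reads the manifest files directly inside it; `apply_addons` and the `KUBECTL` override are names introduced here for illustration (setting `KUBECTL=echo` only prints the commands).

```shell
#!/bin/sh
# Create the resources from each add-on directory under a base directory,
# stopping at the first failure.
# KUBECTL is a hypothetical override; it defaults to kubectl.
apply_addons() {
    base=$1
    shift
    for addon in "$@"; do
        ${KUBECTL:-kubectl} create -f "$base/$addon" || return 1
    done
}
```

For the add-ons deployed here the call would be `apply_addons /etc/ansible/manifests coredns dashboard heapster`.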

10. On the deployment node, use kubernetes' own commands to check that the deployment finished

List the nodes:

    [root@node ~]# kubectl get node
    NAME            STATUS                     ROLES     AGE   VERSION
    192.168.16.96   Ready,SchedulingDisabled   <none>    48m   v1.9.7
    192.168.16.98   Ready                      <none>    45m   v1.9.7
    192.168.16.99   Ready                      <none>    45m   v1.9.7

List the namespaces:

    [root@node ~]# kubectl get ns
    NAME          STATUS   AGE
    default       Active   50m
    kube-public   Active   50m
    kube-system   Active   50m

List the pods. Without a namespace, only the default namespace is searched, and it is empty:

    [root@node ~]# kubectl get pod
    No resources found.

Specify the namespace to see the pods:

    [root@node ~]# kubectl get pod -n kube-system
    NAME                                       READY   STATUS    RESTARTS   AGE
    calico-kube-controllers-754c88ccc8-rbqlm   1/1     Running   0          23m
    calico-node-8zxc4                          2/2     Running   1          23m
    calico-node-b2j8n                          2/2     Running   0          23m
    calico-node-zw8gg                          2/2     Running   0          23m
    coredns-6ff7588dc6-cpmxv                   1/1     Running   0          17m
    coredns-6ff7588dc6-pmqsn                   1/1     Running   0          17m
    heapster-7f8bf9bc46-4jn5n                  1/1     Running   0          5m
    kubernetes-dashboard-545b66db97-rlr88      1/1     Running   0          10m
    monitoring-grafana-64747d765f-brbwj        1/1     Running   0          5m
    monitoring-influxdb-565ff5f9b6-vrlcq       1/1     Running   0          5m

Add -o wide for more detail:

    [root@node ~]# kubectl get pod -n kube-system -o wide
    NAME                                       READY   STATUS    RESTARTS   AGE   IP              NODE
    calico-kube-controllers-754c88ccc8-rbqlm   1/1     Running   0          25m   192.168.16.99   192.168.16.99
    calico-node-8zxc4                          2/2     Running   1          25m   192.168.16.99   192.168.16.99
    calico-node-b2j8n                          2/2     Running   0          25m   192.168.16.98   192.168.16.98
    calico-node-zw8gg                          2/2     Running   0          25m   192.168.16.96   192.168.16.96
    coredns-6ff7588dc6-cpmxv                   1/1     Running   0          18m   172.20.104.1    192.168.16.98
    coredns-6ff7588dc6-pmqsn                   1/1     Running   0          18m   172.20.135.1    192.168.16.99
    heapster-7f8bf9bc46-4jn5n                  1/1     Running   0          6m    172.20.104.3    192.168.16.98
    kubernetes-dashboard-545b66db97-rlr88      1/1     Running   0          11m   172.20.104.2    192.168.16.98
    monitoring-grafana-64747d765f-brbwj        1/1     Running   0          6m    172.20.135.2    192.168.16.99
    monitoring-influxdb-565ff5f9b6-vrlcq       1/1     Running   0          6m    172.20.135.3    192.168.16.99
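Scanning the pod table by eye gets tedious on larger clusters. A small filter over the plain `kubectl get pod` output can list only the pods that need attention; this is a sketch that assumes the default column layout shown above (NAME, READY, STATUS, ...), and `not_ready_pods` is a hypothetical name.

```shell
#!/bin/sh
# Read `kubectl get pod` output on stdin and print the name of every pod
# whose STATUS is not Running or whose READY count is incomplete (e.g. 1/2).
not_ready_pods() {
    awk 'NR > 1 {
        split($2, r, "/")
        if ($3 != "Running" || r[1] != r[2]) print $1
    }'
}
```

Usage would be `kubectl get pod -n kube-system | not_ready_pods`; empty output means every pod in the namespace is Running and fully ready. Note that pods of finished jobs (status Completed) would also be listed by this simple check.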

11. Verify that the cluster works

Pick one cluster node and ping the IP of a pod that runs on a different node. For example, from node2 ping a pod that runs on node3:

    [root@node2 calico]# ping 172.20.135.3
    PING 172.20.135.3 (172.20.135.3) 56(84) bytes of data.
    64 bytes from 172.20.135.3: icmp_seq=1 ttl=63 time=0.495 ms
    64 bytes from 172.20.135.3: icmp_seq=2 ttl=63 time=0.423 ms
    64 bytes from 172.20.135.3: icmp_seq=3 ttl=63 time=0.744 ms
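To test every pod on the pod network rather than a single address, the pod IPs can be pulled out of the `-o wide` listing. The sketch below assumes the column layout shown earlier, where the IP is the sixth column, and takes the pod network prefix as an argument; `pod_ips` is a hypothetical helper.

```shell
#!/bin/sh
# Read `kubectl get pod -o wide` output on stdin and print the IP of every
# pod whose address starts with the given prefix (e.g. "172.20.").
pod_ips() {
    awk -v p="$1" 'NR > 1 && index($6, p) == 1 { print $6 }'
}
```

Wherever kubectl is configured, `kubectl get pod -n kube-system -o wide | pod_ips 172.20.` prints the pod-network addresses; on a cluster node, that list can then be fed to `while read -r ip; do ping -c 3 "$ip"; done`. Pods that use the host network, such as calico-node, are skipped because their IP is the node address.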

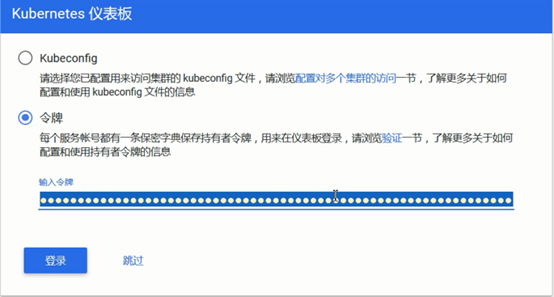

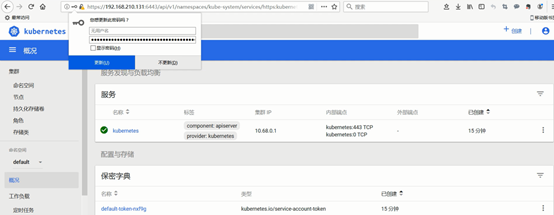

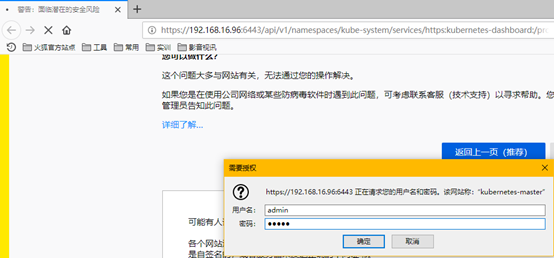

12. Once the cluster is deployed, open the dashboard

First get the address from the cluster information:

    [root@node ~]# kubectl cluster-info
    Kubernetes master is running at https://192.168.16.96:6443
    CoreDNS is running at https://192.168.16.96:6443/api/v1/namespaces/kube-system/services/coredns:dns/proxy
    kubernetes-dashboard is running at https://192.168.16.96:6443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy
    monitoring-grafana is running at https://192.168.16.96:6443/api/v1/namespaces/kube-system/services/monitoring-grafana/proxy

Copy the kubernetes-dashboard address into a browser and log in with admin as both the username and the password (the basic auth credentials set in the hosts file).

Get the login token:

[root@node ~]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret|grep admin-user|awk '{print $1}') .... token: eyJhbGciOiJSUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXJ0cGJnIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI1NTk5Nzk4Mi1hOTc3LTExZTktYmZhYy0wMDUwNTYyZDFjY2EiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.Y8I1CePpxpjvjtVC4QrJdJvjN0oclLx_2sNyzr7OxXh5rWguRs2BjeRBybTgm2_bvtnkKlGcm5XMIdpUQPNq__VY4oBCEChOYsuZnzJWGGAZXi0GQWtG83ximTAnMhia0LJ9iOtyK7bNr8F5FWZEm0Z5DBXLhnx94B-8Ljsr_MuOxkCHx1cuPQScWFbOUy8Pp9xVaUofjN9zH2CnzKumAnkRUJOHA1HtsdemU2m6Ih-PTLMZZyq7j7qTRzOuDw3K-RRvzwlNhjPe4oQZglJPKCFw-pkzpOJKfBKwwjKzUaioiUeVd9Cl23ETG69BT_KM_oEKCf9gs1KFfNAb7ixCsg

Then copy this token and use it to log in.
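The describe output contains several fields besides the token, so a small filter makes copying less error-prone. This is a sketch; `extract_token` is a hypothetical helper that relies on the token appearing on a line of the form `token: <value>`, as in the output above.

```shell
#!/bin/sh
# Read `kubectl describe secret` output on stdin and print only the value
# of the token field.
extract_token() {
    awk '$1 == "token:" { print $2 }'
}
```

Combined with the command above, this becomes `kubectl -n kube-system describe secret $(kubectl -n kube-system get secret|grep admin-user|awk '{print $1}') | extract_token`, which prints the token on a line of its own.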