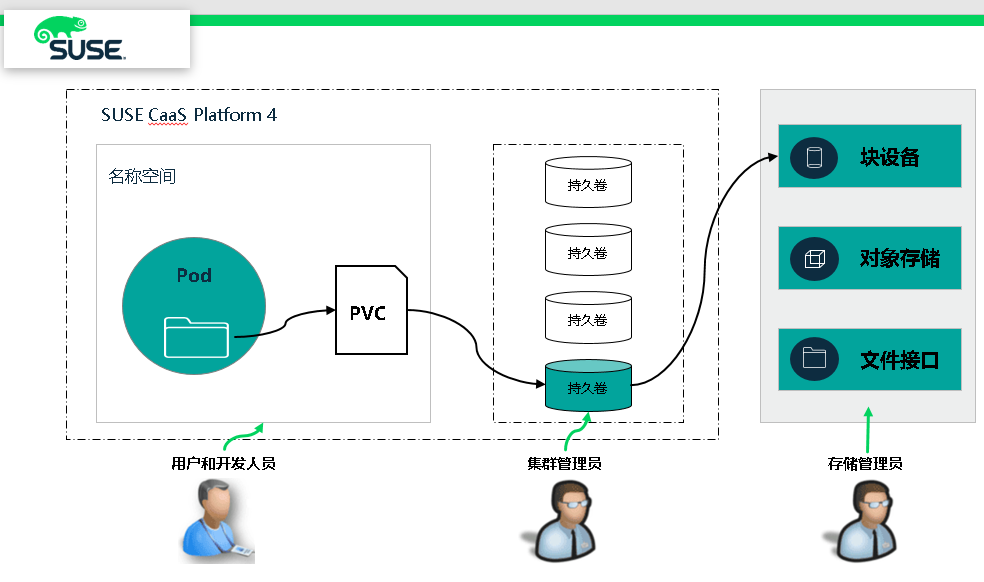

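Kubernetes exposes persistent storage through two API resources: PersistentVolume (PV) and PersistentVolumeClaim (PVC). A PV is a piece of storage that belongs to the cluster, and a PVC is a request for that storage. Kubernetes supports many persistent volume types. Ceph is an open-source distributed storage system that provides object storage, block devices and a file system, and is known for high reliability, easy management and good scalability. In day-to-day work with k8s, workloads often need persistent backend storage, so that a Pod mounts the same volume and can read and write its data no matter which node it is scheduled to. Combining Kubernetes with Ceph is one way to achieve this.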

Figure 1. Relationship between a Pod volume, the PVC, and the storage device

Lab environment setup - static provisioning

Figure 2. Lab environment architecture

1. Install ceph-common on all nodes

# zypper -n in ceph-common

Copy ceph.conf to the worker nodes (run on each worker)

# scp admin:/etc/ceph/ceph.conf /etc/ceph/

2. Create a pool named caasp4 with 128 placement groups

# ceph osd pool create caasp4 128

3. Create a key and store it in the /etc/ceph/ directory

# ceph auth get-or-create client.caasp4 mon 'allow r' osd 'allow rwx pool=caasp4' -o /etc/ceph/caasp4.keyring

4. Create a 2 GiB RBD image (-s takes the size in megabytes by default, so 2048 gives 2 GiB)

# rbd create caasp4/ceph-image-test -s 2048

5. Look up the key in the Ceph cluster and generate its base64 encoding

# ceph auth list
.......
client.admin
        key: AQA9w4VdAAAAABAAHZr5bVwkALYo6aLVryt7YA==
        caps: [mds] allow *
        caps: [mgr] allow *
        caps: [mon] allow *
        caps: [osd] allow *
.......
client.caasp4
        key: AQD1VJddM6QIJBAAlDbIWRT/eiGhG+aD8SB+5A==
        caps: [mon] allow r
        caps: [osd] allow rwx pool=caasp4

Encode the client.caasp4 key in base64

# echo AQD1VJddM6QIJBAAlDbIWRT/eiGhG+aD8SB+5A== | base64
QVFEMVZKZGRNNlFJSkJBQWxEYklXUlQvZWlHaEcrYUQ4U0IrNUE9PQo=
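One detail of the encoding step is easy to miss: a plain echo appends a newline, and that newline is encoded together with the key. The value shown above ends in `PQo=`, which decodes to `=` followed by a newline. The Pod in this walkthrough still mounted its volume with that value, so this is not a required fix. The sketch below only compares the two encodings, using the client.caasp4 key from this article; the variable names are illustrative.

```shell
#!/bin/sh
# Key copied from the `ceph auth list` output in this article.
key='AQD1VJddM6QIJBAAlDbIWRT/eiGhG+aD8SB+5A=='

# echo appends "\n", so the newline becomes part of the encoded value.
with_newline=$(echo "$key" | base64)

# printf '%s' emits the key bytes only, with no trailing newline.
without_newline=$(printf '%s' "$key" | base64)

echo "echo:   $with_newline"
echo "printf: $without_newline"
```

If a consumer of the Secret turns out to be strict about the trailing newline, the printf form is the one to try.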

6. On the master node, create a Secret resource that holds the base64-encoded key

# vi ceph-secret-test.yaml
apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret-test
data:
  key: QVFEMVZKZGRNNlFJSkJBQWxEYklXUlQvZWlHaEcrYUQ4U0IrNUE9PQo=

# kubectl create -f ceph-secret-test.yaml

secret/ceph-secret-test created

# kubectl get secrets ceph-secret-test
NAME               TYPE     DATA   AGE
ceph-secret-test   Opaque   1      14s
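To confirm that the Secret really carries the intended key, the stored value can be read back and decoded. The following is a minimal sketch; the helper name `secret_key` is hypothetical, and it assumes `kubectl` is configured for the cluster and that a `base64` with the `-d` option is available.

```shell
#!/bin/sh
# Print the decoded `key` field of a Secret.
# $1 = Secret name (ceph-secret-test in this article).
# secret_key is an illustrative helper, not part of kubectl.
secret_key() {
  kubectl get secret "$1" -o jsonpath='{.data.key}' | base64 -d
}
```

Running `secret_key ceph-secret-test` should print the client.caasp4 key from step 5.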

7. On the master node, create the PersistentVolume

# vim ceph-pv-test.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ceph-pv-test
spec:
  capacity:
    storage: 2Gi
  accessModes:
    - ReadWriteOnce
  rbd:
    monitors:
      - 192.168.2.40:6789
      - 192.168.2.41:6789
      - 192.168.2.42:6789
    pool: caasp4
    image: ceph-image-test
    user: caasp4
    secretRef:
      name: ceph-secret-test
    fsType: ext4
    readOnly: false
  persistentVolumeReclaimPolicy: Retain

# kubectl create -f ceph-pv-test.yaml

# kubectl get pv
NAME           CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
ceph-pv-test   2Gi        RWO            Retain           Available                                   29s

8. Create the PersistentVolumeClaim (PVC)

# vim ceph-claim-test.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: ceph-claim-test
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi

# kubectl create -f ceph-claim-test.yaml

# kubectl get pvc

NAME              STATUS   VOLUME         CAPACITY   ACCESS MODES   STORAGECLASS   AGE
ceph-claim-test   Bound    ceph-pv-test   2Gi        RWO                           14s
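The claim binds to ceph-pv-test because the requested size and access mode match the PV. Binding is usually quick but not instantaneous, so a script that automates these steps may want to poll for the Bound phase before creating the Pod. A minimal sketch, assuming `kubectl` is configured for the cluster; the function name `wait_pvc_bound` and its default retry values are hypothetical.

```shell
#!/bin/sh
# Poll a PVC until its phase is Bound, or give up after N attempts.
# $1 = PVC name, $2 = max attempts (default 30), $3 = seconds between attempts (default 2).
# wait_pvc_bound is an illustrative helper, not a kubectl feature.
wait_pvc_bound() {
  name=$1; tries=${2:-30}; delay=${3:-2}
  i=0
  while [ "$i" -lt "$tries" ]; do
    phase=$(kubectl get pvc "$name" -o jsonpath='{.status.phase}')
    [ "$phase" = "Bound" ] && return 0
    i=$(( i + 1 ))
    sleep "$delay"
  done
  echo "PVC $name not Bound (last phase: ${phase:-unknown})" >&2
  return 1
}
```

For example, `wait_pvc_bound ceph-claim-test` returns 0 once the claim is Bound and 1 if it is still in another phase after the last attempt.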

9. Create the Pod

# vim ceph-pod-test.yaml
apiVersion: v1
kind: Pod
metadata:
  name: ceph-pod-test
spec:
  containers:
    - name: ceph-busybox
      image: busybox
      command: ["sleep", "60000"]
      volumeMounts:
        - name: ceph-vol-test
          mountPath: /usr/share/busybox
          readOnly: false
  volumes:
    - name: ceph-vol-test
      persistentVolumeClaim:
        claimName: ceph-claim-test

# kubectl create -f ceph-pod-test.yaml
# kubectl get pods
NAME            READY   STATUS              RESTARTS   AGE
ceph-pod-test   0/1     ContainerCreating   0          6s

# kubectl get pods -o wide
NAME            READY   STATUS              RESTARTS   AGE   IP       NODE
ceph-pod-test   0/1     ContainerCreating   0          32s   <none>   worker02
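The output above catches the Pod while it is still in ContainerCreating, which is when the kubelet maps and mounts the RBD image. Instead of re-running `kubectl get pods`, one could block until the Pod is Ready and then look at the mount from inside the container. A small sketch under the assumption that `kubectl` is configured; the helper name `pod_mount_info` and the 120s default timeout are hypothetical.

```shell
#!/bin/sh
# Wait for a Pod to become Ready, then show the filesystem behind a mount path.
# $1 = Pod name, $2 = mount path inside the container, $3 = timeout (default 120s).
# pod_mount_info is an illustrative helper, not part of kubectl.
pod_mount_info() {
  kubectl wait --for=condition=Ready "pod/$1" --timeout="${3:-120s}" >/dev/null || return 1
  kubectl exec "$1" -- df -h "$2"
}
```

In this article's setup the call would be `pod_mount_info ceph-pod-test /usr/share/busybox`.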

10. On worker02, show the RBD mapping

# rbd showmapped
id pool   namespace image           snap device
0  caasp4           ceph-image-test -    /dev/rbd0

# df -Th | grep ceph
/dev/rbd0 ext4 2.0G 6.0M 1.9G 1% /var/lib/kubelet/plugins/kubernetes.io/rbd/mounts/caasp4-image-ceph-image-test
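With the image mapped and mounted, the point of the whole setup can be checked directly: data written by the Pod should survive the Pod being deleted and recreated, whichever node it lands on. The sketch below is one way to test that, assuming `kubectl` is configured and the manifest from step 9 is available locally. The function name `check_persistence`, the file name persist-check.txt and the 180s timeout are hypothetical.

```shell
#!/bin/sh
# Write a token into the volume, recreate the Pod, and read the token back.
# $1 = Pod name, $2 = path to the Pod manifest.
# check_persistence is an illustrative helper, not part of kubectl.
check_persistence() {
  pod=$1; manifest=$2
  file=/usr/share/busybox/persist-check.txt   # hypothetical file on the RBD-backed mount
  token="written-$(date +%s)"

  # Write the token through the running Pod.
  kubectl exec "$pod" -- sh -c "echo $token > $file" || return 1

  # Remove the Pod and create it again from the same manifest.
  kubectl delete pod "$pod" || return 1
  kubectl create -f "$manifest" || return 1
  kubectl wait --for=condition=Ready "pod/$pod" --timeout=180s || return 1

  # The token must still be there after the Pod was recreated.
  [ "$(kubectl exec "$pod" -- cat "$file")" = "$token" ]
}
```

Calling `check_persistence ceph-pod-test ceph-pod-test.yaml` returns 0 when the file written before the deletion is readable from the new Pod.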