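Image Classification

Goal: given a fixed set of category labels, pick one label from that set for an input image and assign it to the image.

Image classification pipeline

- Input: a set of N images, each labeled with one of K category labels. This set is called the training set.

- Learning: use the training set to learn what each class looks like. This step is usually called training a classifier or learning a model.

- Evaluation: have the classifier predict labels for images it has never seen, then compare the predicted labels with the images' true labels to assess the classifier's quality.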

Nearest Neighbor classifier

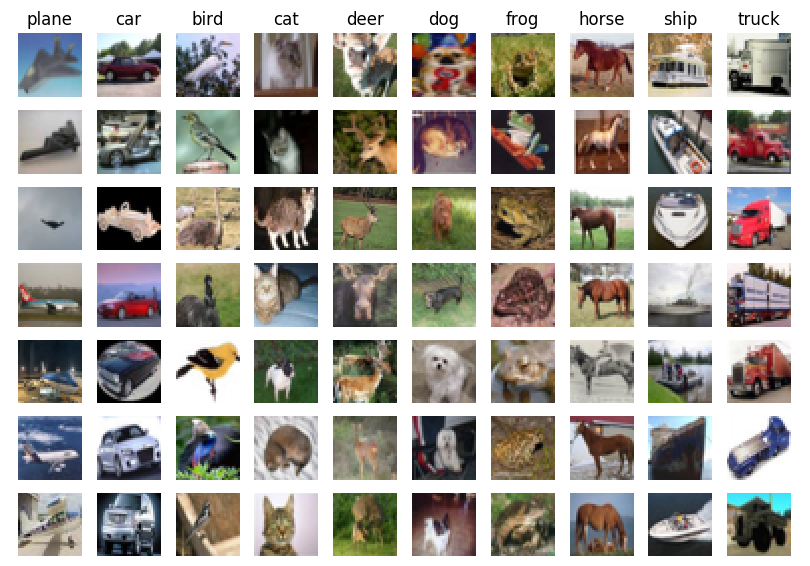

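Dataset: CIFAR-10. This is a very popular image classification dataset containing 60,000 small 32x32 images. Each image carries one of 10 category labels. The 60,000 images are split into a training set of 50,000 images and a test set of 10,000 images.

The idea behind Nearest Neighbor image classification: compare the test image against every image in the training set, then assign the test image the label of the training image judged most similar.

How do we compare two images?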

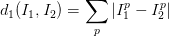

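In this example, that means comparing blocks of 32x32x3 pixel values. The simplest approach is to compare pixel by pixel and add up all the differences. In other words, flatten the two images into vectors $I_1$ and $I_2$ and compute the L1 distance between them:

$$d_1(I_1, I_2) = \sum_p \left| I_1^p - I_2^p \right|$$

The sum runs over all pixels $p$. The figure below illustrates the whole comparison: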

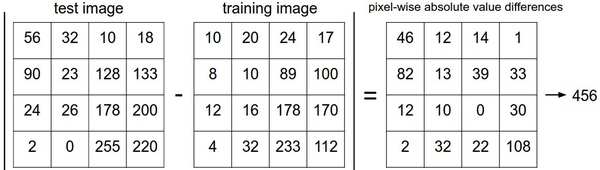
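As a minimal sketch of the pixel-wise L1 comparison, the snippet below uses two tiny 2x2 single-channel arrays. The pixel values and the names `img_a` and `img_b` are made up for illustration; real CIFAR-10 images would be flattened from 32x32x3 in the same way.

```python
import numpy as np

# Two hypothetical 2x2 single-channel "images" (values chosen for illustration).
# A signed integer dtype is used on purpose: subtracting uint8 arrays would wrap around.
img_a = np.array([[56, 32], [90, 23]], dtype=np.int64)
img_b = np.array([[10, 20], [24, 17]], dtype=np.int64)

# Flatten each image into a vector, then sum the absolute pixel differences.
I1 = img_a.ravel()
I2 = img_b.ravel()
l1_distance = int(np.sum(np.abs(I1 - I2)))

print(l1_distance)  # 46 + 12 + 66 + 6 = 130
```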

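There are many ways to compute the distance between vectors. Another common choice is the L2 distance, which geometrically is the Euclidean distance between the two vectors. The L2 distance is:

$$d_2(I_1, I_2) = \sqrt{\sum_p \left( I_1^p - I_2^p \right)^2}$$

L1 vs. L2: comparing these two metrics is interesting. L2 is less tolerant than L1 of large differences between two vectors. That is, the L2 distance prefers many moderate differences to a single large one. L1 and L2 are the two most commonly used special cases of the p-norm.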
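To make the L1 vs. L2 comparison concrete, here is a small sketch with a hypothetical helper `lp_distance` (not part of the assignment code). It compares a vector that differs from a baseline by one large amount with a vector that differs by several moderate amounts of the same total size.

```python
import numpy as np

def lp_distance(a, b, p):
    """p-norm distance between two vectors (p=1 gives L1, p=2 gives L2)."""
    diff = np.abs(np.asarray(a, dtype=np.float64) - np.asarray(b, dtype=np.float64))
    return float(np.sum(diff ** p) ** (1.0 / p))

base = np.zeros(4)
one_big = np.array([4.0, 0.0, 0.0, 0.0])     # a single large difference
many_small = np.array([1.0, 1.0, 1.0, 1.0])  # several moderate differences

l1_big, l1_small = lp_distance(base, one_big, 1), lp_distance(base, many_small, 1)
l2_big, l2_small = lp_distance(base, one_big, 2), lp_distance(base, many_small, 2)

print(l1_big, l1_small)  # 4.0 4.0
print(l2_big, l2_small)  # 4.0 2.0
```

L1 treats the two cases as equally far from the baseline, while L2 rates the single large difference as twice as far, which is the sense in which L2 penalizes large differences more heavily.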

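k-Nearest Neighbor classifier (KNN)

The idea behind KNN image classification: instead of taking the label of only the single closest image, find the k most similar images and let their labels vote on the test image; the label with the most votes becomes the prediction.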
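The voting step can be sketched on a toy 2-D dataset. The function name `knn_predict`, the points, and the labels below are assumptions made for illustration, and the L1 distance is used only to keep the example short.

```python
import numpy as np

def knn_predict(train_X, train_y, query, k):
    """Predict a label for `query` by majority vote among its k nearest training points."""
    dists = np.sum(np.abs(train_X - query), axis=1)   # L1 distance to every training point
    nearest = np.argsort(dists, kind="stable")[:k]    # indices of the k closest points
    votes = np.bincount(train_y[nearest])             # votes[c] = number of neighbors with label c
    return int(np.argmax(votes))                      # ties resolve to the smaller label

train_X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [5.0, 5.0], [6.0, 5.0]])
train_y = np.array([0, 1, 1, 2, 2])
query = np.array([0.2, 0.1])

pred_k1 = knn_predict(train_X, train_y, query, k=1)
pred_k3 = knn_predict(train_X, train_y, query, k=3)
print(pred_k1, pred_k3)  # 0 1
```

With k=1 the single closest point (label 0) decides; with k=3 the two label-1 neighbors outvote it, which shows how k changes the prediction.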

How do we choose k?

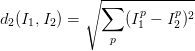

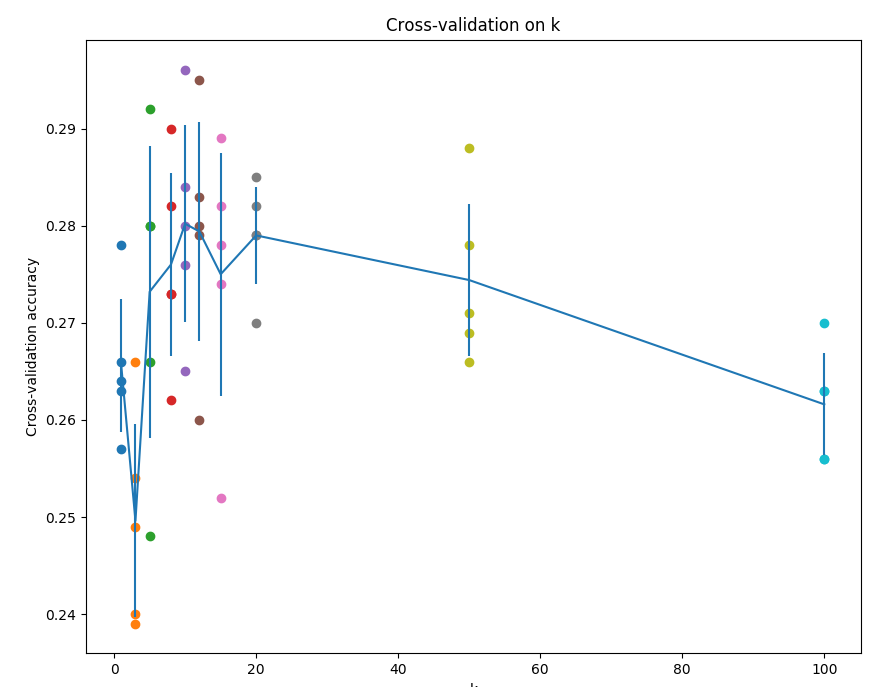

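Cross-validation: suppose the training set has 1,000 images. We split it evenly into 5 folds, using 4 for training and 1 for validation. We then rotate which fold is held out for validation, training on the other 4 each time, and take the average of the 5 validation results as the validation result for the algorithm.

This is an example of tuning k with 5-fold cross-validation. For each value of k we get 5 accuracy figures, take their mean, and connect the means across values of k with a line. In this example, the algorithm performs best at k=10 (the peak of the accuracy curve in the figure). Splitting the training set into more folds generally gives a smoother (less noisy) curve.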
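The fold rotation can be sketched with index arrays alone. The variable names here (`folds`, `splits`) are illustrative, and the 1,000-image figure is the one used in the description of cross-validation.

```python
import numpy as np

num_images = 1000
num_folds = 5

# Split the image indices into 5 equal folds of 200 indices each.
indices = np.arange(num_images)
folds = np.array_split(indices, num_folds)

# Rotate the held-out fold: fold i validates, the other 4 folds train.
splits = []
for i in range(num_folds):
    val_idx = folds[i]
    train_idx = np.concatenate(folds[:i] + folds[i + 1:])
    splits.append((train_idx, val_idx))

for i, (train_idx, val_idx) in enumerate(splits):
    print("fold %d: %d training images, %d validation images" % (i, len(train_idx), len(val_idx)))
```

Each image lands in the validation fold exactly once, so the 5 validation accuracies can be averaged into a single score per value of k.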

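Pros and cons of the k-Nearest Neighbor classifier

Pros:

- The idea is clear, easy to understand, and simple to implement;

- Training takes essentially no time, because it only stores the training data.

Cons: testing is computationally expensive, because each test image must be compared against every stored training image.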

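Applying k-NN in practice

If you want to use a k-NN classifier in practice (preferably not on images, though it is acceptable as an exercise), proceed as follows:

- Preprocess your data: normalize the features so that they have zero mean and unit variance. Later sections cover this in more detail. It is skipped in this section because the pixels in an image are homogeneous and do not show widely different distributions, so standardization is not needed.

- If the data is high-dimensional, consider a dimensionality reduction technique such as PCA (wiki ref, CS229 ref, blog ref) or random projections.

- Split the data randomly into a training set and a validation set. As a rule of thumb, 70%-90% of the data goes to the training set. The exact ratio depends on how many hyperparameters the algorithm has and how much influence they are expected to have. If there are many hyperparameters to estimate, use a larger validation set to estimate them effectively. If you are worried that the validation set is too small, try cross-validation. If you can afford the computation, cross-validation is always the safer choice (more folds give a better estimate but cost more compute).

- Tune on the validation set: try a wide range of k values and both the L1 and L2 distances.

- If the classifier runs too slowly, try an Approximate Nearest Neighbor library (such as FLANN) to speed it up, at the cost of some accuracy.

- Record the best hyperparameters. Once they are recorded, should the algorithm be retrained on the full training set using them? If the validation set is folded back into the training set (which makes the training set larger), the best hyperparameters may shift. In practice, do not do this. Never use the validation data in the final classifier, since doing so undermines the estimate of the best hyperparameters. Instead, evaluate the model configured with the best hyperparameters directly on the test set, and report the resulting test accuracy as the performance of your kNN classifier on this data.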
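The preprocessing, splitting, and tuning steps above might look roughly like the following sketch. Everything in it is an assumption made for illustration: the data is synthetic, the 80/20 split is one point in the 70%-90% range, and `knn_accuracy` is a hypothetical helper rather than part of the assignment code.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in data: 200 samples, 3 features on very different scales,
# two classes separated along the first feature.
X = rng.normal(size=(200, 3)) * np.array([1.0, 100.0, 0.01])
y = (X[:, 0] > 0).astype(np.int64)

# Random 80/20 split into training and validation sets.
perm = rng.permutation(len(X))
train_idx, val_idx = perm[:160], perm[160:]

# Standardize to zero mean and unit variance, using training-split statistics only.
mean = X[train_idx].mean(axis=0)
std = X[train_idx].std(axis=0)
X_train = (X[train_idx] - mean) / std
X_val = (X[val_idx] - mean) / std
y_train, y_val = y[train_idx], y[val_idx]

def knn_accuracy(k, p):
    """Validation accuracy of k-NN using the L1 (p=1) or L2 (p=2) distance."""
    diff = np.abs(X_val[:, None, :] - X_train[None, :, :])
    # Sum of |diff|^p is monotone in the true distance, so the neighbor ranking is unchanged.
    dists = np.sum(diff ** p, axis=2)
    nearest = np.argsort(dists, axis=1)[:, :k]
    preds = np.array([np.argmax(np.bincount(y_train[row], minlength=2)) for row in nearest])
    return float(np.mean(preds == y_val))

# Try several k values with both distances and keep the best validation score.
results = {(k, p): knn_accuracy(k, p) for k in (1, 3, 5, 7) for p in (1, 2)}
best_k, best_p = max(results, key=results.get)
print("best k:", best_k, "best distance: L%d" % best_p, "validation accuracy:", results[(best_k, best_p)])
```

Without the standardization step, the second feature (scaled by 100) would dominate every distance, which is why normalization matters for non-image data even though it is skipped for pixels.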

Course assignment

KNN implementation (k_nearest_neighbor.py):

    import numpy as np

    # References:
    # http://blog.csdn.net/geekmanong/article/details/51524402
    # http://www.cnblogs.com/daihengchen/p/5754383.html
    # http://blog.csdn.net/han784851198/article/details/53331104


    class KNearestNeighbor(object):
        """ a kNN classifier with L2 distance """

        def __init__(self):
            pass

        def train(self, X, y):
            """
            Train the classifier. For k-nearest neighbors this is just
            memorizing the training data.

            Inputs:
            - X: A numpy array of shape (num_train, D) containing the training data
              consisting of num_train samples each of dimension D.
            - y: A numpy array of shape (N,) containing the training labels, where
              y[i] is the label for X[i].
            """
            self.X_train = X
            self.y_train = y

        def predict(self, X, k=1, num_loops=0):
            """
            Predict labels for test data using this classifier.

            Inputs:
            - X: A numpy array of shape (num_test, D) containing test data consisting
              of num_test samples each of dimension D.
            - k: The number of nearest neighbors that vote for the predicted labels.
            - num_loops: Determines which implementation to use to compute distances
              between training points and testing points.

            Returns:
            - y: A numpy array of shape (num_test,) containing predicted labels for the
              test data, where y[i] is the predicted label for the test point X[i].
            """
            if num_loops == 0:
                dists = self.compute_distances_no_loops(X)
            elif num_loops == 1:
                dists = self.compute_distances_one_loop(X)
            elif num_loops == 2:
                dists = self.compute_distances_two_loops(X)
            else:
                raise ValueError('Invalid value %d for num_loops' % num_loops)

            return self.predict_labels(dists, k=k)

        def compute_distances_two_loops(self, X):
            """
            Compute the distance between each test point in X and each training point
            in self.X_train using a nested loop over both the training data and the
            test data (L2 distance with two loops).

            Inputs:
            - X: A numpy array of shape (num_test, D) containing test data.

            Returns:
            - dists: A numpy array of shape (num_test, num_train) where dists[i, j]
              is the Euclidean distance between the ith test point and the jth training
              point.
            """
            num_test = X.shape[0]
            num_train = self.X_train.shape[0]
            dists = np.zeros((num_test, num_train))
            for i in range(num_test):
                for j in range(num_train):
                    # L2 distance between the ith test point and the jth training
                    # point, computed without a loop over the dimension.
                    test_row = X[i, :]
                    train_row = self.X_train[j, :]
                    dists[i, j] = np.sqrt(np.sum((test_row - train_row) ** 2))
            return dists

        def compute_distances_one_loop(self, X):
            """
            Compute the distance between each test point in X and each training point
            in self.X_train using a single loop over the test data
            (L2 distance with one loop).

            Input / Output: Same as compute_distances_two_loops
            """
            num_test = X.shape[0]
            num_train = self.X_train.shape[0]
            dists = np.zeros((num_test, num_train))
            for i in range(num_test):
                # Broadcasting subtracts test_row from every training row; the squared
                # differences are then summed along each row (axis=1).
                test_row = X[i, :]
                dists[i, :] = np.sqrt(np.sum((test_row - self.X_train) ** 2, axis=1))
            return dists

        def compute_distances_no_loops(self, X):
            """
            Compute the distance between each test point in X and each training point
            in self.X_train using no explicit loops (fully vectorized L2 distance).

            Input / Output: Same as compute_distances_two_loops
            """
            # Only basic array operations are used (no scipy): the L2 distance is
            # expressed as one matrix multiplication plus two broadcast sums.
            X_sq = np.square(X).sum(axis=1)                   # shape (num_test,)
            X_train_sq = np.square(self.X_train).sum(axis=1)  # shape (num_train,)
            sq_dists = X_sq[:, np.newaxis] - 2 * np.dot(X, self.X_train.T) + X_train_sq
            # Clip tiny negative values caused by floating-point round-off before the sqrt.
            dists = np.sqrt(np.maximum(sq_dists, 0.0))
            return dists

        def predict_labels(self, dists, k=1):
            """
            Given a matrix of distances between test points and training points,
            predict a label for each test point.

            Inputs:
            - dists: A numpy array of shape (num_test, num_train) where dists[i, j]
              gives the distance between the ith test point and the jth training point.

            Returns:
            - y: A numpy array of shape (num_test,) containing predicted labels for the
              test data, where y[i] is the predicted label for the test point X[i].
            """
            num_test = dists.shape[0]
            y_pred = np.zeros(num_test)
            for i in range(num_test):
                # Labels of the k nearest neighbors of the ith test point.
                # np.argsort returns the indices that sort the distances in ascending order.
                closest_y = self.y_train[np.argsort(dists[i, :])[:k]]
                # np.bincount counts how often each label occurs; argmax picks the most
                # common one and breaks ties by choosing the smaller label.
                y_pred[i] = np.argmax(np.bincount(closest_y))

            return y_pred
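The hint in `compute_distances_no_loops` ("matrix multiplication and two broadcast sums") rests on expanding the squared L2 distance. As a short derivation, writing $x_i$ for the $i$-th test row and $t_j$ for the $j$-th training row (symbols introduced here for illustration):

$$\lVert x_i - t_j \rVert_2^2 = \sum_d (x_{id} - t_{jd})^2 = \lVert x_i \rVert_2^2 - 2\, x_i^\top t_j + \lVert t_j \rVert_2^2$$

The middle term for all pairs at once is the matrix product of the test matrix with the transposed training matrix, which has shape (num_test, num_train). The first term is the per-row sum of squares of the test data (`X_sq`), broadcast down the columns, and the last term is the per-row sum of squares of the training data (`X_train_sq`), broadcast across the rows. Taking the element-wise square root of the result gives the distance matrix.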

Test and cross-validation code:

    # coding: utf-8
    '''
    Created on 2017-03-21

    @author: 206
    '''
    import time

    import numpy as np
    import matplotlib.pyplot as plt

    from assignment1.data_utils import load_CIFAR10
    from assignment1.classifiers.k_nearest_neighbor import KNearestNeighbor

    # Default plot settings.
    plt.rcParams['figure.figsize'] = (10.0, 8.0)  # set default size of plots
    plt.rcParams['image.interpolation'] = 'nearest'
    plt.rcParams['image.cmap'] = 'gray'

    X_train, y_train, X_test, y_test = load_CIFAR10('../datasets')

    # As a sanity check, we print out the size of the training and test data.
    print('Training data shape: ', X_train.shape)
    print('Training labels shape: ', y_train.shape)
    print('Test data shape: ', X_test.shape)
    print('Test labels shape: ', y_test.shape)

    # Show part of the dataset: a few sample images for each class.
    classes = ['plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']
    num_classes = len(classes)
    samples_per_class = 7
    for y, cls in enumerate(classes):
        idxs = np.flatnonzero(y_train == y)
        idxs = np.random.choice(idxs, samples_per_class, replace=False)
        for i, idx in enumerate(idxs):
            plt_idx = i * num_classes + y + 1
            plt.subplot(samples_per_class, num_classes, plt_idx)
            plt.imshow(X_train[idx].astype('uint8'))
            plt.axis('off')
            if i == 0:
                plt.title(cls)
    plt.show()

    # Subsample the data so the assignment runs faster.
    num_training = 5000
    X_train = X_train[:num_training]
    y_train = y_train[:num_training]

    num_test = 500
    X_test = X_test[:num_test]
    y_test = y_test[:num_test]

    # Reshape the training and test images into rows.
    X_train = np.reshape(X_train, (X_train.shape[0], -1))
    X_test = np.reshape(X_test, (X_test.shape[0], -1))

    print(X_train.shape)
    print(X_test.shape)

    classifier = KNearestNeighbor()
    classifier.train(X_train, y_train)

    dists = classifier.compute_distances_two_loops(X_test)
    print(dists.shape)

    plt.imshow(dists, interpolation='none')
    plt.show()

    # Predict with k=1 and report the fraction of correctly predicted examples.
    y_test_pred = classifier.predict_labels(dists, k=1)
    num_correct = np.sum(y_test_pred == y_test)
    accuracy = float(num_correct) / num_test
    print('Got %d / %d correct => accuracy: %f' % (num_correct, num_test, accuracy))

    # Predict with k=5.
    y_test_pred = classifier.predict_labels(dists, k=5)
    num_correct = np.sum(y_test_pred == y_test)
    accuracy = float(num_correct) / num_test
    print('Got %d / %d correct => accuracy: %f' % (num_correct, num_test, accuracy))

    # Check the three distance implementations against each other, then time them.
    dists_one = classifier.compute_distances_one_loop(X_test)
    difference = np.linalg.norm(dists - dists_one, ord='fro')
    print('Difference was: %f' % (difference, ))
    if difference < 0.001:
        print('Good! The distance matrices are the same')
    else:
        print('Uh-oh! The distance matrices are different')

    dists_two = classifier.compute_distances_no_loops(X_test)
    difference = np.linalg.norm(dists - dists_two, ord='fro')
    print('Difference was: %f' % (difference, ))
    if difference < 0.001:
        print('Good! The distance matrices are the same')
    else:
        print('Uh-oh! The distance matrices are different')


    def time_function(f, *args):
        """
        Call a function f with args and return the time (in seconds) that it took to execute.
        """
        tic = time.time()
        f(*args)
        toc = time.time()
        return toc - tic


    two_loop_time = time_function(classifier.compute_distances_two_loops, X_test)
    print('Two loop version took %f seconds' % two_loop_time)

    one_loop_time = time_function(classifier.compute_distances_one_loop, X_test)
    print('One loop version took %f seconds' % one_loop_time)

    no_loop_time = time_function(classifier.compute_distances_no_loops, X_test)
    print('No loop version took %f seconds' % no_loop_time)

    # Cross-validation
    num_folds = 5
    k_choices = [1, 3, 5, 8, 10, 12, 15, 20, 50, 100]

    # Split the training data into folds. X_train_folds and y_train_folds are lists
    # of length num_folds, where y_train_folds[i] is the label vector for the
    # points in X_train_folds[i].
    X_train_folds = np.array_split(X_train, num_folds)
    y_train_folds = np.array_split(y_train, num_folds)

    # A dictionary holding the accuracies for different values of k that we find
    # when running cross-validation. After running cross-validation,
    # k_to_accuracies[k] is a list of length num_folds giving the different
    # accuracy values that we found when using that value of k.
    k_to_accuracies = {}
    for k in k_choices:
        k_to_accuracies[k] = []

    # For each value of k, run kNN num_folds times: each time, all but one of the
    # folds serve as training data and the remaining fold is the validation set.
    for k in k_choices:
        for i in range(num_folds):
            X_train_cv = np.vstack(X_train_folds[:i] + X_train_folds[i + 1:])
            X_test_cv = X_train_folds[i]

            y_train_cv = np.hstack(y_train_folds[:i] + y_train_folds[i + 1:])  # size: 4000
            y_test_cv = y_train_folds[i]

            classifier.train(X_train_cv, y_train_cv)
            dists_cv = classifier.compute_distances_no_loops(X_test_cv)

            y_test_pred = classifier.predict_labels(dists_cv, k)
            num_correct = np.sum(y_test_pred == y_test_cv)
            accuracy = float(num_correct) / y_test_cv.shape[0]

            k_to_accuracies[k].append(accuracy)

    # Print out the computed accuracies
    for k in sorted(k_to_accuracies):
        for accuracy in k_to_accuracies[k]:
            print('k = %d, accuracy = %f' % (k, accuracy))

    # plot the raw observations
    for k in k_choices:
        accuracies = k_to_accuracies[k]
        plt.scatter([k] * len(accuracies), accuracies)

    # plot the trend line with error bars that correspond to standard deviation
    accuracies_mean = np.array([np.mean(v) for k, v in sorted(k_to_accuracies.items())])
    accuracies_std = np.array([np.std(v) for k, v in sorted(k_to_accuracies.items())])
    plt.errorbar(k_choices, accuracies_mean, yerr=accuracies_std)
    plt.title('Cross-validation on k')
    plt.xlabel('k')
    plt.ylabel('Cross-validation accuracy')
    plt.show()

Output:

In the recorded run, the first "Difference" check fails. The one-loop version in that run evaluated `np.sum(test_row - self.X_train)**2`, which sums over all elements before squaring; the correct expression sums the squared differences along each row (`axis=1`).

    Training data shape:  (50000, 32, 32, 3)
    Training labels shape:  (50000,)
    Test data shape:  (10000, 32, 32, 3)
    Test labels shape:  (10000,)
    (5000, 3072)
    (500, 3072)
    (500, 5000)
    Got 137 / 500 correct => accuracy: 0.274000
    Got 139 / 500 correct => accuracy: 0.278000
    Difference was: 794038655446.820190
    Uh-oh! The distance matrices are different
    Difference was: 0.000000
    Good! The distance matrices are the same
    Two loop version took 52.208034 seconds
    One loop version took 42.104724 seconds
    No loop version took 0.371247 seconds
    k = 1, accuracy = 0.263000
    k = 1, accuracy = 0.257000
    k = 1, accuracy = 0.264000
    k = 1, accuracy = 0.278000
    k = 1, accuracy = 0.266000
    k = 3, accuracy = 0.239000
    k = 3, accuracy = 0.249000
    k = 3, accuracy = 0.240000
    k = 3, accuracy = 0.266000
    k = 3, accuracy = 0.254000
    k = 5, accuracy = 0.248000
    k = 5, accuracy = 0.266000
    k = 5, accuracy = 0.280000
    k = 5, accuracy = 0.292000
    k = 5, accuracy = 0.280000
    k = 8, accuracy = 0.262000
    k = 8, accuracy = 0.282000
    k = 8, accuracy = 0.273000
    k = 8, accuracy = 0.290000
    k = 8, accuracy = 0.273000
    k = 10, accuracy = 0.265000
    k = 10, accuracy = 0.296000
    k = 10, accuracy = 0.276000
    k = 10, accuracy = 0.284000
    k = 10, accuracy = 0.280000
    k = 12, accuracy = 0.260000
    k = 12, accuracy = 0.295000
    k = 12, accuracy = 0.279000
    k = 12, accuracy = 0.283000
    k = 12, accuracy = 0.280000
    k = 15, accuracy = 0.252000
    k = 15, accuracy = 0.289000
    k = 15, accuracy = 0.278000
    k = 15, accuracy = 0.282000
    k = 15, accuracy = 0.274000
    k = 20, accuracy = 0.270000
    k = 20, accuracy = 0.279000
    k = 20, accuracy = 0.279000
    k = 20, accuracy = 0.282000
    k = 20, accuracy = 0.285000
    k = 50, accuracy = 0.271000
    k = 50, accuracy = 0.288000
    k = 50, accuracy = 0.278000
    k = 50, accuracy = 0.269000
    k = 50, accuracy = 0.266000
    k = 100, accuracy = 0.256000
    k = 100, accuracy = 0.270000
    k = 100, accuracy = 0.263000
    k = 100, accuracy = 0.256000
    k = 100, accuracy = 0.263000
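To read the best k off these numbers without the plot, the per-fold accuracies can be averaged for each k. The sketch below copies the accuracies from the run output; the names `mean_accuracy` and `best_k` are introduced here for illustration.

```python
import numpy as np

# Per-fold accuracies copied from the run output (5 folds per value of k).
k_to_accuracies = {
    1: [0.263, 0.257, 0.264, 0.278, 0.266],
    3: [0.239, 0.249, 0.240, 0.266, 0.254],
    5: [0.248, 0.266, 0.280, 0.292, 0.280],
    8: [0.262, 0.282, 0.273, 0.290, 0.273],
    10: [0.265, 0.296, 0.276, 0.284, 0.280],
    12: [0.260, 0.295, 0.279, 0.283, 0.280],
    15: [0.252, 0.289, 0.278, 0.282, 0.274],
    20: [0.270, 0.279, 0.279, 0.282, 0.285],
    50: [0.271, 0.288, 0.278, 0.269, 0.266],
    100: [0.256, 0.270, 0.263, 0.256, 0.263],
}

# Average over the folds, then pick the k with the highest mean accuracy.
mean_accuracy = {k: float(np.mean(v)) for k, v in k_to_accuracies.items()}
best_k = max(mean_accuracy, key=mean_accuracy.get)

for k in sorted(mean_accuracy):
    print('k = %d, mean accuracy = %.4f' % (k, mean_accuracy[k]))
print('best k:', best_k)  # best k: 10
```

The margin is small: k=10 averages 0.2802 against 0.2794 for k=12 and 0.2790 for k=20, so the choice is sensitive to fold-to-fold noise.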

Cross-validation results:

The full code is available here.