1. 首先安装好docker,拉取intel caffe image:

$ docker pull bvlc/caffe:intel 试着运行: $ docker run -it bvlc/caffe:intel /bin/bash

2. 拉取 intel caffe 源码:

git clone https://github.com/intel/caffe git checkout 1.0

或者下载源码包:

wget https://github.com/intel/caffe/archive/1.1.0.zip unzip 1.0.zip

3. 编译Intel caffe

sudo apt-get -y install python-devel boost boost-devel cmake numpy numpy-devel gflags gflags-devel glog glog-devel protobuf protobuf-devel hdf5 hdf5-devel lmdb lmdb-devel leveldb leveldb-devel snappy-devel opencv opencv-devel

cp Makefile.config.example Makefile.config # Adjust Makefile.config (for example, if using Anaconda Python, or if cuDNN is desired)

vim Makefile.config

# Intel(r) Machine Learning Scaling Library (uncomment to build with MLSL) USE_MLSL := 1

多线程编译:

$ make -j <number_of_physical_cores> -k

编译过程中会下载MKL 和MKL-DNN:

Download mklml_lnx_2018.0.1.20171227.tgz git clone --no-checkout https://github.com/01org/mkl-dnn.git /home/ubuntu/yuntong/caffe-master/external/mkldnn/tmp

测试编译结果:

make test make runtest

4. 下载和创建mnist数据集:

cd $CAFFE_ROOT ./data/mnist/get_mnist.sh ./examples/mnist/create_mnist.sh Creating lmdb...

5. 写入intel caffe docker中的caffe运行路径:

vim /home/ubuntu/yuntong/caffe-1.0/examples/mnist/train_lenet.sh

#!/usr/bin/env sh set -e #./build/tools/caffe train --solver=examples/mnist/lenet_solver.prototxt $@ /opt/caffe/build/tools/caffe train --solver=examples/mnist/lenet_solver.prototxt $@

6. 设置CPU模式 vim examples/mnist/lenet_solver.prototxt

# solver mode: CPU or GPU #solver_mode: GPU solver_mode: CPU

7. 运行docker,并在docker中运行mnist训练:

sudo docker run -v "/home/ubuntu/yuntong/:/opt/caffe/share" -it bvlc/caffe:intel /bin/bash cd /opt/caffe/share/caffe-1.0 ./examples/mnist/train_lenet.sh

运行结果如下:

ubuntu@k8s-1:~$ sudo docker run -v "/home/ubuntu/yuntong/:/opt/caffe/share" -it bvlc/caffe:intel /bin/bash root@19eaccc415e1:/workspace# cd /opt/caffe/share/caffe-1.0 root@19eaccc415e1:/opt/caffe/share/caffe-1.0# ./examples/mnist/train_lenet.sh I0408 01:33:10.509523 12 caffe.cpp:285] Use CPU. I0408 01:33:10.510561 12 solver.cpp:107] Initializing solver from parameters: test_iter: 100 test_interval: 500 base_lr: 0.01 display: 100 max_iter: 10000 lr_policy: "inv" gamma: 0.0001 power: 0.75 momentum: 0.9 weight_decay: 0.0005 snapshot: 5000 snapshot_prefix: "examples/mnist/lenet" solver_mode: CPU net: "examples/mnist/lenet_train_test.prototxt" train_state { level: 0 stage: "" } I0408 01:33:10.511216 12 solver.cpp:153] Creating training net from net file: examples/mnist/lenet_train_test.prototxt I0408 01:33:10.523326 12 cpu_info.cpp:453] Processor speed [MHz]: 0 I0408 01:33:10.523360 12 cpu_info.cpp:456] Total number of sockets: 8 I0408 01:33:10.523373 12 cpu_info.cpp:459] Total number of CPU cores: 8 I0408 01:33:10.523385 12 cpu_info.cpp:462] Total number of processors: 8 I0408 01:33:10.523396 12 cpu_info.cpp:465] GPU is used: no I0408 01:33:10.523406 12 cpu_info.cpp:468] OpenMP environmental variables are specified: no I0408 01:33:10.523427 12 cpu_info.cpp:471] OpenMP thread bind allowed: yes I0408 01:33:10.523437 12 cpu_info.cpp:474] Number of OpenMP threads: 8 I0408 01:33:10.524194 12 net.cpp:1052] The NetState phase (0) differed from the phase (1) specified by a rule in layer mnist I0408 01:33:10.524220 12 net.cpp:1052] The NetState phase (0) differed from the phase (1) specified by a rule in layer accuracy I0408 01:33:10.524510 12 net.cpp:207] Initializing net from parameters: I0408 01:33:10.524531 12 net.cpp:208] name: "LeNet" state { phase: TRAIN level: 0 stage: "" } engine: "MKLDNN" compile_net_state { bn_scale_remove: false bn_scale_merge: false } layer { name: "mnist" type: "Data" top: "data" top: "label" include { phase: TRAIN } transform_param { scale: 0.00390625 } data_param { source: "examples/mnist/mnist_train_lmdb" batch_size: 64 backend: LMDB } } layer { name: "conv1" type: "Convolution" bottom: "data" top: "conv1" param { lr_mult: 1 } param { lr_mult: 2 } convolution_param { num_output: 20 kernel_size: 5 stride: 1 weight_filler { type: "xavier" } bias_filler { type: "constant" } } } layer { name: "pool1" type: "Pooling" bottom: "conv1" top: "pool1" pooling_param { pool: MAX kernel_size: 2 stride: 2 } } layer { name: "conv2" type: "Convolution" bottom: "pool1" top: "conv2" param { lr_mult: 1 } param { lr_mult: 2 } convolution_param { num_output: 50 kernel_size: 5 stride: 1 weight_filler { type: "xavier" } bias_filler { type: "constant" } } } layer { name: "pool2" type: "Pooling" bottom: "conv2" top: "pool2" pooling_param { pool: MAX kernel_size: 2 stride: 2 } } layer { name: "ip1" type: "InnerProduct" bottom: "pool2" top: "ip1" param { lr_mult: 1 } param { lr_mult: 2 } inner_product_param { num_output: 500 weight_filler { type: "xavier" } bias_filler { type: "constant" } } } layer { name: "relu1" type: "ReLU" bottom: "ip1" top: "ip1" } layer { name: "ip2" type: "InnerProduct" bottom: "ip1" top: "ip2" param { lr_mult: 1 } param { lr_mult: 2 } inner_product_param { num_output: 10 weight_filler { type: "xavier" } bias_filler { type: "constant" } } } layer { name: "loss" type: "SoftmaxWithLoss" bottom: "ip2" bottom: "label" top: "loss" } ……………………………………………. ……………………………………………. ……………………………………………. I0408 01:36:28.103435 12 solver.cpp:312] Iteration 7300, loss = 0.0219446 I0408 01:36:28.103497 12 solver.cpp:333] Train net output #0: loss = 0.0219446 (* 1 = 0.0219446 loss) I0408 01:36:28.103519 12 sgd_solver.cpp:215] Iteration 7300, lr = 0.00662927 I0408 01:36:30.492499 12 solver.cpp:312] Iteration 7400, loss = 0.00484636 I0408 01:36:30.492563 12 solver.cpp:333] Train net output #0: loss = 0.00484634 (* 1 = 0.00484634 loss) I0408 01:36:30.492584 12 sgd_solver.cpp:215] Iteration 7400, lr = 0.00660067 I0408 01:36:32.912159 12 solver.cpp:474] Iteration 7500, Testing net (#0) I0408 01:36:33.992708 12 solver.cpp:563] Test net output #0: accuracy = 0.9905 I0408 01:36:33.992983 12 solver.cpp:563] Test net output #1: loss = 0.0301301 (* 1 = 0.0301301 loss) I0408 01:36:34.019621 12 solver.cpp:312] Iteration 7500, loss = 0.00250706 I0408 01:36:34.019706 12 solver.cpp:333] Train net output #0: loss = 0.00250702 (* 1 = 0.00250702 loss) I0408 01:36:34.020164 12 sgd_solver.cpp:215] Iteration 7500, lr = 0.00657236 I0408 01:36:36.432328 12 solver.cpp:312] Iteration 7600, loss = 0.00537509 I0408 01:36:36.432528 12 solver.cpp:333] Train net output #0: loss = 0.00537505 (* 1 = 0.00537505 loss) I0408 01:36:36.432566 12 sgd_solver.cpp:215] Iteration 7600, lr = 0.00654433 I0408 01:36:39.159704 12 solver.cpp:312] Iteration 7700, loss = 0.034624 I0408 01:36:39.159781 12 solver.cpp:333] Train net output #0: loss = 0.0346239 (* 1 = 0.0346239 loss) I0408 01:36:39.159811 12 sgd_solver.cpp:215] Iteration 7700, lr = 0.00651658 I0408 01:36:41.873411 12 solver.cpp:312] Iteration 7800, loss = 0.00424178 I0408 01:36:41.873672 12 solver.cpp:333] Train net output #0: loss = 0.00424175 (* 1 = 0.00424175 loss) I0408 01:36:41.873694 12 sgd_solver.cpp:215] Iteration 7800, lr = 0.00648911 I0408 01:36:44.552800 12 solver.cpp:312] Iteration 7900, loss = 0.00208136 I0408 01:36:44.553073 12 solver.cpp:333] Train net output #0: loss = 0.00208134 (* 1 = 0.00208134 loss) I0408 01:36:44.553095 12 sgd_solver.cpp:215] Iteration 7900, lr = 0.0064619 I0408 01:36:47.132925 12 solver.cpp:474] Iteration 8000, Testing net (#0) I0408 01:36:48.254405 12 solver.cpp:563] Test net output #0: accuracy = 0.9905 I0408 01:36:48.254935 12 solver.cpp:563] Test net output #1: loss = 0.0278543 (* 1 = 0.0278543 loss) I0408 01:36:48.279563 12 solver.cpp:312] Iteration 8000, loss = 0.0065576 I0408 01:36:48.279626 12 solver.cpp:333] Train net output #0: loss = 0.00655758 (* 1 = 0.00655758 loss) I0408 01:36:48.279647 12 sgd_solver.cpp:215] Iteration 8000, lr = 0.00643496 I0408 01:36:50.693308 12 solver.cpp:312] Iteration 8100, loss = 0.0102435 I0408 01:36:50.694417 12 solver.cpp:333] Train net output #0: loss = 0.0102435 (* 1 = 0.0102435 loss) I0408 01:36:50.694447 12 sgd_solver.cpp:215] Iteration 8100, lr = 0.00640827 I0408 01:36:53.059345 12 solver.cpp:312] Iteration 8200, loss = 0.0111062 I0408 01:36:53.059619 12 solver.cpp:333] Train net output #0: loss = 0.0111061 (* 1 = 0.0111061 loss) I0408 01:36:53.059643 12 sgd_solver.cpp:215] Iteration 8200, lr = 0.00638185 I0408 01:36:55.439267 12 solver.cpp:312] Iteration 8300, loss = 0.0255548 I0408 01:36:55.439332 12 solver.cpp:333] Train net output #0: loss = 0.0255548 (* 1 = 0.0255548 loss) I0408 01:36:55.439357 12 sgd_solver.cpp:215] Iteration 8300, lr = 0.00635567 I0408 01:36:57.821687 12 solver.cpp:312] Iteration 8400, loss = 0.00810484 I0408 01:36:57.821768 12 solver.cpp:333] Train net output #0: loss = 0.00810483 (* 1 = 0.00810483 loss) I0408 01:36:57.821794 12 sgd_solver.cpp:215] Iteration 8400, lr = 0.00632975 I0408 01:37:00.229344 12 solver.cpp:474] Iteration 8500, Testing net (#0) I0408 01:37:01.341504 12 solver.cpp:563] Test net output #0: accuracy = 0.991 I0408 01:37:01.341583 12 solver.cpp:563] Test net output #1: loss = 0.028333 (* 1 = 0.028333 loss) I0408 01:37:01.368783 12 solver.cpp:312] Iteration 8500, loss = 0.00672253 I0408 01:37:01.368850 12 solver.cpp:333] Train net output #0: loss = 0.00672251 (* 1 = 0.00672251 loss) I0408 01:37:01.368876 12 sgd_solver.cpp:215] Iteration 8500, lr = 0.00630407 I0408 01:37:03.789499 12 solver.cpp:312] Iteration 8600, loss = 0.000701985 I0408 01:37:03.789630 12 solver.cpp:333] Train net output #0: loss = 0.000701961 (* 1 = 0.000701961 loss) I0408 01:37:03.789660 12 sgd_solver.cpp:215] Iteration 8600, lr = 0.00627864 I0408 01:37:06.311506 12 solver.cpp:312] Iteration 8700, loss = 0.00329251 I0408 01:37:06.311738 12 solver.cpp:333] Train net output #0: loss = 0.00329248 (* 1 = 0.00329248 loss) I0408 01:37:06.311763 12 sgd_solver.cpp:215] Iteration 8700, lr = 0.00625344 I0408 01:37:08.734477 12 solver.cpp:312] Iteration 8800, loss = 0.0011685 I0408 01:37:08.734781 12 solver.cpp:333] Train net output #0: loss = 0.00116848 (* 1 = 0.00116848 loss) I0408 01:37:08.734805 12 sgd_solver.cpp:215] Iteration 8800, lr = 0.00622847 I0408 01:37:11.223204 12 solver.cpp:312] Iteration 8900, loss = 0.000881624 I0408 01:37:11.223266 12 solver.cpp:333] Train net output #0: loss = 0.000881607 (* 1 = 0.000881607 loss) I0408 01:37:11.223289 12 sgd_solver.cpp:215] Iteration 8900, lr = 0.00620374 I0408 01:37:13.565495 12 solver.cpp:474] Iteration 9000, Testing net (#0) I0408 01:37:14.642087 12 solver.cpp:563] Test net output #0: accuracy = 0.99 I0408 01:37:14.642159 12 solver.cpp:563] Test net output #1: loss = 0.0268256 (* 1 = 0.0268256 loss) I0408 01:37:14.666667 12 solver.cpp:312] Iteration 9000, loss = 0.011516 I0408 01:37:14.666734 12 solver.cpp:333] Train net output #0: loss = 0.011516 (* 1 = 0.011516 loss) I0408 01:37:14.666755 12 sgd_solver.cpp:215] Iteration 9000, lr = 0.00617924 I0408 01:37:17.068984 12 solver.cpp:312] Iteration 9100, loss = 0.00914626 I0408 01:37:17.069262 12 solver.cpp:333] Train net output #0: loss = 0.00914625 (* 1 = 0.00914625 loss) I0408 01:37:17.069284 12 sgd_solver.cpp:215] Iteration 9100, lr = 0.00615496 I0408 01:37:19.455351 12 solver.cpp:312] Iteration 9200, loss = 0.00317596 I0408 01:37:19.455596 12 solver.cpp:333] Train net output #0: loss = 0.00317595 (* 1 = 0.00317595 loss) I0408 01:37:19.455623 12 sgd_solver.cpp:215] Iteration 9200, lr = 0.0061309 I0408 01:37:21.834389 12 solver.cpp:312] Iteration 9300, loss = 0.00890829 I0408 01:37:21.835710 12 solver.cpp:333] Train net output #0: loss = 0.00890827 (* 1 = 0.00890827 loss) I0408 01:37:21.835734 12 sgd_solver.cpp:215] Iteration 9300, lr = 0.00610706 I0408 01:37:24.199872 12 solver.cpp:312] Iteration 9400, loss = 0.0232409 I0408 01:37:24.199946 12 solver.cpp:333] Train net output #0: loss = 0.0232409 (* 1 = 0.0232409 loss) I0408 01:37:24.199970 12 sgd_solver.cpp:215] Iteration 9400, lr = 0.00608343 I0408 01:37:26.601363 12 solver.cpp:474] Iteration 9500, Testing net (#0) I0408 01:37:27.673274 12 solver.cpp:563] Test net output #0: accuracy = 0.989 I0408 01:37:27.673359 12 solver.cpp:563] Test net output #1: loss = 0.0323742 (* 1 = 0.0323742 loss) I0408 01:37:27.698536 12 solver.cpp:312] Iteration 9500, loss = 0.00388906 I0408 01:37:27.698603 12 solver.cpp:333] Train net output #0: loss = 0.00388905 (* 1 = 0.00388905 loss) I0408 01:37:27.698628 12 sgd_solver.cpp:215] Iteration 9500, lr = 0.00606002 I0408 01:37:30.146077 12 solver.cpp:312] Iteration 9600, loss = 0.00205984 I0408 01:37:30.146361 12 solver.cpp:333] Train net output #0: loss = 0.00205983 (* 1 = 0.00205983 loss) I0408 01:37:30.146386 12 sgd_solver.cpp:215] Iteration 9600, lr = 0.00603682 I0408 01:37:32.567978 12 solver.cpp:312] Iteration 9700, loss = 0.00330913 I0408 01:37:32.568212 12 solver.cpp:333] Train net output #0: loss = 0.00330913 (* 1 = 0.00330913 loss) I0408 01:37:32.568235 12 sgd_solver.cpp:215] Iteration 9700, lr = 0.00601382 I0408 01:37:34.955097 12 solver.cpp:312] Iteration 9800, loss = 0.0134696 I0408 01:37:34.955363 12 solver.cpp:333] Train net output #0: loss = 0.0134696 (* 1 = 0.0134696 loss) I0408 01:37:34.955386 12 sgd_solver.cpp:215] Iteration 9800, lr = 0.00599102 I0408 01:37:37.377465 12 solver.cpp:312] Iteration 9900, loss = 0.00235391 I0408 01:37:37.377655 12 solver.cpp:333] Train net output #0: loss = 0.0023539 (* 1 = 0.0023539 loss) I0408 01:37:37.377678 12 sgd_solver.cpp:215] Iteration 9900, lr = 0.00596843 I0408 01:37:39.850847 12 solver.cpp:707] Snapshot begin I0408 01:37:39.859346 12 solver.cpp:769] Snapshotting to binary proto file examples/mnist/lenet_iter_10000.caffemodel I0408 01:37:39.869576 12 sgd_solver.cpp:754] Snapshotting solver state to binary proto file examples/mnist/lenet_iter_10000.solverstate I0408 01:37:39.878753 12 solver.cpp:734] Snapshot end I0408 01:37:39.888120 12 solver.cpp:436] Iteration 10000, loss = 0.00251002 I0408 01:37:39.888172 12 solver.cpp:474] Iteration 10000, Testing net (#0) I0408 01:37:41.067348 12 solver.cpp:563] Test net output #0: accuracy = 0.9913 I0408 01:37:41.067407 12 solver.cpp:563] Test net output #1: loss = 0.0267652 (* 1 = 0.0267652 loss) I0408 01:37:41.067422 12 solver.cpp:443] Optimization Done. I0408 01:37:41.067432 12 caffe.cpp:345] Optimization Done.

花费时间 01:37:41.067432 - 01:33:10.509523 = 4.31分钟

CPU及IO利用率:

8个CPU基本达到100%

IO很小,MNIST数据集只有几十M,数据都被cache了

8. 加上MKL2017

./examples/mnist/train_lenet.sh -engine "MKL2017"

第一次: 03:01:35.904659 -02:58:30.774215 = 2:55分钟

第二次: 03:05:15.134409 - 03:02:13.449990 = 2:58分钟

对于原生Caffe

docker run -ti bvlc/caffe:cpu caffe –version

sudo docker run -v "/home/ubuntu/yuntong/:/opt/caffe/share" -it bvlc/caffe:cpu /bin/bash

./examples/mnist/train_lenet.sh

运行时间24分钟。

原生caffe 只能在一个线程上跑

运行cifar10

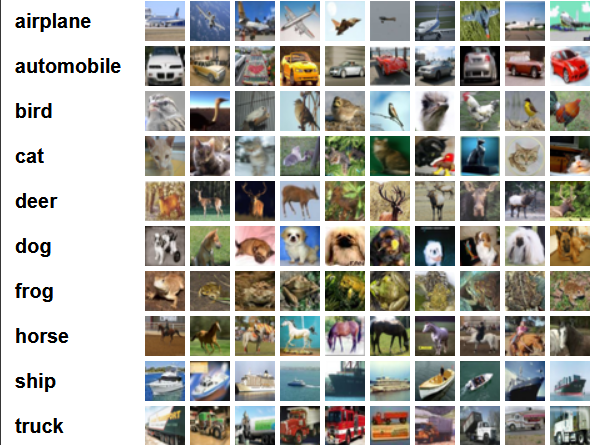

该数据集共有60000张彩色图像,这些图像是32*32,分为10个类,每类6000张图。这里面有50000张用于训练,构成了5个训练批,每一批10000张图;另外10000用于测试,单独构成一批。测试批的数据里,取自10类中的每一类,每一类随机取1000张。抽剩下的就随机排列组成了训练批。注意一个训练批中的各类图像并不一定数量相同,总的来看训练批,每一类都有5000张图。

下面这幅图就是列举了10各类,每一类展示了随机的10张图片:

1. 下载和创建cifar10数据集:

cd $CAFFE_ROOT ./data/mnist/get_cifar10.sh ./examples/cifar10/create_cifar10.sh Creating lmdb...

2. 写入intel caffe docker中的caffe运行路径: ~/yuntong/caffe-1.0/examples$ vim cifar10/train_quick.sh

TOOLS=/opt/caffe/build/tools

3. 设置CPU模式:

vim cifar10_quick_solver_lr1.prototxt cifar10_quick_solver.prototxt

4. Intel Caffe里面运行:

sudo docker run -v "/home/ubuntu/yuntong/:/opt/caffe/share" -it bvlc/caffe:intel /bin/bash cd /opt/caffe/share/caffe-1.0 ./examples/cifar10/train_quick.sh

训练时间: 07:20:47.795905 - 07:08:08.193487 = 12分40

5. 原生 Caffe里面运行:

sudo docker run -v "/home/ubuntu/yuntong/:/opt/caffe/share" -it bvlc/caffe:cpu /bin/bash cd /opt/caffe/share/caffe-1.0 ./examples/cifar10/train_quick.sh

07:26:23.522944 …………… I0408 08:56:53.116524 18 solver.cpp:310] Iteration 5000, loss = 0.449847 I0408 08:56:53.117141 18 solver.cpp:330] Iteration 5000, Testing net (#0) I0408 08:57:30.968313 21 data_layer.cpp:73] Restarting data prefetching from start. I0408 08:57:32.527096 18 solver.cpp:397] Test net output #0: accuracy = 0.7561 I0408 08:57:32.527354 18 solver.cpp:397] Test net output #1: loss = 0.72683 (* 1 = 0.72683 loss) I0408 08:57:32.527364 18 solver.cpp:315] Optimization Done. I0408 08:57:32.527381 18 caffe.cpp:259] Optimization Done.

用时 1.5小时

机器配置:

CPU: 8 * Intel(R) Core(TM) i5-3427U CPU @ 1.80GHz Memory: 16G Storage: SATA SSD