1、最完整的解释

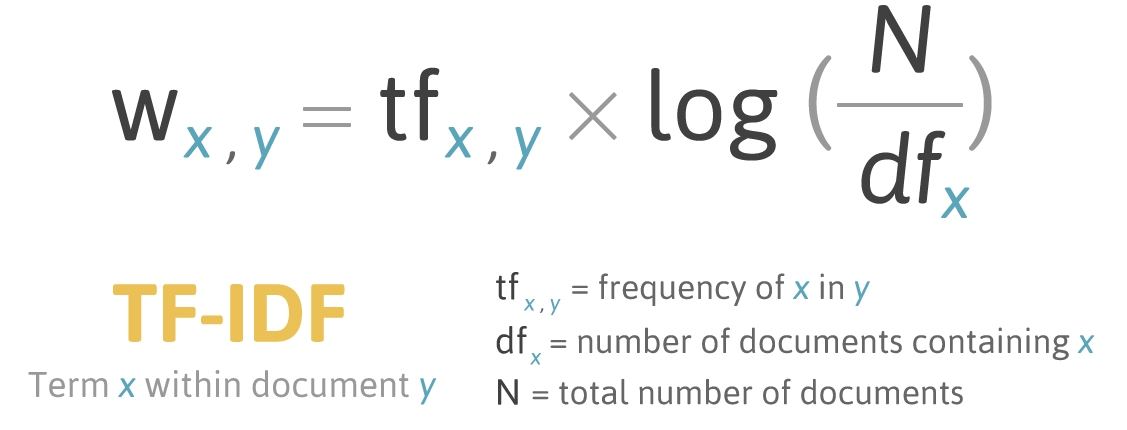

TF-IDF是一种统计方法,用以评估一个词对于语料库中的其中一份文件的重要程度。

--------------就是给定语料库的情况下(给定语料库就是说已知语料库的属性信息),给定一个词语term,计算一个term对于文件的重要性(就是计算一个得分),文件是可变的;

这样的话可以计算在词语在多个文件的得分然后做个排序就好了

一个直观的感觉是:一个词语在当前文件出现次数越多越重要;但是有可能是停用词

所以还得限制这个词语在语料库中出现文件次数;

所以就是一个词语对于一个文件的重要性取决于: 当前这个词语在文件中出现的次数(越多越重要);以及这个词语在整个语料库中出现次数(越少越重要);

其实就是评价单词对于文件的重要性,这个重要性怎么衡量?-就是后文所说 这里x是term,y是代表document,如果是一个query就会对x做sum

2、关于 normalization

是有两种 normalization的操作,叫做 instance-wise和 feature-wise的normalization:

>Normalizationしてみる(instance-wise normalization)

>Scaleを合わせてみる(feature-wise normalization)

最近在做text classificaion,所以会用到 tf-idf,这个时候一般只会做 instance-wise的,这个也就是针对每个样本的每个特征做nomalizaiton

具体为什么要做instance normalization?一段文字告诉你

According to our empirical experience, instance-wise data normalization makes the optimization problem easier to be solved. For example, for the following vector x (0, 0, 1, 1, 0, 1,0, 1, 0), the standard data normalization divides each element of x by by the 2-norm of x. The normalized x becomes (0, 0, 0.5, 0.5,0, 0.5, 0, 0.5, 0)

1. Transforming infrequent features into a special tag. Conceptually, infrequent

features should only include very little or even no information, so it

should be very hard for a model to extract those information. In fact, these

features can be very noisy. We got significant improvement (more than 0.0005)

by transforming these features into a special tag.

2. Transforming numerical features (I1-I13) to categorical features.

Empirically we observe using categorical features is always better than

using numerical features. However, too many features are generated if

numerical features are directly transformed into categorical features, so we

use

v <- floor(log(v)^2)

to reduce the number of features generated.

3. Data normalization. According to our empirical experience, instance-wise

data normalization makes the optimization problem easier to be solved.

For example, for the following vector x

(0, 0, 1, 1, 0, 1, 0, 1, 0),

the standard data normalization divides each element of x by by the 2-norm

of x. The normalized x becomes

(0, 0, 0.5, 0.5, 0, 0.5, 0, 0.5, 0).

The hashing trick do not contribute any improvement on the leaderboard. We

apply the hashing trick only because it makes our life easier to generate

features. :)