更为强大的库requests是为了更加方便地实现爬虫操作,有了它 , Cookies 、登录验证、代理设置等操作都不是 .

一、安装requests模块(cmd窗口执行)

pip3 install requests

二、requests的基本方法

import requests response=requests.get("https://www.baidu.com/") print(type(response)) #<class 'requests.models.Response'> response类型 print(response.status_code) #200 获取状态码 print(response.text) #获取网页源码 print(response.content) #获取网页源码 print(response.cookies) #获取网页cookies ,Req u estsCookieJar print(response.headers) #获取请求头

三、推荐一个测试网址:http://httpbin.org测试请求网站,可以随便捣鼓(其他请求方式)

import requests r=requests.post("http://httpbin.org/post") print(r.text) #打印post请求的头部信息 r=requests.put("http://httpbin.org/post") r=requests.delete("http://httpbin.org/post") r=requests.options("http://httpbin.org/post")

这里分别用 post ()、 put ()、 delete ()等方法实现了 POST 、 PUT 、 DELETE 等请求 。

四、get 请求

查看get请求包含的请求信息

import requests r=requests.get("http://httpbin.org/get") print(r.text) #打印get请求信息

结果显示: { "args": {}, "headers": { "Accept": "*/*", "Accept-Encoding": "gzip, deflate", "Connection": "close", "Host": "httpbin.org", "User-Agent": "python-requests/2.20.1" }, "origin": "119.123.196.143", "url": "http://httpbin.org/get" }

结果显示说明:一个请求信息应该包含了请求头、ip地址、URL等信息。

(1)请求添加额外信息

方法一:?key=value&key2=value2... (?:表示起始,&:表示和)

r= requests.get("http://httpbin.org/get?name=germey&age=22")

import requests r= requests.get("http://httpbin.org/get?name=germey&age=22") print(r.text)

{ "args": { "age": "22", "name": "germey" }, "headers": { "Accept": "*/*", "Accept-Encoding": "gzip, deflate", "Connection": "close", "Host": "httpbin.org", "User-Agent": "python-requests/2.20.1" }, "origin": "119.123.196.143", "url": "http://httpbin.org/get?name=germey&age=22" }

通过运行结果可以判断,请求的链接自动被构造成了:http://httpbin.org/get?name=germey&age=22

方法二:利用get 里面参数params,可以将请求信息编译加载到url中(推荐使用)

import requests data={ "name":"germey", "age":22 } r=requests.get("http://httpbin.org/get",params=data) print(r.text)

结果显示: { "args": { "age": "22", "name": "germey" }, "headers": { "Accept": "*/*", "Accept-Encoding": "gzip, deflate", "Connection": "close", "Host": "httpbin.org", "User-Agent": "python-requests/2.20.1" }, "origin": "119.123.196.143", "url": "http://httpbin.org/get?name=germey&age=22" }

结果都构造了:http://httpbin.org/get?name=germey&age=22,方法二比较实用

(2)从网页请求到的请求信息都是json格式字符串,转换成字典dict,使用 .json();

如果不是Json格式,则报错:JSON。decodeJSONDecodeError异常

import requests r=requests.get("http://httpbin.org/get") print(type(r.text)) #查看请求头数据类型 print(r.text) #打印请求信息 #r.json() 将json字符串转换为字典 print(type(r.json()))#转换为dict,打印数据类型

<class 'str'> { "args": {}, "headers": { "Accept": "*/*", "Accept-Encoding": "gzip, deflate", "Connection": "close", "Host": "httpbin.org", "User-Agent": "python-requests/2.20.1" }, "origin": "119.123.196.143", "url": "http://httpbin.org/get" } <class 'dict'>

结果显示:请求信息是<str>;r.json()后的数据是<dict>

五、get方法请求抓取网页实例

(1)成功获取知乎的网页信息

# 请求知乎 import requests #构建请求要求信息 data={ "type":"content", "q":"赵丽颖" } url="https://www.zhihu.com/search" #构建请求的ip和服务器信息 headers={ "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.110 Safari/537.36" , "origin": "119.123.196.143", } response=requests.get(url,params=data,headers=headers) print(response.text)

这里我们加入了 headers 信息,其中包含了 User- Agent 字段信息, 也就是浏览器标识信息 。 如果

不加这个 ,知乎会禁止抓取,data构造了一个请求搜索信息.

(2)github站点图标下载

import requests r=requests.get(" https://github.com/favicon.ico") print(r.text) print(r.content) with open("github.ico","wb") as f: f.write(r.content)

(3)请求头信息headers

{ "args": {}, "headers": { "Accept": "*/*", "Accept-Encoding": "gzip, deflate", "Connection": "close", "Host": "httpbin.org", "User-Agent": "python-requests/2.20.1" }, "origin": "119.123.196.143", "url": "https://httpbin.org/get" }

(4)抓取github图标

r.text 得到的数据是字符串类型

r.content 得到的数据是bytes类型数据

import requests r = requests.get("https://github.com/favicon.ico") print('text',r.text)#获取到字符串 print('content',r.content) #获取的是二进制

import requests r=requests.get(" https://github.com/favicon.ico") print(r.text) print(r.content) with open("gg.ico","wb") as f: f.write(r.content)

六、post请求

带data信息请求

import requests data ={ 'name' :'pig', 'age':18 } r = requests.post('http://httpbin.org/post', data=data) print(r.text)

{ "args": {}, "data": "", "files": {}, "form": { "age": "18", "name": "pig" }, "headers": { "Accept": "*/*", "Accept-Encoding": "gzip, deflate", "Connection": "close", "Content-Length": "15", "Content-Type": "application/x-www-form-urlencoded", "Host": "httpbin.org", "User-Agent": "python-requests/2.20.1" }, "json": null, "origin": "119.123.198.80", "url": "http://httpbin.org/post" }

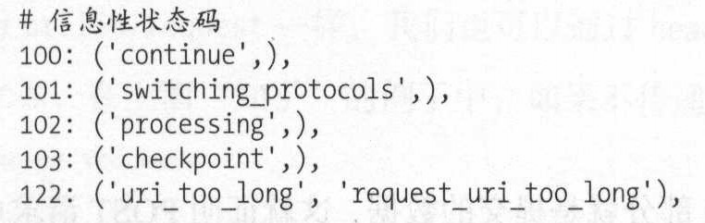

七、请求状态码

1、100状态码:信息状态码

2、200状态码:成功状态码

3、300状态吗:重定向状态码

4、400状态码:客户端错误状态码

5、500状态码:服务器错误状态码

import requests import re def get(url,session): headers={ "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.100 Safari/537.36", "Host": "book.douban.com" } response=session.get(url,headers=headers) return response.content.decode("utf-8") def parse(path,data,session): res=session.get(data,timeout=2) with open(path,"wb") as f: f.write(res.content) if __name__ == '__main__': obj=re.compile('<img src="(?P<picture>.*?)"') session = requests.session() html=get("https://book.douban.com/",session) pic_url_list=obj.findall(html,re.S) n=1 for pic_url in pic_url_list: print(pic_url) try: path=r"book_picture/"+f"{n}"+".jpg" parse(path,pic_url,session) n+=1 except Exception as e: print(e)

#coding=utf-8 import requests import os import re def getHtml(url): headers={ "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8", "Cookie": "BDqhfp=%E5%90%8D%E8%83%9C%E5%8F%A4%E8%BF%B9%26%26-10-1undefined%26%260%26%261; BAIDUID=3E9EFDBD86FBFCF2DA4C32A06482351C:FG=1; BIDUPSID=E013F7397D0EF0F46FFAF97FC8A7F349; PSTM=1543903169; pgv_pvi=2584268800; delPer=0; PSINO=7; BDRCVFR[5VG_cZ6c41T]=9xWipS8B-FspA7EnHc1QhPEUf; ZD_ENTRY=baidu; BDRCVFR[dG2JNJb_ajR]=mk3SLVN4HKm; indexPageSugList=%5B%22%E5%90%8D%E8%83%9C%E5%8F%A4%E8%BF%B9%22%5D; cleanHistoryStatus=0; BDRCVFR[-pGxjrCMryR]=mk3SLVN4HKm; BCLID=7951205421696906576; BDSFRCVID=_rLOJeC629zHWkc9ByJPtBGK9T-me2jTH6bH27NMSSL8H8ptFoAvEG0PjM8g0KubkDpOogKK3gOTH4PF_2uxOjjg8UtVJeC6EG0P3J; H_BDCLCKID_SF=tJI8_DLytD_3JRcFDDTb-tCqMfTM2n58a5IX3buQX-od8pcNLTDKeJIn3UR2qxj8WHRJ5q3cfx-VDJK4MlO1j4DnDGJwWlJgBnb7VPJ23J7Nfl5jDh38XjksD-Rt5tnR-gOy0hvctb3cShPm0MjrDRLbXU6BK5vPbNcZ0l8K3l02VKO_e4bK-Tr3jaDetU5; H_PS_PSSID=; BDORZ=FFFB88E999055A3F8A630C64834BD6D0; userFrom=blog.csdn.net", "Host": "image.baidu.com", "Upgrade-Insecure-Requests":"1", "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.110 Safari/537.36" } page = requests.get(url,headers=headers) html = page.content.decode() return html if __name__ == '__main__': msg=input("请输入搜索内容<如苍老师>:") os.mkdir(msg) html = getHtml(f"https://image.baidu.com/search/index?ct=201326592&cl=2&st=-1&lm=-1&nc=1&ie=utf-8&tn=baiduimage&ipn=r&rps=1&pv=&word={msg}&hs=0&oriquery=%E5%90%8D%E8%83%9C%E5%8F%A4%E8%BF%B9&ofr=%E5%90%8D%E8%83%9C%E5%8F%A4%E8%BF%B9&z=0&ic=0&face=0&width=0&height=0&latest=0&s=0&hd=0©right=0&selected_tags=%E7%AE%80%E7%AC%94%E7%94%BB") obj=re.compile('"thumbURL":"(?P<pic>.*?)"',re.S) pic_list=obj.findall(html) n=1 for pic_url in pic_list: print(pic_url) try: data=requests.get(pic_url,timeout=2) path=f"{msg}/"+f"{n}"+".jpg" with open(path,"wb") as f: print("-----------正在下载图片----------") f.write(data.content) n+=1 print("----------下载完成----------") except Exception as e: print(e)