Building a Complex Network from Scratch (Using MobileNetV2 as an Example)

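Over the past few years TensorFlow has grown into one of the most widely used neural network frameworks. MobileNetV2 came after Xception and the broad adoption of depthwise separable convolutions. It is a relatively mature and fairly complex design, which makes it a useful case study for learning to build a network by hand.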

Lightweight Convolutional Neural Networks: the MobileNet Papers Explained (V1 & V2)

```python
import tensorflow as tf  # TensorFlow 1.x API
from mobilenet_v2.ops import *  # conv2d_block, res_block, pwise_block, global_avg, conv_1x1, flatten


def mobilenetv2(inputs, num_classes, is_train=True, reuse=False):
    exp = 6  # expansion ratio
    with tf.variable_scope('mobilenetv2', reuse=reuse):
        net = conv2d_block(inputs, 32, 3, 2, is_train, name='conv1_1')  # size/2

        net = res_block(net, 1, 16, 1, is_train, name='res2_1')

        net = res_block(net, exp, 24, 2, is_train, name='res3_1')  # size/4
        net = res_block(net, exp, 24, 1, is_train, name='res3_2')

        net = res_block(net, exp, 32, 2, is_train, name='res4_1')  # size/8
        net = res_block(net, exp, 32, 1, is_train, name='res4_2')
        net = res_block(net, exp, 32, 1, is_train, name='res4_3')

        # Per Table 2 of the MobileNetV2 paper, the 64-channel stage downsamples (stride 2)
        # and the 96-channel stage keeps stride 1.
        net = res_block(net, exp, 64, 2, is_train, name='res5_1')  # size/16
        net = res_block(net, exp, 64, 1, is_train, name='res5_2')
        net = res_block(net, exp, 64, 1, is_train, name='res5_3')
        net = res_block(net, exp, 64, 1, is_train, name='res5_4')

        net = res_block(net, exp, 96, 1, is_train, name='res6_1')
        net = res_block(net, exp, 96, 1, is_train, name='res6_2')
        net = res_block(net, exp, 96, 1, is_train, name='res6_3')

        net = res_block(net, exp, 160, 2, is_train, name='res7_1')  # size/32
        net = res_block(net, exp, 160, 1, is_train, name='res7_2')
        net = res_block(net, exp, 160, 1, is_train, name='res7_3')

        net = res_block(net, exp, 320, 1, is_train, name='res8_1', shortcut=False)

        net = pwise_block(net, 1280, is_train, name='conv9_1')  # 1x1 conv to 1280 channels
        net = global_avg(net)
        # 1x1 conv instead of a fully connected layer, then flatten to [batch, num_classes]
        logits = flatten(conv_1x1(net, num_classes, name='logits'))
        pred = tf.nn.softmax(logits, name='prob')
        return logits, pred
```

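MobileNetV2 begins with a standard 3×3 convolution (32 output channels, stride 2) and only then enters the stack of res_block (residual) layers. After the residual blocks comes a pwise_block, which is essentially a 1×1 convolution that adjusts the channel count (here to 1280). Global average pooling follows, and a final 1×1 convolution maps the output to the number of classes.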
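To see how the spatial size and channel count evolve through the stack, here is a small pure-Python sketch. It traces a 224×224 input through the layer schedule in Table 2 of the MobileNetV2 paper, written as (expansion t, channels c, repeats n, first stride s). The names `BOTTLENECKS`, `same_out_size` and `trace_shapes` are hypothetical helpers for illustration only. The sketch assumes 'SAME' padding, which gives an output size of ceil(input / stride).

```python
# (expansion t, output channels c, repeats n, stride of the first block s), per the MobileNetV2 paper
BOTTLENECKS = [
    (1, 16, 1, 1),
    (6, 24, 2, 2),
    (6, 32, 3, 2),
    (6, 64, 4, 2),
    (6, 96, 3, 1),
    (6, 160, 3, 2),
    (6, 320, 1, 1),
]


def same_out_size(size, stride):
    # 'SAME' padding: output = ceil(input / stride)
    return -(-size // stride)


def trace_shapes(input_size=224, stem_channels=32):
    """Return (layer_name, spatial_size, channels) after each layer."""
    size = same_out_size(input_size, 2)  # stem: 3x3 conv with stride 2
    channels = stem_channels
    trace = [("conv1_1", size, channels)]
    block_id = 0
    for _t, c, n, s in BOTTLENECKS:
        for i in range(n):
            stride = s if i == 0 else 1  # only the first block of a stage downsamples
            size = same_out_size(size, stride)
            channels = c
            block_id += 1
            trace.append((f"bottleneck_{block_id}", size, channels))
    trace.append(("conv_1x1_1280", size, 1280))
    return trace


for name, size, ch in trace_shapes():
    print(name, f"{size}x{size}", ch)
```

The trace shows 17 bottleneck blocks, matching the 17 res_block calls above, and a final 7×7 feature map (224 / 32) before global average pooling.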

Next, let's go through each module in detail.

First, the initial convolution module:

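The convolution module is built from the two functions below: a convolution layer followed by batch normalization (batch_normalization) and a relu6 activation.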

```python
import tensorflow as tf

weight_decay = 1e-4  # assumed value; the original snippet uses a module-level weight_decay without defining it


def conv2d(input_, output_dim, k_h, k_w, d_h, d_w, stddev=0.02, name='conv2d', bias=False):
    with tf.variable_scope(name):
        # Kernel shape: [filter_height, filter_width, in_channels, out_channels]
        w = tf.get_variable('w', [k_h, k_w, input_.get_shape().as_list()[-1], output_dim],
                            regularizer=tf.contrib.layers.l2_regularizer(weight_decay),
                            # truncated_normal_initializer draws from a truncated normal distribution
                            initializer=tf.truncated_normal_initializer(stddev=stddev))
        conv = tf.nn.conv2d(input_, w, strides=[1, d_h, d_w, 1], padding='SAME')
        if bias:
            biases = tf.get_variable('bias', [output_dim], initializer=tf.constant_initializer(0.0))
            conv = tf.nn.bias_add(conv, biases)
        return conv


def conv2d_block(input, out_dim, k, s, is_train, name):
    # conv -> batch norm -> relu6
    with tf.name_scope(name), tf.variable_scope(name):
        net = conv2d(input, out_dim, k, k, s, s, name='conv2d')
        net = batch_norm(net, train=is_train, name='bn')
        net = relu(net)
        return net
```

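The convolution layer first creates the weight w. You can think of w as the convolution kernel: a tensor of shape [filter_height, filter_width, in_channels, out_channels] with an L2 regularizer and a truncated-normal initializer attached. tf.nn.conv2d then performs the convolution. A bias is added only when bias=True. It is off by default because the batch normalization that follows has its own learnable offset, which makes a convolution bias redundant.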
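As a quick sanity check on the kernel layout, the following pure-Python sketch computes the weight shape, the parameter count and the 'SAME'-padded output size for the first convolution (3×3 kernel, RGB input, 32 filters, stride 2). The helper names are hypothetical, and the 224×224 input size is an assumption.

```python
def conv_weight_shape(k_h, k_w, in_channels, out_channels):
    """Kernel layout used by tf.nn.conv2d: [filter_height, filter_width, in_channels, out_channels]."""
    return [k_h, k_w, in_channels, out_channels]


def num_params(shape, bias=False):
    """Number of weights in a kernel, plus one bias per output channel if bias=True."""
    n = 1
    for d in shape:
        n *= d
    return n + (shape[-1] if bias else 0)


def same_output_size(in_size, stride):
    """Spatial size after a 'SAME'-padded conv: ceil(in_size / stride)."""
    return -(-in_size // stride)


stem = conv_weight_shape(3, 3, 3, 32)  # conv1_1
print(stem, num_params(stem), same_output_size(224, 2))
```

The stem therefore has 3·3·3·32 = 864 weights (896 with a bias), and a 224×224 input becomes 112×112.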

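TensorFlow provides relu6 and batch normalization directly, so we just call them. One practical detail applies in TF 1.x: tf.layers.batch_normalization places its moving-mean and moving-variance update ops in tf.GraphKeys.UPDATE_OPS. These ops must run together with the training op (for example via tf.control_dependencies). Otherwise the statistics used at inference time are never updated.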

```python
def relu(x, name='relu6'):
    return tf.nn.relu6(x, name)


def batch_norm(x, momentum=0.9, epsilon=1e-5, train=True, name='bn'):
    return tf.layers.batch_normalization(x, momentum=momentum, epsilon=epsilon,
                                         scale=True, training=train, name=name)
```
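The arithmetic behind these two calls can be sketched on scalars in pure Python. relu6 clamps to [0, 6]. At inference, batch normalization normalizes with stored statistics, and during training those statistics follow an exponential moving average controlled by `momentum`. The functions below are illustrative helpers, not TensorFlow APIs. They reuse the defaults from batch_norm above (momentum=0.9, epsilon=1e-5).

```python
import math


def relu6(x):
    """Clamp to [0, 6], the same formula as tf.nn.relu6."""
    return min(max(x, 0.0), 6.0)


def bn_inference(x, moving_mean, moving_var, gamma=1.0, beta=0.0, epsilon=1e-5):
    """Normalize one value with stored statistics, then scale (gamma) and shift (beta)."""
    return gamma * (x - moving_mean) / math.sqrt(moving_var + epsilon) + beta


def update_moving(moving, batch_stat, momentum=0.9):
    """Exponential moving average used to update the stored mean/variance during training."""
    return momentum * moving + (1.0 - momentum) * batch_stat


print(relu6(-3.0), relu6(2.5), relu6(10.0))
print(bn_inference(3.0, moving_mean=1.0, moving_var=4.0))
print(update_moving(0.0, 1.0))
```

With momentum 0.9, each training batch moves the stored statistics only 10% of the way toward the batch statistics. This is why the update ops mentioned above have to run for many steps before inference-time normalization is meaningful.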

Next, we stack res_block residual modules on top of the convolution layer:

```python
def res_block(input, expansion_ratio, output_dim, stride, is_train, name, bias=False, shortcut=True):
    with tf.name_scope(name), tf.variable_scope(name):
        # pw: 1x1 expansion to expansion_ratio * input channels
        bottleneck_dim = int(round(expansion_ratio * input.get_shape().as_list()[-1]))
        net = conv_1x1(input, bottleneck_dim, name='pw', bias=bias)
        net = batch_norm(net, train=is_train, name='pw_bn')
        net = relu(net)
        # dw: 3x3 depthwise convolution (carries the stride)
        net = dwise_conv(net, strides=[1, stride, stride, 1], name='dw', bias=bias)
        net = batch_norm(net, train=is_train, name='dw_bn')
        net = relu(net)
        # pw & linear: 1x1 projection to output_dim, no activation (linear bottleneck)
        net = conv_1x1(net, output_dim, name='pw_linear', bias=bias)
        net = batch_norm(net, train=is_train, name='pw_linear_bn')

        # element-wise add, only for stride == 1
        if shortcut and stride == 1:
            in_dim = int(input.get_shape().as_list()[-1])
            if in_dim != output_dim:
                # channel counts differ: project the input with a 1x1 conv before adding
                ins = conv_1x1(input, output_dim, name='ex_dim')
                net = ins + net
            else:
                net = input + net

        return net
```

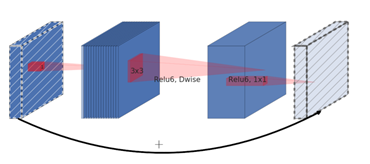

The residual module uses an inverted residual structure, as illustrated in the figure.

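The MobileNetV2 architecture is built on the inverted residual structure. A classic residual bottleneck has three convolutions on its main branch:

- a 1×1 convolution compresses the wide input into a narrow representation;
- a 3×3 convolution works on that narrow representation;
- a 1×1 convolution expands it back, so the shortcut connects the wide ends.

The inverted residual does the opposite. The first 1×1 convolution expands the narrow input. The middle convolution, still a depthwise separable structure (a 3×3 depthwise convolution), operates on the wide, expanded representation. The final 1×1 convolution projects the result back down, so the shortcut connects the narrow ends.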

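Each residual block therefore consists of a 1×1 expansion convolution, a 3×3 depthwise convolution, and a linear 1×1 projection convolution. In res_block, the last 1×1 convolution (pw_linear) is followed by batch normalization but no relu6. This is the paper's "linear bottleneck".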

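The bottleneck width is controlled by the expansion factor: 6 for most blocks here, and 1 for res2_1. The first 1×1 convolution maps the input channels to expansion factor × input channels.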
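Counting weights shows why the wide middle layer is affordable. The following pure-Python sketch tallies the kernels of one inverted residual block (pw expand, 3×3 depthwise, pw-linear project), ignoring batch norm and bias. It then compares the block with what a standard 3×3 convolution would cost at the same expanded width. The function names are illustrative, and the channel numbers are taken from the res3_2 (24→24) and res2_1 (32→16, expansion 1) calls above.

```python
def inverted_residual_params(in_channels, out_channels, expansion=6, k=3):
    """Weights of pw (expand) -> dw (k x k) -> pw-linear (project), ignoring BN and bias."""
    hidden = int(round(expansion * in_channels))  # bottleneck_dim in res_block
    expand = in_channels * hidden                # 1x1 kernel [1, 1, in, hidden]
    depthwise = k * k * hidden                   # depthwise kernel [k, k, hidden, 1]
    project = hidden * out_channels              # 1x1 kernel [1, 1, hidden, out]
    return hidden, expand + depthwise + project


def standard_conv_params(in_channels, out_channels, k=3):
    """Weights of an ordinary k x k convolution."""
    return k * k * in_channels * out_channels


print(inverted_residual_params(24, 24))                # res3_2
print(inverted_residual_params(32, 16, expansion=1))   # res2_1
print(standard_conv_params(144, 144))                  # a full 3x3 conv at the expanded width
```

For the 24→24 block, the expanded width is 144 and the whole block needs 8,208 weights. The 3×3 layer alone would need 186,624 weights if it were an ordinary convolution at width 144, instead of the 1,296 the depthwise version uses.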

The depthwise convolution dwise_conv is implemented as follows:

```python
def dwise_conv(input, k_h=3, k_w=3, channel_multiplier=1, strides=[1, 1, 1, 1],
               padding='SAME', stddev=0.02, name='dwise_conv', bias=False):
    with tf.variable_scope(name):
        in_channel = input.get_shape().as_list()[-1]
        # Depthwise kernel: [k_h, k_w, in_channel, channel_multiplier]
        # weight_decay is the same module-level constant used by conv2d
        w = tf.get_variable('w', [k_h, k_w, in_channel, channel_multiplier],
                            regularizer=tf.contrib.layers.l2_regularizer(weight_decay),
                            initializer=tf.truncated_normal_initializer(stddev=stddev))
        conv = tf.nn.depthwise_conv2d(input, w, strides, padding, rate=None, name=None, data_format=None)
        if bias:
            biases = tf.get_variable('bias', [in_channel * channel_multiplier],
                                     initializer=tf.constant_initializer(0.0))
            conv = tf.nn.bias_add(conv, biases)
        return conv
```

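The depthwise kernel has shape [k_h, k_w, in_channel, channel_multiplier]. With the default channel_multiplier=1 this is [k_h, k_w, in_channel, 1]. Each input channel is convolved with its own k_h×k_w filter, and channels are never mixed.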
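The "each channel gets its own filter" rule can be made concrete with a small pure-Python depthwise convolution using 'SAME' zero padding and channel_multiplier=1. This is an illustrative re-implementation, not tf.nn.depthwise_conv2d itself. It follows TensorFlow's 'SAME' convention: when padding is odd, the extra row or column goes at the bottom or right. Inputs are H×W×C nested lists, and the kernel is [kH][kW][C] (the TF shape [kH, kW, C, 1] with the last axis dropped).

```python
def depthwise_conv2d(x, w, stride=1):
    """Depthwise conv with 'SAME' zero padding and channel_multiplier=1.

    x: H x W x C nested lists; w: kH x kW x C nested lists.
    """
    H, W, C = len(x), len(x[0]), len(x[0][0])
    kH, kW = len(w), len(w[0])
    out_h, out_w = -(-H // stride), -(-W // stride)  # ceil(size / stride)
    pad_top = max((out_h - 1) * stride + kH - H, 0) // 2
    pad_left = max((out_w - 1) * stride + kW - W, 0) // 2
    out = [[[0.0] * C for _ in range(out_w)] for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            for c in range(C):  # channel c only ever sees filter c
                acc = 0.0
                for di in range(kH):
                    for dj in range(kW):
                        r = i * stride + di - pad_top
                        s = j * stride + dj - pad_left
                        if 0 <= r < H and 0 <= s < W:  # positions outside are zero padding
                            acc += x[r][s][c] * w[di][dj][c]
                out[i][j][c] = acc
    return out


# Channel 0 is all ones with an all-ones filter; channel 1 is all twos with an all-zero filter.
x = [[[1.0, 2.0] for _ in range(3)] for _ in range(3)]
w = [[[1.0, 0.0] for _ in range(3)] for _ in range(3)]
out = depthwise_conv2d(x, w)
print(out[1][1], out[0][0])
```

Channel 1 stays exactly zero even though its input is non-zero, because it is never combined with channel 0. Mixing channels is left to the 1×1 convolutions around it.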

The 1×1 convolution is defined as:

```python
def conv_1x1(input, output_dim, name, bias=False):
    with tf.name_scope(name):
        return conv2d(input, output_dim, 1, 1, 1, 1, stddev=0.02, name=name, bias=bias)
```
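A 1×1 convolution has no spatial extent. At every pixel it simply multiplies the channel vector by a C_in×C_out matrix, which is why it is used both to expand and to project channels. The following pure-Python sketch is illustrative only and uses no bias, matching conv_1x1's default bias=False.

```python
def pointwise_conv(x, w):
    """1x1 convolution without bias.

    x: H x W x C_in nested lists; w: C_in x C_out (TF kernel [1, 1, C_in, C_out] without the 1x1 axes).
    Every pixel's channel vector is multiplied by the same matrix w.
    """
    c_out = len(w[0])
    return [[[sum(px[k] * w[k][o] for k in range(len(px))) for o in range(c_out)]
             for px in row]
            for row in x]


# One row of two pixels, 2 input channels -> 3 output channels
x = [[[1, 2], [3, 4]]]
w = [[1, 0, 1],
     [0, 1, 1]]
print(pointwise_conv(x, w))
```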

We then add a shortcut connection to the residual block:

```python
# element-wise add, only for stride == 1
if shortcut and stride == 1:
    in_dim = int(input.get_shape().as_list()[-1])
    if in_dim != output_dim:
        # channel counts differ: project the input with a 1x1 conv before adding
        ins = conv_1x1(input, output_dim, name='ex_dim')
        net = ins + net
    else:
        net = input + net
```

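The shortcut is enabled only when stride = 1 (and shortcut=True). A stride-2 block halves the spatial size, so its output can no longer be added element-wise to its input. When the channel counts differ, this code projects the input with an extra 1×1 convolution (ex_dim) before adding. The original MobileNetV2 design instead uses the identity shortcut only when input and output shapes already match.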
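The branch at the end of res_block can be mirrored in a few lines of pure Python to see which blocks end up with which kind of shortcut. In the sketch below, `shortcut_type` and `count_shortcuts` are hypothetical helpers. The block schedule is assumed to follow Table 2 of the MobileNetV2 paper, with a 32-channel stem and shortcut=False on the last block, as for res8_1.

```python
# (expansion t, output channels c, repeats n, first stride s), per the MobileNetV2 paper
BLOCKS = [(1, 16, 1, 1), (6, 24, 2, 2), (6, 32, 3, 2), (6, 64, 4, 2),
          (6, 96, 3, 1), (6, 160, 3, 2), (6, 320, 1, 1)]


def shortcut_type(in_dim, output_dim, stride, shortcut=True):
    """Mirror of res_block's final branch: 'identity', 'projection' (ex_dim 1x1 conv) or 'none'."""
    if shortcut and stride == 1:
        return "identity" if in_dim == output_dim else "projection"
    return "none"


def count_shortcuts(stem_channels=32):
    counts = {"identity": 0, "projection": 0, "none": 0}
    in_dim = stem_channels
    for stage, (_t, c, n, s) in enumerate(BLOCKS):
        for i in range(n):
            stride = s if i == 0 else 1
            is_last_stage = stage == len(BLOCKS) - 1  # res8_1 uses shortcut=False
            counts[shortcut_type(in_dim, c, stride, shortcut=not is_last_stage)] += 1
            in_dim = c
    return counts


print(count_shortcuts())
```

Under this schedule, 10 of the 17 blocks get an identity shortcut, 5 get none (stride 2 or shortcut=False), and 2 get the ex_dim projection: the 32→16 block and the 64→96 block.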

Next, stack the res blocks.

At the end, apply global average pooling:

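```python
def global_avg(x):
    with tf.name_scope('global_avg'):
        # pool over the full spatial extent (H, W) with stride 1 -> [batch, 1, 1, C]
        net = tf.layers.average_pooling2d(x, x.get_shape().as_list()[1:-1], 1)
        return net
```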
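Because the pooling window equals the whole H×W map, each channel collapses to a single number: its mean over all spatial positions. The following pure-Python sketch is illustrative only. It returns the per-channel means as a flat list, which corresponds to the [1, 1, C] map with its unit axes squeezed.

```python
def global_avg_pool(x):
    """x: H x W x C nested lists -> list of C per-channel means over all H*W positions."""
    H, W, C = len(x), len(x[0]), len(x[0][0])
    return [sum(x[i][j][c] for i in range(H) for j in range(W)) / (H * W) for c in range(C)]


# 2x2 map with 2 channels
x = [[[1, 10], [3, 10]],
     [[5, 10], [7, 10]]]
print(global_avg_pool(x))
```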

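Instead of a fully connected layer, we use a 1×1 convolution to map the channels to the number of classes, then flatten the result. After global average pooling the feature map is only 1×1, so this 1×1 convolution computes exactly what a fully connected layer would.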

```python
tf.contrib.layers.flatten(x)
```
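The equivalence between a 1×1 convolution on a 1×1 map and a fully connected layer can be checked numerically in pure Python. Both sides below are written without bias, matching the logits call to conv_1x1 (bias defaults to False). The function names and the 3-channel, 2-class sizes are illustrative assumptions.

```python
def dense(v, w):
    """Fully connected layer without bias: out[o] = sum_k v[k] * w[k][o]."""
    return [sum(v[k] * w[k][o] for k in range(len(v))) for o in range(len(w[0]))]


def conv_1x1_then_flatten(x, w):
    """x: H x W x C map. Apply a 1x1 conv (no bias), then flatten H x W x C_out into one vector."""
    out = [[[sum(px[k] * w[k][o] for k in range(len(px))) for o in range(len(w[0]))]
            for px in row]
           for row in x]
    return [val for row in out for px in row for val in px]


v = [1.0, 2.0, 3.0]            # pooled features for one image (C = 3)
w = [[1, 0], [0, 1], [1, 1]]   # C x num_classes
print(dense(v, w), conv_1x1_then_flatten([[v]], w))
```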

Finally, softmax turns the logits into class probabilities:

```python
pred = tf.nn.softmax(logits, name='prob')
```
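For reference, here is the softmax computation in pure Python, in the usual numerically stable form that subtracts the maximum logit before exponentiating. This is an illustrative sketch, not TensorFlow's implementation. Note that the network returns both logits and pred. For training, losses such as tf.nn.sparse_softmax_cross_entropy_with_logits take the raw logits, while pred is what you read at inference time.

```python
import math


def softmax(logits):
    """Numerically stable softmax: subtracting the max does not change the result."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


p = softmax([1.0, 2.0, 3.0])
print(p, p.index(max(p)))
print(softmax([1000.0, 1000.0]))  # would overflow without the max subtraction
```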

And with that, MobileNetV2 is built.

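This way of building a network can serve as a template. Once its inputs and outputs are fixed, it is easy to plug into an Estimator, swap in a different network, and fine-tune it later.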

This time we built the network mainly with tf.nn. Next time we will try building it with slim and tf.layers.