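Keywords: memblock, totalram_pages, meminfo, MemTotal, CMA, etc.

I have recently been working on a low-cost design and needed to find out where an entire block of RAM actually goes.

The most direct check is MemTotal in /proc/meminfo, which can turn out to be far smaller than the physical RAM size.

There is a clear dividing point: once free_initmem() has run, MemTotal (that is, totalram_pages) settles at a fixed value. This is the memory Linux can actually manage; everything outside it is what I call the memory black hole.

This article follows the RAM from boot up to free_initmem(), and then into the shell, to see what MemTotal ends up being and why.

Along the way it touches memblock, the kernel image sections, the page Reserved flag, CMA, and more.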

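1. Introduction to memblock

memblock is the early-boot memory manager: it tracks memory from kernel startup until the free pages are handed to the buddy allocator, and the regions it records keep affecting totalram_pages up to free_initmem().

After that, totalram_pages stays at a stable value.

1.1 memblock data structures

struct memblock is the core data structure. It holds several memblock types, and each type contains a number of regions.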

    /* Definition of memblock flags. */
    enum {
        MEMBLOCK_NONE    = 0x0, /* No special request */
        MEMBLOCK_HOTPLUG = 0x1, /* hotpluggable region */
        MEMBLOCK_MIRROR  = 0x2, /* mirrored region */
        MEMBLOCK_NOMAP   = 0x4, /* don't add to kernel direct mapping */
    };

    struct memblock_region {
        phys_addr_t base;       /* base address of the region */
        phys_addr_t size;       /* size of the region */
        unsigned long flags;    /* region flags, from the enum above */
    #ifdef CONFIG_HAVE_MEMBLOCK_NODE_MAP
        int nid;
    #endif
    };

    struct memblock_type {
        unsigned long cnt;      /* number of regions */
        unsigned long max;      /* size of the allocated array, i.e. how many regions it can hold */
        phys_addr_t total_size; /* size of all regions */
        struct memblock_region *regions;    /* points to the regions array */
    };

    struct memblock {
        bool bottom_up;                 /* is bottom up direction? true allocates from low to high addresses, false the reverse */
        phys_addr_t current_limit;      /* upper address limit for memblock allocations */
        struct memblock_type memory;    /* usable memory */
        struct memblock_type reserved;  /* reserved memory */
    #ifdef CONFIG_HAVE_MEMBLOCK_PHYS_MAP
        struct memblock_type physmem;
    #endif
    };

The kernel defines a single memblock instance with initial values; this global variable is used heavily later on.

    static struct memblock_region memblock_memory_init_regions[INIT_MEMBLOCK_REGIONS] __initdata_memblock;
    static struct memblock_region memblock_reserved_init_regions[INIT_MEMBLOCK_REGIONS] __initdata_memblock;

    struct memblock memblock __initdata_memblock = {
        .memory.regions     = memblock_memory_init_regions,
        .memory.cnt         = 1,    /* empty dummy entry */
        .memory.max         = INIT_MEMBLOCK_REGIONS,

        .reserved.regions   = memblock_reserved_init_regions,
        .reserved.cnt       = 1,    /* empty dummy entry */
        .reserved.max       = INIT_MEMBLOCK_REGIONS,
        ...
        .bottom_up          = false,
        .current_limit      = MEMBLOCK_ALLOC_ANYWHERE,
    };

1.2 memblock API

The main memblock APIs are:

    phys_addr_t memblock_find_in_range_node(phys_addr_t size, phys_addr_t align,
                                            phys_addr_t start, phys_addr_t end,
                                            int nid, ulong flags);
    phys_addr_t memblock_find_in_range(phys_addr_t start, phys_addr_t end,
                                       phys_addr_t size, phys_addr_t align);
    void memblock_allow_resize(void);
    int memblock_add_node(phys_addr_t base, phys_addr_t size, int nid);
    int memblock_add(phys_addr_t base, phys_addr_t size);
    int memblock_remove(phys_addr_t base, phys_addr_t size);
    int memblock_free(phys_addr_t base, phys_addr_t size);
    int memblock_reserve(phys_addr_t base, phys_addr_t size);
    void memblock_trim_memory(phys_addr_t align);
    bool memblock_overlaps_region(struct memblock_type *type,
                                  phys_addr_t base, phys_addr_t size);
    int memblock_mark_hotplug(phys_addr_t base, phys_addr_t size);
    int memblock_clear_hotplug(phys_addr_t base, phys_addr_t size);
    int memblock_mark_mirror(phys_addr_t base, phys_addr_t size);
    int memblock_mark_nomap(phys_addr_t base, phys_addr_t size);
    ulong choose_memblock_flags(void);

Adding and removing regions maps onto the two memblock types as follows: memblock_add() and memblock_remove() operate on the memory (usable) type, while memblock_reserve() and memblock_free() operate on the reserved type.

    int __init_memblock memblock_add_node(phys_addr_t base, phys_addr_t size, int nid)
    {
        return memblock_add_range(&memblock.memory, base, size, nid, 0);
    }

    int __init_memblock memblock_add(phys_addr_t base, phys_addr_t size)
    {
        return memblock_add_range(&memblock.memory, base, size, MAX_NUMNODES, 0);
    }

    int __init_memblock memblock_remove(phys_addr_t base, phys_addr_t size)
    {
        return memblock_remove_range(&memblock.memory, base, size);
    }

    int __init_memblock memblock_free(phys_addr_t base, phys_addr_t size)
    {
        kmemleak_free_part_phys(base, size);
        return memblock_remove_range(&memblock.reserved, base, size);
    }

    int __init_memblock memblock_reserve(phys_addr_t base, phys_addr_t size)
    {
        return memblock_add_range(&memblock.reserved, base, size, MAX_NUMNODES, 0);
    }
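To make the "sorted array of regions" idea concrete, here is a minimal userspace sketch of adding a range to one memblock type and merging it with neighbours that touch or overlap. It is not the kernel's memblock_add_range(); the names toy_region, toy_type, toy_add_range and TOY_MAX_REGIONS are made up for illustration, and node IDs and flags are ignored.

```c
#include <stddef.h>
#include <stdint.h>

#define TOY_MAX_REGIONS 16  /* hypothetical capacity, not INIT_MEMBLOCK_REGIONS */

struct toy_region { uint64_t base, size; };

struct toy_type {
    size_t cnt;                 /* number of regions in use */
    uint64_t total_size;        /* sum of all region sizes */
    struct toy_region regions[TOY_MAX_REGIONS];
};

/* Insert [base, base + size) keeping the array sorted by base, then merge
 * regions that touch or overlap. Returns 0 on success, -1 if the array is full. */
int toy_add_range(struct toy_type *t, uint64_t base, uint64_t size)
{
    size_t i, j;

    if (size == 0)
        return 0;               /* empty ranges are ignored */
    if (t->cnt == TOY_MAX_REGIONS)
        return -1;

    /* Find the insertion slot and shift the tail up by one. */
    for (i = 0; i < t->cnt && t->regions[i].base < base; i++)
        ;
    for (j = t->cnt; j > i; j--)
        t->regions[j] = t->regions[j - 1];
    t->regions[i].base = base;
    t->regions[i].size = size;
    t->cnt++;

    /* Merge pass: i is the last kept region, j scans the rest. */
    for (i = 0, j = 1; j < t->cnt; j++) {
        struct toy_region *cur = &t->regions[i];
        struct toy_region *nxt = &t->regions[j];
        uint64_t cur_end = cur->base + cur->size;

        if (nxt->base <= cur_end) {
            uint64_t nxt_end = nxt->base + nxt->size;

            if (nxt_end > cur_end)
                cur->size = nxt_end - cur->base;
        } else {
            t->regions[++i] = *nxt;
        }
    }
    t->cnt = i + 1;

    /* Recompute the total size from the merged regions. */
    t->total_size = 0;
    for (i = 0; i < t->cnt; i++)
        t->total_size += t->regions[i].size;
    return 0;
}
```

Feeding it three adjacent ranges such as 0x04000000-0x04ffffff, 0x01000000-0x03ffffff and 0x05000000-0x07ffffff leaves a single region 0x01000000-0x07ffffff, which is the kind of merging visible in the debugfs reserved listing.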

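1.3 Debugging memblock

To see the detailed memblock allocation flow, add "memblock=debug" to bootargs.

After the kernel boots, read the debug output through /proc/kmsg.

The memory address ranges and the reserved regions can be read from: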

/sys/kernel/debug/memblock/memory

/sys/kernel/debug/memblock/reserved
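Each line in these two files has the form "index: start..end" with an inclusive end address. As a small aid for adding up region sizes, the following sketch parses one such line in userspace; memblock_line_size is a hypothetical helper name, and the line format is assumed from the listings shown in this article.

```c
#include <stdio.h>
#include <stdint.h>

/* Parse one line such as "1: 0x01000000..0x07ffffff".
 * On success, store the region size in bytes (the end address is inclusive)
 * and return 0. Return -1 on malformed input or if end < start. */
int memblock_line_size(const char *line, uint64_t *size)
{
    unsigned int idx;
    unsigned long long start, end;

    if (sscanf(line, " %u: 0x%llx..0x%llx", &idx, &start, &end) != 3)
        return -1;
    if (end < start)
        return -1;
    *size = end - start + 1;
    return 0;
}
```

Summing the result over every line of the reserved file gives the total number of bytes that memblock considers reserved.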

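2. How totalram_pages is updated

In the kernel, totalram_pages starts at 0 and settles at a stable value after free_initmem().

Starting from 0: (1) the kernel first walks the memblock.memory and memblock.reserved regions to work out how much memory is free;

(2) then, in the CMA stage, the memory reserved for CMA is released and totalram_pages grows;

(3) finally, free_initmem() releases the memory occupied by the init sections.

2.1 Allocation of memblock reserved regions

With memblock debugging enabled, the log shows every reserved-type region being created.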

    [ 0.000000] memblock_reserve: [0x00000000000000-0x000000007a15ff] flags 0x0 setup_arch+0x66/0x258  <-- kernel image
    [ 0.000000] memblock_reserve: [0x00000080000000-0x0000007fffffff] flags 0x0 setup_arch+0x7e/0x258  <-- initrd (zero-sized here: end = base - 1)
    [ 0.000000] memblock_reserve: [0x00000000027380-0x0000000002bfb3] flags 0x0 early_init_dt_reserve_memory_arch+0x1e/0x30  <-- the dtb itself, from __dtb_xxx_begin to __dtb_xxx_end inside the kernel
    [ 0.000000] memblock_reserve: [0x00000004000000-0x00000004ffffff] flags 0x0 early_init_dt_reserve_memory_arch+0x1e/0x30  <-- driver-reserved region
    [ 0.000000] memblock_reserve: [0x00000001000000-0x00000003ffffff] flags 0x0 early_init_dt_reserve_memory_arch+0x1e/0x30  <-- driver-reserved region
    [ 0.000000] memblock_reserve: [0x00000005000000-0x00000007ffffff] flags 0x0 early_init_dt_reserve_memory_arch+0x1e/0x30  <-- CMA reserved region
    [ 0.000000] memblock_reserve: [0x0000000fdff000-0x0000000fffefff] flags 0x0 memblock_virt_alloc_internal+0x1a6/0x200
    [ 0.000000] memblock_reserve: [0x0000000fdfefe0-0x0000000fdfefff] flags 0x0 memblock_virt_alloc_internal+0x1a6/0x200
    [ 0.000000] memblock_reserve: [0x0000000fdfa380-0x0000000fdfefb3] flags 0x0 memblock_alloc_range_nid+0x60/0x7c
    [ 0.000000] memblock_reserve: [0x0000000fdea3a4-0x0000000fdfa37f] flags 0x0 memblock_alloc_range_nid+0x60/0x7c
    [ 0.000000] memblock_reserve: [0x0000000fde9000-0x0000000fde9fff] flags 0x0 __alloc_memory_core_early+0xa4/0xe8
    [ 0.000000] memblock_reserve: [0x0000000fde8000-0x0000000fde8fff] flags 0x0 __alloc_memory_core_early+0xa4/0xe8
    [ 0.000000] memblock_reserve: [0x0000000fdea340-0x0000000fdea38a] flags 0x0 memblock_virt_alloc_internal+0x1a6/0x200
    [ 0.000000] memblock_reserve: [0x0000000fdea2e0-0x0000000fdea32a] flags 0x0 memblock_virt_alloc_internal+0x1a6/0x200
    [ 0.000000] memblock_reserve: [0x0000000fdea280-0x0000000fdea2ca] flags 0x0 memblock_virt_alloc_internal+0x1a6/0x200
    [ 0.000000] memblock_reserve: [0x0000000fde7000-0x0000000fde7fff] flags 0x0 memblock_virt_alloc_internal+0x1a6/0x200
    [ 0.000000] memblock_reserve: [0x0000000fddf000-0x0000000fde6fff] flags 0x0 memblock_virt_alloc_internal+0x1a6/0x200
    [ 0.000000] memblock_reserve: [0x0000000fdfefc0-0x0000000fdfefc3] flags 0x0 memblock_virt_alloc_internal+0x1a6/0x200
    [ 0.000000] memblock_reserve: [0x0000000fdea3a0-0x0000000fdea3a3] flags 0x0 memblock_virt_alloc_internal+0x1a6/0x200
    [ 0.000000] memblock_reserve: [0x0000000fdea260-0x0000000fdea263] flags 0x0 memblock_virt_alloc_internal+0x1a6/0x200
    [ 0.000000] memblock_reserve: [0x0000000fdea240-0x0000000fdea243] flags 0x0 memblock_virt_alloc_internal+0x1a6/0x200
    [ 0.000000] memblock_reserve: [0x0000000fdea1c0-0x0000000fdea237] flags 0x0 memblock_virt_alloc_internal+0x1a6/0x200
    [ 0.000000] memblock_reserve: [0x0000000fdea180-0x0000000fdea1bb] flags 0x0 memblock_virt_alloc_internal+0x1a6/0x200
    [ 0.000000] memblock_reserve: [0x0000000fdde000-0x0000000fddefff] flags 0x0 memblock_virt_alloc_internal+0x1a6/0x200
    [ 0.000000] memblock_reserve: [0x0000000fdbe000-0x0000000fdddfff] flags 0x0 memblock_virt_alloc_internal+0x1a6/0x200
    [ 0.000000] memblock_reserve: [0x0000000fdae000-0x0000000fdbdfff] flags 0x0 memblock_virt_alloc_internal+0x1a6/0x200

The entries for the kernel image, the initrd, the dtb, and the dtb's reserved-memory nodes come from the following code:

    static void __init csky_memblock_init(void)
    {
        unsigned long zone_size[MAX_NR_ZONES];
        unsigned long zhole_size[MAX_NR_ZONES];
        signed long size;

        /* Mark the kernel image as a reserved-type memblock region; the init
         * part of it is handed back to the kernel later in free_initmem(). */
        memblock_reserve(__pa(_stext), _end - _stext);
    #ifdef CONFIG_BLK_DEV_INITRD
        /* Mark the initrd as a reserved-type memblock region. */
        memblock_reserve(__pa(initrd_start), initrd_end - initrd_start);
    #endif

        /* Mark the dtb's own memory as reserved. */
        early_init_fdt_reserve_self();
        /* Mark the dtb's reserved-memory regions as reserved; the CMA region
         * among them is handed back to the kernel later. */
        early_init_fdt_scan_reserved_mem();
        ...
    }

The same reserved regions show up in /sys/kernel/debug/memblock/reserved.

In the listing below, the invalid regions have been dropped and some regions have been merged.

     0: 0x00000000..0x007a15ff
     1: 0x01000000..0x07ffffff
     2: 0x0fdae000..0x0fde9fff
     3: 0x0fdea180..0x0fdea1bb
     4: 0x0fdea1c0..0x0fdea237
     5: 0x0fdea240..0x0fdea243
     6: 0x0fdea260..0x0fdea263
     7: 0x0fdea280..0x0fdea2ca
     8: 0x0fdea2e0..0x0fdea32a
     9: 0x0fdea340..0x0fdea38a
    10: 0x0fdea3a0..0x0fdfefb3
    11: 0x0fdfefc0..0x0fdfefc3
    12: 0x0fdfefe0..0x0fffefff

2.2 Returning free pages to the buddy allocator

In free_all_bootmem(), the kernel hands every memblock region that is not reserved over to the buddy allocator.

    unsigned long __init free_all_bootmem(void)
    {
        unsigned long pages;

        reset_all_zones_managed_pages();

        pages = free_low_memory_core_early();
        printk("totalram_pages: %lu %luKB %s:%d ", totalram_pages, totalram_pages<<2, __func__, __LINE__);
        totalram_pages += pages;
        printk("totalram_pages: %lu %luKB %s:%d ", totalram_pages, totalram_pages<<2, __func__, __LINE__);

        return pages;
    }

    static unsigned long __init free_low_memory_core_early(void)
    {
        unsigned long count = 0;
        phys_addr_t start, end;
        u64 i;

        memblock_clear_hotplug(0, -1);

        /* Walk the memblock.reserved regions and mark the pages of each one Reserved. */
        for_each_reserved_mem_region(i, &start, &end)
            reserve_bootmem_region(start, end);

        /*
         * We need to use NUMA_NO_NODE instead of NODE_DATA(0)->node_id
         * because in some case like Node0 doesn't have RAM installed
         * low ram will be on Node1
         */
        /* Walk every range that is in memblock.memory but not in memblock.reserved,
         * clear the Reserved flag on its pages and free them. */
        for_each_free_mem_range(i, NUMA_NO_NODE, MEMBLOCK_NONE, &start, &end, NULL)
            count += __free_memory_core(start, end);

        return count;
    }

    void __meminit reserve_bootmem_region(phys_addr_t start, phys_addr_t end)
    {
        unsigned long start_pfn = PFN_DOWN(start);  /* round start down to its page frame number */
        unsigned long end_pfn = PFN_UP(end);        /* round end up, so [start, end] lies inside [start_pfn, end_pfn) */

        printk("totalram_pages: reserved 0x%08x-0x%08x 0x%08x-0x%08x %lu-%lu=%lu", start, end, PFN_PHYS(start_pfn), PFN_PHYS(end_pfn), end_pfn, start_pfn, end_pfn-start_pfn);
        for (; start_pfn < end_pfn; start_pfn++) {
            if (pfn_valid(start_pfn)) {
                struct page *page = pfn_to_page(start_pfn);

                init_reserved_page(start_pfn);

                INIT_LIST_HEAD(&page->lru);

                SetPageReserved(page);  /* mark the page Reserved */
            }
        }
    }

    #define for_each_free_mem_range(i, nid, flags, p_start, p_end, p_nid)   \
        for_each_mem_range(i, &memblock.memory, &memblock.reserved,        \
                           nid, flags, p_start, p_end, p_nid)

    static unsigned long __init __free_memory_core(phys_addr_t start, phys_addr_t end)
    {
        unsigned long start_pfn = PFN_UP(start);
        unsigned long end_pfn = min_t(unsigned long, PFN_DOWN(end), max_low_pfn);

        if (start_pfn > end_pfn)
            return 0;

        __free_pages_memory(start_pfn, end_pfn);
        printk("totalram_pages: freeed 0x%08x-0x%08x 0x%08x-0x%08x %lu-%lu=%lu", start, end, PFN_PHYS(start_pfn), PFN_PHYS(end_pfn), end_pfn, start_pfn, end_pfn-start_pfn);

        return end_pfn - start_pfn;
    }

    static void __init __free_pages_memory(unsigned long start, unsigned long end)
    {
        int order;

        while (start < end) {
            order = min(MAX_ORDER - 1UL, __ffs(start));

            while (start + (1UL << order) > end)
                order--;

            __free_pages_bootmem(pfn_to_page(start), start, order);

            start += (1UL << order);
        }
    }

    void __init __free_pages_bootmem(struct page *page, unsigned long pfn, unsigned int order)
    {
        if (early_page_uninitialised(pfn))
            return;
        return __free_pages_boot_core(page, order);
    }

    static void __init __free_pages_boot_core(struct page *page, unsigned int order)
    {
        unsigned int nr_pages = 1 << order;
        struct page *p = page;
        unsigned int loop;

        prefetchw(p);
        for (loop = 0; loop < (nr_pages - 1); loop++, p++) {
            prefetchw(p + 1);
            __ClearPageReserved(p);
            set_page_count(p, 0);
        }
        __ClearPageReserved(p);     /* clear the Reserved flag on free pages */
        set_page_count(p, 0);

        page_zone(page)->managed_pages += nr_pages;
        set_page_refcounted(page);
        __free_pages(page, order);
    }
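The two rounding directions above are what decide the page counts in the log: reserved regions are rounded outward (PFN_DOWN for the start, PFN_UP for the end), while free ranges are rounded inward (PFN_UP for the start, PFN_DOWN for the end). The following userspace sketch reproduces that arithmetic. It assumes 4 KiB pages as in the logs, leaves out the max_low_pfn clamp, and uses made-up toy_ names rather than the kernel macros.

```c
#include <stdint.h>

#define TOY_PAGE_SHIFT 12                       /* assumes 4 KiB pages */
#define TOY_PAGE_SIZE  (1ULL << TOY_PAGE_SHIFT)

/* Userspace equivalents of the kernel's PFN_DOWN() and PFN_UP(). */
static inline uint64_t toy_pfn_down(uint64_t addr)
{
    return addr >> TOY_PAGE_SHIFT;
}

static inline uint64_t toy_pfn_up(uint64_t addr)
{
    return (addr + TOY_PAGE_SIZE - 1) >> TOY_PAGE_SHIFT;
}

/* Pages marked Reserved for a reserved region: round outward, so a region
 * that covers only part of a page still reserves the whole page. */
uint64_t toy_reserved_pages(uint64_t start, uint64_t end)
{
    return toy_pfn_up(end) - toy_pfn_down(start);
}

/* Pages handed to the buddy allocator for a free range: round inward, so
 * only whole pages inside the range are freed (possibly none). */
uint64_t toy_freed_pages(uint64_t start, uint64_t end)
{
    uint64_t s = toy_pfn_up(start), e = toy_pfn_down(end);

    return s < e ? e - s : 0;
}
```

For the kernel image, toy_reserved_pages(0x00000000, 0x007a15ff) gives 1954 pages, and for the gap that follows it, toy_freed_pages(0x007a1600, 0x01000000) gives 2142 pages.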

After free_low_memory_core_early() has run, the system updates totalram_pages.

    [ 0.000000] totalram_pages: reserved 0x00000000-0x007a15ff 0x00000000-0x007a2000 1954-0=1954  <-- kernel image
    [ 0.000000] totalram_pages: reserved 0x01000000-0x07ffffff 0x01000000-0x08000000 32768-4096=28672  <-- reserved-memory regions
    [ 0.000000] totalram_pages: reserved 0x0fdae000-0x0fde9fff 0x0fdae000-0x0fdea000 65002-64942=60
    [ 0.000000] totalram_pages: reserved 0x0fdea180-0x0fdea1bb 0x0fdea000-0x0fdeb000 65003-65002=1
    [ 0.000000] totalram_pages: reserved 0x0fdea1c0-0x0fdea237 0x0fdea000-0x0fdeb000 65003-65002=1
    [ 0.000000] totalram_pages: reserved 0x0fdea240-0x0fdea243 0x0fdea000-0x0fdeb000 65003-65002=1
    [ 0.000000] totalram_pages: reserved 0x0fdea260-0x0fdea263 0x0fdea000-0x0fdeb000 65003-65002=1
    [ 0.000000] totalram_pages: reserved 0x0fdea280-0x0fdea2ca 0x0fdea000-0x0fdeb000 65003-65002=1
    [ 0.000000] totalram_pages: reserved 0x0fdea2e0-0x0fdea32a 0x0fdea000-0x0fdeb000 65003-65002=1
    [ 0.000000] totalram_pages: reserved 0x0fdea340-0x0fdea38a 0x0fdea000-0x0fdeb000 65003-65002=1
    [ 0.000000] totalram_pages: reserved 0x0fdea3a0-0x0fdfefb3 0x0fdea000-0x0fdff000 65023-65002=21
    [ 0.000000] totalram_pages: reserved 0x0fdfefc0-0x0fdfefc3 0x0fdfe000-0x0fdff000 65023-65022=1
    [ 0.000000] totalram_pages: reserved 0x0fdfefe0-0x0fffefff 0x0fdfe000-0x0ffff000 65535-65022=513  <-- reserved pages: 1954+28672+60+21+513-1 (the 21 and the 513 overlap by one page) = 31219
    [ 0.000000] totalram_pages: freeed 0x007a1600-0x01000000 0x007a2000-0x01000000 4096-1954=2142
    [ 0.000000] totalram_pages: freeed 0x08000000-0x0fdae000 0x08000000-0x0fdae000 64942-32768=32174
    [ 0.000000] totalram_pages: freeed 0x0fdea000-0x0fdea180 0x0fdea000-0x0fdea000 65002-65002=0  <-- 34316 free pages in total
    [ 0.000000] totalram_pages: 0 0KB free_all_bootmem:189
    [ 0.000000] totalram_pages: 34316 137264KB free_all_bootmem:191

From the log above: 31219 reserved pages plus 34316 free pages gives 65535 pages in total.
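Because several small reserved regions share the same page, simply adding up the per-line page counts would count some pages more than once. One way to double-check the reserved total is to count the distinct pages covered by the PFN ranges from the log. The sketch below does that for ranges sorted by start PFN; struct pfn_range and count_distinct_pages are hypothetical names used only for this illustration.

```c
#include <stddef.h>
#include <stdint.h>

struct pfn_range { uint64_t start, end; };  /* half-open: [start, end) */

/* Count the distinct pages covered by ranges sorted by start PFN.
 * Overlapping or repeated ranges are only counted once. */
uint64_t count_distinct_pages(const struct pfn_range *r, size_t n)
{
    uint64_t total = 0, covered_end = 0;    /* pages below covered_end are already counted */
    size_t i;

    for (i = 0; i < n; i++) {
        /* Skip the part of this range that earlier ranges already covered. */
        uint64_t s = r[i].start > covered_end ? r[i].start : covered_end;

        if (r[i].end > s) {
            total += r[i].end - s;
            covered_end = r[i].end;
        }
    }
    return total;
}
```

Applied to the thirteen reserved ranges in the log (0-1954, 4096-32768, 64942-65002, seven times 65002-65003, 65002-65023, 65022-65023 and 65022-65535), it yields 31219 pages.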

This matches the contents of /sys/kernel/debug/memblock/memory.

0: 0x00000000..0x0fffefff

2.3 CMA returns pages to the buddy allocator

cma_init_reserved_areas() initializes the CMA areas so that they are shared with the system's buddy allocator.

    static int __init cma_init_reserved_areas(void)
    {
        int i;

        for (i = 0; i < cma_area_count; i++) {
            int ret = cma_activate_area(&cma_areas[i]);

            if (ret)
                return ret;
        }

        return 0;
    }

    static int __init cma_activate_area(struct cma *cma)
    {
        int bitmap_size = BITS_TO_LONGS(cma_bitmap_maxno(cma)) * sizeof(long);
        unsigned long base_pfn = cma->base_pfn, pfn = base_pfn;
        /* cma->count is the number of pages in this CMA area and pageblock_order
         * is the order of a pageblock, so i is the number of pageblocks. */
        unsigned i = cma->count >> pageblock_order;
        struct zone *zone;

        cma->bitmap = kzalloc(bitmap_size, GFP_KERNEL);

        if (!cma->bitmap)
            return -ENOMEM;
        printk("totalram_pages: cma active 0x%08x-0x%08x count=%lu ", PFN_PHYS(cma->base_pfn), PFN_PHYS(cma->base_pfn + cma->count), cma->count);
        WARN_ON_ONCE(!pfn_valid(pfn));
        zone = page_zone(pfn_to_page(pfn));

        do {
            unsigned j;

            base_pfn = pfn;
            /* Validate every page in the current pageblock. MAX_ORDER is 11 here,
             * so pageblock_order is 10 and pageblock_nr_pages is 1024. */
            for (j = pageblock_nr_pages; j; --j, pfn++) {
                WARN_ON_ONCE(!pfn_valid(pfn));
                if (page_zone(pfn_to_page(pfn)) != zone)
                    goto err;
            }
            init_cma_reserved_pageblock(pfn_to_page(base_pfn)); /* set the attributes of the current pageblock */
        } while (--i);  /* iterate one pageblock at a time */

        mutex_init(&cma->lock);

    #ifdef CONFIG_CMA_DEBUGFS
        INIT_HLIST_HEAD(&cma->mem_head);
        spin_lock_init(&cma->mem_head_lock);
    #endif

        return 0;

    err:
        kfree(cma->bitmap);
        cma->count = 0;
        return -EINVAL;
    }

    /* Free whole pageblock and set its migration type to MIGRATE_CMA. */
    void __init init_cma_reserved_pageblock(struct page *page)
    {
        unsigned i = pageblock_nr_pages;
        struct page *p = page;

        do {
            __ClearPageReserved(p);     /* clear Reserved on every page in the pageblock */
            set_page_count(p, 0);
        } while (++p, --i);

        /* Mark the pageblock MIGRATE_CMA: only movable pages may be allocated from
         * it, so they can be migrated away when CMA needs the memory. That is how
         * the region is shared. */
        set_pageblock_migratetype(page, MIGRATE_CMA);

        if (pageblock_order >= MAX_ORDER) {
            i = pageblock_nr_pages;
            p = page;
            do {
                set_page_refcounted(p);
                __free_pages(p, MAX_ORDER - 1);
                p += MAX_ORDER_NR_PAGES;
            } while (i -= MAX_ORDER_NR_PAGES);
        } else {
            set_page_refcounted(page);
            __free_pages(page, pageblock_order);
        }

        adjust_managed_page_count(page, pageblock_nr_pages);    /* add the shared pages to totalram_pages */
    }

    void adjust_managed_page_count(struct page *page, long count)
    {
        spin_lock(&managed_page_count_lock);
        page_zone(page)->managed_pages += count;
        totalram_pages += count;
        printk("totalram_pages: %lu %luKB %s:%d count=%ld ", totalram_pages, totalram_pages<<2, __func__, __LINE__, count);
    #ifdef CONFIG_HIGHMEM
        if (PageHighMem(page))
            totalhigh_pages += count;
    #endif
        spin_unlock(&managed_page_count_lock);
    }

The log below shows CMA handing pages back one pageblock at a time, 1024 pages each, for 12288 pages in total.

    [ 0.000000] totalram_pages: 0 0KB free_all_bootmem:189
    [ 0.000000] totalram_pages: 34316 137264KB free_all_bootmem:191
    [ 0.041129] totalram_pages: cma active 0x05000000-0x08000000 count=12288
    [ 0.041316] totalram_pages: 35340 141360KB adjust_managed_page_count:6443 count=1024
    [ 0.041495] totalram_pages: 36364 145456KB adjust_managed_page_count:6443 count=1024
    [ 0.041672] totalram_pages: 37388 149552KB adjust_managed_page_count:6443 count=1024
    [ 0.041848] totalram_pages: 38412 153648KB adjust_managed_page_count:6443 count=1024
    [ 0.042027] totalram_pages: 39436 157744KB adjust_managed_page_count:6443 count=1024
    [ 0.042206] totalram_pages: 40460 161840KB adjust_managed_page_count:6443 count=1024
    [ 0.042384] totalram_pages: 41484 165936KB adjust_managed_page_count:6443 count=1024
    [ 0.042562] totalram_pages: 42508 170032KB adjust_managed_page_count:6443 count=1024
    [ 0.042740] totalram_pages: 43532 174128KB adjust_managed_page_count:6443 count=1024
    [ 0.042920] totalram_pages: 44556 178224KB adjust_managed_page_count:6443 count=1024
    [ 0.043096] totalram_pages: 45580 182320KB adjust_managed_page_count:6443 count=1024
    [ 0.043275] totalram_pages: 46604 186416KB adjust_managed_page_count:6443 count=1024
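The progression in this log can be reproduced with a few lines of arithmetic. The sketch below models only the counting: it derives the page count of the CMA area from its physical range and then adds one pageblock at a time. It assumes 4 KiB pages and a pageblock_order of 10 as stated above; the toy_ names are hypothetical and nothing here touches real page state.

```c
#define TOY_PAGE_SHIFT      12  /* assumes 4 KiB pages */
#define TOY_PAGEBLOCK_ORDER 10  /* assumption from the text: MAX_ORDER is 11 */
#define TOY_PAGEBLOCK_PAGES (1UL << TOY_PAGEBLOCK_ORDER)

/* Number of pages in the physical range [start, end). */
unsigned long toy_range_pages(unsigned long start, unsigned long end)
{
    return (end - start) >> TOY_PAGE_SHIFT;
}

/* Model of the counting done while a CMA area is activated: release
 * cma_pages one pageblock at a time and return the new totalram_pages. */
unsigned long toy_cma_release(unsigned long totalram_pages, unsigned long cma_pages)
{
    unsigned long blocks = cma_pages >> TOY_PAGEBLOCK_ORDER;

    while (blocks--)
        totalram_pages += TOY_PAGEBLOCK_PAGES;
    return totalram_pages;
}
```

With the range 0x05000000-0x08000000 this gives 12288 pages, i.e. 12 pageblocks, and starting from 34316 the result is 46604, the last value in the log.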

2.4 Freeing init memory

Once the kernel initcalls have finished, free_initmem() is called to release the init memory.

    void free_initmem(void)
    {
        unsigned long addr;

        addr = (unsigned long) &__init_begin;
        while (addr < (unsigned long) &__init_end) {
            ClearPageReserved(virt_to_page(addr));
            init_page_count(virt_to_page(addr));
            free_page(addr);
            totalram_pages++;
            printk("totalram_pages: %lu %luKB %s:%d ", totalram_pages, totalram_pages<<2, __func__, __LINE__);
            addr += PAGE_SIZE;
        }
        pr_info("Freeing unused kernel memory: %dk freed ", ((unsigned int)&__init_end - (unsigned int)&__init_begin) >> 10);
    }

2.5 totalram_pages summary

2.5.1 Freeing init memory

The memory occupied by init runs from __init_begin to __init_end.

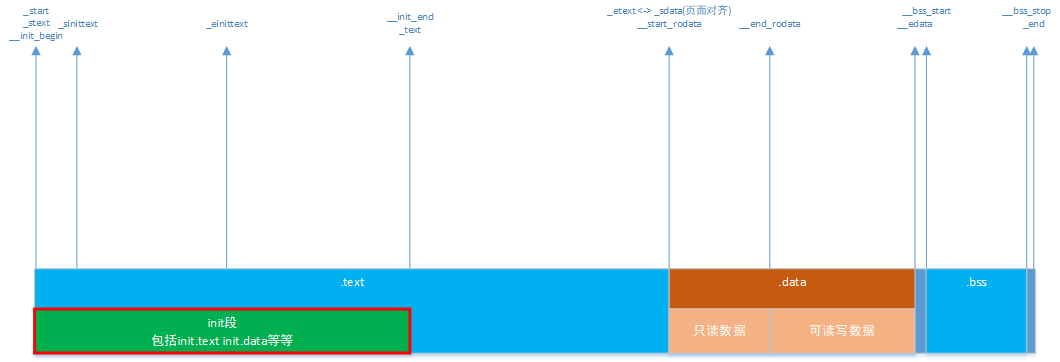

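Here is a concrete example. The symbols are ordered as follows: _start/_stext/__init_begin < _sinittext < _einittext < __init_end/_text < _etext < _sdata/__start_rodata < __end_rodata < _edata < __bss_start < __bss_stop < _end.

So the whole kernel image splits into three parts, and the init section sits inside the text section.

As free_initmem() shows, the memory released is the block from __init_begin to __init_end, i.e. the part in the red box in the figure above.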

    void __init mem_init_print_info(const char *str)
    {
        unsigned long physpages, codesize, datasize, rosize, bss_size;
        unsigned long init_code_size, init_data_size;

        physpages = get_num_physpages();
        codesize = _etext - _stext;
        datasize = _edata - _sdata;
        rosize = __end_rodata - __start_rodata;
        bss_size = __bss_stop - __bss_start;
        init_data_size = __init_end - __init_begin;
        init_code_size = _einittext - _sinittext;

    #define adj_init_size(start, end, size, pos, adj) \
        do { \
            if (start <= pos && pos < end && size > adj) \
                size -= adj; \
        } while (0)

        /* If _sinittext lies within __init_begin..__init_end and init_data_size is
         * larger than init_code_size, subtract the init_code_size bytes that start
         * at _sinittext. */
        adj_init_size(__init_begin, __init_end, init_data_size, _sinittext, init_code_size);
        /* Subtract the init code from codesize. */
        adj_init_size(_stext, _etext, codesize, _sinittext, init_code_size);
        /* __init_begin is not within _sdata.._edata, so nothing is subtracted. */
        adj_init_size(_sdata, _edata, datasize, __init_begin, init_data_size);
        /* __start_rodata is not between _stext and _etext, so nothing is subtracted. */
        adj_init_size(_stext, _etext, codesize, __start_rodata, rosize);
        /* Subtract rosize from datasize; what is left is the read-write size. */
        adj_init_size(_sdata, _edata, datasize, __start_rodata, rosize);

    #undef adj_init_size

        pr_info("Memory: %luK/%luK available (%luK kernel code, %luK rwdata, %luK rodata, %luK init, %luK bss, %luK reserved, %luK cma-reserved"
    #ifdef CONFIG_HIGHMEM
            ", %luK highmem"
    #endif
            "%s%s) ",
            nr_free_pages() << (PAGE_SHIFT - 10),
            physpages << (PAGE_SHIFT - 10),
            /* codesize is of limited use here: the init code has been subtracted
             * from it, but the init data is still included. */
            codesize >> 10, datasize >> 10, rosize >> 10,
            /* init_code_size was already subtracted from init_data_size, so the sum
             * is the size of __init_begin..__init_end. This is the part that gets freed. */
            (init_data_size + init_code_size) >> 10, bss_size >> 10,
            (physpages - totalram_pages - totalcma_pages) << (PAGE_SHIFT - 10),
            totalcma_pages << (PAGE_SHIFT - 10),
    #ifdef CONFIG_HIGHMEM
            totalhigh_pages << (PAGE_SHIFT - 10),
    #endif
            str ? ", " : "", str ? str : "");
    }
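The adj_init_size() macro is easier to follow as a plain function. The sketch below is a userspace restatement of the same condition, with a made-up name (toy_adj_size); the addresses used in the usage note are hypothetical and chosen only to show both outcomes.

```c
/* Function form of the adj_init_size() macro: if pos lies inside
 * [start, end) and size is larger than adj, return size - adj;
 * otherwise return size unchanged. */
unsigned long toy_adj_size(unsigned long start, unsigned long end,
                           unsigned long size, unsigned long pos,
                           unsigned long adj)
{
    if (start <= pos && pos < end && size > adj)
        size -= adj;
    return size;
}
```

With a hypothetical init section at 0x1000-0x5000 that contains 0x1000 bytes of init code starting at 0x2000, toy_adj_size(0x1000, 0x5000, 0x4000, 0x2000, 0x1000) returns 0x3000. Adding the init code size back gives 0x4000, the full __init_begin to __init_end span, which is why the "init" figure in the Memory: line equals the amount that free_initmem() releases.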

Pages are freed one at a time; 3188KB is returned in total, i.e. 797 pages.

[ 0.000000] Memory: 137264K/262140K available (6304K kernel code, 225K rwdata, 892K rodata, 3188K init, 242K bss, 75724K reserved, 49152K cma-reserved, 0K highmem)

The final totalram_pages should therefore be 34316+12288+797=47401 pages, i.e. 189604KB.
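The three contributions can be put into one small function as a sanity check. This is only the arithmetic from this section, assuming 4 KiB pages and an init size that is a whole number of pages; toy_memtotal_kb is a hypothetical name.

```c
#define TOY_PAGE_KB 4UL     /* assumes 4 KiB pages */

/* Expected MemTotal in KB: pages freed by free_all_bootmem(), plus pages
 * released by CMA activation, plus the init memory (given in KB) that
 * free_initmem() returns. */
unsigned long toy_memtotal_kb(unsigned long bootmem_pages,
                              unsigned long cma_pages,
                              unsigned long init_kb)
{
    unsigned long init_pages = init_kb / TOY_PAGE_KB;

    return (bootmem_pages + cma_pages + init_pages) * TOY_PAGE_KB;
}
```

toy_memtotal_kb(34316, 12288, 3188) evaluates to 189604, the value derived above.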

Checking /proc/meminfo from the shell confirms it.

    MemTotal: 189604 kB
    MemFree: 174816 kB
    MemAvailable: 179872 kB
    Buffers: 0 kB
    Cached: 8440 kB
    ...

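So the memory black hole outside MemTotal mainly consists of:

- the kernel image, minus the init sections

- the memory occupied by the dtb

- the dtb's reserved-memory regions, minus the CMA part

- memory allocated for the system's own shared use (still to be analyzed)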

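2.6 Walking all pages to check PG_reserved

Another approach is to walk the flags of every page in the kernel and split the pages into reserved and free regions.

The free regions should add up to totalram_pages; the reserved ones hold the kernel image, page tables, symbol tables, and similar data.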

    diff --git a/init/main.c b/init/main.c
    index 8b52d9a..78e31e1 100644
    --- a/init/main.c
    +++ b/init/main.c
    @@ -938,6 +938,35 @@ static inline void mark_readonly(void)
     }
     #endif
     
    +void interate_pages_reserved(void)
    +{
    +    unsigned int i = 0, total_pages = node_present_pages(0), is_reserved = 0, region_start = 0, region_end = 0, total_reserved = 0, total_free = 0;
    +    struct page *page;
    +
    +    printk("Reserved vs free of %u pages. pagenum phyaddr type ", total_pages);
    +    for(i = 0; i < total_pages; i++)
    +    {
    +        page = pfn_to_page(i);
    +        if(i == 0)
    +        {
    +            is_reserved = test_bit(PG_reserved, &(page->flags));
    +        }
    +        else if(is_reserved != test_bit(PG_reserved, &(page->flags)))
    +        {
    +            region_end = i;
    +            printk(" % 5u-% 5u 0x%08x-0x%08x %s ", region_start, region_end-1, region_start<<12, (region_end<<12)-1, is_reserved?"reserved":"free");
    +            if(is_reserved)
    +                total_reserved += region_end - region_start;
    +            else
    +                total_free += region_end - region_start;
    +            is_reserved = test_bit(PG_reserved, &(page->flags));
    +            region_start = i;
    +        }
    +    }
    +    region_end = i;
    +    printk(" % 5u-% 5u 0x%08x-0x%08x %s ", region_start, region_end-1, region_start<<12, (region_end<<12)-1, is_reserved?"reserved":"free");
    +    printk("Summary: %u page, %u KB reserved; %u page, %u KB free. ", total_reserved, total_reserved<<2, total_free, total_free<<2);
    +}
     static int __ref kernel_init(void *unused)
     {
         int ret;
    @@ -948,6 +977,7 @@ static int __ref kernel_init(void *unused)
         free_initmem();
     #ifdef CONFIG_PERF_TIMER
         printk(KERN_ALERT "PERF % 9u: kernel init done. ", perf_timer_read_us());
    +    interate_pages_reserved();
     #endif
         mark_readonly();
         system_state = SYSTEM_RUNNING;

interate_pages_reserved() prints the following:

    [ 0.652687] Reserved vs free of 65535 pages.
    [ 0.652687] pagenum phyaddr type
    [ 0.652718]     0- 1197 0x00000000-0x004adfff free      <-- dmesg reports 4792KB of init in the kernel image, i.e. 1198 pages
    [ 0.652762]  1198- 2353 0x004ae000-0x00931fff reserved  <-- reserved1
    [ 0.652826]  2354- 4095 0x00932000-0x00ffffff free
    [ 0.653227]  4096-16383 0x01000000-0x03ffffff reserved  <-- reserved2
    [ 0.654785] 16384-64941 0x04000000-0x0fdadfff free
    [ 0.654811] 64942-65534 0x0fdae000-0x0fffefff reserved  <-- reserved3
    [ 0.654817] Summary: 13444 page, 53776 KB reserved; 51498 page, 205992 KB free.  <-- the patch never adds the final run (593 pages) to the totals, so reserved is really 13444+593=14037 pages; 14037+51498=65535
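The run-based scan in the patch stops accumulating one run early: the last run is printed but not added to either total, which is why the reserved summary above comes out 593 pages short. As a userspace illustration of the same scan with the final run included, here is a sketch over a plain flag array; struct page_tally and tally_pages are hypothetical names, and flags[i] != 0 stands in for PG_reserved being set on page i.

```c
#include <stddef.h>

struct page_tally { size_t reserved, free; };

/* Walk the flag array run by run, as the patch does, and add every run
 * (including the last one) to the matching total. */
struct page_tally tally_pages(const unsigned char *flags, size_t n)
{
    struct page_tally t = {0, 0};
    size_t i, run_start = 0;

    for (i = 1; i <= n; i++) {
        /* A run ends at a flag change or at the end of the array. */
        if (i == n || !flags[i] != !flags[run_start]) {
            if (flags[run_start])
                t.reserved += i - run_start;
            else
                t.free += i - run_start;
            run_start = i;
        }
    }
    return t;
}
```

Marking pages 1198-2353, 4096-16383 and 64942-65534 as reserved in a 65535-entry array gives 14037 reserved and 51498 free pages, which add up to the full 65535.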

dmesg shows the composition of the kernel image:

[ 0.000000] Memory: 152048K/262140K available (7909K kernel code, 221K rwdata, 892K rodata, 4792K init, 242K bss, 60940K reserved, 49152K cma-reserved, 0K highmem)

Now check meminfo, reserved, and memory in turn:

    # cat /proc/meminfo
    MemTotal: 205992 kB
    MemFree: 187404 kB
    MemAvailable: 196056 kB
    ...
    # cat /sys/kernel/debug/memblock/reserved
     0: 0x00000000..0x009315ff  <-- the init part has been returned to the system; the rest matches reserved1
     1: 0x01000000..0x03ffffff  <-- matches reserved2
     2: 0x05000000..0x07ffffff  <-- CMA region, handed back to the system
     3: 0x0fdae000..0x0fde9fff  <-- matches reserved3
     4: 0x0fdea360..0x0fdea39b
     5: 0x0fdea3a0..0x0fdea417
     6: 0x0fdea420..0x0fdea423
     7: 0x0fdea440..0x0fdea443
     8: 0x0fdea460..0x0fdea463
     9: 0x0fdea480..0x0fdea4bb
    10: 0x0fdea4c0..0x0fdea4fb
    11: 0x0fdea500..0x0fdea53b
    12: 0x0fdea540..0x0fdea543
    13: 0x0fdea54c..0x0fdfefd3
    14: 0x0fdfefe0..0x0fffefff

    # cat /sys/kernel/debug/memblock/memory
     0: 0x00000000..0x0fffefff  <-- matches the total RAM range

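3. What is this good for?

The sections above trace where totalram_pages comes from, and which parts outside it take up how much memory.

From the standpoint of overall memory use, we obviously want totalram_pages to be as large as possible.

That means trimming the kernel image, the dtb, and so on. Drivers should keep exclusively reserved regions to a minimum and prefer a shared scheme such as CMA.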