1. Basic call flow of the GPU decoder

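To decode a video stream on the GPU, you first need to understand CUDA's own decoding flow. Both use the same underlying implementation and differ only in the upper-level calls.

So what does CUDA's decoding flow look like?

Download Video_Codec_SDK_8.0.14 from https://developer.nvidia.com/nvidia-video-codec-sdk

Unzip it: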

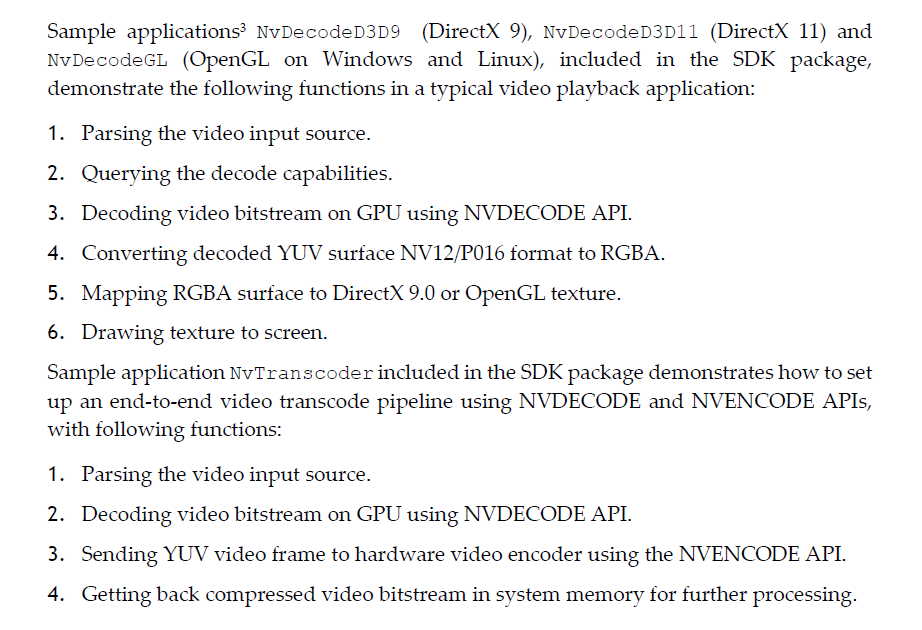

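The Samples folder contains several small examples for different scenarios. If you are not sure which one to use as a reference, read the developer documentation: the doc folder contains NVENC_VideoEncoder_API_ProgGuide.pdf.

Since I am decoding a video stream, the NvTranscoder demo is the best one to look at.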

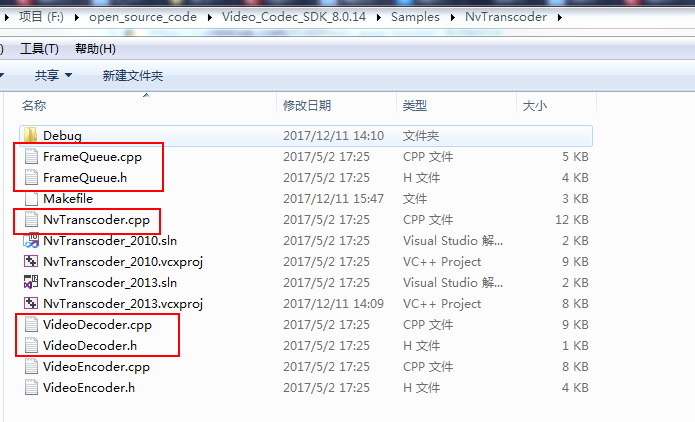

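In NvTranscoder, focus on the files in the red box:

NvTranscoder.cpp implements the main function.

VideoDecoder.cpp implements decoding.

FrameQueue.cpp implements the queue that receives the frames delivered by the GPU decode callback.

Let's start with the main code of NvTranscoder.cpp (it is fairly verbose; read all of it if you are interested):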

```cpp
int main(int argc, char* argv[])
{
#if defined(WIN32) || defined(_WIN32) || defined(WIN64) || defined(_WIN64)
    typedef HMODULE CUDADRIVER;
#else
    typedef void *CUDADRIVER;
#endif
    CUDADRIVER hHandleDriver = 0;
    __cu(cuInit(0, __CUDA_API_VERSION, hHandleDriver));
    __cu(cuvidInit(0));

    EncodeConfig encodeConfig = { 0 };
    encodeConfig.endFrameIdx = INT_MAX;
    encodeConfig.bitrate = 5000000;
    encodeConfig.rcMode = NV_ENC_PARAMS_RC_CONSTQP;
    encodeConfig.gopLength = NVENC_INFINITE_GOPLENGTH;
    encodeConfig.codec = NV_ENC_H264;
    encodeConfig.fps = 0;
    encodeConfig.qp = 28;
    encodeConfig.i_quant_factor = DEFAULT_I_QFACTOR;
    encodeConfig.b_quant_factor = DEFAULT_B_QFACTOR;
    encodeConfig.i_quant_offset = DEFAULT_I_QOFFSET;
    encodeConfig.b_quant_offset = DEFAULT_B_QOFFSET;
    encodeConfig.presetGUID = NV_ENC_PRESET_DEFAULT_GUID;
    encodeConfig.pictureStruct = NV_ENC_PIC_STRUCT_FRAME;

    NVENCSTATUS nvStatus = CNvHWEncoder::ParseArguments(&encodeConfig, argc, argv);
    if (nvStatus != NV_ENC_SUCCESS)
    {
        PrintHelp();
        return 1;
    }

    if (!encodeConfig.inputFileName || !encodeConfig.outputFileName)
    {
        PrintHelp();
        return 1;
    }

    encodeConfig.fOutput = fopen(encodeConfig.outputFileName, "wb");
    if (encodeConfig.fOutput == NULL)
    {
        PRINTERR("Failed to create \"%s\"\n", encodeConfig.outputFileName);
        return 1;
    }

    //init cuda
    CUcontext cudaCtx;
    CUdevice device;
    __cu(cuDeviceGet(&device, encodeConfig.deviceID));
    __cu(cuCtxCreate(&cudaCtx, CU_CTX_SCHED_AUTO, device));

    CUcontext curCtx;
    CUvideoctxlock ctxLock;
    __cu(cuCtxPopCurrent(&curCtx));
    __cu(cuvidCtxLockCreate(&ctxLock, curCtx));

    CudaDecoder* pDecoder = new CudaDecoder;
    FrameQueue* pFrameQueue = new CUVIDFrameQueue(ctxLock);
    pDecoder->InitVideoDecoder(encodeConfig.inputFileName, ctxLock, pFrameQueue, encodeConfig.width, encodeConfig.height);

    int decodedW, decodedH, decodedFRN, decodedFRD, isProgressive;
    pDecoder->GetCodecParam(&decodedW, &decodedH, &decodedFRN, &decodedFRD, &isProgressive);
    if (decodedFRN <= 0 || decodedFRD <= 0) {
        decodedFRN = 30;
        decodedFRD = 1;
    }

    if (encodeConfig.width <= 0 || encodeConfig.height <= 0) {
        encodeConfig.width = decodedW;
        encodeConfig.height = decodedH;
    }

    float fpsRatio = 1.f;
    if (encodeConfig.fps <= 0) {
        encodeConfig.fps = decodedFRN / decodedFRD;
    }
    else {
        fpsRatio = (float)encodeConfig.fps * decodedFRD / decodedFRN;
    }

    encodeConfig.pictureStruct = (isProgressive ? NV_ENC_PIC_STRUCT_FRAME : 0);
    pFrameQueue->init(encodeConfig.width, encodeConfig.height);

    VideoEncoder* pEncoder = new VideoEncoder(ctxLock);
    assert(pEncoder->GetHWEncoder());

    nvStatus = pEncoder->GetHWEncoder()->Initialize(cudaCtx, NV_ENC_DEVICE_TYPE_CUDA);
    if (nvStatus != NV_ENC_SUCCESS)
        return 1;

    encodeConfig.presetGUID = pEncoder->GetHWEncoder()->GetPresetGUID(encodeConfig.encoderPreset, encodeConfig.codec);

    printf("Encoding input : \"%s\"\n", encodeConfig.inputFileName);
    printf(" output : \"%s\"\n", encodeConfig.outputFileName);
    printf(" codec : \"%s\"\n", encodeConfig.codec == NV_ENC_HEVC ? "HEVC" : "H264");
    printf(" size : %dx%d\n", encodeConfig.width, encodeConfig.height);
    printf(" bitrate : %d bits/sec\n", encodeConfig.bitrate);
    printf(" vbvMaxBitrate : %d bits/sec\n", encodeConfig.vbvMaxBitrate);
    printf(" vbvSize : %d bits\n", encodeConfig.vbvSize);
    printf(" fps : %d frames/sec\n", encodeConfig.fps);
    printf(" rcMode : %s\n", encodeConfig.rcMode == NV_ENC_PARAMS_RC_CONSTQP ? "CONSTQP" :
                             encodeConfig.rcMode == NV_ENC_PARAMS_RC_VBR ? "VBR" :
                             encodeConfig.rcMode == NV_ENC_PARAMS_RC_CBR ? "CBR" :
                             encodeConfig.rcMode == NV_ENC_PARAMS_RC_VBR_MINQP ? "VBR MINQP (deprecated)" :
                             encodeConfig.rcMode == NV_ENC_PARAMS_RC_CBR_LOWDELAY_HQ ? "CBR_LOWDELAY_HQ" :
                             encodeConfig.rcMode == NV_ENC_PARAMS_RC_CBR_HQ ? "CBR_HQ" :
                             encodeConfig.rcMode == NV_ENC_PARAMS_RC_VBR_HQ ? "VBR_HQ" : "UNKNOWN");
    if (encodeConfig.gopLength == NVENC_INFINITE_GOPLENGTH)
        printf(" goplength : INFINITE GOP\n");
    else
        printf(" goplength : %d\n", encodeConfig.gopLength);
    printf(" B frames : %d\n", encodeConfig.numB);
    printf(" QP : %d\n", encodeConfig.qp);
    printf(" preset : %s\n", (encodeConfig.presetGUID == NV_ENC_PRESET_LOW_LATENCY_HQ_GUID) ? "LOW_LATENCY_HQ" :
                             (encodeConfig.presetGUID == NV_ENC_PRESET_LOW_LATENCY_HP_GUID) ? "LOW_LATENCY_HP" :
                             (encodeConfig.presetGUID == NV_ENC_PRESET_HQ_GUID) ? "HQ_PRESET" :
                             (encodeConfig.presetGUID == NV_ENC_PRESET_HP_GUID) ? "HP_PRESET" :
                             (encodeConfig.presetGUID == NV_ENC_PRESET_LOSSLESS_HP_GUID) ? "LOSSLESS_HP" : "LOW_LATENCY_DEFAULT");
    printf("\n");

    nvStatus = pEncoder->GetHWEncoder()->CreateEncoder(&encodeConfig);
    if (nvStatus != NV_ENC_SUCCESS)
        return 1;

    nvStatus = pEncoder->AllocateIOBuffers(&encodeConfig);
    if (nvStatus != NV_ENC_SUCCESS)
        return 1;

    unsigned long long lStart, lEnd, lFreq;
    NvQueryPerformanceCounter(&lStart);

    //start decoding thread
#ifdef _WIN32
    HANDLE decodeThread = CreateThread(NULL, 0, DecodeProc, (LPVOID)pDecoder, 0, NULL);
#else
    pthread_t pid;
    pthread_create(&pid, NULL, DecodeProc, (void*)pDecoder);
#endif

    //start encoding thread
    int frmProcessed = 0;
    int frmActual = 0;
    while (!(pFrameQueue->isEndOfDecode() && pFrameQueue->isEmpty()))
    {
        CUVIDPARSERDISPINFO pInfo;
        if (pFrameQueue->dequeue(&pInfo)) {
            CUdeviceptr dMappedFrame = 0;
            unsigned int pitch;
            CUVIDPROCPARAMS oVPP = { 0 };
            oVPP.progressive_frame = pInfo.progressive_frame;
            oVPP.second_field = 0;
            oVPP.top_field_first = pInfo.top_field_first;
            oVPP.unpaired_field = (pInfo.progressive_frame == 1 || pInfo.repeat_first_field <= 1);

            cuvidMapVideoFrame(pDecoder->GetDecoder(), pInfo.picture_index, &dMappedFrame, &pitch, &oVPP);

            EncodeFrameConfig stEncodeConfig = { 0 };
            NV_ENC_PIC_STRUCT picType = (pInfo.progressive_frame || pInfo.repeat_first_field >= 2 ? NV_ENC_PIC_STRUCT_FRAME :
                (pInfo.top_field_first ? NV_ENC_PIC_STRUCT_FIELD_TOP_BOTTOM : NV_ENC_PIC_STRUCT_FIELD_BOTTOM_TOP));

            stEncodeConfig.dptr = dMappedFrame;
            stEncodeConfig.pitch = pitch;
            stEncodeConfig.width = encodeConfig.width;
            stEncodeConfig.height = encodeConfig.height;

            int dropOrDuplicate = MatchFPS(fpsRatio, frmProcessed, frmActual);
            for (int i = 0; i <= dropOrDuplicate; i++) {
                pEncoder->EncodeFrame(&stEncodeConfig, picType);
                frmActual++;
            }
            frmProcessed++;

            cuvidUnmapVideoFrame(pDecoder->GetDecoder(), dMappedFrame);
            pFrameQueue->releaseFrame(&pInfo);
        }
    }

    pEncoder->EncodeFrame(NULL, NV_ENC_PIC_STRUCT_FRAME, true);

#ifdef _WIN32
    WaitForSingleObject(decodeThread, INFINITE);
#else
    pthread_join(pid, NULL);
#endif

    if (pEncoder->GetEncodedFrames() > 0)
    {
        NvQueryPerformanceCounter(&lEnd);
        NvQueryPerformanceFrequency(&lFreq);
        double elapsedTime = (double)(lEnd - lStart) / (double)lFreq;
        printf("Total time: %fms, Decoded Frames: %d, Encoded Frames: %d, Average FPS: %f\n",
               elapsedTime * 1000, pDecoder->m_decodedFrames, pEncoder->GetEncodedFrames(),
               (float)pEncoder->GetEncodedFrames() / elapsedTime);
    }

    pEncoder->Deinitialize();
    delete pDecoder;
    delete pEncoder;
    delete pFrameQueue;

    cuvidCtxLockDestroy(ctxLock);
    __cu(cuCtxDestroy(cudaCtx));

    return 0;
}
```
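The main loop above depends on a producer/consumer contract. The decode thread enqueues displayable frames (through the display callback), and the main thread keeps dequeuing until `isEndOfDecode() && isEmpty()` holds. Below is a minimal sketch of that contract using only the standard library. `SimpleFrameQueue`, `DispInfo` and `drainAll` are hypothetical names, and this is not the SDK's `CUVIDFrameQueue`. The real queue also tracks which decode surfaces are still in use.

```cpp
#include <chrono>
#include <condition_variable>
#include <deque>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical stand-in for CUVIDPARSERDISPINFO: only the surface index is kept.
struct DispInfo {
    int picture_index;
};

// Sketch of the producer/consumer contract the main loop relies on.
class SimpleFrameQueue {
public:
    // Producer side (decode thread): called from the display callback.
    void enqueue(const DispInfo& info) {
        std::lock_guard<std::mutex> lock(m_);
        frames_.push_back(info);
        cv_.notify_all();
    }
    // Producer side: signals that no more frames will arrive.
    void endDecode() {
        std::lock_guard<std::mutex> lock(m_);
        endOfDecode_ = true;
        cv_.notify_all();
    }
    // Consumer side (main thread): returns false if no frame was available.
    bool dequeue(DispInfo* out) {
        std::unique_lock<std::mutex> lock(m_);
        // Wait briefly for a frame or end-of-decode instead of busy-spinning.
        cv_.wait_for(lock, std::chrono::milliseconds(10),
                     [this] { return !frames_.empty() || endOfDecode_; });
        if (frames_.empty()) return false;
        *out = frames_.front();
        frames_.pop_front();
        return true;
    }
    bool isEndOfDecode() { std::lock_guard<std::mutex> lock(m_); return endOfDecode_; }
    bool isEmpty() { std::lock_guard<std::mutex> lock(m_); return frames_.empty(); }

private:
    std::mutex m_;
    std::condition_variable cv_;
    std::deque<DispInfo> frames_;
    bool endOfDecode_ = false;
};

// Usage: one producer thread and the same loop condition as main() above.
std::vector<int> drainAll(int frameCount) {
    SimpleFrameQueue q;
    std::thread producer([&q, frameCount] {
        for (int i = 0; i < frameCount; ++i) q.enqueue(DispInfo{i});
        q.endDecode();
    });
    std::vector<int> got;
    while (!(q.isEndOfDecode() && q.isEmpty())) {
        DispInfo info;
        if (q.dequeue(&info)) got.push_back(info.picture_index);
    }
    producer.join();
    return got;
}
```

The loop condition is safe because `endDecode()` runs only after the last `enqueue()`. Once the end flag is seen and the queue is empty, nothing else can arrive.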

Below is my simplified version of the main flow:

```cpp
int main(int argc, char* argv[])
{
#if defined(WIN32) || defined(_WIN32) || defined(WIN64) || defined(_WIN64)
    typedef HMODULE CUDADRIVER;
#else
    typedef void *CUDADRIVER;
#endif
    CUDADRIVER hHandleDriver = 0;
    __cu(cuInit(0, __CUDA_API_VERSION, hHandleDriver)); // initialize the CUDA environment (required)
    __cu(cuvidInit(0));                                  // initialize the decoder library

    // deviceID, encodeConfig (input path, width, height) and pa are set up elsewhere in my program
    //init cuda
    CUcontext cudaCtx;
    CUdevice device;
    // get a handle to the GPU; deviceID is the GPU index: 0 with one card, 0 or 1 with two cards
    __cu(cuDeviceGet(&device, deviceID));
    // create a CUDA context on that GPU (it becomes current on this thread)
    __cu(cuCtxCreate(&cudaCtx, CU_CTX_SCHED_AUTO, device));

    CUcontext curCtx;
    CUvideoctxlock ctxLock;
    // pop the context just created off this CPU thread's context stack
    __cu(cuCtxPopCurrent(&curCtx));
    // create a context lock so the decode thread and the main thread can share the context
    __cu(cuvidCtxLockCreate(&ctxLock, curCtx));

    CudaDecoder* pDecoder = new CudaDecoder;                // create the CUDA decoder object (key part)
    FrameQueue* pFrameQueue = new CUVIDFrameQueue(ctxLock); // create the decoded-output queue
    // initialize the decoder (key part)
    pDecoder->InitVideoDecoder(encodeConfig.inputFileName, ctxLock, pFrameQueue, encodeConfig.width, encodeConfig.height);
    pFrameQueue->init(encodeConfig.width, encodeConfig.height); // initialize the output queue

    // start the decoding thread
#ifdef _WIN32
    HANDLE decodeThread = CreateThread(NULL, 0, DecodeProc, (LPVOID)pDecoder, 0, NULL);
#else
    pthread_t pid;
    pthread_create(&pid, NULL, DecodeProc, (void*)pDecoder);
#endif

    // pull decoded frames out of the output queue
    while (!(pFrameQueue->isEndOfDecode() && pFrameQueue->isEmpty()))
    {
        CUVIDPARSERDISPINFO pInfo;
        if (pFrameQueue->dequeue(&pInfo)) {
            CUdeviceptr dMappedFrame = 0;
            unsigned int pitch;
            CUVIDPROCPARAMS oVPP = { 0 };
            oVPP.progressive_frame = pInfo.progressive_frame;
            oVPP.second_field = 0;
            oVPP.top_field_first = pInfo.top_field_first;
            oVPP.unpaired_field = (pInfo.progressive_frame == 1 || pInfo.repeat_first_field <= 1);

            // the context was popped above, so make it current on this thread for the calls below
            __cu(cuCtxPushCurrent(cudaCtx));
            // map the decoded frame: dMappedFrame is its GPU address, pitch is the row stride in bytes
            cuvidMapVideoFrame(pDecoder->GetDecoder(), pInfo.picture_index, &dMappedFrame, &pitch, &oVPP);
            // the decoded data is still in GPU memory; NV12 is 12 bpp: a Y plane plus a half-height UV plane
            unsigned int nv12_size = pitch * (pDecoder->iHeight + pDecoder->iHeight / 2);
            // copy from GPU memory to pa->pFrameBuffer (a CPU memory buffer)
            CUresult oResult = cuMemcpyDtoH(pa->pFrameBuffer, dMappedFrame, nv12_size);
            if (oResult != CUDA_SUCCESS)
                fprintf(stderr, "cuMemcpyDtoH failed, error code: %d\n", oResult);
            // unmap the frame so the decoder can reuse the surface
            cuvidUnmapVideoFrame(pDecoder->GetDecoder(), dMappedFrame);
            __cu(cuCtxPopCurrent(NULL));
            pFrameQueue->releaseFrame(&pInfo);
        }
    }

#ifdef _WIN32
    WaitForSingleObject(decodeThread, INFINITE);
#else
    pthread_join(pid, NULL);
#endif

    delete pDecoder;
    delete pFrameQueue;

    cuvidCtxLockDestroy(ctxLock);
    __cu(cuCtxDestroy(cudaCtx));

    return 0;
}
```
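The `nv12_size` computation copies pitch-padded rows. A decoded NV12 surface is a full-height Y plane followed by a half-height plane of interleaved U/V bytes. Each row occupies `pitch` bytes, of which only `width` are pixel data. The sketch below uses only the standard library and shows that size math. It also shows how to strip the padding into a tightly packed `width*height*3/2` buffer. A host array stands in for the frame after `cuMemcpyDtoH`, which is an assumption; on the GPU path, a 2D copy could drop the padding directly. The function names are hypothetical, and even frame dimensions are assumed, as usual for 4:2:0.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Bytes occupied by a pitch-padded NV12 frame: Y plane (height rows) + UV plane (height/2 rows).
std::size_t nv12PitchedSize(std::size_t pitch, std::size_t height) {
    return pitch * (height + height / 2);
}

// Copy a pitch-padded NV12 frame (already in host memory) into a tightly packed
// buffer of width*height*3/2 bytes, dropping the padding at the end of each row.
std::vector<std::uint8_t> packNv12(const std::uint8_t* src, std::size_t pitch,
                                   std::size_t width, std::size_t height) {
    std::vector<std::uint8_t> dst(width * height * 3 / 2);
    const std::size_t rows = height + height / 2;  // Y rows, then interleaved UV rows
    for (std::size_t row = 0; row < rows; ++row) {
        // a UV row holds width/2 (U,V) pairs, i.e. width bytes, same as a Y row
        std::memcpy(&dst[row * width], src + row * pitch, width);
    }
    return dst;
}
```

For example, with `pitch = 8`, `width = 6`, `height = 4`, the padded frame is 8 × 6 = 48 bytes and the packed frame is 36 bytes.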

The decoder's call sequence is the part that deserves the closest attention.

Creating the decoder object:

```cpp
CudaDecoder::CudaDecoder() : m_videoSource(NULL), m_videoParser(NULL), m_videoDecoder(NULL),
    m_ctxLock(NULL), m_decodedFrames(0), m_bFinish(false)
{
}
```

Initializing the decoder creates three objects: a video source, a decoder, and a parser.

```cpp
// Initialize the GPU decoder
void CudaDecoder::InitVideoDecoder(const char* videoPath, CUvideoctxlock ctxLock, FrameQueue* pFrameQueue,
    int targetWidth, int targetHeight)
{
    assert(videoPath); // path of the input stream
    assert(ctxLock);
    assert(pFrameQueue);
    m_pFrameQueue = pFrameQueue;

    CUresult oResult;
    m_ctxLock = ctxLock;

    //init video source
    CUVIDSOURCEPARAMS oVideoSourceParameters;
    memset(&oVideoSourceParameters, 0, sizeof(CUVIDSOURCEPARAMS));
    oVideoSourceParameters.pUserData = this;
    oVideoSourceParameters.pfnVideoDataHandler = HandleVideoData;
    oVideoSourceParameters.pfnAudioDataHandler = NULL;

    // Create the video source. It delivers packets through the HandleVideoData callback,
    // where m_videoParser processes them. It only supports files.
    oResult = cuvidCreateVideoSource(&m_videoSource, videoPath, &oVideoSourceParameters);
    if (oResult != CUDA_SUCCESS) {
        fprintf(stderr, "cuvidCreateVideoSource failed\n");
        fprintf(stderr, "Please check if the path exists, or the video is a valid H264 file\n");
        exit(-1);
    }

    //init video decoder
    CUVIDEOFORMAT oFormat;
    cuvidGetSourceVideoFormat(m_videoSource, &oFormat, 0);

    if (oFormat.codec != cudaVideoCodec_H264 && oFormat.codec != cudaVideoCodec_HEVC) {
        fprintf(stderr, "The sample only supports H264/HEVC input video!\n");
        exit(-1);
    }

    if (oFormat.chroma_format != cudaVideoChromaFormat_420) {
        fprintf(stderr, "The sample only supports 4:2:0 chroma!\n");
        exit(-1);
    }

    CUVIDDECODECREATEINFO oVideoDecodeCreateInfo;
    memset(&oVideoDecodeCreateInfo, 0, sizeof(CUVIDDECODECREATEINFO));
    oVideoDecodeCreateInfo.CodecType = oFormat.codec;
    oVideoDecodeCreateInfo.ulWidth = oFormat.coded_width;
    oVideoDecodeCreateInfo.ulHeight = oFormat.coded_height;
    oVideoDecodeCreateInfo.ulNumDecodeSurfaces = 8;
    if ((oVideoDecodeCreateInfo.CodecType == cudaVideoCodec_H264) ||
        (oVideoDecodeCreateInfo.CodecType == cudaVideoCodec_H264_SVC) ||
        (oVideoDecodeCreateInfo.CodecType == cudaVideoCodec_H264_MVC))
    {
        // assume worst-case of 20 decode surfaces for H264
        oVideoDecodeCreateInfo.ulNumDecodeSurfaces = 20;
    }
    if (oVideoDecodeCreateInfo.CodecType == cudaVideoCodec_VP9)
        oVideoDecodeCreateInfo.ulNumDecodeSurfaces = 12;
    if (oVideoDecodeCreateInfo.CodecType == cudaVideoCodec_HEVC)
    {
        // ref HEVC spec: A.4.1 General tier and level limits
        int MaxLumaPS = 35651584; // currently assuming level 6.2, 8Kx4K
        int MaxDpbPicBuf = 6;
        int PicSizeInSamplesY = oVideoDecodeCreateInfo.ulWidth * oVideoDecodeCreateInfo.ulHeight;
        int MaxDpbSize;
        if (PicSizeInSamplesY <= (MaxLumaPS >> 2))
            MaxDpbSize = MaxDpbPicBuf * 4;
        else if (PicSizeInSamplesY <= (MaxLumaPS >> 1))
            MaxDpbSize = MaxDpbPicBuf * 2;
        else if (PicSizeInSamplesY <= ((3 * MaxLumaPS) >> 2))
            MaxDpbSize = (MaxDpbPicBuf * 4) / 3;
        else
            MaxDpbSize = MaxDpbPicBuf;
        MaxDpbSize = MaxDpbSize < 16 ? MaxDpbSize : 16;
        oVideoDecodeCreateInfo.ulNumDecodeSurfaces = MaxDpbSize + 4;
    }
    oVideoDecodeCreateInfo.ChromaFormat = oFormat.chroma_format;
    oVideoDecodeCreateInfo.OutputFormat = cudaVideoSurfaceFormat_NV12; // output format: NV12
    oVideoDecodeCreateInfo.DeinterlaceMode = cudaVideoDeinterlaceMode_Weave;

    if (targetWidth <= 0 || targetHeight <= 0) {
        oVideoDecodeCreateInfo.ulTargetWidth = oFormat.display_area.right - oFormat.display_area.left;
        oVideoDecodeCreateInfo.ulTargetHeight = oFormat.display_area.bottom - oFormat.display_area.top;
    }
    else {
        oVideoDecodeCreateInfo.ulTargetWidth = targetWidth;   // output width and height
        oVideoDecodeCreateInfo.ulTargetHeight = targetHeight;
    }
    oVideoDecodeCreateInfo.display_area.left = 0;
    oVideoDecodeCreateInfo.display_area.right = (short)oVideoDecodeCreateInfo.ulTargetWidth;
    oVideoDecodeCreateInfo.display_area.top = 0;
    oVideoDecodeCreateInfo.display_area.bottom = (short)oVideoDecodeCreateInfo.ulTargetHeight;

    oVideoDecodeCreateInfo.ulNumOutputSurfaces = 2;
    oVideoDecodeCreateInfo.ulCreationFlags = cudaVideoCreate_PreferCUVID;
    oVideoDecodeCreateInfo.vidLock = m_ctxLock;

    oResult = cuvidCreateDecoder(&m_videoDecoder, &oVideoDecodeCreateInfo); // create the decoder
    if (oResult != CUDA_SUCCESS) {
        fprintf(stderr, "cuvidCreateDecoder() failed, error code: %d\n", oResult);
        exit(-1);
    }

    m_oVideoDecodeCreateInfo = oVideoDecodeCreateInfo;

    //init video parser
    CUVIDPARSERPARAMS oVideoParserParameters;
    memset(&oVideoParserParameters, 0, sizeof(CUVIDPARSERPARAMS));
    oVideoParserParameters.CodecType = oVideoDecodeCreateInfo.CodecType;
    oVideoParserParameters.ulMaxNumDecodeSurfaces = oVideoDecodeCreateInfo.ulNumDecodeSurfaces;
    oVideoParserParameters.ulMaxDisplayDelay = 1;
    oVideoParserParameters.pUserData = this;
    oVideoParserParameters.pfnSequenceCallback = HandleVideoSequence; // called with the stream format (sequence header)
    oVideoParserParameters.pfnDecodePicture = HandlePictureDecode;    // called when a picture is ready to decode
    oVideoParserParameters.pfnDisplayPicture = HandlePictureDisplay;  // called with each decoded picture

    // Create the parser. It splits the packets and reports, via callbacks, each frame's format,
    // the pictures to decode, and finally the decoded pictures.
    oResult = cuvidCreateVideoParser(&m_videoParser, &oVideoParserParameters);
    if (oResult != CUDA_SUCCESS) {
        fprintf(stderr, "cuvidCreateVideoParser failed, error code: %d\n", oResult);
        exit(-1);
    }
}
```
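The decode-surface sizing in `InitVideoDecoder` can be pulled out into a pure function and checked on its own. The sketch mirrors the sample's rules:

- 8 surfaces by default.
- 20 for H.264.
- 12 for VP9.
- For HEVC, the DPB size derived from the level 6.2 `MaxLumaPS`, capped at 16, plus 4.

`Codec` is a hypothetical stand-in for `cudaVideoCodec`, with the SVC/MVC variants folded into `H264`.

```cpp
#include <algorithm>

enum class Codec { H264, VP9, HEVC, Other };  // hypothetical stand-in for cudaVideoCodec

// Number of decode surfaces, following the rules in CudaDecoder::InitVideoDecoder.
int numDecodeSurfaces(Codec codec, int codedWidth, int codedHeight) {
    switch (codec) {
    case Codec::H264:
        return 20;  // worst case assumed by the sample
    case Codec::VP9:
        return 12;
    case Codec::HEVC: {
        const int MaxLumaPS = 35651584;  // HEVC level 6.2
        const int MaxDpbPicBuf = 6;
        const int picSize = codedWidth * codedHeight;
        int maxDpbSize;
        if (picSize <= (MaxLumaPS >> 2))
            maxDpbSize = MaxDpbPicBuf * 4;
        else if (picSize <= (MaxLumaPS >> 1))
            maxDpbSize = MaxDpbPicBuf * 2;
        else if (picSize <= ((3 * MaxLumaPS) >> 2))
            maxDpbSize = (MaxDpbPicBuf * 4) / 3;
        else
            maxDpbSize = MaxDpbPicBuf;
        return std::min(maxDpbSize, 16) + 4;  // cap the DPB at 16, plus 4 extra surfaces
    }
    default:
        return 8;
    }
}
```

For a 1920x1080 HEVC stream this gives min(24, 16) + 4 = 20 surfaces. At 8192x4320 the frame exceeds three quarters of `MaxLumaPS`, so the count drops to 6 + 4 = 10: fewer large pictures fit in the level's DPB.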

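After the source object loads data, it fires a callback carrying a packet in CUVIDSOURCEDATAPACKET format. The packet is handed to the parser, and the parser passes pictures to the decoder. The decoder then pushes decoded frames into the queue, where the main thread picks them up.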

```cpp
static int CUDAAPI HandleVideoData(void* pUserData, CUVIDSOURCEDATAPACKET* pPacket)
{
    assert(pUserData);
    CudaDecoder* pDecoder = (CudaDecoder*)pUserData;

    CUresult oResult = cuvidParseVideoData(pDecoder->m_videoParser, pPacket);
    if (oResult != CUDA_SUCCESS) {
        printf("error!\n");
    }

    return 1;
}

static int CUDAAPI HandleVideoSequence(void* pUserData, CUVIDEOFORMAT* pFormat)
{
    assert(pUserData);
    CudaDecoder* pDecoder = (CudaDecoder*)pUserData;

    if ((pFormat->codec != pDecoder->m_oVideoDecodeCreateInfo.CodecType) || // codec-type
        (pFormat->coded_width != pDecoder->m_oVideoDecodeCreateInfo.ulWidth) ||
        (pFormat->coded_height != pDecoder->m_oVideoDecodeCreateInfo.ulHeight) ||
        (pFormat->chroma_format != pDecoder->m_oVideoDecodeCreateInfo.ChromaFormat))
    {
        fprintf(stderr, "NvTranscoder doesn't deal with dynamic video format changing\n");
        return 0;
    }

    return 1;
}

static int CUDAAPI HandlePictureDecode(void* pUserData, CUVIDPICPARAMS* pPicParams)
{
    assert(pUserData);
    CudaDecoder* pDecoder = (CudaDecoder*)pUserData;

    // wait until the output side has released this surface before decoding into it
    pDecoder->m_pFrameQueue->waitUntilFrameAvailable(pPicParams->CurrPicIdx);
    // keep the call outside assert(): with NDEBUG defined, anything inside assert() is compiled out
    CUresult oResult = cuvidDecodePicture(pDecoder->m_videoDecoder, pPicParams);
    assert(oResult == CUDA_SUCCESS);
    (void)oResult;
    return 1;
}

static int CUDAAPI HandlePictureDisplay(void* pUserData, CUVIDPARSERDISPINFO* pPicParams)
{
    assert(pUserData);
    CudaDecoder* pDecoder = (CudaDecoder*)pUserData;

    pDecoder->m_pFrameQueue->enqueue(pPicParams);
    pDecoder->m_decodedFrames++;

    return 1;
}
```
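The source callback and all three parser callbacks follow the same C-style pattern. The SDK stores an opaque `pUserData` pointer (here `this`) and hands it back on every call. Each static function then casts it to `CudaDecoder*` to reach member state. The standard-library sketch below imitates that wiring with hypothetical `MockParser`/`Decoder` types. It models the pattern only and is not the NVCUVID API. Every non-empty packet fed to the mock parser "displays" one picture.

```cpp
#include <vector>

// Hypothetical C-style parameter block: a user pointer plus a callback, like CUVIDPARSERPARAMS.
struct MockParserParams {
    void* pUserData;
    int (*pfnDisplayPicture)(void* pUserData, int pictureIndex);
};

// Hypothetical parser: every non-empty packet yields one displayable picture.
struct MockParser {
    explicit MockParser(MockParserParams p) : params(p), nextIndex(0) {}
    void parse(const std::vector<unsigned char>& packet) {  // stand-in for cuvidParseVideoData
        if (!packet.empty()) params.pfnDisplayPicture(params.pUserData, nextIndex++);
    }
    MockParserParams params;
    int nextIndex;
};

class Decoder {
public:
    Decoder() : parser_(MockParserParams{this, &Decoder::HandlePictureDisplay}) {}
    // The parser keeps a raw 'this', so copying would leave it pointing at the old object.
    Decoder(const Decoder&) = delete;
    Decoder& operator=(const Decoder&) = delete;

    // Stand-in for HandleVideoData: forward each packet to the parser.
    void feed(const std::vector<unsigned char>& packet) { parser_.parse(packet); }
    const std::vector<int>& displayed() const { return displayed_; }

private:
    // Static trampoline: recover the object from pUserData, then update member state.
    static int HandlePictureDisplay(void* pUserData, int pictureIndex) {
        Decoder* self = static_cast<Decoder*>(pUserData);
        self->displayed_.push_back(pictureIndex);  // the real callback enqueues into FrameQueue
        return 1;
    }
    MockParser parser_;
    std::vector<int> displayed_;
};
```

Deleting the copy operations matters for the real `CudaDecoder` too. The SDK objects hold the raw `this` passed as `pUserData`, so the decoder object must stay at one address for as long as they exist.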

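After reading through the code above, you probably have a rough picture of the flow:

Required GPU initialization → initialize the decoder, parser, and video source → run → process the output data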

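2. Hooking up our own decoder

Now for our own requirement: mine is to move FFmpeg-based decoding onto the GPU. First, let's look at the official documentation.

Using the SDK comes with some requirements: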

NVIDIA Video Codec SDK 8.0 System Requirements

* NVIDIA Kepler/Maxwell/Pascal GPU with hardware video accelerators
  - Refer to the NVIDIA Video SDK developer zone web page (https://developer.nvidia.com/nvidia-video-codec-sdk) for GPUs which support encoding and decoding acceleration.
* Windows: Driver version 378.66 or higher
* Linux: Driver version 378.13 or higher
* CUDA 7.5 Toolkit (optional)

[Windows Configuration Requirements]

- DirectX SDK is needed. You can download the latest SDK from Microsoft's DirectX website
- The CUDA 7.5 Toolkit is optional to install (see below on how to get it)
- CUDA toolkit is used for building CUDA kernels that can interop with NVENC.

The following environment variables need to be set to build the sample applications included with the SDK

* For Windows
  - DXSDK_DIR: pointing to the DirectX SDK root directory

[Linux Configuration Requirements]

* For Linux
  - X11 and OpenGL, GLUT, GLEW libraries for video playback and display
  - The CUDA 7.5 Toolkit is optional to install (see below on how to get it)
  - CUDA toolkit is used for building CUDA kernels that can interop with NVENC.

I checked, and my Linux machine basically meets these requirements.

Verifying feasibility

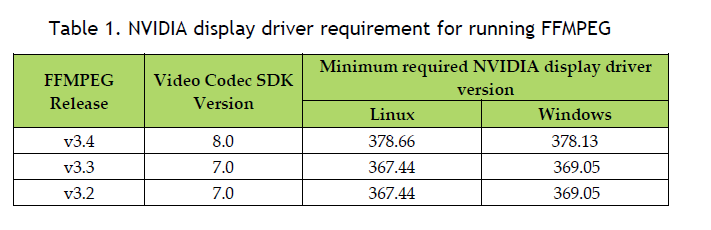

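Next, Using_FFmpeg_with_NVIDIA_GPU_Hardware_Acceleration.pdf explains that you can build FFmpeg directly and test decoding with its built-in CUDA decoder. This also has version requirements.

Matching versions: I am on SDK 8.0, so I use FFmpeg 3.4.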

Build:

```shell
./configure --enable-shared --enable-cuda --enable-cuvid --enable-nvenc --enable-nonfree --enable-libnpp --extra-cflags=-I/usr/local/cuda/include --extra-ldflags=-L/usr/local/cuda/lib64 --prefix=/home/user/mjl/algo/ffmpeg/build
make -j 4
```

(I recommend four parallel jobs; with eight I ran into "not found" errors.)

Verify:

```shell
ffmpeg -y -hwaccel cuvid -c:v h264_cuvid -vsync 0 -i input.mp4 -vf scale_npp=1920:1072 -vcodec h264_nvenc output0.264 -vf scale_npp=1280:720 -vcodec h264_nvenc output1.264
```

Error: Unknown decoder 'h264_cuvid'

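Note: run it with superuser (root) privileges; on my machine only the superuser could access the GPU.

The output files were produced normally, which shows the approach works.

As for FFmpeg's built-in decoder, I never fully understood it. FFmpeg registers all its codecs during initialization, but I never figured out how to call it simply from the upper layer. I'd welcome a discussion on this.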

Here I hook up the decoder directly myself, which also makes the data easier to control.

```cpp
avformat_network_init();
av_register_all();      // 1. register all (de)muxers and codecs; since 3.3 this includes the GPU decoders
std::string tempfile = "xxxx";                          // stream URL
avformat_find_stream_info(format_context_, nullptr);    // 2. read a short piece of the stream to detect its format
if (AVMEDIA_TYPE_VIDEO == enc->codec_type && video_stream_index_ < 0) { /* ... */ } // 3. pick out the video stream
codec_ = avcodec_find_decoder(enc->codec_id);           // 4. find the matching decoder
codec_context_ = avcodec_alloc_context3(codec_);        // 5. allocate the decoder context
av_read_frame(format_context_, &packet_);               // 6. read a packet
avcodec_send_packet(codec_context_, &packet_);          // 7. send it for decoding
avcodec_receive_frame(codec_context_, yuv_frame_);      // 8. receive the decoded frame
sws_scale(y2r_sws_context_, yuv_frame_->data, yuv_frame_->linesize, 0,
          codec_context_->height, rgb_data_, rgb_line_size_); // 9. convert the pixel format
```

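As mentioned in the first section, steps 4, 7, 8 and 9 need to change.

FFmpeg still pulls the data, so CUDA's own video source is not needed. Only the decoder and the parser have to be hooked up. It would be even better if the data fetching could also run on the GPU.

FFmpeg hands out data as AVPacket, while the CUDA decoder expects CUVIDSOURCEDATAPACKET, so a format conversion is involved.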
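Copying AVPacket fields into a CUVIDSOURCEDATAPACKET is not the whole conversion, because the byte layout differs. MP4/FLV demuxers usually output H.264 in AVCC form, where each NAL unit is preceded by a big-endian length field (typically 4 bytes). The CUVID parser instead looks for Annex B start codes (`00 00 00 01`). The `h264_mp4toannexb` bitstream filter performs this rewrite. The sketch below reproduces only the length-prefix replacement and assumes 4-byte lengths. The real filter also inserts SPS/PPS from the stream's extradata in front of keyframes, which this sketch omits, so keep using the filter in real code.

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Convert one AVCC packet (4-byte big-endian NAL lengths) to Annex B (start codes).
std::vector<std::uint8_t> avccToAnnexB(const std::vector<std::uint8_t>& in) {
    static const std::uint8_t kStartCode[4] = {0x00, 0x00, 0x00, 0x01};
    std::vector<std::uint8_t> out;
    out.reserve(in.size());  // a 4-byte length becomes a 4-byte start code: same total size
    std::size_t pos = 0;
    while (pos + 4 <= in.size()) {
        const std::size_t nalSize = (std::size_t(in[pos]) << 24) | (std::size_t(in[pos + 1]) << 16) |
                                    (std::size_t(in[pos + 2]) << 8) | std::size_t(in[pos + 3]);
        pos += 4;
        if (nalSize > in.size() - pos) throw std::runtime_error("truncated NAL unit");
        out.insert(out.end(), kStartCode, kStartCode + 4);
        out.insert(out.end(), in.data() + pos, in.data() + pos + nalSize);
        pos += nalSize;
    }
    if (pos != in.size()) throw std::runtime_error("trailing bytes after last NAL unit");
    return out;
}
```

For example, the packet `00 00 00 02 67 42 00 00 00 01 65` (a 2-byte NAL, then a 1-byte NAL) becomes `00 00 00 01 67 42 00 00 00 01 65`.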

At first I found this article online: https://www.cnblogs.com/dwdxdy/archive/2013/08/07/3244723.html. I tried the format-conversion approach it uses, and it didn't work: there was no decoded output at all!

https://www.cnblogs.com/betterwgo/p/6613641.html is more thorough, but this part of its code:

```cpp
void VideoSource::play_thread(LPVOID lpParam)
{
    AVPacket *avpkt;
    avpkt = (AVPacket *)av_malloc(sizeof(AVPacket));
    CUVIDSOURCEDATAPACKET cupkt;
    int iPkt = 0;
    CUresult oResult;
    while (av_read_frame(pFormatCtx, avpkt) >= 0) {
        if (bThreadExit) {
            break;
        }
        bStarted = true;
        if (avpkt->stream_index == videoindex) {
            cuCtxPushCurrent(g_oContext);

            if (avpkt && avpkt->size) {
                if (h264bsfc) {
                    av_bitstream_filter_filter(h264bsfc, pFormatCtx->streams[videoindex]->codec, NULL,
                        &avpkt->data, &avpkt->size, avpkt->data, avpkt->size, 0);
                }

                cupkt.payload_size = (unsigned long)avpkt->size;
                cupkt.payload = (const unsigned char*)avpkt->data;

                if (avpkt->pts != AV_NOPTS_VALUE) {
                    cupkt.flags = CUVID_PKT_TIMESTAMP;
                    if (pCodecCtx->pkt_timebase.num && pCodecCtx->pkt_timebase.den) {
                        AVRational tb;
                        tb.num = 1;
                        tb.den = AV_TIME_BASE;
                        cupkt.timestamp = av_rescale_q(avpkt->pts, pCodecCtx->pkt_timebase, tb);
                    }
                    else
                        cupkt.timestamp = avpkt->pts;
                }
            }
            else {
                cupkt.flags = CUVID_PKT_ENDOFSTREAM;
            }

            oResult = cuvidParseVideoData(oSourceData_.hVideoParser, &cupkt);
            if ((cupkt.flags & CUVID_PKT_ENDOFSTREAM) || (oResult != CUDA_SUCCESS)) {
                break;
            }
            iPkt++;
            //printf("Succeed to read avpkt %d !\n", iPkt);
            checkCudaErrors(cuCtxPopCurrent(NULL));
        }
        av_free_packet(avpkt);
    }

    oSourceData_.pFrameQueue->endDecode();
    bStarted = false;
}
```

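is rather dated, and I still couldn't get it running. Still, I really respect this author; sharing this much is already great!

Below is my code, modified from his. I did not use his approach:

```cpp
//h264bsfc = av_bitstream_filter_init("h264_mp4toannexb");
//av_bsf_alloc(av_bsf_get_by_name("h264_mp4toannexb"), &bsf);
```

Instead I switched to av_bsf_send_packet and av_bsf_receive_packet. Here is my code: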

```cpp
// pPacket is the CUVIDSOURCEDATAPACKET declared by the caller; reset it for every packet
// so that flags/timestamp never carry stale values from the previous packet
memset(&pPacket, 0, sizeof(pPacket));
if (fsc->packet_.size) {
    if (fsc->bsf) {
        //av_bitstream_filter_filter(h264bsfc, codec_context_, NULL, &packet_.data, &packet_.size, packet_.data, packet_.size, 0);
        AVPacket filter_packet = { 0 };
        AVPacket filtered_packet = { 0 };
        int ret;
        // give the bitstream filter its own reference to the packet
        if ((ret = av_packet_ref(&filter_packet, &fsc->packet_)) < 0) {
            printf("av_packet_ref failed\n");
        }
        // on success av_bsf_send_packet takes ownership of filter_packet
        else if ((ret = av_bsf_send_packet(fsc->bsf, &filter_packet)) < 0) {
            printf("av_bsf_send_packet failed\n");
            av_packet_unref(&filter_packet);
        }
        else if ((ret = av_bsf_receive_packet(fsc->bsf, &filtered_packet)) < 0) {
            printf("av_bsf_receive_packet failed\n");
        }
        else {
            // replace the original packet with the filtered one; unref first so the
            // original buffer is not leaked (a plain memcpy would overwrite its reference)
            av_packet_unref(&fsc->packet_);
            av_packet_move_ref(&fsc->packet_, &filtered_packet);
        }
    }

    pPacket.payload_size = (unsigned long)fsc->packet_.size;
    pPacket.payload = (const unsigned char*)fsc->packet_.data;

    if (fsc->packet_.pts != AV_NOPTS_VALUE) {
        pPacket.flags = CUVID_PKT_TIMESTAMP;
        if (fsc->codec_context_->pkt_timebase.num && fsc->codec_context_->pkt_timebase.den) {
            // CUVID timestamps use a 10 MHz clock: rescale pts from the stream time base to 1/10000000
            pPacket.timestamp = av_rescale_q(fsc->packet_.pts, fsc->codec_context_->pkt_timebase,
                                             AVRational{ 1, 10000000 });
        }
        else {
            pPacket.timestamp = fsc->packet_.pts;
        }
    }
}
else {
    pPacket.flags = CUVID_PKT_ENDOFSTREAM;
}
fsc->pDecoder->HandleVideoData(&pPacket);
```
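The timestamp line converts the packet's pts from the stream time base to the 10 MHz clock used by `CUVIDSOURCEDATAPACKET::timestamp`. To show the arithmetic without linking FFmpeg, the sketch below computes `pts * tb.num * 10000000 / tb.den`. It rounds to nearest with halfway cases away from zero, which is `av_rescale_q`'s default rounding. `Rational` and `rescaleTo10MHz` are hypothetical stand-ins, and the sketch assumes the product fits in 64 bits. In real code keep `av_rescale_q`, which also handles overflow.

```cpp
#include <cstdint>

struct Rational { std::int64_t num; std::int64_t den; };  // hypothetical stand-in for AVRational

// pts in time base 'tb' -> ticks of a 10 MHz clock, rounded to nearest (halfway away from zero).
// Assumes tb.den > 0 and that pts * tb.num * 10000000 fits in int64.
std::int64_t rescaleTo10MHz(std::int64_t pts, Rational tb) {
    const std::int64_t num = pts * tb.num * 10000000;
    const std::int64_t den = tb.den;
    const std::int64_t half = den / 2;
    return num >= 0 ? (num + half) / den : -((-num + half) / den);
}
```

For example, with a 1/90000 time base (common in MPEG-TS), a 29.97 fps frame lasts 3003 ticks. That maps to `rescaleTo10MHz(3003, {1, 90000})`, about 333667 units of 100 ns, i.e. 33.37 ms.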

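With that, the decoding part is implemented. I'll post the full source code when I have time.

If you found this useful, here are my tip addresses: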

BTC: 1GYhFurFFWq4Ta9BzFKx961EKtLhnaVHRc

ETH: 0xe54AbD803573FDD245f0Abb75f4c9Ddfc8e72050