Hudi特性

-

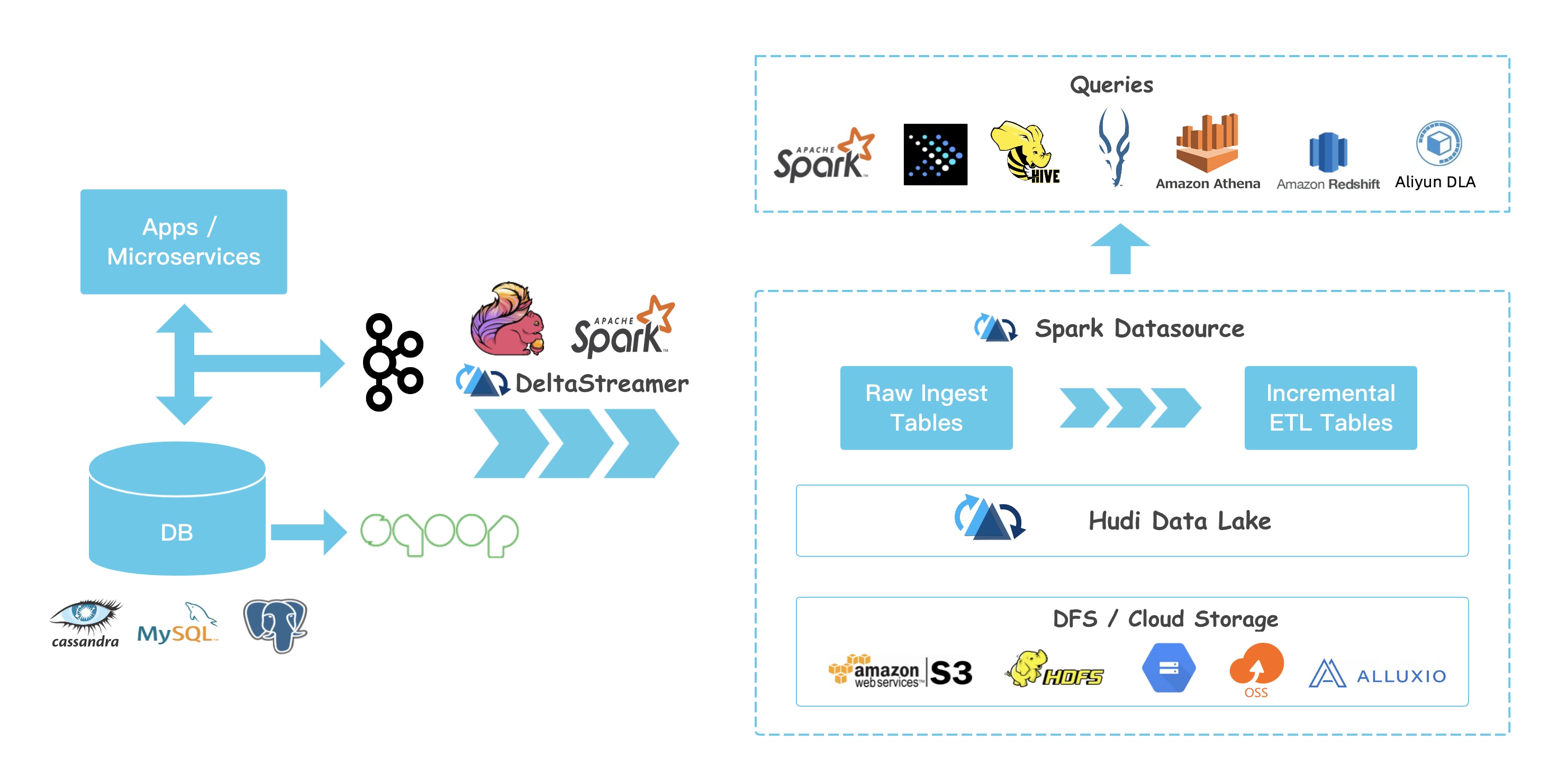

数据湖处理非结构化数据、日志数据、结构化数据

-

支持较快upsert/delete, 可插入索引

-

Table Schema

-

小文件管理Compaction

-

ACID语义保证,多版本保证 并具有回滚功能

-

savepoint 用户数据恢复的保存点

-

支持多种分析引擎 spark、hive、presto

编译Hudi

git clone https://github.com/apache/hudi.git && cd hudi

mvn clean package -DskipTests

hudi 高度耦合spark

执行spark-shell测试Hudi

bin/spark-shell --packages org.apache.spark:spark-avro_2.11:2.4.5 --conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer' --jars /Users/macwei/IdeaProjects/hudi-master/packaging/hudi-spark-bundle/target/hudi-spark-bundle_2.11-0.6.1-SNAPSHOT.jar

hudi 写入数据

// spark-shell

import org.apache.hudi.QuickstartUtils._

import scala.collection.JavaConversions._

import org.apache.spark.sql.SaveMode._

import org.apache.hudi.DataSourceReadOptions._

import org.apache.hudi.DataSourceWriteOptions._

import org.apache.hudi.config.HoodieWriteConfig._

val tableName = "hudi_trips_cow"

val basePath = "file:///tmp/hudi_trips_cow"

val dataGen = new DataGenerator

// spark-shell

val inserts = convertToStringList(dataGen.generateInserts(10))

val df = spark.read.json(spark.sparkContext.parallelize(inserts, 2))

df.write.format("hudi").

options(getQuickstartWriteConfigs).

option(PRECOMBINE_FIELD_OPT_KEY, "ts").

option(RECORDKEY_FIELD_OPT_KEY, "uuid").

option(PARTITIONPATH_FIELD_OPT_KEY, "partitionpath").

option(TABLE_NAME, tableName).

mode(Overwrite).

save(basePath)

读取hudi数据:

val tripsSnapshotDF = spark.

read.

format("hudi").

load(basePath + "/*/*/*/*")

tripsSnapshotDF.createOrReplaceTempView("hudi_trips_snapshot")

spark.sql("select fare, begin_lon, begin_lat, ts from hudi_trips_snapshot where fare > 20.0").show()

+------------------+-------------------+-------------------+-------------+

| fare| begin_lon| begin_lat| ts|

+------------------+-------------------+-------------------+-------------+

| 64.27696295884016| 0.4923479652912024| 0.5731835407930634|1609771934700|

| 93.56018115236618|0.14285051259466197|0.21624150367601136|1610087553306|

| 33.92216483948643| 0.9694586417848392| 0.1856488085068272|1609982888463|

| 27.79478688582596| 0.6273212202489661|0.11488393157088261|1610187369637|

|34.158284716382845|0.46157858450465483| 0.4726905879569653|1610017361855|

| 43.4923811219014| 0.8779402295427752| 0.6100070562136587|1609795685223|

| 66.62084366450246|0.03844104444445928| 0.0750588760043035|1609923236735|

| 41.06290929046368| 0.8192868687714224| 0.651058505660742|1609838517703|

+------------------+-------------------+-------------------+-------------+

spark.sql("select _hoodie_commit_time, _hoodie_record_key, _hoodie_partition_path, rider, driver, fare from hudi_trips_snapshot").show()

+-------------------+--------------------+----------------------+---------+----------+------------------+

|_hoodie_commit_time| _hoodie_record_key|_hoodie_partition_path| rider| driver| fare|

+-------------------+--------------------+----------------------+---------+----------+------------------+

| 20210110225218|3c7ef0e7-86fb-444...| americas/united_s...|rider-213|driver-213| 64.27696295884016|

| 20210110225218|222db9ca-018b-46e...| americas/united_s...|rider-213|driver-213| 93.56018115236618|

| 20210110225218|3fc72d76-f903-4ca...| americas/united_s...|rider-213|driver-213|19.179139106643607|

| 20210110225218|512b0741-e54d-426...| americas/united_s...|rider-213|driver-213| 33.92216483948643|

| 20210110225218|ace81918-0e79-41a...| americas/united_s...|rider-213|driver-213| 27.79478688582596|

| 20210110225218|c76f82a1-d964-4db...| americas/brazil/s...|rider-213|driver-213|34.158284716382845|

| 20210110225218|73145bfc-bcb2-424...| americas/brazil/s...|rider-213|driver-213| 43.4923811219014|

| 20210110225218|9e0b1d58-a1c4-47f...| americas/brazil/s...|rider-213|driver-213| 66.62084366450246|

| 20210110225218|b8fccca1-9c28-444...| asia/india/chennai|rider-213|driver-213|17.851135255091155|

| 20210110225218|6144be56-cef9-43c...| asia/india/chennai|rider-213|driver-213| 41.06290929046368|

+-------------------+--------------------+----------------------+---------+----------+------------------+

对比

数据导入至hadoop方案: maxwell、canal、flume、sqoop

hudi是通用方案

-

hudi 支持presto、spark sql下游查询

-

hudi存储依赖hdfs

-

hudi可以当作数据源或数据库,支持PB级别

概念

Timeline: 时间戳

state:即时状态

原子写入操作

compaction: 后台协调hudi中差异数据

rollback: 回滚

savepoint: 数据还原

任何操作都有以下状态:

- Requested 已安排操作行为,但是没有开始

- Inflight 正在执行当前操作

- Completed 已完成操作

hudi提供两种表类型:

- CopyOnWrite 适用全量数据,列式存储,写入过程执行同步合并重写文件

- MergeOnRead 增量数据,基于列式(parquet)和行式(avro)存储,更新记录到增量文件(日志文件),压缩同步和异步生成新版本文件,延迟更低

hudi查询类型:

- 快照查询 查询最新快照表数据,如果是MergeOnRead表,动态合并最新版本基本数据和增量数据用于显示查询;如果是CopyOnWrite,直接查询Parquet表,同时提供upsert、delete操作

- 增量查询 只能看到写入表的新数据

- 优化读查询 给定时间段的一个查询

资料参考

- Docker Demo: https://hudi.apache.org/docs/docker_demo.html Hudi 官方建议代码测试可在Docker进行, 如果在Docker运行有问题也可以进行Remote Debugger

- Hudi 目前代码写的很多实现其实有点不太好, 如果有想贡献提交PR的可参考: 如何进行开源贡献