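Two Ways to Back Up an HBase Table: Export and Import Examples

This article covers two backup methods:

1) Backing up a single HBase table with the utility classes that ship with HBase

2) Fast backup based on HBase snapshots

Scenario: our production and test environments are isolated, so the production database cannot be reached from the test environment. Part of a production HBase table therefore had to be exported and loaded into an HBase table in the test environment, which is what prompted this article.

I. Backing up a single HBase table with the classes provided by HBase

This section uses the classes provided by HBase to export a table's data to HDFS and then import it into the test HBase table.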
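The round trip described above can be summarized as a small dry-run script. This is only a sketch: the function name `plan_backup` is hypothetical, the table names and export directory are illustrative, and the script prints the commands instead of running them. It also assumes that the target table already exists with the same column families, since as far as I know Import writes into an existing table and does not create one.

```shell
#!/usr/bin/env bash
# Sketch: print (not run) the two commands of an export-then-import round trip.
# Assumption: the target table already exists with matching column families.

# Usage: plan_backup <source table> <target table> <export dir>
plan_backup() {
  local src_table="$1" dst_table="$2" export_dir="$3"
  # Step 1: dump the source table into a directory of sequence files.
  echo "hbase org.apache.hadoop.hbase.mapreduce.Export ${src_table} ${export_dir}"
  # Step 2: load the exported files into the target table.
  echo "hbase org.apache.hadoop.hbase.mapreduce.Driver import ${dst_table} ${export_dir}/*"
}

plan_backup emp emp_bak /hbase/emp_bak
```

When production and test are separate clusters, the exported directory still has to be copied from one to the other between the two steps; that transfer is not shown here.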

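First, an overview of the relevant options:

(1) Exporting from an HBase table (note: a path without the file:// prefix resolves against the default file system, which is HDFS on a typical cluster)

Export HBase data to a local file

hbase org.apache.hadoop.hbase.mapreduce.Export emp file:///Users/a6/Applications/experiment_data/hbase_data/bak

Export HBase data to HDFS

hbase org.apache.hadoop.hbase.mapreduce.Export emp /hbase/emp_bak

(2) Importing into an HBase table (note: a path without the file:// prefix is likewise read from HDFS)

Import data from HDFS into the backup target table

localhost:bin a6$ hbase org.apache.hadoop.hbase.mapreduce.Driver import emp_bak /hbase/emp_bak/*

Import data from local files into the backup target table

hbase org.apache.hadoop.hbase.mapreduce.Driver import emp_bak file:///Users/a6/Applications/experiment_data/hbase_data/bak/*

(3) Limiting the scanner batch size during export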

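If a single row in HBase holds a large amount of data, the export may fail with a ScannerTimeoutException. In that case, set the hbase.export.scanner.batch parameter, which caps the number of cells returned per scanner call, to avoid the error.

hbase org.apache.hadoop.hbase.mapreduce.Export -Dhbase.export.scanner.batch=2000 emp file:///Users/a6/Applications/experiment_data/hbase_data/bak

(4) Using the compress option to save space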

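When exporting HBase data, the output with and without the compress option can differ in size by as much as a factor of 5, so enabling compression can save a good deal of space for backups.

In my own tests, export speed with the compress option was almost identical to the speed without it:

hbase org.apache.hadoop.hbase.mapreduce.Export -Dhbase.export.scanner.batch=2000 -D mapred.output.compress=true emp file:///Users/a6/Applications/experiment_data/hbase_data/bak

(mapred.output.compress is the older property name; on Hadoop 2.x it is a deprecated alias of mapreduce.output.fileoutputformat.compress.)

With the compress option added, the size of the final exported file went from 335 bytes to 323 bytes:

Without compress: File Output Format Counters, Bytes Written=335

With compress: File Output Format Counters, Bytes Written=323

(5) Exporting a specific row key range and column family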

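Our company was preparing to move to a new data center, which meant migrating the data in our HBase database. HBase's built-in Import and Export tools are convenient for this, but when migrating a large amount of data a single run can take a very long time and may even fail. In that case, you can restrict the row key range and column family to shorten each Export run. Export supports several options for this. For example, to export only column family Info of table test, for row keys between 000 and 001, write:

./hbase org.apache.hadoop.hbase.mapreduce.Export -D hbase.mapreduce.scan.column.family=Info -D hbase.mapreduce.scan.row.start=000 -D hbase.mapreduce.scan.row.stop=001 test /test_datas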
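To export a whole table in several shorter jobs, the same command can be generated once per row key range. The following is a minimal sketch under a few assumptions: the function name `plan_range_exports` and the split keys are hypothetical, each range gets its own output directory (a MapReduce job refuses to write into an existing one), and the commands are only printed, not run. The scan start row is inclusive and the stop row is exclusive, so consecutive ranges do not overlap.

```shell
#!/usr/bin/env bash
# Sketch: print one Export command per row-key range so that a large table is
# exported in several shorter jobs. Nothing is executed; commands are only echoed.

# Usage: plan_range_exports <table> <column family> <output base dir> <key1> <key2> [<key3> ...]
# Consecutive keys form [start, stop) ranges.
plan_range_exports() {
  local table="$1" family="$2" out_base="$3"
  shift 3
  local start="$1"
  shift
  local stop
  for stop in "$@"; do
    echo "hbase org.apache.hadoop.hbase.mapreduce.Export" \
      "-D hbase.mapreduce.scan.column.family=${family}" \
      "-D hbase.mapreduce.scan.row.start=${start}" \
      "-D hbase.mapreduce.scan.row.stop=${stop}" \
      "${table} ${out_base}/${start}_${stop}"
    # The stop key of this range is the start key of the next one.
    start="$stop"
  done
}

plan_range_exports test Info /test_datas 000 001 002
```

If one range fails, only that range has to be rerun (after removing its partial output directory), which is the main point of splitting the export.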

HBase's built-in Export/Import mechanism can be used for backup and restore, and it also supports incremental backups.

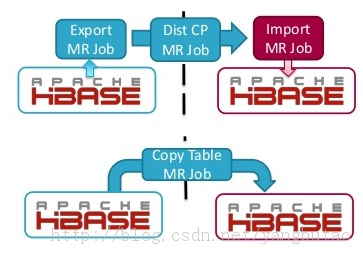

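Both tools are implemented as MapReduce jobs.

1. Export works on one table at a time, so backing up an entire database means running it once per table (n runs for n tables).

2. Export is invoked from the shell in the following form:

./hbase org.apache.hadoop.hbase.mapreduce.Export <table name> <backup path> (<number of versions>) (<start timestamp>) (<end timestamp>)

Items in parentheses are optional. For example: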

Usage: Export [-D <property=value>]* <tablename> <outputdir> [<versions> [<starttime> [<endtime>]] [^[regex pattern] or [Prefix] to filter]]

hbase org.apache.hadoop.hbase.mapreduce.Export emp /hbase/emp_bak 1 123456789

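This backs up the emp table to the /hbase/emp_bak directory (the last directory level must not already exist, because Export creates it itself), keeping 1 version per cell and including every record written by a put between timestamp 123456789 and the current time.

Note: why only put operations? Because the backup scans the table for all records whose timestamp is greater than or equal to 123456789 and exports them. A record that has been deleted is no longer present in the table, so the scan cannot return it.

When no timestamps are given, the backup contains the full current contents of the table.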
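Combining the two points above (one Export run per table, optional start and end timestamps), an incremental backup over several tables can be sketched as below. The function name `plan_incremental_exports`, the backup root, and the fixed end timestamp are assumptions for illustration. Timestamps are in milliseconds since the epoch, the same unit as the cell timestamps shown by scan in the HBase shell. The commands are only printed.

```shell
#!/usr/bin/env bash
# Sketch: print one incremental Export command per table for a given time window.
# Nothing is executed here; the commands are only echoed.

# Usage: plan_incremental_exports <backup root> <versions> <start ms> <end ms> <table>...
plan_incremental_exports() {
  local root="$1" versions="$2" start_ms="$3" end_ms="$4"
  shift 4
  local table
  for table in "$@"; do
    # A distinct directory per table and window, because Export must create
    # the last directory level itself.
    echo "hbase org.apache.hadoop.hbase.mapreduce.Export ${table}" \
      "${root}/${table}_${start_ms}_${end_ms} ${versions} ${start_ms} ${end_ms}"
  done
}

# Example window: the 24 hours ending at a fixed, illustrative timestamp.
end_ms=1526400000000
start_ms=$(( end_ms - 24 * 60 * 60 * 1000 ))
plan_incremental_exports /hbase/backup 1 "$start_ms" "$end_ms" emp
```

Using the end timestamp of one run as the start timestamp of the next should keep consecutive windows from overlapping or leaving gaps, since the scan time range includes the start and excludes the end.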

1) Create the HBase table emp

localhost:bin a6$ pwd

/Users/a6/Applications/hbase-1.2.6/bin

localhost:bin a6$ hbase shell

create 'emp','personal data','professional data'

2) Insert data and view it

Insert the values for the first row into the emp table as follows.

hbase(main):005:0> put 'emp','1','personal data:name','raju'

0 row(s) in 0.6600 seconds

hbase(main):006:0> put 'emp','1','personal data:city','hyderabad'

0 row(s) in 0.0410 seconds

hbase(main):007:0> put 'emp','1','professional data:designation','manager'

0 row(s) in 0.0240 seconds

hbase(main):007:0> put 'emp','1','professional data:salary','50000'

0 row(s) in 0.0240 seconds

Once the row has been inserted, scanning the table gives the following output.

hbase(main):002:0> scan 'emp'

ROW COLUMN+CELL

1 column=personal data:city, timestamp=1526269334560, value=hyderabad

1 column=personal data:name, timestamp=1526269326929, value=raju

1 column=professional data:designation, timestamp=1526269345044, value=manager

1 column=professional data:salary, timestamp=1526269352605, value=50000

1 row(s) in 0.2230 seconds

localhost:bin a6$ pwd

/Users/a6/Applications/hbase-1.2.6/bin

localhost:bin a6$ hbase org.apache.hadoop.hbase.mapreduce.Export emp /hbase/emp_bak

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/Users/a6/Applications/hbase-1.2.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/Users/a6/Applications/hadoop-2.6.5/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2018-05-15 17:31:18,340 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2018-05-15 17:31:18,412 INFO [main] mapreduce.Export: versions=1, starttime=0, endtime=9223372036854775807, keepDeletedCells=false

2018-05-15 17:31:19,224 INFO [main] client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

2018-05-15 17:31:23,325 INFO [main] zookeeper.RecoverableZooKeeper: Process identifier=hconnection-0x5ed731d0 connecting to ZooKeeper ensemble=localhost:2182

2018-05-15 17:31:23,332 INFO [main] zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT

2018-05-15 17:31:23,333 INFO [main] zookeeper.ZooKeeper: Client environment:host.name=localhost

2018-05-15 17:31:23,333 INFO [main] zookeeper.ZooKeeper: Client environment:java.version=1.8.0_131

2018-05-15 17:31:23,333 INFO [main] zookeeper.ZooKeeper: Client environment:java.vendor=Oracle Corporation

2018-05-15 17:31:23,333 INFO [main] zookeeper.ZooKeeper: Client environment:java.home=/Library/Java/JavaVirtualMachines/jdk1.8.0_131.jdk/Contents/Home/jre

2018-05-15 17:31:23,333 INFO [main] zookeeper.ZooKeeper: Client environment:java.class.path=/Users/a6/Applications/hbase-1.2.6/bin/../conf:... (remaining HBase 1.2.6 and Hadoop 2.6.5 classpath entries omitted)

2018-05-15 17:31:23,336 INFO [main] zookeeper.ZooKeeper: Client environment:java.library.path=/Users/a6/Applications/hadoop-2.6.5/lib/native

2018-05-15 17:31:23,336 INFO [main] zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/var/folders/bm/dccwv2v97y75hdshqnh1bbpr0000gn/T/

2018-05-15 17:31:23,336 INFO [main] zookeeper.ZooKeeper: Client environment:java.compiler=<NA>

2018-05-15 17:31:23,336 INFO [main] zookeeper.ZooKeeper: Client environment:os.name=Mac OS X

2018-05-15 17:31:23,336 INFO [main] zookeeper.ZooKeeper: Client environment:os.arch=x86_64

2018-05-15 17:31:23,336 INFO [main] zookeeper.ZooKeeper: Client environment:os.version=10.13.2

2018-05-15 17:31:23,337 INFO [main] zookeeper.ZooKeeper: Client environment:user.name=a6

2018-05-15 17:31:23,337 INFO [main] zookeeper.ZooKeeper: Client environment:user.home=/Users/a6

2018-05-15 17:31:23,337 INFO [main] zookeeper.ZooKeeper: Client environment:user.dir=/Users/a6/Applications/hbase-1.2.6/bin

2018-05-15 17:31:23,338 INFO [main] zookeeper.ZooKeeper: Initiating client connection, connectString=localhost:2182 sessionTimeout=90000 watcher=hconnection-0x5ed731d00x0, quorum=localhost:2182, baseZNode=/hbase

2018-05-15 17:31:23,360 INFO [main-SendThread(localhost:2182)] zookeeper.ClientCnxn: Opening socket connection to server localhost/127.0.0.1:2182. Will not attempt to authenticate using SASL (unknown error)

2018-05-15 17:31:23,361 INFO [main-SendThread(localhost:2182)] zookeeper.ClientCnxn: Socket connection established to localhost/127.0.0.1:2182, initiating session

2018-05-15 17:31:23,371 INFO [main-SendThread(localhost:2182)] zookeeper.ClientCnxn: Session establishment complete on server localhost/127.0.0.1:2182, sessionid = 0x163615ea3e6000c, negotiated timeout = 40000

2018-05-15 17:31:23,455 INFO [main] util.RegionSizeCalculator: Calculating region sizes for table "emp".

2018-05-15 17:31:23,834 INFO [main] client.ConnectionManager$HConnectionImplementation: Closing master protocol: MasterService

2018-05-15 17:31:23,834 INFO [main] client.ConnectionManager$HConnectionImplementation: Closing zookeeper sessionid=0x163615ea3e6000c

2018-05-15 17:31:23,837 INFO [main] zookeeper.ZooKeeper: Session: 0x163615ea3e6000c closed

2018-05-15 17:31:23,837 INFO [main-EventThread] zookeeper.ClientCnxn: EventThread shut down

2018-05-15 17:31:23,933 INFO [main] mapreduce.JobSubmitter: number of splits:1

2018-05-15 17:31:23,955 INFO [main] Configuration.deprecation: io.bytes.per.checksum is deprecated. Instead, use dfs.bytes-per-checksum

2018-05-15 17:31:24,115 INFO [main] mapreduce.JobSubmitter: Submitting tokens for job: job_1526346976211_0003

2018-05-15 17:31:24,513 INFO [main] impl.YarnClientImpl: Submitted application application_1526346976211_0003

2018-05-15 17:31:24,561 INFO [main] mapreduce.Job: The url to track the job: http://localhost:8088/proxy/application_1526346976211_0003/

2018-05-15 17:31:24,562 INFO [main] mapreduce.Job: Running job: job_1526346976211_0003

2018-05-15 17:31:36,842 INFO [main] mapreduce.Job: Job job_1526346976211_0003 running in uber mode : false

2018-05-15 17:31:36,844 INFO [main] mapreduce.Job: map 0% reduce 0%

2018-05-15 17:31:43,965 INFO [main] mapreduce.Job: map 100% reduce 0%

2018-05-15 17:31:44,980 INFO [main] mapreduce.Job: Job job_1526346976211_0003 completed successfully

2018-05-15 17:31:45,120 INFO [main] mapreduce.Job: Counters: 43

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=139577

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=64

HDFS: Number of bytes written=323

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=4842

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=4842

Total vcore-seconds taken by all map tasks=4842

Total megabyte-seconds taken by all map tasks=4958208

Map-Reduce Framework

Map input records=1

Map output records=1

Input split bytes=64

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=73

CPU time spent (ms)=0

Physical memory (bytes) snapshot=0

Virtual memory (bytes) snapshot=0

Total committed heap usage (bytes)=111149056

HBase Counters

BYTES_IN_REMOTE_RESULTS=0

BYTES_IN_RESULTS=210

MILLIS_BETWEEN_NEXTS=517

NOT_SERVING_REGION_EXCEPTION=0

NUM_SCANNER_RESTARTS=0

NUM_SCAN_RESULTS_STALE=0

REGIONS_SCANNED=1

REMOTE_RPC_CALLS=0

REMOTE_RPC_RETRIES=0

ROWS_FILTERED=0

ROWS_SCANNED=1

RPC_CALLS=3

RPC_RETRIES=0

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=323

localhost:bin a6$ hadoop dfs -ls /hbase/emp_bak

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

18/05/15 17:34:29 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 2 items

-rw-r--r-- 1 a6 supergroup 0 2018-05-15 17:31 /hbase/emp_bak/_SUCCESS

-rw-r--r-- 1 a6 supergroup 323 2018-05-15 17:31 /hbase/emp_bak/part-m-00000
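
The listing above shows what a finished Export leaves behind: an empty _SUCCESS marker and a part-m-00000 data file. As a minimal sketch, the check below reads such a listing on standard input and succeeds only if the marker is present. The function name has_success_marker is made up for this illustration, and the sample text is copied from the listing above.

```shell
#!/usr/bin/env bash
# has_success_marker (hypothetical helper): read an "hdfs dfs -ls" style
# listing on stdin and return 0 only if a listed path ends in /_SUCCESS.
has_success_marker() {
  grep -q '/_SUCCESS$'
}

# Sample listing copied from the output above.
listing='-rw-r--r-- 1 a6 supergroup 0 2018-05-15 17:31 /hbase/emp_bak/_SUCCESS
-rw-r--r-- 1 a6 supergroup 323 2018-05-15 17:31 /hbase/emp_bak/part-m-00000'

if printf '%s\n' "$listing" | has_success_marker; then
  echo "export finished"
else
  echo "no _SUCCESS marker found"
fi
```

Against a live cluster the same function could be fed with `hdfs dfs -ls /hbase/emp_bak | has_success_marker`; hdfs dfs is the form that the deprecation notice in the output recommends over hadoop dfs.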

localhost:bin a6$ hadoop dfs -cat /hbase/emp_bak/*

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

18/05/15 17:34:37 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

SEQ1org.apache.hadoop.hbase.io.ImmutableBytesWritable%org.apache.hadoop.hbase.client.ResultI~

�F�;�H��[$���1�

,

personal datacity ����,(2 hyderabad

'

personal dataname ����,(2raju

5

1professional data

designation ����,(2manager

.

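1professional datasalary ����,(250000 )

3) Export the table to a local file

localhost:bin a6$ hbase org.apache.hadoop.hbase.mapreduce.Export emp file:///Users/a6/Applications/experiment_data/hbase_data/bak

4) Create the backup target table in the HBase shell

create 'emp_bak','personal data','professional data'

5) Import the data on HDFS into the backup target table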

localhost:bin a6$ hbase org.apache.hadoop.hbase.mapreduce.Driver import emp_bak /hbase/emp_bak/*

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/Users/a6/Applications/hbase-1.2.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/Users/a6/Applications/hadoop-2.6.5/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2018-05-15 17:37:07,154 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2018-05-15 17:37:08,045 INFO [main] client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

2018-05-15 17:37:09,852 INFO [main] input.FileInputFormat: Total input paths to process : 1

2018-05-15 17:37:09,907 INFO [main] mapreduce.JobSubmitter: number of splits:1

2018-05-15 17:37:10,026 INFO [main] mapreduce.JobSubmitter: Submitting tokens for job: job_1526346976211_0005

2018-05-15 17:37:10,384 INFO [main] impl.YarnClientImpl: Submitted application application_1526346976211_0005

2018-05-15 17:37:10,413 INFO [main] mapreduce.Job: The url to track the job: http://localhost:8088/proxy/application_1526346976211_0005/

2018-05-15 17:37:10,413 INFO [main] mapreduce.Job: Running job: job_1526346976211_0005

2018-05-15 17:37:18,621 INFO [main] mapreduce.Job: Job job_1526346976211_0005 running in uber mode : false

2018-05-15 17:37:18,622 INFO [main] mapreduce.Job: map 0% reduce 0%

2018-05-15 17:37:25,705 INFO [main] mapreduce.Job: map 100% reduce 0%

2018-05-15 17:37:25,716 INFO [main] mapreduce.Job: Job job_1526346976211_0005 completed successfully

2018-05-15 17:37:25,832 INFO [main] mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=139121

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=436

HDFS: Number of bytes written=0

HDFS: Number of read operations=3

HDFS: Number of large read operations=0

HDFS: Number of write operations=0

Job Counters

Launched map tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=4804

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=4804

Total vcore-seconds taken by all map tasks=4804

Total megabyte-seconds taken by all map tasks=4919296

Map-Reduce Framework

Map input records=1

Map output records=1

Input split bytes=113

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=86

CPU time spent (ms)=0

Physical memory (bytes) snapshot=0

Virtual memory (bytes) snapshot=0

Total committed heap usage (bytes)=112197632

File Input Format Counters

Bytes Read=323

File Output Format Counters

Bytes Written=0

2018-05-15 17:37:25,842 INFO [main] mapreduce.Job: Running job: job_1526346976211_0005

2018-05-15 17:37:25,848 INFO [main] mapreduce.Job: Job job_1526346976211_0005 running in uber mode : false

2018-05-15 17:37:25,849 INFO [main] mapreduce.Job: map 100% reduce 0%

2018-05-15 17:37:25,855 INFO [main] mapreduce.Job: Job job_1526346976211_0005 completed successfully

2018-05-15 17:37:25,862 INFO [main] mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=139121

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=436

HDFS: Number of bytes written=0

HDFS: Number of read operations=3

HDFS: Number of large read operations=0

HDFS: Number of write operations=0

Job Counters

Launched map tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=4804

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=4804

Total vcore-seconds taken by all map tasks=4804

Total megabyte-seconds taken by all map tasks=4919296

Map-Reduce Framework

Map input records=1

Map output records=1

Input split bytes=113

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=86

CPU time spent (ms)=0

Physical memory (bytes) snapshot=0

Virtual memory (bytes) snapshot=0

Total committed heap usage (bytes)=112197632

File Input Format Counters

Bytes Read=323

File Output Format Counters

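Bytes Written=0

With that, the export and import are essentially done: the table data is moved out of HBase and back in by running the Export and Import tools as MapReduce jobs, and the same procedure can serve as a backup.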
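
One simple way to sanity-check the round trip is to compare the record counters that the two jobs print: the Export log reports "Map output records" and the Import log reports "Map input records". The sketch below pulls a counter out of a job log with sed. counter_value is a hypothetical helper, and the inline sample text stands in for saved log files, which the walkthrough above does not create.

```shell
#!/usr/bin/env bash
# counter_value (hypothetical helper): print the last value of the named
# counter found in a MapReduce job log read from stdin.
counter_value() {
  local name="$1"
  sed -n "s/^[[:space:]]*${name}=\([0-9][0-9]*\).*/\1/p" | tail -n 1
}

# Two counter lines as they appear in the job output above.
sample_log='Map input records=1
Map output records=1'

exported=$(printf '%s\n' "$sample_log" | counter_value 'Map output records')
imported=$(printf '%s\n' "$sample_log" | counter_value 'Map input records')

if [ "$exported" = "$imported" ]; then
  echo "record counts match: $exported"
else
  echo "record counts differ: exported=$exported imported=$imported"
fi
```

With real logs saved to files, the two calls would read from the export log and the import log respectively, for example `counter_value 'Map output records' < export.log` (the file name is an assumption).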

6) Finally, a closer look at the key command-line parameters of the HBase export and import tools

localhost:bin a6$ hbase org.apache.hadoop.hbase.mapreduce.Export

ERROR: Wrong number of arguments: 0

Usage: Export [-D <property=value>]* <tablename> <outputdir> [<versions> [<starttime> [<endtime>]] [^[regex pattern] or [Prefix] to filter]]

Note: -D properties will be applied to the conf used.

For example:

-D mapreduce.output.fileoutputformat.compress=true

-D mapreduce.output.fileoutputformat.compress.codec=org.apache.hadoop.io.compress.GzipCodec

-D mapreduce.output.fileoutputformat.compress.type=BLOCK

Additionally, the following SCAN properties can be specified

to control/limit what is exported..

-D hbase.mapreduce.scan.column.family=<familyName>

-D hbase.mapreduce.include.deleted.rows=true

-D hbase.mapreduce.scan.row.start=<ROWSTART>

-D hbase.mapreduce.scan.row.stop=<ROWSTOP>

For performance consider the following properties:

-Dhbase.client.scanner.caching=100

-Dmapreduce.map.speculative=false

-Dmapreduce.reduce.speculative=false

For tables with very wide rows consider setting the batch size as below:

-Dhbase.export.scanner.batch=10
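
The options in the Export usage text above can be combined in a single invocation. As a minimal sketch, the helper below assembles such a command and only prints it, so it can be reviewed before being run. build_export_cmd is a hypothetical name, and the table, column family, row keys and output directory in the call are placeholder values.

```shell
#!/usr/bin/env bash
# build_export_cmd (hypothetical helper): print, but do not run, an Export
# command that compresses the output and limits the scan to one column
# family and one row-key range. Every -D property used here is taken from
# the usage text above.
build_export_cmd() {
  local table="$1" outdir="$2" family="$3" start="$4" stop="$5"
  printf 'hbase org.apache.hadoop.hbase.mapreduce.Export'
  printf ' -D mapreduce.output.fileoutputformat.compress=true'
  printf ' -D hbase.mapreduce.scan.column.family=%s' "$family"
  printf ' -D hbase.mapreduce.scan.row.start=%s' "$start"
  printf ' -D hbase.mapreduce.scan.row.stop=%s' "$stop"
  printf ' %s %s\n' "$table" "$outdir"
}

# Placeholder arguments: table, output dir, column family, start row, stop row.
build_export_cmd test /test_datas Info 000 001
```

Printing rather than executing keeps the sketch safe to try on a machine without HBase. A column family whose name contains a space, such as 'personal data', would additionally need quoting when the printed command is run.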

localhost:bin a6$ hbase org.apache.hadoop.hbase.mapreduce.Driver import

ERROR: Wrong number of arguments: 0

Usage: Import [options] <tablename> <inputdir>

By default Import will load data directly into HBase. To instead generate

HFiles of data to prepare for a bulk data load, pass the option:

-Dimport.bulk.output=/path/for/output

To apply a generic org.apache.hadoop.hbase.filter.Filter to the input, use

-Dimport.filter.class=<name of filter class>

-Dimport.filter.args=<comma separated list of args for filter

NOTE: The filter will be applied BEFORE doing key renames via the HBASE_IMPORTER_RENAME_CFS property. Futher, filters will only use the Filter#filterRowKey(byte[] buffer, int offset, int length) method to identify whether the current row needs to be ignored completely for processing and Filter#filterKeyValue(KeyValue) method to determine if the KeyValue should be added; Filter.ReturnCode#INCLUDE and #INCLUDE_AND_NEXT_COL will be considered as including the KeyValue.

To import data exported from HBase 0.94, use

-Dhbase.import.version=0.94

For performance consider the following options:

-Dmapreduce.map.speculative=false

-Dmapreduce.reduce.speculative=false

-Dimport.wal.durability=<Used while writing data to hbase. Allowed values are the supported durability values like SKIP_WAL/ASYNC_WAL/SYNC_WAL/...>

II. Fast data backup based on HBase snapshots

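1. What are the advantages of snapshot backup?

Previously, HBase backups relied on tools such as distcp or CopyTable, and these mechanisms affect online reads and writes to some degree. Snapshots provide a fast way to back up data without copying it.

See the figure below.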

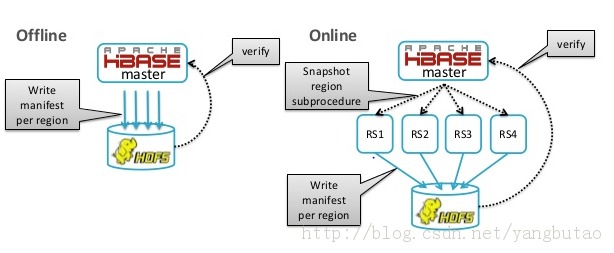

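2. How many ways are there to back up HBase data? Snapshots can be taken online or offline; what is the difference?

Snapshots can be taken either online or offline.

(1) Offline: the table is disabled, and the HBase Master walks the table metadata and HFiles in HDFS and creates references to them.

(2) Online: the table stays enabled, and the Master instructs the region servers to take the snapshot. During this process the Master and the region servers coordinate the snapshot in a way similar to a two-phase commit.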

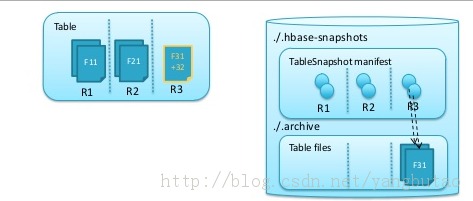
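
The offline path described above can be sketched as three HBase shell statements: disable the table, take the snapshot, enable the table again. The helper below only prints those statements so they can be checked first. offline_snapshot_script is a hypothetical name, and piping the result into `hbase shell -n` (non-interactive mode) is an assumption about the installed HBase version.

```shell
#!/usr/bin/env bash
# offline_snapshot_script (hypothetical helper): print the HBase shell
# statements for an offline snapshot of one table. Nothing is executed.
offline_snapshot_script() {
  local table="$1" snap="$2"
  printf "disable '%s'\n" "$table"
  printf "snapshot '%s', '%s'\n" "$table" "$snap"
  printf "enable '%s'\n" "$table"
}

offline_snapshot_script emp emp_snapshot

# To run the statements for real (assumes hbase is on PATH):
#   offline_snapshot_script emp emp_snapshot | hbase shell -n
```

For the online path only the middle statement is needed, since the table stays enabled while the snapshot is taken.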

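HFiles are immutable: data is only ever added as new files or removed by deleting whole files. Region splits and compactions never delete files referenced by a snapshot (unless the snapshot itself is deleted). Instead those files are moved to the archive directory, and the HFile references recorded for the snapshot then have to be adjusted to the new location.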

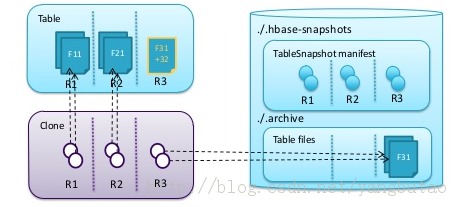

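From a snapshot you can clone a new table, restore a table, or export to another cluster. A table created by clone only adds metadata; its data files are still the ones referenced by the snapshot.

See the diagram of the clone operation:

3. What are the snapshot shell commands? How do you delete and list snapshots? How do you export a snapshot to another cluster?

The snapshot-related commands are as follows:

1) Create a snapshot (list snapshots -> view the usage of the snapshot command -> create a snapshot -> list snapshots)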

hbase(main):002:0> list_snapshots

SNAPSHOT TABLE + CREATION TIME

0 row(s) in 0.0290 seconds

=> []

hbase(main):003:0> snapshot

ERROR: wrong number of arguments (0 for 2)

Here is some help for this command:

Take a snapshot of specified table. Examples:

hbase> snapshot 'sourceTable', 'snapshotName'

hbase> snapshot 'namespace:sourceTable', 'snapshotName', {SKIP_FLUSH => true}

hbase(main):004:0> snapshot 'emp','emp_snapshot'

0 row(s) in 0.3730 seconds

hbase(main):005:0> list_snapshots

SNAPSHOT TABLE + CREATION TIME

emp_snapshot emp (Wed May 16 09:44:53 +0800 2018)

1 row(s) in 0.0190 seconds

=> ["emp_snapshot"]

2) Delete a snapshot and list snapshots

hbase(main):006:0> delete_snapshot 'emp_snapshot'

0 row(s) in 0.0390 seconds

hbase(main):007:0> list_snapshots

SNAPSHOT TABLE + CREATION TIME

0 row(s) in 0.0040 seconds

=> []

3) Clone a new table from a snapshot

hbase(main):011:0> clone_snapshot 'emp_snapshot','new_emp'

0 row(s) in 0.5290 seconds

hbase(main):013:0> scan 'new_emp'

ROW COLUMN+CELL

1 column=personal data:city, timestamp=1526269334560, value=hyderabad

1 column=personal data:name, timestamp=1526269326929, value=raju

1 column=professional data:designation, timestamp=1526269345044, value=manager

1 column=professional data:salary, timestamp=1526269352605, value=50000

1 row(s) in 0.1050 seconds

hbase(main):014:0> desc 'new_emp'

Table new_emp is ENABLED

new_emp

COLUMN FAMILIES DESCRIPTION

{NAME => 'personal data', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'professional data', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

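2 row(s) in 0.0370 seconds

4) Restore a table from a snapshot (here the original table emp had already been dropped; a table that still exists must be disabled before restore_snapshot is run)

hbase(main):027:0> list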

TABLE

new_emp

t1

test

3 row(s) in 0.0130 seconds

=> ["new_emp", "t1", "test"]

hbase(main):028:0> list_snapshots

SNAPSHOT TABLE + CREATION TIME

emp_snapshot emp (Wed May 16 09:45:25 +0800 2018)

1 row(s) in 0.0130 seconds

=> ["emp_snapshot"]

hbase(main):029:0> restore_snapshot 'emp_snapshot'

0 row(s) in 0.3700 seconds

hbase(main):030:0> list

TABLE

emp

new_emp

t1

test

4 row(s) in 0.0240 seconds

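=> ["emp", "new_emp", "t1", "test"]

5) Export data from a snapshot to the local file system of another cluster

A MapReduce job exports the snapshot emp_snapshot to bak_emp_snapshot (which does not exist yet) under the local directory /Users/a6/Applications/experiment_data/hbase_data.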

localhost:bin a6$ hbase org.apache.hadoop.hbase.snapshot.ExportSnapshot -snapshot 'emp_snapshot' -copy-to file:///Users/a6/Applications/experiment_data/hbase_data/bak_emp_snapshot -mappers 16

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/Users/a6/Applications/hbase-1.2.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/Users/a6/Applications/hadoop-2.6.5/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2018-05-16 10:21:47,310 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2018-05-16 10:21:47,633 INFO [main] snapshot.ExportSnapshot: Copy Snapshot Manifest

2018-05-16 10:21:47,922 INFO [main] client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

2018-05-16 10:21:50,233 INFO [main] snapshot.ExportSnapshot: Loading Snapshot 'emp_snapshot' hfile list

2018-05-16 10:21:50,547 INFO [main] mapreduce.JobSubmitter: number of splits:2

2018-05-16 10:21:50,732 INFO [main] mapreduce.JobSubmitter: Submitting tokens for job: job_1526434993990_0001

2018-05-16 10:21:51,182 INFO [main] impl.YarnClientImpl: Submitted application application_1526434993990_0001

2018-05-16 10:21:51,268 INFO [main] mapreduce.Job: The url to track the job: http://localhost:8088/proxy/application_1526434993990_0001/

2018-05-16 10:21:51,269 INFO [main] mapreduce.Job: Running job: job_1526434993990_0001

2018-05-16 10:22:02,425 INFO [main] mapreduce.Job: Job job_1526434993990_0001 running in uber mode : false

2018-05-16 10:22:02,427 INFO [main] mapreduce.Job: map 0% reduce 0%

2018-05-16 10:22:09,722 INFO [main] mapreduce.Job: map 50% reduce 0%

2018-05-16 10:22:10,731 INFO [main] mapreduce.Job: map 100% reduce 0%

2018-05-16 10:22:10,740 INFO [main] mapreduce.Job: Job job_1526434993990_0001 completed successfully

2018-05-16 10:22:10,848 INFO [main] mapreduce.Job: Counters: 37

File System Counters

FILE: Number of bytes read=9985

FILE: Number of bytes written=291407

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=408

HDFS: Number of bytes written=0

HDFS: Number of read operations=2

HDFS: Number of large read operations=0

HDFS: Number of write operations=0

Job Counters

Launched map tasks=2

Other local map tasks=2

Total time spent by all maps in occupied slots (ms)=9683

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=9683

Total vcore-seconds taken by all map tasks=9683

Total megabyte-seconds taken by all map tasks=9915392

Map-Reduce Framework

Map input records=2

Map output records=0

Input split bytes=408

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=155

CPU time spent (ms)=0

Physical memory (bytes) snapshot=0

Virtual memory (bytes) snapshot=0

Total committed heap usage (bytes)=212860928

org.apache.hadoop.hbase.snapshot.ExportSnapshot$Counter

BYTES_COPIED=9985

BYTES_EXPECTED=9985

BYTES_SKIPPED=0

COPY_FAILED=0

FILES_COPIED=2

FILES_SKIPPED=0

MISSING_FILES=0

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=0

2018-05-16 10:22:10,851 INFO [main] snapshot.ExportSnapshot: Finalize the Snapshot Export

2018-05-16 10:22:10,852 INFO [main] snapshot.ExportSnapshot: Verify snapshot integrity

2018-05-16 10:22:10,875 INFO [main] snapshot.ExportSnapshot: Export Completed: emp_snapshot

Structure of the snapshot backup files on the local file system:

localhost:hbase_data a6$ ls -R

bak_emp_snapshot

./bak_emp_snapshot:

archive

./bak_emp_snapshot/archive:

data

./bak_emp_snapshot/archive/data:

default

./bak_emp_snapshot/archive/data/default:

emp

./bak_emp_snapshot/archive/data/default/emp:

f8d3b4ead1603d0e9350dc426fce7fd7

./bak_emp_snapshot/archive/data/default/emp/f8d3b4ead1603d0e9350dc426fce7fd7:

personal data professional data

./bak_emp_snapshot/archive/data/default/emp/f8d3b4ead1603d0e9350dc426fce7fd7/personal data:

9111be6b05e746ddb8507e8daf5a4eb0

./bak_emp_snapshot/archive/data/default/emp/f8d3b4ead1603d0e9350dc426fce7fd7/professional data:

c264d32ef37b4b6f9953b388f007d059

localhost:hbase_data a6$

6) Export data from a snapshot to HDFS on another cluster

localhost:bin a6$ hbase org.apache.hadoop.hbase.snapshot.ExportSnapshot -snapshot 'emp_snapshot' -copy-to hdfs:///hbase/bak_emp_snapshot -mappers 16

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/Users/a6/Applications/hbase-1.2.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/Users/a6/Applications/hadoop-2.6.5/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2018-05-16 10:29:02,343 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2018-05-16 10:29:03,034 INFO [main] snapshot.ExportSnapshot: Copy Snapshot Manifest

2018-05-16 10:29:03,423 INFO [main] client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

2018-05-16 10:29:04,368 INFO [main] snapshot.ExportSnapshot: Loading Snapshot 'emp_snapshot' hfile list

2018-05-16 10:29:04,730 INFO [main] mapreduce.JobSubmitter: number of splits:2

2018-05-16 10:29:04,863 INFO [main] mapreduce.JobSubmitter: Submitting tokens for job: job_1526434993990_0002

2018-05-16 10:29:05,129 INFO [main] impl.YarnClientImpl: Submitted application application_1526434993990_0002

2018-05-16 10:29:05,160 INFO [main] mapreduce.Job: The url to track the job: http://localhost:8088/proxy/application_1526434993990_0002/

2018-05-16 10:29:05,160 INFO [main] mapreduce.Job: Running job: job_1526434993990_0002

2018-05-16 10:29:13,260 INFO [main] mapreduce.Job: Job job_1526434993990_0002 running in uber mode : false

2018-05-16 10:29:13,262 INFO [main] mapreduce.Job: map 0% reduce 0%

2018-05-16 10:29:18,354 INFO [main] mapreduce.Job: Task Id : attempt_1526434993990_0002_m_000000_0, Status : FAILED

Error: Java heap space

2018-05-16 10:29:19,377 INFO [main] mapreduce.Job: Task Id : attempt_1526434993990_0002_m_000001_0, Status : FAILED

Error: Java heap space

2018-05-16 10:29:25,432 INFO [main] mapreduce.Job: map 50% reduce 0%

2018-05-16 10:29:26,438 INFO [main] mapreduce.Job: map 100% reduce 0%

2018-05-16 10:29:26,450 INFO [main] mapreduce.Job: Job job_1526434993990_0002 completed successfully

2018-05-16 10:29:26,554 INFO [main] mapreduce.Job: Counters: 38

File System Counters

FILE: Number of bytes read=9985

FILE: Number of bytes written=281240

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=408

HDFS: Number of bytes written=9985

HDFS: Number of read operations=8

HDFS: Number of large read operations=0

HDFS: Number of write operations=8

Job Counters

Failed map tasks=2

Launched map tasks=4

Other local map tasks=4

Total time spent by all maps in occupied slots (ms)=17871

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=17871

Total vcore-seconds taken by all map tasks=17871

Total megabyte-seconds taken by all map tasks=18299904

Map-Reduce Framework

Map input records=2

Map output records=0

Input split bytes=408

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=235

CPU time spent (ms)=0

Physical memory (bytes) snapshot=0

Virtual memory (bytes) snapshot=0

Total committed heap usage (bytes)=257949696

org.apache.hadoop.hbase.snapshot.ExportSnapshot$Counter

BYTES_COPIED=9985

BYTES_EXPECTED=9985

BYTES_SKIPPED=0

COPY_FAILED=0

FILES_COPIED=2

FILES_SKIPPED=0

MISSING_FILES=0

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=0

2018-05-16 10:29:26,556 INFO [main] snapshot.ExportSnapshot: Finalize the Snapshot Export

2018-05-16 10:29:26,563 INFO [main] snapshot.ExportSnapshot: Verify snapshot integrity

2018-05-16 10:29:26,647 INFO [main] snapshot.ExportSnapshot: Export Completed: emp_snapshot

localhost:hbase_data a6$ hadoop dfs -ls /hbase/

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

18/05/16 10:29:34 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 2 items

drwxr-xr-x - a6 supergroup 0 2018-05-16 10:29 /hbase/bak_emp_snapshot

drwxr-xr-x - a6 supergroup 0 2018-05-15 17:31 /hbase/emp_bak

localhost:hbase_data a6$ hadoop dfs -ls /hbase/bak_emp_snapshot

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

18/05/16 10:29:45 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 2 items

drwxr-xr-x - a6 supergroup 0 2018-05-16 10:29 /hbase/bak_emp_snapshot/.hbase-snapshot

drwxr-xr-x - a6 supergroup 0 2018-05-16 10:29 /hbase/bak_emp_snapshot/archive

localhost:hbase_data a6$

localhost:hbase_data a6$ hadoop dfs -ls -R /hbase/bak_emp_snapshot

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

18/05/16 10:34:15 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

drwxr-xr-x - a6 supergroup 0 2018-05-16 10:29 /hbase/bak_emp_snapshot/.hbase-snapshot

drwxr-xr-x - a6 supergroup 0 2018-05-16 10:29 /hbase/bak_emp_snapshot/.hbase-snapshot/.tmp

drwxr-xr-x - a6 supergroup 0 2018-05-16 10:29 /hbase/bak_emp_snapshot/.hbase-snapshot/emp_snapshot

-rw-r--r-- 1 a6 supergroup 0 2018-05-16 10:29 /hbase/bak_emp_snapshot/.hbase-snapshot/emp_snapshot/.inprogress

-rw-r--r-- 1 a6 supergroup 30 2018-05-16 10:29 /hbase/bak_emp_snapshot/.hbase-snapshot/emp_snapshot/.snapshotinfo

-rw-r--r-- 1 a6 supergroup 703 2018-05-16 10:29 /hbase/bak_emp_snapshot/.hbase-snapshot/emp_snapshot/data.manifest

drwxr-xr-x - a6 supergroup 0 2018-05-16 10:29 /hbase/bak_emp_snapshot/archive

drwxr-xr-x - a6 supergroup 0 2018-05-16 10:29 /hbase/bak_emp_snapshot/archive/data

drwxr-xr-x - a6 supergroup 0 2018-05-16 10:29 /hbase/bak_emp_snapshot/archive/data/default

drwxr-xr-x - a6 supergroup 0 2018-05-16 10:29 /hbase/bak_emp_snapshot/archive/data/default/emp

drwxr-xr-x - a6 supergroup 0 2018-05-16 10:29 /hbase/bak_emp_snapshot/archive/data/default/emp/f8d3b4ead1603d0e9350dc426fce7fd7

drwxr-xr-x - a6 supergroup 0 2018-05-16 10:29 /hbase/bak_emp_snapshot/archive/data/default/emp/f8d3b4ead1603d0e9350dc426fce7fd7/personal data

-rw-rw-rw- 1 a6 staff 4976 2018-05-16 10:29 /hbase/bak_emp_snapshot/archive/data/default/emp/f8d3b4ead1603d0e9350dc426fce7fd7/personal data/9111be6b05e746ddb8507e8daf5a4eb0

drwxr-xr-x - a6 supergroup 0 2018-05-16 10:29 /hbase/bak_emp_snapshot/archive/data/default/emp/f8d3b4ead1603d0e9350dc426fce7fd7/professional data

-rw-rw-rw- 1 a6 staff 5009 2018-05-16 10:29 /hbase/bak_emp_snapshot/archive/data/default/emp/f8d3b4ead1603d0e9350dc426fce7fd7/professional data/c264d32ef37b4b6f9953b388f007d059
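
Both ExportSnapshot runs above end with a group of ExportSnapshot$Counter values. A rough way to confirm that a run copied everything is to check that BYTES_COPIED equals BYTES_EXPECTED and that COPY_FAILED and MISSING_FILES are zero. The sketch below does this with awk on a log read from standard input. check_export_snapshot_log is a hypothetical helper, and the sample values are the ones printed in the logs above.

```shell
#!/usr/bin/env bash
# check_export_snapshot_log (hypothetical helper): read an ExportSnapshot
# log on stdin and return 0 only if both byte counters are present and
# equal, and COPY_FAILED and MISSING_FILES are zero (or absent).
check_export_snapshot_log() {
  awk -F= '
    { gsub(/^[ \t]+/, "", $1) }            # strip leading whitespace from the counter name
    $1 == "BYTES_COPIED"   { copied = $2; seen++ }
    $1 == "BYTES_EXPECTED" { expected = $2; seen++ }
    $1 == "COPY_FAILED"    { failed = $2 }
    $1 == "MISSING_FILES"  { missing = $2 }
    END {
      ok = (seen == 2 && copied == expected && failed + 0 == 0 && missing + 0 == 0)
      exit ok ? 0 : 1
    }'
}

# Counter values as printed by the runs above.
sample='BYTES_COPIED=9985
BYTES_EXPECTED=9985
COPY_FAILED=0
MISSING_FILES=0'

if printf '%s\n' "$sample" | check_export_snapshot_log; then
  echo "snapshot export looks complete"
else
  echo "snapshot export is incomplete"
fi
```

The check looks only at the final counters, so a run whose map attempts failed and were retried, like the second run above, still passes as long as the totals agree.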

localhost:hbase_data a6$

Other backup methods: https://www.cnblogs.com/ios123/p/6399699.html