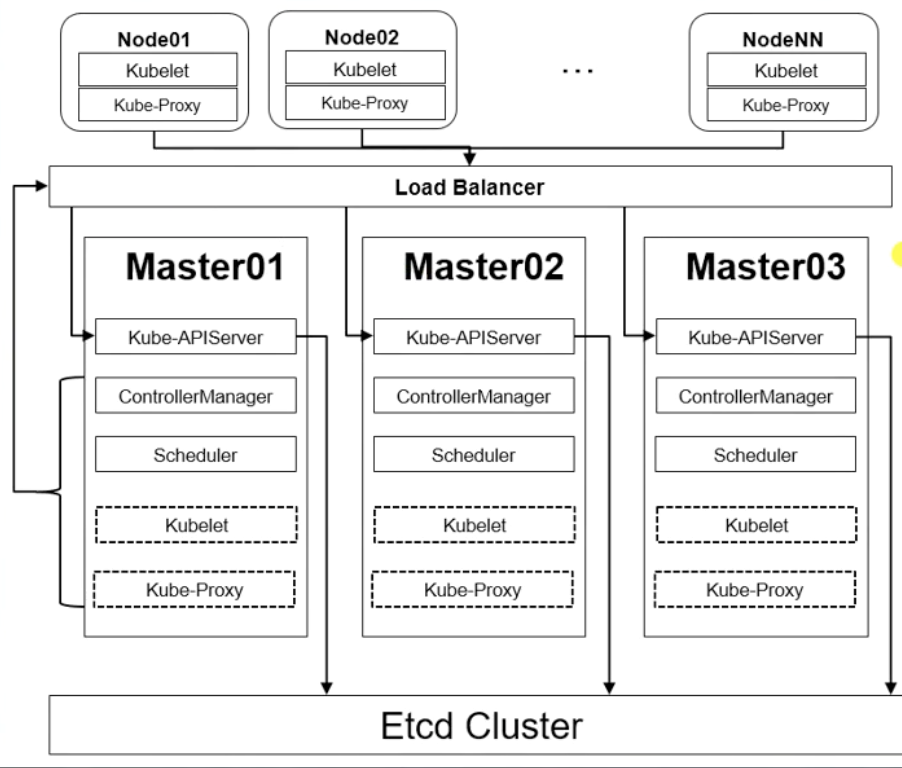

Cluster architecture diagram:

Cluster architecture information

【Disable swap on all nodes】

# swapoff -a && sysctl -w vm.swappiness=0
# sed -i '/swap/s/^/&#/' /etc/fstab
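The sed command above prefixes every line of /etc/fstab that mentions swap with `#`, including lines that are already commented out. As a minimal sketch, the effect can be previewed without modifying the file by matching on the filesystem-type column instead. The function name `comment_swap_entries` is an assumption made for illustration and is not part of the original procedure.

```shell
#!/bin/sh
# Hypothetical helper: print an fstab-style file with active swap entries
# commented out. Lines that are already comments pass through unchanged.
comment_swap_entries() {
    awk '
        /^[ \t]*#/   { print; next }          # already a comment
        $3 == "swap" { print "#" $0; next }   # field 3 is the filesystem type
                     { print }
    ' "$1"
}

# Preview only; nothing is written back to the file:
# comment_swap_entries /etc/fstab
```

Comparing the preview with the original file shows exactly which entries would be disabled before the in-place edit is run.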

【Configure the yum repositories】

# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo
# yum install -y yum-utils device-mapper-persistent-data lvm2
# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

【Synchronize time across the cluster nodes】

# rpm -ivh http://mirrors.wlnmp.com/centos/wlnmp-release-centos.noarch.rpm
# yum install -y ntpdate
# echo 'Asia/Shanghai' > /etc/timezone
# ntpdate time2.aliyun.com

Schedule a cron job that resynchronizes the clock every minute:

# crontab -l

*/1 * * * * ntpdate time2.aliyun.com

【Set up passwordless SSH】

# ssh-keygen -t rsa

# for i in k8s-master01 k8s-master02 k8s-master03 k8s-node01 k8s-node02;do ssh-copy-id -i .ssh/id_rsa.pub $i ;done

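# Download the installation files on Master01

# Link: https://pan.baidu.com/s/1zdh46AnHrk4NabaPClwn8A  Password: 1u7n  # download the certificate files and the YAML files that the k8s cluster depends on from the network drive

#【Install ipvsadm】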

yum install ipvsadm ipset sysstat conntrack libseccomp -y

# cat /etc/modules-load.d/ipvs.conf
ip_vs
ip_vs_lc
ip_vs_wlc
ip_vs_rr
ip_vs_wrr
ip_vs_lblc
ip_vs_lblcr
ip_vs_dh
ip_vs_sh
ip_vs_fo
ip_vs_nq
ip_vs_sed
ip_vs_ftp
nf_conntrack
ip_tables
ip_set
xt_set
ipt_set
ipt_rpfilter
ipt_REJECT
ipip

#systemctl enable --now systemd-modules-load.service

# Configure kernel parameters on all nodes

cat <<EOF > /etc/sysctl.d/k8s.conf
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
fs.may_detach_mounts = 1
vm.overcommit_memory=1
vm.panic_on_oom=0
fs.inotify.max_user_watches=89100
fs.file-max=52706963
fs.nr_open=52706963
net.netfilter.nf_conntrack_max=2310720
net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_probes = 3
net.ipv4.tcp_keepalive_intvl =15
net.ipv4.tcp_max_tw_buckets = 36000
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_max_orphans = 327680
net.ipv4.tcp_orphan_retries = 3
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 16384
net.ipv4.ip_conntrack_max = 65536
net.ipv4.tcp_timestamps = 0
net.core.somaxconn = 16384
EOF

# sysctl --system
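A long sysctl file is easy to get wrong by assigning the same key twice; because the settings are applied in file order, only the later assignment takes effect. The following is a minimal sketch for spotting such repeats. The function name `duplicate_sysctl_keys` is hypothetical and not part of the original procedure.

```shell
#!/bin/sh
# Hypothetical helper: list keys assigned more than once in a sysctl-style file.
duplicate_sysctl_keys() {
    awk -F= '
        /^[ \t]*(#|;|$)/ { next }            # skip comments and blank lines
        {
            key = $1
            gsub(/[ \t]/, "", key)           # "a.b = 1" and "a.b=1" name the same key
            if (++seen[key] == 2) print key  # report each duplicated key once
        }
    ' "$1"
}

# Example: duplicate_sysctl_keys /etc/sysctl.d/k8s.conf
```

An empty result means every key is assigned exactly once.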

#reboot

#lsmod | grep --color=auto -e ip_vs -e nf_conntrack
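The lsmod check above only shows what is loaded; it does not say which of the configured modules are absent. As a sketch under stated assumptions, the helper below compares a module list against saved `lsmod` output. The name `missing_modules` and the use of a saved output file are illustrative choices. Modules compiled into the kernel never appear in `lsmod`, so a reported name is a hint to investigate rather than proof of a problem.

```shell
#!/bin/sh
# Hypothetical helper: print modules named in CONF that do not appear in
# LSMOD_OUT, a file holding the saved output of `lsmod`.
missing_modules() (
    conf=$1
    lsmod_out=$2
    while IFS= read -r mod; do
        case $mod in ''|'#'*) continue ;; esac   # skip blanks and comments
        # NR > 1 skips the "Module Size Used by" header line.
        awk -v m="$mod" 'NR > 1 && $1 == m { found = 1 } END { exit !found }' "$lsmod_out" \
            || printf '%s\n' "$mod"
    done < "$conf"
)

# Example:
# lsmod > /tmp/lsmod.txt
# missing_modules /etc/modules-load.d/ipvs.conf /tmp/lsmod.txt
```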

【Deploy Docker】

#yum install -y docker-ce-19.03.*

#systemctl start docker

#mkdir -p /etc/docker

# cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": [
    "https://registry.docker-cn.com",
    "http://hub-mirror.c.163.com",
    "https://docker.mirrors.ustc.edu.cn"
  ],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

# systemctl daemon-reload && systemctl enable docker && systemctl restart docker

【Deploy the Kubernetes components and etcd】

[root@k8s-master01 ~]#wget https://github.com/etcd-io/etcd/releases/download/v3.4.12/etcd-v3.4.12-linux-amd64.tar.gz

[root@k8s-master01 ~]#tar -zxvf etcd-v3.4.12-linux-amd64.tar.gz --strip-components=1 -C /usr/local/bin etcd-v3.4.12-linux-amd64/etcd{,ctl} # extract the etcd and etcdctl binaries into /usr/local/bin

[root@k8s-master01 ~]#wget https://dl.k8s.io/v1.20.0/kubernetes-server-linux-amd64.tar.gz

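# Extract the Kubernetes binaries (kubelet, kubectl, kube-apiserver, kube-controller-manager, kube-scheduler, kube-proxy) into /usr/local/bin. --strip-components=3 removes the three leading directory levels (kubernetes/server/bin), so only the binaries themselves are written to /usr/local/bin.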

[root@k8s-master01 ~]#tar -xf kubernetes-server-linux-amd64.tar.gz --strip-components=3 -C /usr/local/bin kubernetes/server/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy}
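The effect of `--strip-components` is easiest to see on a throwaway archive. The sketch below builds a small tarball that mimics the `kubernetes/server/bin` layout with empty placeholder files, then extracts one member with three leading path components removed. Everything lives in a temporary directory, and the function name `strip_demo` is an assumption for illustration.

```shell
#!/bin/sh
# Demonstrate --strip-components on a temporary archive.
strip_demo() (
    work=$(mktemp -d) || exit 1
    mkdir -p "$work/src/kubernetes/server/bin" "$work/dest"
    : > "$work/src/kubernetes/server/bin/kubelet"   # empty placeholder files
    : > "$work/src/kubernetes/server/bin/kubectl"
    tar -C "$work/src" -czf "$work/demo.tar.gz" kubernetes
    # Extract only the named member and drop kubernetes/server/bin from its path.
    tar -xzf "$work/demo.tar.gz" --strip-components=3 -C "$work/dest" \
        kubernetes/server/bin/kubelet
    ls "$work/dest"
    rm -rf "$work"
)

strip_demo
```

Only `kubelet` ends up in the destination directory, directly at its top level, because `kubectl` was not named on the command line.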

# Define a variable listing the other two master nodes, then copy the binaries extracted on master01 to them

[root@k8s-master01 ~]#MasterNodes='k8s-master02 k8s-master03'

[root@k8s-master01 ~]#for NODE in $MasterNodes; do echo $NODE; scp /usr/local/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy} $NODE:/usr/local/bin/; scp /usr/local/bin/etcd* $NODE:/usr/local/bin/; done

# The worker nodes only need the kubelet and kube-proxy binaries from master01

[root@k8s-master01 ~]#WorkNodes='k8s-node01 k8s-node02'

[root@k8s-master01 ~]#for NODE in $WorkNodes;do scp /usr/local/bin/kube{let,-proxy} $NODE:/usr/local/bin/ ; done

【Generate certificates】

Create the /opt/cni/bin directory on all nodes:

# mkdir -p /opt/cni/bin

Install the cfssl certificate tools:

# wget "https://pkg.cfssl.org/R1.2/cfssl_linux-amd64" -O /usr/local/bin/cfssl
# wget "https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64" -O /usr/local/bin/cfssljson
# chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson

Create the etcd certificate directory on all master nodes:

# mkdir /etc/etcd/ssl -p

Create the Kubernetes PKI certificate directory on all cluster nodes:

# mkdir -p /etc/kubernetes/pki

Ps: https://pan.baidu.com/s/1zdh46AnHrk4NabaPClwn8A Password: 1u7n # the k8s-ha-install.tar.gz archive downloaded from the network drive

[root@k8s-master01 ~]# tar xvf k8s-ha-install.tar.gz --strip-components=1
[root@k8s-master01 ~]# cd k8s-ha-install/
[root@k8s-master01 k8s-ha-install]# ls /root/k8s-ha-install/pki/
admin-csr.json      ca-config.json  etcd-ca-csr.json  front-proxy-ca-csr.json      kubelet-csr.json     manager-csr.json
apiserver-csr.json  ca-csr.json     etcd-csr.json     front-proxy-client-csr.json  kube-proxy-csr.json  scheduler-csr.json

# Generate the etcd certificates on Master01. The *-csr.json files are certificate signing request definitions; they carry fields such as the domain names, company, and organizational unit.

[root@k8s-master01 pki]# cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare /etc/etcd/ssl/etcd-ca

[root@k8s-master01 pki]# ls /etc/etcd/ssl/

etcd-ca.csr etcd-ca-key.pem etcd-ca.pem

[root@k8s-master01 pki]# cfssl gencert \
    -ca=/etc/etcd/ssl/etcd-ca.pem \
    -ca-key=/etc/etcd/ssl/etcd-ca-key.pem \
    -config=ca-config.json \
    -hostname=127.0.0.1,k8s-master01,k8s-master02,k8s-master03,192.168.60.101,192.168.60.102,192.168.60.103 \
    -profile=kubernetes \
    etcd-csr.json | cfssljson -bare /etc/etcd/ssl/etcd

# Copy the etcd certificate files to the other master nodes

#MasterNodes='k8s-master02 k8s-master03'

#WorkNodes='k8s-node01 k8s-node02'

[root@k8s-master01 pki]# for NODE in $MasterNodes;do ssh $NODE "mkdir -p /etc/etcd/ssl";for FILE in etcd-ca-key.pem etcd-ca.pem etcd-key.pem etcd.pem; do scp /etc/etcd/ssl/${FILE} $NODE:/etc/etcd/ssl/${FILE};done; done

【Certificates for the k8s components】

# Generate the Kubernetes certificates on Master01

[root@k8s-master01 ~]# cd /root/k8s-ha-install/pki/

[root@k8s-master01 pki]# cfssl gencert -initca ca-csr.json | cfssljson -bare /etc/kubernetes/pki/ca

# 10.96.0.1 is the first address of the k8s Service network; if you change the Service network, change 10.96.0.1 to match. 192.168.60.236 is the VIP; if this is not a high-availability cluster, use the IP of master01 in its place.

[root@k8s-master01 pki]# cfssl gencert \
    -ca=/etc/kubernetes/pki/ca.pem \
    -ca-key=/etc/kubernetes/pki/ca-key.pem \
    -config=ca-config.json \
    -hostname=10.96.0.1,192.168.60.236,127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,192.168.60.101,192.168.60.102,192.168.60.103 \
    -profile=kubernetes \
    apiserver-csr.json | cfssljson -bare /etc/kubernetes/pki/apiserver
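Every address that clients use to reach the apiserver has to appear in the `-hostname` list, and a single mistyped octet in a long comma-separated list is easy to miss. The sketch below checks a list against the addresses you expect before the certificate is generated. The function name `missing_sans` is hypothetical, and the sample list with one wrong address is made up for the demonstration.

```shell
#!/bin/sh
# Hypothetical helper: print each expected name that is absent from a
# comma-separated -hostname list.
# Usage: missing_sans "<comma,separated,list>" expected1 expected2 ...
missing_sans() (
    list=",$1,"      # pad with commas so every entry is delimited on both sides
    shift
    for want in "$@"; do
        case $list in
            *",$want,"*) ;;                   # present
            *) printf '%s\n' "$want" ;;       # absent
        esac
    done
)

# Sample list in which the third node address is mistyped.
hostnames="10.96.0.1,127.0.0.1,192.168.60.101,192.168.60.102,192.168.0.103"
missing_sans "$hostnames" 192.168.60.101 192.168.60.102 192.168.60.103
```

Matching on whole comma-delimited entries avoids false positives such as `192.168.60.10` matching inside `192.168.60.101`.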

# Generate the apiserver aggregation (front-proxy) certificates

[root@k8s-master01 pki]# cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-ca

[root@k8s-master01 pki]# cfssl gencert -ca=/etc/kubernetes/pki/front-proxy-ca.pem -ca-key=/etc/kubernetes/pki/front-proxy-ca-key.pem -config=ca-config.json -profile=kubernetes front-proxy-client-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-client

# Generate the controller-manager client certificate

[root@k8s-master01 pki]# cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -profile=kubernetes manager-csr.json | cfssljson -bare /etc/kubernetes/pki/controller-manager

# Set a cluster entry (a kubeconfig file can hold entries for several clusters)

[root@k8s-master01 pki]# kubectl config set-cluster kubernetes \
    --certificate-authority=/etc/kubernetes/pki/ca.pem \
    --embed-certs=true \
    --server=https://192.168.60.236:8443 \
    --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig
Cluster "kubernetes" set.

# Set a user entry

[root@k8s-master01 pki]# kubectl config set-credentials system:kube-controller-manager \
    --client-certificate=/etc/kubernetes/pki/controller-manager.pem \
    --client-key=/etc/kubernetes/pki/controller-manager-key.pem \
    --embed-certs=true \
    --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig
User "system:kube-controller-manager" set.

# Set a context entry that connects to the kubernetes cluster as the system:kube-controller-manager user

[root@k8s-master01 pki]# kubectl config set-context system:kube-controller-manager@kubernetes \
    --cluster=kubernetes \
    --user=system:kube-controller-manager \
    --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig
Context "system:kube-controller-manager@kubernetes" created.

# Make that context the default

[root@k8s-master01 pki]# kubectl config use-context system:kube-controller-manager@kubernetes \
    --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig
Switched to context "system:kube-controller-manager@kubernetes".

# Generate the scheduler certificate

[root@k8s-master01 pki]# cfssl gencert \
    -ca=/etc/kubernetes/pki/ca.pem \
    -ca-key=/etc/kubernetes/pki/ca-key.pem \
    -config=ca-config.json \
    -profile=kubernetes \
    scheduler-csr.json | cfssljson -bare /etc/kubernetes/pki/scheduler
2020/12/21 14:59:32 [INFO] generate received request
2020/12/21 14:59:32 [INFO] received CSR
2020/12/21 14:59:32 [INFO] generating key: rsa-2048
2020/12/21 14:59:32 [INFO] encoded CSR
2020/12/21 14:59:32 [INFO] signed certificate with serial number 212482711725599124851040824209737958939315938395
2020/12/21 14:59:32 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
websites. For more information see the Baseline Requirements for the Issuance and Management
of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
specifically, section 10.2.3 ("Information Requirements").

[root@k8s-master01 pki]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.60.236:8443 --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Cluster "kubernetes" set.

[root@k8s-master01 pki]# kubectl config set-credentials system:kube-scheduler --client-certificate=/etc/kubernetes/pki/scheduler.pem --client-key=/etc/kubernetes/pki/scheduler-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

User "system:kube-scheduler" set.

[root@k8s-master01 pki]# kubectl config set-context system:kube-scheduler@kubernetes --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Context "system:kube-scheduler@kubernetes" created.

[root@k8s-master01 pki]# kubectl config use-context system:kube-scheduler@kubernetes --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Switched to context "system:kube-scheduler@kubernetes".

# Generate the admin certificate, which is used to administer the Kubernetes cluster

[root@k8s-master01 pki]# cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare /etc/kubernetes/pki/admin
2020/12/21 15:03:35 [INFO] generate received request
2020/12/21 15:03:35 [INFO] received CSR
2020/12/21 15:03:35 [INFO] generating key: rsa-2048
2020/12/21 15:03:35 [INFO] encoded CSR
2020/12/21 15:03:35 [INFO] signed certificate with serial number 335202371700726560784659537380501229533603198548
2020/12/21 15:03:35 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
websites. For more information see the Baseline Requirements for the Issuance and Management
of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
specifically, section 10.2.3 ("Information Requirements").

[root@k8s-master01 pki]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.60.236:8443 --kubeconfig=/etc/kubernetes/admin.kubeconfig

Cluster "kubernetes" set.

[root@k8s-master01 pki]# kubectl config set-credentials kubernetes-admin --client-certificate=/etc/kubernetes/pki/admin.pem --client-key=/etc/kubernetes/pki/admin-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/admin.kubeconfig

User "kubernetes-admin" set.

[root@k8s-master01 pki]# kubectl config set-context kubernetes-admin@kubernetes --cluster=kubernetes --user=kubernetes-admin --kubeconfig=/etc/kubernetes/admin.kubeconfig

Context "kubernetes-admin@kubernetes" created.

[root@k8s-master01 pki]# kubectl config use-context kubernetes-admin@kubernetes --kubeconfig=/etc/kubernetes/admin.kubeconfig

Switched to context "kubernetes-admin@kubernetes".
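The controller-manager, scheduler, and admin kubeconfig files are all built with the same four `kubectl config` steps; only the user name, certificate base name, and output file change. The sketch below makes that pattern explicit by printing the four commands for one component instead of running them. The function name `kubeconfig_commands` and its argument order are assumptions for illustration; the flags are the ones used in the commands above.

```shell
#!/bin/sh
# Hypothetical helper: print the four kubectl commands for one component.
# Arguments: USER CERT_BASENAME KUBECONFIG [SERVER]
kubeconfig_commands() (
    user=$1
    cert=/etc/kubernetes/pki/$2
    kubeconfig=$3
    server=${4:-https://192.168.60.236:8443}   # VIP and port used in this guide
    printf 'kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=%s --kubeconfig=%s\n' \
        "$server" "$kubeconfig"
    printf 'kubectl config set-credentials %s --client-certificate=%s.pem --client-key=%s-key.pem --embed-certs=true --kubeconfig=%s\n' \
        "$user" "$cert" "$cert" "$kubeconfig"
    printf 'kubectl config set-context %s@kubernetes --cluster=kubernetes --user=%s --kubeconfig=%s\n' \
        "$user" "$user" "$kubeconfig"
    printf 'kubectl config use-context %s@kubernetes --kubeconfig=%s\n' \
        "$user" "$kubeconfig"
)

# Print the commands for the admin kubeconfig.
kubeconfig_commands kubernetes-admin admin /etc/kubernetes/admin.kubeconfig
```

Printing rather than executing keeps the helper safe to run anywhere; once the output has been reviewed it can be piped to `sh` on a host that has kubectl installed.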

# Create the ServiceAccount key pair. Each ServiceAccount gets a Secret bound to it, and that Secret carries a token; the key generated here is the one the apiserver uses to sign those tokens.

[root@k8s-master01 pki]# openssl genrsa -out /etc/kubernetes/pki/sa.key 2048
Generating RSA private key, 2048 bit long modulus
.................................................................................................................................+++
......................................+++
e is 65537 (0x10001)

[root@k8s-master01 pki]# openssl rsa -in /etc/kubernetes/pki/sa.key -pubout -out /etc/kubernetes/pki/sa.pub

writing RSA key

# Copy the generated Kubernetes certificates to the other master nodes

[root@k8s-master01 pki]# for NODE in k8s-master02 k8s-master03; do for FILE in $(ls /etc/kubernetes/pki/ | grep -v etcd); do scp /etc/kubernetes/pki/${FILE} $NODE:/etc/kubernetes/pki/${FILE}; done; for FILE in admin.kubeconfig controller-manager.kubeconfig scheduler.kubeconfig; do scp /etc/kubernetes/${FILE} $NODE:/etc/kubernetes/${FILE}; done; done

【Configure the Kubernetes system components】

#【etcd configuration】

k8s-master01 configuration

[root@k8s-master01 ~]# vim /etc/etcd/etcd.config.yml

name: 'k8s-master01'
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://192.168.60.101:2380'
listen-client-urls: 'https://192.168.60.101:2379,http://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://192.168.60.101:2380'
advertise-client-urls: 'https://192.168.60.101:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-srv:
initial-cluster: 'k8s-master01=https://192.168.60.101:2380,k8s-master02=https://192.168.60.102:2380,k8s-master03=https://192.168.60.103:2380'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1000
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
debug: false
log-package-levels:
log-outputs: [default]
force-new-cluster: false

# Create the etcd service file on master01 so that etcd starts at boot

[root@k8s-master01 ~]# vim /usr/lib/systemd/system/etcd.service

[Unit]
Description=Etcd Service
Documentation=https://coreos.com/etcd/docs/latest/
After=network.target
[Service]
Type=notify
ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml
Restart=on-failure
RestartSec=10
LimitNOFILE=65536
[Install]
WantedBy=multi-user.target
Alias=etcd1.service

[root@k8s-master01 ~]#mkdir /etc/kubernetes/pki/etcd

[root@k8s-master01 ~]#ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/

[root@k8s-master01 ~]#systemctl daemon-reload

[root@k8s-master01 ~]#systemctl enable --now etcd

# k8s-master02 configuration

[root@k8s-master02 ~]# vim /etc/etcd/etcd.config.yml

name: 'k8s-master02'
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://192.168.60.102:2380'
listen-client-urls: 'https://192.168.60.102:2379,http://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://192.168.60.102:2380'
advertise-client-urls: 'https://192.168.60.102:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-srv:
initial-cluster: 'k8s-master01=https://192.168.60.101:2380,k8s-master02=https://192.168.60.102:2380,k8s-master03=https://192.168.60.103:2380'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1000
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
debug: false
log-package-levels:
log-outputs: [default]
force-new-cluster: false

[root@k8s-master02 ~]# vim /usr/lib/systemd/system/etcd.service

[Unit]
Description=Etcd Service
Documentation=https://coreos.com/etcd/docs/latest/
After=network.target
[Service]
Type=notify
ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml
Restart=on-failure
RestartSec=10
LimitNOFILE=65536
[Install]
WantedBy=multi-user.target
Alias=etcd2.service

[root@k8s-master02 ~]#mkdir /etc/kubernetes/pki/etcd

[root@k8s-master02 ~]#ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/

[root@k8s-master02 ~]#systemctl daemon-reload

[root@k8s-master02 ~]#systemctl enable --now etcd

# k8s-master03 configuration

[root@k8s-master03 ~]# vim /etc/etcd/etcd.config.yml # edit the configuration file

name: 'k8s-master03'
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://192.168.60.103:2380'
listen-client-urls: 'https://192.168.60.103:2379,http://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://192.168.60.103:2380'
advertise-client-urls: 'https://192.168.60.103:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-srv:
initial-cluster: 'k8s-master01=https://192.168.60.101:2380,k8s-master02=https://192.168.60.102:2380,k8s-master03=https://192.168.60.103:2380'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1000
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
debug: false
log-package-levels:
log-outputs: [default]
force-new-cluster: false

[root@k8s-master03 ~]# vim /usr/lib/systemd/system/etcd.service

[Unit]
Description=Etcd Service
Documentation=https://coreos.com/etcd/docs/latest/
After=network.target
[Service]
Type=notify
ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml
Restart=on-failure
RestartSec=10
LimitNOFILE=65536
[Install]
WantedBy=multi-user.target
Alias=etcd3.service

[root@k8s-master03 ~]#mkdir /etc/kubernetes/pki/etcd

[root@k8s-master03 ~]#ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/

[root@k8s-master03 ~]#systemctl daemon-reload

[root@k8s-master03 ~]#systemctl enable --now etcd
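The three etcd configuration files differ only in `name` and in the node's own IP inside the listen and advertise URLs, while `initial-cluster` must be identical on every member. One way to avoid copy-paste drift is to build that value from a single list of nodes, as in the sketch below. The function name `initial_cluster` is hypothetical; the names, addresses, and peer port 2380 are the ones used in this guide.

```shell
#!/bin/sh
# Hypothetical helper: build the etcd initial-cluster value from NAME=IP pairs.
initial_cluster() (
    result=
    for pair in "$@"; do
        name=${pair%%=*}    # text before the first '='
        ip=${pair#*=}       # text after the first '='
        result="${result:+$result,}$name=https://$ip:2380"
    done
    printf '%s\n' "$result"
)

initial_cluster k8s-master01=192.168.60.101 \
                k8s-master02=192.168.60.102 \
                k8s-master03=192.168.60.103
```

The printed string can be pasted into `initial-cluster` in each node's file, so all members are guaranteed to agree.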

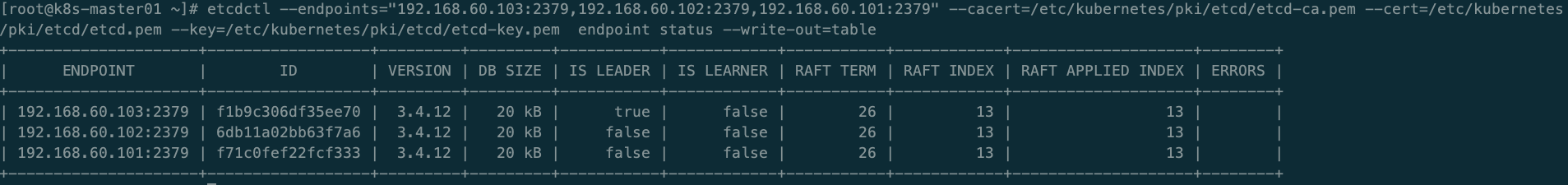

# Verify that the etcd cluster is running correctly

[root@k8s-master01 ~]# export ETCDCTL_API=3 # switch etcdctl to API version 3

[root@k8s-master01 ~]# etcdctl --endpoints="192.168.60.103:2379,192.168.60.102:2379,192.168.60.101:2379" --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem --cert=/etc/kubernetes/pki/etcd/etcd.pem --key=/etc/kubernetes/pki/etcd/etcd-key.pem endpoint status --write-out=table

The etcd cluster is running, and 192.168.60.103 is the current leader.

【Configure high availability】

The three k8s-master nodes are made highly available with haproxy plus keepalived.

Ps: install keepalived and haproxy on all master nodes

#yum install -y keepalived haproxy

# vim /etc/haproxy/haproxy.cfg

global
  maxconn 2000
  ulimit-n 16384
  log 127.0.0.1 local0 err
  stats timeout 30s
defaults
  log global
  mode http
  option httplog
  timeout connect 5000
  timeout client 50000
  timeout server 50000
  timeout http-request 15s
  timeout http-keep-alive 15s
frontend k8s-master
  bind 0.0.0.0:8443
  bind 127.0.0.1:8443
  mode tcp
  option tcplog
  tcp-request inspect-delay 5s
  default_backend k8s-master
backend k8s-master
  mode tcp
  option tcplog
  option tcp-check
  balance roundrobin
  default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
  server k8s-master01 192.168.60.101:6443 check
  server k8s-master02 192.168.60.102:6443 check
  server k8s-master03 192.168.60.103:6443 check

# systemctl enable --now haproxy # enable and start haproxy on every master node

# Edit the keepalived configuration file on the three k8s-master nodes

[root@k8s-master01 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
}
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state MASTER
    interface eth0
    mcast_src_ip 192.168.60.101
    virtual_router_id 51
    priority 101
    nopreempt
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        192.168.60.236
    }
    track_script {
        chk_apiserver
    }
}

[root@k8s-master02 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
}
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    mcast_src_ip 192.168.60.102
    virtual_router_id 51
    priority 100
    nopreempt
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        192.168.60.236
    }
    track_script {
        chk_apiserver
    }
}

[root@k8s-master03 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
}
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    mcast_src_ip 192.168.60.103
    virtual_router_id 51
    priority 100
    nopreempt
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        192.168.60.236
    }
    track_script {
        chk_apiserver
    }
}

Ps: write the health-check script on every k8s-master node

# vim /etc/keepalived/check_apiserver.sh

#!/bin/bash
err=0
for k in $(seq 1 3)
do
    check_code=$(pgrep haproxy)
    if [[ $check_code == "" ]]; then
        err=$(expr $err + 1)
        sleep 1
        continue
    else
        err=0
        break
    fi
done
if [[ $err != "0" ]]; then
    echo "systemctl stop keepalived"
    /usr/bin/systemctl stop keepalived
    exit 1
else
    exit 0
fi

#chmod +x /etc/keepalived/check_apiserver.sh

#systemctl enable --now keepalived
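The health-check script is a retry loop: it looks for a haproxy process up to three times, one second apart, and stops keepalived only if every attempt fails, which lets the VIP move to another master. As a minimal sketch, the same retry logic can be expressed as a reusable function. The name `retry_check` and its argument order are assumptions for illustration, not part of the original script.

```shell
#!/bin/sh
# Hypothetical helper: run CMD up to ATTEMPTS times, sleeping DELAY seconds
# between tries. Exit status 0 as soon as CMD succeeds, 1 if every try fails.
# Usage: retry_check ATTEMPTS DELAY CMD [ARGS...]
retry_check() (
    attempts=$1
    delay=$2
    shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        if "$@" >/dev/null 2>&1; then
            exit 0
        fi
        i=$((i + 1))
        if [ "$i" -le "$attempts" ]; then
            sleep "$delay"      # no pause after the final failed attempt
        fi
    done
    exit 1
)

# Equivalent of the loop in check_apiserver.sh:
# retry_check 3 1 pgrep haproxy || /usr/bin/systemctl stop keepalived
```

Retrying before acting keeps a brief haproxy restart from triggering an unnecessary failover.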

To verify that the high-availability setup works, test the VIP from any node with ping or telnet.

【Kubernetes components】

# mkdir -p /etc/kubernetes/manifests/ /etc/systemd/system/kubelet.service.d /var/lib/kubelet /var/log/kubernetes # create these directories on all nodes for the later deployment steps

【Deploy the kube-apiserver component】

# Master01 configuration

[root@k8s-master01 ~]# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target
[Service]
ExecStart=/usr/local/bin/kube-apiserver \
      --v=2 \
      --logtostderr=true \
      --allow-privileged=true \
      --bind-address=0.0.0.0 \
      --secure-port=6443 \
      --insecure-port=0 \
      --advertise-address=192.168.60.101 \
      --service-cluster-ip-range=10.96.0.0/12 \
      --service-node-port-range=30000-32767 \
      --etcd-servers=https://192.168.60.101:2379,https://192.168.60.102:2379,https://192.168.60.103:2379 \
      --etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \
      --etcd-certfile=/etc/etcd/ssl/etcd.pem \
      --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
      --client-ca-file=/etc/kubernetes/pki/ca.pem \
      --tls-cert-file=/etc/kubernetes/pki/apiserver.pem \
      --tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \
      --kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \
      --kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \
      --service-account-key-file=/etc/kubernetes/pki/sa.pub \
      --service-account-signing-key-file=/etc/kubernetes/pki/sa.key \
      --service-account-issuer=https://kubernetes.default.svc.cluster.local \
      --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
      --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \
      --authorization-mode=Node,RBAC \
      --enable-bootstrap-token-auth=true \
      --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
      --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \
      --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \
      --requestheader-allowed-names=aggregator \
      --requestheader-group-headers=X-Remote-Group \
      --requestheader-extra-headers-prefix=X-Remote-Extra- \
      --requestheader-username-headers=X-Remote-User
# --token-auth-file=/etc/kubernetes/token.csv
Restart=on-failure
RestartSec=10s
LimitNOFILE=65535
[Install]
WantedBy=multi-user.target

[root@k8s-master01 ~]# systemctl enable --now kube-apiserver

#Master02

[root@k8s-master02 ~]# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target
[Service]
ExecStart=/usr/local/bin/kube-apiserver \
      --v=2 \
      --logtostderr=true \
      --allow-privileged=true \
      --bind-address=0.0.0.0 \
      --secure-port=6443 \
      --insecure-port=0 \
      --advertise-address=192.168.60.102 \
      --service-cluster-ip-range=10.96.0.0/12 \
      --service-node-port-range=30000-32767 \
      --etcd-servers=https://192.168.60.101:2379,https://192.168.60.102:2379,https://192.168.60.103:2379 \
      --etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \
      --etcd-certfile=/etc/etcd/ssl/etcd.pem \
      --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
      --client-ca-file=/etc/kubernetes/pki/ca.pem \
      --tls-cert-file=/etc/kubernetes/pki/apiserver.pem \
      --tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \
      --kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \
      --kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \
      --service-account-key-file=/etc/kubernetes/pki/sa.pub \
      --service-account-signing-key-file=/etc/kubernetes/pki/sa.key \
      --service-account-issuer=https://kubernetes.default.svc.cluster.local \
      --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
      --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \
      --authorization-mode=Node,RBAC \
      --enable-bootstrap-token-auth=true \
      --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
      --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \
      --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \
      --requestheader-allowed-names=aggregator \
      --requestheader-group-headers=X-Remote-Group \
      --requestheader-extra-headers-prefix=X-Remote-Extra- \
      --requestheader-username-headers=X-Remote-User
# --token-auth-file=/etc/kubernetes/token.csv
Restart=on-failure
RestartSec=10s
LimitNOFILE=65535
[Install]
WantedBy=multi-user.target

[root@k8s-master02 ~]# systemctl enable --now kube-apiserver

#Master03

[root@k8s-master03 ~]# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target
[Service]
ExecStart=/usr/local/bin/kube-apiserver \
      --v=2 \
      --logtostderr=true \
      --allow-privileged=true \
      --bind-address=0.0.0.0 \
      --secure-port=6443 \
      --insecure-port=0 \
      --advertise-address=192.168.60.103 \
      --service-cluster-ip-range=10.96.0.0/12 \
      --service-node-port-range=30000-32767 \
      --etcd-servers=https://192.168.60.101:2379,https://192.168.60.102:2379,https://192.168.60.103:2379 \
      --etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \
      --etcd-certfile=/etc/etcd/ssl/etcd.pem \
      --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
      --client-ca-file=/etc/kubernetes/pki/ca.pem \
      --tls-cert-file=/etc/kubernetes/pki/apiserver.pem \
      --tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \
      --kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \
      --kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \
      --service-account-key-file=/etc/kubernetes/pki/sa.pub \
      --service-account-signing-key-file=/etc/kubernetes/pki/sa.key \
      --service-account-issuer=https://kubernetes.default.svc.cluster.local \
      --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
      --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \
      --authorization-mode=Node,RBAC \
      --enable-bootstrap-token-auth=true \
      --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
      --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \
      --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \
      --requestheader-allowed-names=aggregator \
      --requestheader-group-headers=X-Remote-Group \
      --requestheader-extra-headers-prefix=X-Remote-Extra- \
      --requestheader-username-headers=X-Remote-User
# --token-auth-file=/etc/kubernetes/token.csv
Restart=on-failure
RestartSec=10s
LimitNOFILE=65535
[Install]
WantedBy=multi-user.target

[root@k8s-master03 ~]# systemctl enable --now kube-apiserver

【kube-controller-manager】

Configure the kube-controller-manager service on all master nodes

# vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes
After=network.target
[Service]
ExecStart=/usr/local/bin/kube-controller-manager \
      --v=2 \
      --logtostderr=true \
      --address=127.0.0.1 \
      --root-ca-file=/etc/kubernetes/pki/ca.pem \
      --cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \
      --cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \
      --service-account-private-key-file=/etc/kubernetes/pki/sa.key \
      --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \
      --leader-elect=true \
      --use-service-account-credentials=true \
      --node-monitor-grace-period=40s \
      --node-monitor-period=5s \
      --pod-eviction-timeout=2m0s \
      --controllers=*,bootstrapsigner,tokencleaner \
      --allocate-node-cidrs=true \
      --cluster-cidr=172.16.0.0/12 \
      --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
      --node-cidr-mask-size=24
Restart=always
RestartSec=10s
[Install]
WantedBy=multi-user.target

#systemctl daemon-reload

#systemctl enable --now kube-controller-manager

【Deploy the scheduler component】

# vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes
After=network.target
[Service]
ExecStart=/usr/local/bin/kube-scheduler \
      --v=2 \
      --logtostderr=true \
      --address=127.0.0.1 \
      --leader-elect=true \
      --kubeconfig=/etc/kubernetes/scheduler.kubeconfig
Restart=always
RestartSec=10s
[Install]
WantedBy=multi-user.target

# systemctl daemon-reload

# systemctl enable --now kube-scheduler

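【TLS Bootstrapping configuration】

In a Kubernetes cluster, the kubelet and kube-proxy components on the worker nodes have to communicate with the master (kube-apiserver). To keep that communication private and free from tampering, and to make sure each component is talking to another trusted component, client TLS certificates are used here.

The bootstrap kubeconfig only needs to be created on Master01; the worker nodes use it to obtain their TLS certificates.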

[root@k8s-master01 ~]# cd /root/k8s-ha-install/bootstrap/

[root@k8s-master01 bootstrap]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.60.236:8443 --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

Cluster "kubernetes" set.

[root@k8s-master01 bootstrap]# kubectl config set-credentials tls-bootstrap-token-user --token=c8ad9c.2e4d610cf3e7426e --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

User "tls-bootstrap-token-user" set.

[root@k8s-master01 bootstrap]# kubectl config set-context tls-bootstrap-token-user@kubernetes --cluster=kubernetes --user=tls-bootstrap-token-user --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

Context "tls-bootstrap-token-user@kubernetes" modified.

[root@k8s-master01 bootstrap]# kubectl config use-context tls-bootstrap-token-user@kubernetes --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

Switched to context "tls-bootstrap-token-user@kubernetes".

[root@k8s-master01 ~]# mkdir -p /root/.kube ; cp /etc/kubernetes/admin.kubeconfig /root/.kube/config

[root@k8s-master01 bootstrap]# kubectl create -f bootstrap.secret.yaml # create the bootstrap Secret from the YAML file
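A bootstrap token has the form `<token-id>.<token-secret>`: six lowercase alphanumeric characters, a dot, then sixteen more. The token passed to `set-credentials` must match the `token-id` and `token-secret` stored in the bootstrap Secret; the contents of bootstrap.secret.yaml are not shown here, so check that file yourself. The sketch below validates the format and splits a token into its two halves for that comparison. The function name `split_bootstrap_token` is an assumption for illustration.

```shell
#!/bin/sh
# Hypothetical helper: validate a bootstrap token and print its two parts.
split_bootstrap_token() (
    token=$1
    if printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
        # ${token%%.*} is the part before the dot, ${token#*.} the part after it.
        printf 'token-id=%s\ntoken-secret=%s\n' "${token%%.*}" "${token#*.}"
    else
        echo "invalid bootstrap token: $token" >&2
        exit 1
    fi
)

split_bootstrap_token c8ad9c.2e4d610cf3e7426e
```

If the two printed values do not match the Secret, kubelets presenting this token will be rejected by the apiserver.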

【Node节点配置】

复制证书,将在kubernetes的生成的证书复制到其他master节点和node节点上

[root@k8s-master01 ~]# cd /etc/kubernetes/

[root@k8s-master01 kubernetes]# for NODE in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do ssh $NODE mkdir -p /etc/kubernetes/pki /etc/etcd/ssl /etc/etcd/ssl; for FILE in etcd-ca.pem etcd.pem etcd-key.pem; do scp /etc/etcd/ssl/$FILE $NODE:/etc/etcd/ssl/; done; for FILE in pki/ca.pem pki/ca-key.pem pki/front-proxy-ca.pem bootstrap-kubelet.kubeconfig; do scp /etc/kubernetes/$FILE $NODE:/etc/kubernetes/${FILE}; done; done

kubelet.service configuration

# vim /usr/lib/systemd/system/kubelet.service

[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
After=docker.service
Requires=docker.service

[Service]
ExecStart=/usr/local/bin/kubelet
Restart=always
StartLimitInterval=0
RestartSec=10

[Install]
WantedBy=multi-user.target

# vim /etc/systemd/system/kubelet.service.d/10-kubelet.conf

[root@k8s-node01 ~]# cat /etc/systemd/system/kubelet.service.d/10-kubelet.conf
[Service]
Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig --kubeconfig=/etc/kubernetes/kubelet.kubeconfig"
Environment="KUBELET_SYSTEM_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"
Environment="KUBELET_CONFIG_ARGS=--config=/etc/kubernetes/kubelet-conf.yml --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.2"
Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node='' "
ExecStart=
ExecStart=/usr/local/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_SYSTEM_ARGS $KUBELET_EXTRA_ARGS

# vim /etc/kubernetes/kubelet-conf.yml

apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
cgroupsPerQOS: true
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
containerLogMaxFiles: 5
containerLogMaxSize: 10Mi
contentType: application/vnd.kubernetes.protobuf
cpuCFSQuota: true
cpuManagerPolicy: none
cpuManagerReconcilePeriod: 10s
enableControllerAttachDetach: true
enableDebuggingHandlers: true
enforceNodeAllocatable:
- pods
eventBurst: 10
eventRecordQPS: 5
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionPressureTransitionPeriod: 5m0s
failSwapOn: true
fileCheckFrequency: 20s
hairpinMode: promiscuous-bridge
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 20s
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
imageMinimumGCAge: 2m0s
iptablesDropBit: 15
iptablesMasqueradeBit: 14
kubeAPIBurst: 10
kubeAPIQPS: 5
makeIPTablesUtilChains: true
maxOpenFiles: 1000000
maxPods: 110
nodeStatusUpdateFrequency: 10s
oomScoreAdj: -999
podPidsLimit: -1
registryBurst: 10
registryPullQPS: 5
resolvConf: /etc/resolv.conf
rotateCertificates: true
runtimeRequestTimeout: 2m0s
serializeImagePulls: true
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 4h0m0s
syncFrequency: 1m0s
volumeStatsAggPeriod: 1m0s

# systemctl daemon-reload

# systemctl enable --now kubelet

# Configure kube-proxy on all master and worker nodes

[root@k8s-master01 ~]# cd /root/k8s-ha-install/

[root@k8s-master01 k8s-ha-install]# kubectl -n kube-system create serviceaccount kube-proxy

serviceaccount/kube-proxy created

[root@k8s-master01 k8s-ha-install]# kubectl create clusterrolebinding system:kube-proxy --clusterrole system:node-proxier --serviceaccount kube-system:kube-proxy

clusterrolebinding.rbac.authorization.k8s.io/system:kube-proxy created

[root@k8s-master01 k8s-ha-install]# SECRET=$(kubectl -n kube-system get sa/kube-proxy --output=jsonpath='{.secrets[0].name}')

[root@k8s-master01 k8s-ha-install]# JWT_TOKEN=$(kubectl -n kube-system get secret/$SECRET --output=jsonpath='{.data.token}' | base64 -d)

[root@k8s-master01 k8s-ha-install]# PKI_DIR=/etc/kubernetes/pki

[root@k8s-master01 k8s-ha-install]# K8S_DIR=/etc/kubernetes

[root@k8s-master01 k8s-ha-install]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.60.236:8443 --kubeconfig=${K8S_DIR}/kube-proxy.kubeconfig

Cluster "kubernetes" set.

[root@k8s-master01 k8s-ha-install]# kubectl config set-credentials kubernetes --token=${JWT_TOKEN} --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

User "kubernetes" set.

[root@k8s-master01 k8s-ha-install]# kubectl config set-context kubernetes --cluster=kubernetes --user=kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

Context "kubernetes" created.

[root@k8s-master01 k8s-ha-install]# kubectl config use-context kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

[root@k8s-master01 k8s-ha-install]# vim kube-proxy/kube-proxy.conf

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
clientConnection:
  acceptContentTypes: ""
  burst: 10
  contentType: application/vnd.kubernetes.protobuf
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
  qps: 5
clusterCIDR: 172.16.0.0/12
configSyncPeriod: 15m0s
conntrack:
  max: null
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s
enableProfiling: false
healthzBindAddress: 0.0.0.0:10256
hostnameOverride: ""
iptables:
  masqueradeAll: false
  masqueradeBit: 14
  minSyncPeriod: 0s
  syncPeriod: 30s
ipvs:
  masqueradeAll: true
  minSyncPeriod: 5s
  scheduler: "rr"
  syncPeriod: 30s
kind: KubeProxyConfiguration
metricsBindAddress: 127.0.0.1:10249
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: -999
portRange: ""
udpIdleTimeout: 250ms

# On k8s-master01, copy the kube-proxy kubeconfig, configuration file, and systemd service file to the other nodes

[root@k8s-master01 k8s-ha-install]# for NODE in k8s-master01 k8s-master02 k8s-master03; do scp ${K8S_DIR}/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig; scp kube-proxy/kube-proxy.conf $NODE:/etc/kubernetes/kube-proxy.conf; scp kube-proxy/kube-proxy.service $NODE:/usr/lib/systemd/system/kube-proxy.service; done

[root@k8s-master01 k8s-ha-install]# for NODE in k8s-node01 k8s-node02; do

> scp /etc/kubernetes/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig

> scp kube-proxy/kube-proxy.conf $NODE:/etc/kubernetes/kube-proxy.conf

> scp kube-proxy/kube-proxy.service $NODE:/usr/lib/systemd/system/kube-proxy.service

> done

# Enable and start kube-proxy on every node in the cluster

# systemctl daemon-reload

# systemctl enable --now kube-proxy

【Deploy Calico】

[root@k8s-master01 ~]# cd /root/k8s-ha-install/calico/

[root@k8s-master01 calico]# sed -i 's#etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"#etcd_endpoints: "https://192.168.60.101:2379,https://192.168.60.102:2379,https://192.168.60.103:2379"#g' calico-etcd.yaml
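
The endpoint list in the sed expression is easy to mistype by hand. As a minimal sketch, the same string can be assembled from the etcd node IPs; etcd_endpoints is a hypothetical helper, and port 2379 with https is taken from the command above.

```shell
# Join etcd node IPs into the comma-separated endpoint list expected by calico-etcd.yaml.
# etcd_endpoints is an illustrative helper name.
etcd_endpoints() {
  out=""
  for ip in "$@"; do
    # Prefix a comma only when the list already has an entry.
    out="${out:+$out,}https://${ip}:2379"
  done
  printf '%s\n' "$out"
}

etcd_endpoints 192.168.60.101 192.168.60.102 192.168.60.103
```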

[root@k8s-master01 calico]# ETCD_CA=`cat /etc/kubernetes/pki/etcd/etcd-ca.pem | base64 | tr -d '\n'`

[root@k8s-master01 calico]# ETCD_CERT=`cat /etc/kubernetes/pki/etcd/etcd.pem | base64 | tr -d '\n'`

[root@k8s-master01 calico]# ETCD_KEY=`cat /etc/kubernetes/pki/etcd/etcd-key.pem | base64 | tr -d '\n'`
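
The newline stripping matters because base64 wraps its output by default, and a wrapped value would break the single-line fields in the Calico secret. A minimal sketch of the same encoding step as a reusable function follows; b64_oneline is a hypothetical helper name.

```shell
# Base64-encode a file and remove the line wrapping so the result is a single line.
# b64_oneline is an illustrative helper name.
b64_oneline() {
  base64 < "$1" | tr -d '\n'
}

# Usage with the certificate paths from this section:
#   ETCD_CA=$(b64_oneline /etc/kubernetes/pki/etcd/etcd-ca.pem)
```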

[root@k8s-master01 calico]# sed -i "s@# etcd-key: null@etcd-key: ${ETCD_KEY}@g; s@# etcd-cert: null@etcd-cert: ${ETCD_CERT}@g; s@# etcd-ca: null@etcd-ca: ${ETCD_CA}@g" calico-etcd.yaml

[root@k8s-master01 calico]# sed -i 's#etcd_ca: ""#etcd_ca: "/calico-secrets/etcd-ca"#g; s#etcd_cert: ""#etcd_cert: "/calico-secrets/etcd-cert"#g; s#etcd_key: "" #etcd_key: "/calico-secrets/etcd-key" #g' calico-etcd.yaml

[root@k8s-master01 calico]# POD_SUBNET="172.16.0.0/12"

[root@k8s-master01 calico]# sed -i 's@# - name: CALICO_IPV4POOL_CIDR@- name: CALICO_IPV4POOL_CIDR@g; s@# value: "192.168.0.0/16"@ value: '"${POD_SUBNET}"'@g' calico-etcd.yaml

[root@k8s-master01 calico]# kubectl apply -f calico-etcd.yaml

secret/calico-etcd-secrets created

configmap/calico-config created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

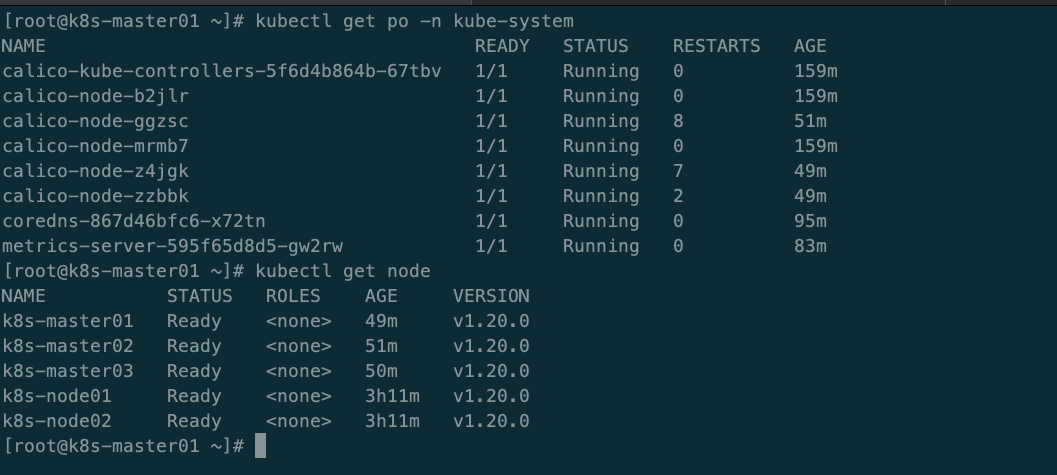

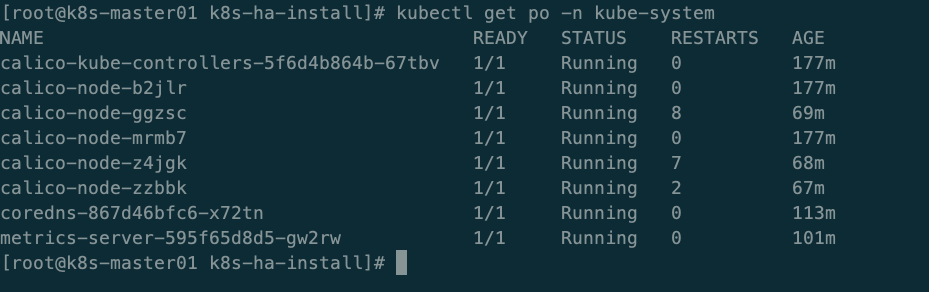

[root@k8s-master01 calico]# kubectl get po -n kube-system # check the status of the pods in kube-system

【Install CoreDNS】

[root@k8s-master01 ~]# cd /root/k8s-ha-install/

[root@k8s-master01 k8s-ha-install]# vim CoreDNS/coredns.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: k8s-app
                  operator: In
                  values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: registry.cn-beijing.aliyuncs.com/dotbalo/coredns:1.7.0
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.96.0.10
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP

[root@k8s-master01 k8s-ha-install]# cat CoreDNS/coredns.yaml | grep 10.96.0.10
  clusterIP: 10.96.0.10
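
The clusterIP of the kube-dns Service has to be the same address that kubelet hands to pods through clusterDNS in kubelet-conf.yml (10.96.0.10 in this guide). A minimal sketch of comparing the two values with awk follows; both function names are hypothetical, and the parsing assumes the simple layouts shown in this guide rather than arbitrary YAML.

```shell
# Print the first address listed under clusterDNS in a kubelet configuration file.
kubelet_cluster_dns() {
  awk '/^clusterDNS:/ { found = 1; next }
       found && /^[[:space:]]*-/ { print $2; exit }
       found { exit }' "$1"
}

# Print the first clusterIP value found in a Service manifest.
service_cluster_ip() {
  awk '$1 == "clusterIP:" { print $2; exit }' "$1"
}

# Usage on a node that has both files:
#   [ "$(kubelet_cluster_dns /etc/kubernetes/kubelet-conf.yml)" = \
#     "$(service_cluster_ip /root/k8s-ha-install/CoreDNS/coredns.yaml)" ] && echo "DNS addresses match"
```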

[root@k8s-master01 k8s-ha-install]# kubectl create -f CoreDNS/coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

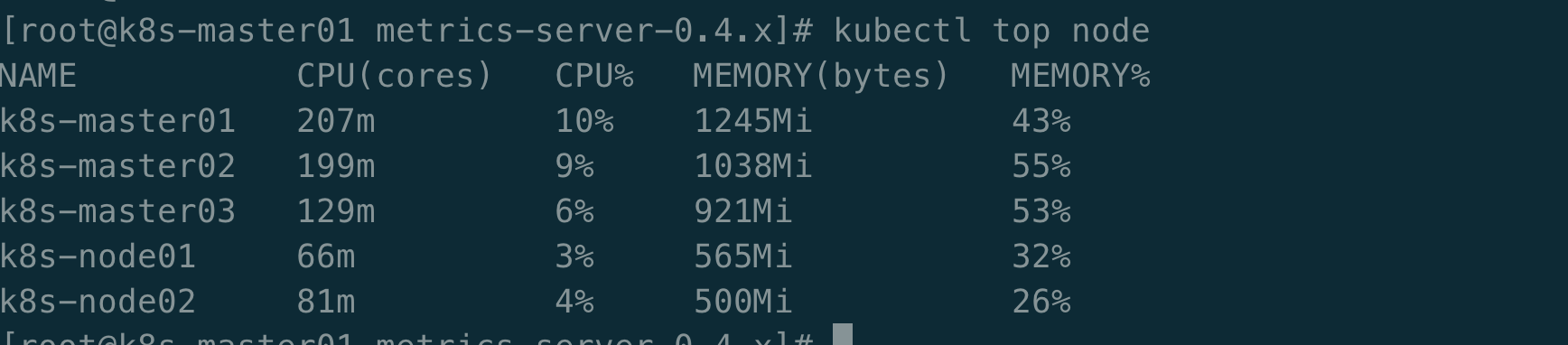

【Install Metrics Server】

In recent Kubernetes versions, system resource metrics are collected by Metrics Server, which reports memory, disk, CPU, and network usage for nodes and pods.

[root@k8s-master01 ~]# cd /root/k8s-ha-install/metrics-server-0.4.x

[root@k8s-master01 metrics-server-0.4.x]# kubectl create -f .

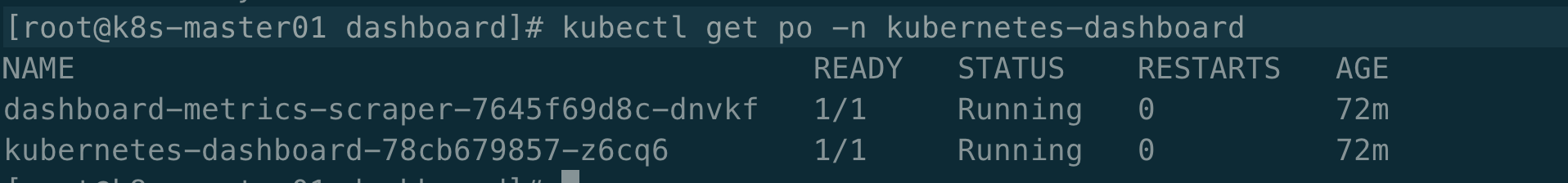

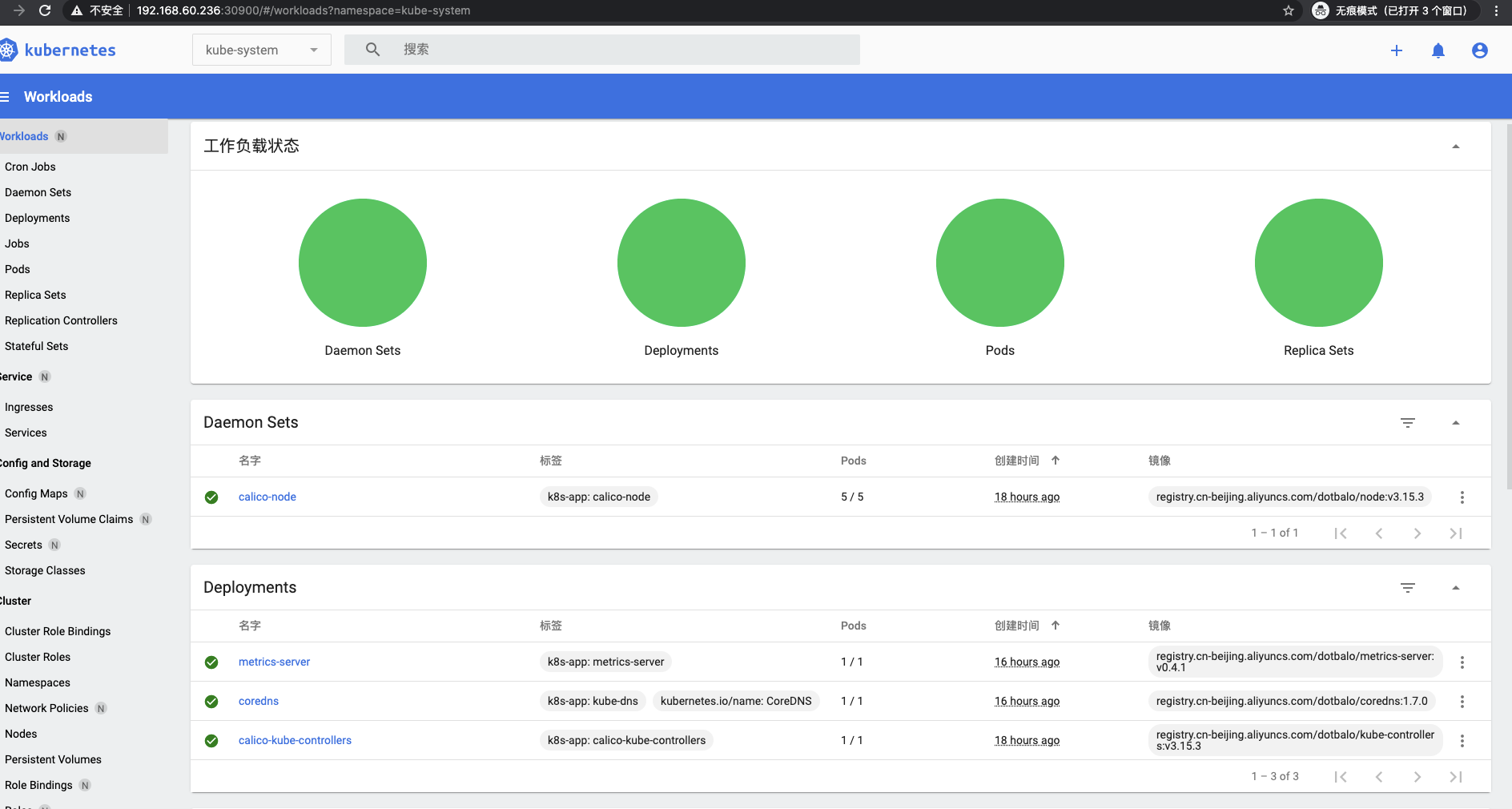

【Install Dashboard】

[root@k8s-master01 ~]# cd /root/k8s-ha-install/dashboard/

[root@k8s-master01 dashboard]# kubectl create -f .

[root@k8s-master01 dashboard]# kubectl get po -n kubernetes-dashboard

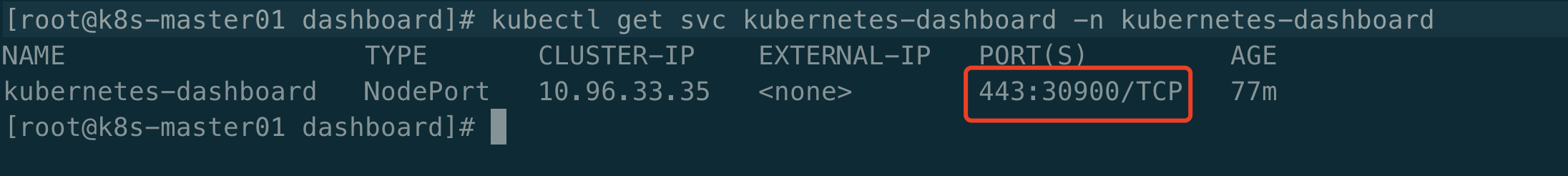

[root@k8s-master01 dashboard]# kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard # change the dashboard Service type to NodePort

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: "2020-12-24T01:20:21Z"
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
  resourceVersion: "30932"
  uid: 141a3d84-aa0f-414f-995f-5d40a609ca22
spec:
  clusterIP: 10.96.33.35
  clusterIPs:
  - 10.96.33.35
  externalTrafficPolicy: Cluster
  ports:
  - nodePort: 30900
    port: 443
    protocol: TCP
    targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  sessionAffinity: None
  type: NodePort
status:
  loadBalancer: {}

Change the Service type from ClusterIP to NodePort (note: skip this step if it is already NodePort).

[root@k8s-master01 dashboard]# kubectl get svc kubernetes-dashboard -n kubernetes-dashboard # show the port exposed by the dashboard; the dashboard can be reached at that port on any host running kube-proxy, or on the VIP

# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}') # show the token used to log in to the dashboard

Name:         admin-user-token-lvc4w
Namespace:    kube-system
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: admin-user
              kubernetes.io/service-account.uid: 02c91803-12bc-4ca4-8107-5690f6551adb

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1411 bytes
namespace:  11 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IkQ2LXlsQXZkSWFjQzQ5TFE4N1dQalJCeXpRNUdBQmkwOVJSamRINGVabHMifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWx2YzR3Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIwMmM5MTgwMy0xMmJjLTRjYTQtODEwNy01NjkwZjY1NTFhZGIiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.vszo1cC_bZMwYUoPC71xSQH7P1pbLqkxFRF4RslVj1_eKsAQF0fE7N83Uwu9AiE_Van_nQfocTWIUz6db6cu6o4HYDALLn26oyrJ8mdZZHoLbdiGo-UBo8WbAAxl_n195hxTLZLSminj-ck28bCMtKpaYUZ9DnkToo26afMla8YO-Hj7h2AVcX2P4i7wQVKkb9H2-ImR1hBik1t2an_bsL8ycU00_-50RNNlTEr7CIHxmabGXjFI9R55lPuZW-uQDH3DbPnwbwFNTdr3tV1mriv8aF0l-6-lJbXGyY7t4mlPyiu5BakpLt-Dky3ZcNfCKAcB_XyzgKYFqaPmBgcJ1Q
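
The token is a JWT: three base64url-encoded parts separated by dots, with the middle part holding the service account claims. Decoding that part is a quick way to confirm which service account a token belongs to before pasting it into the login page. This is a minimal sketch, assuming a base64 command that accepts -d; jwt_payload is a hypothetical helper name.

```shell
# Decode the payload (second dot-separated part) of a JWT and print it as JSON text.
# jwt_payload is an illustrative helper name.
jwt_payload() {
  # Convert the base64url alphabet back to standard base64.
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # JWTs drop the trailing '=' padding, so restore it before decoding.
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}

# Usage: jwt_payload "<token copied from the describe output>"
```

For the admin-user token, the decoded JSON should name kube-system as the namespace and admin-user as the service account.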

# https://192.168.60.236:30900/#/login  Here I access the dashboard through the VIP plus the exposed port 30900.

【Cluster Verification】

1. Create a test pod

[root@k8s-master01 ~]# cat<<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - name: busybox
    image: busybox:1.28
    command:
    - sleep
    - "3600"
    imagePullPolicy: IfNotPresent
  restartPolicy: Always
EOF

2. Verify that a pod can resolve a Service in another namespace. Here busybox runs in the default namespace, while kube-dns is a Service in the kube-system namespace.

[root@k8s-master01 ~]# kubectl exec busybox -n default -- nslookup kube-dns.kube-system
Server:    10.96.0.10
Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name:      kube-dns.kube-system
Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local
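
The short name kube-dns.kube-system resolves because cluster DNS expands it to the full form service.namespace.svc.domain, as the nslookup output shows. A minimal sketch of building that name follows; svc_fqdn is a hypothetical helper, and the default domain cluster.local is the clusterDomain value from kubelet-conf.yml.

```shell
# Build the fully qualified DNS name of a Service.
# svc_fqdn is an illustrative helper name; $3 optionally overrides the cluster domain.
svc_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "$2" "${3:-cluster.local}"
}

svc_fqdn kube-dns kube-system
# Usage inside the cluster: kubectl exec busybox -n default -- nslookup "$(svc_fqdn kubernetes default)"
```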

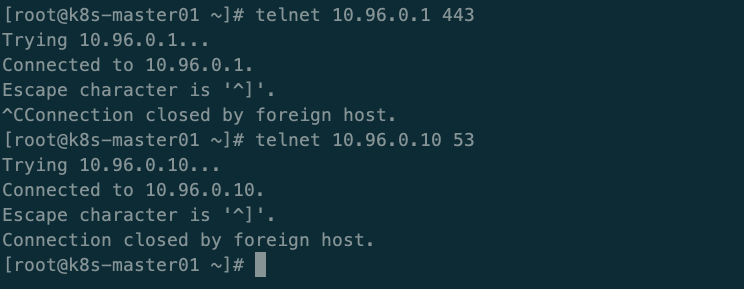

3. Verify that every node can reach port 443 of the kubernetes Service and port 53 of the kube-dns Service

[root@k8s-master01 ~]# kubectl get svc # show the address of the kubernetes Service
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   5d1h

[root@k8s-master01 ~]# kubectl get svc -n kube-system
NAME             TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                  AGE
kube-dns         ClusterIP   10.96.0.10       <none>        53/UDP,53/TCP,9153/TCP   4d23h
metrics-server   ClusterIP   10.111.213.213   <none>        443/TCP                  4d23h
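
One way to run this check on each node is to attempt a TCP connection to both Service addresses. This is a minimal sketch, assuming bash and the coreutils timeout command are installed on the nodes; port_open is a hypothetical helper and only covers TCP, so it tests 53/TCP but not 53/UDP.

```shell
# Succeed only if a TCP connection to host $1 on port $2 opens within 3 seconds.
# port_open is an illustrative helper name; it relies on bash's /dev/tcp redirection.
port_open() {
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Usage on every node, with the ClusterIPs listed above:
#   port_open 10.96.0.1 443 && echo "kubernetes service reachable"
#   port_open 10.96.0.10 53 && echo "kube-dns reachable"
```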

4. Verify that pods in the cluster can communicate with each other

[root@k8s-master01 ~]# kubectl get po -n kube-system -owide
NAME                                       READY   STATUS    RESTARTS   AGE     IP               NODE           NOMINATED NODE   READINESS GATES
calico-kube-controllers-5f6d4b864b-67tbv   1/1     Running   3          5d      192.168.60.105   k8s-node02     <none>           <none>
calico-node-b2jlr                          1/1     Running   3          5d      192.168.60.104   k8s-node01     <none>           <none>
calico-node-ggzsc                          1/1     Running   10         4d22h   192.168.60.102   k8s-master02   <none>           <none>
calico-node-mrmb7                          1/1     Running   3          5d      192.168.60.105   k8s-node02     <none>           <none>
calico-node-z4jgk                          1/1     Running   9          4d22h   192.168.60.103   k8s-master03   <none>           <none>
calico-node-zzbbk                          1/1     Running   4          4d22h   192.168.60.101   k8s-master01   <none>           <none>
coredns-867d46bfc6-x72tn                   1/1     Running   3          4d23h   172.17.125.8     k8s-node01     <none>           <none>
metrics-server-595f65d8d5-gw2rw            1/1     Running   3          4d23h   172.17.125.7     k8s-node01     <none>           <none>

[root@k8s-master01 ~]# kubectl exec -it calico-node-b2jlr -n kube-system -- bash # use kubectl to enter the calico-node container running on k8s-node01

[root@k8s-master01 ~]# kubectl get pod -n default -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

busybox 1/1 Running 0 47m 172.25.92.65 k8s-master02 <none> <none>
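
Pods that use the pod network should receive addresses from the pod subnet 172.16.0.0/12 configured for kube-proxy and Calico, while host-network pods such as calico-node keep the node address. A minimal sketch of that membership check in plain shell arithmetic follows; ip_to_int and ip_in_cidr are hypothetical helper names, and only IPv4 without leading zeros is handled.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if address $1 lies inside the CIDR block $2 (for example 172.16.0.0/12).
ip_in_cidr() {
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}

ip_in_cidr 172.25.92.65 172.16.0.0/12 && echo "busybox is inside the pod subnet"
```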

[root@k8s-master01 ~]# kubectl exec -it busybox -n default -- sh # enter the busybox container and use ping to check that pods can reach each other, including across namespaces

[root@k8s-master01 ~]# kubectl create deploy nginx --image=nginx --replicas=3 # create an nginx deployment with three replicas

deployment.apps/nginx created

[root@k8s-master01 ~]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 3/3 3 3 47s

[root@k8s-master01 ~]# kubectl get pod -n default -owide # show details of the pods in the default namespace
NAME                     READY   STATUS    RESTARTS   AGE     IP               NODE           NOMINATED NODE   READINESS GATES
busybox                  1/1     Running   0          59m     172.25.92.65     k8s-master02   <none>           <none>
nginx                    1/1     Running   0          4m6s    172.18.195.1     k8s-master03   <none>           <none>
nginx-6799fc88d8-lhxr7   1/1     Running   0          2m19s   172.25.244.198   k8s-master01   <none>           <none>
nginx-6799fc88d8-snd7l   1/1     Running   0          2m19s   172.27.14.193    k8s-node02     <none>           <none>
nginx-6799fc88d8-wr7v8   1/1     Running   0          2m19s   172.25.244.197   k8s-master01   <none>           <none>
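
With more pods, scanning the STATUS column by eye gets tedious. A minimal sketch that filters the listing for pods that are not Running follows; not_running is a hypothetical helper and assumes the default column layout shown above, with STATUS as the third column.

```shell
# Read `kubectl get pod` output on stdin and print the names of pods whose STATUS is not Running.
# not_running is an illustrative helper name.
not_running() {
  # NR > 1 skips the header row; column 1 is NAME and column 3 is STATUS.
  awk 'NR > 1 && $3 != "Running" { print $1 }'
}

# Usage: kubectl get pod -n default | not_running
```

An empty result means every listed pod is in the Running state.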

[root@k8s-master01 ~]# kubectl delete deploy nginx # delete the nginx deployment

END!