Environment: Windows 7 + VMware 10 + CentOS 7

I. Create three 64-bit CentOS 7 virtual machines

| Hostname | IP address | Login (user/password) |
|---|---|---|
| master | 192.168.137.100 | root/123456 |
| node1 | 192.168.137.101 | root/123456 |
| node2 | 192.168.137.102 | root/123456 |

II. Disable the firewall on all three VMs by running the following on each one:

```shell
systemctl stop firewalld.service     # stop the firewall now
systemctl disable firewalld.service  # keep it off after a reboot
```

III. Add these three lines to /etc/hosts on all three VMs:

```
192.168.137.100 master
192.168.137.101 node1
192.168.137.102 node2
```
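If you would rather not edit /etc/hosts by hand on every VM, a small shell function can append the three entries and skip any that are already there, so running it twice does no harm. This is only a sketch: the name `add_hosts_entries` is our own, and the addresses are the ones listed in section I.

```shell
# Hypothetical helper: append this cluster's host entries to a hosts file,
# skipping any entry already present, so it is safe to run more than once.
# Usage: add_hosts_entries FILE
add_hosts_entries() {
  local file="$1" entry
  for entry in "192.168.137.100 master" \
               "192.168.137.101 node1" \
               "192.168.137.102 node2"; do
    # -x: whole-line match; -F: fixed string, so dots are not regex wildcards
    grep -qxF "$entry" "$file" 2>/dev/null || echo "$entry" >> "$file"
  done
}
```

On each VM, run `add_hosts_entries /etc/hosts` as root.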

IV. Set up passwordless SSH login between the three machines

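1. On every server, enable public-key SSH login by removing the leading `#` from this line in /etc/ssh/sshd_config (OpenSSH already defaults to `yes` while the line is commented out, so this mainly makes the setting explicit):

```
#PubkeyAuthentication yes
```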

Then restart the SSH service:

```shell
systemctl restart sshd
```
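The sshd_config edit in step 1 can also be scripted with sed. The sketch below uses a hypothetical function name, `enable_pubkey_auth`; it uncomments the line in place and keeps a `.bak` copy of the original file.

```shell
# Hypothetical helper: uncomment "#PubkeyAuthentication yes" in an
# sshd_config-style file; sed -i.bak keeps the original as FILE.bak.
# Usage: enable_pubkey_auth [FILE]   (defaults to /etc/ssh/sshd_config)
enable_pubkey_auth() {
  local cfg="${1:-/etc/ssh/sshd_config}"
  sed -i.bak 's/^#[[:space:]]*PubkeyAuthentication[[:space:]][[:space:]]*yes/PubkeyAuthentication yes/' "$cfg"
}
```

Run it as root. Before restarting sshd, `sshd -t` can check the edited file for syntax errors.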

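2. On master, run `ssh-keygen -t rsa` in /root and press Enter at every prompt (this creates a key with no passphrase). Run the same command on all three machines.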

```
[root@master ~]# ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Created directory '/root/.ssh'.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:aMUO8b/EkylqTMb9+71ePnQv0CWQohsaMeAbMH+t87M root@master
The key's randomart image is:
+---[RSA 2048]----+
| o ... . |
| = o= . o |
| + oo=. . . |
| =.Boo o . .|
| . OoSoB . o |
| =.+.+ o. ...|
| + o o .. +|
| . o . ..+.|
| E ....+oo|
+----[SHA256]-----+
```

3. On master, append the public key to the authorized_keys file:

```
[root@master ~]# cd /root/.ssh/
[root@master .ssh]# ll
total 8
-rw-------. 1 root root 1679 Apr 19 11:10 id_rsa
-rw-r--r--. 1 root root 393 Apr 19 11:10 id_rsa.pub
[root@master .ssh]# cat id_rsa.pub >> authorized_keys
```
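Appending with `>>` adds a duplicate line every time the command is re-run, and the file's mode depends on the current umask. The sketch below (the name `add_pubkey` is hypothetical) appends the key only if it is missing and sets the conventional modes, 700 on the .ssh directory and 600 on authorized_keys; sshd's StrictModes check refuses key files that are group- or world-writable.

```shell
# Hypothetical helper: add a public key to SSH_DIR/authorized_keys once,
# then apply the conventional modes (700 directory, 600 key file).
# Usage: add_pubkey PUBKEY_FILE SSH_DIR
add_pubkey() {
  local pub="$1" dir="$2"
  local keys="$dir/authorized_keys"
  mkdir -p "$dir"
  touch "$keys"
  # append only if this exact key line is not already in the file
  grep -qxF "$(cat "$pub")" "$keys" || cat "$pub" >> "$keys"
  chmod 700 "$dir"
  chmod 600 "$keys"
}
```

On master this would be `add_pubkey /root/.ssh/id_rsa.pub /root/.ssh`.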

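4. Copy master's authorized_keys to node1 and node2. You will be asked for each node's root password this one last time; /root/.ssh already exists on the nodes from step 2.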

```shell
scp /root/.ssh/authorized_keys root@192.168.137.101:/root/.ssh/
scp /root/.ssh/authorized_keys root@192.168.137.102:/root/.ssh/
```

5. Test: from master, logging in to each node should no longer prompt for a password:

```
[root@master ~]# ssh root@192.168.137.101
Last login: Thu Apr 19 11:41:23 2018 from 192.168.137.100
[root@node1 ~]#
```

```
[root@master ~]# ssh root@192.168.137.102
Last login: Mon Apr 23 10:40:38 2018 from 192.168.137.1
[root@node2 ~]#
```
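To test every node in one go without risking an interactive password prompt, ssh's `BatchMode=yes` option makes it fail instead of asking. A minimal sketch, with `check_ssh` as a hypothetical name:

```shell
# Hypothetical helper: verify key-based root login to each host.
# BatchMode=yes makes ssh fail rather than prompt for a password;
# -n stops ssh from reading the caller's stdin.
# Usage: check_ssh HOST...
check_ssh() {
  local host failed=0
  for host in "$@"; do
    if ssh -n -o BatchMode=yes -o ConnectTimeout=5 "root@$host" true 2>/dev/null; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      failed=1
    fi
  done
  return "$failed"
}
```

From master, `check_ssh 192.168.137.101 192.168.137.102` prints one line per node and returns non-zero if any login failed.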

V. Install the JDK on all three machines

1. JDK download link: https://pan.baidu.com/s/1-fhy_zbGbEXR1SBK8V7aNQ

2. Create the directory /home/java:

```shell
mkdir -p /home/java
```

3. Put the downloaded jdk-7u79-linux-x64.tar.gz in /home/java, then extract it and delete the archive:

```shell
cd /home/java
tar -zxf jdk-7u79-linux-x64.tar.gz
rm -f jdk-7u79-linux-x64.tar.gz
```

4. Configure the environment variables: open /etc/profile (`vi /etc/profile`) and append:

```shell
export JAVA_HOME=/home/java/jdk1.7.0_79
export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
export PATH=$PATH:$JAVA_HOME/bin
```

Then reload it with `source /etc/profile`.

Verify:

```
[root@master jdk1.7.0_79]# java -version
java version "1.7.0_79"
Java(TM) SE Runtime Environment (build 1.7.0_79-b15)
Java HotSpot(TM) 64-Bit Server VM (build 24.79-b02, mixed mode)
[root@master jdk1.7.0_79]#
```
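Because the profile appends `$JAVA_HOME/bin` to the end of PATH, a java binary that already sits in /usr/bin (for example a preinstalled OpenJDK) would take precedence over the JDK installed here. The hypothetical `check_java` function below is a sketch that checks JAVA_HOME points at an executable java and warns when a different java comes first on PATH.

```shell
# Hypothetical helper: sanity-check the JDK setup from /etc/profile.
# Fails if JAVA_HOME is unset or has no executable bin/java; warns (on
# stderr) if the first "java" on PATH is not $JAVA_HOME/bin/java.
check_java() {
  if [ -z "${JAVA_HOME:-}" ]; then
    echo "JAVA_HOME is not set" >&2
    return 1
  fi
  if [ ! -x "$JAVA_HOME/bin/java" ]; then
    echo "no executable java under $JAVA_HOME/bin" >&2
    return 1
  fi
  if [ "$(command -v java)" != "$JAVA_HOME/bin/java" ]; then
    echo "warning: java on PATH is '$(command -v java)', not \$JAVA_HOME/bin/java" >&2
  fi
  echo "JAVA_HOME OK: $JAVA_HOME"
}
```

Run `check_java` after `source /etc/profile` on each machine.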

VI. Install Hadoop 2.7 (extract it on master only, then copy it to the slave nodes)

1. Create the /home/hadoop directory:

```shell
mkdir -p /home/hadoop
```

2. Put hadoop-2.7.0.tar.gz in /home/hadoop and extract it:

```shell
cd /home/hadoop
tar -zxf hadoop-2.7.0.tar.gz
```

3. Under /home/hadoop, create the data directories tmp, hdfs/data and hdfs/name:

```
[root@master hadoop]# mkdir tmp
[root@master hadoop]# mkdir -p hdfs/data
[root@master hadoop]# mkdir -p hdfs/name
```

4. Configure core-site.xml in /home/hadoop/hadoop-2.7.0/etc/hadoop:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <!-- Default file system URI. When several HDFS clusters exist and a path does not name one, this one is used.
         (In an HA setup this would be the nameservice ID from hdfs-site.xml; here it is the NameNode address.) -->
    <name>fs.defaultFS</name>
    <value>hdfs://192.168.137.100:9000</value>
  </property>
  <property>
    <!-- Older, deprecated name for fs.defaultFS; redundant but harmless -->
    <name>fs.default.name</name>
    <value>hdfs://192.168.137.100:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/home/hadoop/tmp</value>
  </property>
  <property>
    <!-- Buffer size for stream I/O, in bytes (131072 = 128 KB) -->
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
</configuration>
```
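To double-check what a *-site.xml file actually sets, a quick awk-based sketch can print one property's value. It assumes the usual layout (a `<name>` followed by its `<value>` inside each `<property>`) and is not a real XML parser; `get_prop` is a hypothetical name. Once Hadoop is unpacked, `bin/hdfs getconf -confKey fs.defaultFS` reports the value Hadoop itself resolves.

```shell
# Hypothetical helper: print the <value> of a property in a Hadoop
# *-site.xml file. Assumes each <property> lists <name> before <value>;
# a quick check, not a real XML parser.
# Usage: get_prop FILE PROPERTY_NAME
get_prop() {
  awk -v key="$2" '
    /<name>/ {                      # remember the current property name
      name = $0
      sub(/.*<name>[ \t]*/, "", name)
      sub(/[ \t]*<\/name>.*/, "", name)
    }
    /<value>/ && name == key {      # value of the property we want
      val = $0
      sub(/.*<value>[ \t]*/, "", val)
      sub(/[ \t]*<\/value>.*/, "", val)
      print val
      exit
    }
    /<\/property>/ { name = "" }    # property finished; forget its name
  ' "$1"
}
```

For example, `get_prop core-site.xml fs.defaultFS` run in etc/hadoop should print the NameNode URI.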

5. Configure hdfs-site.xml in the same directory:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <!-- HTTP address of the SecondaryNameNode -->
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>192.168.137.100:9001</value>
  </property>
  <!-- Local directories for NameNode metadata and DataNode blocks (the hdfs/name and hdfs/data directories created in step 3) -->
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/home/hadoop/hdfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/home/hadoop/hdfs/data</value>
  </property>
  <!-- Number of replicas the DataNodes keep for each block -->
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <!-- Logical name (alias) for this HDFS cluster; a single nameservice is defined here -->
  <property>
    <name>dfs.nameservices</name>
    <value>mycluster</value>
  </property>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
</configuration>
```
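`dfs.replication` cannot usefully exceed the number of DataNodes, because HDFS places each replica of a block on a different DataNode; with the two workers listed in the slaves file (step 9), 2 is the maximum. A sketch of a sanity check, using the hypothetical name `check_replication`:

```shell
# Hypothetical helper: fail if dfs.replication exceeds the number of
# DataNodes listed in a slaves file (blank and # lines are ignored).
# Usage: check_replication REPLICATION SLAVES_FILE
check_replication() {
  local repl="$1" slaves="$2" nodes
  # grep -c prints 0 (and exits 1) when nothing matches; "|| true" keeps going
  nodes=$(grep -cv -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$slaves" || true)
  if [ "$repl" -gt "$nodes" ]; then
    echo "dfs.replication=$repl exceeds $nodes DataNode(s)" >&2
    return 1
  fi
  echo "OK: replication $repl, $nodes DataNode(s)"
}
```

Usage: `check_replication 2 /home/hadoop/hadoop-2.7.0/etc/hadoop/slaves`.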

6. In the same directory, copy mapred-site.xml.template to mapred-site.xml and set its contents to:

```xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <!-- Run MapReduce jobs on YARN -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <!-- JobHistory server RPC address -->
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>192.168.137.100:10020</value>
  </property>
  <!-- JobHistory server web UI address -->
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>192.168.137.100:19888</value>
  </property>
</configuration>
```
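The copy in step 6 can be made safe to re-run, so that an already edited mapred-site.xml is never overwritten by the template. A sketch; the function name is hypothetical and the default directory is the one used in this guide.

```shell
# Hypothetical helper: create mapred-site.xml from the shipped template,
# but leave an existing (possibly already edited) file untouched.
# Usage: init_mapred_site [CONF_DIR]
init_mapred_site() {
  local conf="${1:-/home/hadoop/hadoop-2.7.0/etc/hadoop}"
  if [ -e "$conf/mapred-site.xml" ]; then
    echo "mapred-site.xml already exists, leaving it alone"
  else
    cp "$conf/mapred-site.xml.template" "$conf/mapred-site.xml"
  fi
}
```

Note that the two jobhistory addresses only matter once the JobHistory server is running; start-all.sh does not start it, and in Hadoop 2.x it is started separately with `sbin/mr-jobhistory-daemon.sh start historyserver`.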

7. Configure yarn-site.xml in the same directory:

```xml
<?xml version="1.0"?>
<configuration>
  <!-- Auxiliary service run by each NodeManager. It must be mapreduce_shuffle for MapReduce jobs to run. Default: "" -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <!-- Address the ResourceManager exposes to clients, which submit and kill applications through it. Default: ${yarn.resourcemanager.hostname}:8032 -->
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>192.168.137.100:8032</value>
  </property>
  <!-- Address the ResourceManager exposes to ApplicationMasters, which request and release resources through it. Default: ${yarn.resourcemanager.hostname}:8030 -->
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>192.168.137.100:8030</value>
  </property>
  <!-- Address the ResourceManager exposes to NodeManagers, which send heartbeats and receive tasks through it. Default: ${yarn.resourcemanager.hostname}:8031 -->
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>192.168.137.100:8031</value>
  </property>
  <!-- Address the ResourceManager exposes to administrators for admin commands. Default: ${yarn.resourcemanager.hostname}:8033 -->
  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>192.168.137.100:8033</value>
  </property>
  <!-- ResourceManager web UI, for viewing cluster information in a browser. Default: ${yarn.resourcemanager.hostname}:8088 -->
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>192.168.137.100:8088</value>
  </property>
  <!-- Total physical memory (MB) the NodeManager may hand out. It cannot be changed while the NodeManager is running.
       The default is 8192 MB, and YARN uses that figure even on a machine with less memory, so always set it explicitly. -->
  <property>
    <name>yarn.nodemanager.resource.memory-mb</name>
    <value>768</value>
  </property>
</configuration>
```
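As a worked example of what `yarn.nodemanager.resource.memory-mb` limits: the NodeManager hands out containers from this pool, and, as we understand the default CapacityScheduler in 2.7, each request is rounded up to a multiple of `yarn.scheduler.minimum-allocation-mb` (default 1024 MB). With 768 MB per node and that default, no container fits, so MapReduce jobs would likely wait in the ACCEPTED state unless the minimum allocation and the MapReduce memory settings are lowered as well. The hypothetical helper below only does the arithmetic.

```shell
# Hypothetical helper: how many containers of a given request size fit
# on one NodeManager, assuming requests are rounded up to a multiple of
# the scheduler's minimum allocation.
# Usage: containers_per_node NM_MB REQUEST_MB MIN_ALLOC_MB
containers_per_node() {
  local nm="$1" req="$2" min="$3"
  # round the request up to the next multiple of the minimum allocation
  local alloc=$(( (req + min - 1) / min * min ))
  echo $(( nm / alloc ))
}
```

For instance, `containers_per_node 768 1024 1024` prints 0, while `containers_per_node 768 256 256` prints 3.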

8. Set JAVA_HOME in hadoop-env.sh and yarn-env.sh in the same directory; without it the daemons will not start:

```shell
export JAVA_HOME=/home/java/jdk1.7.0_79
```
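The stock hadoop-env.sh and yarn-env.sh usually already contain an `export JAVA_HOME=` line (in yarn-env.sh it is typically commented out), so a sed replacement can set both at once. A sketch; `set_java_home` is hypothetical. It rewrites any `export JAVA_HOME=...` line, commented or not, appends one if none exists, and keeps a `.bak` copy.

```shell
# Hypothetical helper: point JAVA_HOME in one or more env scripts at a JDK.
# Replaces existing (possibly commented-out) "export JAVA_HOME=" lines,
# or appends one if the file has none. A FILE.bak copy is kept.
# Usage: set_java_home JDK_DIR FILE...
set_java_home() {
  local jdk="$1" f
  shift
  for f in "$@"; do
    if grep -q '^[#[:space:]]*export JAVA_HOME=' "$f"; then
      sed -i.bak "s|^[#[:space:]]*export JAVA_HOME=.*|export JAVA_HOME=$jdk|" "$f"
    else
      cp "$f" "$f.bak"
      echo "export JAVA_HOME=$jdk" >> "$f"
    fi
  done
}
```

Usage, from /home/hadoop/hadoop-2.7.0/etc/hadoop: `set_java_home /home/java/jdk1.7.0_79 hadoop-env.sh yarn-env.sh`.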

9. Edit the slaves file in the same directory: delete the default localhost line and add the two worker nodes:

```
192.168.137.101
192.168.137.102
```

10. Copy the configured Hadoop directory to the same location on each node:

```shell
scp -r /home/hadoop 192.168.137.101:/home/
scp -r /home/hadoop 192.168.137.102:/home/
```
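Instead of typing one scp per node, the host list can be read from the slaves file, so adding a worker later only means editing that file. A sketch, with the hypothetical name `distribute_hadoop` and a `DRY_RUN` switch that prints the commands instead of running them.

```shell
# Hypothetical helper: copy SRC_DIR (default /home/hadoop) to the same
# parent directory on every host in a slaves file.
# Set DRY_RUN=1 to print the scp commands instead of running them.
# Usage: distribute_hadoop SLAVES_FILE [SRC_DIR]
distribute_hadoop() {
  local slaves="$1" src="${2:-/home/hadoop}" host
  # skip blank and comment lines in the slaves file
  for host in $(grep -v -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$slaves"); do
    if [ -n "${DRY_RUN:-}" ]; then
      echo scp -r "$src" "$host:$(dirname "$src")/"
    else
      scp -r "$src" "$host:$(dirname "$src")/" || return 1
    fi
  done
}
```

Usage: `distribute_hadoop /home/hadoop/hadoop-2.7.0/etc/hadoop/slaves`; prefix it with `DRY_RUN=1` first to see what would run.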

11. Start Hadoop on master; the daemons on the worker nodes are started automatically over SSH. Run the following from /home/hadoop/hadoop-2.7.0:
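Formatting (step 1 below) should only ever happen once: each format writes a new clusterID into the NameNode's metadata, and DataNodes that still carry the old ID then refuse to register. A guarded sketch, assuming NAME_DIR is whatever `dfs.namenode.name.dir` points to (without the `file:` prefix); `format_once` is a hypothetical name.

```shell
# Hypothetical helper: format the NameNode only if NAME_DIR has not been
# formatted yet (a formatted directory contains current/VERSION).
# Usage: format_once NAME_DIR [HADOOP_HOME]
format_once() {
  local name_dir="$1" home="${2:-/home/hadoop/hadoop-2.7.0}"
  if [ -f "$name_dir/current/VERSION" ]; then
    echo "already formatted: $name_dir/current/VERSION exists, skipping"
    return 0
  fi
  "$home/bin/hdfs" namenode -format
}
```

With the directories from step 3, this would be `format_once /home/hadoop/hdfs/name`.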

1) Format the NameNode (once, before the first start): `bin/hdfs namenode -format`

2) Start everything with `sbin/start-all.sh`, or start HDFS and YARN separately with `sbin/start-dfs.sh` and `sbin/start-yarn.sh`:

```
[root@master hadoop-2.7.0]# sbin/start-all.sh
This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh
Starting namenodes on [master]
master: starting namenode, logging to /home/hadoop/hadoop-2.7.0/logs/hadoop-root-namenode-master.out
192.168.137.101: starting datanode, logging to /home/hadoop/hadoop-2.7.0/logs/hadoop-root-datanode-node1.out
192.168.137.102: starting datanode, logging to /home/hadoop/hadoop-2.7.0/logs/hadoop-root-datanode-node2.out
starting yarn daemons
starting resourcemanager, logging to /home/hadoop/hadoop-2.7.0/logs/yarn-root-resourcemanager-master.out
192.168.137.101: starting nodemanager, logging to /home/hadoop/hadoop-2.7.0/logs/yarn-root-nodemanager-node1.out
192.168.137.102: starting nodemanager, logging to /home/hadoop/hadoop-2.7.0/logs/yarn-root-nodemanager-node2.out
```

3) To stop the cluster, run `sbin/stop-all.sh`.

4) Run `jps` to list the running Java processes:

```
[root@master hadoop-2.7.0]# jps
1765 ResourceManager
2025 Jps
```

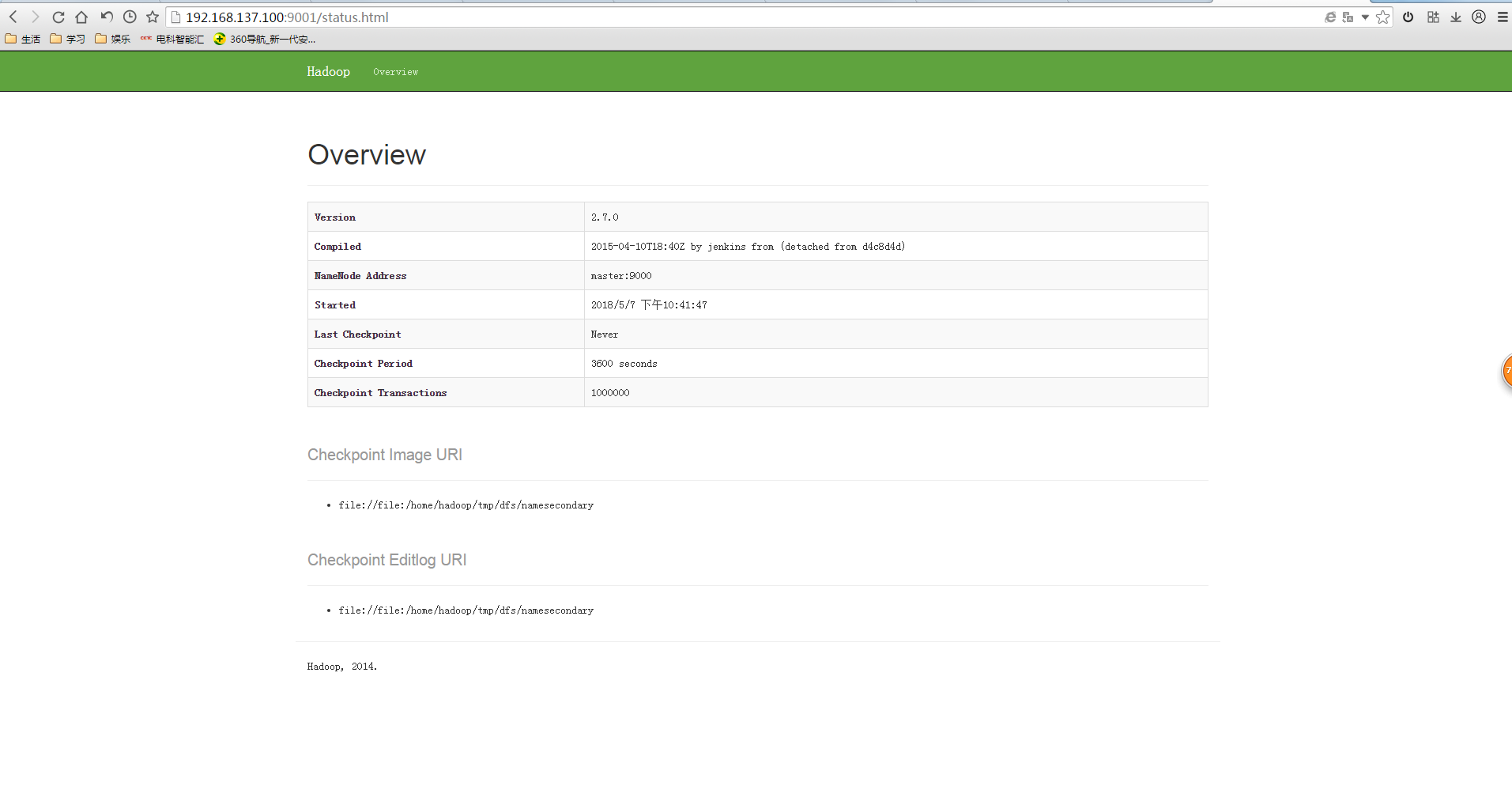
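Which daemons jps should show depends on the node: with this configuration, master would be expected to run NameNode, SecondaryNameNode and ResourceManager, and node1/node2 DataNode and NodeManager. The jps capture in step 4) lists only ResourceManager on master, which would suggest the NameNode side did not come up; its log under logs/ is the place to look. The hypothetical helper below checks jps output for a list of expected names.

```shell
# Hypothetical helper: read jps output on stdin and report any expected
# daemon (main class name) that is not running. Returns 1 if any is missing.
# Usage: jps | check_daemons NAME...
check_daemons() {
  local out name missing=0
  out=$(cat)
  for name in "$@"; do
    # jps prints "<pid> <MainClass>", one process per line
    if ! printf '%s\n' "$out" | awk '{print $2}' | grep -qxF "$name"; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}
```

On master: `jps | check_daemons NameNode SecondaryNameNode ResourceManager`; on each node: `jps | check_daemons DataNode NodeManager`.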

12. Check the web UIs in a browser

1) ResourceManager web UI (yarn.resourcemanager.webapp.address): http://192.168.137.100:8088

2) NameNode web UI: http://192.168.137.100:50070 (the default dfs.namenode.http-address port in Hadoop 2.x)