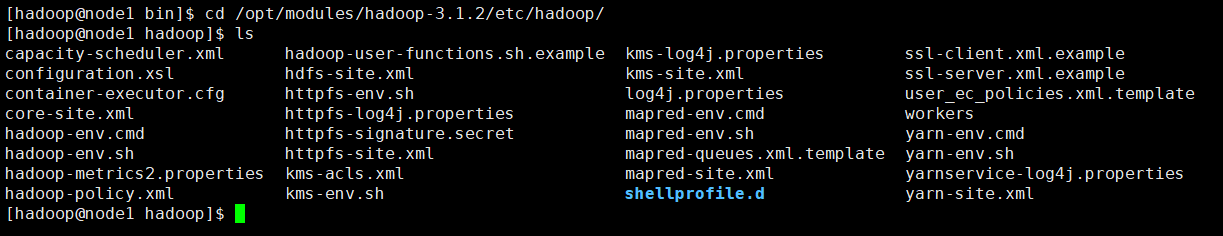

- Edit the Hadoop configuration files

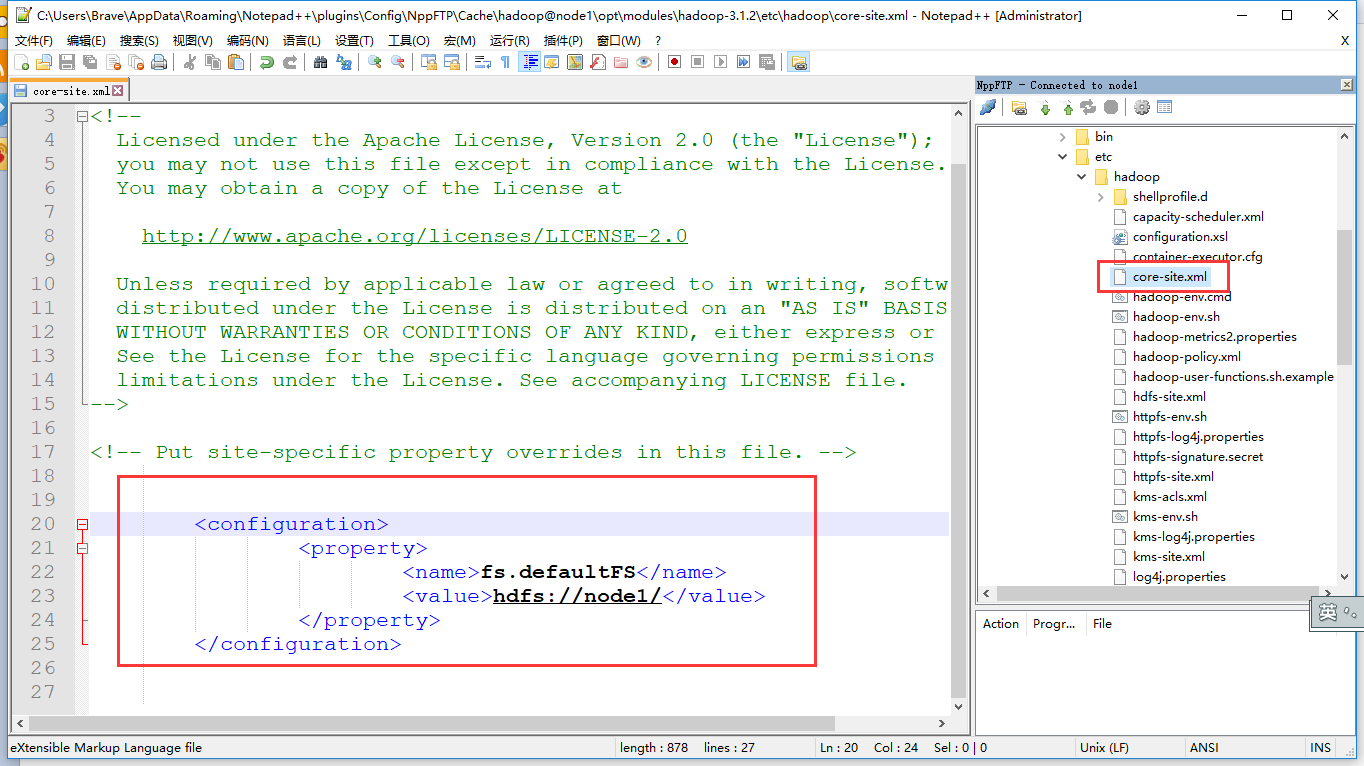

First, edit core-site.xml and add the following inside its `<configuration>` element:

```xml
<property>
  <name>fs.defaultFS</name>
  <value>hdfs://node1/</value>
</property>
```
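Every `*-site.xml` file keeps its `<property>` entries inside a single `<configuration>` root element. Here is a minimal sketch that builds such a file from a plain Python dict with the standard library. The helper name `render_site_xml` is hypothetical and is not a Hadoop API. `ET.indent` requires Python 3.9 or later.

```python
import xml.etree.ElementTree as ET


def render_site_xml(props):
    """Render a Hadoop-style *-site.xml document from a {name: value} dict."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    ET.indent(root)  # pretty-print with two-space indentation (Python 3.9+)
    body = ET.tostring(root, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body + "\n"


# The core-site.xml property from this step.
print(render_site_xml({"fs.defaultFS": "hdfs://node1/"}))
```

Writing this output to `etc/hadoop/core-site.xml` replaces the whole file. Merge in any properties you already have before you write it.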

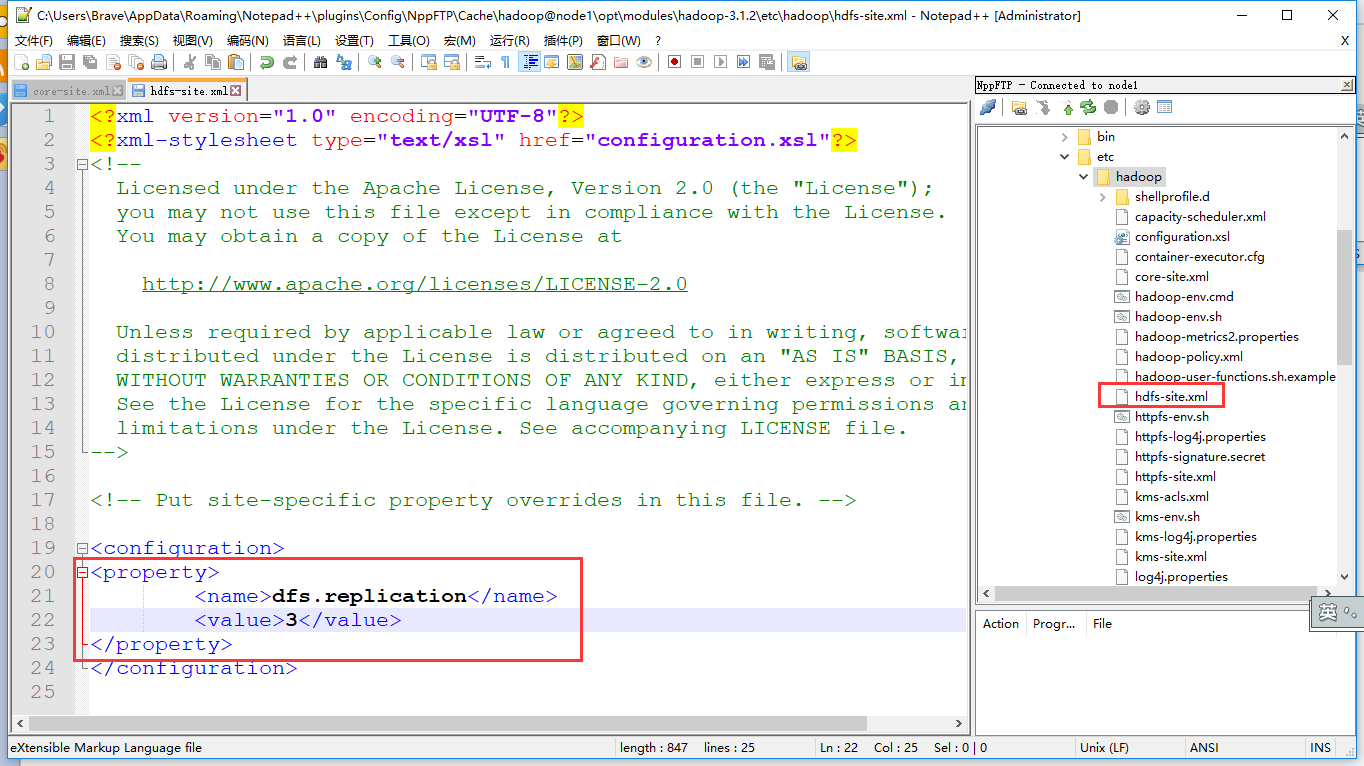

Edit hdfs-site.xml:

```xml
<property>
  <name>dfs.replication</name>
  <value>3</value>
</property>
```
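`dfs.replication` is the default number of copies HDFS keeps of each block. Every copy must live on a different DataNode, so a value of 3 only takes full effect when the cluster has at least three DataNodes. With fewer, new blocks stay under-replicated. The small sanity check below assumes the DataNodes are exactly the hosts listed in the `workers` file. The function name and host names are hypothetical.

```python
def check_replication(replication, datanodes):
    """Return a warning string if dfs.replication cannot be satisfied, else None."""
    if replication < 1:
        return "dfs.replication must be at least 1"
    if replication > len(datanodes):
        return (f"dfs.replication={replication} but only {len(datanodes)} DataNode(s); "
                "blocks will be under-replicated")
    return None


# Hypothetical DataNode list: three workers, matching dfs.replication=3.
print(check_replication(3, ["node2", "node3", "node4"]))  # None
print(check_replication(3, ["node2", "node3"]))
```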

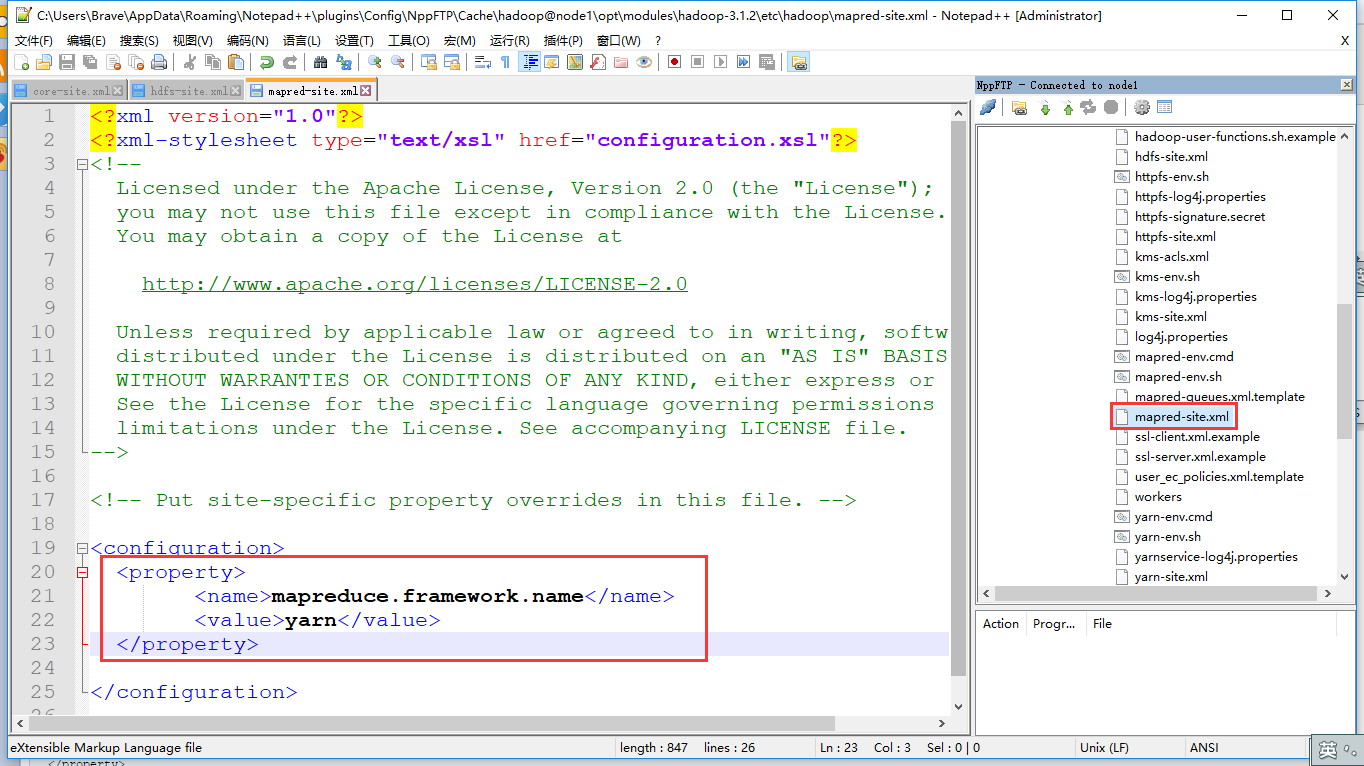

Edit mapred-site.xml:

```xml
<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>
```
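Setting `mapreduce.framework.name` to `yarn` sends MapReduce jobs to YARN. Without it, they run with the default local job runner. Before you copy a `*-site.xml` to the other nodes, you can confirm what it actually contains. The minimal sketch below parses such a file into a dict. The helper name is hypothetical.

```python
import xml.etree.ElementTree as ET


def read_site_xml(text):
    """Parse a Hadoop *-site.xml string into a {name: value} dict."""
    root = ET.fromstring(text.strip())
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name", default="").strip()
        value = prop.findtext("value", default="").strip()
        if name:  # ignore malformed entries without a <name>
            props[name] = value
    return props


mapred_site = """
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
"""
print(read_site_xml(mapred_site))  # {'mapreduce.framework.name': 'yarn'}
```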

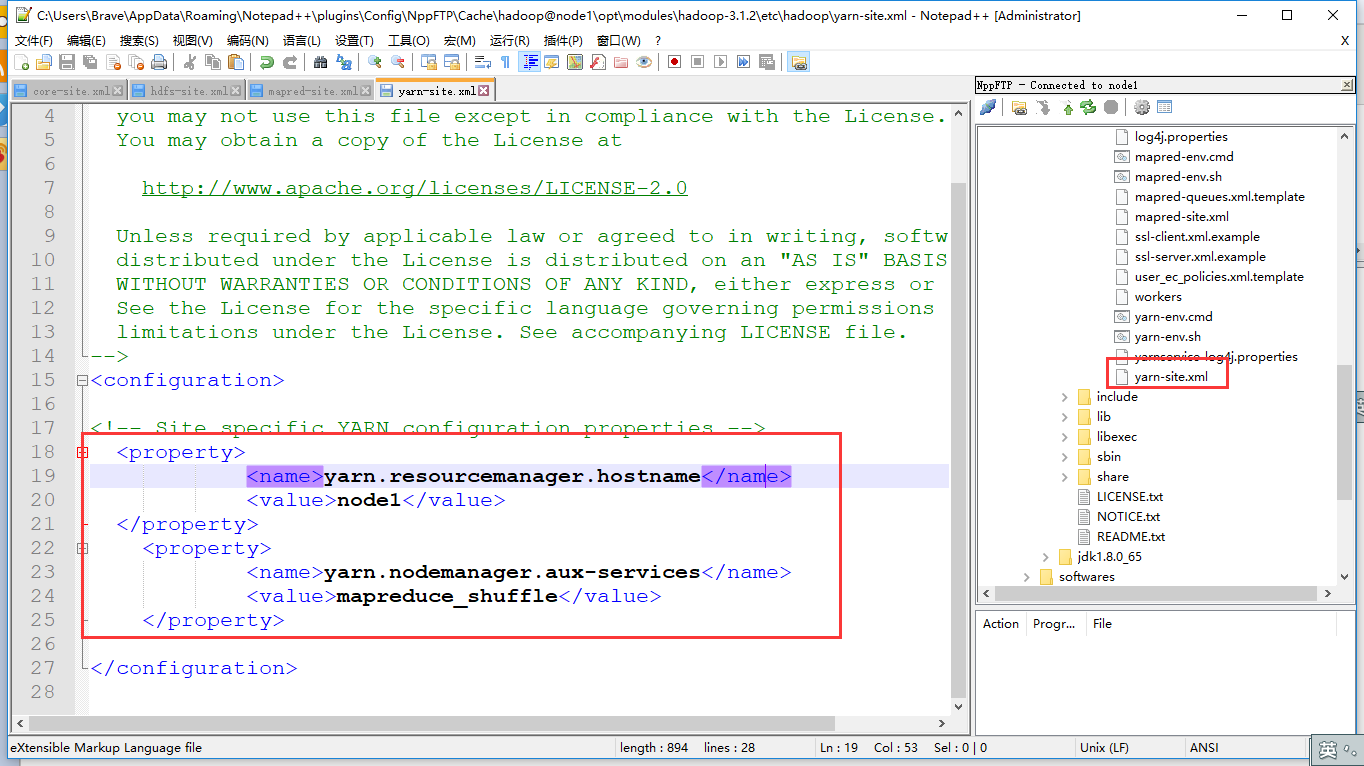

Edit yarn-site.xml:

```xml
<property>
  <name>yarn.resourcemanager.hostname</name>
  <value>node1</value>
</property>
<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>
```
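`yarn.resourcemanager.hostname` is the base for the ResourceManager's service addresses. In `yarn-default.xml`, each of them defaults to `${yarn.resourcemanager.hostname}:<port>`. That is why the job logs later in this post connect to `node1/192.168.86.131:8032` and report a tracking URL on `http://node1:8088`. The `mapreduce_shuffle` aux-service runs the shuffle handler inside each NodeManager, so reducers can fetch map output.

The sketch below expands the hostname into those default addresses. The port numbers are the `yarn-default.xml` defaults for Hadoop 3.x, and the helper name is hypothetical.

```python
# Default ResourceManager ports from yarn-default.xml (Hadoop 3.x).
RM_DEFAULT_PORTS = {
    "yarn.resourcemanager.address": 8032,                   # client RPC (job submission)
    "yarn.resourcemanager.scheduler.address": 8030,         # ApplicationMaster <-> scheduler
    "yarn.resourcemanager.resource-tracker.address": 8031,  # NodeManager heartbeats
    "yarn.resourcemanager.admin.address": 8033,             # rmadmin commands
    "yarn.resourcemanager.webapp.address": 8088,            # web UI
}


def rm_addresses(hostname):
    """Expand yarn.resourcemanager.hostname into the default RM addresses."""
    return {key: f"{hostname}:{port}" for key, port in RM_DEFAULT_PORTS.items()}


for key, addr in rm_addresses("node1").items():
    print(f"{key} = {addr}")
```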

Edit the `workers` file and list the DataNode hosts in it.
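The `workers` file (`etc/hadoop/workers`, which replaced the `slaves` file of Hadoop 2.x) lists one hostname per line. The start scripts launch a DataNode and a NodeManager on every host listed there. The post does not show the file's exact contents. The sketch below assumes the DataNodes are node2, node3 and node4, the machines the installation is copied to next. The function names are hypothetical.

```python
from pathlib import Path


def render_workers(hosts):
    """Return workers-file text: one hostname per line, blanks and duplicates dropped."""
    seen = []
    for host in (h.strip() for h in hosts):
        if host and host not in seen:
            seen.append(host)
    return "\n".join(seen) + "\n"


def write_workers(path, hosts):
    """Write the workers file, e.g. to $HADOOP_HOME/etc/hadoop/workers."""
    Path(path).write_text(render_workers(hosts))


# Assumed DataNode hosts (not stated in the post); adjust to your cluster.
print(render_workers(["node2", "node3", "node4"]), end="")
```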

Edit `hadoop-env.sh`.
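The post does not say which lines were changed in `hadoop-env.sh`. The setting most often edited there is `JAVA_HOME`, because daemons started over SSH may not inherit it from your login shell. The sketch below assumes that is the change. It uses the JDK path `/opt/modules/jdk1.8.0_65`, which appears in the container logs later in this post. The function name is hypothetical.

```python
import re


def set_java_home(env_text, java_home):
    """Set (or append) an active `export JAVA_HOME=...` line in hadoop-env.sh text."""
    line = f"export JAVA_HOME={java_home}"
    # Only an uncommented export line is replaced; commented examples are left alone.
    pattern = re.compile(r"^export JAVA_HOME=.*$", re.MULTILINE)
    if pattern.search(env_text):
        return pattern.sub(lambda _m: line, env_text, count=1)
    if env_text and not env_text.endswith("\n"):
        env_text += "\n"
    return env_text + line + "\n"


# Assumed JDK location, taken from the container command lines in the logs.
print(set_java_home("# export JAVA_HOME=\n", "/opt/modules/jdk1.8.0_65"), end="")
```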

Next, copy the Hadoop installation configured on node1 to the other machines:

```bash
scp -r hadoop-3.1.2/ hadoop@node2:/opt/modules/
scp -r hadoop-3.1.2/ hadoop@node3:/opt/modules/
scp -r hadoop-3.1.2/ hadoop@node4:/opt/modules/
```
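The three `scp` commands differ only in the hostname, so they can be generated in a loop. The minimal sketch below builds the same commands. It runs them with `subprocess` only when `dry_run=False`, and it assumes the hadoop user can already reach the other nodes over SSH. The function names are hypothetical.

```python
import subprocess


def scp_commands(src, hosts, dest, user="hadoop"):
    """Build one `scp -r` argv per target host."""
    return [["scp", "-r", src, f"{user}@{host}:{dest}"] for host in hosts]


def distribute(src, hosts, dest, user="hadoop", dry_run=True):
    """Print each command, and execute it only when dry_run is False."""
    for cmd in scp_commands(src, hosts, dest, user):
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on failure


distribute("hadoop-3.1.2/", ["node2", "node3", "node4"], "/opt/modules/")
```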

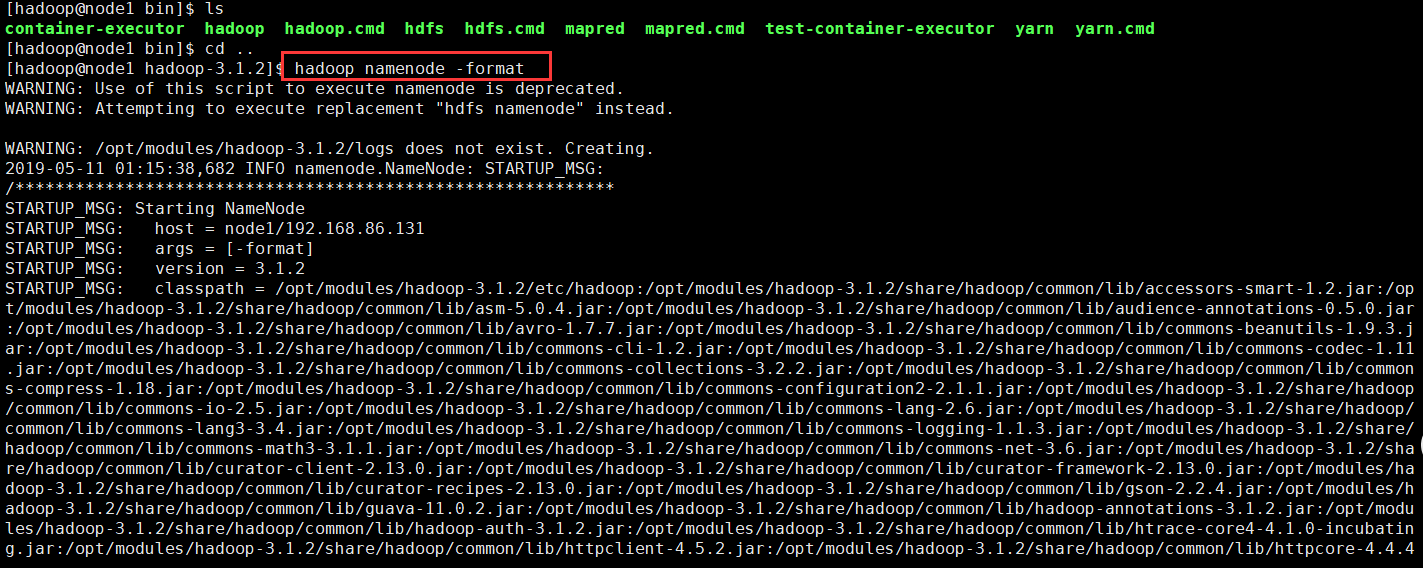

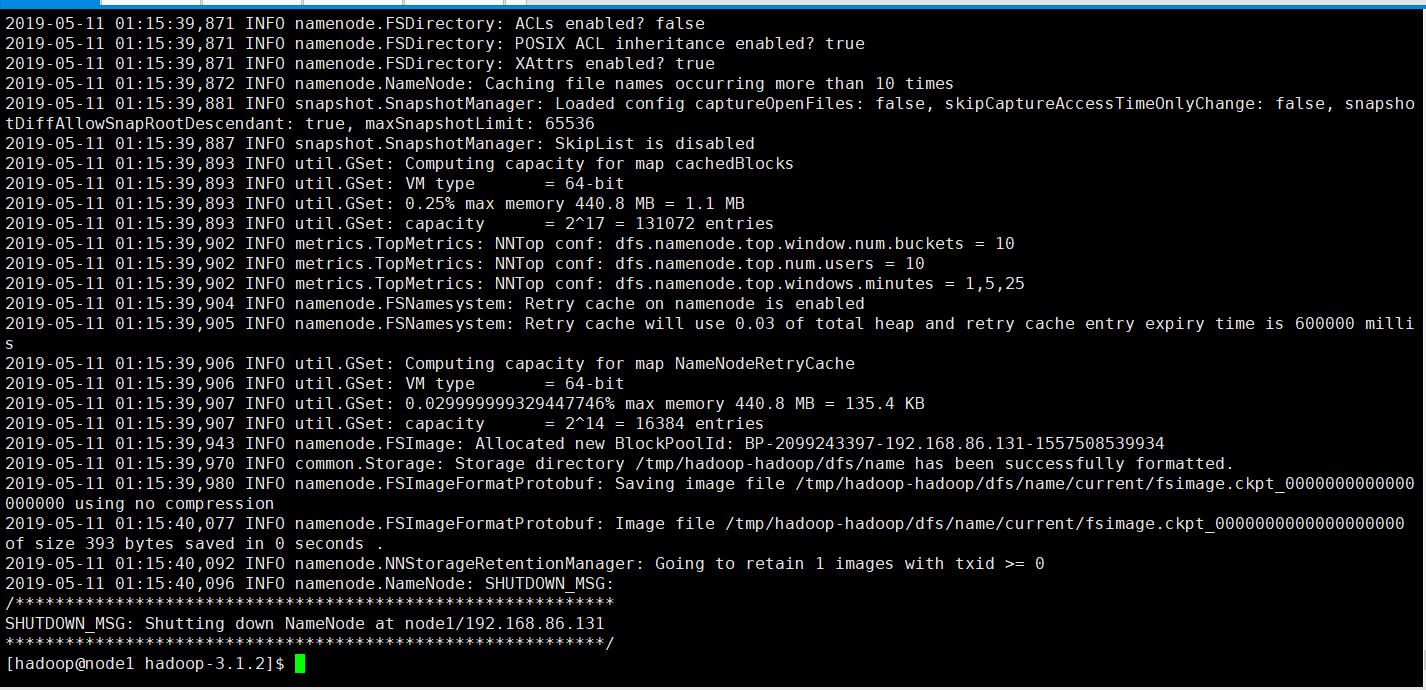

Next, format the NameNode:

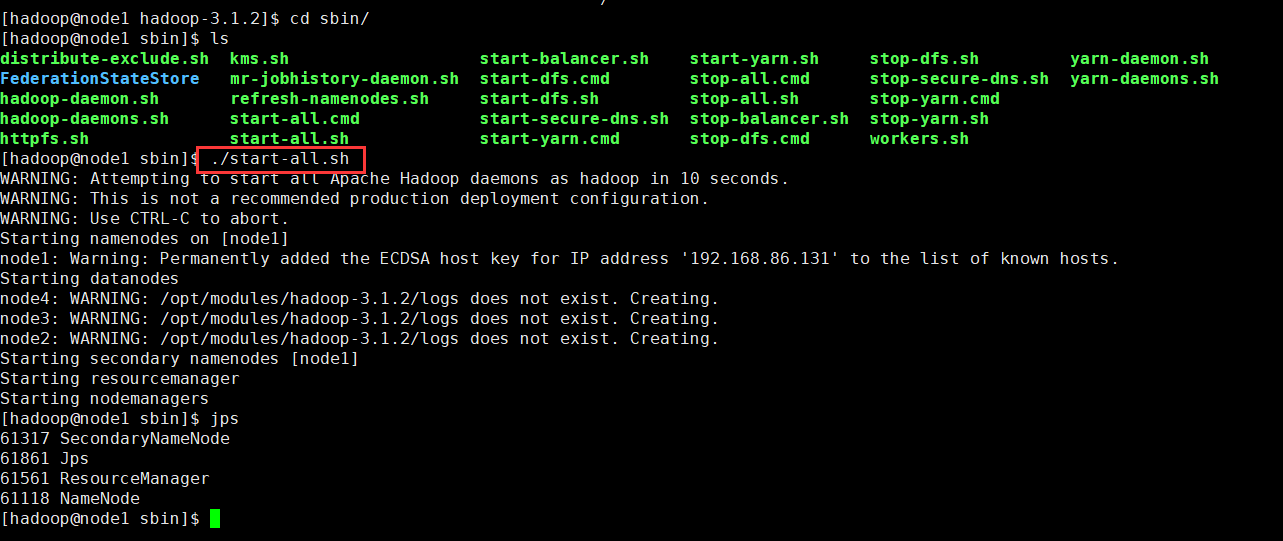

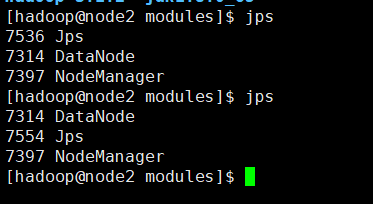

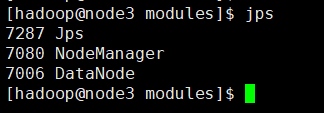

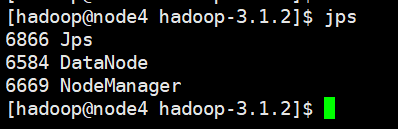
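Format the NameNode only once, and only on node1. In Hadoop 3 the command is `hdfs namenode -format`. Re-running it on an existing NameNode generates a new clusterID. DataNodes that still hold the old ID then fail to register with an "Incompatible clusterIDs" error.

The guard sketch below checks for that case first. This post does not set `dfs.namenode.name.dir`, so the metadata goes to the default `${hadoop.tmp.dir}/dfs/name`. For the hadoop user that is assumed to be `/tmp/hadoop-hadoop/dfs/name`. A formatted directory contains `current/VERSION`. The function names are hypothetical.

```python
import subprocess
from pathlib import Path

# Default name dir when hadoop.tmp.dir is left at /tmp/hadoop-${user.name}
# and the daemons run as the "hadoop" user (assumption based on this setup).
DEFAULT_NAME_DIR = "/tmp/hadoop-hadoop/dfs/name"


def is_formatted(name_dir=DEFAULT_NAME_DIR):
    """A formatted NameNode directory contains current/VERSION."""
    return (Path(name_dir) / "current" / "VERSION").is_file()


def format_namenode_once(name_dir=DEFAULT_NAME_DIR, dry_run=True):
    """Return the format command, or None if already formatted; run it only if not dry_run."""
    if is_formatted(name_dir):
        return None  # already formatted: keep the existing namespace and clusterID
    cmd = ["hdfs", "namenode", "-format"]
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd
```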

Start Hadoop:

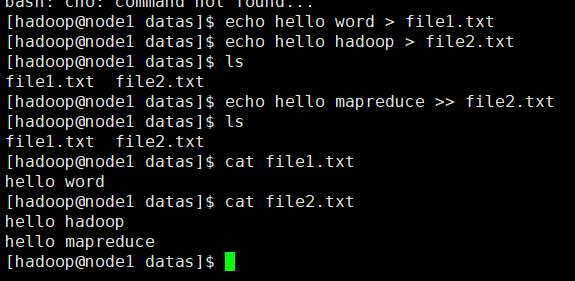
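In Hadoop 3 the cluster is normally started from node1 with `sbin/start-dfs.sh` followed by `sbin/start-yarn.sh`. Afterwards, `jps` on each machine lists the Java daemons that came up. The sketch below checks `jps` output against the daemons you expect. The expected layout is an assumption about this cluster: NameNode, SecondaryNameNode and ResourceManager on node1, and DataNode and NodeManager on each worker. The function names are hypothetical.

```python
def running_daemons(jps_output):
    """Extract Java process names from `jps` output (lines look like '<pid> <Name>')."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0].isdigit():
            names.add(parts[1])
    return names


def missing_daemons(jps_output, expected):
    """Return the expected daemons that are not running, sorted for stable output."""
    return sorted(set(expected) - running_daemons(jps_output))


# Assumed daemon layout for this cluster.
MASTER = {"NameNode", "SecondaryNameNode", "ResourceManager"}
WORKER = {"DataNode", "NodeManager"}
```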

Now let's run a small example.

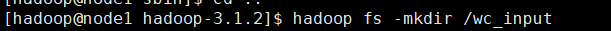

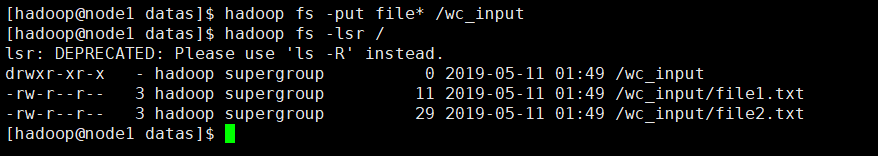

Create a directory in HDFS:

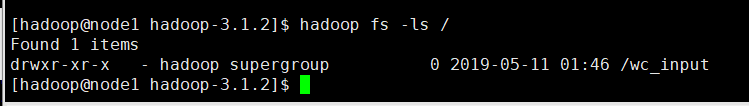

Upload the two files just created locally to HDFS:

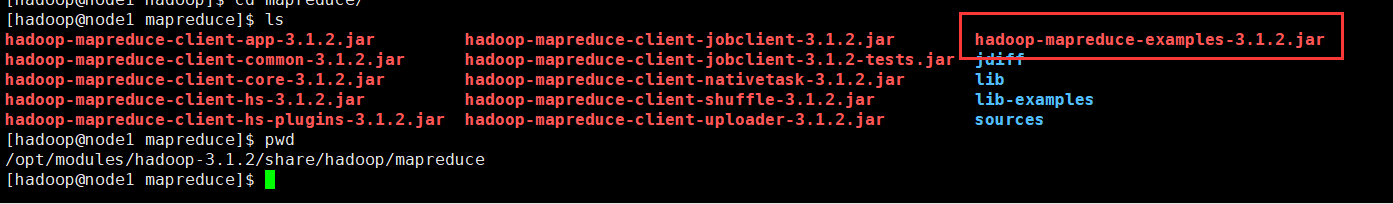
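These two steps use the HDFS shell commands `hdfs dfs -mkdir` and `hdfs dfs -put`. The input directory `/wc_input` matches the wordcount command used later. The local file names in the sketch below are hypothetical, because the post only shows them in screenshots. The function names are hypothetical too.

```python
import subprocess


def upload_commands(local_files, hdfs_dir="/wc_input"):
    """Build the HDFS shell commands: create the directory, then upload the files."""
    return [
        ["hdfs", "dfs", "-mkdir", "-p", hdfs_dir],  # -p: no error if it already exists
        ["hdfs", "dfs", "-put", *local_files, hdfs_dir],
    ]


def run(cmds, dry_run=True):
    """Print each command, and execute it only when dry_run is False."""
    for cmd in cmds:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


# Hypothetical local file names.
run(upload_commands(["words1.txt", "words2.txt"]))
```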

Run a MapReduce program using the examples JAR that ships with Hadoop:

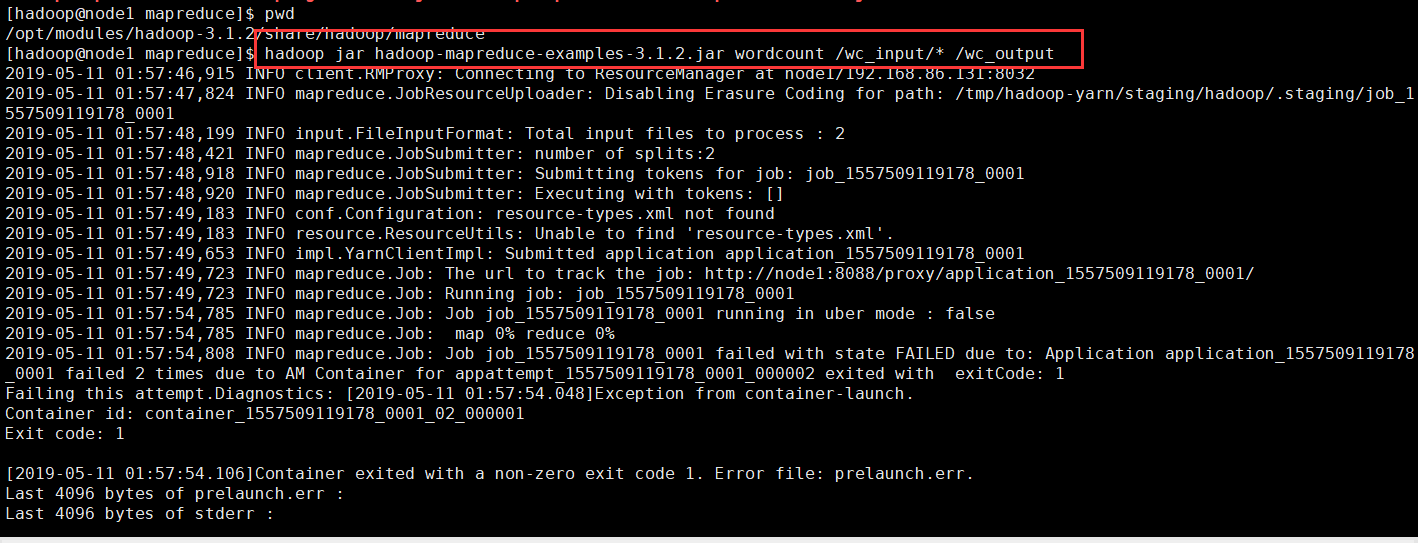
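The wordcount example works in three phases:

- The map phase splits each input line into words and emits a count of 1 for each word.
- A combiner pre-sums those counts within each map task.
- The reduce phase adds up the partial counts for each word.

The pure-Python sketch below shows that data flow, for intuition only. It is not how Hadoop executes the job, and the sample lines are made up.

```python
from collections import Counter
from itertools import chain


def map_phase(line):
    """Mapper: emit (word, 1) for each whitespace-separated token."""
    return [(word, 1) for word in line.split()]


def combine(pairs):
    """Combiner: pre-sum counts within one map task's output."""
    counts = Counter()
    for word, n in pairs:
        counts[word] += n
    return list(counts.items())


def reduce_phase(pairs):
    """Reducer: add up partial counts per word, emitted in key order."""
    totals = Counter()
    for word, n in pairs:
        totals[word] += n
    return sorted(totals.items())


def word_count(splits):
    """Each split stands in for one input file handled by one map task."""
    map_outputs = [combine(chain.from_iterable(map_phase(line) for line in split))
                   for split in splits]
    return reduce_phase(chain.from_iterable(map_outputs))


# Made-up input: two "files", like the two files uploaded to /wc_input.
print(word_count([["hello hadoop", "hello yarn"], ["hello hdfs"]]))
# [('hadoop', 1), ('hdfs', 1), ('hello', 3), ('yarn', 1)]
```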

As you can see, the job failed!

```text
[hadoop@node1 mapreduce]$ pwd
/opt/modules/hadoop-3.1.2/share/hadoop/mapreduce
[hadoop@node1 mapreduce]$ hadoop jar hadoop-mapreduce-examples-3.1.2.jar wordcount /wc_input/* /wc_output
2019-05-11 01:57:46,915 INFO client.RMProxy: Connecting to ResourceManager at node1/192.168.86.131:8032
2019-05-11 01:57:47,824 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/hadoop/.staging/job_1557509119178_0001
2019-05-11 01:57:48,199 INFO input.FileInputFormat: Total input files to process : 2
2019-05-11 01:57:48,421 INFO mapreduce.JobSubmitter: number of splits:2
2019-05-11 01:57:48,918 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1557509119178_0001
2019-05-11 01:57:48,920 INFO mapreduce.JobSubmitter: Executing with tokens: []
2019-05-11 01:57:49,183 INFO conf.Configuration: resource-types.xml not found
2019-05-11 01:57:49,183 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.
2019-05-11 01:57:49,653 INFO impl.YarnClientImpl: Submitted application application_1557509119178_0001
2019-05-11 01:57:49,723 INFO mapreduce.Job: The url to track the job: http://node1:8088/proxy/application_1557509119178_0001/
2019-05-11 01:57:49,723 INFO mapreduce.Job: Running job: job_1557509119178_0001
2019-05-11 01:57:54,785 INFO mapreduce.Job: Job job_1557509119178_0001 running in uber mode : false
2019-05-11 01:57:54,785 INFO mapreduce.Job: map 0% reduce 0%
2019-05-11 01:57:54,808 INFO mapreduce.Job: Job job_1557509119178_0001 failed with state FAILED due to: Application application_1557509119178_0001 failed 2 times due to AM Container for appattempt_1557509119178_0001_000002 exited with exitCode: 1
Failing this attempt.Diagnostics: [2019-05-11 01:57:54.048]Exception from container-launch.
Container id: container_1557509119178_0001_02_000001
Exit code: 1
[2019-05-11 01:57:54.106]Container exited with a non-zero exit code 1. Error file: prelaunch.err.
Last 4096 bytes of prelaunch.err :
Last 4096 bytes of stderr :
Error: Could not find or load main class org.apache.hadoop.mapreduce.v2.app.MRAppMaster
Please check whether your etc/hadoop/mapred-site.xml contains the below configuration:
<property>
<name>yarn.app.mapreduce.am.env</name>
<value>HADOOP_MAPRED_HOME=${full path of your hadoop distribution directory}</value>
</property>
<property>
<name>mapreduce.map.env</name>
<value>HADOOP_MAPRED_HOME=${full path of your hadoop distribution directory}</value>
</property>
<property>
<name>mapreduce.reduce.env</name>
<value>HADOOP_MAPRED_HOME=${full path of your hadoop distribution directory}</value>
</property>
[2019-05-11 01:57:54.106]Container exited with a non-zero exit code 1. Error file: prelaunch.err.
Last 4096 bytes of prelaunch.err :
Last 4096 bytes of stderr :
Error: Could not find or load main class org.apache.hadoop.mapreduce.v2.app.MRAppMaster
Please check whether your etc/hadoop/mapred-site.xml contains the below configuration:
<property>
<name>yarn.app.mapreduce.am.env</name>
<value>HADOOP_MAPRED_HOME=${full path of your hadoop distribution directory}</value>
</property>
<property>
<name>mapreduce.map.env</name>
<value>HADOOP_MAPRED_HOME=${full path of your hadoop distribution directory}</value>
</property>
<property>
<name>mapreduce.reduce.env</name>
<value>HADOOP_MAPRED_HOME=${full path of your hadoop distribution directory}</value>
</property>
For more detailed output, check the application tracking page: http://node1:8088/cluster/app/application_1557509119178_0001 Then click on links to logs of each attempt.
. Failing the application.
2019-05-11 01:57:54,840 INFO mapreduce.Job: Counters: 0
```

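Since I'm using Hadoop 3.x, here is how to fix it. The key line is `Error: Could not find or load main class org.apache.hadoop.mapreduce.v2.app.MRAppMaster`, which means the containers cannot find the MapReduce classes. The message itself names the fix: set `HADOOP_MAPRED_HOME` for the ApplicationMaster, map, and reduce containers.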

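Add the following to mapred-site.xml. This is the complete file. `/opt/modules/hadoop-3.1.2` is the installation directory on this cluster, so substitute your own path: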

```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>yarn.app.mapreduce.am.env</name>
    <value>HADOOP_MAPRED_HOME=/opt/modules/hadoop-3.1.2</value>
  </property>
  <property>
    <name>mapreduce.map.env</name>
    <value>HADOOP_MAPRED_HOME=/opt/modules/hadoop-3.1.2</value>
  </property>
  <property>
    <name>mapreduce.reduce.env</name>
    <value>HADOOP_MAPRED_HOME=/opt/modules/hadoop-3.1.2</value>
  </property>
</configuration>
```
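The three `*.env` properties all carry the same `HADOOP_MAPRED_HOME` value. When the installation path differs between setups, generating them avoids copy-paste mistakes. The sketch below builds the complete mapred-site.xml shown above for a given install path. The helper name is hypothetical, and `ET.indent` needs Python 3.9 or later.

```python
import xml.etree.ElementTree as ET


def mapred_site(hadoop_home):
    """Build mapred-site.xml for YARN with HADOOP_MAPRED_HOME set for AM, map and reduce."""
    env = f"HADOOP_MAPRED_HOME={hadoop_home}"
    props = {
        "mapreduce.framework.name": "yarn",
        "yarn.app.mapreduce.am.env": env,  # MRAppMaster container
        "mapreduce.map.env": env,          # map task containers
        "mapreduce.reduce.env": env,       # reduce task containers
    }
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    ET.indent(root)  # Python 3.9+
    return ET.tostring(root, encoding="unicode") + "\n"


print(mapred_site("/opt/modules/hadoop-3.1.2"))
```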

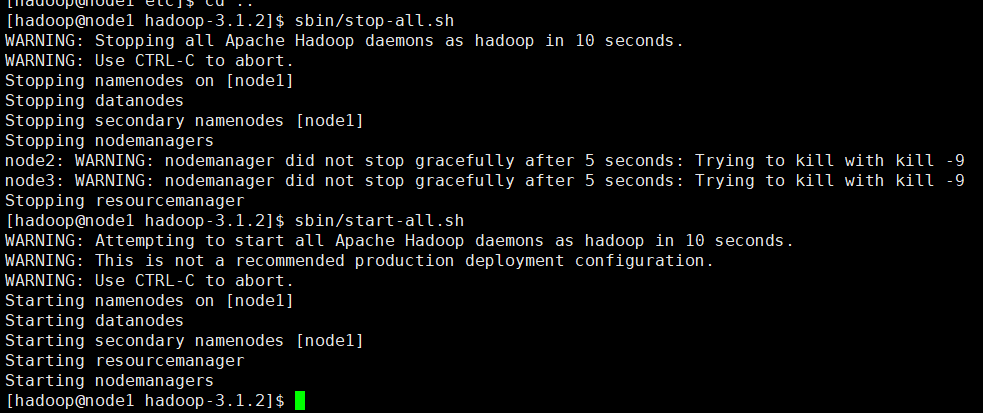

Copy the updated configuration file to the other three nodes.

Then restart Hadoop.

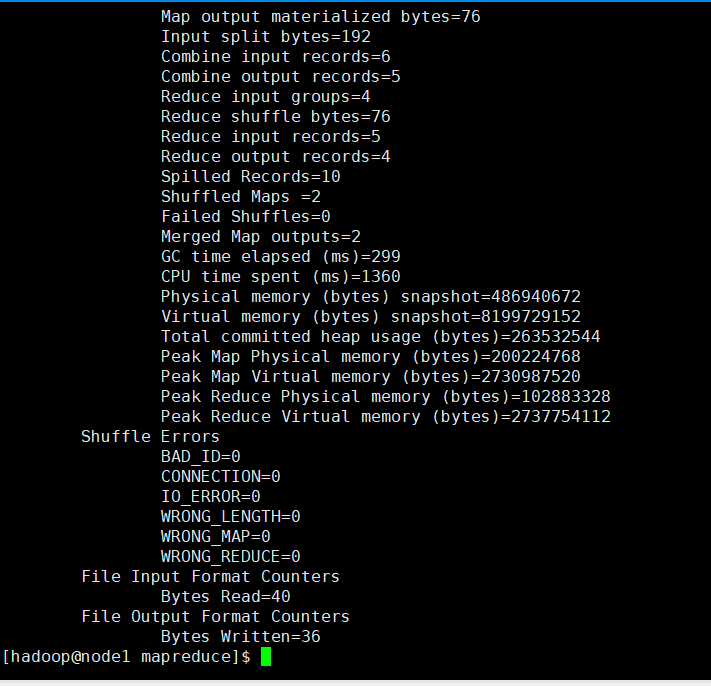

Run the program again:

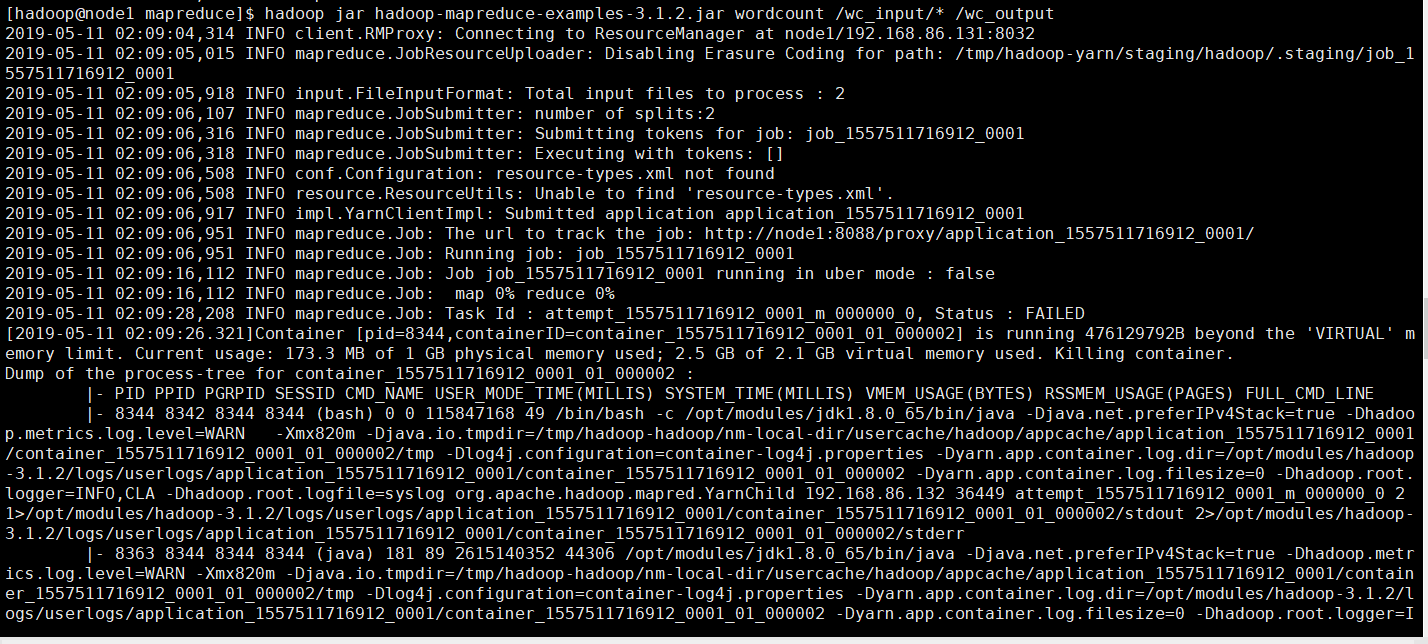

```text
[hadoop@node1 mapreduce]$ hadoop jar hadoop-mapreduce-examples-3.1.2.jar wordcount /wc_input/* /wc_output
2019-05-11 02:09:04,314 INFO client.RMProxy: Connecting to ResourceManager at node1/192.168.86.131:8032
2019-05-11 02:09:05,015 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/hadoop/.staging/job_1557511716912_0001
2019-05-11 02:09:05,918 INFO input.FileInputFormat: Total input files to process : 2
2019-05-11 02:09:06,107 INFO mapreduce.JobSubmitter: number of splits:2
2019-05-11 02:09:06,316 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1557511716912_0001
2019-05-11 02:09:06,318 INFO mapreduce.JobSubmitter: Executing with tokens: []
2019-05-11 02:09:06,508 INFO conf.Configuration: resource-types.xml not found
2019-05-11 02:09:06,508 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.
2019-05-11 02:09:06,917 INFO impl.YarnClientImpl: Submitted application application_1557511716912_0001
2019-05-11 02:09:06,951 INFO mapreduce.Job: The url to track the job: http://node1:8088/proxy/application_1557511716912_0001/
2019-05-11 02:09:06,951 INFO mapreduce.Job: Running job: job_1557511716912_0001
2019-05-11 02:09:16,112 INFO mapreduce.Job: Job job_1557511716912_0001 running in uber mode : false
2019-05-11 02:09:16,112 INFO mapreduce.Job: map 0% reduce 0%
2019-05-11 02:09:28,208 INFO mapreduce.Job: Task Id : attempt_1557511716912_0001_m_000000_0, Status : FAILED
[2019-05-11 02:09:26.321]Container [pid=8344,containerID=container_1557511716912_0001_01_000002] is running 476129792B beyond the 'VIRTUAL' memory limit. Current usage: 173.3 MB of 1 GB physical memory used; 2.5 GB of 2.1 GB virtual memory used. Killing container.
Dump of the process-tree for container_1557511716912_0001_01_000002 :
    |- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE
    |- 8344 8342 8344 8344 (bash) 0 0 115847168 49 /bin/bash -c /opt/modules/jdk1.8.0_65/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx820m -Djava.io.tmpdir=/tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1557511716912_0001/container_1557511716912_0001_01_000002/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/opt/modules/hadoop-3.1.2/logs/userlogs/application_1557511716912_0001/container_1557511716912_0001_01_000002 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 192.168.86.132 36449 attempt_1557511716912_0001_m_000000_0 2 1>/opt/modules/hadoop-3.1.2/logs/userlogs/application_1557511716912_0001/container_1557511716912_0001_01_000002/stdout 2>/opt/modules/hadoop-3.1.2/logs/userlogs/application_1557511716912_0001/container_1557511716912_0001_01_000002/stderr
    |- 8363 8344 8344 8344 (java) 181 89 2615140352 44306 /opt/modules/jdk1.8.0_65/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx820m -Djava.io.tmpdir=/tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1557511716912_0001/container_1557511716912_0001_01_000002/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/opt/modules/hadoop-3.1.2/logs/userlogs/application_1557511716912_0001/container_1557511716912_0001_01_000002 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 192.168.86.132 36449 attempt_1557511716912_0001_m_000000_0 2
[2019-05-11 02:09:27.201]Container killed on request. Exit code is 143
[2019-05-11 02:09:27.228]Container exited with a non-zero exit code 143.
2019-05-11 02:09:29,261 INFO mapreduce.Job: map 50% reduce 0%
2019-05-11 02:09:39,354 INFO mapreduce.Job: Task Id : attempt_1557511716912_0001_m_000000_2, Status : FAILED
[2019-05-11 02:09:50.092]Container [pid=8789,containerID=container_1557511716912_0001_01_000005] is running 462477824B beyond the 'VIRTUAL' memory limit. Current usage: 79.1 MB of 1 GB physical memory used; 2.5 GB of 2.1 GB virtual memory used. Killing container.
Dump of the process-tree for container_1557511716912_0001_01_000005 :
    |- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE
    |- 8803 8789 8789 8789 (java) 154 51 2601488384 19957 /opt/modules/jdk1.8.0_65/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx820m -Djava.io.tmpdir=/tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1557511716912_0001/container_1557511716912_0001_01_000005/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/opt/modules/hadoop-3.1.2/logs/userlogs/application_1557511716912_0001/container_1557511716912_0001_01_000005 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 192.168.86.132 36449 attempt_1557511716912_0001_m_000000_2 5
    |- 8789 8788 8789 8789 (bash) 0 0 115847168 287 /bin/bash -c /opt/modules/jdk1.8.0_65/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx820m -Djava.io.tmpdir=/tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1557511716912_0001/container_1557511716912_0001_01_000005/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/opt/modules/hadoop-3.1.2/logs/userlogs/application_1557511716912_0001/container_1557511716912_0001_01_000005 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 192.168.86.132 36449 attempt_1557511716912_0001_m_000000_2 5 1>/opt/modules/hadoop-3.1.2/logs/userlogs/application_1557511716912_0001/container_1557511716912_0001_01_000005/stdout 2>/opt/modules/hadoop-3.1.2/logs/userlogs/application_1557511716912_0001/container_1557511716912_0001_01_000005/stderr
[2019-05-11 02:09:50.628]Container killed on request. Exit code is 143
[2019-05-11 02:09:50.636]Container exited with a non-zero exit code 143.
2019-05-11 02:09:39,364 INFO mapreduce.Job: Task Id : attempt_1557511716912_0001_m_000000_1, Status : FAILED
[2019-05-11 02:09:50.636]Container [pid=8763,containerID=container_1557511716912_0001_01_000004] is running 462477824B beyond the 'VIRTUAL' memory limit. Current usage: 80.2 MB of 1 GB physical memory used; 2.5 GB of 2.1 GB virtual memory used. Killing container.
Dump of the process-tree for container_1557511716912_0001_01_000004 :
    |- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE
    |- 8773 8763 8763 8763 (java) 139 72 2601488384 20242 /opt/modules/jdk1.8.0_65/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx820m -Djava.io.tmpdir=/tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1557511716912_0001/container_1557511716912_0001_01_000004/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/opt/modules/hadoop-3.1.2/logs/userlogs/application_1557511716912_0001/container_1557511716912_0001_01_000004 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 192.168.86.132 36449 attempt_1557511716912_0001_m_000000_1 4
    |- 8763 8762 8763 8763 (bash) 0 0 115847168 287 /bin/bash -c /opt/modules/jdk1.8.0_65/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx820m -Djava.io.tmpdir=/tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1557511716912_0001/container_1557511716912_0001_01_000004/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/opt/modules/hadoop-3.1.2/logs/userlogs/application_1557511716912_0001/container_1557511716912_0001_01_000004 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 192.168.86.132 36449 attempt_1557511716912_0001_m_000000_1 4 1>/opt/modules/hadoop-3.1.2/logs/userlogs/application_1557511716912_0001/container_1557511716912_0001_01_000004/stdout 2>/opt/modules/hadoop-3.1.2/logs/userlogs/application_1557511716912_0001/container_1557511716912_0001_01_000004/stderr
[2019-05-11 02:09:50.745]Container killed on request. Exit code is 143
[2019-05-11 02:09:50.746]Container exited with a non-zero exit code 143.
2019-05-11 02:09:39,366 INFO mapreduce.Job: Task Id : attempt_1557511716912_0001_r_000000_0, Status : FAILED
[2019-05-11 02:09:38.370]Container [pid=8453,containerID=container_1557511716912_0001_01_000006] is running 440875520B beyond the 'VIRTUAL' memory limit. Current usage: 59.2 MB of 1 GB physical memory used; 2.5 GB of 2.1 GB virtual memory used. Killing container.
Dump of the process-tree for container_1557511716912_0001_01_000006 :
    |- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE
    |- 8453 8452 8453 8453 (bash) 0 0 115847168 302 /bin/bash -c /opt/modules/jdk1.8.0_65/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx820m -Djava.io.tmpdir=/tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1557511716912_0001/container_1557511716912_0001_01_000006/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/opt/modules/hadoop-3.1.2/logs/userlogs/application_1557511716912_0001/container_1557511716912_0001_01_000006 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -Dyarn.app.mapreduce.shuffle.logger=INFO,shuffleCLA -Dyarn.app.mapreduce.shuffle.logfile=syslog.shuffle -Dyarn.app.mapreduce.shuffle.log.filesize=0 -Dyarn.app.mapreduce.shuffle.log.backups=0 org.apache.hadoop.mapred.YarnChild 192.168.86.132 36449 attempt_1557511716912_0001_r_000000_0 6 1>/opt/modules/hadoop-3.1.2/logs/userlogs/application_1557511716912_0001/container_1557511716912_0001_01_000006/stdout 2>/opt/modules/hadoop-3.1.2/logs/userlogs/application_1557511716912_0001/container_1557511716912_0001_01_000006/stderr
    |- 8463 8453 8453 8453 (java) 86 35 2579886080 14860 /opt/modules/jdk1.8.0_65/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx820m -Djava.io.tmpdir=/tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1557511716912_0001/container_1557511716912_0001_01_000006/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/opt/modules/hadoop-3.1.2/logs/userlogs/application_1557511716912_0001/container_1557511716912_0001_01_000006 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -Dyarn.app.mapreduce.shuffle.logger=INFO,shuffleCLA -Dyarn.app.mapreduce.shuffle.logfile=syslog.shuffle -Dyarn.app.mapreduce.shuffle.log.filesize=0 -Dyarn.app.mapreduce.shuffle.log.backups=0 org.apache.hadoop.mapred.YarnChild 192.168.86.132 36449 attempt_1557511716912_0001_r_000000_0 6
[2019-05-11 02:09:38.403]Container killed on request. Exit code is 143
[2019-05-11 02:09:38.404]Container exited with a non-zero exit code 143.
2019-05-11 02:09:47,416 INFO mapreduce.Job: map 100% reduce 0%
2019-05-11 02:09:48,428 INFO mapreduce.Job: map 100% reduce 100%
2019-05-11 02:09:49,443 INFO mapreduce.Job: Job job_1557511716912_0001 completed successfully
2019-05-11 02:09:49,564 INFO mapreduce.Job: Counters: 56
    File System Counters
        FILE: Number of bytes read=70
        FILE: Number of bytes written=648103
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=232
        HDFS: Number of bytes written=36
        HDFS: Number of read operations=11
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=2
    Job Counters
        Failed map tasks=3
        Failed reduce tasks=1
        Launched map tasks=5
        Launched reduce tasks=2
        Other local map tasks=2
        Data-local map tasks=3
        Total time spent by all maps in occupied slots (ms)=44855
        Total time spent by all reduces in occupied slots (ms)=14105
        Total time spent by all map tasks (ms)=44855
        Total time spent by all reduce tasks (ms)=14105
        Total vcore-milliseconds taken by all map tasks=44855
        Total vcore-milliseconds taken by all reduce tasks=14105
        Total megabyte-milliseconds taken by all map tasks=45931520
        Total megabyte-milliseconds taken by all reduce tasks=14443520
    Map-Reduce Framework
        Map input records=3
        Map output records=6
        Map output bytes=64
        Map output materialized bytes=76
        Input split bytes=192
        Combine input records=6
        Combine output records=5
        Reduce input groups=4
        Reduce shuffle bytes=76
        Reduce input records=5
        Reduce output records=4
        Spilled Records=10
        Shuffled Maps =2
        Failed Shuffles=0
        Merged Map outputs=2
        GC time elapsed (ms)=299
        CPU time spent (ms)=1360
        Physical memory (bytes) snapshot=486940672
        Virtual memory (bytes) snapshot=8199729152
        Total committed heap usage (bytes)=263532544
        Peak Map Physical memory (bytes)=200224768
        Peak Map Virtual memory (bytes)=2730987520
        Peak Reduce Physical memory (bytes)=102883328
        Peak Reduce Virtual memory (bytes)=2737754112
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=40
    File Output Format Counters
        Bytes Written=36
[hadoop@node1 mapreduce]$
```

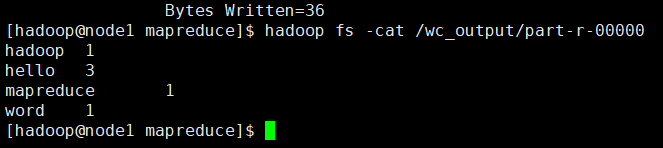

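This time the job completed successfully! However, several task attempts were still killed for exceeding YARN's virtual memory limit ("2.5 GB of 2.1 GB virtual memory used") and had to be retried. The counters report 3 failed map tasks and 1 failed reduce task.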
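In the second run, several attempts were killed with `is running ...B beyond the 'VIRTUAL' memory limit`. The NodeManager caps each container's virtual memory at its physical allocation times `yarn.nodemanager.vmem-pmem-ratio`, which defaults to 2.1. Here the allocation was 1 GB, according to the log. The JVMs reserved about 2.5 GB of address space while using less than 200 MB of physical memory, so they went over the cap.

The sketch below redoes that arithmetic with the byte counts from the first failed map attempt's process-tree dump (the bash wrapper plus its java child). Treating the ratio as a 32-bit float, as YARN reads it, reproduces the logged excess exactly. The function names are hypothetical. The yarn-site.xml settings that govern this check are `yarn.nodemanager.vmem-pmem-ratio` and `yarn.nodemanager.vmem-check-enabled`. This post does not change either one.

```python
import struct

GIB = 1024 ** 3


def as_float32(x):
    """Round a Python float to single precision (YARN reads the ratio as a Java float)."""
    return struct.unpack("f", struct.pack("f", x))[0]


def vmem_limit_bytes(pmem_bytes, vmem_pmem_ratio=2.1):
    """Virtual memory allowed for a container: physical allocation x vmem-pmem ratio."""
    return int(pmem_bytes * as_float32(vmem_pmem_ratio))


def vmem_excess(vmem_used_bytes, pmem_bytes, vmem_pmem_ratio=2.1):
    """Bytes over the limit; a positive value means the container gets killed."""
    return vmem_used_bytes - vmem_limit_bytes(pmem_bytes, vmem_pmem_ratio)


# First failed map attempt: bash wrapper + java child from the process-tree dump.
used = 115847168 + 2615140352
print(vmem_limit_bytes(GIB))        # 2254857728 (the "2.1 GB" in the log)
print(vmem_excess(used, GIB))       # 476129792, as reported in the log
print(vmem_excess(used, GIB, 4.0))  # negative: this ratio would pass the check
```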

Let's take a look at the results.
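The results are written to `/wc_output`, the output directory given on the command line. With a single reducer, all counts land in one file, `part-r-00000`, which you can print with `hdfs dfs -cat /wc_output/part-r-00000`. Each line holds a word, a tab, and the word's count. The sketch below parses that text and lists the most frequent words. The function names are hypothetical.

```python
def parse_wordcount(text):
    """Parse wordcount output lines of the form 'word<TAB>count' into a dict."""
    counts = {}
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        word, _, count = line.rpartition("\t")
        counts[word] = int(count)
    return counts


def top_words(counts, n=10):
    """Most frequent words first; ties broken alphabetically."""
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[:n]
```

For example, save the output with `hdfs dfs -cat /wc_output/part-r-00000 > result.txt` and then call `top_words(parse_wordcount(open("result.txt").read()))`.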