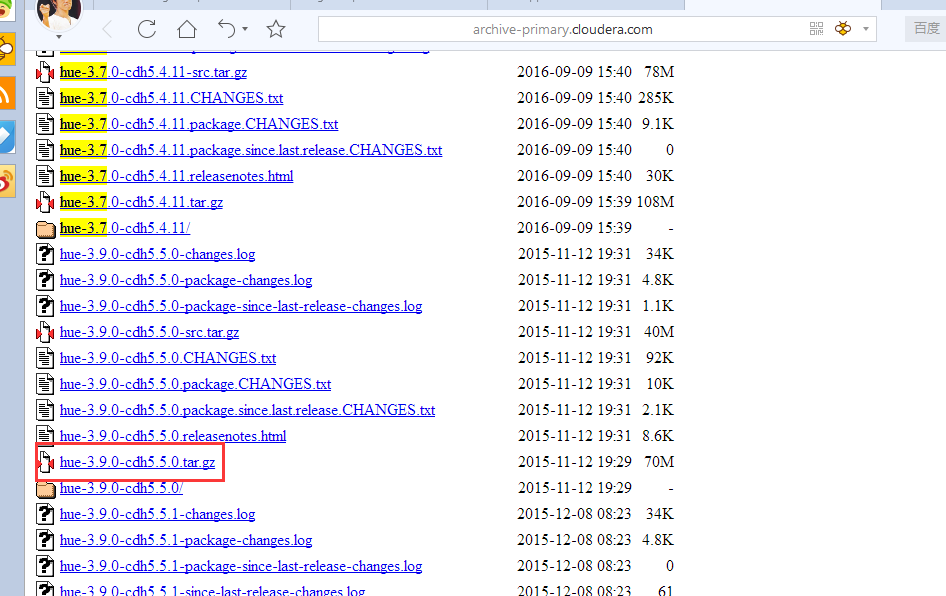

Download the release

CDH releases: http://archive-primary.cloudera.com/cdh5/cdh/5/

We download this one:

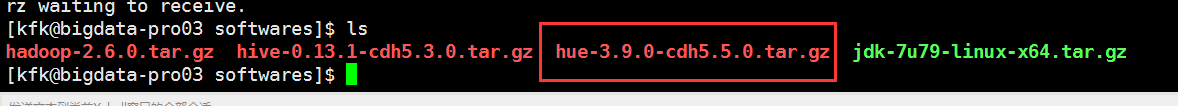

This is the one I downloaded.

Extract it:

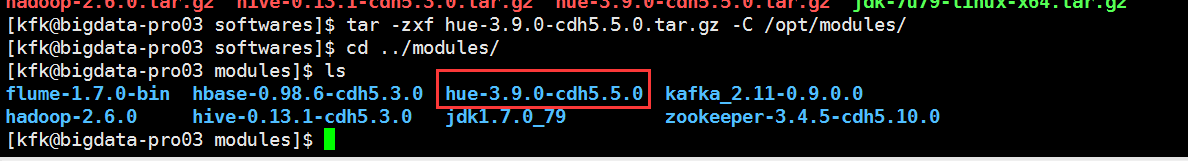

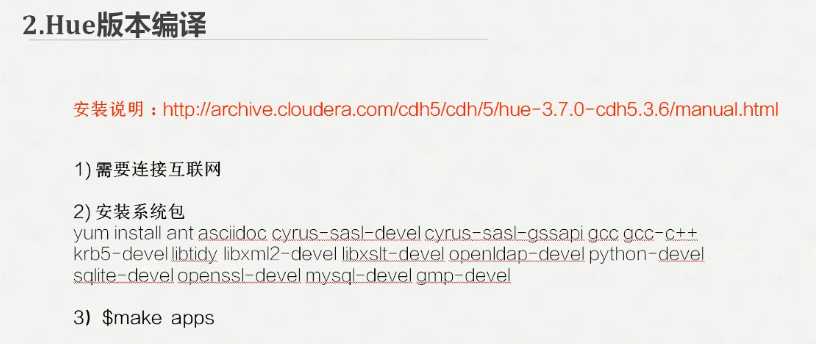

Install the required system packages:

    yum install ant asciidoc cyrus-sasl-devel cyrus-sasl-gssapi gcc gcc-c++ krb5-devel libtidy libxml2-devel libxslt-devel openldap-devel python-devel sqlite-devel openssl-devel mysql-devel gmp-devel
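
Before starting the build, it can help to confirm that every package from the yum command is actually present. The sketch below is a hypothetical helper, not part of Hue. It asks rpm whether any installed package provides each name, using `rpm -q --whatprovides`. The aim is that a replacement package that provides a name (for example, a MariaDB package providing `mysql-devel` on some CentOS releases) still counts as installed.

```python
import shutil
import subprocess

# Package names copied from the yum command above.
REQUIRED_PACKAGES = [
    "ant", "asciidoc", "cyrus-sasl-devel", "cyrus-sasl-gssapi", "gcc",
    "gcc-c++", "krb5-devel", "libtidy", "libxml2-devel", "libxslt-devel",
    "openldap-devel", "python-devel", "sqlite-devel", "openssl-devel",
    "mysql-devel", "gmp-devel",
]


def rpm_provides(name):
    """True if some installed package provides `name` (rpm -q --whatprovides)."""
    if shutil.which("rpm") is None:
        return False  # not an RPM-based system, so nothing can be confirmed
    result = subprocess.run(
        ["rpm", "-q", "--whatprovides", name],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0


def missing_packages(packages, is_installed=rpm_provides):
    """Return the names for which `is_installed` is False, in input order."""
    return [name for name in packages if not is_installed(name)]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED_PACKAGES)
    print("Missing:", " ".join(missing) if missing else "none")
```

Because the checker is a parameter, the selection logic can be exercised without rpm, and the real check only runs on the build host.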

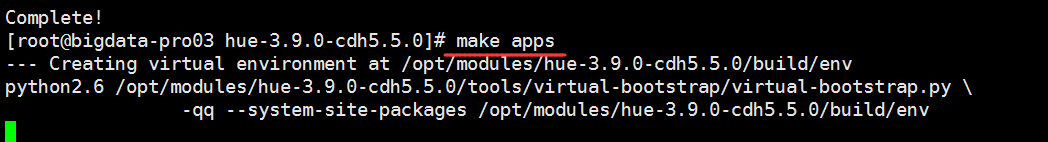

The next step takes a while, at least three to five minutes, so please be patient.

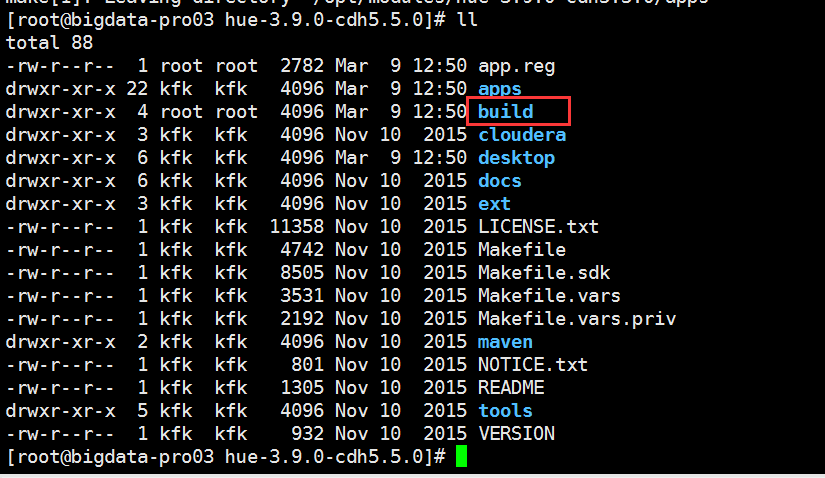

The build has now succeeded!

You can see that the build directory has been generated.
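
One quick way to confirm the build finished is to look for the launchers it creates. This sketch assumes the standard Hue source layout, in which a successful build leaves `build/env/bin/hue` and `build/env/bin/supervisor` behind. The directory you pass in is your own extraction path.

```python
from pathlib import Path

# Assumed layout: a finished build leaves these launchers in build/env/bin.
EXPECTED_ARTIFACTS = ["build/env/bin/hue", "build/env/bin/supervisor"]


def missing_artifacts(hue_home):
    """Return the expected build outputs that do not exist under hue_home."""
    root = Path(hue_home)
    return [rel for rel in EXPECTED_ARTIFACTS if not (root / rel).exists()]
```

For example, `missing_artifacts("/path/to/hue-3.9.0-cdh5.5.0")` uses a hypothetical path and should return an empty list after a successful build.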

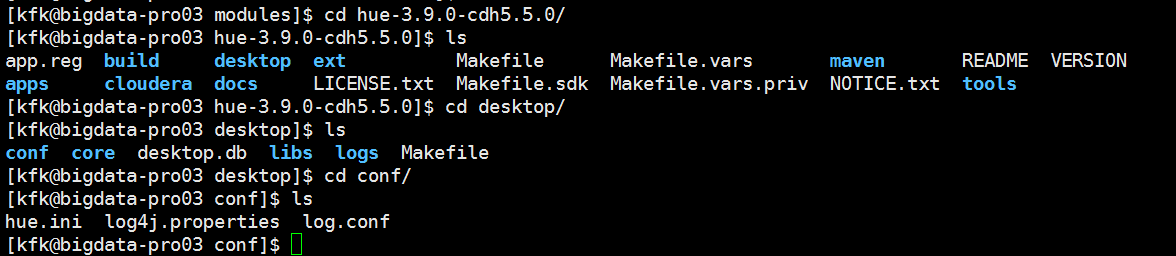

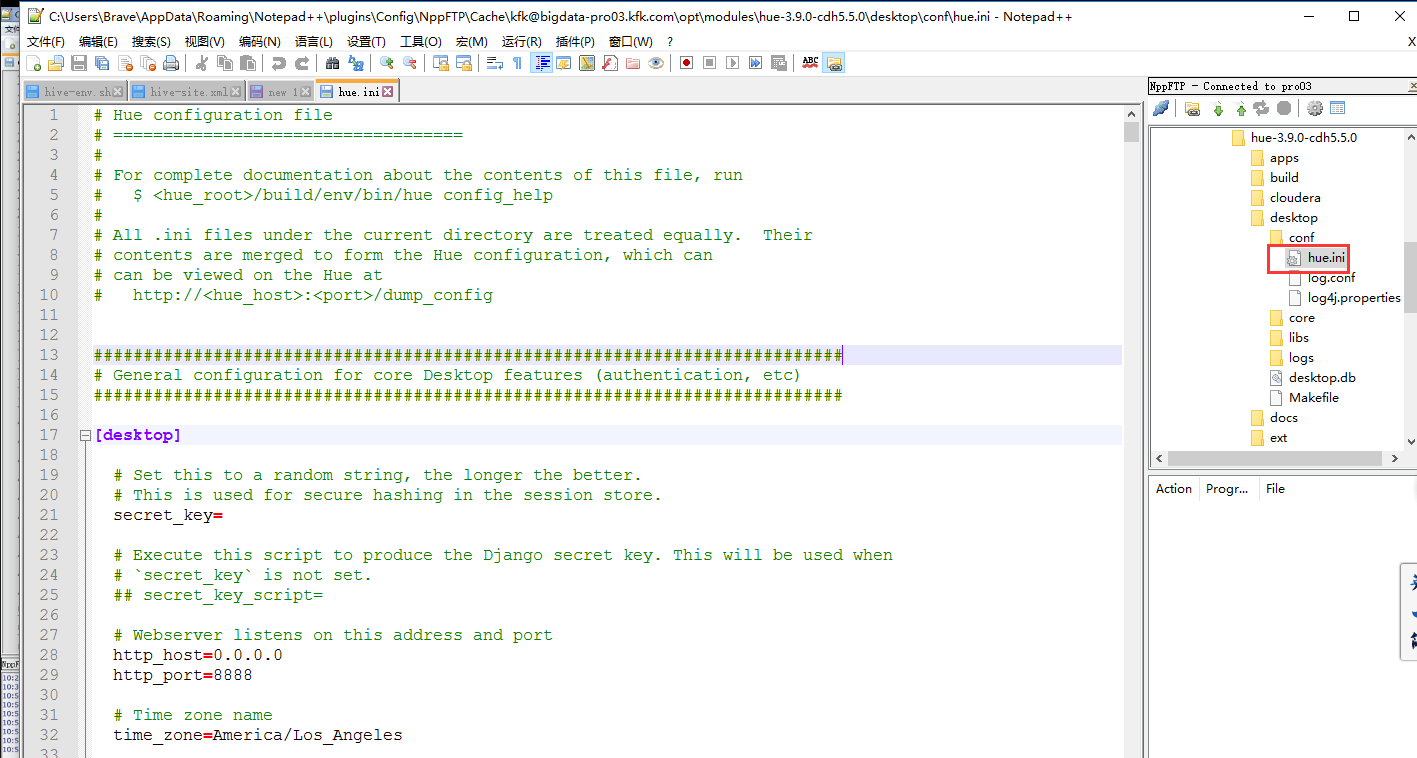

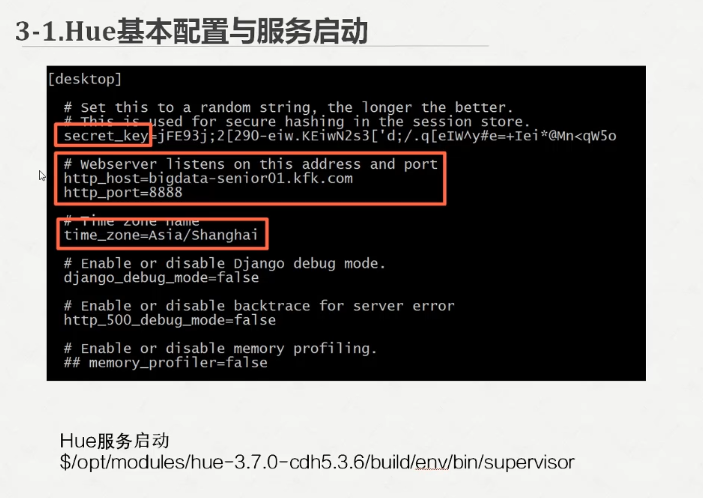

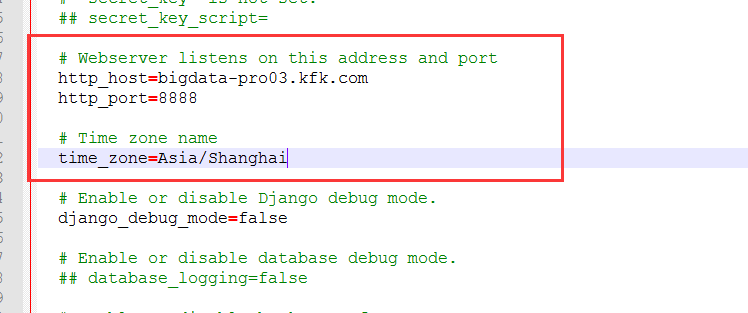

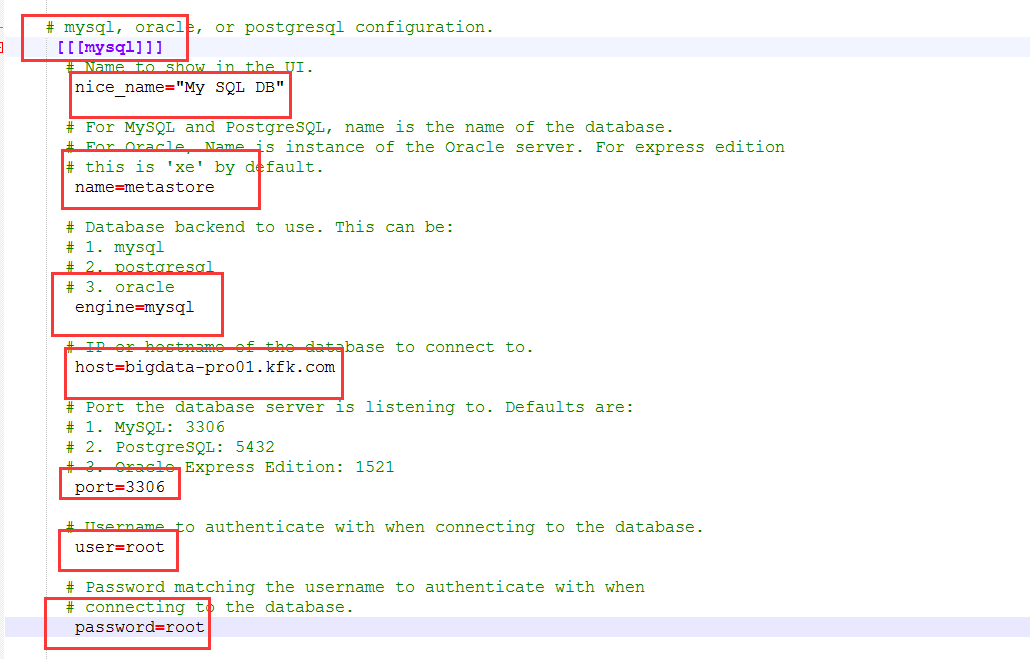

Now open this file in Notepad.

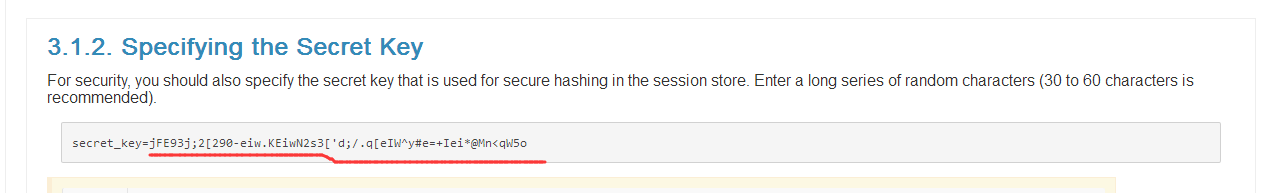

Copy this string straight from the official documentation.

Official reference: http://archive.cloudera.com/cdh5/cdh/5/hue-3.9.0-cdh5.5.0/manual.html
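
This sketch assumes the string copied from the manual is the sample `secret_key` for the `[desktop]` section of hue.ini. The screenshots are not reproduced here, so that is an assumption. In that case, a random value of your own is preferable to a published example.

The sketch generates one and renders it together with `http_host`, `http_port` and `time_zone`. The host and port match the URL used later in this walkthrough. `Asia/Shanghai` is only an assumed time zone.

```python
import secrets
import string

# Letters, digits, '-' and '_' only: '#' and ';' can be read as comment
# markers in an .ini file, so they are left out to be safe.
ALPHABET = string.ascii_letters + string.digits + "-_"


def make_secret_key(length=50):
    """Generate a random value for [desktop] secret_key."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def desktop_section(host, port, time_zone, secret_key):
    """Render a minimal [desktop] block as hue.ini text."""
    return "\n".join([
        "[desktop]",
        f"  secret_key={secret_key}",
        f"  http_host={host}",
        f"  http_port={port}",
        f"  time_zone={time_zone}",
    ])


if __name__ == "__main__":
    # Assumed time zone; host and port come from the Hue URL in this post.
    print(desktop_section("bigdata-pro03.kfk.com", 8888, "Asia/Shanghai",
                          make_secret_key()))
```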

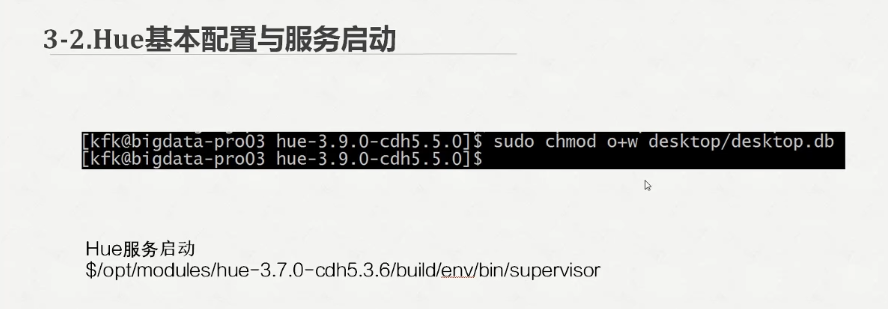

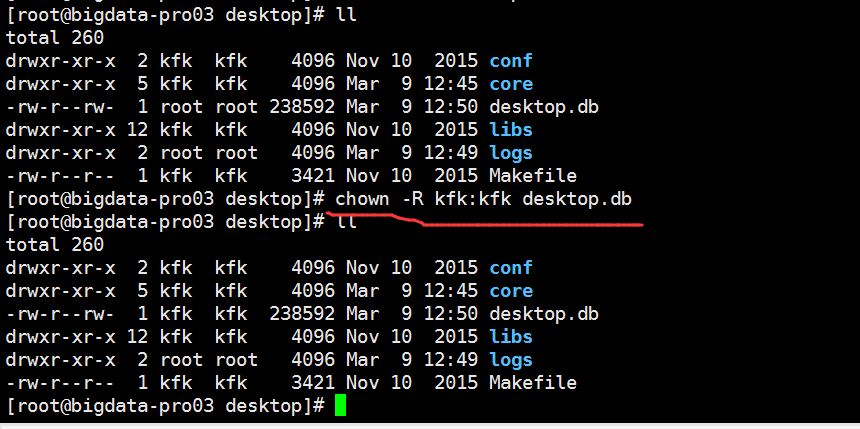

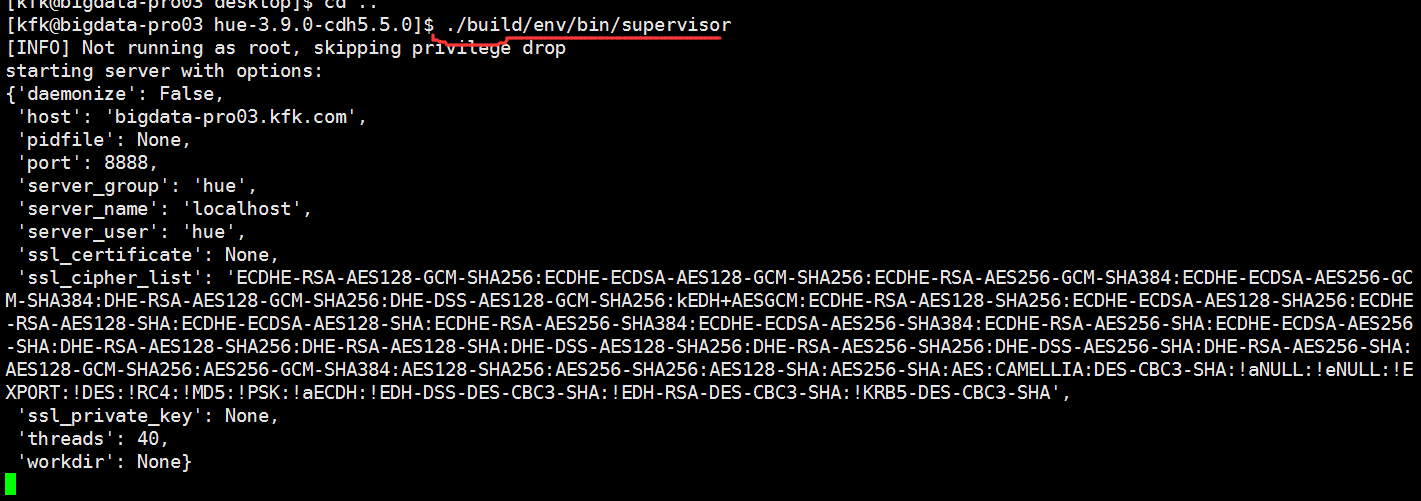

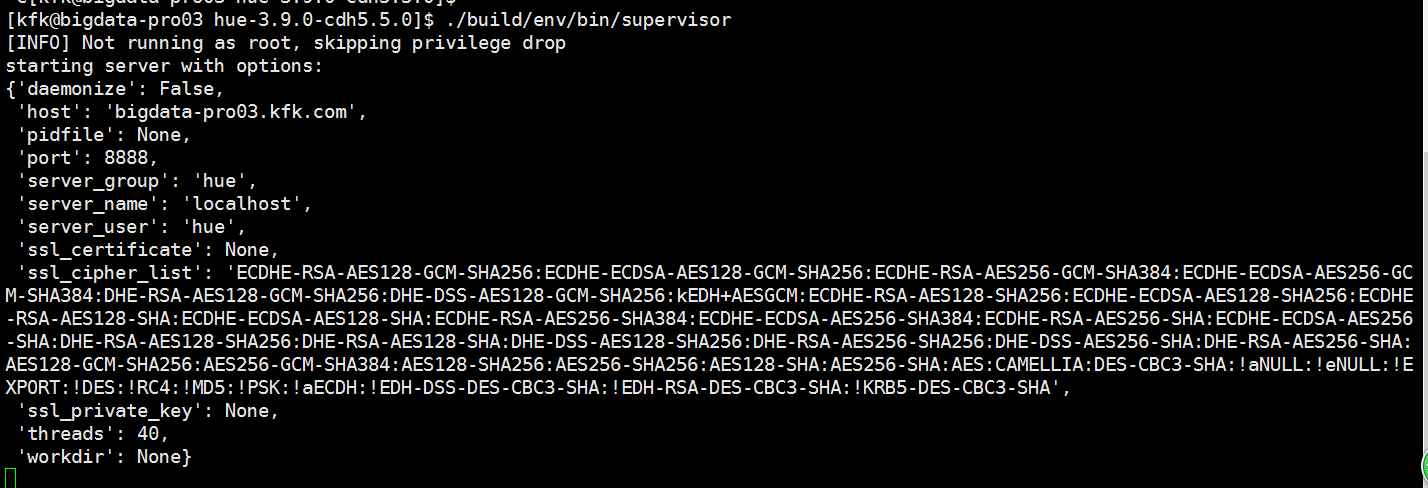

Next, start the service.

The service starts and prints a long stream of log output, which we can ignore.
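
Since the startup output is noisy, a simpler signal is whether the Hue web port accepts connections. The following is a minimal sketch using only the standard library. It tries a plain TCP connect and does not check that the page itself renders.

```python
import socket


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out or unresolvable
        return False
```

For this walkthrough the call would be `port_open("bigdata-pro03.kfk.com", 8888)`, matching the Hue URL used below.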

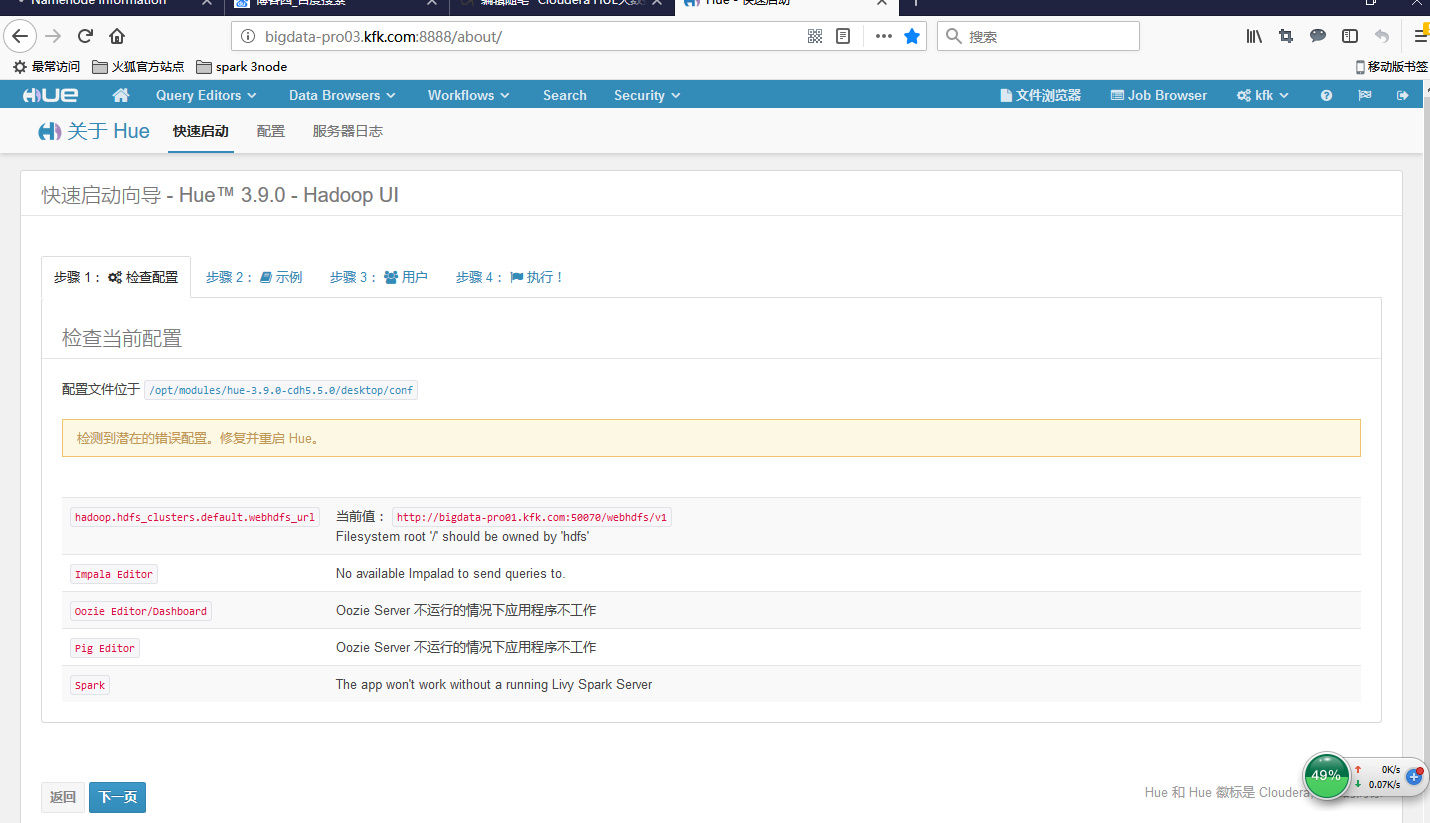

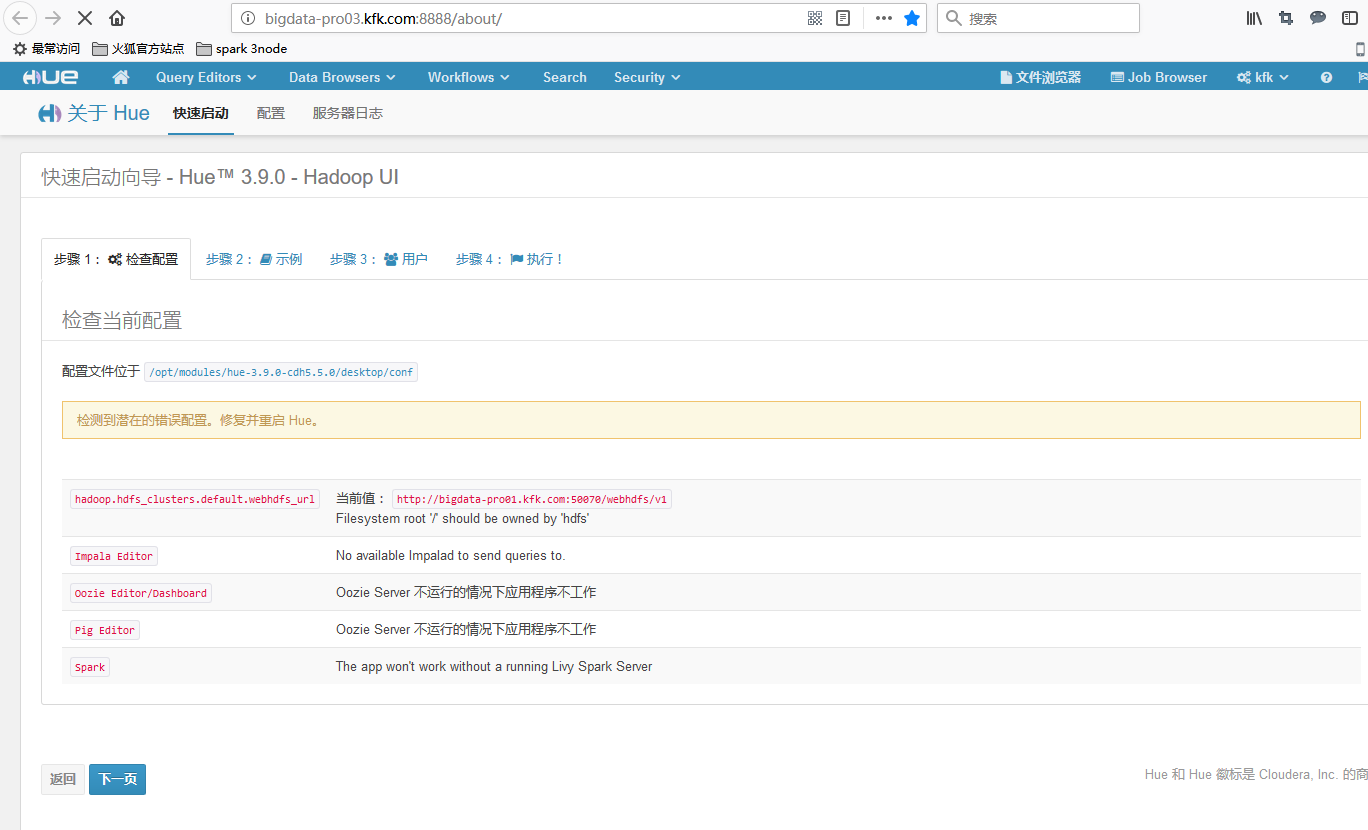

http://bigdata-pro03.kfk.com:8888

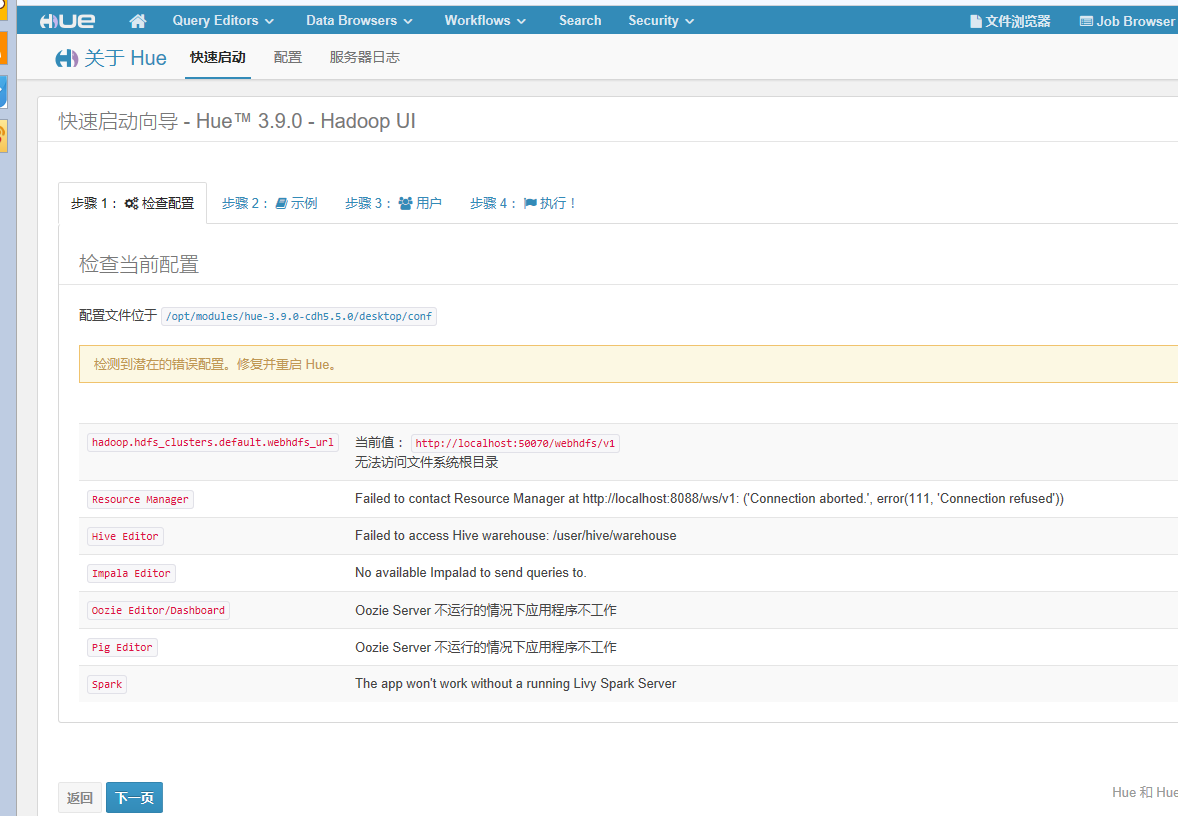

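Open this address to see the web UI.

Note the part I circled: if this is your first login, read it carefully.

Here I use username kfk and password kfk.

Click to create the user.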

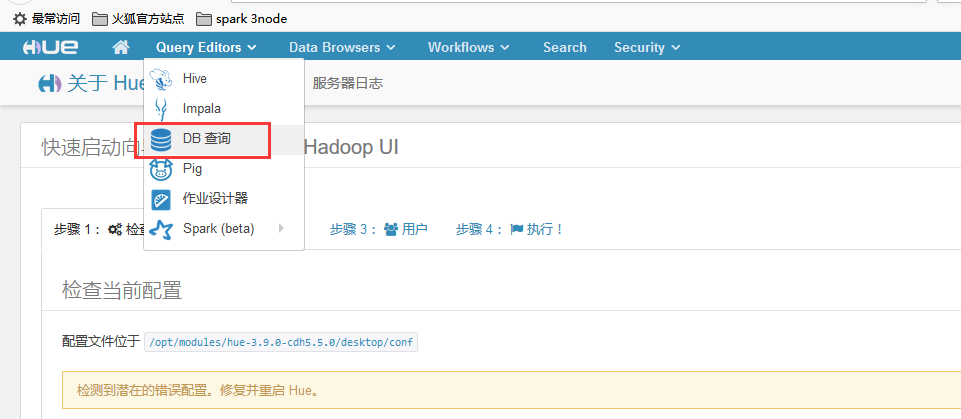

We are now in the main interface.

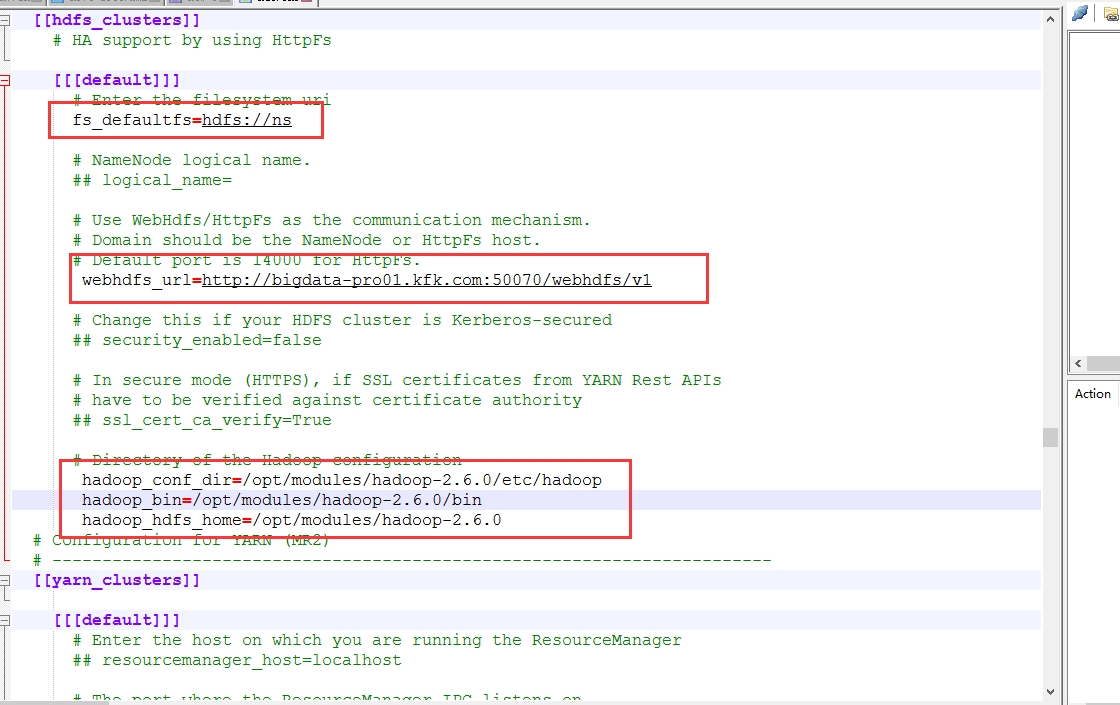

Go back to the hue.ini file.

Add the following to Hadoop's hdfs-site.xml to enable WebHDFS:

    <property>
      <name>dfs.webhdfs.enabled</name>
      <value>true</value>
    </property>
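
If you prefer to script this change rather than edit it by hand, here is a minimal sketch using Python's `xml.etree.ElementTree`. It adds the property, or updates its value if the name already exists.

Re-serializing with ElementTree drops XML comments and the `<?xml ...?>` declaration. Treat it as a sketch and keep a backup of the original file.

```python
import xml.etree.ElementTree as ET


def set_property(xml_text, name, value):
    """Add or update a <property> in a Hadoop *-site.xml document string."""
    root = ET.fromstring(xml_text)  # the <configuration> element
    for prop in root.findall("property"):
        if (prop.findtext("name") or "").strip() == name:
            value_el = prop.find("value")
            if value_el is None:  # tolerate a property with no <value>
                value_el = ET.SubElement(prop, "value")
            value_el.text = value
            break
    else:  # no existing property with this name: append a new one
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")
```

For example, `set_property(text, "dfs.webhdfs.enabled", "true")` returns the updated document as a string for you to write back.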

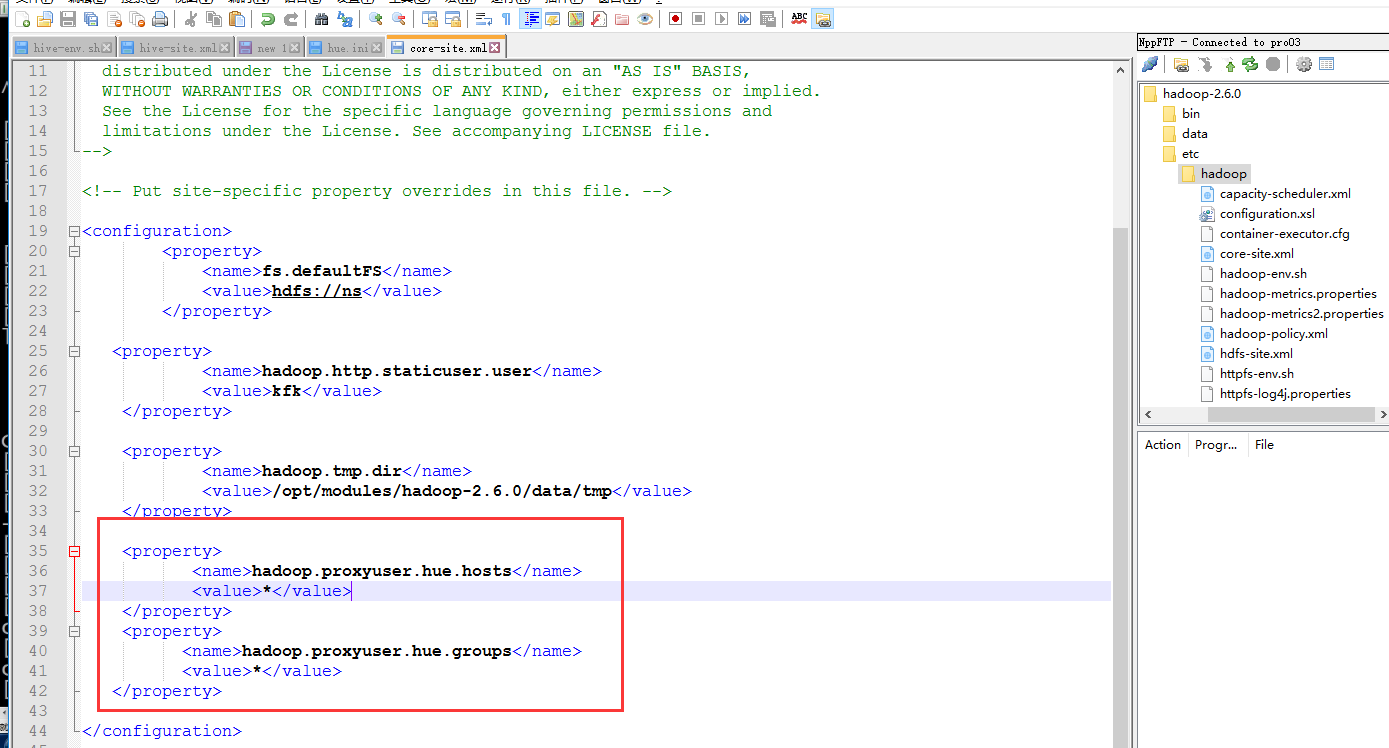

Add the following to Hadoop's core-site.xml so that the hue user is allowed to act as a proxy user:

    <property>
      <name>hadoop.proxyuser.hue.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.hue.groups</name>
      <value>*</value>
    </property>
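
The `hue` segment in these property names must match the user that runs Hue, and `*` allows any host and any group. Before distributing the file, you can check that both entries are present. This sketch parses core-site.xml and reports any expected property whose value differs or is missing.

```python
import xml.etree.ElementTree as ET

# The two properties added above; "*" means any host / any group.
EXPECTED = {
    "hadoop.proxyuser.hue.hosts": "*",
    "hadoop.proxyuser.hue.groups": "*",
}


def read_properties(xml_text):
    """Map each <name> to its <value> in a Hadoop configuration document."""
    root = ET.fromstring(xml_text)
    props = {}
    for p in root.findall("property"):
        name = (p.findtext("name") or "").strip()
        props[name] = (p.findtext("value") or "").strip()
    return props


def check_proxyuser(xml_text, expected=EXPECTED):
    """Return {name: actual_value_or_None} for each expected entry that differs."""
    props = read_properties(xml_text)
    return {k: props.get(k) for k, v in expected.items() if props.get(k) != v}
```

An empty result means core-site.xml contains both entries exactly as shown.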

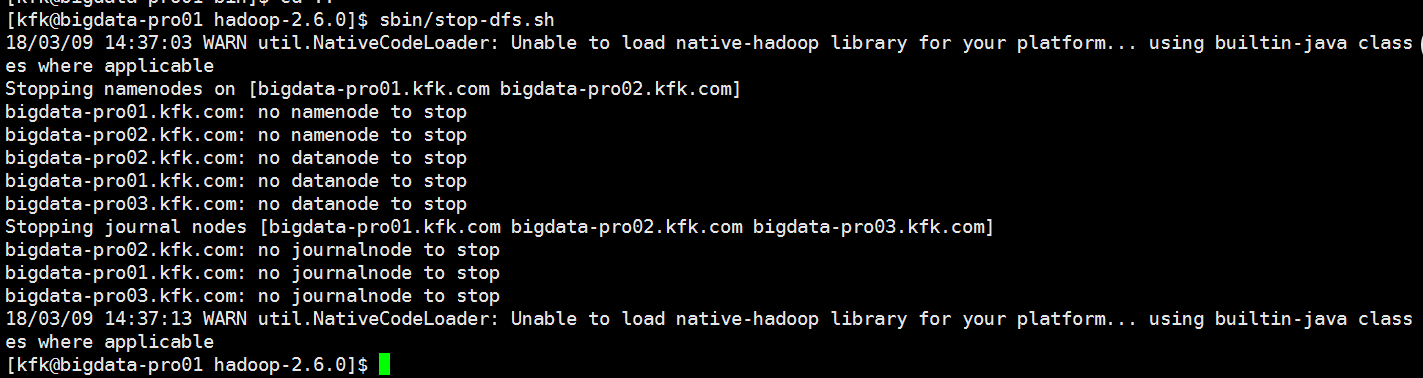

Once that is configured, distribute the configuration files to node 1 and node 2.
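
One way to do the distribution is one `scp` per target host, copying both files to the same directory. In this sketch, the configuration directory and the two host names are hypothetical placeholders; only bigdata-pro03.kfk.com appears in this walkthrough. By default it only prints the commands (dry run).

```python
import shlex
import subprocess

# Hypothetical values: replace with your own Hadoop conf dir and node names.
CONF_DIR = "/opt/modules/hadoop/etc/hadoop"
FILES = ["core-site.xml", "hdfs-site.xml"]
TARGETS = ["bigdata-pro01.kfk.com", "bigdata-pro02.kfk.com"]


def scp_commands(conf_dir, files, targets):
    """One scp command per host, copying all files into the same directory."""
    paths = [f"{conf_dir}/{name}" for name in files]
    return [["scp", *paths, f"{host}:{conf_dir}/"] for host in targets]


def distribute(dry_run=True):
    """Print each command; actually run it only when dry_run is False."""
    for cmd in scp_commands(CONF_DIR, FILES, TARGETS):
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```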

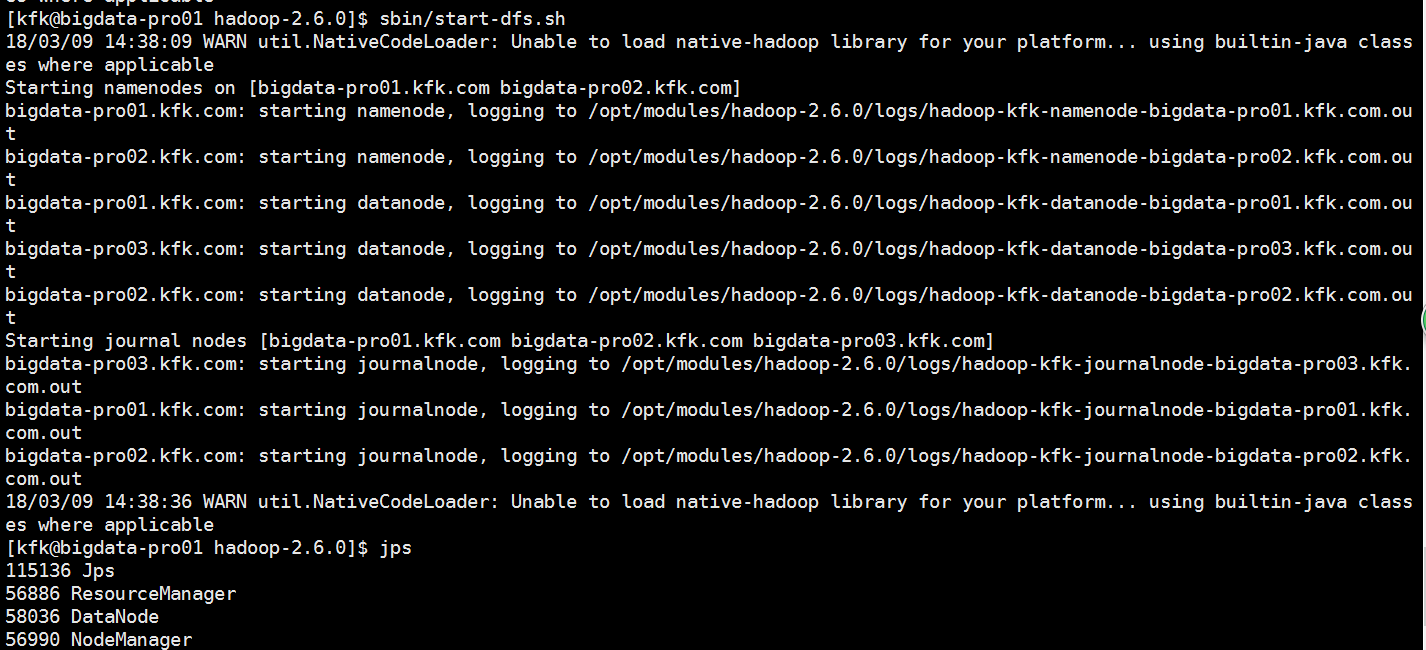

After distributing them, restart the services.

Start Hue as well.

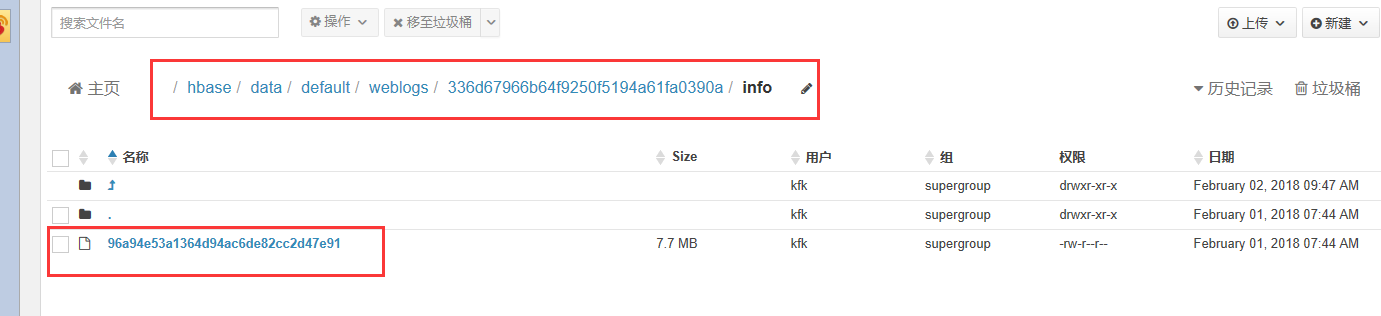

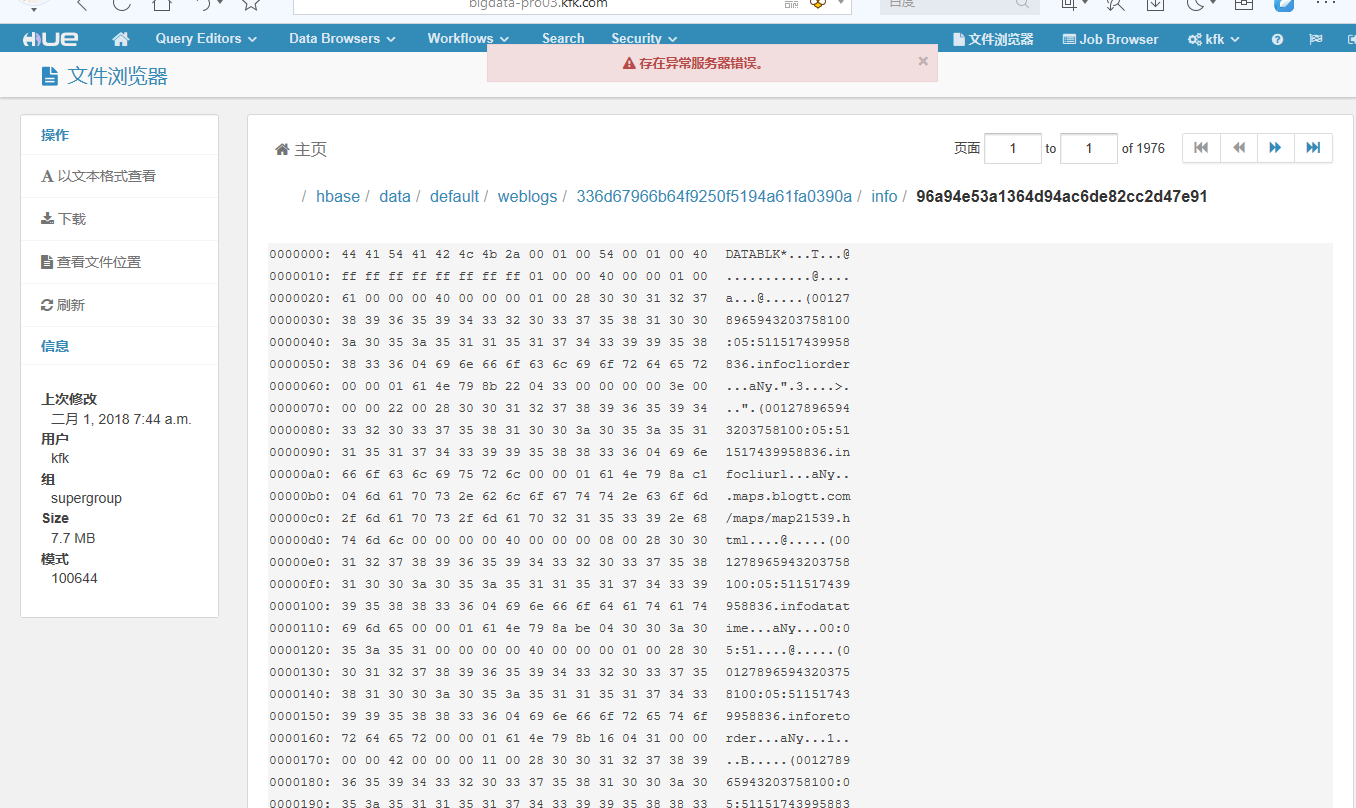

Open the web UI again.

My HDFS directories are now visible.

You can click into them and browse the data.
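
The file browser depends on the WebHDFS endpoint enabled above. To check that endpoint independently of Hue, you can issue the same kind of `LISTSTATUS` request yourself. This sketch uses the WebHDFS REST API with simple (`user.name`) authentication. The NameNode host is hypothetical, and port 50070 is the Hadoop 2.x default NameNode HTTP port.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def liststatus_url(namenode, port, path, user):
    """WebHDFS LISTSTATUS URL for `path`, acting as `user` (simple auth)."""
    query = urlencode({"op": "LISTSTATUS", "user.name": user})
    return f"http://{namenode}:{port}/webhdfs/v1{path}?{query}"


def parse_liststatus(body):
    """Return (type, name) pairs from a LISTSTATUS JSON response body."""
    statuses = json.loads(body)["FileStatuses"]["FileStatus"]
    return [(s["type"], s["pathSuffix"]) for s in statuses]


def list_dir(namenode, port, path, user):
    """Fetch and parse one directory listing over HTTP."""
    with urlopen(liststatus_url(namenode, port, path, user), timeout=10) as resp:
        return parse_liststatus(resp.read().decode("utf-8"))
```

For example, `list_dir("bigdata-pro01.kfk.com", 50070, "/", "hue")` uses a hypothetical host and should list the same top-level directories the Hue file browser shows.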

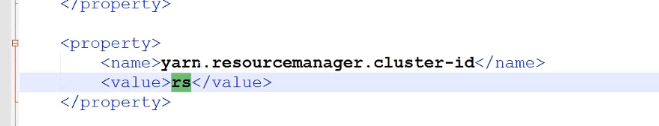

First, check yarn-site.xml.

Then configure hue.ini.

Continue configuring hue.ini.
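
Reading yarn-site.xml first is what tells you which host and ports to put into hue.ini. The sketch below shows that mapping under stated assumptions. It reads the ResourceManager settings and suggests values for Hue's `[[yarn_clusters]]` keys (`resourcemanager_host`, `resourcemanager_port`, `resourcemanager_api_url`, `proxy_api_url`, `history_server_api_url`).

Missing addresses fall back to the Hadoop 2.x default ports 8032, 8088 and 19888. The history server is assumed to run on the ResourceManager host unless you pass another one.

```python
import xml.etree.ElementTree as ET

HOST_VAR = "${yarn.resourcemanager.hostname}"


def site_properties(xml_text):
    """Map <name> to <value> for every <property> in a Hadoop *-site.xml string."""
    root = ET.fromstring(xml_text)
    return {
        (p.findtext("name") or "").strip(): (p.findtext("value") or "").strip()
        for p in root.findall("property")
    }


def yarn_cluster_settings(yarn_site_xml, history_host=None):
    """Suggest Hue [[yarn_clusters]] values derived from yarn-site.xml.

    Falls back to default ports 8032 (RM RPC), 8088 (RM web UI) and
    19888 (JobHistory web UI); "localhost" is an assumed fallback host.
    """
    props = site_properties(yarn_site_xml)
    host = props.get("yarn.resourcemanager.hostname", "localhost")
    rpc = props.get("yarn.resourcemanager.address", f"{host}:8032")
    web = props.get("yarn.resourcemanager.webapp.address", f"{host}:8088")
    # Expand the one variable Hadoop commonly uses inside these addresses.
    rpc, web = rpc.replace(HOST_VAR, host), web.replace(HOST_VAR, host)
    rpc_host, rpc_port = rpc.rsplit(":", 1)
    return {
        "resourcemanager_host": rpc_host,
        "resourcemanager_port": rpc_port,
        "resourcemanager_api_url": f"http://{web}",
        "proxy_api_url": f"http://{web}",
        "history_server_api_url": f"http://{history_host or host}:19888",
    }
```

Each key/value pair would go under `[hadoop]` → `[[yarn_clusters]]` → `[[[default]]]` in hue.ini; compare the output with what you set by hand.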

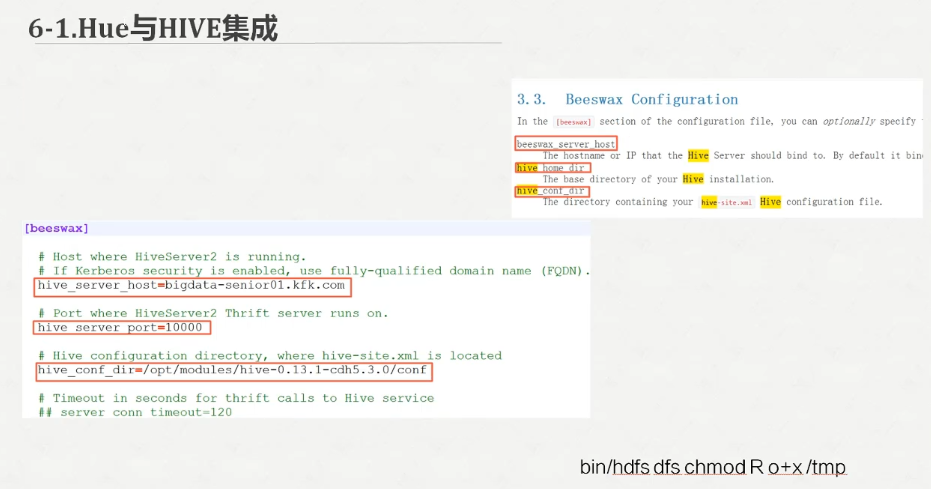

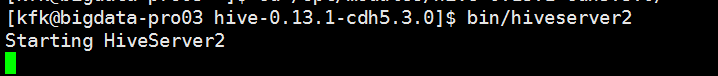

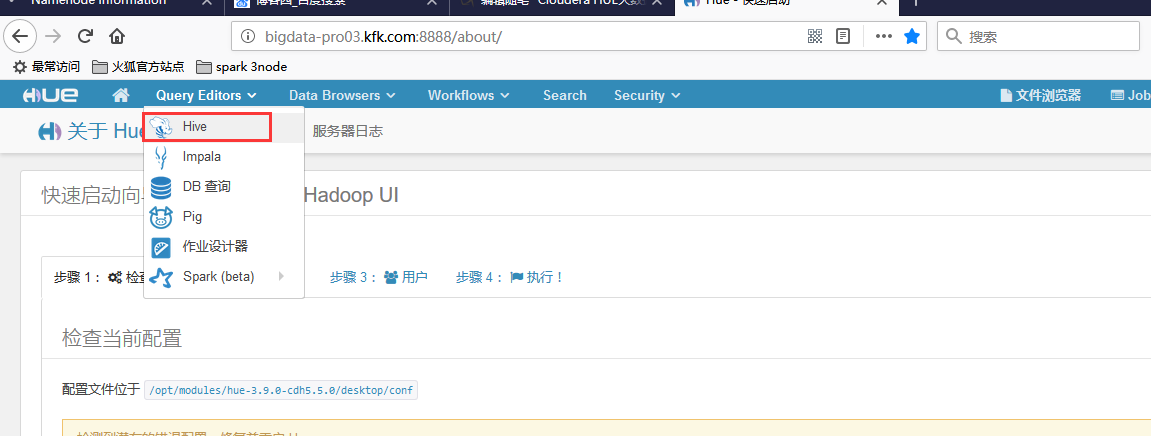

First start HiveServer2.

Then start Hue.
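
The order matters: Hue's Hive editor connects to HiveServer2, so HiveServer2 should be listening before Hue is started. A small wait loop makes that explicit in a startup script. This is a sketch assuming HiveServer2's default Thrift port, 10000.

```python
import socket
import time


def wait_for_port(host, port, timeout=60.0, interval=2.0):
    """Poll host:port until a TCP connect succeeds or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, call `wait_for_port("bigdata-pro03.kfk.com", 10000)` after launching HiveServer2, and start Hue only when it returns True.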

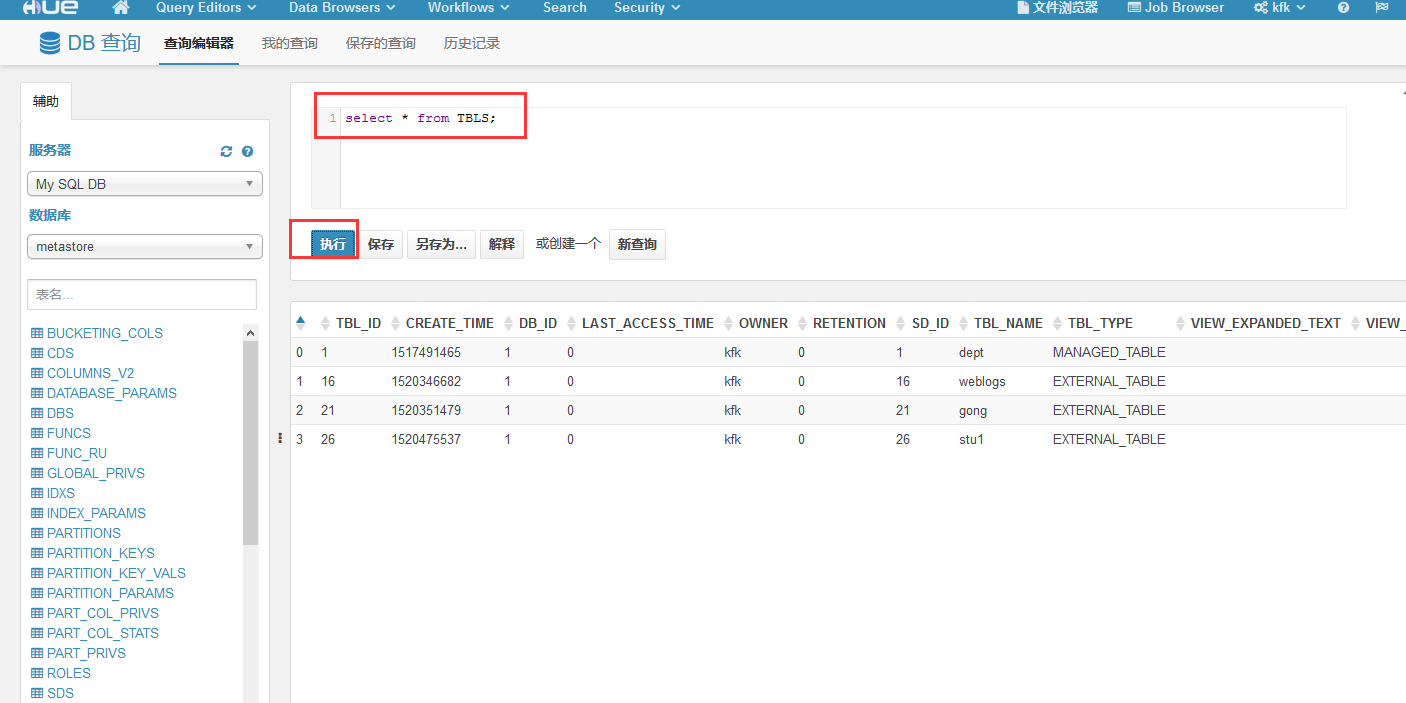

We can see the tables we created in Hive earlier.

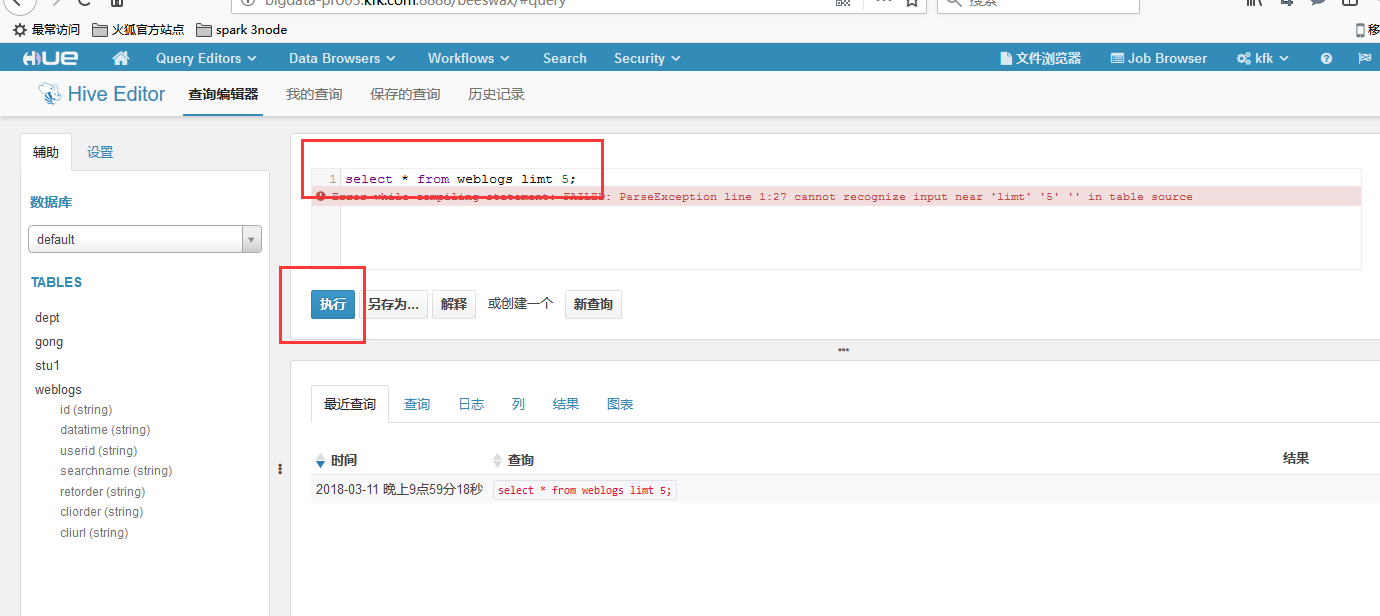

We try to run a query, and it fails.

The error message shows up in Xshell:

    [kfk@bigdata-pro03 hive-0.13.1-cdh5.3.0]$ bin/hiveserver2
    Starting HiveServer2
    OK
    OK
    OK
    OK
    OK
    OK
    OK
    OK
    OK
    OK
    OK
    OK
    NoViableAltException(287@[180:68: ( ( KW_AS )? alias= Identifier )?])
        at org.antlr.runtime.DFA.noViableAlt(DFA.java:158)
        at org.antlr.runtime.DFA.predict(DFA.java:116)
        at org.apache.hadoop.hive.ql.parse.HiveParser_FromClauseParser.tableSource(HiveParser_FromClauseParser.java:4777)
        at org.apache.hadoop.hive.ql.parse.HiveParser_FromClauseParser.fromSource(HiveParser_FromClauseParser.java:3919)
        at org.apache.hadoop.hive.ql.parse.HiveParser_FromClauseParser.joinSource(HiveParser_FromClauseParser.java:1798)
        at org.apache.hadoop.hive.ql.parse.HiveParser_FromClauseParser.fromClause(HiveParser_FromClauseParser.java:1456)
        at org.apache.hadoop.hive.ql.parse.HiveParser.fromClause(HiveParser.java:40272)
        at org.apache.hadoop.hive.ql.parse.HiveParser.singleSelectStatement(HiveParser.java:38160)
        at org.apache.hadoop.hive.ql.parse.HiveParser.selectStatement(HiveParser.java:37845)
        at org.apache.hadoop.hive.ql.parse.HiveParser.regularBody(HiveParser.java:37782)
        at org.apache.hadoop.hive.ql.parse.HiveParser.queryStatementExpressionBody(HiveParser.java:36989)
        at org.apache.hadoop.hive.ql.parse.HiveParser.queryStatementExpression(HiveParser.java:36865)
        at org.apache.hadoop.hive.ql.parse.HiveParser.execStatement(HiveParser.java:1332)
        at org.apache.hadoop.hive.ql.parse.HiveParser.statement(HiveParser.java:1030)
        at org.apache.hadoop.hive.ql.parse.ParseDriver.parse(ParseDriver.java:199)
        at org.apache.hadoop.hive.ql.parse.ParseDriver.parse(ParseDriver.java:166)
        at org.apache.hadoop.hive.ql.Driver.compile(Driver.java:417)
        at org.apache.hadoop.hive.ql.Driver.compile(Driver.java:335)
        at org.apache.hadoop.hive.ql.Driver.compileInternal(Driver.java:1026)
        at org.apache.hadoop.hive.ql.Driver.compileAndRespond(Driver.java:1019)
        at org.apache.hive.service.cli.operation.SQLOperation.prepare(SQLOperation.java:100)
        at org.apache.hive.service.cli.operation.SQLOperation.run(SQLOperation.java:173)
        at org.apache.hive.service.cli.session.HiveSessionImpl.runOperationWithLogCapture(HiveSessionImpl.java:715)
        at org.apache.hive.service.cli.session.HiveSessionImpl.executeStatementInternal(HiveSessionImpl.java:370)
        at org.apache.hive.service.cli.session.HiveSessionImpl.executeStatementAsync(HiveSessionImpl.java:357)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:606)
        at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:79)
        at org.apache.hive.service.cli.session.HiveSessionProxy.access$000(HiveSessionProxy.java:37)
        at org.apache.hive.service.cli.session.HiveSessionProxy$1.run(HiveSessionProxy.java:64)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:415)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1628)
        at org.apache.hadoop.hive.shims.HadoopShimsSecure.doAs(HadoopShimsSecure.java:502)
        at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:60)
        at com.sun.proxy.$Proxy16.executeStatementAsync(Unknown Source)
        at org.apache.hive.service.cli.CLIService.executeStatementAsync(CLIService.java:237)
        at org.apache.hive.service.cli.thrift.ThriftCLIService.ExecuteStatement(ThriftCLIService.java:392)
        at org.apache.hive.service.cli.thrift.TCLIService$Processor$ExecuteStatement.getResult(TCLIService.java:1373)
        at org.apache.hive.service.cli.thrift.TCLIService$Processor$ExecuteStatement.getResult(TCLIService.java:1358)
        at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39)
        at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39)
        at org.apache.hive.service.auth.TSetIpAddressProcessor.process(TSetIpAddressProcessor.java:55)
        at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:244)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)
        at java.lang.Thread.run(Thread.java:745)
    FAILED: ParseException line 1:27 cannot recognize input near 'limt' '5' '<EOF>' in table source
    OK
    OK

The second attempt prints the identical NoViableAltException stack trace and the same FAILED: ParseException line again, followed by OK.

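The decisive line is the one starting with FAILED: ParseException. The query misspells `LIMIT` as `limt`, so Hive's parser cannot read it (`cannot recognize input near 'limt' '5'`). A ParseException is raised while the statement is being parsed, before any table or service is touched, so HBase not running does not explain this error. Correct the keyword and run the query again.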
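
With stack traces this long, it helps to pull out only the summary lines. This sketch handles only the `FAILED: <Type> line <row>:<col> <message>` shape seen in this log. Other Hive failure messages are formatted differently and will not match. It also extracts the quoted tokens Hive reports near the error, which here point straight at the typo.

```python
import re

# Hive prints one summary line per failed statement, e.g.
# FAILED: ParseException line 1:27 cannot recognize input near ...
FAILED_RE = re.compile(r"FAILED: (\w+) line (\d+):(\d+) ([^\n]*)")
QUOTED_RE = re.compile(r"'([^']*)'")


def failures(log_text):
    """Return (error_type, line, column, message) for each FAILED line."""
    return [
        (kind, int(line), int(col), msg.strip())
        for kind, line, col, msg in FAILED_RE.findall(log_text)
    ]


def near_tokens(message):
    """Extract the quoted tokens Hive reports near a parse error."""
    return QUOTED_RE.findall(message)
```

Run against the log above, the tokens come out as `limt`, `5` and `<EOF>`, which makes the misspelled `LIMIT` easy to spot.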

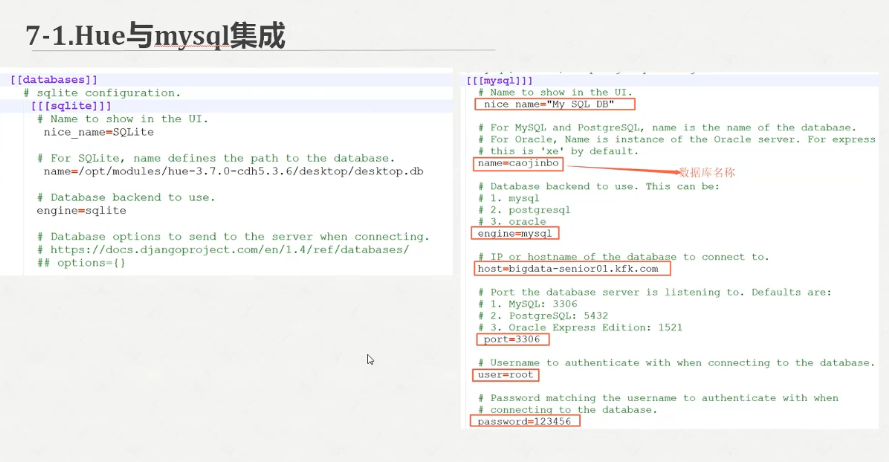

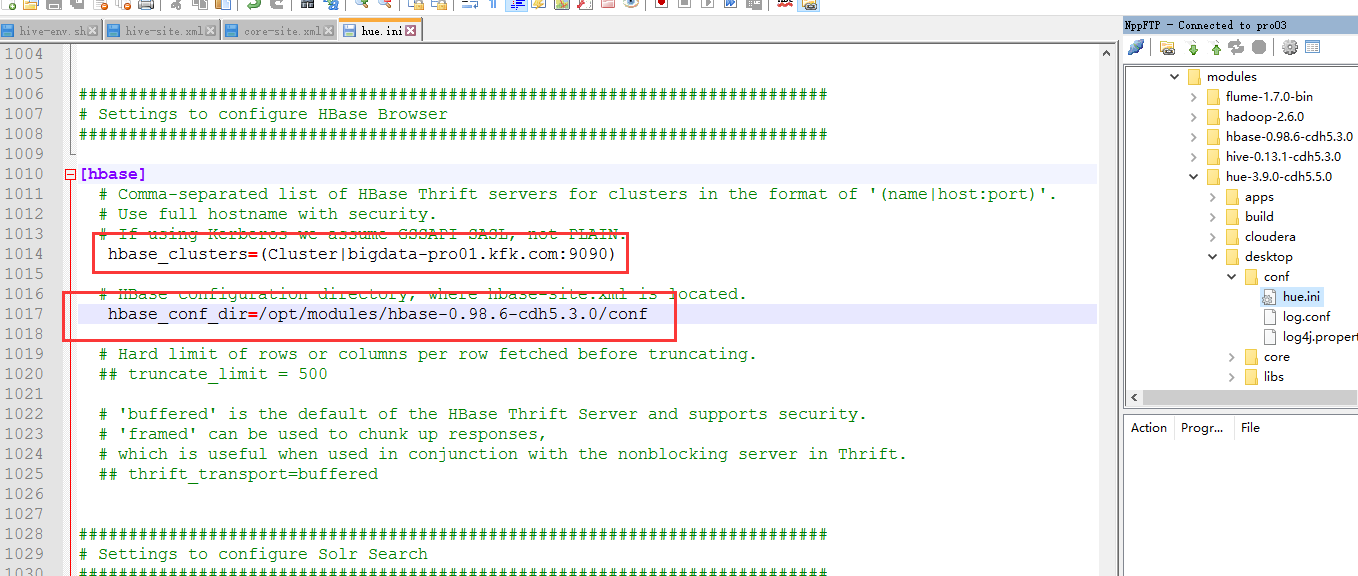

Configure hue.ini.

Restart Hue.

Reopen the web UI.

Run the query again.

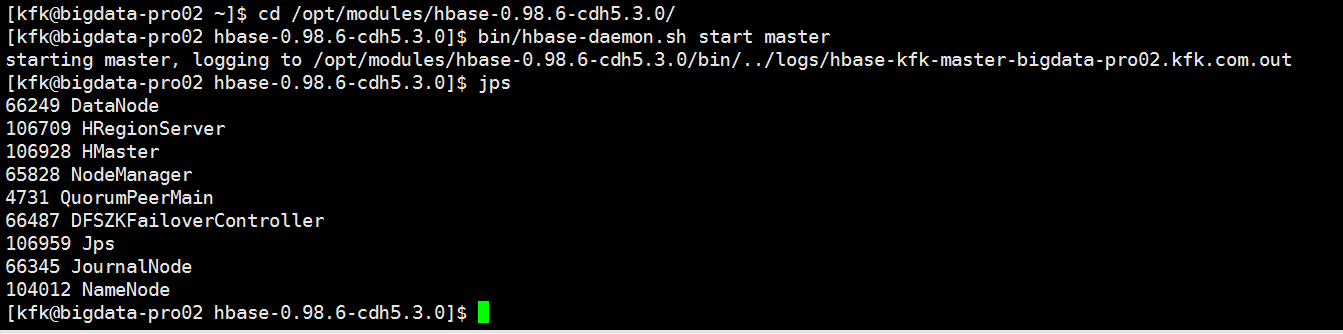

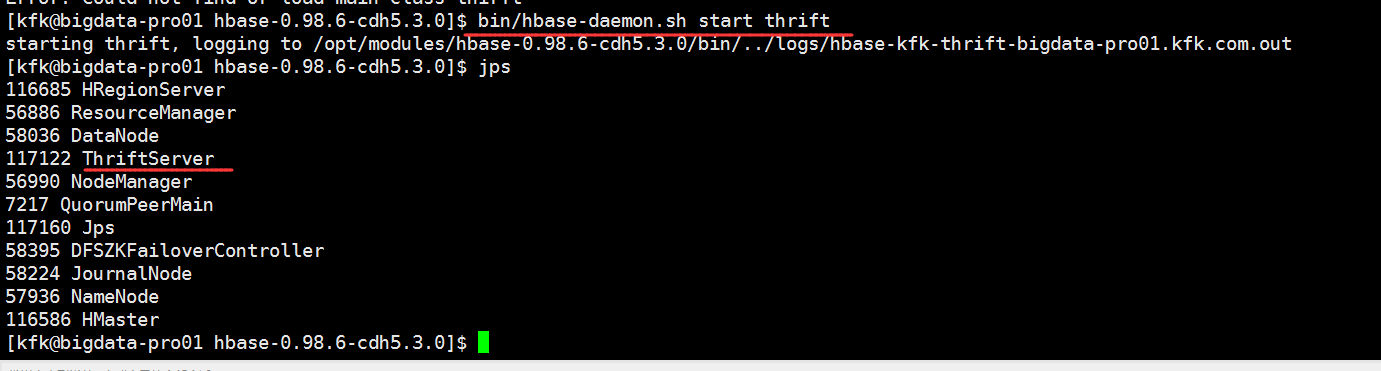

Start HBase.
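
Hue's HBase browser talks to the HBase Thrift server (default port 9090), which typically has to be started separately from HBase itself, for example with `bin/hbase-daemon.sh start thrift`. Hue learns where that server is from the `hbase_clusters` key in the `[hbase]` section of hue.ini. The key takes a comma-separated list of `(name|host:port)` entries.

This sketch parses and formats that value so you can check an entry before restarting Hue. The host in the usage example is hypothetical.

```python
import re

# One "(name|host:port)" entry, as used by hue.ini's hbase_clusters key.
ENTRY_RE = re.compile(r"\(([^|()]+)\|([^:()]+):(\d+)\)")


def parse_hbase_clusters(value):
    """Parse '(name|host:port),(name|host:port)' into (name, host, port) tuples."""
    return [(n.strip(), h.strip(), int(p)) for n, h, p in ENTRY_RE.findall(value)]


def format_hbase_clusters(clusters):
    """Inverse of parse_hbase_clusters for well-formed tuples."""
    return ",".join(f"({n}|{h}:{p})" for n, h, p in clusters)
```

For example, `parse_hbase_clusters("(Cluster|bigdata-pro01.kfk.com:9090)")` yields one cluster named `Cluster` whose Thrift server is on port 9090.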

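Because of compatibility problems with Hue 3.9, we switch to Hue 3.7 below; its configuration is the same as for 3.9.

However, in my environment Hue 3.7 could not open the web UI. I suspect my Hive and HBase versions are too old, so I had to go back to 3.9; sorry about that. I recommend using HBase and Hive 1.0 or later.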

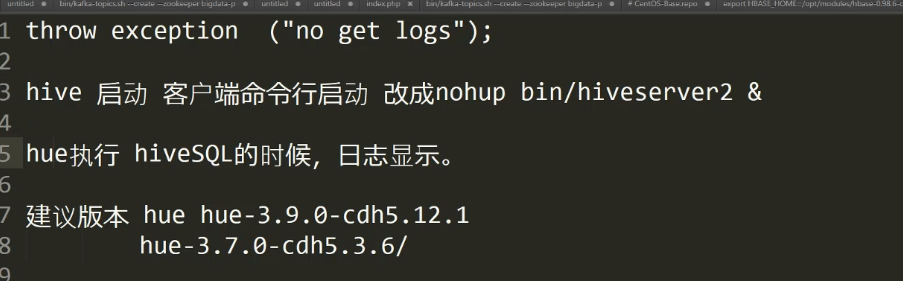

If you run into this problem, where the Hive command line shows no log output, you can try the following method: