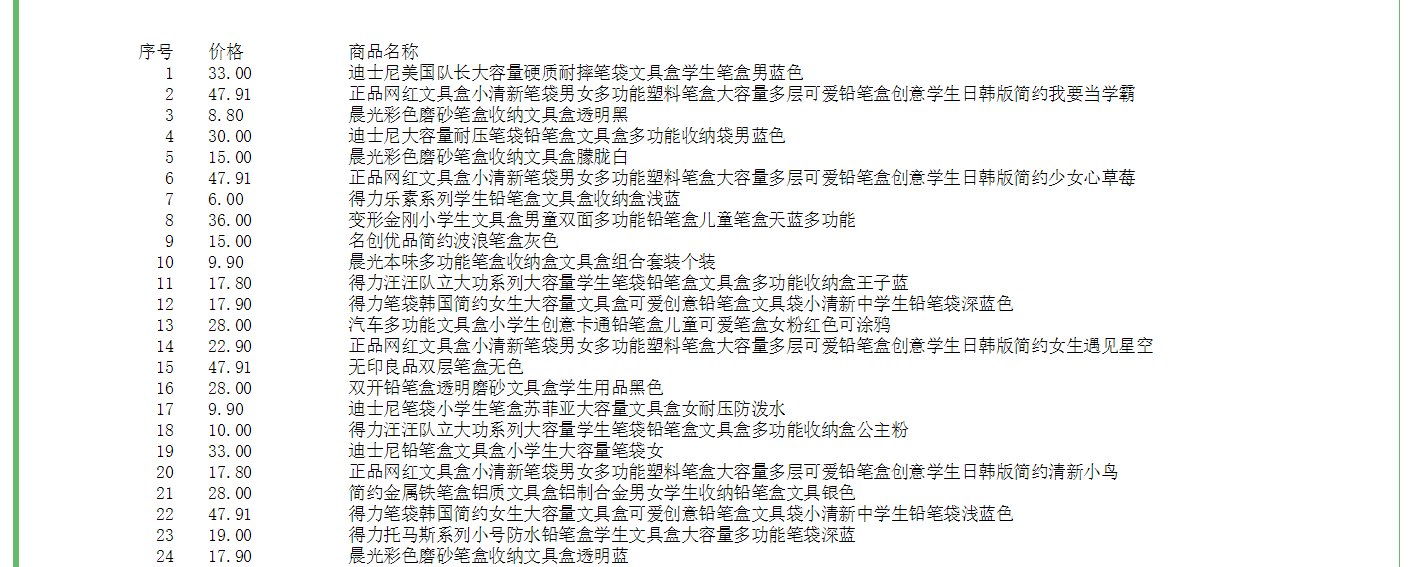

1 import requests 2 import re 3 4 def getHTMLText(url): 5 try: 6 r = requests.get(url, timeout=30) 7 r.raise_for_status() 8 r.encoding = r.apparent_encoding 9 return r.text 10 except: 11 return "" 12 13 def parsePage(ilt, html): 14 try: 15 plt = re.findall(r'data-done="1"><em>¥</em><i>d+.d+</i></strong>',html) 16 tlt = re.findall(r'<em>.+<font class="skcolor_ljg">笔盒</font>.+</em>',html) 17 for i in range(len(plt)): 18 match=re.search(r'd+.d+',plt[i])#这个函数返回的对象是match对象,所以用group属性把价格取出 19 price=match.group(0) 20 list_match=re.findall(r'[u4e00-u9fa5]',tlt[i])#这个字符串的中文提取我想了好久都没想到用什么正则表达式一下子提取出来 21 title='' 22 for m in range(len(list_match)):#后来放弃了用正则表达式一下子提取出来的想法,要是有大佬想到了指点一下呗 23 title=title+list_match[m] 24 ilt.append([price , title]) 25 except: 26 print("") 27 28 def printGoodsList(ilt): 29 tplt = "{:4} {:8} {:16}" 30 print(tplt.format("序号", "价格", "商品名称")) 31 count = 0 32 for g in ilt: 33 count = count + 1 34 print(tplt.format(count, g[0], g[1])) 35 36 def main(): 37 goods = '笔盒' 38 depth=3 39 start_url='https://search.jd.com/Search?keyword='+goods+'&enc=utf-8' 40 infoList = [] 41 for i in range(1,depth): 42 try: 43 url = start_url + '&page=' + str(2*i-1) 44 html = getHTMLText(url) 45 parsePage(infoList, html) 46 except: 47 continue 48 printGoodsList(infoList) 49 main()

1,下面附上参考源码,来源慕课;原来的爬虫是爬淘宝首页商品,不过现在淘宝首页要登录验证,不能直接爬取;但是具有参考价值;

import requests import re def getHTMLText(url): try: r=requests.get(url,timeout=30) r.raise_for_status() r.encoding=r.apparent_encoding return r.text except: return" " def parsePage(ilt,html): try: plt=re.findall(r'"view_price":"[d.]*" ',html) tlt=re.findall(r'"raw_title":".*?"',html) for i in range(len(plt)): price=eval(plt[i].split(':')[1]) title=eval(tlt[i].split(':')[1]) ilt.append([price,title]) except: print("") def printGoodsList(ilt): tplt="{:4} {:8} {:16}" print(tplt.format("序号","价格","商品名称")) count=0 for g in ilt: count=count+1 print(tplt.format(count,g[0],g[1])) def main(): goods='书包' depth=2#搜索结果设置为两页 start_url='https://s.taobao.com/search?q='+goods infoList=[] for i in range(depth): try: url=start_url+'&s='+str(44*i) html=getHTMLText(url)#把网站文本text爬下来 parsePage(infoList,html)#然后把文本里需要的信息爬下来 except: continue printGoodsList(infoList)#然后把信息整理一下打印出来 main()