问题描述

HBase在运行一段时间后,会出现以下2种情况:

1、HMaster节点自动挂掉;

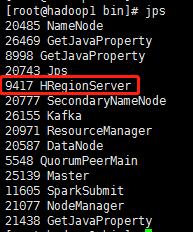

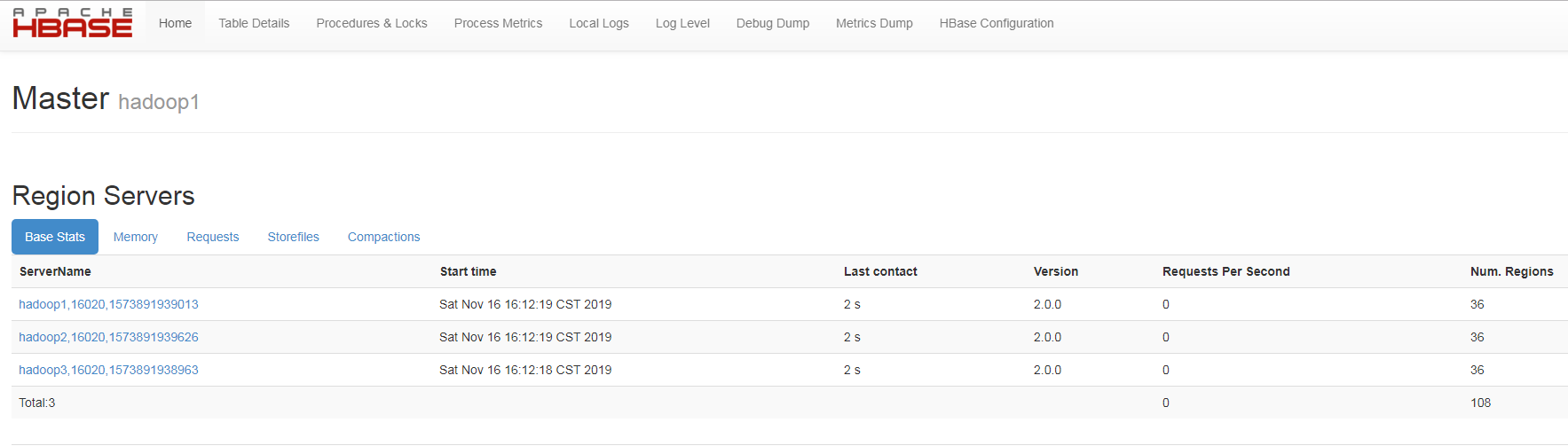

通过jps命令,发现HMaster进程没了,只剩下HRegionServer,此时应用还能正常往HBase写入数据

此时以下界面无法打开:

2、HRegionServer节点自动挂掉

通过jps命令,发现HRegionServer进程没了,此时应用往HBase写入数据时不报错

问题排查

问题1:查看HMaster节点的日志,出现一些异常信息,且跟zk有关。

以下标红的字说明:休眠了64293ms而不是3000ms,这可能是因为垃圾收集暂停时间太长

2019-11-16 15:44:41,142 WARN [ResponseProcessor for block BP-2026369791-127.0.0.1-1560926807945:blk_1085889831_12149164] hdfs.DFSClient: Slow ReadProcessor read fields took 30161ms (threshold=30000ms); ack: seqno: 130 reply: SUCCESS reply: SUCCESS downstreamAckTimeNanos: 18446744073486914000 flag: 0 flag: 0, targets: [DatanodeInfoWithStorage[172.16.10.91:50010,DS-9402e3dd-982e-4f18-be39-3a7dfc0d2672,DISK], DatanodeInfoWithStorage[172.16.10.92:50010,DS-b2143ca2-8adf-450c-b662-17cc8210c565,DISK]] 2019-11-16 15:45:06,862 INFO [LruBlockCacheStatsExecutor] hfile.LruBlockCache: totalSize=884.18 KB, freeSize=1.15 GB, max=1.15 GB, blockCount=0, accesses=0, hits=0, hitRatio=0, cachingAccesses=0, cachingHits=0, cachingHitsRatio=0,evictions=30, evicted=0, evictedPerRun=0.0 2019-11-16 15:49:57,676 INFO [master/hadoop1:16000.splitLogManager..Chore.1] hbase.ScheduledChore: Chore: SplitLogManager Timeout Monitor missed its start time 2019-11-16 15:51:07,614 WARN [master/hadoop1:16000-SendThread(172.16.10.91:2181)] zookeeper.ClientCnxn: Client session timed out, have not heard from server in 73768ms for sessionid 0x1023619d3c30006 2019-11-16 15:51:07,875 INFO [LruBlockCacheStatsExecutor] hfile.LruBlockCache: totalSize=884.18 KB, freeSize=1.15 GB, max=1.15 GB, blockCount=0, accesses=0, hits=0, hitRatio=0, cachingAccesses=0, cachingHits=0, cachingHitsRatio=0,evictions=60, evicted=0, evictedPerRun=0.0 2019-11-16 15:51:07,699 INFO [master/hadoop1:16000.splitLogManager..Chore.1] hbase.ScheduledChore: Chore: SplitLogManager Timeout Monitor missed its start time 2019-11-16 15:51:07,615 WARN [master/hadoop1:16000] util.Sleeper: We slept 64293ms instead of 3000ms, this is likely due to a long garbage collecting pause and it's usually bad, see http://hbase.apache.org/book.html#trouble.rs.runtime.zkexpired 2019-11-16 15:51:07,614 WARN [main-SendThread(172.16.10.91:2181)] zookeeper.ClientCnxn: Client session timed out, have not heard from server in 64945ms for sessionid 0x1023619d3c30002 2019-11-16 15:51:07,924 INFO [master/hadoop1:16000-SendThread(172.16.10.91:2181)] zookeeper.ClientCnxn: Client session timed out, have not heard from server in 73768ms for sessionid 0x1023619d3c30006, closing socket connection and attempting reconnect 2019-11-16 15:51:07,931 INFO [main-SendThread(172.16.10.91:2181)] zookeeper.ClientCnxn: Client session timed out, have not heard from server in 64945ms for sessionid 0x1023619d3c30002, closing socket connection and attempting reconnect 2019-11-16 15:51:07,961 WARN [ResponseProcessor for block BP-2026369791-127.0.0.1-1560926807945:blk_1085889831_12149164] hdfs.DFSClient: DFSOutputStream ResponseProcessor exception for block BP-2026369791-127.0.0.1-1560926807945:blk_1085889831_12149164 java.io.EOFException: Premature EOF: no length prefix available at org.apache.hadoop.hdfs.protocolPB.PBHelper.vintPrefixed(PBHelper.java:2294) at org.apache.hadoop.hdfs.protocol.datatransfer.PipelineAck.readFields(PipelineAck.java:244) at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer$ResponseProcessor.run(DFSOutputStream.java:847) 2019-11-16 15:51:08,054 WARN [DataStreamer for file /hbase/MasterProcWALs/pv2-00000000000000000475.log block BP-2026369791-127.0.0.1-1560926807945:blk_1085889831_12149164] hdfs.DFSClient: Error Recovery for block BP-2026369791-127.0.0.1-1560926807945:blk_1085889831_12149164 in pipeline DatanodeInfoWithStorage[172.16.10.91:50010,DS-9402e3dd-982e-4f18-be39-3a7dfc0d2672,DISK], DatanodeInfoWithStorage[172.16.10.92:50010,DS-b2143ca2-8adf-450c-b662-17cc8210c565,DISK]: datanode 0(DatanodeInfoWithStorage[172.16.10.91:50010,DS-9402e3dd-982e-4f18-be39-3a7dfc0d2672,DISK]) is bad. 2019-11-16 15:51:08,738 INFO [master/hadoop1:16000-SendThread(172.16.10.92:2181)] zookeeper.ClientCnxn: Opening socket connection to server 172.16.10.92/172.16.10.92:2181. Will not attempt to authenticate using SASL (unknown error) 2019-11-16 15:51:08,738 INFO [master/hadoop1:16000-SendThread(172.16.10.92:2181)] zookeeper.ClientCnxn: Socket connection established to 172.16.10.92/172.16.10.92:2181, initiating session 2019-11-16 15:51:08,784 INFO [main-SendThread(172.16.10.92:2181)] zookeeper.ClientCnxn: Opening socket connection to server 172.16.10.92/172.16.10.92:2181. Will not attempt to authenticate using SASL (unknown error) 2019-11-16 15:51:08,943 WARN [master/hadoop1:16000-SendThread(172.16.10.92:2181)] zookeeper.ClientCnxn: Unable to reconnect to ZooKeeper service, session 0x1023619d3c30006 has expired 2019-11-16 15:51:08,957 INFO [master/hadoop1:16000-SendThread(172.16.10.92:2181)] zookeeper.ClientCnxn: Unable to reconnect to ZooKeeper service, session 0x1023619d3c30006 has expired, closing socket connection 2019-11-16 15:51:08,957 INFO [main-SendThread(172.16.10.92:2181)] zookeeper.ClientCnxn: Socket connection established to 172.16.10.92/172.16.10.92:2181, initiating session 2019-11-16 15:51:08,983 INFO [master/hadoop1:16000-EventThread] zookeeper.ClientCnxn: EventThread shut down for session: 0x1023619d3c30006 2019-11-16 15:51:10,411 INFO [master/hadoop1:16000.splitLogManager..Chore.1] hbase.ScheduledChore: Chore: SplitLogManager Timeout Monitor missed its start time 2019-11-16 15:51:10,973 WARN [main-SendThread(172.16.10.92:2181)] zookeeper.ClientCnxn: Unable to reconnect to ZooKeeper service, session 0x1023619d3c30002 has expired 2019-11-16 15:51:10,973 INFO [main-SendThread(172.16.10.92:2181)] zookeeper.ClientCnxn: Unable to reconnect to ZooKeeper service, session 0x1023619d3c30002 has expired, closing socket connection 2019-11-16 15:51:11,073 WARN [master/hadoop1:16000.Chore.1] zookeeper.ZKUtil: master:16000-0x1023619d3c30002, quorum=172.16.10.91:2181,172.16.10.92:2181,172.16.10.93:2181, baseZNode=/hbase Unable to list children of znode /hbase/replication/peers org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired for /hbase/replication/peers at org.apache.zookeeper.KeeperException.create(KeeperException.java:127) at org.apache.zookeeper.KeeperException.create(KeeperException.java:51) at org.apache.zookeeper.ZooKeeper.getChildren(ZooKeeper.java:1532) at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.getChildren(RecoverableZooKeeper.java:278) at org.apache.hadoop.hbase.zookeeper.ZKUtil.listChildrenAndWatchForNewChildren(ZKUtil.java:443) at org.apache.hadoop.hbase.zookeeper.ZKUtil.listChildrenAndWatchThem(ZKUtil.java:471) at org.apache.hadoop.hbase.replication.ReplicationPeersZKImpl.getAllPeerIds(ReplicationPeersZKImpl.java:391) at org.apache.hadoop.hbase.master.cleaner.ReplicationZKNodeCleaner.getUnDeletedQueues(ReplicationZKNodeCleaner.java:78) at org.apache.hadoop.hbase.master.cleaner.ReplicationZKNodeCleanerChore.chore(ReplicationZKNodeCleanerChore.java:48) at org.apache.hadoop.hbase.ScheduledChore.run(ScheduledChore.java:186) at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) at org.apache.hadoop.hbase.JitterScheduledThreadPoolExecutorImpl$JitteredRunnableScheduledFuture.run(JitterScheduledThreadPoolExecutorImpl.java:111) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) at java.lang.Thread.run(Thread.java:748) 2019-11-16 15:51:11,164 ERROR [master/hadoop1:16000.Chore.1] zookeeper.ZKWatcher: master:16000-0x1023619d3c30002, quorum=172.16.10.91:2181,172.16.10.92:2181,172.16.10.93:2181, baseZNode=/hbase Received unexpected KeeperException, re-throwing exception org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired for /hbase/replication/peers at org.apache.zookeeper.KeeperException.create(KeeperException.java:127) at org.apache.zookeeper.KeeperException.create(KeeperException.java:51) at org.apache.zookeeper.ZooKeeper.getChildren(ZooKeeper.java:1532) at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.getChildren(RecoverableZooKeeper.java:278) at org.apache.hadoop.hbase.zookeeper.ZKUtil.listChildrenAndWatchForNewChildren(ZKUtil.java:443) at org.apache.hadoop.hbase.zookeeper.ZKUtil.listChildrenAndWatchThem(ZKUtil.java:471) at org.apache.hadoop.hbase.replication.ReplicationPeersZKImpl.getAllPeerIds(ReplicationPeersZKImpl.java:391) at org.apache.hadoop.hbase.master.cleaner.ReplicationZKNodeCleaner.getUnDeletedQueues(ReplicationZKNodeCleaner.java:78) at org.apache.hadoop.hbase.master.cleaner.ReplicationZKNodeCleanerChore.chore(ReplicationZKNodeCleanerChore.java:48) at org.apache.hadoop.hbase.ScheduledChore.run(ScheduledChore.java:186) at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) at org.apache.hadoop.hbase.JitterScheduledThreadPoolExecutorImpl$JitteredRunnableScheduledFuture.run(JitterScheduledThreadPoolExecutorImpl.java:111) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) at java.lang.Thread.run(Thread.java:748) 2019-11-16 15:51:11,644 ERROR [main-EventThread] master.HMaster: Master server abort: loaded coprocessors are: [] 2019-11-16 15:51:11,644 ERROR [master/hadoop1:16000.Chore.1] master.HMaster: Master server abort: loaded coprocessors are: [] 2019-11-16 15:51:11,644 ERROR [main-EventThread] master.HMaster: ***** ABORTING master hadoop1,16000,1573889992279: master:16000-0x1023619d3c30002, quorum=172.16.10.91:2181,172.16.10.92:2181,172.16.10.93:2181, baseZNode=/hbase master:16000-0x1023619d3c30002 received expired from ZooKeeper, aborting ***** org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired at org.apache.hadoop.hbase.zookeeper.ZKWatcher.connectionEvent(ZKWatcher.java:520) at org.apache.hadoop.hbase.zookeeper.ZKWatcher.process(ZKWatcher.java:452) at org.apache.zookeeper.ClientCnxn$EventThread.processEvent(ClientCnxn.java:530) at org.apache.zookeeper.ClientCnxn$EventThread.run(ClientCnxn.java:505) 2019-11-16 15:51:11,644 ERROR [master/hadoop1:16000.Chore.1] master.HMaster: ***** ABORTING master hadoop1,16000,1573889992279: Cannot get the list of peers ***** org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired for /hbase/replication/peers at org.apache.zookeeper.KeeperException.create(KeeperException.java:127) at org.apache.zookeeper.KeeperException.create(KeeperException.java:51) at org.apache.zookeeper.ZooKeeper.getChildren(ZooKeeper.java:1532) at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.getChildren(RecoverableZooKeeper.java:278) at org.apache.hadoop.hbase.zookeeper.ZKUtil.listChildrenAndWatchForNewChildren(ZKUtil.java:443) at org.apache.hadoop.hbase.zookeeper.ZKUtil.listChildrenAndWatchThem(ZKUtil.java:471) at org.apache.hadoop.hbase.replication.ReplicationPeersZKImpl.getAllPeerIds(ReplicationPeersZKImpl.java:391) at org.apache.hadoop.hbase.master.cleaner.ReplicationZKNodeCleaner.getUnDeletedQueues(ReplicationZKNodeCleaner.java:78) at org.apache.hadoop.hbase.master.cleaner.ReplicationZKNodeCleanerChore.chore(ReplicationZKNodeCleanerChore.java:48) at org.apache.hadoop.hbase.ScheduledChore.run(ScheduledChore.java:186) at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) at org.apache.hadoop.hbase.JitterScheduledThreadPoolExecutorImpl$JitteredRunnableScheduledFuture.run(JitterScheduledThreadPoolExecutorImpl.java:111) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) at java.lang.Thread.run(Thread.java:748) 2019-11-16 15:51:11,675 INFO [master/hadoop1:16000.Chore.1] regionserver.HRegionServer: ***** STOPPING region server 'hadoop1,16000,1573889992279' ***** 2019-11-16 15:51:11,675 INFO [master/hadoop1:16000.Chore.1] regionserver.HRegionServer: STOPPED: Stopped by master/hadoop1:16000.Chore.1 2019-11-16 15:51:11,675 INFO [main-EventThread] regionserver.HRegionServer: ***** STOPPING region server 'hadoop1,16000,1573889992279' ***** 2019-11-16 15:51:11,675 INFO [main-EventThread] regionserver.HRegionServer: STOPPED: Stopped by main-EventThread 2019-11-16 15:51:11,751 WARN [master/hadoop1:16000.Chore.1] zookeeper.ZKUtil: master:16000-0x1023619d3c30002, quorum=172.16.10.91:2181,172.16.10.92:2181,172.16.10.93:2181, baseZNode=/hbase Unable to get data of znode /hbase/master org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired for /hbase/master at org.apache.zookeeper.KeeperException.create(KeeperException.java:127) at org.apache.zookeeper.KeeperException.create(KeeperException.java:51) at org.apache.zookeeper.ZooKeeper.getData(ZooKeeper.java:1212) at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.getData(RecoverableZooKeeper.java:340) at org.apache.hadoop.hbase.zookeeper.ZKUtil.getData(ZKUtil.java:610) at org.apache.hadoop.hbase.zookeeper.MasterAddressTracker.getMasterAddress(MasterAddressTracker.java:150) at org.apache.hadoop.hbase.master.ActiveMasterManager.stop(ActiveMasterManager.java:269) at org.apache.hadoop.hbase.master.HMaster.stop(HMaster.java:2724) at org.apache.hadoop.hbase.master.HMaster.stopMaster(HMaster.java:2716) at org.apache.hadoop.hbase.master.HMaster.abort(HMaster.java:2631) at org.apache.hadoop.hbase.replication.ReplicationPeersZKImpl.getAllPeerIds(ReplicationPeersZKImpl.java:393) at org.apache.hadoop.hbase.master.cleaner.ReplicationZKNodeCleaner.getUnDeletedQueues(ReplicationZKNodeCleaner.java:78) at org.apache.hadoop.hbase.master.cleaner.ReplicationZKNodeCleanerChore.chore(ReplicationZKNodeCleanerChore.java:48) at org.apache.hadoop.hbase.ScheduledChore.run(ScheduledChore.java:186) at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) at org.apache.hadoop.hbase.JitterScheduledThreadPoolExecutorImpl$JitteredRunnableScheduledFuture.run(JitterScheduledThreadPoolExecutorImpl.java:111) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) at java.lang.Thread.run(Thread.java:748) 2019-11-16 15:51:11,751 WARN [main-EventThread] zookeeper.ZKUtil: master:16000-0x1023619d3c30002, quorum=172.16.10.91:2181,172.16.10.92:2181,172.16.10.93:2181, baseZNode=/hbase Unable to get data of znode /hbase/master org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired for /hbase/master at org.apache.zookeeper.KeeperException.create(KeeperException.java:127) at org.apache.zookeeper.KeeperException.create(KeeperException.java:51) at org.apache.zookeeper.ZooKeeper.getData(ZooKeeper.java:1212) at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.getData(RecoverableZooKeeper.java:340) at org.apache.hadoop.hbase.zookeeper.ZKUtil.getData(ZKUtil.java:610) at org.apache.hadoop.hbase.zookeeper.MasterAddressTracker.getMasterAddress(MasterAddressTracker.java:150) at org.apache.hadoop.hbase.master.ActiveMasterManager.stop(ActiveMasterManager.java:269) at org.apache.hadoop.hbase.master.HMaster.stop(HMaster.java:2724) at org.apache.hadoop.hbase.master.HMaster.stopMaster(HMaster.java:2716) at org.apache.hadoop.hbase.master.HMaster.abort(HMaster.java:2631) at org.apache.hadoop.hbase.zookeeper.ZKWatcher.connectionEvent(ZKWatcher.java:520) at org.apache.hadoop.hbase.zookeeper.ZKWatcher.process(ZKWatcher.java:452) at org.apache.zookeeper.ClientCnxn$EventThread.processEvent(ClientCnxn.java:530) at org.apache.zookeeper.ClientCnxn$EventThread.run(ClientCnxn.java:505) 2019-11-16 15:51:11,752 ERROR [main-EventThread] zookeeper.ZKWatcher: master:16000-0x1023619d3c30002, quorum=172.16.10.91:2181,172.16.10.92:2181,172.16.10.93:2181, baseZNode=/hbase Received unexpected KeeperException, re-throwing exception org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired for /hbase/master at org.apache.zookeeper.KeeperException.create(KeeperException.java:127) at org.apache.zookeeper.KeeperException.create(KeeperException.java:51) at org.apache.zookeeper.ZooKeeper.getData(ZooKeeper.java:1212) at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.getData(RecoverableZooKeeper.java:340) at org.apache.hadoop.hbase.zookeeper.ZKUtil.getData(ZKUtil.java:610) at org.apache.hadoop.hbase.zookeeper.MasterAddressTracker.getMasterAddress(MasterAddressTracker.java:150) at org.apache.hadoop.hbase.master.ActiveMasterManager.stop(ActiveMasterManager.java:269) at org.apache.hadoop.hbase.master.HMaster.stop(HMaster.java:2724) at org.apache.hadoop.hbase.master.HMaster.stopMaster(HMaster.java:2716) at org.apache.hadoop.hbase.master.HMaster.abort(HMaster.java:2631) at org.apache.hadoop.hbase.zookeeper.ZKWatcher.connectionEvent(ZKWatcher.java:520) at org.apache.hadoop.hbase.zookeeper.ZKWatcher.process(ZKWatcher.java:452) at org.apache.zookeeper.ClientCnxn$EventThread.processEvent(ClientCnxn.java:530) at org.apache.zookeeper.ClientCnxn$EventThread.run(ClientCnxn.java:505) 2019-11-16 15:51:11,752 ERROR [master/hadoop1:16000.Chore.1] zookeeper.ZKWatcher: master:16000-0x1023619d3c30002, quorum=172.16.10.91:2181,172.16.10.92:2181,172.16.10.93:2181, baseZNode=/hbase Received unexpected KeeperException, re-throwing exception org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired for /hbase/master at org.apache.zookeeper.KeeperException.create(KeeperException.java:127) at org.apache.zookeeper.KeeperException.create(KeeperException.java:51) at org.apache.zookeeper.ZooKeeper.getData(ZooKeeper.java:1212) at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.getData(RecoverableZooKeeper.java:340) at org.apache.hadoop.hbase.zookeeper.ZKUtil.getData(ZKUtil.java:610) at org.apache.hadoop.hbase.zookeeper.MasterAddressTracker.getMasterAddress(MasterAddressTracker.java:150) at org.apache.hadoop.hbase.master.ActiveMasterManager.stop(ActiveMasterManager.java:269) at org.apache.hadoop.hbase.master.HMaster.stop(HMaster.java:2724) at org.apache.hadoop.hbase.master.HMaster.stopMaster(HMaster.java:2716) at org.apache.hadoop.hbase.master.HMaster.abort(HMaster.java:2631) at org.apache.hadoop.hbase.replication.ReplicationPeersZKImpl.getAllPeerIds(ReplicationPeersZKImpl.java:393) at org.apache.hadoop.hbase.master.cleaner.ReplicationZKNodeCleaner.getUnDeletedQueues(ReplicationZKNodeCleaner.java:78) at org.apache.hadoop.hbase.master.cleaner.ReplicationZKNodeCleanerChore.chore(ReplicationZKNodeCleanerChore.java:48) at org.apache.hadoop.hbase.ScheduledChore.run(ScheduledChore.java:186) at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) at org.apache.hadoop.hbase.JitterScheduledThreadPoolExecutorImpl$JitteredRunnableScheduledFuture.run(JitterScheduledThreadPoolExecutorImpl.java:111) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) at java.lang.Thread.run(Thread.java:748) 2019-11-16 15:51:11,764 INFO [main-EventThread] zookeeper.ClientCnxn: EventThread shut down for session: 0x1023619d3c30002 2019-11-16 15:51:11,764 ERROR [master/hadoop1:16000.Chore.1] hbase.ScheduledChore: Caught error java.lang.NullPointerException at java.util.HashSet.<init>(HashSet.java:119) at org.apache.hadoop.hbase.master.cleaner.ReplicationZKNodeCleaner.getUnDeletedQueues(ReplicationZKNodeCleaner.java:78) at org.apache.hadoop.hbase.master.cleaner.ReplicationZKNodeCleanerChore.chore(ReplicationZKNodeCleanerChore.java:48) at org.apache.hadoop.hbase.ScheduledChore.run(ScheduledChore.java:186) at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) at org.apache.hadoop.hbase.JitterScheduledThreadPoolExecutorImpl$JitteredRunnableScheduledFuture.run(JitterScheduledThreadPoolExecutorImpl.java:111) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) at java.lang.Thread.run(Thread.java:748) 2019-11-16 15:51:11,780 INFO [master/hadoop1:16000.Chore.1] hbase.ScheduledChore: Chore: hadoop1,16000,1573889992279-ClusterStatusChore was stopped 2019-11-16 15:51:11,780 INFO [master/hadoop1:16000.Chore.1] hbase.ScheduledChore: Chore: hadoop1,16000,1573889992279-BalancerChore was stopped 2019-11-16 15:51:11,799 INFO [master/hadoop1:16000.Chore.1] master.ReplicationLogCleaner: Stopping replicationLogCleaner-0x1023619d3c30006, quorum=172.16.10.91:2181,172.16.10.92:2181,172.16.10.93:2181, baseZNode=/hbase 2019-11-16 15:51:11,821 INFO [master/hadoop1:16000.Chore.1] hbase.ScheduledChore: Chore: LogsCleaner was stopped 2019-11-16 15:51:11,862 INFO [master/hadoop1:16000.Chore.1] hbase.ScheduledChore: Chore: HFileCleaner was stopped 2019-11-16 15:51:11,863 INFO [master/hadoop1:16000.Chore.1] hbase.ScheduledChore: Chore: hadoop1,16000,1573889992279-RegionNormalizerChore was stopped 2019-11-16 15:51:11,863 INFO [master/hadoop1:16000.Chore.1] hbase.ScheduledChore: Chore: CatalogJanitor-hadoop1:16000 was stopped 2019-11-16 15:51:12,345 INFO [master/hadoop1:16000] regionserver.HRegionServer: Stopping infoServer 2019-11-16 15:51:12,411 INFO [master/hadoop1:16000.splitLogManager..Chore.1] hbase.ScheduledChore: Chore: SplitLogManager Timeout Monitor was stopped 2019-11-16 15:51:15,268 WARN [RpcServer.default.FPBQ.Fifo.handler=29,queue=2,port=16000] ipc.RpcServer: (responseTooSlow): {"call":"RegionServerReport(org.apache.hadoop.hbase.shaded.protobuf.generated.RegionServerStatusProtos$RegionServerReportRequest)","starttimems":1573890604188,"responsesize":0,"method":"RegionServerReport","param":"server host_name: "hadoop1" port: 16020 start_code: 1573889993445 load { numberOfRequests: 50 }","processingtimems":63719,"client":"172.16.10.91:56479","queuetimems":0,"class":"HMaster"} 2019-11-16 15:51:15,591 INFO [master/hadoop1:16000] handler.ContextHandler: Stopped o.e.j.w.WebAppContext@38d17d80{/,null,UNAVAILABLE}{file:/usr/local/hbase-2.0.0/hbase-webapps/master} 2019-11-16 15:51:16,159 INFO [master/hadoop1:16000] server.AbstractConnector: Stopped ServerConnector@661f1c57{HTTP/1.1,[http/1.1]}{0.0.0.0:60010} 2019-11-16 15:51:16,187 INFO [master/hadoop1:16000] handler.ContextHandler: Stopped o.e.j.s.ServletContextHandler@36c0d0bd{/static,file:///usr/local/hbase-2.0.0/hbase-webapps/static/,UNAVAILABLE} 2019-11-16 15:51:16,204 INFO [master/hadoop1:16000] handler.ContextHandler: Stopped o.e.j.s.ServletContextHandler@2042ccce{/logs,file:///usr/local/hbase-2.0.0/logs/,UNAVAILABLE} 2019-11-16 15:51:16,395 INFO [master/hadoop1:16000] regionserver.HRegionServer: stopping server hadoop1,16000,1573889992279 2019-11-16 15:51:16,412 INFO [master/hadoop1:16000] zookeeper.ReadOnlyZKClient: Close zookeeper connection 0x0ad0061b to 172.16.10.91:2181,172.16.10.92:2181,172.16.10.93:2181 2019-11-16 15:51:16,412 INFO [master/hadoop1:16000] regionserver.HRegionServer: stopping server hadoop1,16000,1573889992279; all regions closed. 2019-11-16 15:51:16,413 INFO [master/hadoop1:16000] hbase.ChoreService: Chore service for: master/hadoop1:16000 had [[ScheduledChore: Name: hadoop1,16000,1573889992279-MobCompactionChore Period: 604800 Unit: SECONDS], [ScheduledChore: Name: hadoop1,16000,1573889992279-ExpiredMobFileCleanerChore Period: 86400 Unit: SECONDS]] on shutdown 2019-11-16 15:51:16,427 INFO [master/hadoop1:16000] master.MasterMobCompactionThread: Waiting for Mob Compaction Thread to finish... 2019-11-16 15:51:16,428 INFO [master/hadoop1:16000] master.MasterMobCompactionThread: Waiting for Region Server Mob Compaction Thread to finish... 2019-11-16 15:51:16,428 WARN [master/hadoop1:16000] zookeeper.ZKUtil: master:16000-0x1023619d3c30002, quorum=172.16.10.91:2181,172.16.10.92:2181,172.16.10.93:2181, baseZNode=/hbase Unable to get data of znode /hbase/master org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired for /hbase/master at org.apache.zookeeper.KeeperException.create(KeeperException.java:127) at org.apache.zookeeper.KeeperException.create(KeeperException.java:51) at org.apache.zookeeper.ZooKeeper.getData(ZooKeeper.java:1212) at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.getData(RecoverableZooKeeper.java:340) at org.apache.hadoop.hbase.zookeeper.ZKUtil.getData(ZKUtil.java:610) at org.apache.hadoop.hbase.zookeeper.MasterAddressTracker.getMasterAddress(MasterAddressTracker.java:150) at org.apache.hadoop.hbase.master.ActiveMasterManager.stop(ActiveMasterManager.java:269) at org.apache.hadoop.hbase.master.HMaster.stopServiceThreads(HMaster.java:1195) at org.apache.hadoop.hbase.regionserver.HRegionServer.run(HRegionServer.java:1114) at org.apache.hadoop.hbase.master.HMaster.run(HMaster.java:566) at java.lang.Thread.run(Thread.java:748) 2019-11-16 15:51:16,428 ERROR [master/hadoop1:16000] zookeeper.ZKWatcher: master:16000-0x1023619d3c30002, quorum=172.16.10.91:2181,172.16.10.92:2181,172.16.10.93:2181, baseZNode=/hbase Received unexpected KeeperException, re-throwing exception org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired for /hbase/master at org.apache.zookeeper.KeeperException.create(KeeperException.java:127) at org.apache.zookeeper.KeeperException.create(KeeperException.java:51) at org.apache.zookeeper.ZooKeeper.getData(ZooKeeper.java:1212) at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.getData(RecoverableZooKeeper.java:340) at org.apache.hadoop.hbase.zookeeper.ZKUtil.getData(ZKUtil.java:610) at org.apache.hadoop.hbase.zookeeper.MasterAddressTracker.getMasterAddress(MasterAddressTracker.java:150) at org.apache.hadoop.hbase.master.ActiveMasterManager.stop(ActiveMasterManager.java:269) at org.apache.hadoop.hbase.master.HMaster.stopServiceThreads(HMaster.java:1195) at org.apache.hadoop.hbase.regionserver.HRegionServer.run(HRegionServer.java:1114) at org.apache.hadoop.hbase.master.HMaster.run(HMaster.java:566) at java.lang.Thread.run(Thread.java:748) 2019-11-16 15:51:16,428 INFO [master/hadoop1:16000] assignment.AssignmentManager: Stopping assignment manager 2019-11-16 15:51:16,799 INFO [master/hadoop1:16000] procedure2.RemoteProcedureDispatcher: Stopping procedure remote dispatcher 2019-11-16 15:51:16,799 INFO [master/hadoop1:16000] procedure2.ProcedureExecutor: Stopping 2019-11-16 15:51:17,000 INFO [master/hadoop1:16000] wal.WALProcedureStore: Stopping the WAL Procedure Store, isAbort=false 2019-11-16 15:51:38,488 INFO [master/hadoop1:16000] hbase.ChoreService: Chore service for: master/hadoop1:16000.splitLogManager. had [] on shutdown 2019-11-16 15:51:38,636 INFO [master/hadoop1:16000] flush.MasterFlushTableProcedureManager: stop: server shutting down. 2019-11-16 15:51:38,679 INFO [master/hadoop1:16000] ipc.NettyRpcServer: Stopping server on /172.16.10.91:16000 2019-11-16 15:51:40,096 WARN [master/hadoop1:16000] regionserver.HRegionServer: Failed deleting my ephemeral node org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired for /hbase/rs/hadoop1,16000,1573889992279 at org.apache.zookeeper.KeeperException.create(KeeperException.java:127) at org.apache.zookeeper.KeeperException.create(KeeperException.java:51) at org.apache.zookeeper.ZooKeeper.delete(ZooKeeper.java:873) at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.delete(RecoverableZooKeeper.java:166) at org.apache.hadoop.hbase.zookeeper.ZKUtil.deleteNode(ZKUtil.java:1231) at org.apache.hadoop.hbase.zookeeper.ZKUtil.deleteNode(ZKUtil.java:1220) at org.apache.hadoop.hbase.regionserver.HRegionServer.deleteMyEphemeralNode(HRegionServer.java:1568) at org.apache.hadoop.hbase.regionserver.HRegionServer.run(HRegionServer.java:1122) at org.apache.hadoop.hbase.master.HMaster.run(HMaster.java:566) at java.lang.Thread.run(Thread.java:748) 2019-11-16 15:51:40,133 INFO [master/hadoop1:16000] regionserver.HRegionServer: Exiting; stopping=hadoop1,16000,1573889992279; zookeeper connection closed.

然后去看相应时间GC的日志:

发现在15:50分左右,出现了一次GC,耗时:63.2450700 secs。

从而可以断定,是因为GC期间服务全被堵塞了,无法响应外部请求;由于超出了zk允许的最大中断时间(40s),zk服务自动剔除该hbase节点。hbase gc后试图继续与zk连续,但得不到响应,故停止服务

2019-11-16T15:48:54.792+0800: 546.099: Total time for which application threads were stopped: 0.0001972 seconds, Stopping threads took: 0.0000609 seconds 2019-11-16T15:49:25.011+0800: 576.319: Total time for which application threads were stopped: 0.0006850 seconds, Stopping threads took: 0.0002741 seconds 2019-11-16T15:49:25.012+0800: 576.319: Total time for which application threads were stopped: 0.0003173 seconds, Stopping threads took: 0.0000438 seconds 2019-11-16T15:49:27.012+0800: 578.320: Total time for which application threads were stopped: 0.0004070 seconds, Stopping threads took: 0.0000815 seconds 2019-11-16T15:49:28.013+0800: 579.320: Total time for which application threads were stopped: 0.0004502 seconds, Stopping threads took: 0.0000730 seconds 2019-11-16T15:49:29.013+0800: 580.321: Total time for which application threads were stopped: 0.0004346 seconds, Stopping threads took: 0.0000679 seconds 2019-11-16T15:49:37.076+0800: 588.383: Total time for which application threads were stopped: 0.0053718 seconds, Stopping threads took: 0.0049407 seconds 2019-11-16T15:49:47.077+0800: 598.385: Total time for which application threads were stopped: 0.0006014 seconds, Stopping threads took: 0.0001549 seconds 2019-11-16T15:49:57.275+0800: 608.582: Total time for which application threads were stopped: 1.5978862 seconds, Stopping threads took: 0.0081536 seconds 2019-11-16T15:49:57.357+0800: 608.664: Total time for which application threads were stopped: 0.0810708 seconds, Stopping threads took: 0.0395464 seconds 2019-11-16T15:49:57.397+0800: 608.704: Total time for which application threads were stopped: 0.0400561 seconds, Stopping threads took: 0.0202738 seconds 2019-11-16T15:49:57.676+0800: 608.983: Total time for which application threads were stopped: 0.2749559 seconds, Stopping threads took: 0.0463266 seconds 2019-11-16T15:49:57.686+0800: 608.994: Total time for which application threads were stopped: 0.0102411 seconds, Stopping threads took: 0.0097304 seconds 2019-11-16T15:50:04.321+0800: 615.629: [GC (Allocation Failure) 2019-11-16T15:50:04.707+0800: 616.015: [ParNew Desired survivor size 67108864 bytes, new threshold 15 (max 15) - age 1: 9371352 bytes, 9371352 total - age 2: 4838936 bytes, 14210288 total - age 3: 6062112 bytes, 20272400 total : 294250K->26226K(393216K), 62.8591600 secs] 294250K->26226K(3014656K), 63.2450700 secs] [Times: user=0.28 sys=0.41, real=63.23 secs] 2019-11-16T15:51:07.567+0800: 678.874: Total time for which application threads were stopped: 63.3780698 seconds, Stopping threads took: 0.0001344 seconds 2019-11-16T15:51:07.697+0800: 679.004: Total time for which application threads were stopped: 0.0767111 seconds, Stopping threads took: 0.0474820 seconds 2019-11-16T15:51:07.875+0800: 679.182: Total time for which application threads were stopped: 0.1745242 seconds, Stopping threads took: 0.1312083 seconds 2019-11-16T15:51:07.905+0800: 679.213: Total time for which application threads were stopped: 0.0298393 seconds, Stopping threads took: 0.0233279 seconds 2019-11-16T15:51:08.172+0800: 679.480: Total time for which application threads were stopped: 0.1184843 seconds, Stopping threads took: 0.0465035 seconds 2019-11-16T15:51:08.452+0800: 679.760: Total time for which application threads were stopped: 0.1325020 seconds, Stopping threads took: 0.1321667 seconds 2019-11-16T15:51:08.884+0800: 680.191: Total time for which application threads were stopped: 0.0991417 seconds, Stopping threads took: 0.0448200 seconds 2019-11-16T15:51:09.028+0800: 680.336: Total time for which application threads were stopped: 0.0449934 seconds, Stopping threads took: 0.0447280 seconds 2019-11-16T15:51:10.410+0800: 681.718: Total time for which application threads were stopped: 1.0410454 seconds, Stopping threads took: 1.0110414 seconds 2019-11-16T15:51:11.644+0800: 682.951: Total time for which application threads were stopped: 0.1198907 seconds, Stopping threads took: 0.1193440 seconds 2019-11-16T15:51:11.751+0800: 683.058: Total time for which application threads were stopped: 0.0030797 seconds, Stopping threads took: 0.0026453 seconds 2019-11-16T15:51:11.769+0800: 683.077: Total time for which application threads were stopped: 0.0057162 seconds, Stopping threads took: 0.0043807 seconds 2019-11-16T15:51:11.779+0800: 683.087: Total time for which application threads were stopped: 0.0097116 seconds, Stopping threads took: 0.0094623 seconds 2019-11-16T15:51:11.862+0800: 683.169: Total time for which application threads were stopped: 0.0410152 seconds, Stopping threads took: 0.0281023 seconds 2019-11-16T15:51:12.074+0800: 683.381: Total time for which application threads were stopped: 0.2106658 seconds, Stopping threads took: 0.2104016 seconds 2019-11-16T15:51:12.422+0800: 683.729: Total time for which application threads were stopped: 0.0109280 seconds, Stopping threads took: 0.0104571 seconds 2019-11-16T15:51:12.431+0800: 683.738: Total time for which application threads were stopped: 0.0089406 seconds, Stopping threads took: 0.0086042 seconds 2019-11-16T15:51:13.305+0800: 684.613: Total time for which application threads were stopped: 0.0034802 seconds, Stopping threads took: 0.0030049 seconds

注:gc日志输出的路径和文件名,要在bin/hbase-env.sh文件的HBASE_OPTS中设置

问题2:查看RegionServer节点的日志,出现一些GC和异常信息:

2019-11-16 17:36:35,403 INFO [regionserver/hadoop1:16020.Chore.1] hbase.ScheduledChore: Chore: MemstoreFlusherChore missed its start time 2019-11-16 17:36:35,403 INFO [regionserver/hadoop1:16020.Chore.2] hbase.ScheduledChore: Chore: CompactionChecker missed its start time 2019-11-16 17:36:35,527 WARN [JvmPauseMonitor] util.JvmPauseMonitor: Detected pause in JVM or host machine (eg GC): pause of approximately 40315ms GC pool 'ParNew' had collection(s): count=1 time=39859ms 2019-11-16 17:36:35,582 WARN [RpcServer.default.FPBQ.Fifo.handler=29,queue=2,port=16020] ipc.RpcServer: (responseTooSlow): {"call":"Multi(org.apache.hadoop.hbase.shaded.protobuf.generated.ClientProtos$MultiRequest)","starttimems":1573896954590,"responsesize":16,"method":"Multi","param":"region= TERMINAL_DETAIL_DATA,2,1573617342390.91c77640ad1b2f43b5fe347f41d835b8., for 1 actions and 1st row key=29223370463942606807463901012100","processingtimems":40842,"client":"172.16.99.2:2210","queuetimems":0,"class":"HRegionServer"} 2019-11-16 17:36:35,583 WARN [RpcServer.default.FPBQ.Fifo.handler=25,queue=1,port=16020] ipc.RpcServer: (responseTooSlow): {"call":"Multi(org.apache.hadoop.hbase.shaded.protobuf.generated.ClientProtos$MultiRequest)","starttimems":1573896954597,"responsesize":10,"method":"Multi","param":"region= TERMINAL_DETAIL_DATA,2,1573617342390.91c77640ad1b2f43b5fe347f41d835b8., for 1 actions and 1st row key=29223370463942598807001514010400","processingtimems":40835,"client":"172.16.99.2:2210","queuetimems":0,"class":"HRegionServer"} 2019-11-16 17:36:35,595 WARN [RpcServer.default.FPBQ.Fifo.handler=26,queue=2,port=16020] ipc.RpcServer: (responseTooSlow): {"call":"Multi(org.apache.hadoop.hbase.shaded.protobuf.generated.ClientProtos$MultiRequest)","starttimems":1573896954590,"responsesize":16,"method":"Multi","param":"region= TERMINAL_DETAIL_DATA,5,1573617342390.18ad8bf75167740d2ba58df4fe71f189., for 1 actions and 1st row key=59223370463950389807568502012100","processingtimems":40842,"client":"172.16.99.2:2210","queuetimems":0,"class":"HRegionServer"} 2019-11-16 17:36:35,819 INFO [AsyncFSWAL-0] wal.AbstractFSWAL: Slow sync cost: 117 ms, current pipeline: [DatanodeInfoWithStorage[172.16.10.91:50010,DS-9402e3dd-982e-4f18-be39-3a7dfc0d2672,DISK], DatanodeInfoWithStorage[172.16.10.93:50010,DS-b843ad5a-97c2-42f5-ac4f-619acca6219c,DISK]] 2019-11-16 17:36:35,819 INFO [AsyncFSWAL-0] wal.AbstractFSWAL: Slow sync cost: 117 ms, current pipeline: [DatanodeInfoWithStorage[172.16.10.91:50010,DS-9402e3dd-982e-4f18-be39-3a7dfc0d2672,DISK], DatanodeInfoWithStorage[172.16.10.93:50010,DS-b843ad5a-97c2-42f5-ac4f-619acca6219c,DISK]] 2019-11-16 17:36:36,061 INFO [main-SendThread(172.16.10.93:2181)] zookeeper.ClientCnxn: Opening socket connection to server 172.16.10.93/172.16.10.93:2181. Will not attempt to authenticate using SASL (unknown error) 2019-11-16 17:36:36,189 WARN [main-SendThread(172.16.10.93:2181)] zookeeper.ClientCnxn: Session 0x2042fa555aa0251 for server null, unexpected error, closing socket connection and attempting reconnect java.net.ConnectException: Connection refused at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method) at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717) at org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:361) at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1141) 2019-11-16 17:36:37,236 INFO [main-SendThread(172.16.10.92:2181)] zookeeper.ClientCnxn: Opening socket connection to server 172.16.10.92/172.16.10.92:2181. Will not attempt to authenticate using SASL (unknown error) 2019-11-16 17:36:37,236 INFO [main-SendThread(172.16.10.92:2181)] zookeeper.ClientCnxn: Socket connection established to 172.16.10.92/172.16.10.92:2181, initiating session 2019-11-16 17:36:37,589 WARN [main-SendThread(172.16.10.92:2181)] zookeeper.ClientCnxn: Unable to reconnect to ZooKeeper service, session 0x2042fa555aa0251 has expired 2019-11-16 17:36:37,884 INFO [main-SendThread(172.16.10.92:2181)] zookeeper.ClientCnxn: Unable to reconnect to ZooKeeper service, session 0x2042fa555aa0251 has expired, closing socket connection 2019-11-16 17:36:39,183 WARN [regionserver/hadoop1:16020] zookeeper.ZKUtil: regionserver:16020-0x2042fa555aa0251, quorum=172.16.10.91:2181,172.16.10.92:2181,172.16.10.93:2181, baseZNode=/hbase Unable to get data of znode /hbase/master org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired for /hbase/master at org.apache.zookeeper.KeeperException.create(KeeperException.java:127) at org.apache.zookeeper.KeeperException.create(KeeperException.java:51) at org.apache.zookeeper.ZooKeeper.getData(ZooKeeper.java:1212) at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.getData(RecoverableZooKeeper.java:340) at org.apache.hadoop.hbase.zookeeper.ZKUtil.getDataInternal(ZKUtil.java:661) at org.apache.hadoop.hbase.zookeeper.ZKUtil.getDataAndWatch(ZKUtil.java:637) at org.apache.hadoop.hbase.zookeeper.ZKNodeTracker.getData(ZKNodeTracker.java:180) at org.apache.hadoop.hbase.zookeeper.MasterAddressTracker.getMasterAddress(MasterAddressTracker.java:129) at org.apache.hadoop.hbase.regionserver.HRegionServer.createRegionServerStatusStub(HRegionServer.java:2475) at org.apache.hadoop.hbase.regionserver.HRegionServer.tryRegionServerReport(HRegionServer.java:1190) at org.apache.hadoop.hbase.regionserver.HRegionServer.run(HRegionServer.java:989) at java.lang.Thread.run(Thread.java:748) 2019-11-16 17:36:39,553 ERROR [regionserver/hadoop1:16020] zookeeper.ZKWatcher: regionserver:16020-0x2042fa555aa0251, quorum=172.16.10.91:2181,172.16.10.92:2181,172.16.10.93:2181, baseZNode=/hbase Received unexpected KeeperException, re-throwing exception org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired for /hbase/master at org.apache.zookeeper.KeeperException.create(KeeperException.java:127) at org.apache.zookeeper.KeeperException.create(KeeperException.java:51) at org.apache.zookeeper.ZooKeeper.getData(ZooKeeper.java:1212) at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.getData(RecoverableZooKeeper.java:340) at org.apache.hadoop.hbase.zookeeper.ZKUtil.getDataInternal(ZKUtil.java:661) at org.apache.hadoop.hbase.zookeeper.ZKUtil.getDataAndWatch(ZKUtil.java:637) at org.apache.hadoop.hbase.zookeeper.ZKNodeTracker.getData(ZKNodeTracker.java:180) at org.apache.hadoop.hbase.zookeeper.MasterAddressTracker.getMasterAddress(MasterAddressTracker.java:129) at org.apache.hadoop.hbase.regionserver.HRegionServer.createRegionServerStatusStub(HRegionServer.java:2475) at org.apache.hadoop.hbase.regionserver.HRegionServer.tryRegionServerReport(HRegionServer.java:1190) at org.apache.hadoop.hbase.regionserver.HRegionServer.run(HRegionServer.java:989) at java.lang.Thread.run(Thread.java:748) 2019-11-16 17:36:46,083 INFO [regionserver/hadoop1:16020.Chore.3] regionserver.HRegionServer: MemstoreFlusherChore requesting flush of TERMINAL_DETAIL_DATA,2,1573617342390.91c77640ad1b2f43b5fe347f41d835b8. because F1 has an old edit so flush to free WALs after random delay 264353ms 2019-11-16 17:36:46,083 INFO [regionserver/hadoop1:16020.Chore.3] regionserver.HRegionServer: MemstoreFlusherChore requesting flush of TERMINAL_DETAIL_DATA,6,1573617342390.622d87ccf68b5944f0e09db363f9cc5e. because F1 has an old edit so flush to free WALs after random delay 7288ms 2019-11-16 17:36:46,083 INFO [regionserver/hadoop1:16020.Chore.3] regionserver.HRegionServer: MemstoreFlusherChore requesting flush of TERMINAL_DETAIL_DATA,4,1573617342390.fbb4d1d0d473e3db06c71a54404ca479. because F1 has an old edit so flush to free WALs after random delay 63104ms 2019-11-16 17:36:46,083 INFO [regionserver/hadoop1:16020.Chore.3] regionserver.HRegionServer: MemstoreFlusherChore requesting flush of TERMINAL_DETAIL_DATA,5,1573617342390.18ad8bf75167740d2ba58df4fe71f189. because F1 has an old edit so flush to free WALs after random delay 220784ms 2019-11-16 17:36:46,084 INFO [AsyncFSWAL-0] wal.AbstractFSWAL: Slow sync cost: 1985 ms, current pipeline: [DatanodeInfoWithStorage[172.16.10.91:50010,DS-9402e3dd-982e-4f18-be39-3a7dfc0d2672,DISK], DatanodeInfoWithStorage[172.16.10.93:50010,DS-b843ad5a-97c2-42f5-ac4f-619acca6219c,DISK]] 2019-11-16 17:36:46,184 INFO [JvmPauseMonitor] util.JvmPauseMonitor: Detected pause in JVM or host machine (eg GC): pause of approximately 1554ms No GCs detected

解决方案

1、把hbase与zk的timeout时间加长为5分钟,但这并不能很好的解决问题

进入hbase安装主目录,vi conf/hbase-site.xml

<property>

<name>zookeeper.session.timeout</name>

<value>300000</value>

<!--默认: 180000 :zookeeper 会话超时时间,单位是毫秒 -->

</property>

注:这个时间还依赖于zookeeper自身的超时时间,如果太短也需要设置一下,在zookeeper的配置文件中配置:minSessionTimeout,maxSessionTimeout。

2、避免gc的影响,加大hbase的堆内存

进入hbase安装主目录,vi bin/hbase-env.sh

调整HBASE_REGIONSERVER_OPTS值:

export HBASE_OPTS="$HBASE_OPTS -Xmx8g -Xms8g -Xmn512m -Xss256k -XX:MaxPermSize=256m -XX:SurvivorRatio=2 -XX:MaxTenuringThreshold=15 -XX:CMSInitiatingOccupancyFraction=65 -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:+CMSParallelRemarkEnabled -XX:+UseCMSCompactAtFullCollection -XX:+UseCMSInitiatingOccupancyOnly -XX:-DisableExplicitGC -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCApplicationStoppedTime -XX:+PrintTenuringDistribution -Xloggc:$HBASE_HOME/logs/gc-$(hostname)-hbase.log"

3、重启HBase集群