Overview

Long Short-Term Memory networks are usually abbreviated as LSTM.

Recurrent Neural Networks

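Recurrent Neural Networks, abbreviated as RNN, have a structure that contains loops, which makes them suitable for problems where information needs to persist.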

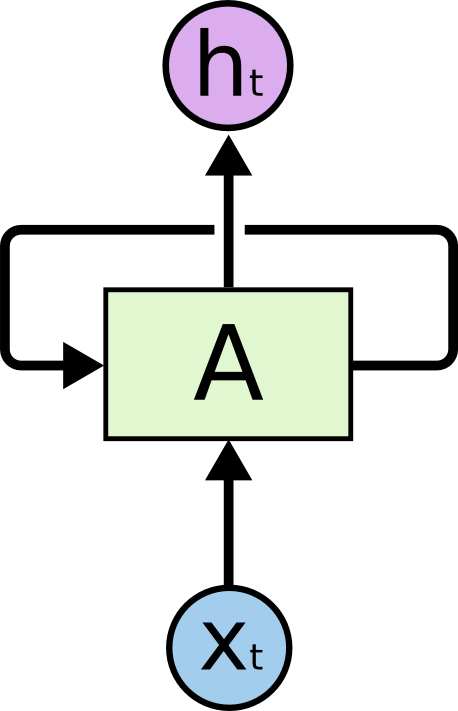

其基本机构如下图所示:

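As shown in the figure above, a chunk of neural network, A, takes an input x_t and produces an output h_t. The loop allows information to be passed from one time step to the next.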
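The figure does not say what block A actually computes. As a minimal sketch, assuming the common "vanilla" RNN formulation, one step can be written as follows. The weight matrices $W_{xh}$ and $W_{hh}$, the bias $b_h$, and the tanh nonlinearity are assumptions for illustration and are not given in the text above.

$$
h_t = \tanh\left(W_{xh}\, x_t + W_{hh}\, h_{t-1} + b_h\right)
$$

Under this assumption, the term $W_{hh}\, h_{t-1}$ plays the role of the loop: it feeds the previous output back into A, which is how information reaches the next time step.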

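An RNN can be thought of as many copies of the same neural network, each passing information to its successor. Unrolling the loop turns it into a chain of these copies, one per time step.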
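The unrolled view can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: block A is taken to be a vanilla RNN cell with a tanh nonlinearity, and the names `rnn_step`, `rnn_unrolled`, `W_xh`, `W_hh`, `b_h`, as well as all sizes, are hypothetical and do not come from the text.

```python
import numpy as np

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One copy of block A: combine the current input with the previous state."""
    return np.tanh(W_xh @ x_t + W_hh @ h_prev + b_h)

def rnn_unrolled(xs, h0, W_xh, W_hh, b_h):
    """Apply the same block A once per time step, passing h along the chain."""
    h = h0
    hs = []
    for x_t in xs:  # each iteration is one "copy" of A in the unrolled chain
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        hs.append(h)
    return np.stack(hs)

# Hypothetical sizes and random weights, chosen only for illustration.
rng = np.random.default_rng(0)
input_size, hidden_size, seq_len = 3, 4, 5
W_xh = rng.normal(scale=0.5, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)

xs = rng.normal(size=(seq_len, input_size))  # one input vector per time step
h0 = np.zeros(hidden_size)                   # initial state before any input

hs = rnn_unrolled(xs, h0, W_xh, W_hh, b_h)
print(hs.shape)  # (5, 4): one hidden state per time step
```

Note that every step reuses the same `W_xh`, `W_hh`, and `b_h`. That is what "copies of the same network" means here: the chain has as many links as the sequence has time steps, but only one set of parameters.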

This structure is a natural fit for problems such as speech recognition, language modeling, translation, and image captioning, and its range of applications is still growing.

The Problem of Long-Term Dependencies

References

- Christopher Olah's blog post on RNNs and LSTMs.

  - This is the shortest and most accessible read.

  - [post] 翻译理解LSTM网络 (a Chinese translation of "Understanding LSTM Networks")

- Deep Learning Book chapter on RNNs.

  - This is a very technical read, recommended for students who are very comfortable with advanced mathematical notation and scientific papers.

- Andrej Karpathy's lecture on Recurrent Neural Networks.

  - This is a fairly long lecture (around an hour), but it covers the content quite well, as is usual for Karpathy.

- [post] Anyone Can Learn To Code an LSTM-RNN in Python (Part 1: RNN)