Solution:

Append .set("spark.testing.memory", "471859201") to the SparkConf.

Error:

Exception in thread "main" java.lang.IllegalArgumentException: System memory 259522560 must be at ...
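Why a value like 471859201 clears the error: as far as I know, Spark's unified memory manager reserves 300 MB and refuses to start unless system memory is at least 1.5 times that, and spark.testing.memory overrides the heap size it would otherwise detect. The pure-Scala sketch below reproduces only that arithmetic. `SparkMemoryCheck` and `passes` are illustrative names, not Spark APIs.

```scala
object SparkMemoryCheck {
  // Assumption: mirrors the 300 MB reserved-memory rule in Spark's UnifiedMemoryManager.
  val reservedBytes: Long = 300L * 1024 * 1024

  // Minimum accepted system memory: 1.5x the reserved amount.
  val minSystemMemory: Long = math.ceil(reservedBytes * 1.5).toLong

  // Hypothetical helper: true when the given memory size would pass the startup check.
  def passes(systemMemory: Long): Boolean = systemMemory >= minSystemMemory

  def main(args: Array[String]): Unit = {
    println(minSystemMemory)     // threshold in bytes
    println(passes(259522560L))  // heap size reported in the error message
    println(passes(471859201L))  // value set through spark.testing.memory
  }
}
```

Giving the JVM a larger heap (a bigger -Xmx in the run configuration) should satisfy the same check without relying on a testing-only setting.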

解决方案:

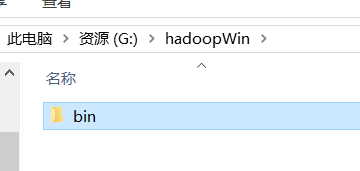

hadoop-common-bin-2.7.3-x64.zip解压到任意目录。

bug报错:

org.apache.hadoop.util.Shell$ExitCodeException: /bin/bash: line

bug原因:

win环境运行hadoop任务,需要下载相应的运行环境。
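Extracting the archive is usually not enough on its own, because Hadoop also has to be told where the directory is. A common approach, assumed here rather than taken from the notes above, is to set the hadoop.home.dir system property (or the HADOOP_HOME environment variable) before any Hadoop or Spark class is loaded. The path `C:\hadoop-common-2.7.3-bin` is hypothetical. It should be the directory whose bin subfolder holds winutils.exe.

```scala
object HadoopHomeSetup {
  // Hypothetical extraction directory; replace with wherever the zip was unpacked.
  val hadoopHome: String = "C:\\hadoop-common-2.7.3-bin"

  // Sets the property that Hadoop's Shell utility consults to locate bin\winutils.exe.
  def configure(): Unit = System.setProperty("hadoop.home.dir", hadoopHome)

  def main(args: Array[String]): Unit = {
    configure() // call this before creating a SparkContext or a Hadoop Configuration
    println(System.getProperty("hadoop.home.dir"))
  }
}
```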

Solution:

for (x <- glomTest(arrayTwo).collect()) {

println(x.toList)

}

Error:

An RDD of nested arrays, such as Array(Array(), Array()), does not print its contents.
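The reason the conversion to a list helps can be shown without Spark. In the sketch below a plain nested array stands in for what collect() returns on an RDD of arrays. `glomTest` and `arrayTwo` from the snippet above are the author's own names and are not reproduced here, and `NestedArrayPrinting` is an illustrative name.

```scala
object NestedArrayPrinting {
  // Stand-in for the collected result: one inner array per partition.
  val collected: Array[Array[Int]] = Array(Array(1, 2), Array(3, 4, 5))

  // An Array's default toString is the JVM identity string, not its elements.
  val opaque: String = collected(0).toString

  // Converting each inner array to a List yields a readable rendering.
  val readable: Array[String] = collected.map(_.toList.toString)

  def main(args: Array[String]): Unit = {
    println(opaque)            // something like [I@ followed by a hash code
    readable.foreach(println)  // the element lists
  }
}
```

Calling mkString(", ") on each inner array is an alternative when a custom separator is preferred.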

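Solution:

bin/kafka-console-consumer.sh --bootstrap-server ambari02:9092 --from-beginning --topic first

Error:

Errors involving the zookeeper and bootstrap-server options.

Cause:

Older Kafka versions had the console consumer connect through ZooKeeper. Newer versions use --bootstrap-server instead, and the port changes to the broker port, 9092.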
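The same rule applies to a consumer written in code: it points at the broker, not at ZooKeeper. The sketch below only builds the settings with the JDK's Properties class. The property keys are standard Kafka consumer settings, while `ConsumerSettings`, `build`, and the group name `demo-group` are hypothetical.

```scala
import java.util.Properties

object ConsumerSettings {
  // Builds settings for a Kafka consumer that connects directly to the broker.
  def build(bootstrap: String, groupId: String): Properties = {
    val props = new Properties()
    props.setProperty("bootstrap.servers", bootstrap)  // broker address, not ZooKeeper
    props.setProperty("group.id", groupId)             // hypothetical consumer group
    // Start from the oldest offset when the group has nothing committed yet.
    props.setProperty("auto.offset.reset", "earliest")
    props.setProperty("key.deserializer",
      "org.apache.kafka.common.serialization.StringDeserializer")
    props.setProperty("value.deserializer",
      "org.apache.kafka.common.serialization.StringDeserializer")
    props
  }

  def main(args: Array[String]): Unit =
    println(build("ambari02:9092", "demo-group").getProperty("bootstrap.servers"))
}
```

These properties would then be handed to a KafkaConsumer from the kafka-clients library, which is left out so the sketch runs on the JDK alone.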

Solution:

hive-site.xml

1 Change the connection pooling type to dbcp.

2 Copy the mysql-connector-java driver JAR into the spark/jars directory.

<!-- Database connection -->
<property>
  <name>datanucleus.connectionPoolingType</name>
  <value>dbcp</value>
  <description>
    Expects one of [bonecp, dbcp, hikaricp, none].
    Specify connection pool library for datanucleus
  </description>
</property>
<!-- Schema version verification -->
<property>
  <name>hive.metastore.schema.verification</name>
  <value>false</value>
  <description>
    Enforce metastore schema version consistency.
    True: Verify that version information stored in is compatible with one from Hive jars. Also disable automatic
    schema migration attempt. Users are required to manually migrate schema after Hive upgrade which ensures
    proper metastore schema migration. (Default)
    False: Warn if the version information stored in metastore doesn't match with one from in Hive jars.
  </description>
</property>

Error:

org.datanucleus.exceptions.NucleusUserException: The connection pool plugin of type "HikariCP" was not found in the CLASSPATH! at org.datanucleus.store.rdbms.ConnectionFactoryImpl.generateDataSources(ConnectionFactoryImpl.java:234)

Cause:

hive-site.xml configures HikariCP as the connection pool by default, and that plugin is not on the classpath.

Error:
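A quick way to confirm which values a hive-site.xml actually carries is to read its property entries programmatically. The sketch below parses an inline sample with the JDK's XML parser. The sample assumes the usual Hadoop-style configuration root element, and `HiveSiteCheck` and `readProperties` are hypothetical names.

```scala
import java.io.ByteArrayInputStream
import java.nio.charset.StandardCharsets
import javax.xml.parsers.DocumentBuilderFactory
import org.w3c.dom.Element

object HiveSiteCheck {
  // Inline sample of the two properties, wrapped in an assumed <configuration> root.
  val sampleXml: String =
    """<configuration>
      |  <property>
      |    <name>datanucleus.connectionPoolingType</name>
      |    <value>dbcp</value>
      |  </property>
      |  <property>
      |    <name>hive.metastore.schema.verification</name>
      |    <value>false</value>
      |  </property>
      |</configuration>""".stripMargin

  // Collects every <property> element as a name -> value pair.
  def readProperties(xml: String): Map[String, String] = {
    val builder = DocumentBuilderFactory.newInstance().newDocumentBuilder()
    val doc = builder.parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)))
    val props = doc.getElementsByTagName("property")
    (0 until props.getLength).map { i =>
      val p = props.item(i).asInstanceOf[Element]
      val name = p.getElementsByTagName("name").item(0).getTextContent.trim
      val value = p.getElementsByTagName("value").item(0).getTextContent.trim
      name -> value
    }.toMap
  }

  def main(args: Array[String]): Unit =
    readProperties(sampleXml).foreach { case (k, v) => println(s"$k = $v") }
}
```

To check a real file, the string passed to readProperties could be loaded from the hive-site.xml under Spark's conf directory.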

org.apache.hadoop.hive.metastore.ObjectStore (line: 6684) : Version information found in metastore differs 3.1.0 from expected schema version 1.2.0. Schema verififcation is disabled hive.metastore.schema.verification so setting version.

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/spark/sql/catalyst/catalog/HiveTableRelation