代码已经拷贝到了公司电脑的:

/Users/baidu/Documents/Data/Work/Code/Self/hadoop_mr_streaming_jobs

首先是主控脚本 main.sh

调用的是 extract.py

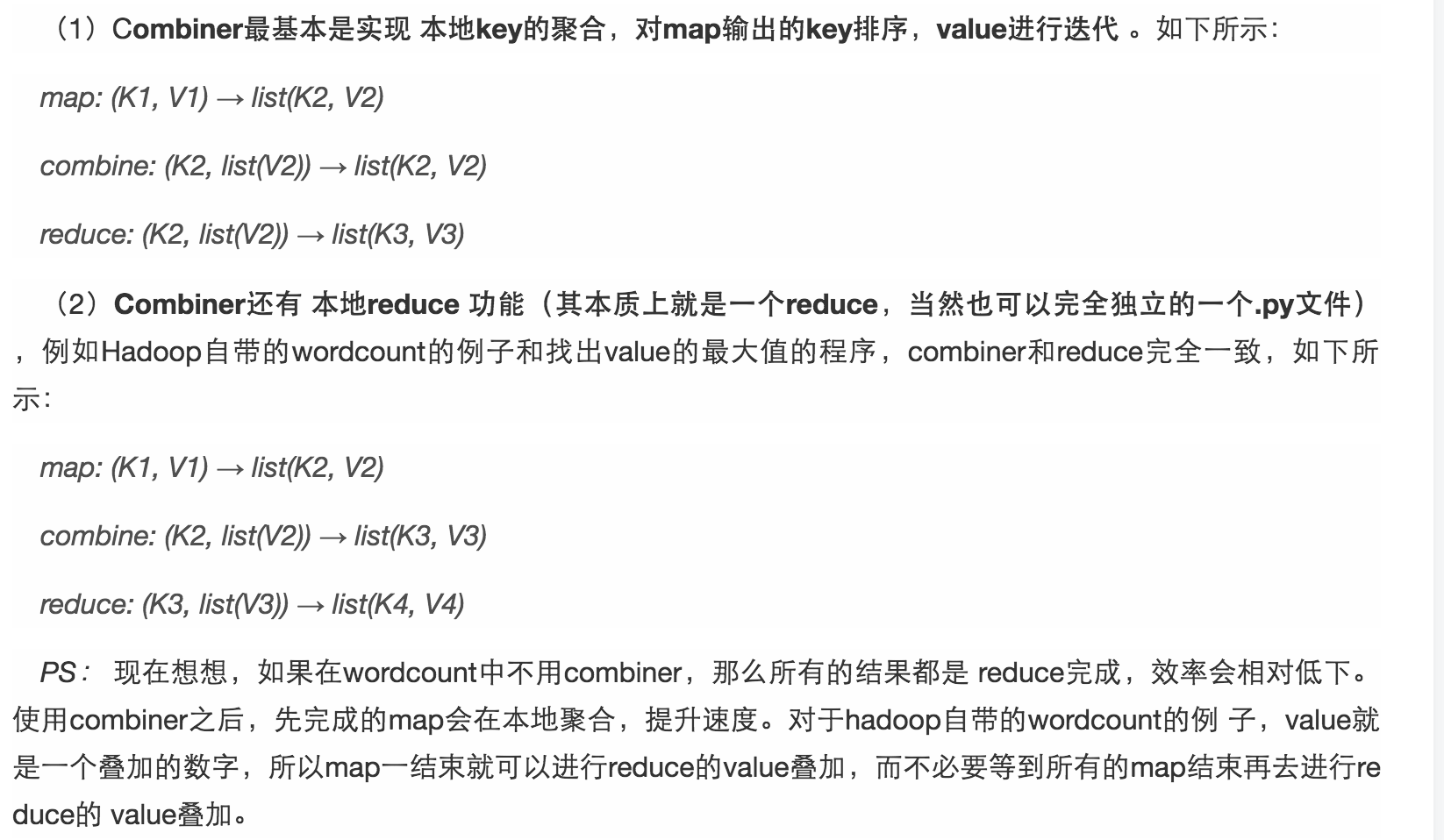

然后发现写的不太好。其中有一个combiner,可以看这里:

https://blog.csdn.net/u010700335/article/details/72649186

streaming 脚本的时候,是以管道为基础的:

(5) Python脚本

|

1

2

3

|

import sysfor line in sys.stdin:.......

|

#!/usr/bin/env python import sys # maps words to their counts word2count = {} # input comes from STDIN (standard input) for line in sys.stdin: # remove leading and trailing whitespace line = line.strip() # split the line into words while removing any empty strings words = filter(lambda word: word, line.split()) # increase counters for word in words: # write the results to STDOUT (standard output); # what we output here will be the input for the # Reduce step, i.e. the input for reducer.py # # tab-delimited; the trivial word count is 1 print '%s %s' % (word, 1) #--------------------------------------------------------------------------------------------------------- #!/usr/bin/env python from operator import itemgetter import sys # maps words to their counts word2count = {} # input comes from STDIN for line in sys.stdin: # remove leading and trailing whitespace line = line.strip() # parse the input we got from mapper.py word, count = line.split() # convert count (currently a string) to int try: count = int(count) word2count[word] = word2count.get(word, 0) + count except ValueError: # count was not a number, so silently # ignore/discard this line pass # sort the words lexigraphically; # # this step is NOT required, we just do it so that our # final output will look more like the official Hadoop # word count examples sorted_word2count = sorted(word2count.items(), key=itemgetter(0)) # write the results to STDOUT (standard output) for word, count in sorted_word2count: print '%s %s'% (word, count)