报错背景:

CDH集成了Flume服务,准备通过Flume将kafka中的数据放到HDFS中,

启动Flume的时候报错。

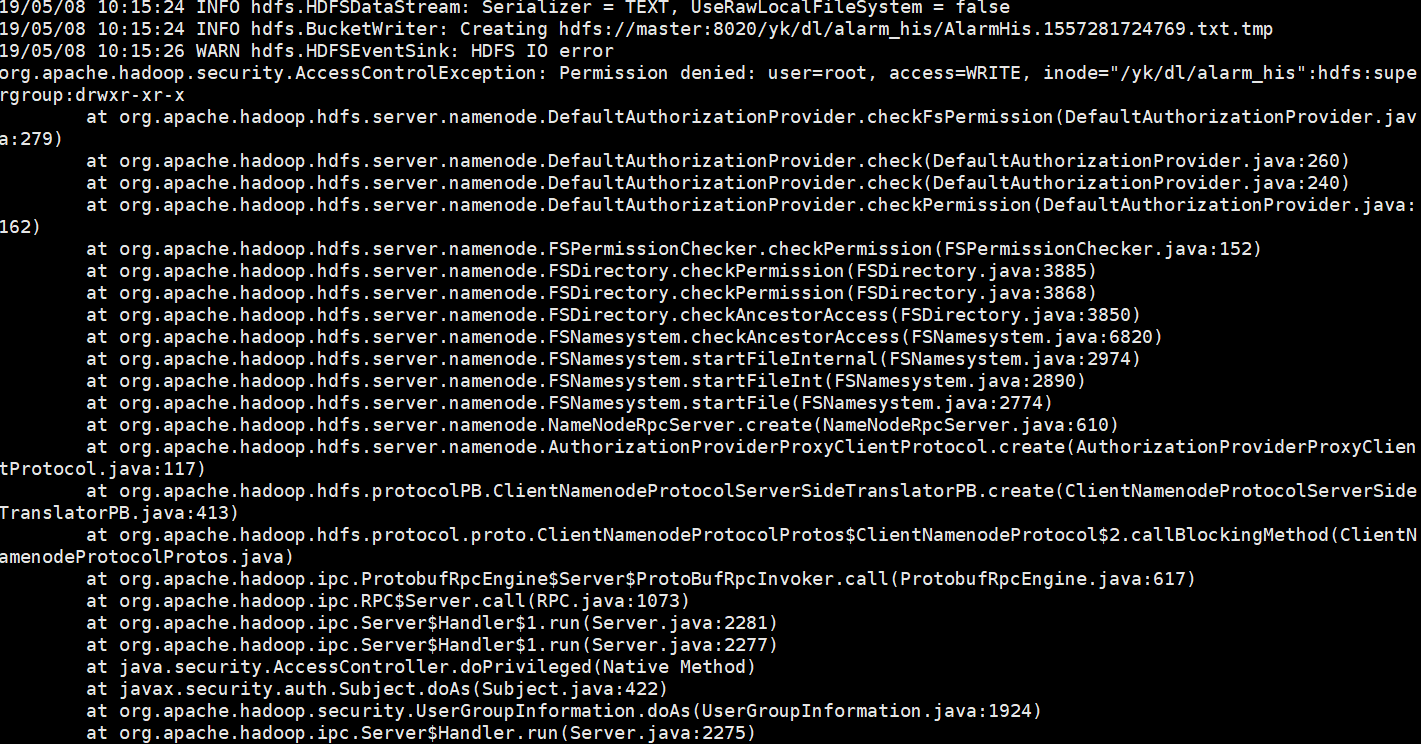

报错现象:

19/05/08 10:15:24 INFO hdfs.HDFSDataStream: Serializer = TEXT, UseRawLocalFileSystem = false 19/05/08 10:15:24 INFO hdfs.BucketWriter: Creating hdfs://master:8020/yk/dl/alarm_his/AlarmHis.1557281724769.txt.tmp 19/05/08 10:15:26 WARN hdfs.HDFSEventSink: HDFS IO error org.apache.hadoop.security.AccessControlException: Permission denied: user=root, access=WRITE, inode="/yk/dl/alarm_his":hdfs:supergroup:drwxr-xr-x at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkFsPermission(DefaultAuthorizationProvider.java:279) at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:260) at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:240) at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkPermission(DefaultAuthorizationProvider.java:162) at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:152) at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3885) at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3868) at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkAncestorAccess(FSDirectory.java:3850) at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkAncestorAccess(FSNamesystem.java:6820) at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInternal(FSNamesystem.java:2974) at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInt(FSNamesystem.java:2890) at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFile(FSNamesystem.java:2774) at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.create(NameNodeRpcServer.java:610) at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.create(AuthorizationProviderProxyClientProtocol.java:117) at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.create(ClientNamenodeProtocolServerSideTranslatorPB.java:413) at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java) at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617) at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073) at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2281) at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2277) at java.security.AccessController.doPrivileged(Native Method) at javax.security.auth.Subject.doAs(Subject.java:422) at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1924) at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2275) at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) at java.lang.reflect.Constructor.newInstance(Constructor.java:423) at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:106) at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:73) at org.apache.hadoop.hdfs.DFSOutputStream.newStreamForCreate(DFSOutputStream.java:2136) at org.apache.hadoop.hdfs.DFSClient.create(DFSClient.java:1804) at org.apache.hadoop.hdfs.DFSClient.create(DFSClient.java:1728) at org.apache.hadoop.hdfs.DistributedFileSystem$7.doCall(DistributedFileSystem.java:438) at org.apache.hadoop.hdfs.DistributedFileSystem$7.doCall(DistributedFileSystem.java:434) at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81) at org.apache.hadoop.hdfs.DistributedFileSystem.create(DistributedFileSystem.java:434) at org.apache.hadoop.hdfs.DistributedFileSystem.create(DistributedFileSystem.java:375) at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:926) at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:907) at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:804) at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:793) at org.apache.flume.sink.hdfs.HDFSDataStream.doOpen(HDFSDataStream.java:81) at org.apache.flume.sink.hdfs.HDFSDataStream.open(HDFSDataStream.java:108) at org.apache.flume.sink.hdfs.BucketWriter$1.call(BucketWriter.java:262) at org.apache.flume.sink.hdfs.BucketWriter$1.call(BucketWriter.java:252) at org.apache.flume.sink.hdfs.BucketWriter$9$1.run(BucketWriter.java:701) at org.apache.flume.auth.SimpleAuthenticator.execute(SimpleAuthenticator.java:50) at org.apache.flume.sink.hdfs.BucketWriter$9.call(BucketWriter.java:698) at java.util.concurrent.FutureTask.run(FutureTask.java:266) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) at java.lang.Thread.run(Thread.java:748)

报错原因:

根据日志信息可以解读出,root用户想要在文件系统里面创建一个目录,失败。

原因是,root用户无法操作hdfs

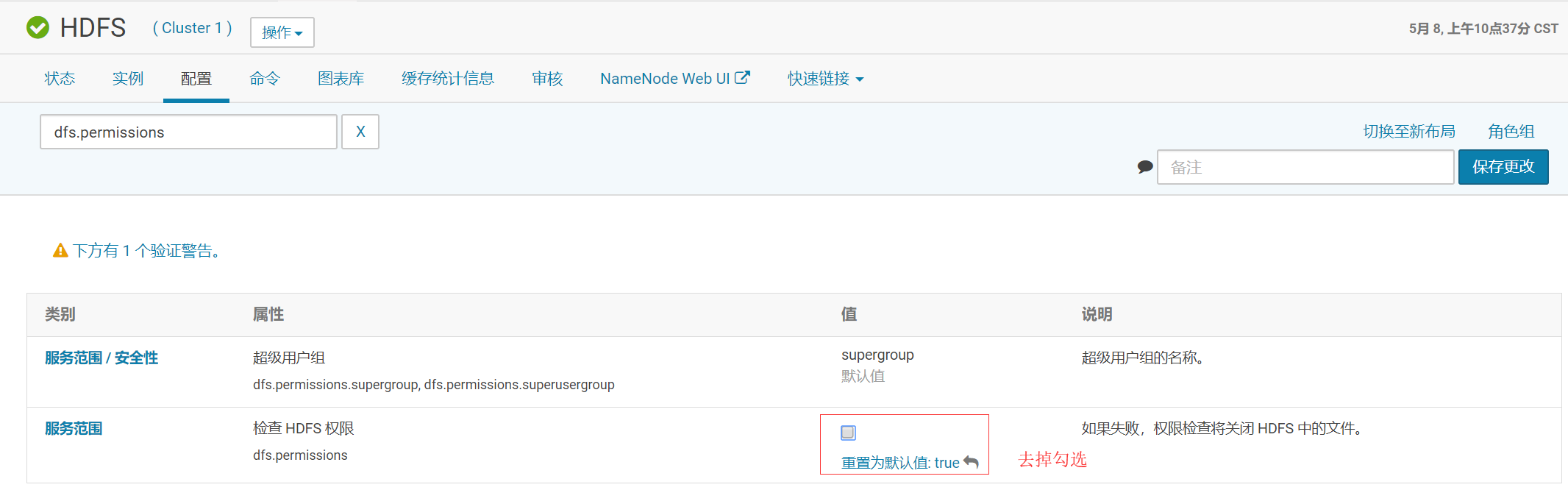

报错解决:

重启HDFS即可