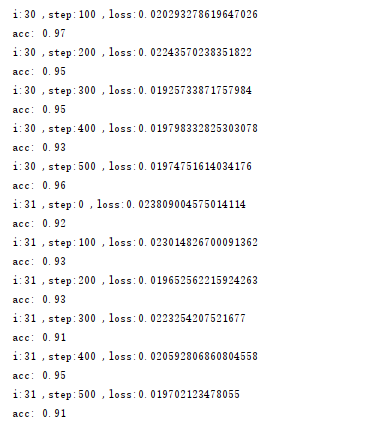

```python
import tensorflow as tf
from tensorflow.keras import optimizers, layers


# Preprocessing function applied to each (feature, label) pair
def preprocess(x, y):
    # Convert features to float32 and scale pixel values to [0, 1]
    x = tf.cast(x, dtype=tf.float32) / 255.0
    # Convert labels to int32
    y = tf.cast(y, dtype=tf.int32)
    # One-hot encode the labels (10 digit classes)
    y = tf.one_hot(y, depth=10)
    return x, y


def get_data():
    # Load the MNIST handwritten-digit dataset
    mnist = tf.keras.datasets.mnist
    (train_x, train_y), (test_x, test_y) = mnist.load_data()

    # Training data
    db = tf.data.Dataset.from_tensor_slices((train_x, train_y))  # pair features with labels
    db = db.map(preprocess)  # apply the preprocessing function to each pair
    # Shuffle with a buffer covering all 60,000 training samples, then group
    # into mini-batches of 100 samples for stochastic gradient descent
    db = db.shuffle(60000).batch(100)

    # Test data (shuffling is not needed for evaluation)
    db_test = tf.data.Dataset.from_tensor_slices((test_x, test_y))
    db_test = db_test.map(preprocess)
    db_test = db_test.batch(100)
    return db, db_test


# Load training and test data
db, db_test = get_data()

# Hyperparameters
epochs = 100  # number of passes over the training set
learn_rate = 0.01

# Model and optimizer
model = tf.keras.Sequential([
    layers.Dense(512, activation='relu'),  # fully connected layers
    layers.Dense(256, activation='relu'),
    layers.Dense(10)
])
optimizer = optimizers.SGD(learning_rate=learn_rate)

# Training loop
for i in range(epochs):
    for step, (x, y) in enumerate(db):  # compute gradients for each batch
        x = tf.reshape(x, (-1, 28 * 28))  # flatten each 28x28 image into a 784-vector
        with tf.GradientTape() as tape:
            out = model(x)
            loss = tf.reduce_mean(tf.square(out - y))  # mean squared error against one-hot labels
        grads = tape.gradient(loss, model.trainable_variables)  # compute gradients
        # Clip gradients by their global norm to guard against exploding
        # gradients, which can otherwise drive the loss to inf/NaN
        grads, _ = tf.clip_by_global_norm(grads, 15)
        optimizer.apply_gradients(zip(grads, model.trainable_variables))  # update parameters

        if step % 100 == 0:
            print('i:{}, step:{}, loss:{}'.format(i, step, loss.numpy()))

    # Evaluate accuracy on the held-out test set after each epoch
    correct, total = 0, 0
    for x, y in db_test:
        x = tf.reshape(x, (-1, 28 * 28))
        out = model(x)
        pred = tf.argmax(out, axis=1)
        label = tf.argmax(y, axis=1)
        correct += int(tf.reduce_sum(tf.cast(tf.equal(pred, label), tf.int32)))
        total += int(x.shape[0])
    print('acc:', correct / total)
```
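The `tf.clip_by_global_norm(grads, 15)` call rescales all gradients jointly rather than clipping each one on its own. As a rough illustration, the sketch below reproduces in plain Python the rule given in the TensorFlow documentation, where every tensor is multiplied by `clip_norm / max(global_norm, clip_norm)`. The helper name `clip_by_global_norm_py` and the representation of gradients as flat lists of floats are hypothetical choices for this example, not part of TensorFlow.

```python
import math
from typing import List, Tuple


# Hypothetical helper: each trainable variable's gradient is a flat list of
# floats here, instead of a TensorFlow tensor.
def clip_by_global_norm_py(
    grads: List[List[float]], clip_norm: float
) -> Tuple[List[List[float]], float]:
    """Rescale all gradients jointly so their combined L2 norm is at most clip_norm.

    Returns the clipped gradients and the global norm measured before clipping,
    mirroring the (list_clipped, global_norm) pair of tf.clip_by_global_norm.
    """
    # Global norm: square root of the sum of squares over every element of every gradient
    global_norm = math.sqrt(sum(v * v for g in grads for v in g))
    # One shared scale factor, so the overall update direction is preserved;
    # when global_norm <= clip_norm the factor is 1 and nothing changes
    scale = clip_norm / max(global_norm, clip_norm)
    clipped = [[v * scale for v in g] for g in grads]
    return clipped, global_norm


if __name__ == "__main__":
    # Two "variables" whose combined norm is sqrt(3^2 + 4^2 + 12^2) = 13
    grads = [[3.0, 4.0], [12.0]]
    clipped, norm = clip_by_global_norm_py(grads, clip_norm=6.5)
    print(norm)     # 13.0
    print(clipped)  # [[1.5, 2.0], [6.0]]
```

Because a single factor is shared by all variables, clipping shortens the full gradient vector without changing its direction. With the threshold of 15 used in the training loop, any batch whose global gradient norm stays at or below 15 is left untouched.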