Introduction to Docker Networking

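When Docker is installed, it automatically creates three networks on the host. List them with docker network ls (docker network list is an alias):

    [root@localhost ~]# docker network ls
    NETWORK ID          NAME                DRIVER              SCOPE
    979fbb278d03        bridge              bridge              local
    e3852e4d242e        host                host                local
    70b78b4570c6        none                null                local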

Docker: the none network

The none network is a network with nothing in it: a container attached to it has no interface other than lo. Select it when creating a container with --network=none.

    [root@localhost ~]# docker run -it --network=none busybox
    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever

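Use of the none network: a closed network means isolation, so it suits applications that have strict security requirements and need no network access. For example, a container whose only job is to generate random passwords can run on the none network so that the passwords cannot be stolen over the network.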

Docker: the host network

A container attached to the host network shares the Docker host's network stack, so its network configuration is identical to the host's. Select it with --network=host.

    [root@localhost ~]# docker run -it --network=host busybox
    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000
        link/ether 00:0c:29:fa:cf:23 brd ff:ff:ff:ff:ff:ff
        inet 192.168.42.30/24 brd 192.168.42.255 scope global ens33
           valid_lft forever preferred_lft forever
        inet6 fe80::20c:29ff:fefa:cf23/64 scope link
           valid_lft forever preferred_lft forever
    51: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue
        link/ether 02:42:d4:ac:3b:55 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
           valid_lft forever preferred_lft forever
        inet6 fe80::42:d4ff:feac:3b55/64 scope link
           valid_lft forever preferred_lft forever
    63: veth80d568f@if62: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue master docker0
        link/ether 32:ed:04:82:f2:73 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::30ed:4ff:fe82:f273/64 scope link
           valid_lft forever preferred_lft forever
    67: veth6a18c66@if66: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue master docker0
        link/ether 1e:84:3b:4c:9b:a4 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::1c84:3bff:fe4c:9ba4/64 scope link
           valid_lft forever preferred_lft forever
    69: veth049b197@if68: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue master docker0
        link/ether 1e:f1:4b:c1:f6:ff brd ff:ff:ff:ff:ff:ff
        inet6 fe80::1cf1:4bff:fec1:f6ff/64 scope link
           valid_lft forever preferred_lft forever
    71: vethed43fa8@if70: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue master docker0
        link/ether c2:d3:b0:25:e4:88 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::c0d3:b0ff:fe25:e488/64 scope link
           valid_lft forever preferred_lft forever
    73: veth48839bd@if72: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue master docker0
        link/ether 3e:5e:03:10:78:7d brd ff:ff:ff:ff:ff:ff
        inet6 fe80::3c5e:3ff:fe10:787d/64 scope link
           valid_lft forever preferred_lft forever
    75: vethb9e42c7@if74: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue master docker0
        link/ether ae:11:c9:bb:12:18 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::ac11:c9ff:febb:1218/64 scope link
           valid_lft forever preferred_lft forever
    79: veth04ba522@if78: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue master docker0
        link/ether 3e:1d:14:5c:78:94 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::3c1d:14ff:fe5c:7894/64 scope link
           valid_lft forever preferred_lft forever

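Inside the container you can see all of the host's interfaces, and even the hostname is the host's. So when is the host network useful? Its main advantage is performance: there is no bridge or NAT between the container and the physical interface, so it suits containers that need high network throughput. The trade-off is weaker isolation, and the container cannot use a port that is already taken on the host.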

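Docker: the bridge network

When Docker is installed it creates a Linux bridge named docker0. A container started without a --network option is attached to docker0 by default.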

    [root@localhost ~]# brctl show
    bridge name     bridge id               STP enabled     interfaces
    docker0         8000.0242d4ac3b55       no              veth049b197
                                                            veth04ba522

    [root@localhost ~]# docker run -it --network=bridge busybox
    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    80: eth0@if81: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:11:00:09 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.9/16 brd 172.17.255.255 scope global eth0
           valid_lft forever preferred_lft forever

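The container's eth0@if81 is one end of a veth pair. The @if81 suffix means its peer is the host interface with index 81, and that host-side veth is attached to docker0. The interfaces listed in the brctl output above, such as veth04ba522 (index 79, peer if78 in the host listing), are the host-side ends of other containers' pairs.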
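The pairing can be checked from the interface names alone. The following is a minimal sketch, assuming a POSIX shell with awk; peer_ifindex and iface_by_index are hypothetical helper names, not Docker or iproute2 commands. The first extracts the peer index from a name such as eth0@if81, and the second picks the interface with a given index out of "ip -o link" style output.

```shell
# Hypothetical helpers (not part of Docker or iproute2).

# Print the peer interface index encoded in a name such as "eth0@if81".
peer_ifindex() (
    name=${1%:}                      # tolerate the trailing colon printed by "ip a"
    case $name in
        *@if*) echo "${name##*@if}" ;;
        *)     exit 1 ;;             # no peer index in this name
    esac
)

# Read "ip -o link" style lines on stdin and print the name of the
# interface whose index equals $1.
iface_by_index() {
    awk -F': ' -v idx="$1" '$1 == idx { print $2 }'
}

# Sample host-side line taken from the host network listing earlier.
sample='79: veth04ba522@if78: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500'

peer_ifindex "eth0@if81"                       # container side: peer is host index 81
printf '%s\n' "$sample" | iface_by_index 79    # host side: index 79 is veth04ba522@if78
```

On a live Docker host, the same lookup would presumably be `ip -o link | iface_by_index 81`, run on the host rather than in the container.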

    [root@localhost ~]# docker network inspect bridge
    [
        {
            "Name": "bridge",
            "Id": "979fbb278d03596b502fb8d02d22d1e8fc42f6a8beb7602ba6eecb08bdf512ec",
            "Created": "2019-06-18T16:43:55.651454994+08:00",
            "Scope": "local",
            "Driver": "bridge",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": null,
                "Config": [
                    {
                        "Subnet": "172.17.0.0/16",
                        "Gateway": "172.17.0.1"
                    }
                ]
    ...

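Creating user-defined networks

We can use the bridge driver to create networks similar to the default bridge network.

(1) Use the bridge driver to create a network named my-net2 (Docker assigns the subnet automatically)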

    [root@localhost ~]# docker network create --driver bridge my-net2
    b326209167c6dbb7e867659bbfa266615bf9312632fbdbaa83e35a5d48825867

(2) Check how the list of networks has changed

    [root@localhost ~]# docker network list
    NETWORK ID          NAME                DRIVER              SCOPE
    979fbb278d03        bridge              bridge              local
    e3852e4d242e        host                host                local
    b326209167c6        my-net2             bridge              local
    70b78b4570c6        none                null                local

(3) Inspect the configuration of the new bridge network (on a bridge network, each container is connected to the bridge through a veth pair)

    [root@localhost ~]# docker network inspect my-net2
    [
        {
            "Name": "my-net2",
            "Id": "b326209167c6dbb7e867659bbfa266615bf9312632fbdbaa83e35a5d48825867",
            "Created": "2019-06-18T19:41:51.182792345+08:00",
            "Scope": "local",
            "Driver": "bridge",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": {},
                "Config": [
                    {
                        "Subnet": "172.18.0.0/16",
                        "Gateway": "172.18.0.1"
                    }
    ...

(4) Use the bridge driver to create a network named my-net3 with a user-defined subnet and gateway

    [root@localhost ~]# docker network create --driver bridge --subnet 172.18.2.0/24 --gateway 172.18.2.1 my-net3
    Error response from daemon: Pool overlaps with other one on this address space

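This fails because the subnet requested for the new network, 172.18.2.0/24, overlaps the subnet of an existing network (my-net2 uses 172.18.0.0/16). Choosing a subnet that does not overlap any existing network solves the problem.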
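The overlap can be verified by hand before creating a network. The following is a minimal sketch in POSIX shell, assuming IPv4 only and a shell with 64-bit arithmetic; ip_to_int, cidr_range and cidrs_overlap are hypothetical helper names, and Docker performs its own check internally. The existing subnets are the ones shown by docker network inspect earlier.

```shell
# Hypothetical helpers; Docker runs its own overlap check internally.

# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() (
    IFS=.
    set -- $1                        # split the address on dots
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Print the first and last address (as integers) of a CIDR block.
cidr_range() (
    bits=${1#*/}
    base=$(ip_to_int "${1%/*}")
    size=$(( 1 << (32 - bits) ))
    start=$(( base / size * size ))  # clear the host bits
    echo "$start $(( start + size - 1 ))"
)

# Succeed when the two CIDR blocks share at least one address.
cidrs_overlap() (
    set -- $(cidr_range "$1") $(cidr_range "$2")
    # Ranges [s1,e1] and [s2,e2] overlap when s1 <= e2 and s2 <= e1.
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
)

# Existing subnets: default bridge and my-net2. Candidates: the two my-net3 attempts.
for existing in 172.17.0.0/16 172.18.0.0/16; do
    for candidate in 172.18.2.0/24 172.20.2.0/24; do
        if cidrs_overlap "$candidate" "$existing"; then
            echo "$candidate overlaps $existing"
        fi
    done
done
```

Only the first candidate is reported, against the my-net2 subnet, which matches the error above; 172.20.2.0/24 is clear of both existing networks.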

    [root@localhost ~]# docker network create --driver bridge --subnet 172.20.2.0/24 --gateway 172.20.2.1 my-net3
    f021bec39166a23f449dd04e17977f77ce0c817ae7c0e21d97431a0679375351

(5) Start a container on the new my-net3 network

    [root@localhost ~]# docker run -it --network=my-net3 busybox /bin/sh
    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    90: eth0@if91: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:14:02:02 brd ff:ff:ff:ff:ff:ff
        inet 172.20.2.2/24 brd 172.20.2.255 scope global eth0
           valid_lft forever preferred_lft forever

(6) Start a container on my-net3 with a fixed IP (a static IP can only be assigned on a network created with --subnet; it is not possible on a network whose subnet Docker assigned automatically)

    [root@localhost ~]# docker run -it --network=my-net3 --ip 172.20.2.120 busybox
    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    92: eth0@if93: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:14:02:78 brd ff:ff:ff:ff:ff:ff
        inet 172.20.2.120/24 brd 172.20.2.255 scope global eth0
           valid_lft forever preferred_lft forever

(7) Connect a running container on a different network to my-net2 (this adds a new interface for my-net2 inside the container)

    [root@localhost ~]# docker run -itd --network=my-net3 busybox /bin/sh
    377f3157bfd48ef38997c0bf12fc86e0cf30a4fe42c50213ff1108854848df14
    [root@localhost ~]# docker network connect my-net2 377f3157bfd4
    [root@localhost ~]# docker exec -it 377f3157bfd4 /bin/sh
    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    106: eth0@if107: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:14:02:03 brd ff:ff:ff:ff:ff:ff
        inet 172.20.2.3/24 brd 172.20.2.255 scope global eth0
           valid_lft forever preferred_lft forever
    108: eth1@if109: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff
        inet 172.18.0.3/16 brd 172.18.255.255 scope global eth1
           valid_lft forever preferred_lft forever

The container now has an IP address on both the my-net2 and my-net3 subnets.

(8) Name containers with --name so that they can reach each other through Docker's built-in DNS. This only works on user-defined networks; the default bridge network does not resolve container names.

    [root@localhost ~]# docker run -itd --network=my-net2 --name=b1 busybox
    9b3be1daeca760f6cc5eb62fbd4142c72efc3c07be5b70c56059da7946751101
    [root@localhost ~]# docker run -itd --network=my-net2 --name=b2 busybox
    ba94b2359471d16b6a8e12025c99245d1ab6af123b346641128f081b031d71bc
    [root@localhost ~]# docker exec -it b1 /bin/sh
    / # ping b2
    PING b2 (172.18.0.5): 56 data bytes
    64 bytes from 172.18.0.5: seq=0 ttl=64 time=0.150 ms
    [root@localhost ~]# docker exec -it b2 /bin/sh
    / # ping b1
    PING b1 (172.18.0.4): 56 data bytes
    64 bytes from 172.18.0.4: seq=0 ttl=64 time=0.059 ms

Both containers were created on the same network and given names. Docker resolves each container name to its IP address, and because the two containers are on the same subnet, the pings succeed.

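(9) Linking containers

a) First create a db container

    [root@localhost ~]# docker run -itd --name db busybox

b) Create a web container and link it to db

    [root@localhost ~]# docker run -itd --name web --link db:dblink busybox /bin/sh

--link db:dblink takes the name of the target container and an alias for the link; that is, it creates a link called dblink to the db container. Note that --link is a legacy feature; user-defined networks, as in step (8), are the preferred way to connect containers.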

c) Check the containers (docker ps output)

    CONTAINER ID   IMAGE     COMMAND     CREATED          STATUS          PORTS   NAMES
    141e370eea76   busybox   "/bin/sh"   8 seconds ago    Up 7 seconds            web
    9f89a1945f6b   busybox   "sh"        54 seconds ago   Up 53 seconds           db

d) Enter the web container and use ping to test the link

    [root@localhost ~]# docker exec -it 141e370eea76 /bin/sh
    / # ping db
    PING db (172.17.0.4): 56 data bytes
    64 bytes from 172.17.0.4: seq=0 ttl=64 time=0.445 ms

The ping succeeds, so the link works.

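e) Now enter the db container and run the same test

    [root@localhost docker]# docker exec -it 9f89a1945f6b /bin/sh
    / # ping web
    ping: bad address 'web'

The name web cannot be resolved from db. The link is therefore one-way: only the container that was started with --link can reach the other one by name, not the reverse. Both containers are on the default bridge, so db can normally still reach web by IP address; it is the name lookup that fails.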

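(10) Container port mapping

If a container is started without any port options, the network applications and services inside it cannot be reached from outside the host.

To make them reachable, use -P (uppercase) or -p (lowercase) to set up port mappings.

-P: Docker maps a random host port to every port the container exposes. Older documentation gives the range as 49000 to 49900; current releases pick from the host's ephemeral port range (typically 32768 to 60999 on Linux).

-p: lets you choose the port to map. A given host port can be bound to only one container.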

The supported formats are:

    IP:HostPort:ContainerPort
    IP::ContainerPort
    HostPort:ContainerPort

If the host port is omitted, a random one is chosen.
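To make the -p formats concrete, here is a minimal sketch of how such a specification breaks down into its parts. It assumes a POSIX shell and IPv4 addresses only; parse_publish is a hypothetical helper written for illustration, not Docker's own parser. An omitted IP is shown as 0.0.0.0 (all host interfaces) and an omitted host port as "random".

```shell
# Hypothetical helper: split a -p specification into its parts.
# Handles ip:hostPort:containerPort, ip::containerPort,
# hostPort:containerPort and containerPort, each with an optional /proto.
parse_publish() (
    spec=$1
    proto=tcp                              # tcp is the default protocol
    case $spec in
        */*) proto=${spec##*/}; spec=${spec%/*} ;;
    esac
    IFS=:
    set -- $spec                           # split on colons; "::" yields an empty field
    case $# in
        1) ip=0.0.0.0; host=;   container=$1 ;;
        2) ip=0.0.0.0; host=$1; container=$2 ;;
        3) ip=$1;      host=$2; container=$3 ;;
        *) echo "unsupported specification: $spec" >&2; exit 1 ;;
    esac
    echo "ip=$ip host=${host:-random} container=$container proto=$proto"
)

parse_publish 192.168.253.9:5000:80
parse_publish 192.168.4.169::5000/udp
parse_publish 5000:22
```

The three calls print the bind address, host port, container port and protocol for a fully specified mapping, a mapping with a random host port, and a mapping on all interfaces.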

To view the mappings:

    docker port <container name>

Aside: remove all containers with a script. docker ps -aq prints only the container IDs, so no grep or awk filtering is needed.

    [root@localhost docker]# for id in $(docker ps -aq); do docker rm -f "$id"; done

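a) Map on all interface addresses. This binds port 5000 on every host interface to port 5000 in the container, so a connection to port 5000 on any host address goes straight to the container.

    docker run -dti -p 5000:5000 nginx /bin/bash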

b) Repeat -p to map several ports

    [root@localhost docker]# docker run -itd -p 5000:22 -p 5001:23 nginx /bin/bash

The container information shows that ports 22 and 23 of the new container are mapped to host ports 5000 and 5001.

    [root@localhost docker]# docker ps -a
    CONTAINER ID   IMAGE   COMMAND       CREATED         STATUS         PORTS                                                NAMES
    ea3b9412d96d   nginx   "/bin/bash"   5 seconds ago   Up 5 seconds   80/tcp, 0.0.0.0:5000->22/tcp, 0.0.0.0:5001->23/tcp   hardcore_darwin
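The PORTS column mixes two kinds of entries: ports that are published to the host (those containing "->") and ports that the image only exposes (here 80/tcp, which has no host mapping). The following is a minimal sketch that separates them, assuming a POSIX shell with tr and sed; describe_ports is a hypothetical helper, and docker port remains the direct way to query a container.

```shell
# Hypothetical helper: explain each entry of a "docker ps" PORTS column.
describe_ports() {
    printf '%s\n' "$1" | tr ',' '\n' | sed 's/^ *//' |
    while IFS= read -r entry; do
        case $entry in
            *'->'*) echo "published: host ${entry%%'->'*} to container ${entry##*'->'}" ;;
            *)      echo "exposed only: $entry" ;;
        esac
    done
}

# PORTS value copied from the docker ps output shown earlier.
describe_ports '80/tcp, 0.0.0.0:5000->22/tcp, 0.0.0.0:5001->23/tcp'
```

For this value the helper reports 80/tcp as exposed only, and the two mappings from host ports 5000 and 5001 as published.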

c) Map a specific port on a specific address. This binds port 5000 on the host address 192.168.253.9 to port 80 in the container.

    [root@localhost docker]# docker run -dti -p 192.168.253.9:5000:80 nginx /bin/bash

    [root@localhost docker]# docker ps -a
    CONTAINER ID   IMAGE   COMMAND       CREATED              STATUS              PORTS                        NAMES
    132746ef0996   nginx   "/bin/bash"   About a minute ago   Up About a minute   192.168.253.9:5000->80/tcp   practical_lichterman

d) Map a random port on a specific address. This binds a randomly chosen port on the host address 192.168.4.169 to port 5000 in the container.

    docker run -dti -p 192.168.4.169::5000 nginx /bin/bash

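e) Use a protocol suffix to set the port type (the protocol is conventionally written in lowercase)

    docker run -dti -p 192.168.4.169::5000/udp nginx /bin/bash

This makes the mapped port a UDP port.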

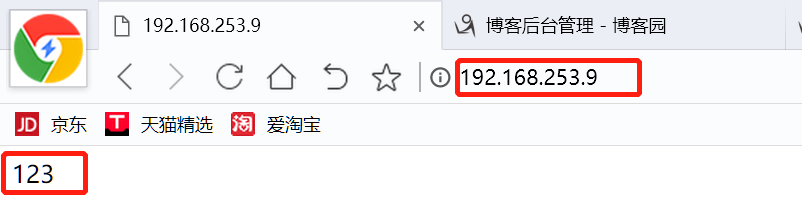

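(11) Exercise: use port mapping so that the web server inside a container can be reached at the host's IP:PORT

a) Map container port 80 to host port 80. Do not append a command such as /bin/bash at the end: it would replace the image's default command, and nginx would not start.

    docker run -itd -p 80:80 --name nginx nginx:latest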

b) Check the container that was just started

    [root@localhost docker]# docker ps -a
    CONTAINER ID   IMAGE          COMMAND                  CREATED         STATUS         PORTS                NAMES
    0382dfbbd606   nginx:latest   "nginx -g 'daemon of…"   5 seconds ago   Up 4 seconds   0.0.0.0:80->80/tcp   nginx

c) Enter the container with docker exec -it nginx /bin/sh and edit the page index.html inside it

    # cd /usr/share/nginx/
    # ls
    html
    # cd html
    # ls
    50x.html  index.html
    # echo '123' > index.html

d) On the host, open a browser and go to IP:PORT to verify

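Some common errors:

1) The command does not exist in the image

    [root@localhost /]# docker run -itd --name busy busybox:latest /bin/bash
    e105747eb16e52da2637379d75e221f46812955c929696234abbf1321fd56da3
    docker: Error stat /bin/bash: no such file or directory": unknown

This happens because the busybox image does not contain /bin/bash. Use /bin/sh instead, or omit the command.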

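2) The container name is already taken

    [root@localhost /]# docker run -itd --name busy busybox:latest /bin/sh
    docker: Error response from daemon: Conflict. The container name "/busy" is already in use by container "e105747eb16e52da2637379d75e221f46812955c929696234abbf1321fd56da3". You have to remove (or rename) that container to be able to reuse that name.

This happens because a container with that name already exists (here, the one left behind by the failed run in error 1). Remove or rename the existing container first.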

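3) Conflicting bridge

    [root@localhost /]# docker network create --driver bridge my-net2
    Error networks have overlapping IPv4

This happens because of a conflicting bridge. Delete the conflicting bridge and restart Docker:

    brctl delbr <bridge name>
    systemctl restart docker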

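4) error: executable file not found in $PATH": unknown

This happens when the arguments are in the wrong order. Everything after the image name is treated as the command to run inside the container, so docker run options must come before the image name.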

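5) The requested IP is not in the network's subnet

    [root@localhost /]# docker run -it --network=my-net3 --ip 192.168.253.13 busybox:latest /bin/sh
    docker: Error response from daemon: Invalid address 192.168.253.13: It does not belong to any of this network's subnets.

This happens because the requested IP lies outside the address range of the network (my-net3 uses 172.20.2.0/24).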
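Whether an address is valid for --ip can be checked in advance. The following is a minimal sketch in POSIX shell, assuming IPv4 only; ip_to_int and ip_in_cidr are hypothetical helper names, and the subnet is the one my-net3 was created with.

```shell
# Hypothetical helpers for checking an address against a subnet.

# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() ( IFS=.; set -- $1; echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 )) )

# Succeed when address $1 lies inside CIDR block $2.
ip_in_cidr() (
    bits=${2#*/}
    size=$(( 1 << (32 - bits) ))     # number of addresses in the block
    ip=$(ip_to_int "$1")
    base=$(ip_to_int "${2%/*}")
    # Two addresses are in the same block when they share the network part.
    [ $(( ip / size )) -eq $(( base / size )) ]
)

for addr in 192.168.253.13 172.20.2.120; do
    if ip_in_cidr "$addr" 172.20.2.0/24; then
        echo "$addr is inside 172.20.2.0/24"
    else
        echo "$addr is outside 172.20.2.0/24"
    fi
done
```

The first address is reported as outside the subnet, which is why Docker rejected it; the second is the one used successfully in step (6).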

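6) Error No chain/target/match by that name.

This happens when Docker's iptables chains are missing, typically because firewalld or iptables was restarted after Docker started. Restart Docker to recreate them. Disabling firewalld and iptables also avoids the error, but is not recommended.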

7) docker: Error response from daemon: driver failed programming external connectivity on endpoint vibrant_kepler (0838e9c00e2ffcec24bbd333000cc4de9bd0fa2420c1c330ddb77dfd9d1d534f): Bind for 192.168.253.9:5000 failed: port is already allocated.

This happens because the host port is already in use, either by another container or by another service on the host.

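8) After mapping a host port to a container, accessing the container's httpd or nginx service at host-IP:port fails with "connection refused".

This happens because a command such as /bin/bash was appended to docker run when the port was mapped. It replaces the image's default command, so the web server never starts. Remove the trailing command.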

Network troubleshooting commands:

iptables -t nat -L

ip r

tcpdump -i docker0 -n icmp

tcpdump -i eth0 -n icmp