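Official documentation: https://v1-17.docs.kubernetes.io/zh/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade/

When upgrading a Kubernetes cluster created with kubeadm, you can move up only one minor version at a time (for example 1.16 to 1.17), or to a newer patch release within the same minor version.

These notes cover upgrading a kubeadm-created Kubernetes cluster from 1.16.x to 1.17.x, and from 1.17.x to 1.17.y, where y > x.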

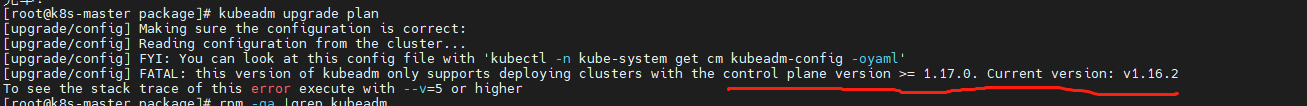

Skipping a minor version fails. For example, my cluster was on v1.16.2, and upgrading it directly to v1.18.20 produced the error above.

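I. Before you begin

- You need a Kubernetes cluster created by kubeadm and running version 1.16.0 or later.

- Swap must be disabled.

- The cluster should use static control plane and etcd pods, or an external etcd.

- Make sure you read the release notes carefully.

- Make sure to back up any important components, such as application-level state stored in a database.

kubeadm upgrade does not touch your workloads, only components internal to Kubernetes, but a backup is still good practice.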

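Additional information

- All containers are restarted after the upgrade, because the container spec hash value changes.

- You can only upgrade from one minor version to the next minor version, or between patch versions of the same minor version. In other words, you cannot skip minor versions when upgrading. For example, you can upgrade from 1.y to 1.y+1, but not from 1.y to 1.y+2.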
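
The rule above can be written as a small pre-check. This is a minimal sketch in POSIX shell: `can_upgrade` is a hypothetical helper name (not a kubeadm command), and it assumes plain `x.y.z` version strings with an optional leading `v`.

```shell
# can_upgrade FROM TO
# Succeeds (exit 0) only when TO is a higher patch of the same minor version,
# or exactly the next minor version. Hypothetical helper, not part of kubeadm.
can_upgrade() {
  cu_from=${1#v}
  cu_to=${2#v}
  # Split "major.minor.patch" using parameter expansion only.
  cu_fmajor=${cu_from%%.*}; cu_rest=${cu_from#*.}
  cu_fminor=${cu_rest%%.*}; cu_fpatch=${cu_rest#*.}
  cu_tmajor=${cu_to%%.*}; cu_rest=${cu_to#*.}
  cu_tminor=${cu_rest%%.*}; cu_tpatch=${cu_rest#*.}

  [ "$cu_fmajor" -eq "$cu_tmajor" ] || return 1
  if [ "$cu_tminor" -eq "$cu_fminor" ]; then
    # Same minor version: only a newer patch counts as an upgrade.
    [ "$cu_tpatch" -gt "$cu_fpatch" ]
  else
    # Different minor version: it must be exactly one step ahead.
    [ "$cu_tminor" -eq $((cu_fminor + 1)) ]
  fi
}

can_upgrade v1.16.2 v1.17.17 && echo "1.16.2 -> 1.17.17: allowed"
can_upgrade v1.16.2 v1.18.20 || echo "1.16.2 -> 1.18.20: skips a minor version"
```

kubeadm enforces this policy itself during the upgrade; a check like this only helps catch the mistake earlier, for example before packages are installed.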

Determine which version to upgrade to

Look for the latest 1.17 version in the list. It should look like 1.17.x-0, where x is the latest patch:

yum list --showduplicates kubeadm --disableexcludes=kubernetes
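
Picking the latest patch out of that list can be scripted. The sketch below assumes the usual three-column `yum list` layout (package name, version, repository); `latest_patch` is a hypothetical helper name.

```shell
# latest_patch MINOR
# Reads `yum list --showduplicates kubeadm` output on stdin and prints the
# highest package version in the given minor series (for example 1.17).
# Assumes lines of the form: kubeadm.x86_64  1.17.17-0  kubernetes
latest_patch() {
  awk -v minor="$1" '
    # Keep only kubeadm rows whose version starts with "<minor>."
    $1 ~ /^kubeadm/ && index($2, minor ".") == 1 { print $2 }
  ' | sort -t. -k3,3n | tail -n 1   # numeric sort on the patch field
}

# Usage on a node with the kubernetes yum repository configured:
#   yum list --showduplicates kubeadm --disableexcludes=kubernetes | latest_patch 1.17
```

The numeric sort on the third dot-separated field matters because a plain text sort would place 1.17.9-0 after 1.17.17-0.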

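II. Upgrade the first control plane node

If the cluster has a single master, upgrade that node; the control plane node is then simply the single master. Start by upgrading kubeadm, replacing x in 1.17.x-0 with the latest patch version:

yum install -y kubeadm-1.17.x-0 --disableexcludes=kubernetes

1. Verify the kubeadm version: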

kubeadm version

2. Drain the control plane node (look up its name with kubectl get node and substitute it for $CP_NODE):

kubectl get node

kubectl drain $CP_NODE --ignore-daemonsets --delete-local-data

3. On the master node, run:

sudo kubeadm upgrade plan

You should see output similar to this:

[root@k8s-master package]# kubeadm upgrade plan
[upgrade/config] Making sure the configuration is correct:
[upgrade/config] Reading configuration from the cluster...
[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[preflight] Running pre-flight checks.
[upgrade] Making sure the cluster is healthy:
[upgrade] Fetching available versions to upgrade to
[upgrade/versions] Cluster version: v1.16.2
[upgrade/versions] kubeadm version: v1.17.17
I0630 16:53:55.613495 50734 version.go:251] remote version is much newer: v1.21.2; falling back to: stable-1.17
[upgrade/versions] Latest stable version: v1.17.17
[upgrade/versions] Latest version in the v1.16 series: v1.16.15
Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':
COMPONENT   CURRENT       AVAILABLE
Kubelet     2 x v1.16.2   v1.16.15
Upgrade to the latest version in the v1.16 series:
COMPONENT            CURRENT   AVAILABLE
API Server           v1.16.2   v1.16.15
Controller Manager   v1.16.2   v1.16.15
Scheduler            v1.16.2   v1.16.15
Kube Proxy           v1.16.2   v1.16.15
CoreDNS              1.6.2     1.6.5
Etcd                 3.3.15    3.3.17-0
You can now apply the upgrade by executing the following command:
kubeadm upgrade apply v1.16.15
_____________________________________________________________________
Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':
COMPONENT   CURRENT       AVAILABLE
Kubelet     2 x v1.16.2   v1.17.17
Upgrade to the latest stable version:
COMPONENT            CURRENT   AVAILABLE
API Server           v1.16.2   v1.17.17
Controller Manager   v1.16.2   v1.17.17
Scheduler            v1.16.2   v1.17.17
Kube Proxy           v1.16.2   v1.17.17
CoreDNS              1.6.2     1.6.5
Etcd                 3.3.15    3.4.3-0
You can now apply the upgrade by executing the following command:
kubeadm upgrade apply v1.17.17
_____________________________________________________________________

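This command checks whether your cluster can be upgraded and fetches the versions you can upgrade to.

4. Download the images and push them to a private registry

kubeadm first reads the cluster configuration, which you can view with kubectl -n kube-system get cm kubeadm-config -oyaml. List the images required for the target version: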

[root@k8s-master package]# kubeadm --kubernetes-version=v1.17.17 config images list
W0630 16:24:56.622858 37089 validation.go:28] Cannot validate kubelet config - no validator is available
W0630 16:24:56.622893 37089 validation.go:28] Cannot validate kube-proxy config - no validator is available
k8s.gcr.io/kube-apiserver:v1.17.17
k8s.gcr.io/kube-controller-manager:v1.17.17
k8s.gcr.io/kube-scheduler:v1.17.17
k8s.gcr.io/kube-proxy:v1.17.17
k8s.gcr.io/pause:3.1
k8s.gcr.io/etcd:3.4.3-0
k8s.gcr.io/coredns:1.6.5

If you use a private registry, you can pull the matching versions first and then upload them:

docker pull k8smx/kube-apiserver:v1.17.17
docker pull k8smx/kube-controller-manager:v1.17.17
docker pull k8smx/kube-scheduler:v1.17.17
docker pull k8smx/kube-proxy:v1.17.17
docker pull k8smx/pause:3.1
docker pull k8smx/etcd:3.4.3-0
docker pull k8smx/coredns:1.6.5

Then tag the images to match your own private registry.
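
The pull, tag, and push steps can be generated from the image list instead of being typed by hand. The following is a rough sketch, not a definitive procedure: `retag_commands` is a hypothetical helper, `registry.example.com/k8s` is a placeholder registry path, and it assumes the k8smx mirror carries every image under the same name and tag as k8s.gcr.io.

```shell
# retag_commands REGISTRY
# Reads image references on stdin (one per line, as printed by
# `kubeadm config images list`) and prints the docker commands that pull each
# image from the k8smx mirror, retag it for REGISTRY, and push it.
# It only prints the commands, so they can be reviewed before running.
retag_commands() {
  rc_registry=$1
  while IFS= read -r rc_image; do
    case $rc_image in
      k8s.gcr.io/*) rc_name=${rc_image#k8s.gcr.io/} ;;
      *) continue ;;   # skip warnings and any other non-image lines
    esac
    echo "docker pull k8smx/$rc_name"
    echo "docker tag k8smx/$rc_name $rc_registry/$rc_name"
    echo "docker push $rc_registry/$rc_name"
  done
}

# Usage (registry.example.com/k8s is a placeholder for your own registry):
#   kubeadm --kubernetes-version=v1.17.17 config images list 2>/dev/null \
#     | retag_commands registry.example.com/k8s
```

Once the printed commands look right, the same pipeline can be piped into `sh` to execute them.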

5. Choose the version to upgrade to and run the corresponding command

For example:

kubeadm upgrade apply v1.17.x

You should see output similar to this:

[root@k8s-master package]# kubeadm upgrade apply v1.17.17
[upgrade/config] Making sure the configuration is correct:
[upgrade/config] Reading configuration from the cluster...
[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[preflight] Running pre-flight checks.
[upgrade] Making sure the cluster is healthy:
[upgrade/version] You have chosen to change the cluster version to "v1.17.17"
[upgrade/versions] Cluster version: v1.16.2
[upgrade/versions] kubeadm version: v1.17.17
[upgrade/confirm] Are you sure you want to proceed with the upgrade? [y/N]: y
[upgrade/prepull] Will prepull images for components [kube-apiserver kube-controller-manager kube-scheduler etcd]
[upgrade/prepull] Prepulling image for component etcd.
[upgrade/prepull] Prepulling image for component kube-apiserver.
[upgrade/prepull] Prepulling image for component kube-controller-manager.
[upgrade/prepull] Prepulling image for component kube-scheduler.
[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-etcd
[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver
[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler
[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager
[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-etcd
[upgrade/prepull] Prepulled image for component kube-controller-manager.
[upgrade/prepull] Prepulled image for component kube-scheduler.
[upgrade/prepull] Prepulled image for component etcd.
[upgrade/prepull] Prepulled image for component kube-apiserver.
[upgrade/prepull] Successfully prepulled the images for all the control plane components
[upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.17.17"...
Static pod: kube-apiserver-k8s-master hash: 91d4b8c2102a048ef29e7a5ccaf063ce
Static pod: kube-controller-manager-k8s-master hash: daca7a89ab96e089e89abfe0f611827c
Static pod: kube-scheduler-k8s-master hash: 46310b708d47f3b29e1c9e47f1f30387
[upgrade/etcd] Upgrading to TLS for etcd
Static pod: etcd-k8s-master hash: 1cd9aa98760ddb514f33c44e4d1dc7c9
[upgrade/staticpods] Preparing for "etcd" upgrade
[upgrade/staticpods] Renewing etcd-server certificate
[upgrade/staticpods] Renewing etcd-peer certificate
[upgrade/staticpods] Renewing etcd-healthcheck-client certificate
[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/etcd.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2021-06-30-16-54-19/etcd.yaml"
[upgrade/staticpods] Waiting for the kubelet to restart the component
[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)
Static pod: etcd-k8s-master hash: 1cd9aa98760ddb514f33c44e4d1dc7c9
Static pod: etcd-k8s-master hash: 1cd9aa98760ddb514f33c44e4d1dc7c9
Static pod: etcd-k8s-master hash: 90917be8186700a9634eeb5b7deb2f29
[apiclient] Found 1 Pods for label selector component=etcd
[upgrade/staticpods] Component "etcd" upgraded successfully!
[upgrade/etcd] Waiting for etcd to become available
[upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests142017886"
W0630 16:54:53.631085 50879 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"
[upgrade/staticpods] Preparing for "kube-apiserver" upgrade
[upgrade/staticpods] Renewing apiserver certificate
[upgrade/staticpods] Renewing apiserver-kubelet-client certificate
[upgrade/staticpods] Renewing front-proxy-client certificate
[upgrade/staticpods] Renewing apiserver-etcd-client certificate
[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2021-06-30-16-54-19/kube-apiserver.yaml"
[upgrade/staticpods] Waiting for the kubelet to restart the component
[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)
Static pod: kube-apiserver-k8s-master hash: 91d4b8c2102a048ef29e7a5ccaf063ce
Static pod: kube-apiserver-k8s-master hash: 91d4b8c2102a048ef29e7a5ccaf063ce
Static pod: kube-apiserver-k8s-master hash: 91d4b8c2102a048ef29e7a5ccaf063ce
Static pod: kube-apiserver-k8s-master hash: 91d4b8c2102a048ef29e7a5ccaf063ce
Static pod: kube-apiserver-k8s-master hash: d965feaece18a2e5aa89bb635179382f
[apiclient] Found 1 Pods for label selector component=kube-apiserver
[upgrade/staticpods] Component "kube-apiserver" upgraded successfully!
[upgrade/staticpods] Preparing for "kube-controller-manager" upgrade
[upgrade/staticpods] Renewing controller-manager.conf certificate
[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2021-06-30-16-54-19/kube-controller-manager.yaml"
[upgrade/staticpods] Waiting for the kubelet to restart the component
[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)
Static pod: kube-controller-manager-k8s-master hash: daca7a89ab96e089e89abfe0f611827c
Static pod: kube-controller-manager-k8s-master hash: 8c68c0022d7a3531a62b75d38bc6bceb
[apiclient] Found 1 Pods for label selector component=kube-controller-manager
[upgrade/staticpods] Component "kube-controller-manager" upgraded successfully!
[upgrade/staticpods] Preparing for "kube-scheduler" upgrade
[upgrade/staticpods] Renewing scheduler.conf certificate
[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-scheduler.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2021-06-30-16-54-19/kube-scheduler.yaml"
[upgrade/staticpods] Waiting for the kubelet to restart the component
[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)
Static pod: kube-scheduler-k8s-master hash: 46310b708d47f3b29e1c9e47f1f30387
Static pod: kube-scheduler-k8s-master hash: 32acbfba0342d09536f3255176c57bd1
[apiclient] Found 1 Pods for label selector component=kube-scheduler
[upgrade/staticpods] Component "kube-scheduler" upgraded successfully!
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.17" in namespace kube-system with the configuration for the kubelets in the cluster
[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.17" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[addons]: Migrating CoreDNS Corefile
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy
[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.17.17". Enjoy!

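Manually upgrade your CNI provider plugin.

Your Container Network Interface (CNI) provider may have its own upgrade instructions. Check the addons page to find your CNI provider and see whether additional upgrade steps are required.

This step is not required on the other control plane nodes if the CNI provider runs as a DaemonSet.

6. Uncordon the control plane node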

kubectl uncordon $CP_NODE

7. Upgrade kubelet and kubectl on the control plane node

Replace x in 1.17.x-0 with the latest patch version:

yum install -y kubelet-1.17.x-0 kubectl-1.17.x-0 --disableexcludes=kubernetes

8. Restart the kubelet

sudo systemctl daemon-reload

sudo systemctl restart kubelet

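If the masters are highly available, that is, there are multiple masters (control plane nodes)

Upgrade the other control plane nodes

Follow the same steps as for the first control plane node, but use:

sudo kubeadm upgrade node experimental-control-plane

instead of:

sudo kubeadm upgrade apply

Running sudo kubeadm upgrade plan is also not needed.

Note: the experimental-control-plane subcommand comes from older kubeadm releases. Since kubeadm 1.15 it has been deprecated in favor of plain sudo kubeadm upgrade node, so check kubeadm upgrade node --help on your version before running it.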

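III. Upgrade worker nodes

Upgrade worker nodes one at a time, or a few at a time, so that the cluster never drops below the minimum capacity required to run your workloads.

1. Upgrade kubeadm

Upgrade kubeadm on all worker nodes, replacing x in 1.17.x-0 with the latest patch version:

yum install -y kubeadm-1.17.x-0 --disableexcludes=kubernetes

2. Drain the node

Prepare the node for maintenance by marking it unschedulable and evicting its workloads. Run: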

kubectl drain $NODE --ignore-daemonsets

You should see output similar to this:

node/ip-172-31-85-18 cordoned
WARNING: ignoring DaemonSet-managed Pods: kube-system/kube-proxy-dj7d7, kube-system/weave-net-z65qx
node/ip-172-31-85-18 drained

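3. Upgrade the kubelet configuration

For versions below v1.17.x, run:

sudo kubeadm upgrade node config --kubelet-version v1.14.x

When upgrading from v1.17.x to v1.18.x, run:

kubeadm upgrade node

In the first command, v1.14.x is only a placeholder: pass the kubelet version you are upgrading to, with x replaced by the latest patch version.

4. Upgrade kubelet and kubectl

Replace x in 1.17.x-0 with the latest patch version:

yum install -y kubelet-1.17.x-0 kubectl-1.17.x-0 --disableexcludes=kubernetes

5. Restart the kubelet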

sudo systemctl daemon-reload

sudo systemctl restart kubelet

6. Uncordon the node

Bring the node back online by marking it schedulable:

kubectl uncordon $NODE

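Verify the status of the cluster

After the kubelet has been upgraded on all nodes, verify that every node is available again by running the following command from anywhere kubectl can reach the cluster: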

kubectl get nodes

The STATUS column should show Ready for every node, and the version number should be updated.
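
This check can be automated by parsing the command output. The sketch below assumes the default `kubectl get nodes` column order (NAME, STATUS, ROLES, AGE, VERSION); `nodes_upgraded` is a hypothetical helper name.

```shell
# nodes_upgraded VERSION
# Reads `kubectl get nodes` output on stdin. Succeeds only if at least one
# node is listed and every node is exactly "Ready" and reports VERSION.
# Assumes the default columns: NAME STATUS ROLES AGE VERSION.
nodes_upgraded() {
  awk -v want="$1" '
    NR == 1 { next }                       # skip the header row
    { seen = 1 }
    $2 != "Ready" || $5 != want {          # cordoned nodes also fail here
      bad = 1
      print "not upgraded: " $1 " (" $2 ", " $5 ")"
    }
    END { exit (bad || !seen) ? 1 : 0 }
  '
}

# Usage:
#   kubectl get nodes | nodes_upgraded v1.17.17 && echo "all nodes upgraded"
```

A node that is still cordoned shows `Ready,SchedulingDisabled` and is reported as not upgraded, which is a useful reminder to run kubectl uncordon.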

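Recovering from a failure state

If kubeadm upgrade fails and does not roll back, for example because of an unexpected shutdown during execution, you can run kubeadm upgrade again. The command is idempotent and eventually makes sure that the actual state matches the desired state you declared. To recover from a bad state, you can also run kubeadm upgrade apply --force without changing the version your cluster is running.

How it works

kubeadm upgrade apply does the following: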

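- Checks that your cluster is in an upgradeable state:

  - The API server is reachable

  - All nodes are in the Ready state

  - The control plane is healthy

- Enforces the version skew policies.

- Makes sure the control plane images are available, or can be pulled to the machine.

- Upgrades the control plane components, or rolls back if any of them fails to come up.

- Applies the new kube-dns and kube-proxy manifests and makes sure that all necessary RBAC rules are created.

- Creates new certificate and key files for the API server, and backs up the old files, if the old files are due to expire within 180 days.

kubeadm upgrade node experimental-control-plane does the following on the other control plane nodes:

- Fetches the kubeadm ClusterConfiguration from the cluster.

- Optionally backs up the kube-apiserver certificate.

- Upgrades the static Pod manifests for the control plane components.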